Behrooz, H.; Hayeri, Y.M. Machine Learning Applications in Surface Transportation Systems. Encyclopedia. Available online: https://encyclopedia.pub/entry/27451 (accessed on 07 February 2026).

Behrooz, H., & Hayeri, Y.M. (2022, September 21). Machine Learning Applications in Surface Transportation Systems. In Encyclopedia. https://encyclopedia.pub/entry/27451

Surface transportation has evolved through technological advances that draw on parallel knowledge areas such as machine learning (ML). As a data-driven approach, ML offers the potential to solve problems that remain unsettled or are difficult to solve by other means.

artificial intelligence

machine learning

surface transportation systems

traffic management

transportation policy

intelligent transportation systems

1. Machine Learning

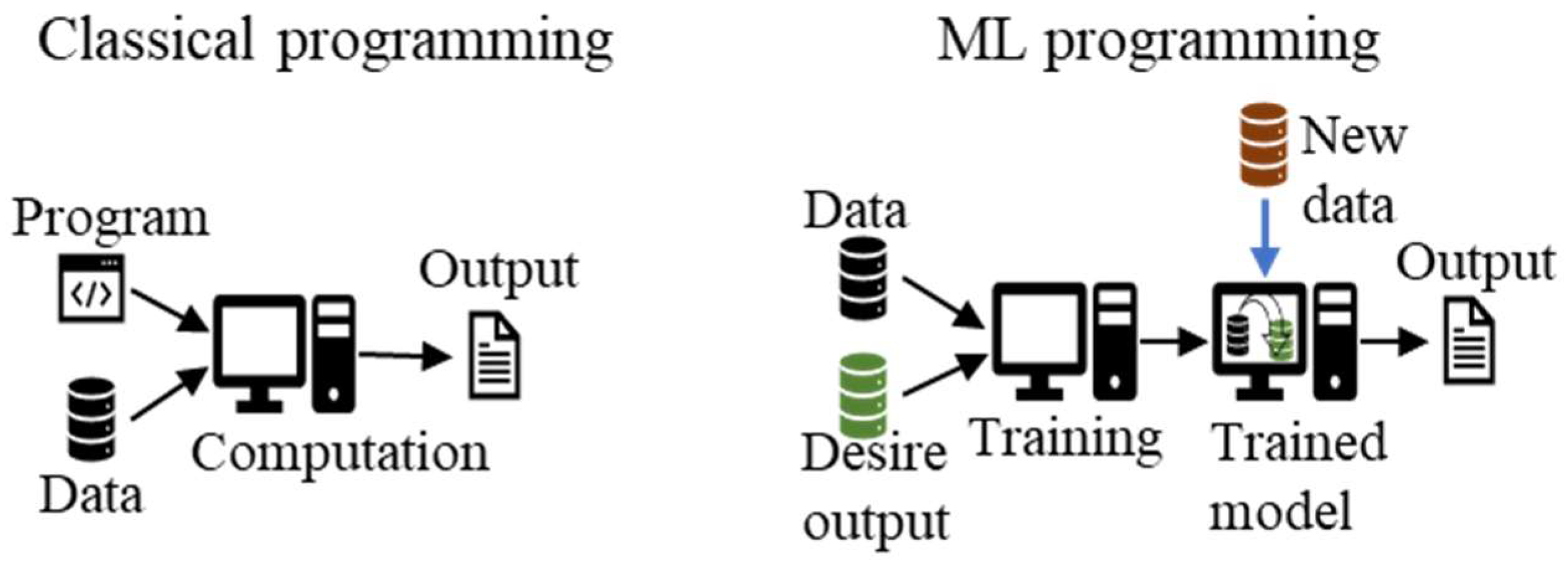

Machine learning is an evolving field that has impacted many applied sciences, including healthcare [1], the automotive industry [2], cybersecurity [3], and robotics [4][5]. Unlike classical programming, in which the data-processing rules are modeled in advance, ML infers those rules from data (Figure 1). Numerous ML publications cover various applications, algorithms, fundamentals, and theories [6][7][8][9][10][11][12][13]. Machine learning is the practice of applying data and techniques to imitate the patterns and behaviors of human learning. In their basic form, ML algorithms receive input data, analyze them, and produce output data by learning the patterns and behavior inherent to the data. These algorithms typically treat a set of data as prior knowledge, train themselves on it, and then search for similar patterns and behavior in new data. Access to a larger amount of prior knowledge generally improves the learning outcome.

Figure 1. ML versus analytical approach in programming.
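The contrast between the two approaches can be illustrated with a minimal sketch. The task (flagging congestion from a speed reading), the 30 km/h rule, the toy training pairs, and all function names are hypothetical and chosen only for illustration; they do not come from the entry.

```python
def rule_based_is_congested(speed_kmh):
    """Classical approach: the programmer fixes the rule in advance."""
    return speed_kmh < 30.0  # hypothetical hand-picked threshold


def learn_threshold(samples):
    """ML approach: choose the threshold that best separates labeled examples.

    samples: list of (speed_kmh, is_congested) pairs.
    """
    speeds = sorted(s for s, _ in samples)
    # Candidate thresholds: midpoints between consecutive observed speeds.
    candidates = [(a + b) / 2 for a, b in zip(speeds, speeds[1:])]

    def errors(t):
        # Number of training examples misclassified by the rule "speed < t".
        return sum((s < t) != label for s, label in samples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (speed in km/h, congested?)
training = [(12, True), (18, True), (25, True),
            (41, False), (55, False), (63, False)]
threshold = learn_threshold(training)  # 33.0 for this toy data


def learned_is_congested(speed_kmh):
    return speed_kmh < threshold
```

In the first function the rule is written by hand; in the second, the same kind of rule is derived from the labeled examples, so different data would yield a different threshold.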

As data-driven tools, ML algorithms learn from input data that contain correct results or functions. Several approaches exist for implementing this simple concept, depending on how the algorithm uses the input data and handles its hyperparameters. These algorithms are organized into three primary categories: (1) classical algorithms, (2) neural network and deep learning algorithms, and (3) ensemble algorithms, as presented in Figure 2 and described below.

Figure 2. ML algorithms’ categories.

1.1. Classical Algorithms

Classical algorithms may follow a supervised, unsupervised, or reinforcement learning approach. In supervised learning, the training dataset includes both the input and the desired output data, and the algorithm is trained on how the inputs relate to the outputs. Supervised learning algorithms can be used for regression, to predict a continuous output variable, or for classification, to predict discrete, predefined classes or groups. Logistic regression, naive Bayes (NB), support vector machine (SVM), decision trees, and k-nearest neighbor (KNN) are among the most popular supervised ML models for classification.
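As a minimal sketch of supervised classification, the following plain-Python k-nearest neighbor classifier labels a new observation by majority vote among its closest training examples. The feature choice (mean speed, vehicles per minute), the toy data, and the function name `knn_predict` are assumptions made for illustration only.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the majority label among the k training points nearest to query.

    train: list of (features, label) pairs; features are equal-length tuples.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: (mean speed km/h, vehicles per minute) -> traffic state
train = [
    ((15.0, 40.0), "congested"),
    ((20.0, 35.0), "congested"),
    ((25.0, 38.0), "congested"),
    ((60.0, 10.0), "free-flow"),
    ((70.0, 12.0), "free-flow"),
    ((65.0, 8.0), "free-flow"),
]

print(knn_predict(train, (22.0, 36.0)))  # congested
print(knn_predict(train, (68.0, 9.0)))   # free-flow
```

The labeled pairs play the role of the "desired output data" described above; KNN stores them and defers all computation to prediction time.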

Popular supervised ML algorithms for regression include linear, polynomial, and ridge/lasso regression. In unsupervised learning, by contrast, no output data are fed into the model. Instead, the model looks only at the input data, organizes them based on internally recognized patterns, and clusters them into structured groups. Subsequently, the trained model can predict the group to which new input data belong.
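Clustering without labels can be sketched with k-means, one common unsupervised algorithm (the entry does not name a specific one, so its use here is an assumption). The scalar speed readings, the initial centroids, and the helper names are hypothetical.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Plain k-means on scalars; `centroids` holds the initial guesses."""
    centroids = list(centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            # Assign each value to its nearest centroid.
            idx = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[idx].append(v)
        # Move each centroid to the mean of its members (unchanged if empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids


def assign(value, centroids):
    """Predict the group (centroid index) for a new value."""
    return min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))


speeds = [12, 15, 18, 58, 62, 66]           # unlabeled observations
centers = kmeans_1d(speeds, [0.0, 100.0])   # [15.0, 62.0] for this toy data
print(assign(20, centers), assign(55, centers))  # 0 1
```

No labels are supplied at any point; the two groups emerge from the data, and `assign` then places new readings into one of them.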

Reinforcement learning is reward-based. The algorithm interacts with an environment in which each action corresponds to a specific, predefined reward. It tries actions in that environment and, based on the rewards received, learns how to respond to the input data so as to maximize the total reward. Reinforcement learning is thus a form of trial and error that learns from past experience and adapts to achieve the best attainable outcome.
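The trial-and-error loop can be sketched with tabular Q-learning, one widely used reinforcement learning method (chosen here as an assumption; the entry does not single out an algorithm). The five-state corridor environment, the reward of 1 at the goal, and the constants are hypothetical. For simplicity the agent explores with uniformly random actions, which Q-learning tolerates because it learns the greedy policy's values regardless of how actions are picked.

```python
import random

N_STATES = 5            # states 0..4; state 4 is the goal
ACTIONS = (-1, +1)      # index 0 = move left, index 1 = move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor


def step(state, action):
    """Hypothetical environment: reward 1 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=300, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):                      # cap on episode length
            a = rng.randrange(2)                  # pure random exploration
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning target: reward plus discounted best next value.
            target = reward + (0.0 if done else GAMMA * max(q[nxt]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q_table = train()
policy = ["left" if q[0] > q[1] else "right" for q in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

The learned table assigns a higher value to moving right in every non-goal state, which is the action sequence that maximizes the total discounted reward in this toy environment.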

1.2. Neural Networks and Deep Learning Algorithms

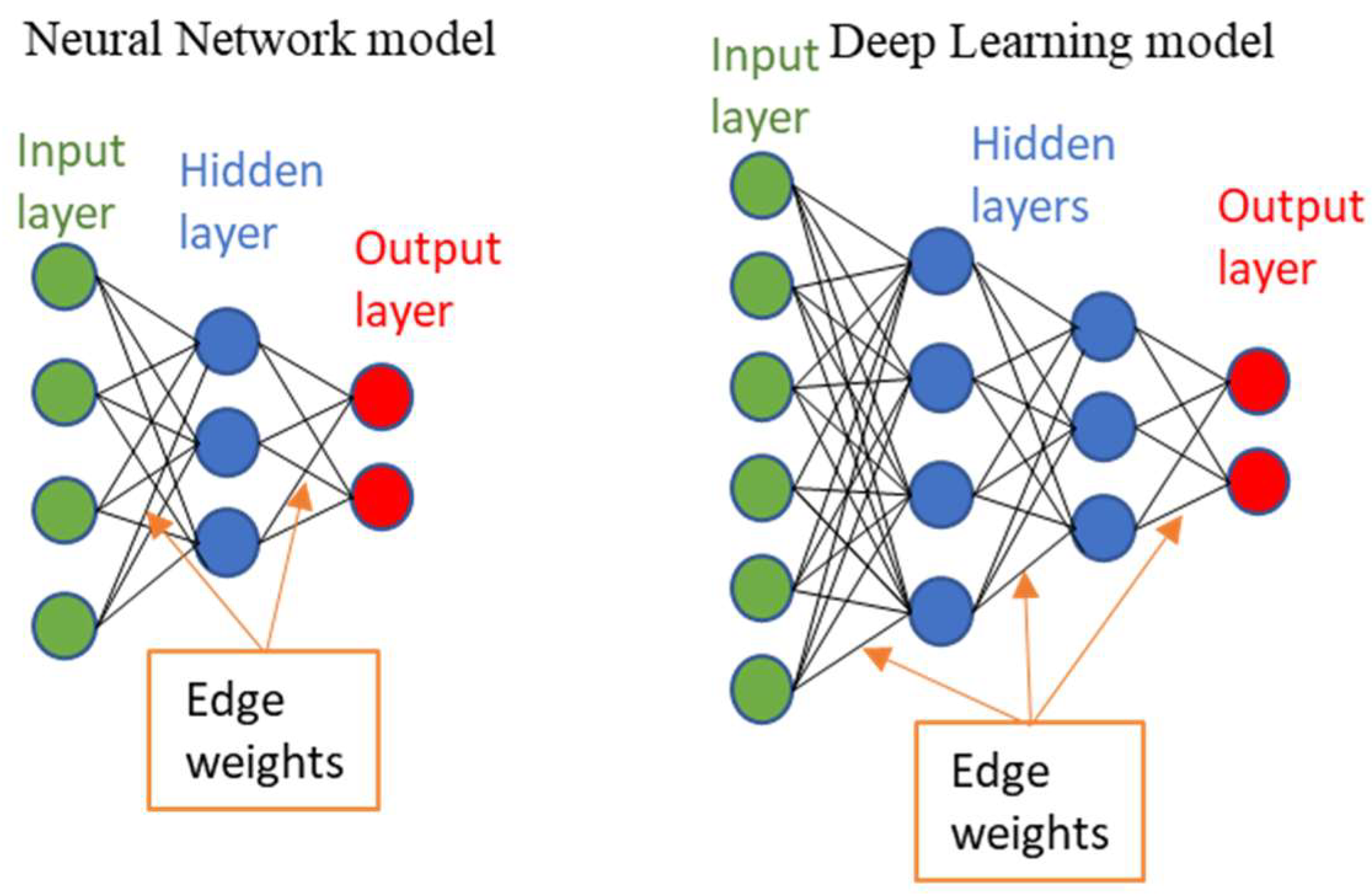

Inspired by the human brain’s neural structure, neural network (NN) and deep learning (DL) algorithms are based on multi-layered perceptrons. These algorithms contain an input layer, an output layer, and one or more hidden layers consisting of neurons, or perceptrons. The layers are connected through weighted edges, and the training process is based on backpropagation. The input can be any digitized real-world data, such as an image, voice, or text. During training, the edge weights, and hence the perceptron activations, are adjusted so that the output matches the training data more accurately. Neural networks can be applied in supervised, unsupervised, and reinforcement learning settings, and to clustering, regression, and classification tasks. The only difference between NN and DL is the number of hidden layers (Figure 3). A DL algorithm can contain several hidden layers, each of which helps to extract a specific feature from the input data.

Figure 3. Neural network and deep learning model format.
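How weighted edges and backpropagation interact can be sketched with a very small network: two inputs, one hidden layer of two sigmoid neurons, and one sigmoid output. The layer sizes, initial weights, learning rate, squared-error loss, and the omission of bias terms are all simplifying assumptions for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Return hidden activations and the network output (biases omitted)."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def train_step(x, target, w_hidden, w_out, lr=0.5):
    """One backpropagation update for the loss 0.5 * (output - target)**2."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output neuron (chain rule through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Error signal of each hidden neuron, propagated back through w_out.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    new_w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    new_w_hidden = [[w - lr * d * xi for w, xi in zip(row, x)]
                    for row, d in zip(w_hidden, delta_hidden)]
    return new_w_hidden, new_w_out


x, target = [1.0, 0.5], 1.0               # single hypothetical training example
w_hidden = [[0.1, -0.2], [0.4, 0.3]]      # arbitrary initial edge weights
w_out = [0.2, -0.1]

_, before = forward(x, w_hidden, w_out)
for _ in range(200):
    w_hidden, w_out = train_step(x, target, w_hidden, w_out)
_, after = forward(x, w_hidden, w_out)
print(before, after)  # the output moves toward the target of 1.0
```

Adding more rows of weights between the input and output layers would turn the same scheme into a deeper network; practical implementations rely on libraries that compute these gradients automatically.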

Feedforward neural networks (FNNs) send the data in one direction, from input to output. Recurrent neural networks (RNNs) are special NNs that receive a series as input and produce another series as output. In contrast to FNNs, RNNs have an internal circular structure (feedback loop) in which the output of one layer can be fed into the same layer again. Therefore, the output at state t depends on the input data at state t and the network status at state t-1. This property makes RNNs suitable for time-series and other temporal prediction. Convolutional neural networks (CNNs), another category of NNs, can extract features from multidimensional, grid-structured input such as an image. These networks apply several convolutional and pooling layers to extract the features of the input. This capability makes CNNs suitable for image and video recognition.
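Both ideas can be sketched in a few lines of plain Python. The first function is a single scalar recurrent unit whose state at step t depends on the input at t and the state at t-1; the other two implement a "valid" convolution (computed as cross-correlation, as is usual in CNN layers) and 2x2 max pooling. The weights, the tanh activation, the toy image, and the edge-detecting kernel are illustrative assumptions.

```python
import math


def rnn_states(inputs, w_in=0.5, w_rec=0.8, h0=0.0):
    """Scalar recurrent unit: h_t = tanh(w_in * x_t + w_rec * h_{t-1})."""
    h, states = h0, []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding (stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(len(image[0]) - kw + 1)]
            for r in range(len(image) - kh + 1)]


def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max pooling (assumes even height and width)."""
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, len(fmap[0]), 2)]
            for r in range(0, len(fmap), 2)]


# Same input at the second step, different history, different state.
print(rnn_states([0.0, 0.0])[1], rnn_states([1.0, 0.0])[1])

# 5x5 toy image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]            # responds to left-to-right increases
feature_map = conv2d_valid(image, kernel)
print(feature_map[0])                  # [0, 2, 0, 0]
print(max_pool_2x2(feature_map))       # [[2, 0], [2, 0]]
```

The recurrent unit shows why history matters for temporal prediction, while the feature map shows how a convolutional layer localizes a pattern that pooling then summarizes at lower resolution.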

1.3. Ensemble Algorithms

Ensemble algorithms, or multiple learning classifier algorithms, combine numerous learning algorithms to solve a single problem. They train several individual ML algorithms and then combine the results by applying specific strategies to improve accuracy. Homogeneous ensembles employ learners of the same ML algorithm type (weak classifiers), whereas heterogeneous ensembles contain learners of different algorithm types. Heterogeneous ensembles are generally considered stronger than homogeneous ones in generalization.

Boosting, a subcategory of ensemble ML algorithms, builds a robust classifier from several weak classifiers. The weak classifiers are trained in sequence, with each one giving more attention to the input data that the previous ones handled poorly, and their outputs are combined into the final result. Well-known algorithms in this category include adaptive boosting (AdaBoost), categorical boosting (CatBoost), light gradient-boosting machine (LightGBM), and extreme gradient boosting (XGBoost).
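As a minimal sketch of how boosting shifts attention toward poorly handled data, the following shows the sample reweighting performed in one round of AdaBoost. The labels, the weak classifier's predictions, and the function name are hypothetical; the step assumes a weighted error strictly between 0 and 1.

```python
import math


def adaboost_round(weights, labels, predictions):
    """One AdaBoost reweighting step for labels/predictions in {-1, +1}.

    Returns the weak classifier's vote (alpha) and the normalized new weights.
    """
    # Weighted error: total weight of the misclassified samples.
    error = sum(w for w, y, p in zip(weights, labels, predictions) if y != p)
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Misclassified samples (y * p == -1) are scaled up, the rest down.
    raw = [w * math.exp(-alpha * y * p)
           for w, y, p in zip(weights, labels, predictions)]
    total = sum(raw)
    return alpha, [r / total for r in raw]


labels = [+1, +1, -1, -1, +1]
predictions = [+1, +1, -1, -1, -1]   # hypothetical weak classifier; last one wrong
weights = [0.2] * 5                  # uniform starting weights
alpha, new_weights = adaboost_round(weights, labels, predictions)
print(alpha)        # about 0.693 (ln 2)
print(new_weights)  # about [0.125, 0.125, 0.125, 0.125, 0.5]
```

After the update, the single misclassified sample carries half of the total weight, so the next weak classifier is pushed to get it right. The final classifier combines all rounds by the sign of the alpha-weighted sum of predictions.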

Bagging (bootstrap aggregating) is another subcategory. It feeds each ensemble learner a separate sample of the input data, drawn at random with replacement, so that the trained weak learners are largely independent of each other. Random forest (RF) is a well-known classification and regression algorithm that builds several decision trees during training. Its final result is the most-voted class (classification) or the average (regression) of the decision trees’ outputs. Finally, in the stacking subcategory, the first-level learners are trained on the original training set, and their outputs form a new dataset on which second-level models are trained.
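The sampling-and-voting scheme behind bagging can be sketched as follows. To keep the example short, the base learner is a simple nearest-point rule standing in for the decision trees a random forest would use; that substitution, the toy data, the seed, and all names are assumptions made for illustration.

```python
import random
from collections import Counter


def bootstrap(data, rng):
    """Draw len(data) items from data at random, with replacement."""
    return [rng.choice(data) for _ in data]


def nearest_label(sample, x):
    """Stand-in base learner: label of the sample point closest to x."""
    return min(sample, key=lambda item: abs(item[0] - x))[1]


def bagging_predict(data, x, n_learners=25, seed=0):
    """Train each learner on its own bootstrap sample, then take a majority vote."""
    rng = random.Random(seed)
    votes = Counter(nearest_label(bootstrap(data, rng), x)
                    for _ in range(n_learners))
    return votes.most_common(1)[0][0]


# Hypothetical labeled data: (speed in km/h, traffic state)
data = [(12, "congested"), (18, "congested"), (25, "congested"),
        (41, "free-flow"), (55, "free-flow"), (63, "free-flow")]

print(bagging_predict(data, 15))  # congested
print(bagging_predict(data, 60))  # free-flow
```

For a regression task, the majority vote would be replaced by the average of the learners' numeric outputs.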

2. Surface Transportation Systems

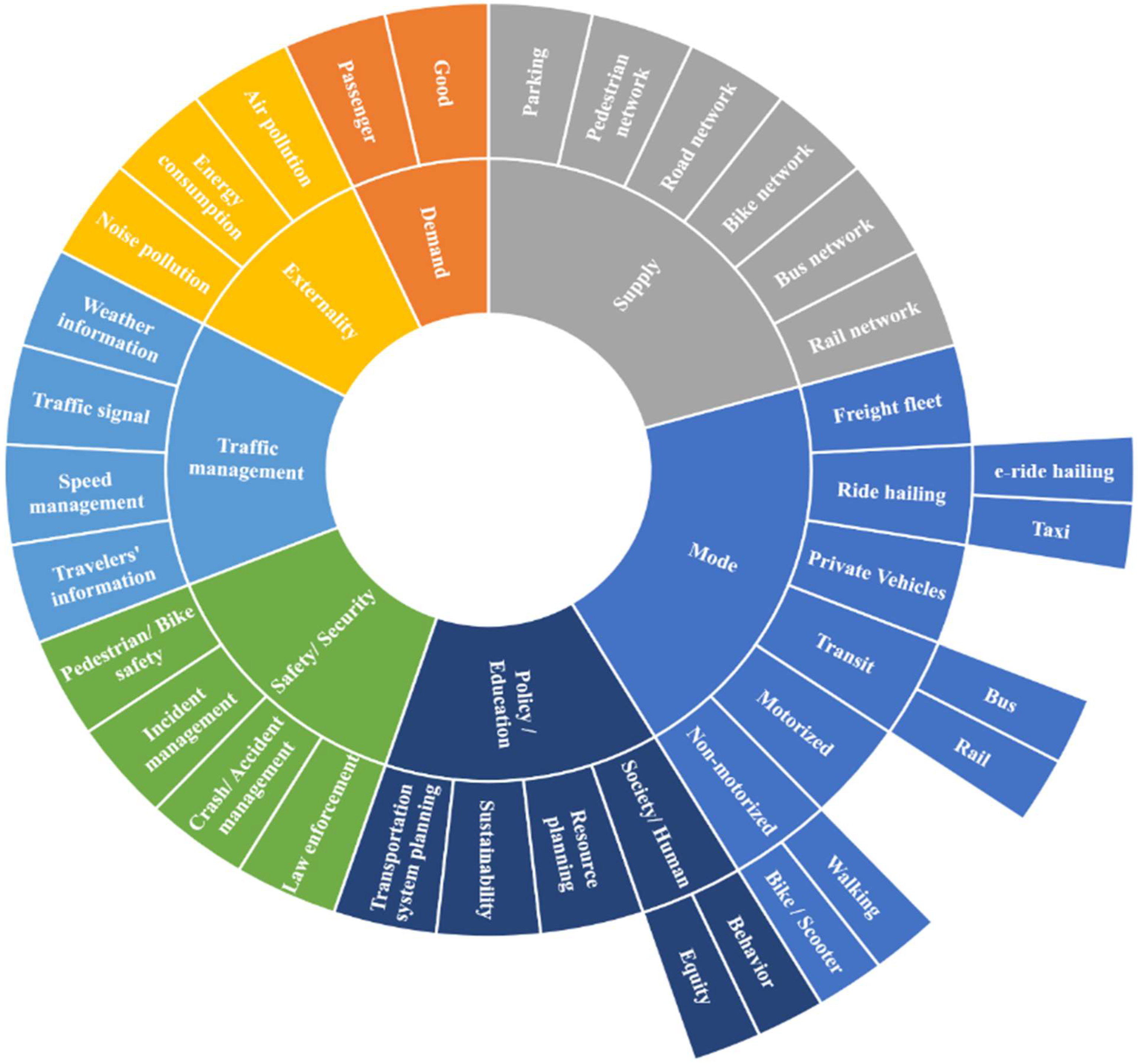

The overall dynamics of the surface transportation system (STS) component ecosystem are illustrated in Figure 4. The top categories include mode, policy/education, safety/security, traffic management, externality, demand, and supply. Each STS subcategory, independently or in combination with others, has been addressed by interdisciplinary knowledge and technology application areas aimed at performance improvement.

Figure 4. STS ecosystem component dynamics.

These knowledge and technology areas have approached mobility challenges and problems from various directions and have produced specific advancements. Figure 5 presents multiple aspects of these advancements and their subcategories impacting STSs. For instance, autonomous vehicle (AV) research and development by technology providers and academia has resulted in advanced technologies and enhancements for drivers and the automobile industry. These include automated parking technology, car-following and platooning technologies, adaptive cruise control, collision detection warning, lane change warning and lane-keeping systems, location-based and navigation systems, and dynamic routing services. Intelligent transportation systems, as a multidisciplinary knowledge area, have likewise produced significant accomplishments during the past three decades (e.g., adaptive traffic signal control, wrong-way driving detection, speeding detection, automatic license plate recognition systems, red-light surveillance sensors, and traffic simulations). Each subcategory has fulfilled or improved one or more surface transportation components. For instance, weather condition forecasting improves “weather information” in the “traffic management” component of the STSs.

Figure 5. Application areas in STSs.

References

1. Bailey, G.; Joffrion, A.; Pearson, M. A Comparison of Machine Learning Applications Across Professional Sectors; Social Science Research Network: Rochester, NY, USA, 2018.

2. Luckow, A.; Cook, M.; Ashcraft, N.; Weill, E.; Djerekarov, E.; Vorster, B. Deep Learning in the Automotive Industry: Applications and Tools. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 3759–3768.

3. Xin, Y.; Kong, L.; Liu, Z.; Chen, Y.; Li, Y.; Zhu, H.; Gao, M.; Hou, H.; Wang, C. Machine Learning and Deep Learning Methods for Cybersecurity. IEEE Access 2018, 6, 35365–35381.

4. Artificial Intelligence, Machine Learning, Automation, Robotics, Future of Work and Future of Humanity: A Review and Research Agenda; IGI Global. Available online: https://www.igi-global.com/article/artificial-intelligence-machine-learning-automation-robotics-future-of-work-and-future-of-humanity/230295 (accessed on 27 August 2021).

5. Pierson, H.A.; Gashler, M.S. Deep Learning in Robotics: A Review of Recent Research. Adv. Robot. 2017, 31, 821–835.

6. Zhou, Z.-H. Machine Learning; Springer: Singapore, 2021; ISBN 9789811519666.

7. Mitchell, T.M. Machine Learning; McGraw-Hill: New York, NY, USA, 1997; ISBN 978-0-07-115467-3.

8. Burkov, A. The Hundred-Page Machine Learning Book. Available online: https://books.google.com/books/about/The_Hundred_Page_Machine_Learning_Book.html?id=0jbxwQEACAAJ (accessed on 27 August 2021).

9. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction... Available online: https://books.google.com/books/about/The_Elements_of_Statistical_Learning.html?id=tVIjmNS3Ob8C (accessed on 27 August 2021).

10. Abu-Mostafa, Y.S.; Magdon-Ismail, M.; Lin, H.-T. Learning from Data: A Short Course; AMLBook: Pasadena, CA, USA, 2012; ISBN 978-1-60049-006-4.

11. Bayesian Reasoning and Machine Learning. Available online: https://www.google.com/books/edition/Bayesian_Reasoning_and_Machine_Learning/yxZtddB_Ob0C?hl=en&gbpv=1&dq=Bayesian+Reasoning+and+Machine+Learning&printsec=frontcover (accessed on 27 August 2021).

12. Chollet, F.; Allaire, J.J. Deep Learning with R; Manning Publications: Greenwich, CT, USA, 2018; ISBN 978-1-61729-554-6.

13. Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014; ISBN 978-1-139-95274-3.


Update Date: 23 Sep 2022
