1. Classical Image Compression Approaches

1.1. Methods Used in Medical Imaging and Telemedicine

Medical imaging and telemedicine are among the main application areas of image compression. Focusing on this domain, Dokur et al. [1] propose a framework for image compression and segmentation using artificial neural networks. The authors use two neural networks, a Kohonen map and an incremental self-organizing map (ISOM). First, the input image is divided into 8 × 8 pixel blocks, and the two-dimensional discrete cosine transform (DCT) coefficients are computed for each block. A low-pass filter is then applied to remove high frequencies that are not visible to the human eye. The output of the low-pass filter, called the codeword, serves as the input for training the neural network. Computer simulations were performed, and the compression ratio (CR) and mean squared error (MSE) were computed. These metrics show that the Kohonen map does not provide a satisfactory output: although its compression ratio is better than that of JPEG (31 compared to 22.94), its MSE is higher (139.91 compared to 102.32 for JPEG). Even though these initial results are modest, whole-image compression based on neural networks should not be ruled out as a direction for future work in this domain.
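The block-DCT and low-pass steps described above can be sketched as follows. This is a minimal illustration, not the implementation from [1]: the zonal mask (keeping coefficients with u + v < keep) and the `keep` parameter are assumptions, since the exact filter is not specified; the resulting codeword vectors are what would then be fed to the Kohonen map or ISOM.

```python
import math

N = 8  # block size used in [1]


def dct2(block):
    """Orthonormal 2D DCT-II of an N x N block (list of N lists of N numbers)."""
    def scale(k):
        return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)

    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            coeffs[u][v] = scale(u) * scale(v) * total
    return coeffs


def low_pass_codeword(coeffs, keep=3):
    """Assumed zonal low-pass filter: keep coefficients with u + v < keep
    (the low-frequency corner) and flatten them row by row into a codeword."""
    return [coeffs[u][v] for u in range(N) for v in range(N) if u + v < keep]


def split_into_blocks(img):
    """Yield the N x N blocks of an image whose sides are multiples of N."""
    for i in range(0, len(img), N):
        for j in range(0, len(img[0]), N):
            yield [row[j:j + N] for row in img[i:i + N]]
```

A whole image would be turned into training vectors with `[low_pass_codeword(dct2(b)) for b in split_into_blocks(img)]`. The direct quadruple loop is chosen for clarity; a practical implementation would use a fast DCT.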

Fast and safe transmission of images is vital in the medical domain, which is why compression methods must be applied. Lossy compression can achieve high compression ratios at the cost of image quality, whereas lossless compression retains image quality but achieves only modest compression ratios. Starting from these two types of compression, Punitha et al. [2] present a near-lossless compression method that achieves satisfactory results for both compression ratio and image quality. The proposed method uses sub-band thresholds to increase the number of zero coefficients, and run-length encoding (RLE) is used to compress the image while retaining valuable information and quality. The first step is a wavelet decomposition using the discrete wavelet transform (DWT) with the db1 wavelet. The coefficients are then separated into four sub-bands made up of approximation and detail coefficients. Next, the coefficients are thresholded to isolate the relevant information in the image, and the sub-bands are encoded using RLE, a lossless encoding mechanism. Finally, during decompression, the sub-bands are decoded and the image is reconstructed. CR, PSNR, time complexity, and BPP were used to evaluate the results, and the achieved performance was compared to other established, widely used compression techniques. The evaluation used 256 × 256 images with an 8 bit depth. The average CR was 5.67 and the average PSNR was 42.43 dB, meaning that, visually, the output image can be considered almost identical to the original. The main shortcoming of the method is that the reduction in image size is not significant.
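The decompose, threshold, and run-length-encode pipeline described above can be sketched for a single decomposition level. This is a minimal sketch under stated assumptions: one level of the orthonormal Haar (db1) transform, hard thresholding with a single fixed threshold `t` (the paper uses per-sub-band thresholds whose values are not given here), and RLE as `[value, run_length]` pairs.

```python
def haar_dwt2(img):
    """One-level 2D Haar (db1) DWT of an image with even height and width.
    Returns the approximation sub-band and three detail sub-bands."""
    h, w = len(img), len(img[0])
    ll, d_row, d_col, d_diag = [], [], [], []
    for i in range(0, h, 2):
        r_ll, r_row, r_col, r_diag = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            r_ll.append((a + b + c + d) / 2)    # approximation (low-low)
            r_row.append((a + b - c - d) / 2)   # top row minus bottom row
            r_col.append((a - b + c - d) / 2)   # left column minus right column
            r_diag.append((a - b - c + d) / 2)  # diagonal detail
        ll.append(r_ll)
        d_row.append(r_row)
        d_col.append(r_col)
        d_diag.append(r_diag)
    return ll, d_row, d_col, d_diag


def hard_threshold(band, t):
    """Zero out coefficients whose magnitude is below t (creates zero runs)."""
    return [[0 if abs(x) < t else x for x in row] for row in band]


def rle_encode(values):
    """Run-length encode a flat sequence into [value, run_length] pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return runs
```

On smooth image regions the detail sub-bands are mostly near zero, so thresholding turns them into long zero runs that RLE stores compactly; the thresholding step is what makes the scheme near-lossless rather than lossless.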

Vallathan et al. in paper

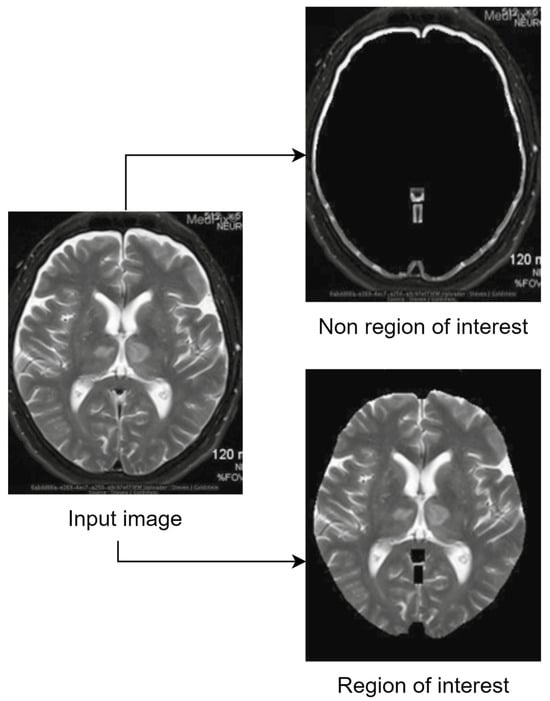

[3] focus on a lossless image compression that relies on hierarchical extrapolation using Haar transform and the embedded encoding technique. First, the color image is decomposed to Y,

C0, and Cg by applying color transform depicted in (16). The luminance component Y is processed using Haar transformation and then using SPIHT encoding [4]. When encoding the chrominance component, a hierarchical extrapolation scheme is used and then Huffman coding is applied. For the decompression part, SPIHT and Huffman decoding are applied, and the image is reconstructed. The utilized compression techniques show potential when analyzed using different relevant metrics such as PSNR, CR, or MSE. The results are compared to JPEG2000.

where R is the red component, G is the green component, and B is the blue component of a pixel.
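Because the color transform appears only as an image, the exact form used in [3] cannot be confirmed here. As an assumption, the sketch below uses the reversible integer YCoCg-R lifting variant, a common choice for lossless coding because every step can be undone exactly on integers; it illustrates why such a transform suits a lossless pipeline, not necessarily the equation in [3].

```python
def rgb_to_ycocg_r(r, g, b):
    """Forward reversible YCoCg-R lifting transform on integers (assumed variant)."""
    co = r - b
    t = b + (co >> 1)   # >> is an arithmetic (floor) shift, also for negative ints
    cg = g - t
    y = t + (cg >> 1)
    return y, co, cg


def ycocg_r_to_rgb(y, co, cg):
    """Exact inverse: undo the lifting steps in reverse order."""
    t = y - (cg >> 1)
    g = cg + t
    b = t - (co >> 1)
    r = b + co
    return r, g, b
```

Note that Co and Cg need one more bit than the 8 bit inputs (they can be negative), which the subsequent entropy coder has to accommodate.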

Images are susceptible to data loss and interference during compression; therefore, Nemirovsky-Rotman et al. [5] proposed a combined compression and speckle-denoising method. The algorithm is based on optimizing the quantization coefficients of a wavelet representation of the input image. Noise reduction is an important part of the method, the objective being to reduce the effective speckle while preserving the edges in the image. Experiments showed that the algorithm can simultaneously compress and despeckle images by optimizing the quantization coefficients with respect to an a priori version of the image. Several metrics were used to demonstrate the value of the method, and a comparison with JPEG2000 was also discussed; the average PSNR was 33.7 dB at a CR of around 25. These results show that the size reduction can be satisfactory, but the image quality may be too degraded for certain uses.

Long-term storage of images is a necessity nowadays, but it is also a growing challenge, since storage capacity is limited and any increase in capacity incurs extra costs. To address this storage issue, Ammah et al. [6] propose a DWT-VQ (discrete wavelet transform–vector quantization) method that compresses images while preserving their quality. The technique also aims to reduce speckle and salt-and-pepper noise while preserving the edges of the input image. DWT filtering is applied to the image, and a thresholding approach is then used to generate coefficients. The result is vector quantized, and Huffman encoding is applied. The best results were achieved when two levels of DWT were applied before the quantization process. When the image is needed, it is reconstructed using the Huffman decoder, the VQ decoder, and the inverse DWT. The proposed solution was compared to existing methods from the literature using the PSNR, SSIM, CR, and MSE metrics. The results are promising: PSNR values are around 43 dB and CR values around 90.
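The final entropy-coding stage of this pipeline (Huffman coding of the quantized symbols) can be sketched independently of the DWT and VQ stages. This is a minimal textbook Huffman coder, assuming the input is a list of vector-quantization codebook indices; the DWT, thresholding, and codebook design of [6] are not modeled.

```python
import heapq
from collections import Counter


def huffman_code(symbols):
    """Build a prefix-free Huffman code table {symbol: bitstring}."""
    freq = Counter(symbols)
    if not freq:
        return {}
    if len(freq) == 1:  # degenerate case: a single distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: partial code}).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        w1, _, c1 = heapq.heappop(heap)  # two least frequent subtrees
        w2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + code for s, code in c1.items()}
        merged.update({s: "1" + code for s, code in c2.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]


def huffman_encode(symbols, table):
    return "".join(table[s] for s in symbols)


def huffman_decode(bits, table):
    """Greedy decoding works because the code is prefix-free."""
    inverse = {code: s for s, code in table.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return out
```

Frequent indices receive short codes, so a skewed index distribution (typical after VQ of smooth wavelet sub-bands) compresses below the fixed-length cost.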

Oral medicine is one clinical area in which scans are usually required; in certain situations, stomatologists use dental images to better plan an intervention. As in the cases mentioned before, efficient storage (achieving a high CR) and transmission of the information contained in images is an issue. Because dental images are analyzed by specialists, it is critical to retain useful diagnostic information (for example, by keeping a high PSNR value) while avoiding visible distortions caused by the applied compression technique. Starting from the image's discrete cosine transform (DCT) and partition-scheme optimization, Krivenko et al. [7] developed a noniterative approach to visually lossless compression of dental images. The authors examined and exploited the relationships between the quantization step (QS), which controls the compression ratio, and three quality metrics: PSNR, PSNR using the Human Visual System and Masking (PSNR-HVS-M), and feature similarity (FSIM). Distortion-visibility-threshold values for these metrics were considered, making sure that any detectable change in noise intensity was accounted for in the QS configuration. To achieve this, an advanced DCT coder available online was used. Tests were performed on 12 dental images of 512 × 512 pixels. As expected, CR and PSNR depended monotonically on the quantization step: increasing QS increased the compression ratio but decreased PSNR. The results may vary drastically depending on the input image: for the same QS of 10, the CR ranged from 3 to 8, while for a QS of 20 it ranged from 12 to 78, with PSNR between 37.5 dB and 52.5 dB. A wrong choice of QS may therefore lead to unexpected results and loss of data. Further testing and analysis are thus necessary to define a set of image properties that yield predictable results for a given quantization step. With the right QS value, an expert's visual comparison of the original and compressed images should conclude that the introduced distortions are undetectable.
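Why PSNR falls monotonically as QS grows can be illustrated with uniform scalar quantization and the standard PSNR definition for 8 bit data. This is a simplified sketch: it quantizes pixel values directly instead of DCT coefficients as in the coder used in [7], and it does not model CR, which depends on the entropy coder.

```python
import math


def quantize(pixels, qs):
    """Uniform scalar quantization with step qs, returning dequantized values
    (round half up, so each value maps to the nearest multiple of qs)."""
    return [qs * math.floor(p / qs + 0.5) for p in pixels]


def psnr(original, reconstructed, peak=255):
    """Peak signal-to-noise ratio in dB (peak = 255 for 8 bit data)."""
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


pixels = list(range(256))  # a toy 8 bit ramp standing in for an image
for qs in (2, 5, 10, 20, 40):
    print(qs, round(psnr(pixels, quantize(pixels, qs)), 2))
```

For roughly uniform quantization errors the MSE grows approximately as QS²/12, so each doubling of QS costs about 6 dB of PSNR, while coarser steps produce fewer distinct values and hence better compression.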

In software-based ultrasound-imaging systems, the high data rate required for data transfer is a major challenge, especially for real-time imaging. In [9], Kim et al. use a Binary cLuster (BL) code that yields good compression. The starting point of the proposed method is the conventional exponential Golomb code. The aim is to compress arbitrary integers in real time without any overhead data during encoding and decoding; the specific steps of each operation are presented in the paper. Reducing the prefix size improved the compression ratio, with recorded values of around 40%, and in every tested scenario the CR was better than with the Golomb code. On average, encoding took 0.15 s longer than with the Golomb code, while decoding took 0.76 s less, so the average time for the whole encoding and decoding process was reduced by 0.61 s.
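For reference, the baseline that the BL code starts from, the order-0 exponential Golomb code, can be written in a few lines. The BL code's own prefix-reduction changes are not reproduced here, since the text does not describe them; this sketch only shows the conventional code it is compared against.

```python
def exp_golomb_encode(n):
    """Order-0 exponential Golomb codeword for a non-negative integer n."""
    if n < 0:
        raise ValueError("n must be non-negative")
    binary = bin(n + 1)[2:]                   # binary of n + 1 without '0b'
    return "0" * (len(binary) - 1) + binary   # zero prefix, then the info bits


def exp_golomb_decode(bits):
    """Decode a concatenation of order-0 exponential Golomb codewords."""
    values, i = [], 0
    while i < len(bits):
        zeros = 0
        while bits[i] == "0":   # the prefix length gives the codeword length
            zeros += 1
            i += 1
        values.append(int(bits[i:i + zeros + 1], 2) - 1)
        i += zeros + 1
    return values
```

The prefix takes roughly half of each codeword's bits, which is why shrinking it, as the BL code does, can improve the compression ratio. Signed RF samples would first have to be mapped to non-negative integers; whether and how [9] does this is not stated.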

Certain real-time services require very fast image transfer to a host, and the required high transfer rate can be an issue. In [10], Cheng et al. use MPEG technology to increase the compression ratio for ultrasound RF data. The images were encoded and decoded using MPEG freeware, with the constant rate factor (CRF) used for rate control during encoding. A higher CRF value means coarser quantization, so the frames are encoded into a smaller size; setting the CRF to 0 results in lossless compression. The aim was to increase the compression ratio, and thus reduce the data rate, for real-time applications. The best CR recorded was 7.7.

Focusing on real-time medical processes, Mansour et al. [11] propose an algorithm for lossless compression of ultrasound sensor data. The method operates on the transducer's RF data after the ADC (analog-to-digital converter) and before the receiver beamformer. The algorithm significantly reduces the data throughput by exploiting the redundancy in the transducer data. Lossless compression of these data reduces costs and simplifies the interfaces used for digital signal processing. The idea is to exploit the high correlation between the data of adjacent transducers to significantly reduce the energy on each line and to encode the residual data instead of the original high-energy data. Compression ratios of up to 3.59 were obtained.
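The idea of encoding low-energy residuals between adjacent transducer channels can be illustrated with the simplest possible predictor: each channel is predicted by its neighbor. This is a hypothetical sketch; the actual predictor and entropy coder in [11] are not described in the text, and `inter_channel_residuals` and `reconstruct` are illustrative names.

```python
def inter_channel_residuals(channels):
    """Keep the first channel as is and replace each following channel by its
    sample-wise difference from the previous (adjacent) channel."""
    residuals = [list(channels[0])]
    for prev, cur in zip(channels, channels[1:]):
        residuals.append([c - p for c, p in zip(cur, prev)])
    return residuals


def reconstruct(residuals):
    """Invert inter_channel_residuals exactly (integer arithmetic, lossless)."""
    channels = [list(residuals[0])]
    for res in residuals[1:]:
        channels.append([p + r for p, r in zip(channels[-1], res)])
    return channels


def energy(x):
    return sum(v * v for v in x)
```

When adjacent channels are highly correlated, the residuals have far lower energy (smaller magnitudes) than the raw samples, so an entropy coder needs fewer bits for them, and the operation remains exactly invertible on integer ADC data.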

1.2. Methods Used for Spatial Images and Remote-Sensing Images

Because of hackers' increasing skills and the large volume of sensitive data circulating through digital channels, protecting remote-sensing images during data transfer is crucial. Joint image compression and encryption approaches aim to achieve high transmission reliability while keeping implementation costs low. Existing solutions for multiband remote-sensing images usually have drawbacks such as long preprocessing times, lack of support for images with a high number of bands, and insufficient security. To address these issues, Cao et al. [12] propose a multiband remote-sensing image joint encryption and compression algorithm (JECA), which consists of a preprocessing-encryption phase, a crypto-compression phase, and a decoding phase. In the preprocessing stage, all bands are received and combined into a grayscale image. The grayscale image is divided into blocks, and the discrete cosine transform (DCT) is applied to each block. The DCT yields the DC coefficient (the low-frequency component of the block, usually a large value) and the AC coefficients (the higher-frequency components, usually small values), which are encrypted in the second stage. In the final stage, the reverse processes are performed to recover the grayscale image: the DC and AC coefficients are decrypted, and the blocks are restored and merged into a grayscale image. Finally, postprocessing is applied to the grayscale image to recreate the remote-sensing image. Experiments on a multispectral image dataset with 14 bands show that JECA halves the sender's preprocessing time compared to existing joint encryption and compression methods based on the encryption-then-compression principle. JECA also improves security, particularly in terms of visual security and key sensitivity, while retaining the same compression ratio as existing methods, and its compression efficiency is comparable to that of JPEG and similar algorithms. Increasing the number of bands was also observed not to affect performance. For the reconstructed image, the SSIM was close to 0.93, and the remote-sensing image-file size was reduced to about 5% of the original, corresponding to a CR of 20. The PSNR values obtained for the encrypted images are very low, meaning that they are highly distorted. Unfortunately, the PSNR values for the reconstructed images were not available.

1.3. Methods Used in Automotive

Until recently, vehicles did not rely on images when making decisions; distance sensors such as ultrasound, LIDAR, or radar have been widely used in obstacle-detection and obstacle-avoidance features. For autonomous vehicles, however, cameras are a very important part of the perception system. Transmitting large images can be a major challenge and a bottleneck for certain memories and processors, so modern encoder/decoder mechanisms are required for data transfer inside the vehicle. Computational time is a key aspect, because encoding, transmitting, and decoding an image must together be faster than transmitting the raw image directly. Löhdefink et al. [13] present an approach based on generative adversarial networks (GANs), in which compression and decompression are performed by an autoencoder. The authors investigated several scenarios and, as expected, compression at higher bit rates gave better metric values. An interesting result was that, in the low-bit-rate regime, semantic segmentation performed better on their reconstructions than on JPEG2000 reconstructions, even though JPEG2000 achieved a better PSNR. The method therefore has the potential to yield high semantic-segmentation performance even if its reconstruction of the original image, with a PSNR of 27.73 dB, is not as good as that of conventional methods.

1.4. Methods Not Targeting a Specific Domain

Several papers do not focus on a particular domain and target a more general area of use; the authors usually test their algorithms on standard test images. For example, in [14], Wei et al. propose a multi-image compression–encryption algorithm based on two-dimensional compressed sensing (2D CS) and optical encryption to achieve large-capacity, fast, and secure image transmission. First, the authors design a new structured measurement matrix and apply compressed sensing to compress and encrypt multiple images at once. The images are then encrypted a second time using double-random-phase encoding based on the multi-parameter fractional quaternion Fourier transform, which enhances their security. Additionally, a fractional-order chaotic system built for encryption and image compression exhibits more intricate chaotic behavior. The algorithms for 2D-CS-based image encryption and for image compression and decompression are presented in the paper and illustrated with flowcharts. According to the experimental results, the algorithm exhibits strong security and robustness. The test images include well-known 512 × 512 color images (Lena, Peppers, Lake, and Airplane) and 256 × 256 grayscale images (Lena and Cameraman). The average CR is 2, and the average PSNR is 35.59 dB.
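The measurement step of 2D compressed sensing, which both compresses and scrambles the image, can be sketched as Y = Φ X Φᵀ. This is an illustrative sketch only: a seeded Gaussian matrix stands in for the structured measurement matrix of [14], the integer seed (`key`) plays the role that the chaotic-system parameters play in [14], and reconstruction (which requires a sparse-recovery solver) is not shown.

```python
import random


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def measurement_matrix(m, n, key):
    """Hypothetical key-driven m x n Gaussian measurement matrix (m < n)."""
    rng = random.Random(key)
    return [[rng.gauss(0.0, 1.0 / m ** 0.5) for _ in range(n)] for _ in range(m)]


def cs_2d_measure(x, m, key):
    """2D compressed-sensing measurement Y = Phi X Phi^T of an n x n image X."""
    phi = measurement_matrix(m, len(x), key)
    return matmul(matmul(phi, x), transpose(phi))
```

An n × n image becomes an m × m measurement, so the sampling ratio is (m/n)²; without the key, the matrix Φ (and therefore the image) cannot be recovered from Y, which is the sense in which the measurement also acts as a first encryption layer.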

2. Hybrid Image-Compression Approaches

Classical lossy and lossless methods take the whole input image and compress it. However, often only parts of an image contain useful information; therefore, in recent years, contextual or region-of-interest (ROI) approaches have gained visibility. The principle is simple: a region of the whole image is isolated and considered of high interest. The ROI can be defined in multiple ways, either manually or automatically. This chapter presents different ROI-selection methods: mathematical, mask-based, segmentation-based, interactive, region-growing, and neural-network-based. The choice of the ROI is very important, because metrics such as the compression ratio and the computational time depend on its size. Once the ROI and the region of non-interest (RONI) are defined, the task is to choose filtering and compression methods that preserve the details inside the important parts and save space by sacrificing quality in the unimportant area.

This chapter presents state-of-the-art ROI-based compression and decompression methods from the last decade. The general approach of each method is discussed alongside relevant quality metrics, and the results are compared to existing methods that can be considered proper references. The chapter is divided into subchapters according to the domain in which the described algorithms were applied.

2.1. Methods Used in Medical Imaging and Telemedicine

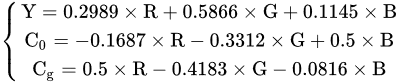

The idea behind ROI-based techniques is summarized graphically by the example in Figure 1.

Figure 1. Example of how an ROI-based algorithm works; image based on [15].

Ansari and Anand [16] present a scheme for context-based encoding and decoding that aims to achieve better compression ratios while maintaining the quality of the region of interest. The contextual region of interest (CROI) is defined as the area containing the most vital information that must be preserved. Following this approach, the image is split into two parts: the ROI, which is encoded with a very low compression ratio, and the background, which is encoded with a high compression ratio. The ROI is identified using segmentation and interactive methods that generate a mask describing the areas that must be encoded with higher quality. The compression method is based on contextual set partitioning in hierarchical trees and on a segmentation method for selecting the CROI mask. The main idea is that all pixels belonging to the same region are grouped into trees. The authors also propose a 17-step algorithm for obtaining the compressed images. The results were analyzed using the CR, MSE, PSNR, and CoC metrics, and the compressed images were compared with those of existing methods such as JPEG, JPEG2000, Scaling, Maxshift, Implicit, and EBCOT. Ansari continues this work in a subsequent paper [17], where several selection methods for the contextual region of interest are presented: a mathematical approach, a segmentation approach, an interactive approach, and the generation of an ROI mask. The proposed contextual set partitioning in hierarchical trees (CSPIHT) algorithm, now extended to 20 steps, is applied after the CROI is separated from the background. The authors achieved compression ratios from 10:1 to 256:1 and bit rates from 1.0 to 0.03125 bpp with CSPIHT. For testing purposes, an 8 bit image of size 667 × 505 was considered. Compression performance parameters such as BPP, CR, MSE, PSNR, and CoC were calculated for CSPIHT. The average PSNR for the ROI area was 36.54 dB, with a CoC of 0.983.
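The core ROI principle (fine coding inside the mask, coarse coding in the background) can be shown with a toy example. This is a hypothetical sketch: uniform quantization with two step sizes stands in for the CSPIHT coder of [16,17], the binary mask would in practice come from segmentation or user interaction, and the step sizes are illustrative.

```python
import math


def roi_quantize(img, mask, qs_roi, qs_bg):
    """Quantize pixels with a fine step inside the ROI (mask value 1) and a
    coarse step in the background (mask value 0)."""
    out = []
    for row, mask_row in zip(img, mask):
        new_row = []
        for p, m in zip(row, mask_row):
            qs = qs_roi if m else qs_bg
            new_row.append(qs * math.floor(p / qs + 0.5))  # nearest multiple of qs
        out.append(new_row)
    return out
```

With `qs_roi=1` and integer pixels, the ROI is reproduced exactly (lossless), while the background keeps only every 16th level for `qs_bg=16`, which is where the bit savings come from.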

Hierarchical lossless image compression aims to improve accuracy, reduce the bit rate, and increase compression efficiency to improve image storage and transmission. Because usually only part of the image is of high interest, Sumalatha et al. [18] focus on maintaining the quality of a defined contextual region. In their work, the ROI is encoded with the adaptive multiwavelet transform (AMWT) using multidimensional layered zero coding (MLZC). As preprocessing, a spatial adaptive mask filter is applied to reduce noise. The ROI is then extracted and encoded using contextual MLZC with a high number of bits per pixel and a low compression ratio, while the RONI is encoded with a low number of bits per pixel and a high compression ratio. Finally, the ROI and the background are merged. The AMWT filter coefficients are derived using an adaptive lifting scheme whose predictor was adjusted to compute the current pixel from two previous values; this predictor reduces the computational complexity. Experiments were performed on an 8 bit image, analyzing the usual metrics (PSNR, RMSE, CR, MAE, CC, and MSSIM), and the proposed method was compared with existing methods. The compression ratio ranges from 1.23 to 17.23, with PSNR values from 29.99 dB to 43.25 dB.

Devadoss et al. [19] propose an image-compression model that uses block BWT-MTF with Huffman encoding alongside hybrid fractal encoding. The same approach to defining a region of interest is used here: the critical zone is separated from the non-critical regions, block-based Burrows–Wheeler compression is applied to the ROI, and the rest of the image is encoded using a hybrid fractal encoding technique. The output image is reconstructed by merging the two zones. The Burrows–Wheeler transform (BWT) groups similar repeated elements by sorting the input data, which makes the subsequent compression more efficient. The move-to-front transform (MTF) converts the local context into a global context by assigning indexes to symbols, with more frequent symbols receiving smaller indexes. The ROI is separated from the remaining data using morphological segmentation. First, the input image is converted to grayscale, and structuring elements are defined. Morphological operators such as erosion and dilation are then applied to the image with the specified structuring element. In the resulting binary image, pixels with a value of one are replaced with the original pixel values from the input image, yielding the ROI component. To obtain the NROI component, the binary image is inverted, and the pixels that were zero in the binary image are replaced with the original pixel values. Because the compression algorithm has a high computational complexity, the ROI is divided into smaller blocks (128 × 128, 64 × 64, 32 × 32, 16 × 16, and 8 × 8). The performance of the proposed algorithm was evaluated using CR, space saving, time consumption, and PSNR, and compared with conventional methods. Over multiple tests with different bpp values, the average PSNR was 34.42 dB and the average CR was 11.67.
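The BWT and MTF stages described above can be illustrated with textbook implementations. This is a minimal sketch: it works on strings, uses a naive sort of all rotations (quadratic memory, for illustration only; practical coders use suffix arrays), and assumes a unique end-of-string marker `"\0"`; the block partitioning and Huffman stage of [19] are not shown.

```python
def bwt(data, eos="\0"):
    """Naive Burrows-Wheeler transform: last column of the sorted rotations."""
    s = data + eos
    rotations = sorted(s[i:] + s[:i] for i in range(len(s)))
    return "".join(rot[-1] for rot in rotations)


def inverse_bwt(last, eos="\0"):
    """Naive inverse: repeatedly prepend the last column and re-sort."""
    table = [""] * len(last)
    for _ in range(len(last)):
        table = sorted(last[i] + table[i] for i in range(len(last)))
    row = next(r for r in table if r.endswith(eos))
    return row[:-1]


def mtf(data, alphabet):
    """Move-to-front: emit each symbol's current index, then move it to the front."""
    table = list(alphabet)
    out = []
    for ch in data:
        idx = table.index(ch)
        out.append(idx)
        table.insert(0, table.pop(idx))
    return out
```

BWT clusters identical symbols together, and MTF then turns those clusters into runs of small indexes (mostly zeros), which a Huffman coder can represent with very short codewords.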

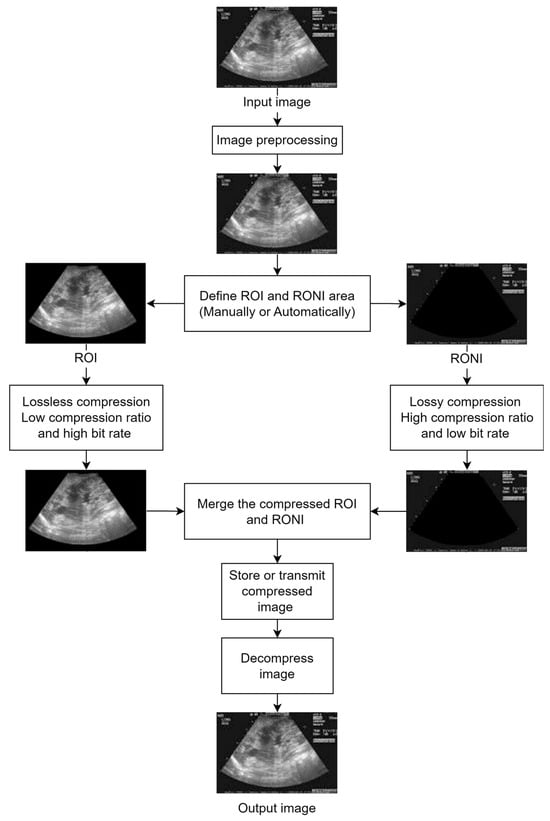

Figure 2 shows another example of how the output of an ROI and RONI separation algorithm should look.

Figure 2. Example of separating the input image into ROI and RONI; image based on [19].

In the medical field, region-of-interest-based coding techniques have become increasingly important because of their ability to compress and transmit data efficiently. Kaur et al. [20] first preprocess the images and then separate them into ROI and non-ROI segments using segmentation. Finally, compression is applied to reduce network bandwidth and storage requirements. The paper proposes two efficient compression methods, fractal lossy compression for the non-ROI part and lossless context tree weighting for the ROI part, and compares them with other methods, including scalable RBC and the integer wavelet transform (IWT). Compared with these earlier techniques, the proposed strategies achieve a lower mean squared error, a higher PSNR, and a higher compression ratio. The average CR is 89.6, and the average PSNR is 55.2 dB.

Fahrni et al. [21] developed a novel approach to medical-image compression that offers an optimal trade-off between compression efficiency and image quality. The approach relies on several contexts and ROIs, determined by the level of clinical interest. Primary ROIs, or high-priority areas, are compressed losslessly, whereas the background and secondary ROIs are compressed with moderate to severe losses. The authors suggest dividing images into three regions: the primary region of interest (PROI), the secondary region of interest (SROI), and the background (NROI or RONI). The PROI can be defined manually by the radiologist or automatically using computer-aided segmentation (CAS) [22]. Since the PROI holds the most important information, it is best to keep this area intact: it undergoes lossless compression, which yields a small size reduction but guarantees that the exam's most important data are fully retained. The SROI contains regions of lesser clinical interest that can be compressed with a moderate loss of visual quality, and the remainder of the image, which has no real significance, forms the background. Two methods were proposed: one with a variable bit rate (MVAR) and one with a fixed bit rate (MFIX). The MVAR method obtained PSNR values above 40 dB and an average MOS of 5 (excellent). In the tests, the images were stored in DICOM format with a bit depth of 16 bpp and an in-plane resolution of 512 × 512 pixels. With a 9:1 compression ratio relative to the original, uncompressed images, the average compressed-image size was up to 61% smaller than with standard compression techniques such as JPEG2000. Another benefit of the approach is that it is not tied to a particular compression engine; in other words, it can be applied to any ROI-based compression scheme. The multi-level compression idea could be developed further in the future, with automatic segmentation of predefined PROIs, SROIs, and background, to achieve even better compression ratios while maintaining satisfactory image quality, especially given the ongoing development of artificial intelligence (AI)-based methods [23].

2.2. Image Watermarking in the Medical Field

In some domains, images contain sensitive diagnostic information that must be protected from any risk of tampering. Therefore, authentication methods that ensure image security without affecting image quality have been introduced alongside the compression process. With this purpose, Badshah et al. [24] focus on lossless compression of an image's ROI and on watermarking using different techniques. The Lempel–Ziv–Welch (LZW) technique stands out, with a compression ratio of 13, which is better than PNG, GIF, JPG, and JPEG2000. LZW preserves the ROI unchanged while reducing the payload embedded in the image. LZW is a dictionary-based lossless compression approach that can be applied to both images and text. An initial image of 480 × 640 pixels was used, and an ROI of 100 × 200 pixels was defined using image segmentation; generally, the central part of the image can be defined as the ROI, since it is the most informative zone. The ROI is then used as the watermark in the watermarking process. Choosing a larger ROI can increase the LZW compression ratio: for the experiments, the ROI was converted into binary values (zeros and ones), and each binary-converted pixel value was added to a file. Binary sequences repeat within this file, larger ROIs contain more repeating sequences, and higher repetition counts increase the compression ratio. The authors saw the benefits of LZW-based watermarking and developed a more robust approach in their next paper [25].
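A textbook LZW coder makes the dictionary-based behavior described above concrete: repeated byte sequences (such as the repeated binary patterns of a binarized ROI) are replaced by single dictionary codes. This sketch assumes a 256-entry initial byte dictionary, unbounded dictionary growth, and codes returned as Python integers without bit packing; it is not necessarily the exact LZW variant used in [24,25].

```python
def lzw_compress(data):
    """LZW-compress a bytes object into a list of integer codes."""
    dictionary = {bytes([i]): i for i in range(256)}
    w = b""
    codes = []
    for byte in data:
        wc = w + bytes([byte])
        if wc in dictionary:
            w = wc                          # keep extending the current phrase
        else:
            codes.append(dictionary[w])     # emit the longest known phrase
            dictionary[wc] = len(dictionary)
            w = bytes([byte])
    if w:
        codes.append(dictionary[w])
    return codes


def lzw_decompress(codes):
    """Invert lzw_compress by rebuilding the same dictionary on the fly."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    w = dictionary[codes[0]]
    out = [w]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == len(dictionary):       # phrase defined by this very step
            entry = w + w[:1]
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        dictionary[len(dictionary)] = w + entry[:1]
        w = entry
    return b"".join(out)
```

Because the decoder reconstructs the dictionary itself, no dictionary has to be embedded alongside the watermark, and the more repetitive the input, the fewer codes are emitted.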

The work presented in [25] focuses on watermarking images to authenticate them and detect illegal changes. Digital watermarking refers to embedding relevant information into an image for copyright protection, recovery, and authentication. To maintain image quality, the watermark is compressed losslessly using Lempel–Ziv–Welch (LZW) compression, a dictionary-based technique that can be used for both text and images. Here, the watermark is a combination of the defined region of interest and an applied secret key. In each image, a 100 × 100 pixel segment was selected as the ROI, and a secret key was generated and applied to obtain the watermark. The watermark was compressed with LZW and placed in the RONI portion of the image. The compression ratio achieved with LZW was compared with that of other available formats, and LZW obtained the best results, with an average CR of 18.5. The average PSNR of the watermarked image is around 54 dB. According to the authors, if LZW is applied repeatedly, the entire watermark can theoretically be reduced to two binary symbols, a zero and a one, and adding such a two-bit watermark to an image would permanently solve the image-deterioration and watermark-accommodation issues. To verify the authenticity of the ROI, the secret key used before watermarking is compared with the key obtained after decompression. If the keys do not match, tamper localization and lossless recovery are required; if they match, the ROI is genuine, and the image can be used for further research.

Watermarking an image is a common process, because some sensitive information needs to be protected against illegal alterations. Haddad et al. [26] present a watermarking scheme based on the lossless compression standard JPEG-LS. The novelty is that security services can be accessed without decompressing the image. JPEG-LS is a lossless and near-lossless compression standard developed by ISO/IEC JTC 1 [27] to provide a low-complexity image-compression algorithm. It relies on the LOCO-I algorithm (LOw COmplexity LOssless COmpression for Images) [28], which is based on pixel prediction using a contextual statistical model. The PSNR values are greater than 40 dB (up to 50.5 dB), and the smallest embedding capacity achieved is 0.03 bits per pixel. The visual-saliency-based index (VSI) is between 0.94 and 0.99, depending on the capacity (bits per pixel). Although embedding alters some of the image information, the visual quality of the watermarked region is almost identical to the original. The experiments were conducted on a dataset of 60 images of 576 × 690 pixels at 8 bits per pixel.
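The pixel prediction at the heart of LOCO-I is the median edge detector (MED), which picks the left or upper neighbor near edges and a planar estimate in smooth areas. The sketch below shows only this predictor and the resulting residuals; context modeling, bias correction, Golomb coding, and the watermark embedding of [26] are not modeled, and treating out-of-image neighbors as 0 is a simplification (JPEG-LS defines its own border rules).

```python
def med_predict(a, b, c):
    """LOCO-I median edge detector.
    a = left neighbor, b = upper neighbor, c = upper-left neighbor."""
    if c >= max(a, b):
        return min(a, b)
    if c <= min(a, b):
        return max(a, b)
    return a + b - c   # smooth region: planar prediction


def med_residuals(img):
    """Prediction residuals for a grayscale image given as a list of rows."""
    res = []
    for y in range(len(img)):
        row = []
        for x in range(len(img[0])):
            a = img[y][x - 1] if x > 0 else 0
            b = img[y - 1][x] if y > 0 else 0
            c = img[y - 1][x - 1] if x > 0 and y > 0 else 0
            row.append(img[y][x] - med_predict(a, b, c))
        res.append(row)
    return res
```

Across a sharp vertical edge, the MED selects the upper neighbor and the residual stays at zero, so the residual image is far more compressible than the pixels themselves.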

To precisely recover tampered medical images, Liew et al. [29] proposed the tamper-localization and lossless-recovery (TALLOR) scheme. This method allows a tampered image to be restored to its original state through lossless compression. The recovered image can be considered identical to the original and can still be used for diagnosis. The problem with this scheme was that the original image was compressed losslessly and then embedded as part of the watermark. As a result, locating the tampering and recovering the tampered image took longer, because the embedded watermark had to be decompressed and used. This delay would be incurred whenever a user requested authentication of the image at the time of use.

In the next paper [30], the authors proposed improvements to their earlier work that reduce tamper-localization and recovery time, presenting a reversible watermarking scheme (TALLOR) that divides the image into the ROI and RONI. The ROI is the important portion of a medical image that physicians use to make diagnoses, and the RONI is the region outside the ROI. Using Jasni's scheme [31], watermarking is carried out in the ROI for tamper detection and recovery. The original least-significant bits (LSBs) that are overwritten during watermark embedding are compressed and kept in the RONI. The scheme is reversible because the saved LSBs can later be used to return the image to its original bit values. Tamper localization and lossless recovery with ROI segmentation (TALLOR-RS) is an enhanced scheme that further segments the ROI into smaller parts, each of which is authenticated separately. As part of a multilevel authentication process, only the segments suspected of tampering need to be examined further.
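The reversible LSB idea behind TALLOR can be sketched as follows: the original LSBs of the ROI pixels are saved before being overwritten by watermark bits, so that they can later be written back. This is a minimal Python sketch under our own assumptions (the ROI is a flattened list of 8-bit values, and the function names are hypothetical); compressing the saved bits and storing them in the RONI, as well as Jasni's tamper-detection logic, are not shown.

```python
def embed_lsb(pixels: list[int], bits: list[int]) -> tuple[list[int], list[int]]:
    """Overwrite the LSBs of the first len(bits) ROI pixels with watermark bits.

    Returns (watermarked pixels, original LSBs saved for later restoration).
    """
    if len(bits) > len(pixels):
        raise ValueError("watermark longer than the ROI")
    if any(bit not in (0, 1) for bit in bits):
        raise ValueError("watermark must consist of 0/1 bits")
    saved = [p & 1 for p in pixels[: len(bits)]]  # original LSBs (kept in RONI in TALLOR)
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear bit 0, then set it to the watermark bit
    return marked, saved


def extract_lsb(pixels: list[int], n: int) -> list[int]:
    """Read back the first n embedded watermark bits."""
    return [p & 1 for p in pixels[:n]]


def restore_lsb(marked: list[int], saved: list[int]) -> list[int]:
    """Write the saved original LSBs back, recovering the exact original ROI."""
    restored = list(marked)
    for i, bit in enumerate(saved):
        restored[i] = (restored[i] & ~1) | bit
    return restored
```

Because only bit 0 is modified, each pixel changes by at most one grey level, and restoring the saved bits makes the process exactly reversible.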

Tampering with sensitive images can be prevented by applying watermarking. Usually, an image can be divided into two separate zones, the region of interest (ROI) and the region of non-interest (RONI). The authors of paper [32] continued the work from [30], presenting an ROI-based tamper-detection and watermarking scheme that embeds the important information into the least-significant bits of the image, where it can be used at any time for data authentication and recovery. The watermarking process is described as a 14-step algorithm. The image can be split into multiple regions, each marked as being of interest or not; the authors chose to define one ROI and five RONIs. The watermark-authentication process then consists of a configuration step, followed by authentication, verification (where the image is checked for corruption), a check that no tampering has occurred in the RONI areas used to store the ROI watermark bits, and, finally, recovery of the image. Experiments were conducted to demonstrate the usability and performance of the approach, and the ROI-DR (detection and recovery) method was compared with other existing methods. Because TALLOR and TALLOR-RS share certain characteristics with ROI-DR, such as storing ROI bits in the LSBs of the RONI, applying JPEG compression techniques, and using SHA-256 hashing, the three watermarking schemes were compared, and the speed-up factors of ROI-DR relative to TALLOR and TALLOR-RS were measured. To ensure a fair comparison, all three schemes were run in the same hardware and software environment and on the same set of test images. Watermark embedding proved to be faster than in the other existing schemes. The experiments showed that ROI-DR is robust against different types of tampering and can restore a tampered ROI to its original form. It also achieved good imperceptibility, with peak signal-to-noise ratio (PSNR) values of roughly 48 dB. The test images were 640 × 480 pixels, with 8 bits per pixel.
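Segment-wise hash authentication of the kind used by TALLOR-RS and ROI-DR can be sketched with SHA-256 from Python's standard `hashlib` module. In this hypothetical sketch, the flattened 8-bit ROI is split into fixed-length segments, and each segment is hashed with its LSBs masked out, on the assumption that the LSB plane carries the watermark; comparing stored and recomputed digests localizes tampering to individual segments. It does not reproduce the 14-step ROI-DR algorithm.

```python
import hashlib


def segment_digests(roi: list[int], seg_len: int) -> list[bytes]:
    """SHA-256 digest of each consecutive segment of a flattened 8-bit ROI.

    Bit 0 of every pixel is cleared before hashing (p & 0xFE), assuming the
    LSB plane is where the watermark bits are embedded.
    """
    digests = []
    for start in range(0, len(roi), seg_len):
        segment = bytes(p & 0xFE for p in roi[start:start + seg_len])
        digests.append(hashlib.sha256(segment).digest())
    return digests


def tampered_segments(reference: list[bytes], roi: list[int], seg_len: int) -> list[int]:
    """Indices of segments whose recomputed digest differs from the stored one."""
    current = segment_digests(roi, seg_len)
    return [k for k, (ref, cur) in enumerate(zip(reference, current)) if ref != cur]
```

Only the segments returned by `tampered_segments` need further inspection or recovery. A consequence of masking is that changes confined to the LSB plane are not detected by the hash itself.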

2.3. Methods Used in Automotive

When discussing the automotive field, the trend towards autonomous vehicles is clear: increasingly, well-known manufacturers have started developing smart vehicles that can handle certain situations on their own, with the driver only required for supervision. In this context, the use of on-vehicle cameras will increase, capturing images that are analyzed and processed to serve as inputs for several security features. Akutsu et al. [33] proposed an ROI-based image-compression algorithm intended for use in an infrastructure-quality-control system. The purpose of this system is to use the cameras available on a vehicle to assess the structural quality of the road; this means that a large amount of data must be transmitted and stored for further processing, making image compression a vital part of the whole system. The proposed compression method uses annotation information, such as bounding boxes or segmentation maps, to define a weighted quality metric based on SSIM. This metric is applied to the region of interest, while a convolutional autoencoder (CAE) is used for the non-important regions. Experiments were conducted on a dataset of 256 × 256 pixel damaged-road images with annotation data. The results show that the method is competitive with existing codecs such as BPG (Better Portable Graphics). Although the PSNR in the ROI is lower (27 dB compared with 33 dB for BPG), the bits per pixel were reduced by 31%, meaning that the size is considerably reduced while ROI quality is only moderately affected.
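The idea of an annotation-weighted quality metric can be illustrated with a per-pixel weight map built from bounding boxes. Akutsu et al. weight SSIM; to keep this Python sketch short, a weighted MSE is substituted for SSIM, and the ROI and background weights (10 and 1) are arbitrary illustrative values, not those of [33].

```python
def box_weights(height: int, width: int, boxes, roi_weight: float = 10.0,
                bg_weight: float = 1.0) -> list[list[float]]:
    """Weight map from bounding boxes given as (x0, y0, x1, y1), end-exclusive."""
    wmap = [[bg_weight] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(y0, y1):
            for x in range(x0, x1):
                wmap[y][x] = roi_weight
    return wmap


def weighted_mse(orig, recon, weights) -> float:
    """MSE in which each pixel's squared error is scaled by its weight."""
    num = 0.0
    den = 0.0
    for row_o, row_r, row_w in zip(orig, recon, weights):
        for o, r, w in zip(row_o, row_r, row_w):
            num += w * (o - r) ** 2
            den += w
    return num / den
```

With these weights, the same reconstruction error costs ten times more inside an annotated box than in the background, so an encoder optimized for this metric is pushed to spend its bits on the annotated damage regions.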

The interest in autonomous vehicles has led to the need for wireless communication between the elements involved in traffic (vehicle-to-vehicle, vehicle-to-infrastructure, and infrastructure-to-vehicle communication). Recognizing that the growing number of cameras and sensors involved in vehicle-to-X communication will lead to demanding processing requirements and bottlenecks, Löhdefink et al. [34] proposed an ROI-based image-compression algorithm that focuses on reducing the overall data size. The ROI is defined by a binary mask derived from a semantic segmentation network that extracts the important information. The compression network was trained with a loss function applied only to the ROI. Thus, the sender acquires the image and applies semantic segmentation and the ROI mask generator before encoding the data. For testing, a dataset of almost 4000 images, split into training, validation, and test sets, was used. Each image was downscaled to 1024 × 512 pixels due to limited GPU memory. The PSNR for the ROI was around 27 dB. As these results show, neural network approaches do not yet yield the same results as other methods, but they can be useful in certain scenarios, especially for selecting the ROI.
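A binary ROI mask derived from a semantic segmentation map, and a PSNR restricted to that mask (the kind of ROI-only figure reported above), can be sketched as follows. The class IDs are hypothetical, and the masked squared error inside `roi_psnr` is also the form an ROI-only training loss would take; this is an illustration, not the code of [34].

```python
import math


def roi_mask(labels: list[list[int]], roi_classes: set[int]) -> list[list[int]]:
    """Binary mask: 1 where the segmentation label belongs to an ROI class."""
    return [[1 if c in roi_classes else 0 for c in row] for row in labels]


def roi_psnr(orig, recon, mask, peak: float = 255.0) -> float:
    """PSNR computed only over pixels where mask == 1 (background is ignored)."""
    squared_error = 0.0
    count = 0
    for row_o, row_r, row_m in zip(orig, recon, mask):
        for o, r, m in zip(row_o, row_r, row_m):
            if m:
                squared_error += (o - r) ** 2
                count += 1
    if count == 0:
        raise ValueError("empty ROI mask")
    mse = squared_error / count
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)
```

Errors in background pixels do not affect the result at all, which is why an ROI-focused codec can score well on this metric while discarding most background detail.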

Over time, vehicles will rely increasingly on artificial vision, meaning that the large amount of data to be transmitted will become a problem, especially since, historically, the available resources in the automotive field are rigorously checked and limited to reduce production costs. ROI-based image-compression algorithms can be very useful for infrastructure-only applications such as speed traps, where only the license plate can be considered important. Efficient image compression can also be used in systems that check whether the road tax has been paid; in this scenario, the license plate is again a region of interest, with the other ROI being the windshield sticker that usually serves as proof of payment. Another example where a compression method could be useful is the application described in [35], where visibility on a portion of road in foggy weather is estimated using a laser, LIDAR, and cameras.

2.4. Methods Used for Spatial Images and Remote-Sensing Images

Hyperspectral sensors have recently gained popularity for remote sensing of the Earth. These systems provide images that contain both spectral and spatial information. Present-day hyperspectral spaceborne sensors have improved spectral and spatial resolution, enabling them to capture large areas. Because of their limited storage capacity, the volume of data acquired on board must be reduced to avoid a low orbital duty cycle. Recent literature has therefore focused on effective methods for on-board data compression. The harsh environment (outer space) and the constrained time, power, and computing resources make this a difficult task. Graphics processing unit (GPU) hardware has frequently been used to speed up processing through parallel computing. In [36] (which continues the work from [37]), Giordano et al. propose a GPU-based on-board framework built on NVIDIA's CUDA (Compute Unified Device Architecture) platform. The algorithm uses a target-related strategy to perform on-board compression. Specifically, its primary functions are using an unsupervised classifier to automatically identify land-cover types or detect events in near real time in regions of interest (a user-selectable option), compressing regions at space-variant bit rates using techniques such as principal component analysis (PCA), wavelets, or arithmetic coding, and managing the volume of data sent to the ground station. One impediment is that supervised classification cannot be used because the labels, in this case the topographic classes, are not known in advance; unsupervised classification techniques are therefore better suited to the automatic segmentation and identification of ROIs in hyperspectral images. Clustering algorithms detect meaningful patterns without label knowledge and do not require a training stage. Accuracy was gauged with the distortion index (DI), defined as the inverse of the mean square error (MSE) between the reconstructed and original images. To provide a fair comparison, the DI was calculated only in the ROI rather than over the entire image (ROI and background). The algorithm outperforms JPEG2000 in the ROI.
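As an example of label-free clustering for ROI identification, the following Python sketch runs plain k-means on per-pixel spectral vectors. Giordano et al. may use a different unsupervised classifier; the deterministic initialization (first k pixels) and the fixed iteration count are simplifying assumptions, and the GPU parallelization is not shown.

```python
def kmeans(points: list[list[float]], k: int, iters: int = 20):
    """Plain k-means on per-pixel spectral vectors (one value per band).

    Centroids start at the first k points (deterministic, but sensitive to
    pixel ordering); no labels or training stage are required.
    """
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        for i, p in enumerate(points):
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            labels[i] = dists.index(min(dists))
        # Update step: each centroid moves to the mean spectrum of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(band) / len(members) for band in zip(*members)]
    return labels, centroids
```

The resulting cluster labels can then be mapped to ROI or background classes, and each region coded at its own bit rate.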

In their work, Zhang et al. [38] proposed a region-of-interest (ROI) compression algorithm based on a deep-learning autoencoder framework to enhance image-reconstruction performance and minimize ROI distortion. Since most traditional ROI-based image-compression algorithms rely on manual ROI labeling to separate regions in images, the authors first adopted a remote-sensing cloud-detection algorithm to detect important targets in images. This involves first separating the important regions of the remote-sensing images from the background and then identifying the target regions. To synthesize images and reduce spatial redundancy more effectively, a coarse-to-fine multiscale ROI autoencoder network with a hierarchical hyperprior layer was designed, which significantly improved the rate-distortion performance of image compression. The authors further improved compression performance by employing a spatial-attention mechanism for the ROI in the image-compression network. Through this key-information enhancement mechanism, the algorithm compresses the ROI more effectively and improves compression results at higher bit rates. The Landsat-8 satellite-image dataset was used to train and test the algorithm, and its performance was compared with that of a conventional image-compression algorithm and with deep-learning image-compression algorithms without the attention mechanism. Two methods were tested: coarse-to-fine hyperprior modeling (CFHPM) for learned image compression and multiscale region-of-interest coarse-to-fine hyperprior modeling (MROI-CFHPM), with the latter providing better results, with a PSNR of up to 37.5 dB. MROI-CFHPM can thus achieve differential compression of the ROI, significantly enhancing the compression performance of the overall image.
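One common way to express ROI-weighted learned compression is a rate-distortion objective in which the hyperprior rate terms are traded against a spatially weighted distortion. The formulation below is a generic sketch, not the exact MROI-CFHPM loss of [38]; the mask m_i, the weights α and β, and the trade-off λ are assumed symbols.

```latex
\mathcal{L} = \underbrace{R(\hat{y}) + R(\hat{z})}_{\text{rate}}
  + \lambda \, \frac{1}{N} \sum_{i=1}^{N}
    \bigl(\alpha\, m_i + \beta\, (1 - m_i)\bigr)\,(x_i - \hat{x}_i)^2,
\qquad m_i \in \{0, 1\},\quad \alpha > \beta \ge 0
```

Here R(ŷ) and R(ẑ) are the estimated bit costs of the quantized latent and hyper-latent (the side information produced by the hyperprior), x_i and its hatted counterpart are the original and reconstructed pixel values, and m_i = 1 inside the detected ROI; choosing α > β allocates more bits to the ROI than to the background.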

Noting that wireless image-sensor networks usually consist of poorly equipped nodes with a camera, a radio transceiver, and a limited processor, Kouadria et al. [39] proposed a solution that reduces the energy consumed by image compression. The ROI of the image is compressed using the discrete Tchebichef transform (DTT) instead of the discrete cosine transform (DCT), with the expected outcome of lower computational complexity and, therefore, lower energy consumption. The goal is to achieve the same compression ratio as other state-of-the-art methods with fewer operations. The approach uses a reference frame and a separate sensor for movement detection. When that sensor triggers, a new frame is captured, and the ROI is generated from the differences between the new frame and the reference image. Therefore, only the region of interest is compressed with the DTT and transmitted; the rest of the image is discarded. The key element of the method is the change-detection algorithm. Different datasets containing pedestrians or cars moving on roads were used (with image sizes of 240 × 320, 768 × 576, or 512 × 512 pixels). The obtained PSNR is around 40 dB, which can be considered good enough, but the main advantage of ROI-only compression based on change detection and the DTT is that energy consumption was halved compared with classical formats such as JPEG. The only drawback of the method is that it was tested in simulation rather than in a real-life scenario.
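The change-detection step can be sketched as simple frame differencing followed by a bounding box around the changed pixels. In this Python sketch the threshold and function names are hypothetical, the actual detector of Kouadria et al. may differ, and the DTT coding of the cropped region is not shown.

```python
def change_roi(reference, frame, threshold):
    """Bounding box (x0, y0, x1, y1), end-exclusive, of pixels whose absolute
    difference from the reference frame exceeds threshold; None if no change."""
    xs, ys = [], []
    for y, (ref_row, row) in enumerate(zip(reference, frame)):
        for x, (r, p) in enumerate(zip(ref_row, row)):
            if abs(p - r) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def crop(frame, box):
    """Extract the ROI rectangle; only this part would be encoded and sent."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in frame[y0:y1]]
```

When `change_roi` returns `None`, nothing needs to be transmitted, which is where much of the energy saving of ROI-only transmission comes from.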