| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Kai Hu | + 1948 word(s) | 1948 | 2022-02-17 04:24:08 | |
| 2 | | Camila Xu | + 2268 word(s) | 4216 | 2022-03-01 04:24:11 | |
| 3 | | Camila Xu | + 2267 word(s) | 4215 | 2022-03-01 04:26:37 | |
| 4 | | Camila Xu | Meta information modification | 4215 | 2022-03-01 04:44:02 | |
| 5 | | Camila Xu | Meta information modification | 4215 | 2022-03-01 04:48:44 | |
| 6 | | Camila Xu | Meta information modification | 4215 | 2022-03-03 04:36:11 | |


Underwater video images, as the primary carriers of underwater information, play a vital role in human exploration and development of the ocean. Because water absorbs and scatters incident light, video images captured underwater generally appear blue-green and show an apparent fog-like effect. In addition, blur, low contrast, color distortion, noise, unclear details, and limited visual range are typical problems that degrade the quality of underwater video images. Underwater vision enhancement uses computer processing to convert degraded, low-quality underwater images into high-quality ones. Vision enhancement techniques can effectively mitigate the color bias, low contrast, and haze of raw underwater video images. Enhanced video images improve visual perception and benefit subsequent vision tasks. Underwater video image enhancement therefore has important scientific significance and application value.

1. Introduction

Visual information, which plays an essential role in detecting and perceiving the environment, is easy for underwater vehicles to obtain. However, because of the many uncertainties in the aquatic environment and the absorption and scattering of light by water, the quality of directly captured underwater images can degrade significantly. Large amounts of dissolved substances, particulate matter, and other inhomogeneous media in the water cause less light to reach the camera than in open air. According to the Beer–Lambert–Bouguer law, light intensity decays exponentially with the distance it travels through an absorbing and scattering medium. The attenuation of light during underwater propagation can therefore be expressed as:

$$E(r) = E(0)\,e^{-ar}\,e^{-br} = E(0)\,e^{-(a+b)r} \tag{1}$$

In Equation (1), $E$ is the illuminance of the light, $r$ is the propagation distance, $a$ is the absorption coefficient of the water body, and $b$ is the scattering coefficient of the water body. The sum $c = a + b$ is the total attenuation coefficient of the medium.
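As a quick numerical illustration of Equation (1), the sketch below evaluates $E(r)/E(0)$ and the distance at which light falls to 1% of its initial value, $r = \ln(100)/(a+b)$. The per-band coefficients are hypothetical values chosen only so that the red and blue 1% ranges land near the roughly 5 m and 60 m figures quoted in the next paragraph; they are not measurements for any particular water type.

```python
import math


def relative_irradiance(r, a, b):
    """E(r) / E(0) from Equation (1): exponential decay with c = a + b."""
    return math.exp(-(a + b) * r)


def range_to_fraction(fraction, a, b):
    """Distance at which E(r) / E(0) equals `fraction`: r = -ln(fraction) / (a + b)."""
    return -math.log(fraction) / (a + b)


# Hypothetical (absorption a, scattering b) pairs in 1/m, for illustration only.
BANDS = {"red": (0.8, 0.1), "green": (0.07, 0.05), "blue": (0.03, 0.05)}

for name, (a, b) in BANDS.items():
    print(f"{name:>5}: E(5 m)/E(0) = {relative_irradiance(5.0, a, b):.3f}, "
          f"1% range = {range_to_fraction(0.01, a, b):.1f} m")
```

Because $r = -\ln(\text{fraction})/c$, doubling the total attenuation coefficient halves the distance at which any fixed fraction of the light remains.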

The process of underwater imaging is shown in Figure 1. As light travels through water, it is absorbed and scattered. Water absorbs light of different wavelengths to different degrees. As shown in Figure 1, red light attenuates fastest and disappears at a depth of about 5 m, whereas blue and green light attenuate slowly, with blue light disappearing at about 60 m. Scattering by suspended particles and other media changes the direction of light during transmission and spreads it unevenly. The scattering process is influenced by the properties of the medium, the light, and its polarization. McGlamery [1] presented a computer model of underwater camera systems, in which the irradiance of non-scattered, scattered, and backscattered light is calculated from the input geometry, the source properties, and the optical properties of the water; parameters such as contrast, transmittance, and signal-to-noise ratio can then be derived. The classical Jaffe–McGlamery underwater imaging model [2] was subsequently proposed. It states that the total irradiance entering the camera is a linear superposition of the direct component, the forward-scattered component, and the backscattered component:

$$E_T = E_d + E_f + E_b \tag{2}$$

In Equation (2), $E_T$ is the total irradiance received by the camera, and $E_d$, $E_f$, and $E_b$ represent the direct, forward-scattered, and backscattered components, respectively. The direct component is light reflected from the surface of the object straight into the receiver. The forward-scattered component is light reflected by the target object that is deflected through small angles by suspended particles in the water before reaching the receiver. The backscattered component is illumination light scattered into the receiver by the water body without being reflected by the object. In general, forward scattering attenuates more light energy than backscattering.
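Equation (2) can be illustrated with a toy single-channel simulation. The sketch below is not the full Jaffe–McGlamery model: it assumes a uniform total attenuation coefficient $c$, takes the transmission as $t = e^{-c\,d}$ for a range map $d$ (following Equation (1)), models the forward-scattered component as a Gaussian-blurred copy of the direct component with a hypothetical weight `forward_gain`, and models backscatter as `B_inf * (1 - t)`, a simplification common in the restoration literature. All parameter values are illustrative.

```python
import numpy as np


def gaussian_blur(img, sigma=2.0):
    """Separable Gaussian blur with edge padding; stands in for the forward-scatter PSF."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def simulate_components(J, depth, c, B_inf, forward_gain=0.2, sigma=2.0):
    """Toy version of E_T = E_d + E_f + E_b for one color channel.

    J: clear scene radiance in [0, 1]; depth: range map in metres;
    c: total attenuation coefficient (1/m); B_inf: backscatter at infinite range;
    forward_gain: hypothetical weight of the forward-scattered component.
    """
    t = np.exp(-c * depth)                          # transmission, as in Equation (1)
    E_d = J * t                                     # direct component
    E_f = forward_gain * gaussian_blur(E_d, sigma)  # forward scatter: blurred direct signal
    E_b = B_inf * (1.0 - t)                         # backscatter grows with range
    return E_d, E_f, E_b, E_d + E_f + E_b


rng = np.random.default_rng(0)
J = rng.random((32, 32))
depth = np.linspace(1.0, 10.0, 32)[None, :].repeat(32, axis=0)  # range grows left to right
E_d, E_f, E_b, E_T = simulate_components(J, depth, c=0.3, B_inf=0.6)
print("mean direct:", E_d.mean().round(3), "mean backscatter:", E_b.mean().round(3))
```

Moving toward larger ranges, the direct signal shrinks while the backscatter term approaches `B_inf`, which is what produces the veiling, low-contrast look of distant underwater scenes.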

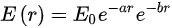

Because water absorbs and scatters incident light, video images captured underwater generally appear blue-green and show an apparent fog-like effect. In addition, blur, low contrast, color distortion, noise, unclear details, and limited visual range are typical problems that degrade the quality of underwater video images [3]. Figure 1 shows some low-quality underwater images.

Figure 1. Some low-quality underwater images.


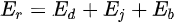

The existing underwater image enhancement techniques are classified and summarized in Figure 2. Current algorithms are mainly divided into traditional and deep learning-based methods. Traditional methods include model-based and non-model methods. Non-model enhancement methods, such as histogram-based algorithms, directly improve the visual effect by modifying pixel values without considering the imaging principle. Model-based enhancement, also known as image restoration, estimates the relationship between the clear image, the degraded image, and the transmission map from an imaging model and then derives the clear image, as in the dark channel prior (DCP) algorithm [4]. With the rapid development of deep learning and its excellent performance in computer vision, deep learning-based underwater image enhancement is also developing rapidly. Deep learning-based methods can be divided into those based on convolutional neural networks (CNNs) [5] and those based on generative adversarial networks (GANs) [6]. Most existing underwater video enhancement techniques are extensions of single-image enhancement techniques to the video field. Because underwater video enhancement technology is not yet fully mature, it is not classified separately here.

Figure 2. Classification of underwater image enhancement methods.


2. Traditional Underwater Image Enhancement Methods

2.1. Non-Physical Model Enhancement Methods

Because of the unique underwater optical environment, traditional image enhancement methods have limitations when applied directly to underwater images, so many targeted algorithms have been proposed, including histogram-based, Retinex-based, and image fusion-based algorithms.

(1) Histogram-based methods

Image enhancement based on the histogram equalization (HE) algorithm [7] transforms a narrow, unimodal image histogram into a more uniform distribution, so that the enhanced image has roughly the same number of pixels at most gray levels (Table 1).

Table 1. Histogram-based underwater image enhancement methods.

| Author | Algorithm | Contribution |
|---|---|---|
| Iqbal et al. [8] | Unsupervised color correction method | Effectively removes the blue bias and boosts the weak red channel and brightness |
| Ghani and Isa [9] | Contrast-limited adaptive histogram with Rayleigh stretching | Enhances detail and reduces over-enhancement, oversaturated areas, and introduced noise |
| Ghani and Isa [10] | Recursive adaptive histogram modification combined with HSV-model color correction | Better contrast in background areas |
| Li et al. [11] | Contrast enhancement algorithm combining dehazing and a histogram distribution prior | Improves contrast and brightness |
| Li et al. [12] | Underwater white balance algorithm combined with histogram stretching | Shows better results in color correction, haze removal, and detail clarification |
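The global histogram equalization described above can be sketched in a few lines of NumPy. This is the textbook cumulative-distribution mapping for an 8-bit single-channel image, not any of the specific underwater variants in Table 1; for color underwater images it would typically be applied per channel or to a luminance channel, and contrast-limited variants such as CLAHE [78] are often preferred because global HE can amplify noise.

```python
import numpy as np


def equalize_histogram(gray):
    """Global histogram equalization of an 8-bit, single-channel image."""
    gray = np.asarray(gray, dtype=np.uint8)
    hist = np.bincount(gray.ravel(), minlength=256)   # pixel count per gray level
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)][0]                  # count at the darkest occupied level
    if cdf_min == gray.size:                           # constant image: nothing to spread
        return gray.copy()
    # Map each level through the normalized CDF so occupied levels spread over 0..255.
    lut = np.round((cdf - cdf_min) / (gray.size - cdf_min) * 255.0)
    return np.clip(lut, 0, 255).astype(np.uint8)[gray]


# A low-contrast image whose gray levels occupy only 100..120.
rng = np.random.default_rng(1)
narrow = rng.integers(100, 121, size=(64, 64)).astype(np.uint8)
out = equalize_histogram(narrow)
print(narrow.min(), narrow.max(), "->", out.min(), out.max())
```

The mapping is monotonic, so the ordering of gray levels is preserved while the occupied range is stretched to the full 0 to 255 scale.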

(2) Retinex-based methods

Retinex theory, based on color constancy, recovers the true appearance of a scene by eliminating the influence of the illumination component on object color and removing uneven illumination (Table 2).

Table 2. Underwater image enhancement methods based on retinex theory.

| Author | Algorithm | Contribution |
|---|---|---|
| Fu et al. [13] | Retinex-based variational framework that decomposes the image into reflectance and illumination and optimizes both | Addresses color distortion, underexposure, and blurring |
| Bianco et al. [14] | Shifts the distributions of the chromatic components around the white point (white balancing) and applies histogram cutoff and stretching to the luminance component | Improves image contrast and is suitable for real-time implementation |
| Zhang et al. [15] | Extended multiscale Retinex for underwater image enhancement | Suppresses halo artifacts |
| Mercado et al. [16] | Multiscale Retinex combined with reverse color loss (MSRRCL) | Overcomes uneven lighting; colors are more vivid |
| Li et al. [17] | Color correction based on the MSR algorithm combined with per-channel histogram quantization | Enhances underwater image contrast and removes color bias |
| Zhang et al. [18] | Multiscale Retinex with color restoration based on multi-channel convolution (MC) | Enhances global contrast and detail, reduces noise, and eliminates the influence of illumination |
| Tang et al. [19] | Underwater image and video enhancement based on multiscale Retinex (IMSRCP) | Improves image contrast and color; suitable for underwater video |
| Hu et al. [20] | Uses the gravitational search algorithm (GSA) to optimize an MSR-based enhancement algorithm guided by the NIQE index | Improves adaptability to environmental changes |
| Tang et al. [21] | Underwater image enhancement based on adaptive feedback and the Retinex algorithm | Reduces processing time and improves color saturation and richness |
| Zhuang et al. [22] | Bayesian Retinex algorithm for single underwater image enhancement with multiorder gradient priors on reflectance and illumination | Addresses color correction, naturalness preservation, structure and detail enhancement, and artifact and noise suppression |
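The core Retinex idea (estimate the illumination with a smoothed version of the image and remove it in the log domain) can be sketched as a plain multiscale Retinex (MSR): $R = \frac{1}{N}\sum_{n}\left[\log I - \log(G_{\sigma_n} * I)\right]$ per channel. This omits the color-restoration term of MSRCR and the underwater-specific refinements in Table 2; the Gaussian scales and the final min–max stretch are illustrative assumptions. The small default scales keep the NumPy-only blur fast on small test images; practical MSR implementations often use larger scales (values around 15, 80, and 250 pixels are common) with a faster Gaussian filter.

```python
import numpy as np


def gaussian_blur(channel, sigma):
    """Separable Gaussian blur with edge padding (NumPy only)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    padded = np.pad(channel, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def multi_scale_retinex(img, sigmas=(3, 10, 30), eps=1e-6):
    """MSR on a float RGB image in [0, 1]; returns a per-channel stretched result in [0, 1]."""
    img = np.asarray(img, dtype=float) + eps        # avoid log(0)
    out = np.zeros_like(img)
    for ch in range(img.shape[2]):
        for s in sigmas:
            illumination = gaussian_blur(img[..., ch], s)   # smooth illumination estimate
            out[..., ch] += np.log(img[..., ch]) - np.log(illumination)
        out[..., ch] /= len(sigmas)
    lo = out.min(axis=(0, 1), keepdims=True)         # simple display stretch (one choice of many)
    hi = out.max(axis=(0, 1), keepdims=True)
    return (out - lo) / np.maximum(hi - lo, eps)


# Reflectance pattern under a strong left-to-right illumination gradient.
rng = np.random.default_rng(2)
reflectance = rng.uniform(0.2, 0.8, size=(24, 24, 3))
illum = np.linspace(0.1, 1.0, 24)[None, :, None]
result = multi_scale_retinex(reflectance * illum)
print(result.shape, float(result.min()), float(result.max()))
```

Small scales remove illumination changes aggressively but can flatten tones, while large scales keep more global tonality; averaging several scales is the usual compromise.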

(3) Fusion-based methods

Image fusion algorithms combine multiple images of the same scene, exploiting their complementary information to obtain richer and more accurate image information after enhancement (Table 3).

Table 3. Underwater image enhancement methods based on image fusion algorithms.

| Author | Algorithm | Contribution |
|---|---|---|
| Ancuti et al. [23] | White balancing and histogram equalization are used to obtain two images, and a multiscale fusion algorithm integrates their features | Reduces noise, improves global contrast, and significantly enhances edges and details; applied to underwater video enhancement |
| Ancuti et al. [24][25] | The image is synthesized from complementary information in multiple images; both the acquisition of the fused inputs and the definition of the weights are optimized | More informative and clearer images, improved exposure, and preserved edges |
| Pan et al. [26] | Laplacian pyramid fusion is used to fuse a dehazed image and a color-corrected image | Enhances underwater image contrast and removes color bias |
| Chang et al. [27] | Transmission map fusion based on optical properties and image knowledge | Foreground clarity is improved, while the background remains slightly blurred and looks more natural |
| Gao et al. [28] | Local contrast correction (LCC) combined with multiscale fusion: LCC-corrected images are fused with sharpened images | Addresses color distortion, low contrast, and indistinct details |
| Song et al. [29] | Updated saliency weight coefficients combining contrast and spatial cues, used together with white balancing and global stretching | Eliminates color deviation and achieves high-quality fusion and better dehazing |
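To make the fusion idea concrete, the sketch below derives two inputs from one image (a gray-world white-balanced version and a per-channel contrast-stretched version), computes a per-pixel weight for each from its Laplacian contrast and saturation, and blends the inputs with normalized weights. This is a deliberately simplified single-scale version: Ancuti et al. [23][24] blend with a multiscale Laplacian pyramid, which avoids halo artifacts, and use additional weight maps; the particular inputs and weights chosen here are assumptions for illustration.

```python
import numpy as np


def gray_world(img, eps=1e-6):
    """Gray-world white balance: scale each channel so its mean matches the global mean."""
    means = img.reshape(-1, img.shape[2]).mean(axis=0)
    return np.clip(img * (means.mean() / (means + eps)), 0.0, 1.0)


def contrast_stretch(img, eps=1e-6):
    """Per-channel min-max stretch to [0, 1]."""
    lo = img.min(axis=(0, 1), keepdims=True)
    hi = img.max(axis=(0, 1), keepdims=True)
    return (img - lo) / np.maximum(hi - lo, eps)


def laplacian_contrast(img):
    """Absolute 4-neighbour Laplacian of the luminance (edge-padded)."""
    lum = img.mean(axis=2)
    p = np.pad(lum, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * lum
    return np.abs(lap)


def saturation(img):
    """Per-pixel standard deviation across the color channels."""
    return img.std(axis=2)


def naive_fusion(img, eps=1e-6):
    """Single-scale weighted fusion of two derived inputs (simplified)."""
    inputs = [gray_world(img), contrast_stretch(img)]
    weights = [laplacian_contrast(x) + saturation(x) + eps for x in inputs]
    total = sum(weights)
    return sum((w / total)[..., None] * x for w, x in zip(weights, inputs))


rng = np.random.default_rng(3)
bluish = rng.random((16, 16, 3)) * np.array([0.3, 0.6, 0.8])  # weak red, strong blue
fused = naive_fusion(bluish)
print(fused.shape, fused.reshape(-1, 3).mean(axis=0).round(3))
```

Because the weights are normalized per pixel, the output is a convex combination of the inputs, so it stays within their value range.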

2.2. Physical Model-Based Enhancement Algorithms

Unlike non-physical model enhancement algorithms, physical model-based algorithms analyze the imaging process and invert the imaging model to obtain a clear image, improving image quality from the imaging principle itself. They are also known as image restoration techniques. Underwater imaging models therefore play a crucial role in these methods, and the Jaffe–McGlamery underwater imaging model is one of the most widely used restoration models.

(1) Polarization-based methods

Underwater image restoration based on polarization imaging exploits the polarization characteristics of scattered light to separate scene light from scattered light, estimates the intensity of the scattered light and the transmission coefficient, and then restores the image (Table 4).

Table 4. Underwater image restoration algorithms based on polarization.

| Author | Algorithm | Contribution |
|---|---|---|
| Schechner et al. [30] | Uses the polarization of underwater scattering to recover underwater images | Improved visibility and contrast |
| Namer et al. [31] | Degree of polarization and intensity of background light are estimated from polarized images | More accurate depth map estimation |
| Chen et al. [32] | Compensates artificially illuminated areas when present | Eliminates the effects of artificial lighting on underwater images |
| Han et al. [33] | Considers backscattering and alters the light source to alleviate scattering | Proposes point spread function estimation based on light polarization |
| Ferreira et al. [34] | Polarization parameters are estimated by a bio-inspired optimization method, with a no-reference quality measure as the cost function for restoration | Better visual quality and adaptability |
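The polarization-difference idea behind Schechner et al. [30] can be sketched as follows, assuming two frames taken through a polarizer at the orientations that maximize and minimize the backscatter, an unpolarized object signal, and known values for the degree of polarization of the backscatter `p` and the backscatter at infinite distance `b_inf` (in practice both are estimated, for example from a region containing only water). Under those assumptions the backscatter is $(I_{max} - I_{min})/p$, the transmission is $1 - B/B_\infty$, and the object radiance is recovered by removing the backscatter and dividing by the transmission. The lower clip `t_min` is an added safeguard against noise amplification, not part of the cited method.

```python
import numpy as np


def polarization_descatter(i_max, i_min, p, b_inf, t_min=0.1):
    """Recover object radiance from two polarizer frames (sketch under stated assumptions).

    i_max, i_min: frames at the polarizer orientations of maximum / minimum backscatter.
    p: degree of polarization of the backscatter; b_inf: backscatter at infinite distance.
    """
    total = i_max + i_min                          # intensity without a polarizer
    backscatter = (i_max - i_min) / p              # object signal assumed unpolarized
    t = np.clip(1.0 - backscatter / b_inf, t_min, 1.0)
    radiance = (total - backscatter) / t
    return radiance, t


# Synthetic check: object radiance 0.5, transmission 0.6, b_inf = 0.8, p = 0.5.
L_obj, t_true, b_inf, p = 0.5, 0.6, 0.8, 0.5
B = b_inf * (1 - t_true)                           # backscatter = 0.32
D = L_obj * t_true                                 # attenuated object signal = 0.30
i_max = np.full((4, 4), D / 2 + B * (1 + p) / 2)
i_min = np.full((4, 4), D / 2 + B * (1 - p) / 2)
radiance, t = polarization_descatter(i_max, i_min, p, b_inf)
print(radiance[0, 0], t[0, 0])
```

The synthetic frames split the unpolarized object signal equally between the two polarizer states and the partially polarized backscatter unequally, which is exactly the assumption the recovery formula relies on.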

(2) Dark channel prior–based methods

He et al. [4] proposed the dark channel prior (DCP) algorithm. From statistics of haze-free images, they observed that in most local regions at least one color channel contains some pixels with very low intensity; the minimum over the color channels and over a local patch is called the dark channel. The dark channel prior is used to estimate the transmission map and the atmospheric light, and the atmospheric scattering model is then used to restore the image.
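A compact NumPy sketch of DCP restoration under the simplified model $I = J\,t + A\,(1 - t)$ is shown below. It follows the usual steps (dark channel, background light from the brightest dark-channel pixels, transmission $t = 1 - \omega \cdot \mathrm{dark}(I/A)$, and inversion with a lower bound $t_0$) but omits the soft-matting or guided-filter refinement of the transmission. Taking the background light as the mean of the top 0.1% candidates is a common simplification, and the `channels` argument is an addition that lets the same code compute a dark channel over only the green and blue channels, in the spirit of the UDCP variant of Drews et al. [37] listed in Table 5.

```python
import numpy as np


def min_filter(x, radius):
    """Local minimum over a (2r+1) x (2r+1) window with edge padding."""
    p = np.pad(x, radius, mode="edge")
    h, w = x.shape
    out = np.full((h, w), np.inf)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out = np.minimum(out, p[dy:dy + h, dx:dx + w])
    return out


def dark_channel(img, radius=7, channels=(0, 1, 2)):
    """Minimum over the selected color channels, followed by a local minimum filter."""
    return min_filter(img[..., list(channels)].min(axis=2), radius)


def dcp_restore(img, radius=7, omega=0.95, t0=0.1, channels=(0, 1, 2)):
    """Restore a float RGB image in [0, 1]; returns (J, t, A)."""
    dark = dark_channel(img, radius, channels)
    n = max(1, dark.size // 1000)                            # top 0.1% of dark-channel pixels
    idx = np.argsort(dark.ravel())[-n:]
    A = img.reshape(-1, 3)[idx].mean(axis=0)                 # background light estimate
    t = 1.0 - omega * dark_channel(img / np.maximum(A, 1e-6), radius, channels)
    t = np.maximum(t, t0)                                    # avoid division by ~0
    J = (img - A) / t[..., None] + A                         # invert I = J*t + A*(1 - t)
    return np.clip(J, 0.0, 1.0), t, A


rng = np.random.default_rng(4)
hazy = 0.4 * rng.random((40, 40, 3)) + np.array([0.1, 0.4, 0.5])   # synthetic blue-green cast
J_dcp, t_dcp, A_dcp = dcp_restore(hazy)                      # classic DCP on R, G, B
J_udcp, t_udcp, A_udcp = dcp_restore(hazy, channels=(1, 2))  # UDCP-style: G and B only
print(A_dcp.round(3), float(t_dcp.mean()), float(t_udcp.mean()))
```

With the red channel included, a strongly attenuated red channel keeps the dark channel near zero and inflates the transmission estimate, which is the underwater failure mode that green-and-blue-only variants try to avoid.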

The DCP algorithm has excellent dehazing performance. When it is applied to underwater images, however, the dark channel becomes unreliable because water strongly absorbs red light, so the red channel is dark almost everywhere. Underwater DCP variants are therefore usually modified to account for this; Table 5 lists several of them.

Table 5. Underwater image restoration algorithms based on the DCP algorithm.

| Author | Algorithm | Contribution |
|---|---|---|
| Yang et al. [35] | Median filtering is used to estimate the depth of field, and a color correction method is introduced | Improves computation speed and contrast |
| Chiang et al. [36] | Combined wavelength compensation and image dehazing (WCID) | Corrects blurring in regions lit by artificial light |
| Drews et al. [37][38] | Underwater dark channel prior (UDCP) computed only on the blue and green channels | More obvious dehazing effect on underwater images |
| Galdran et al. [39] | DCP modified to minimize the inverted red channel together with the blue and green channels | Handles artificially lit regions and improves color fidelity |
| Li et al. [40] | Red channel corrected with the gray world algorithm; blue and green channels restored with DCP | Significantly improves visibility and contrast |
| Meng et al. [41] | Different strategies (color balance or DCP) are selected to restore the RGB channels, combined with maximum a posteriori (MAP) sharpening | Removes underwater color cast, reduces blur, improves visibility, and better retains foreground textures |

(3) Integral imaging-based methods

Integral imaging is based on a multi-lens stereo vision system: a lens array or camera array quickly captures the target from different perspectives, and all elemental images (each recording a three-dimensional object from a different perspective) are combined into an elemental image array (EIA) (Table 6).

Table 6. Underwater image restoration algorithms based on integral imaging.

| Author | Algorithm | Contribution |
|---|---|---|
| Cho et al. [42] | Uses statistical image processing and computational 3D reconstruction to remedy the effects of scattering | First report of 3D reconstruction of objects in turbid water using integral imaging |
| Lee et al. [43] | Applies spectral analysis and introduces a signal model with a visibility parameter to analyze the scattered signal | Reconstructed images show better color rendition, edges, and details |
| Satoru et al. [44] | Bayesian scattering suppression combined with maximum a posteriori estimation | Achieves a higher structural similarity index measure (SSIM) |
| Neumann et al. [45] | 3D reconstruction combined with the gray-world assumption applied in the Ruderman opponent color space | Takes locally varying luminance and chrominance into account |
| Bar et al. [46] | Single-shot multi-view circularly polarized speckle images captured by a lens array are combined with a deconvolution algorithm based on multiple medium sub-PSFs from different viewpoints | Improves recovery of objects hidden in turbid liquids |
| Li et al. [47] | Reconstructs images captured in a high-loss underwater environment using photon-limited computational algorithms | Improves PSNR in the high-noise regime |
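The computational reconstruction step that these integral imaging methods build on can be illustrated with a simple shift-and-average back-projection: each elemental image is shifted in proportion to its position in the lens (or camera) array and overlapping pixels are averaged, so structures at the depth matching the chosen shift line up while others blur out. Averaging many views also tends to suppress noise that is uncorrelated between views. The integer disparity `shift_px` is an assumed input (for a camera array it is roughly focal length × pitch / depth, converted to pixels), and the statistical, spectral, and Bayesian refinements in Table 6 are not included.

```python
import numpy as np


def ciir_reconstruct(elemental, shift_px):
    """Shift-and-average reconstruction of one depth plane.

    elemental: array (K, L, H, W) of grayscale elemental images from a K x L array.
    shift_px: integer disparity (pixels) between neighboring elemental images for that depth.
    """
    K, L, H, W = elemental.shape
    acc = np.zeros((H + (K - 1) * shift_px, W + (L - 1) * shift_px))
    count = np.zeros_like(acc)
    for k in range(K):
        for l in range(L):
            y, x = k * shift_px, l * shift_px
            acc[y:y + H, x:x + W] += elemental[k, l]     # back-project this view
            count[y:y + H, x:x + W] += 1                 # track overlap for averaging
    return acc / np.maximum(count, 1)


# Synthetic check: views are offset crops of one plane, corrupted by independent noise.
rng = np.random.default_rng(6)
scene = rng.random((24, 24))
s, K, L, H, W = 2, 5, 5, 16, 16
views = np.array([[scene[k * s:k * s + H, l * s:l * s + W] for l in range(L)] for k in range(K)])
noisy = views + rng.normal(0.0, 0.2, size=views.shape)
recon = ciir_reconstruct(noisy, s)
single_err = np.abs(noisy[0, 0] - views[0, 0]).mean()
center_err = np.abs(recon[8:16, 8:16] - scene[8:16, 8:16]).mean()
print(f"single-view error {single_err:.3f}, reconstructed (center) error {center_err:.3f}")
```

In the central region all 25 views overlap, so independent noise is averaged down by roughly a factor of five in standard deviation.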

3. Deep Learning-Based Underwater Image Enhancement Methods

3.1. Convolutional Neural Network Methods

LeCun et al. [5] proposed the convolutional neural network architecture LeNet. A convolutional neural network is a deep feedforward artificial neural network composed of multiple convolutional layers that effectively extract feature representations ranging from low-level details to high-level semantics, and it is widely used in computer vision. CNN-based underwater image enhancement algorithms can be divided into non-physical methods and methods combined with a physical model, depending on whether a physical imaging model is used for restoration.
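The basic operations named above (convolution with learned kernels, a nonlinearity, and pooling) can be written out directly in NumPy to show what a single CNN layer computes. This is an illustrative forward pass only: the kernels below are fixed, hypothetical examples (a local average and a horizontal-gradient filter), whereas a real network learns its kernels by backpropagation and runs on an optimized deep learning framework.

```python
import numpy as np


def conv2d(x, kernels, bias):
    """'Valid' 2-D convolution layer (implemented as cross-correlation, as in most frameworks).

    x: (C_in, H, W); kernels: (C_out, C_in, k, k); bias: (C_out,).
    Returns an array of shape (C_out, H - k + 1, W - k + 1).
    """
    c_out, _, k, _ = kernels.shape
    _, h, w = x.shape
    out = np.empty((c_out, h - k + 1, w - k + 1))
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch = x[:, i:i + k, j:j + k]                          # (C_in, k, k)
            out[:, i, j] = np.tensordot(kernels, patch, axes=3) + bias
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool2(x):
    """2 x 2 max pooling with stride 2 (a trailing odd row/column is dropped)."""
    c, h, w = x.shape
    x = x[:, : h // 2 * 2, : w // 2 * 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


rng = np.random.default_rng(7)
image = rng.random((3, 8, 8))                                   # tiny RGB input, channels first
average = np.full((3, 3, 3), 1.0 / 27.0)                        # low-level smoothing feature
grad_x = np.zeros((3, 3, 3))
grad_x[:, :, 0], grad_x[:, :, 2] = -1.0, 1.0                    # horizontal intensity change
kernels = np.stack([average, grad_x])                           # (C_out=2, C_in=3, 3, 3)
features = max_pool2(relu(conv2d(image, kernels, bias=np.zeros(2))))
print(features.shape)
```

Stacking many such layers, each operating on the previous layer's feature maps, is what lets deeper layers respond to larger and more abstract patterns.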

(1) Combined physical methods

Traditional model-based underwater image enhancement methods usually estimate the transmission map and other parameters of the underwater image from prior knowledge and hand-crafted strategies, so the estimates adapt poorly to varying conditions. Methods combined with the physical model mainly use the strong feature extraction ability of CNNs to estimate parameters of the imaging model, such as the transmission map. In this process, the CNN replaces the assumptions or prior knowledge used in traditional methods, such as the dark channel prior (Table 7).

Table 7. Underwater image enhancement methods using CNNs combined with a physical model.

| Author | Algorithm | Contribution |
|---|---|---|
| Shin et al. [48] | Learns the transmission map and background light of the underwater image simultaneously | Good dehazing performance |
| Ding et al. [49] | Adaptive color correction compensates the colors of underwater images and is combined with a CNN model | Reduces image blur |
| Wang et al. [50] | Color correction and dehazing are trained simultaneously, and a pixel disruption strategy is used to optimize training | Improves convergence speed and accuracy of learning |
| Barbosa et al. [51] | A set of image quality metrics guides the restoration process, and the model is trained on simulated data | Avoids the difficulty of measuring real scene data |
| Hou et al. [52] | Underwater residual CNN combined with residual learning | Combines data-driven and model-driven approaches |
| Cao et al. [53] | CNNs learn the background light and transmission map directly from the input image | Reveals more image details |
| Wang et al. [54] | Parallel CNNs estimate the transmission map and background light | Prevents halos and preserves edge features |
| Li et al. [55] | Underwater image enhancement network using medium transmission-guided multi-color space embedding | Exploits multi-color space embedding and combines the advantages of physical model-based and learning-based methods |

(2) Non-physical model methods

In non-physical model methods, the raw underwater image is fed into the network, and, relying on the strong learning ability of CNNs, the enhanced image is output directly after convolution, pooling, deconvolution, and other operations (Table 8).

Table 8. Underwater image enhancement methods based on non-physical model CNNs.

| Author | Algorithm | Contribution |
|---|---|---|
| Perez et al. [56] | Deep learning is used to learn a mapping between degraded and clear images | First use of deep learning for underwater image enhancement |
| Sun et al. [57] | Underwater images are enhanced with an encoder–decoder structure | Significant denoising and enhanced image details |
| Li et al. [58] | Gated fusion network combined with white balance, histogram equalization, and gamma correction | Provides a reference model for underwater image enhancement with good generalization |
| Li et al. [59] | Training data are synthesized from the physical imaging model and the optical characteristics of underwater scenes and used to train the network | Retains the original structure and texture while reconstructing a clear underwater image |
| Naik et al. [60] | Shallow neural network built from convolutional blocks with skip connections | Maintains performance with fewer parameters and faster speed |
| Han et al. [61] | Deep supervised residual dense network with residual dense blocks, residual path blocks between the encoder and decoder, and deep supervision to guide training | Retains local image details while performing color restoration and dehazing |
| Yang et al. [62] | A non-parametric layer for preliminary color correction and parametric layers for self-adaptive refinement form a trainable end-to-end model | Results with better details, contrast, and colorfulness |
| Wang et al. [63] | Integrates the RGB and HSV color spaces in a single CNN | Addresses the insensitivity of the RGB color space to image properties such as luminance and saturation |

3.2. Generative Adversarial Network-Based Methods

Generative adversarial networks (GANs) were proposed by Goodfellow et al. [6]. A GAN produces better outputs through adversarial training of a generator and a discriminator. The generator learns to produce images as similar to real images as possible so that the discriminator cannot distinguish real from generated images, while the discriminator learns to judge whether an image is generated or real. As long as the discriminator is not fooled, the generator continues to learn. The process is shown in Figure 5. In underwater enhancement, the input of the generator is a low-quality image and its output is a generated (enhanced) image. The inputs of the discriminator are the generated image and real samples, and its output is the probability, between 0 and 1, that the input image is real. As an excellent generative model, the GAN is widely used in image generation, image enhancement and restoration, and image style transfer (Tables 9 and 10).
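The adversarial objective described above is usually written as a pair of binary cross-entropy losses on the discriminator's output probabilities. The sketch below shows the standard discriminator loss and the non-saturating generator loss suggested by Goodfellow et al. [6]; the probability values are made-up numbers standing in for discriminator outputs, and the enhancement methods in Tables 9 and 10 add further terms (for example content, perceptual, gradient, or cycle-consistency losses) on top of this.

```python
import numpy as np


def bce(prob, target, eps=1e-7):
    """Mean binary cross-entropy between probabilities in (0, 1) and 0/1 targets."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))


def discriminator_loss(d_real, d_fake):
    """D should output 1 for real reference images and 0 for generated (enhanced) ones."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake):
    """Non-saturating loss: G is rewarded when D judges its outputs to be real."""
    return bce(d_fake, np.ones_like(d_fake))


d_real = np.array([0.9, 0.8, 0.95])     # hypothetical D probabilities on real clear images
d_fake = np.array([0.1, 0.3, 0.2])      # hypothetical D probabilities on generated images
print(f"D loss {discriminator_loss(d_real, d_fake):.3f}, G loss {generator_loss(d_fake):.3f}")
```

Here the discriminator is winning, so its loss is low and the generator's loss is high; training alternates updates that lower each loss in turn.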

Table 9. Underwater image enhancement methods based on CGAN.

| Author | Algorithm | Contribution |
|---|---|---|
| Li et al. [64] | WaterGAN, an underwater image generative adversarial network that synthesizes underwater training images from in-air images and depth maps | Constructs a two-stage deep learning network using raw underwater images, real in-air color images, and depth maps |
| Guo et al. [65] | Multiscale dense generative adversarial network (UWGAN) | Multiscale operations, dense concatenation, and residual learning improve performance, render more detail, and make full use of features |
| Liu et al. [66] | Multiscale feature fusion network for underwater image color correction (MLFcGAN), which fuses multiscale global and local features in the generator | Enables more effective and faster online learning |
| Yang et al. [67] | Dual discriminator designed to capture local and global semantic information and thereby constrain the multiscale generator | Generated images are more realistic and natural |
| Li et al. [68] | Simple and effective fusion adversarial network that employs a fusion method and combines four different losses | Corrects color and performs well in both qualitative and quantitative evaluations |
| Liu et al. [69] | Combines the Akkaynak–Treibitz model with a generative adversarial network | Produces clear results with good white balance that are visually close to the ground-truth images |

Table 10. Underwater image enhancement and restoration based on CycleGAN.

| Author | Algorithm | Contribution |
|---|---|---|
| Fabbri et al. [70] | Unpaired underwater images are used for training; the generated clear images and corresponding degraded images then form a training set | Absolute error (L1) loss and gradient loss are added to the loss function |
| Lu et al. [71] | Underwater image restoration based on a multiscale CycleGAN; an adaptive restoration process uses the dark channel prior to obtain the depth information of underwater images | Improves image quality and detail structure, with good contrast enhancement and color correction |
| Park et al. [72] | Adds a pair of discriminators to CycleGAN and introduces an adaptive weighting method to weight the losses of the two discriminators | Stable training process |
| Islam et al. [73] | Supervises training based on global content, image content, local texture, and style information | Good color restoration and sharpening; processing is fast enough for underwater video enhancement |
| Hu et al. [74] | Adds the natural image quality evaluator (NIQE) index to the GAN | Generates images with higher contrast and aims to surpass the reference images in existing datasets |
| Zhang et al. [75] | End-to-end dual generative adversarial network (DuGAN) that uses two discriminators for adversarial training on different image regions | Restores detail textures and color degradations |
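Fabbri et al. [70] add an absolute-error term and a gradient term to the adversarial loss. A minimal sketch of such terms is shown below, assuming a plain L1 loss and a gradient difference loss that compares the absolute horizontal and vertical finite-difference gradients of the output and the reference; the exact formulation and the weights `w_l1` and `w_gdl` here are hypothetical. In training, this content loss would be added to the generator's adversarial loss.

```python
import numpy as np


def l1_loss(pred, target):
    """Mean absolute error."""
    return float(np.mean(np.abs(pred - target)))


def gradient_difference_loss(pred, target, alpha=1.0):
    """Compare absolute finite-difference gradients along the two image axes."""
    def abs_grads(x):
        return np.abs(np.diff(x, axis=0)), np.abs(np.diff(x, axis=1))
    (py, px), (ty, tx) = abs_grads(pred), abs_grads(target)
    return float(np.mean(np.abs(py - ty) ** alpha) + np.mean(np.abs(px - tx) ** alpha))


def content_loss(pred, target, w_l1=1.0, w_gdl=1.0):
    """Hypothetical weighted sum of the two terms."""
    return w_l1 * l1_loss(pred, target) + w_gdl * gradient_difference_loss(pred, target)


rng = np.random.default_rng(8)
reference = rng.random((16, 16, 3))
brighter = reference + 0.1                                     # global offset: edges unchanged
blurred = (reference + np.roll(reference, 1, axis=1)) / 2.0    # edges weakened
for name, pred in [("brighter", brighter), ("blurred", blurred)]:
    print(name, round(l1_loss(pred, reference), 3),
          round(gradient_difference_loss(pred, reference), 3))
```

The two terms are complementary: a global brightness offset is penalized only by the L1 term, while blurring is penalized mainly by the gradient term, which is why the gradient term helps keep generated images sharp.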

4. Underwater Video Enhancement

With the development of underwater video acquisition and data communication technology, real-time underwater video transmission has become possible. Because underwater video contains spatiotemporal information and motion characteristics, it has broader application prospects than underwater images in ocean development. Owing to the same optical properties, underwater video suffers from problems similar to those of underwater images, such as color bias, blur, low contrast, and uneven illumination. In addition, water currents disturb the acquisition equipment, so the texture features and details of moving objects are weakened or lost. These problems seriously limit the ability of underwater video systems to capture scene and object features accurately. Unlike atmospheric video enhancement, which tends to address blur and jitter, underwater video enhancement focuses more on the harmful effects of the unique optical environment on color and visibility.

Compared with underwater image enhancement, underwater video enhancement is more complicated, and research in this direction has not yet reached a mature stage. Most existing underwater video enhancement methods are extensions of single-image enhancement algorithms: each frame is enhanced independently, and the enhanced frames are then concatenated into a new video. Because the estimated transmission maps and background light differ between frames, the continuity of the enhanced video is not well maintained, and temporal artifacts and interframe flicker can occur (Table 11).

Table 11. Underwater video enhancement algorithms.

| Author | Algorithm | Contribution |
|---|---|---|
| Tang et al. [19] | Extracts features from downsampled low-resolution images; downsampling and an IIR Gaussian filter form a fast filter for the two-dimensional convolution | Effectively relieves the computational load that grows with filter scale |
| Li et al. [59] | Each convolutional layer in the UWCNN structure is not connected to the other convolutional layers in the same block, and the network uses no fully connected layers or batch normalization | The network is only 10 layers deep, which reduces computational cost and makes it easy to train |
| Islam et al. [73] | The generator learns only 256 feature maps of size 8 × 8, and the discriminator relies only on patch-level information | The entire network requires only 17 MB of memory and computes efficiently |
| Ancuti et al. [23] | A temporal bilateral filtering strategy is applied to the white-balanced video frames, adding temporal information to the bilateral filter | Enhances sharpness, improves the stability of smooth regions, smooths transitions between frames, and maintains temporal coherence |
| Li et al. [76] | Depth cues from stereo matching and fog information reinforce each other; the fog transmission of each pixel is computed directly from the scene depth and the estimated fog density | Removes the airlight–albedo ambiguity in defogging and maintains the temporal consistency of the defogged video |
| Qing et al. [77] | Subsequent frames are guided by the grayscale image of the first frame and combined with the background light estimate of the current frame to avoid frequent changes in the atmospheric light value | Reduces computational complexity and the flicker caused by changes in the transmission map and atmospheric light value |
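A simple way to see why stabilizing the background light helps against flicker is to smooth the per-frame estimates over time before restoring each frame. The sketch below applies an exponential moving average to hypothetical per-frame RGB background-light estimates. It illustrates the general idea of avoiding frequent changes in the background light, as pursued by Qing et al. [77], but it is not their guidance-based method, and the `momentum` value is an assumption that trades responsiveness to genuine scene changes against temporal stability.

```python
import numpy as np


def smooth_background_light(per_frame_A, momentum=0.9):
    """Exponential moving average of per-frame background-light estimates.

    per_frame_A: array (T, 3), one RGB estimate per frame.
    momentum: smoothing factor in [0, 1); larger values change more slowly.
    """
    per_frame_A = np.asarray(per_frame_A, dtype=float)
    smoothed = np.empty_like(per_frame_A)
    smoothed[0] = per_frame_A[0]                      # the first frame initializes the estimate
    for k in range(1, len(per_frame_A)):
        smoothed[k] = momentum * smoothed[k - 1] + (1.0 - momentum) * per_frame_A[k]
    return smoothed


# Per-frame estimates that jitter around a stable value (a typical source of flicker).
T = 30
jitter = 0.05 * (-1.0) ** np.arange(T)
raw = np.stack([0.20 + jitter, 0.55 + jitter, 0.65 + jitter], axis=1)
smooth = smooth_background_light(raw)
print("mean frame-to-frame change:",
      np.abs(np.diff(raw, axis=0)).mean().round(4), "->",
      np.abs(np.diff(smooth, axis=0)).mean().round(4))
```

Each frame would then be restored with its smoothed background light instead of the raw per-frame estimate, so small estimation errors no longer translate into frame-to-frame brightness and color jumps.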

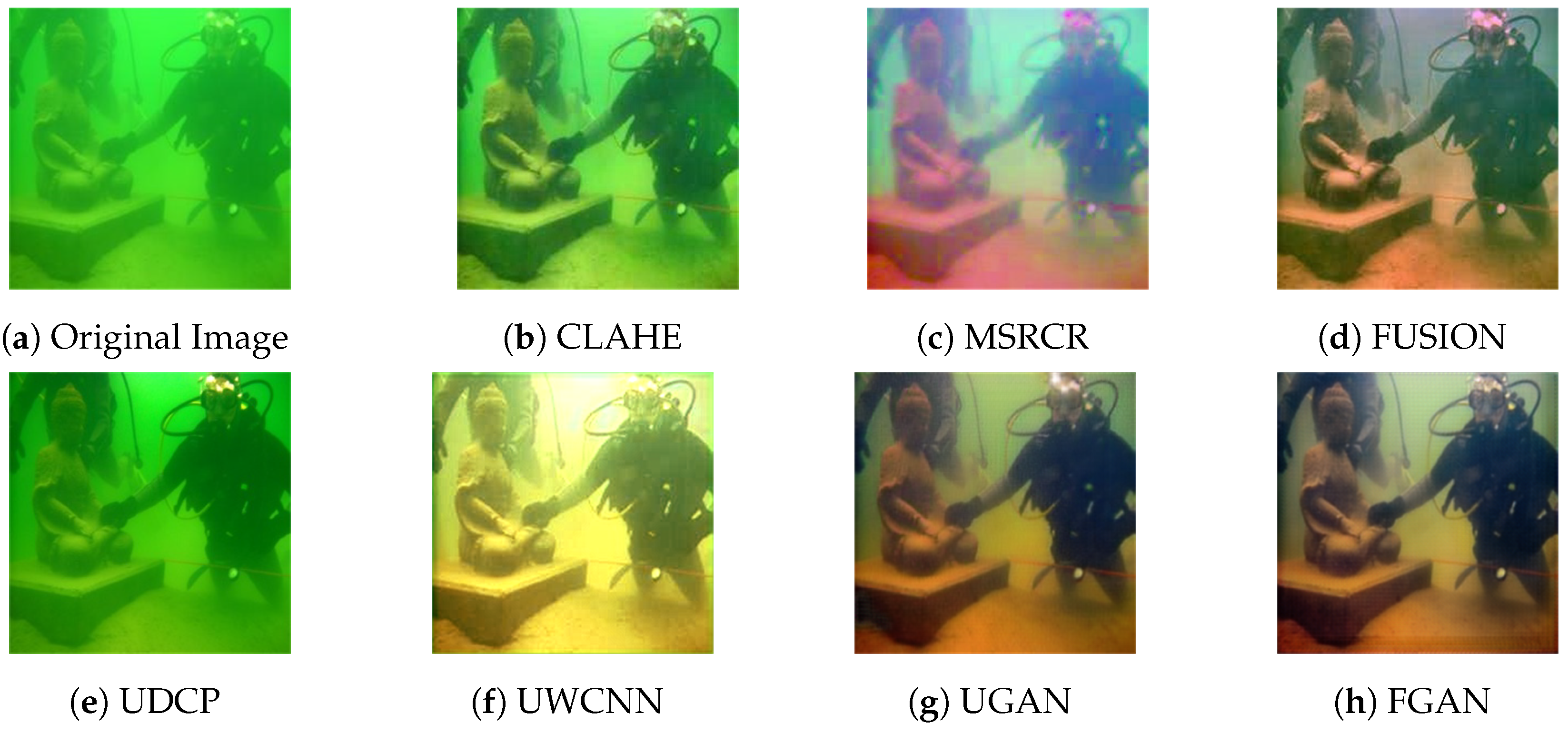

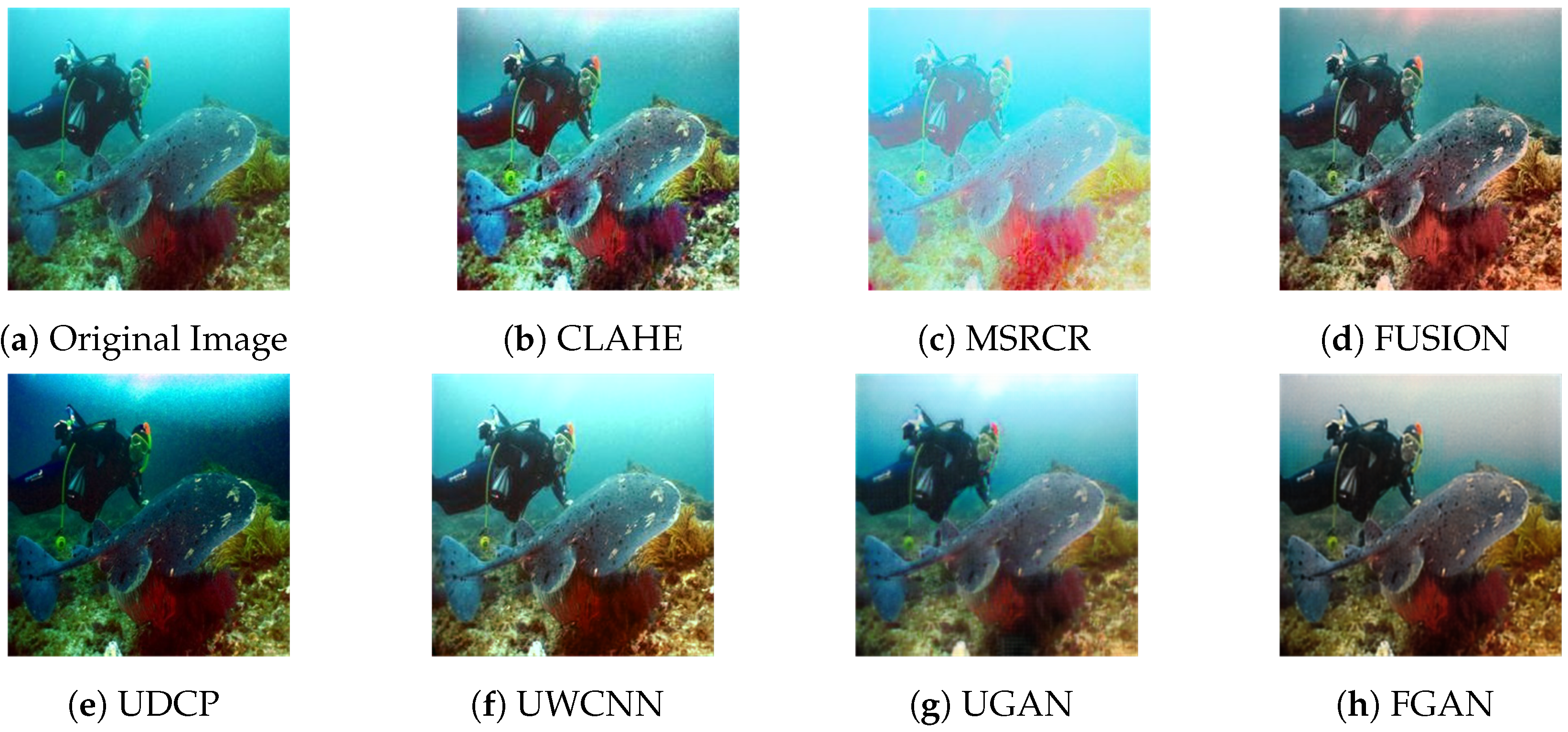

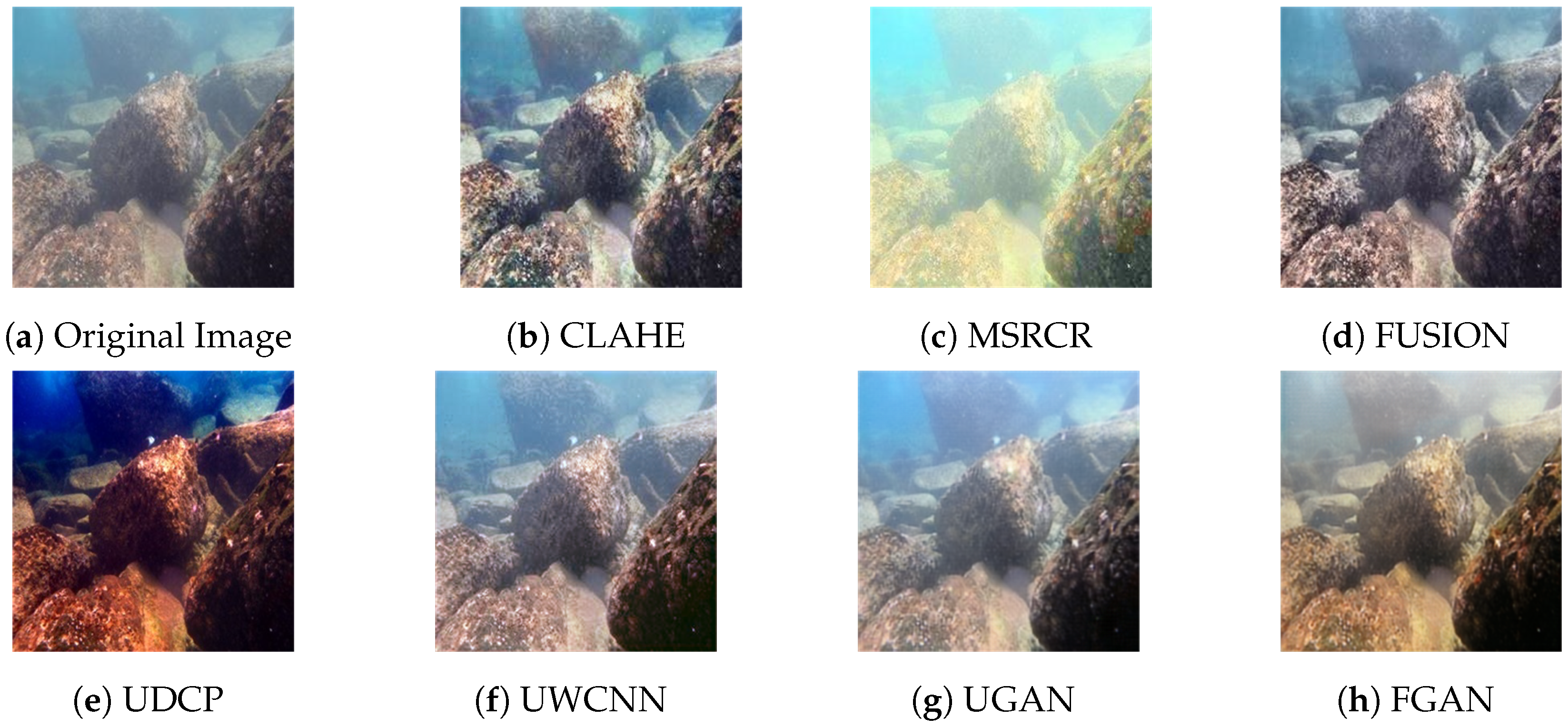

To verify the performance of these algorithms, several typical algorithms from different categories were selected: CLAHE [78], MSRCR [16], FUSION [24], UDCP [37], UWCNN [59], UGAN [70], and FGAN [73]. They were tested on a public underwater test dataset (U45) [79], which contains the color casts, low contrast, and haze-like effects of underwater degradation that are typical of low-quality underwater images. The results are shown in Figure 3, Figure 4, and Figure 5.

Figure 3. Enhanced results of color casts.


Figure 4. Enhanced results of low contrast.


Figure 5. Enhanced results of haze.


References

- McGlamery, B.L. A Computer Model For Underwater Camera Systems. Int. Soc. Opt. Photonics 1980, 208, 221–231.

- Jaffe, J.S. Computer modeling and the design of optimal underwater imaging systems. IEEE J. Ocean. Eng. 1990, 15, 101–111.

- Cong, R.M.; Zhang, Y.M.; Zhang, C.; Li, Y.Z.; Zhao, Y. Research progress of deep learning driven underwater image enhancement and restoration. J. Signal Process. 2020, 36, 1377–1389.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, USA, 8–13 December 2014; p. 27.

- Hummel, R. Image enhancement by histogram transformation. Comput. Graph. Image Process. 1977, 6, 184–195.

- Iqbal, K.; Odetayo, M.; James, A.; Salam, R.A.; Talib, A.Z.H. Enhancing the low quality images using unsupervised colour correction method. IEEE Int. Conf. Syst. Man Cybern. 2010, 1703–1709.

- Ghani, A.S.A.; Isa, N.A.M. Enhancement of low quality underwater image through integrated global and local contrast correction. Appl. Soft Comput. 2015, 37, 332–344.

- Ghani, A.S.A.; Isa, N.A.M. Automatic system for improving underwater image contrast and color through recursive adaptive histogram modification. Comput. Electron. Agric. 2017, 141, 181–195.

- Li, C.-Y.; Guo, J.-C.; Cong, R.-M.; Pang, Y.-W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 99, 1.

- Li, X.; Hou, G.; Tan, L.; Liu, W. A hybrid framework for underwater image enhancement. IEEE Access 2020, 8, 197448–197462.

- Fu, X.; Zhuang, P.; Huang, Y.; Liao, Y.; Zhang, X.P.; Ding, X. A retinex-based enhancing approach for single underwater image. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4572–4576.

- Bianco, G.; Muzzupappa, M.; Bruno, F.; Garcia, R.; Neumann, L. A new color correction method for underwater imaging. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 25.

- Zhang, S.; Wang, T.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale retinex. Neurocomputing 2017, 245, 1–9.

- Mercado, M.A.; Ishii, K.; Ahn, J. Deep-sea image enhancement using multi-scale retinex with reverse color loss for autonomous underwater vehicles. In Proceedings of the OCEANS 2017-Anchorage, Anchorage, AK, USA, 18–21 September 2017; pp. 1–6.

- Li, S.; Li, H.; Xin, G. Underwater image enhancement algorithm based on improved retinex method. Comput. Sci. Appl. 2018, 8, 9–15.

- Zhang, W.; Dong, L.; Pan, X.; Zhou, J.; Qin, L.; Xu, W. Single image defogging based on multi-channel convolutional MSRCR. IEEE Access 2019, 7, 72492–72504.

- Tang, C.; von Lukas, U.F.; Vahl, M.; Wang, S.; Wang, Y.; Tan, M. Efficient underwater image and video enhancement based on retinex. Signal Image Video Process. 2019, 13, 1011–1018.

- Hu, K.; Zhang, Y.; Lu, F.; Deng, Z.; Liu, Y. An underwater image enhancement algorithm based on MSR parameter optimization. J. Mar. Sci. Eng. 2020, 8, 741.

- Tang, Z.; Jiang, L.; Luo, Z. A new underwater image enhancement algorithm based on adaptive feedback and retinex algorithm. Multimed. Tools Appl. 2021, 312, 1–13.

- Zhuang, P.; Li, C.; Wu, J. Bayesian retinex underwater image enhancement. Eng. Appl. Artif. Intell. 2021, 101, 104171.

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 2017, 27, 379–393.

- Ancuti, C.; Ancuti, C.O.; De Vleeschouwer, C.; Garcia, R.; Bovik, A.C. Multi-scale underwater descattering. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 4202–4207.

- Pan, P.-W.; Yuan, F.; Cheng, E. Underwater image de-scattering and enhancing using DehazeNet and HWD. J. Mar. Sci. Technol. 2018, 26, 6.

- Chang, H.-H. Single underwater image restoration based on adaptive transmission fusion. IEEE Access 2020, 8, 38650–38662.

- Gao, F.; Wang, K.; Yang, Z.; Wang, Y.; Zhang, Q. Underwater image enhancement based on local contrast correction and multi-scale fusion. J. Mar. Sci. Eng. 2021, 9, 225.

- Song, H.; Wang, R. Underwater image enhancement based on multi-scale fusion and global stretching of dual-model. Mathematics 2021, 9, 595.

- Schechner, Y.Y.; Karpel, N. Clear underwater vision. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; p. I.

- Namer, E.; Shwartz, S.; Schechner, Y.Y. Skyless polarimetric calibration and visibility enhancement. Opt. Express 2009, 17, 472–493.

- Chen, Z.; Wang, H.; Shen, J.; Li, X.; Xu, L. Region-specialized underwater image restoration in inhomogeneous optical environments. Optik 2014, 125, 2090–2098.

- Han, P.; Liu, F.; Yang, K.; Ma, J.; Li, J.; Shao, X. Active underwater descattering and image recovery. Appl. Opt. 2017, 56, 6631–6638.

- Sánchez-Ferreira, C.; Coelho, L.S.; Ayala, H.V.; Farias, M.C.; Llanos, C.H. Bio-inspired optimization algorithms for real underwater image restoration. Signal Process. Image Commun. 2019, 77, 49–65.

- Yang, H.-Y.; Chen, P.-Y.; Huang, C.-C.; Zhuang, Y.-Z.; Shiau, Y.-H. Low complexity underwater image enhancement based on dark channel prior. In Proceedings of the 2011 Second International Conference on Innovations in Bio-inspired Computing and Applications, Shenzhen, China, 16–18 December 2011; pp. 17–20.

- Chiang, J.Y.; Chen, Y.-C. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2011, 21, 1756–1769.

- Drews, P.; Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission estimation in underwater single images. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 2–8 December 2013; pp. 825–830.

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 2015.

- Li, C.; Guo, J.; Pang, Y.; Chen, S.; Wang, J. Single underwater image restoration by blue-green channels dehazing and red channel correction. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1731–1735.

- Meng, H.; Yan, Y.; Cai, C.; Qiao, R.; Wang, F. A hybrid algorithm for underwater image restoration based on color correction and image sharpening. Multimed. Syst. 2020, 1–11.

- Cho, M.; Javidi, B. Three-dimensional visualization of objects in turbid water using integral imaging. J. Disp. Technol. 2010, 6, 544–547.

- Lee, Y.; Yoo, H. Three-dimensional visualization of objects in scattering medium using integral imaging and spectral analysis. Opt. Lasers Eng. 2016, 77, 31–38.

- Komatsu, S.; Javidi, B. Three-dimensional integral imaging visualization in scattering medium with Bayesian estimation. In Proceedings of the 2018 17th Workshop on Information Optics (WIO), Quebec, QC, Canada, 16–19 July 2018; pp. 1–3.

- Neumann, L.; Garcia, R.; Jánosik, J.; Gracias, N. Fast underwater color correction using integral images. Instrum. Viewp. 2018, 20, 53–54.

- Shpilman, B.; Abookasis, D. Experimental results of imaging objects in turbid liquid integrating multiview circularly polarized speckle images and deconvolution method. Opt. Laser Technol. 2020, 121, 105774.

- Li, Z.M.; Zhou, H.; Li, Z.Y.; Yan, Z.Q.; Hu, C.Q.; Gao, J.; Jin, X.M. Thresholded single-photon underwater imaging and detection. Opt. Express 2021, 29, 28124–28133.

- Shin, Y.-S.; Cho, Y.; Pandey, G.; Kim, A. Estimation of ambient light and transmission map with common convolutional architecture. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–7.

- Ding, X.; Wang, Y.; Zhang, J.; Fu, X. Underwater image dehaze using scene depth estimation with adaptive color correction. In Proceedings of the OCEANS 2017-Aberdeen, Aberdeen, UK, 19–22 June 2017; pp. 1–5.

- Wang, Y.; Zhang, J.; Cao, Y.; Wang, Z. A deep CNN method for underwater image enhancement. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 1382–1386.

- Barbosa, W.V.; Amaral, H.G.B.; Rocha, T.L.; Nascimento, E.R. Visual-quality-driven learning for underwater vision enhancement. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3933–3937.

- Hou, M.; Liu, R.; Fan, X.; Luo, Z. Joint residual learning for underwater image enhancement. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4043–4047.

- Cao, K.; Peng, Y.-T.; Cosman, P.C. Underwater image restoration using deep networks to estimate background light and scene depth. In Proceedings of the 2018 IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), Las Vegas, NV, USA, 8–10 April 2018; pp. 1–4.

- Wang, K.; Hu, Y.; Chen, J.; Wu, X.; Zhao, X.; Li, Y. Underwater image restoration based on a parallel convolutional neural network. Remote Sens. 2019, 11, 1591.

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

- Perez, J.; Attanasio, A.C.; Nechyporenko, N.; Sanz, P.J. A deep learning approach for underwater image enhancement. In Proceedings of the International Work-Conference on the Interplay Between Natural and Artificial Computation, IWINAC 2017, Corunna, Spain, 19–23 June 2017; pp. 183–192.

- Sun, X.; Liu, L.; Li, Q.; Dong, J.; Lima, E.; Yin, R. Deep pixel-to-pixel network for underwater image enhancement and restoration. IET Image Process. 2019, 13, 469–474.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed model for underwater image enhancement (student abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021; pp. 15853–15854.

- Han, Y.; Huang, L.; Hong, Z.; Cao, S.; Zhang, Y.; Wang, J. Deep supervised residual dense network for underwater image enhancement. Sensors 2021, 21, 3289.

- Yang, X.; Li, H.; Chen, R. Underwater image enhancement with image colorfulness measure. Signal Process. Image Commun. 2021, 95, 116225.

- Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC^2-Net: CNN-based underwater image enhancement using two color space. Signal Process. Image Commun. 2021, 96, 116250.

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

- Guo, Y.; Li, H.; Zhuang, P. Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J. Ocean. Eng. 2019, 45, 862–870.

- Liu, X.; Gao, Z.; Chen, B.M. MLFcGAN: Multilevel feature fusion-based conditional GAN for underwater image color correction. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1488–1492.

- Yang, M.; Hu, K.; Du, Y.; Wei, Z.; Sheng, Z.; Hu, J. Underwater image enhancement based on conditional generative adversarial network. Signal Process. Image Commun. 2020, 81, 115723.

- Li, H.; Zhuang, P. DewaterNet: A fusion adversarial real underwater image enhancement network. Signal Process. Image Commun. 2021, 95, 116248.

- Liu, X.; Gao, Z.; Chen, B.M. IPMGAN: Integrating physical model and generative adversarial network for underwater image enhancement. Neurocomputing 2021, 453, 538–551.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

- Lu, J.; Li, N.; Zhang, S.; Yu, Z.; Zheng, H.; Zheng, B. Multi-scale adversarial network for underwater image restoration. Opt. Laser Technol. 2019, 110, 105–113.

- Park, J.; Han, D.; Ko, H. Adaptive weighted multi-discriminator CycleGAN for underwater image enhancement. J. Mar. Sci. Eng. 2019, 7, 200.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Hu, K.; Zhang, Y.; Weng, C.; Wang, P.; Deng, Z.; Liu, Y. An underwater image enhancement algorithm based on generative adversarial network and natural image quality evaluation index. J. Mar. Sci. Eng. 2021, 9, 691.

- Zhang, H.; Sun, L.; Wu, L.; Gu, K. DuGAN: An effective framework for underwater image enhancement. IET Image Process. 2021, 15, 2010–2019.

- Li, Z.; Tan, P.; Tan, R.T.; Zou, D.; Zhou, S.Z.; Cheong, L.-F. Simultaneous video defogging and stereo reconstruction. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4988–4997.

- Qing, C.; Yu, F.; Xu, X.; Huang, W.; Jin, J. Underwater video dehazing based on spatial–temporal information fusion. Multidimens. Syst. Signal Process. 2016, 27, 909–924.

- Zuiderveld, K. Contrast limited adaptive histogram equalization. Graph. Gems 1994, 474–485.

- Li, H.; Li, J.; Wang, W. A Fusion Adversarial Underwater Image Enhancement Network with a Public Test Dataset. Image and Video Processing. Available online: https://arxiv.org/abs/1906.06819 (accessed on 30 June 2019).