Underwater video images, as the primary carriers of underwater information, play a vital role in human exploration and development of the ocean. Due to the absorption and scattering of incident light by water bodies, the video images collected underwater generally appear blue-green and have an apparent fog-like effect. In addition, blur, low contrast, color distortion, more noise, unclear details, and limited visual range are the typical problems that degrade the quality of underwater video images.

Underwater vision enhancement uses computer technology to process degraded underwater images and convert the original low-quality images into high-quality ones. Vision enhancement technology effectively addresses the color bias, low contrast, and haze of the original underwater video images. Enhanced video images improve visual perception and are beneficial for subsequent visual tasks. Therefore, underwater video image enhancement technology has important scientific significance and application value.

- underwater vision

- video/image enhancement

- deep learning

1. Introduction

Visual information, which plays an essential role in detecting and perceiving the environment, is easy for underwater vehicles to obtain. However, due to the many uncertainties in the aquatic environment and the influence of water on light absorption and scattering, the quality of directly captured underwater images can degrade significantly. Large amounts of solvents, particulate matter, and other inhomogeneous media in the water cause less light to enter the camera than in the natural environment. According to the Beer–Lambert–Bouguer law, light attenuates exponentially with the distance it travels through the medium. Therefore, the attenuation model of light propagating underwater is expressed as:

$$E(r) = E(0)\,e^{-(a+b)r} \tag{1}$$

In Equation (1), E is the illumination of light, r is the distance, a is the absorption coefficient of the water body, and b is the scattering coefficient of the water body. The sum of a and b is the total attenuation coefficient of the medium.
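
As a rough illustration of this attenuation model, the sketch below assumes the standard exponential form E(r) = E(0)·exp(−(a + b)·r) implied by the Beer–Lambert–Bouguer law. The function name and the per-color coefficients are hypothetical values chosen only to show the trend that red light fades faster than blue light; they are not measurements.

```python
import math

def attenuated_illumination(e0, r, a, b):
    """Illumination remaining after light travels r metres through water.

    e0: illumination at r = 0
    a:  absorption coefficient of the water body (1/m)
    b:  scattering coefficient of the water body (1/m)
    """
    c = a + b  # total attenuation coefficient of the medium
    return e0 * math.exp(-c * r)

# Hypothetical (a, b) pairs, for illustration only.
coefficients = {"red": (0.35, 0.05), "green": (0.07, 0.03), "blue": (0.03, 0.02)}

for color, (a, b) in coefficients.items():
    remaining = attenuated_illumination(1.0, 5.0, a, b)
    print(f"{color}: {remaining:.3f} of the initial illumination remains at 5 m")
```

Because the decay is exponential in r, doubling the distance squares the remaining fraction, which is why strongly absorbed wavelengths vanish within a few meters.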

The process of underwater imaging is shown in Figure 1. As light travels through water, it is absorbed and scattered, and water absorbs light of different wavelengths to different degrees. As shown in Figure 1, red light attenuates the fastest and disappears at about 5 meters underwater, whereas blue and green light attenuate slowly; blue light disappears at about 60 meters underwater. Scattering by suspended particles and other media causes light to change direction during transmission and spread unevenly. The scattering process is influenced by the properties of the medium, the light, and polarization. McGlamery et al. [2] presented a model for calculating underwater camera systems: the irradiance of non-scattered, scattered, and backscattered light is calculated from the input geometry, source properties, and optical properties of the water, and parameters such as contrast, transmittance, and signal-to-noise ratio are then obtained. The classical Jaffe–McGlamery [3] underwater imaging model was subsequently proposed. It states that the total illuminance entering the camera is a linear superposition of the direct component, the forward-scattered component, and the backscattered component:

$$E_T = E_d + E_j + E_b \tag{2}$$

In the equation, Ed, Ej, and Eb represent the direct irradiation, forward scattering, and backscattering components, respectively. The direct irradiation component is the light reflected directly from the surface of the object into the receiver. The forward scattering component is the light reflected by the target object that is deflected at a small angle by suspended particles in the water on its way to the receiver. Backscattering refers to illumination light that reaches the receiver after being scattered by the water body. In general, the forward scattering of light attenuates more energy than the backscattering of light.

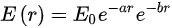

Due to the absorption and scattering of incident light by water bodies, the video images collected underwater generally appear blue-green and have an apparent fog-like effect. In addition, blur, low contrast, color distortion, more noise, unclear details, and limited visual range are the typical problems that degrade the quality of underwater video images [4]. Figure 1 shows some low-quality underwater images.

Fig 1. Some low-quality underwater images

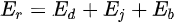

The existing underwater image enhancement techniques are classified and summarized in Figure 2. Current algorithms are mainly divided into traditional methods and deep learning-based methods. Traditional methods include model-based and non-model methods. Non-model enhancement methods, such as the histogram algorithm, enhance the visual effect directly through pixel changes without considering the imaging principle. Model-based enhancement is also known as image restoration: according to the imaging model, the relationship between the clear image, the degraded image, and the transmission map is estimated, and the clear image is derived, for example by the dark channel prior (DCP) algorithm [10]. With the rapid development of deep learning and its excellent performance in computer vision, underwater image enhancement based on deep learning is also developing rapidly. Deep learning-based methods can be divided into those based on convolutional neural networks (CNN) [11] and those based on generative adversarial networks (GAN) [12]. Most existing video enhancement techniques are extensions of underwater single-image enhancement techniques to the video field. Since underwater video enhancement technology is not yet fully mature, this paper does not classify it for the time being.

Fig 2. Classification of underwater image enhancement methods

2. Traditional underwater image enhancement methods

2.1. Non-physical model enhancement methods

Because of the unique underwater optical environment, traditional image enhancement methods have limitations when applied directly to underwater images, so many targeted algorithms have been proposed, including histogram-based, Retinex-based, and image fusion-based algorithms.

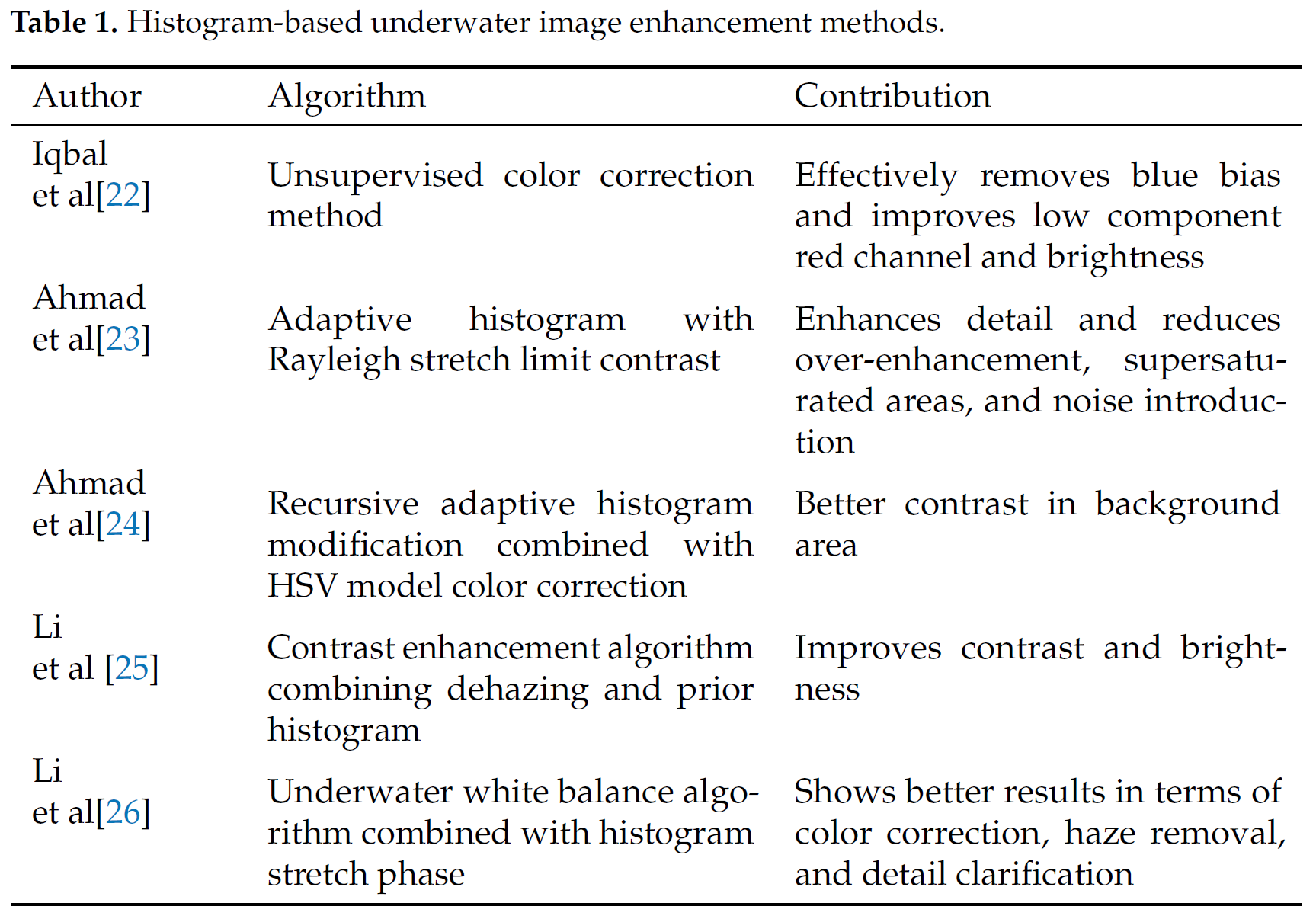

(1) Histogram-based methods

Image enhancement based on the histogram equalization (HE) algorithm [19] transforms the image histogram from a narrow unimodal distribution into a balanced one. As a result, the enhanced image has roughly the same number of pixels at most gray levels.
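
The idea can be sketched for a single-channel image as follows. This is a minimal, dependency-free illustration of global histogram equalization using the cumulative distribution of gray levels, not the specific algorithm of [19]; the function name `equalize_histogram` and the tiny test image are assumptions made for the example, and practical code would normally operate on arrays rather than nested lists.

```python
def equalize_histogram(image, levels=256):
    """Histogram-equalize a grayscale image given as rows of integer gray levels."""
    pixels = [p for row in image for p in row]

    # Histogram: number of pixels at each gray level.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution of the gray levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if cdf_min == n:  # constant image: nothing to stretch
        return [row[:] for row in image]

    # Lookup table that spreads the occupied levels over the full range.
    lut = [max(round((c - cdf_min) / (n - cdf_min) * (levels - 1)), 0) for c in cdf]
    return [[lut[p] for p in row] for row in image]

# A low-contrast image whose gray levels are squeezed into 100..103.
low_contrast = [[100, 101, 102, 103],
                [100, 101, 102, 103]]
print(equalize_histogram(low_contrast))
```

In this toy case the four neighboring gray levels are stretched to 0, 85, 170, and 255, which is the widening of a narrow histogram that the text describes.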

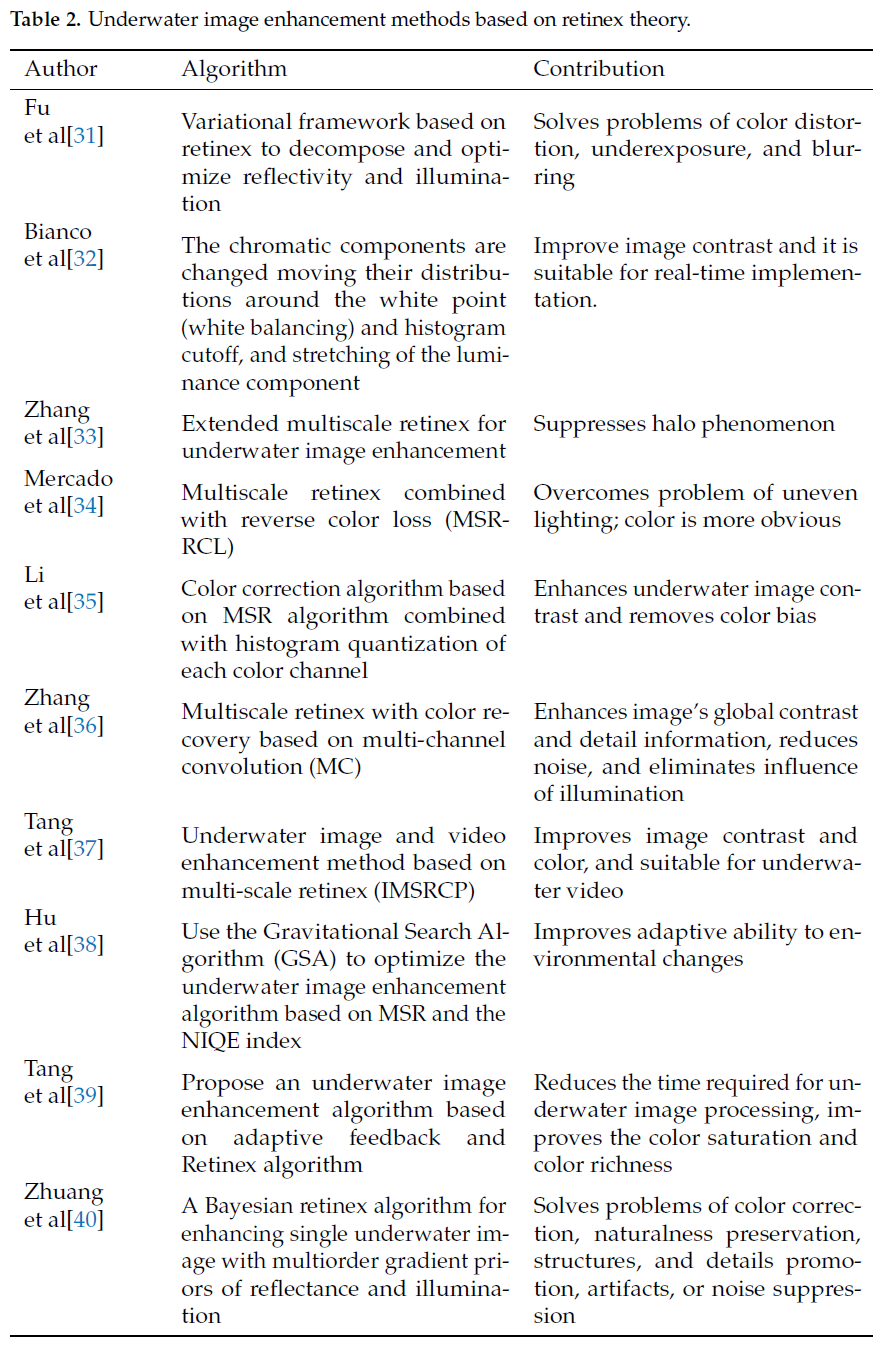

(2) Retinex-based methods

Retinex theory, which is based on color constancy, recovers the true appearance of the scene by eliminating the influence of the illumination component on the color of the object and removing uneven illumination.
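
One common way to put this into practice is single-scale Retinex, which estimates the illumination with a Gaussian blur and subtracts it from the image in the log domain. The sketch below is a simplified, dependency-free illustration for one channel; the Gaussian surround, the parameters `sigma` and `eps`, and all function names are assumptions for the example rather than details taken from the source.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel with radius 3 * sigma."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Convolve every row with the kernel, replicating the edge pixels."""
    radius = len(kernel) // 2
    blurred = []
    for row in image:
        n = len(row)
        blurred.append([
            sum(k * row[min(max(x + i - radius, 0), n - 1)]
                for i, k in enumerate(kernel))
            for x in range(n)
        ])
    return blurred

def transpose(image):
    return [list(column) for column in zip(*image)]

def single_scale_retinex(image, sigma=2.0, eps=1.0):
    """Return log(image) - log(estimated illumination) for one channel."""
    kernel = gaussian_kernel(sigma)
    # Separable Gaussian blur: rows first, then columns.
    illumination = transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))
    return [
        [math.log(p + eps) - math.log(l + eps) for p, l in zip(row, lrow)]
        for row, lrow in zip(image, illumination)
    ]

# A flat surface lit by illumination that brightens from left to right.
scene = [[20.0 * (x + 1) for x in range(8)] for _ in range(4)]
result = single_scale_retinex(scene)
print([round(v, 2) for v in result[0]])
```

The `eps` offset only guards against taking the logarithm of zero. For display, the log-domain output would still need to be rescaled to the usual intensity range.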

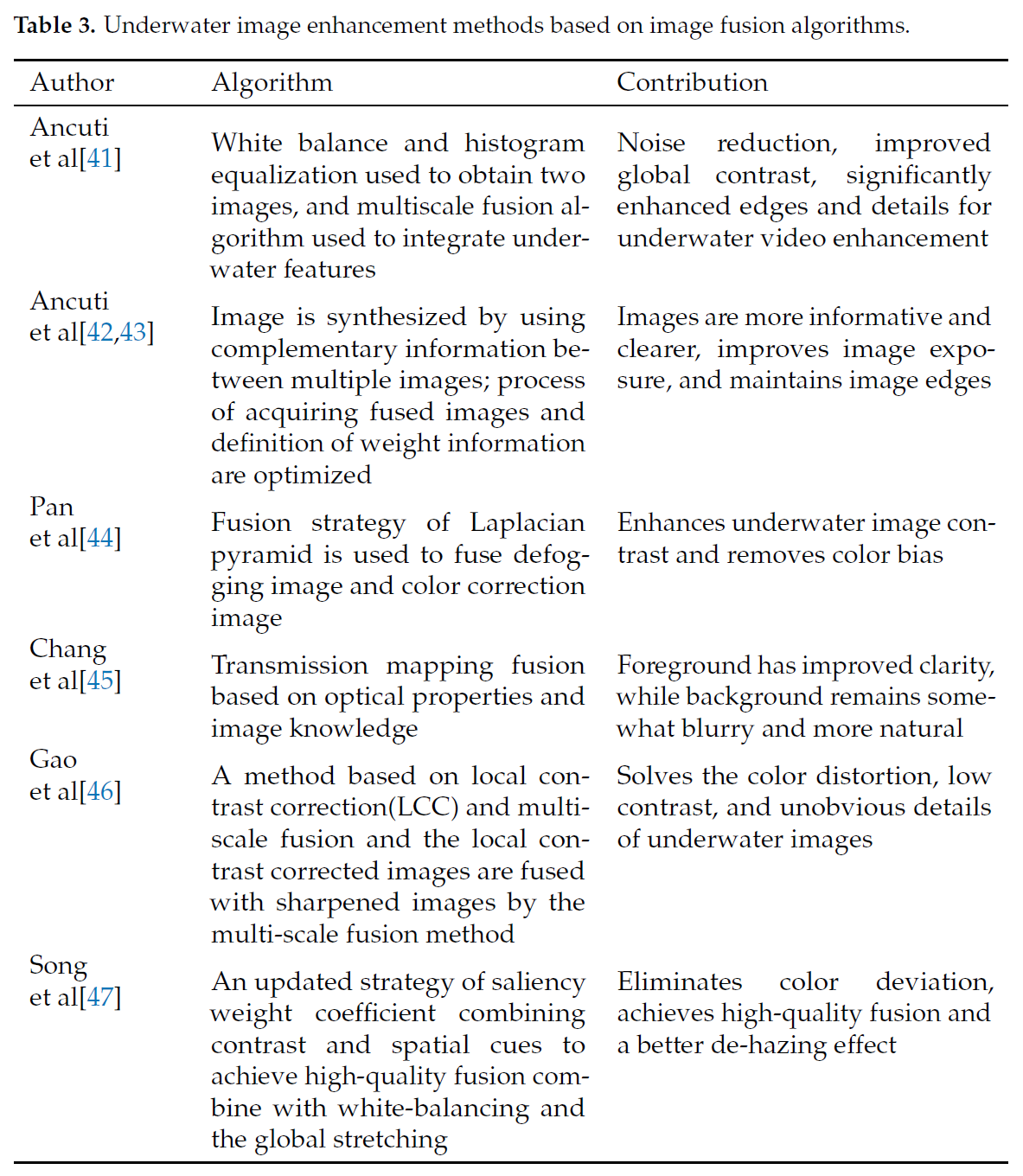

(3)Fusion-based methods

The image fusion algorithm fuses multiple images of the same scene so that their complementary information yields a richer and more accurate enhanced image.

2.2. Physical model-based enhancement algorithm

Unlike non-physical model enhancement algorithms, physical model-based algorithms analyze the imaging process and invert the imaging model to obtain a clear image, improving image quality from the imaging principle itself. This approach is also known as image restoration. Underwater imaging models play a crucial role in physical model-based enhancement methods, and the Jaffe–McGlamery underwater imaging model is a very widely used recovery model.

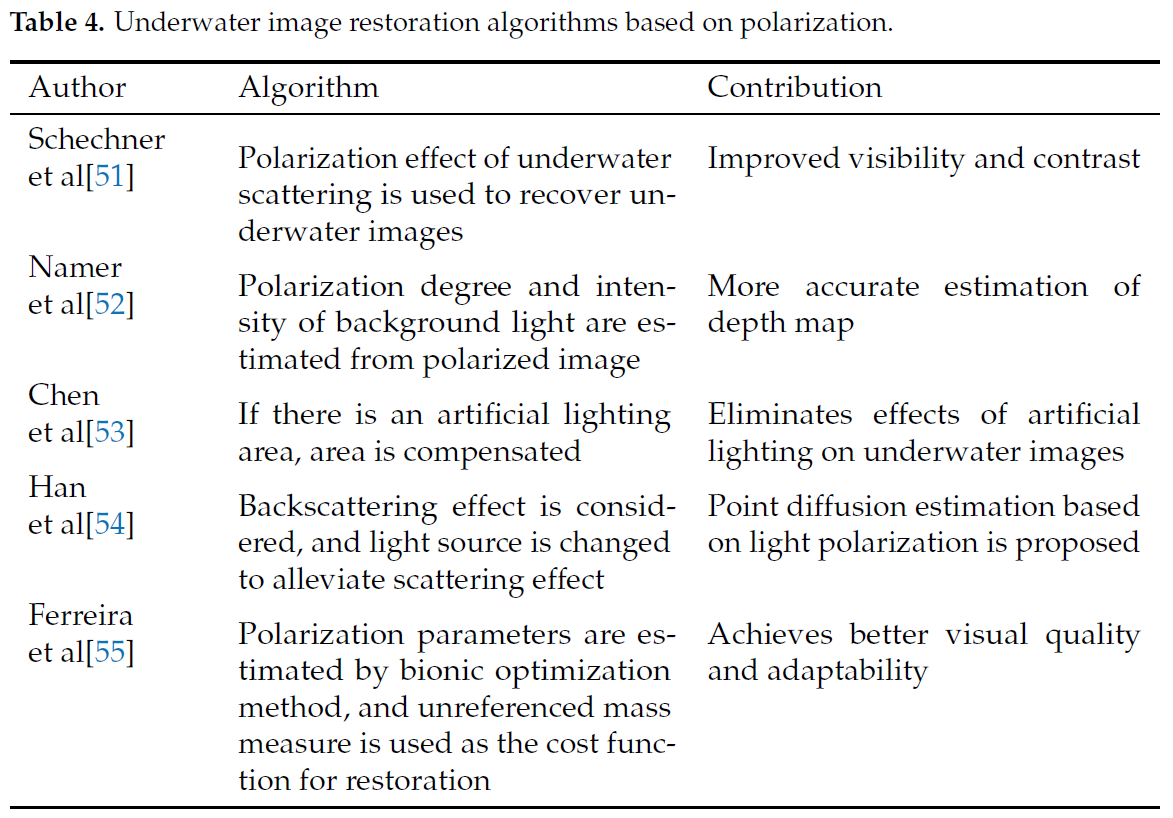

(1) Polarization-based methods

Underwater image restoration based on the principle of polarization imaging uses the polarization characteristics of scattered light to separate scene light from scattered light, estimates the intensity and transmission coefficient of the scattered light, and thereby enhances the image.

(2) Dark channel prior–based methods

He et al. [10] proposed the dark channel prior (DCP) algorithm. Statistics show that in most regions of a fog-free image there is always at least one color channel in which some pixels have a very low gray value; this is called the dark channel. The dark channel prior is used to solve for the transmission map and the atmospheric light value, and the atmospheric scattering model is then used to restore the image.
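
The steps can be sketched as follows, assuming the usual atmospheric scattering model I = J·t + A·(1 − t), where I is the observed image, J the clear scene, t the transmission, and A the atmospheric light. This is a simplified, dependency-free illustration: the airlight estimate is cruder than in the original method, the transmission is not refined, and the function names and the parameters `omega`, `patch`, and `t_min` are assumptions for the example. Pixels are (R, G, B) tuples in [0, 1], and A is assumed to be nonzero in every channel.

```python
def dark_channel(image, patch=3):
    """Minimum over the color channels and over a patch x patch window."""
    h, w = len(image), len(image[0])
    r = patch // 2
    channel_min = [[min(px) for px in row] for row in image]
    dark = []
    for y in range(h):
        row = []
        for x in range(w):
            window = (channel_min[j][i]
                      for j in range(max(y - r, 0), min(y + r + 1, h))
                      for i in range(max(x - r, 0), min(x + r + 1, w)))
            row.append(min(window))
        dark.append(row)
    return dark

def estimate_airlight(image, patch=3):
    """Simplified: color of the pixel with the largest dark-channel value."""
    dark = dark_channel(image, patch)
    _, y, x = max((v, y, x) for y, row in enumerate(dark) for x, v in enumerate(row))
    return image[y][x]

def estimate_transmission(image, airlight, omega=0.95, patch=3):
    """t = 1 - omega * dark_channel(I / A)."""
    normalized = [[tuple(c / a for c, a in zip(px, airlight)) for px in row]
                  for row in image]
    return [[1.0 - omega * d for d in row] for row in dark_channel(normalized, patch)]

def recover_scene(image, airlight, transmission, t_min=0.1):
    """Invert I = J*t + A*(1 - t) for J, with t clamped from below."""
    return [[tuple((c - a) / max(t, t_min) + a for c, a in zip(px, airlight))
             for px, t in zip(row, trow)]
            for row, trow in zip(image, transmission)]

# Tiny hazy image, for illustration only.
hazy = [[(0.60, 0.70, 0.80), (0.62, 0.72, 0.82)],
        [(0.58, 0.69, 0.79), (0.90, 0.95, 1.00)]]
airlight = estimate_airlight(hazy, patch=1)
transmission = estimate_transmission(hazy, airlight, patch=1)
restored = recover_scene(hazy, airlight, transmission)
print(restored[0][0])
```

The sketch takes the minimum over all three color channels, as in the haze formulation.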

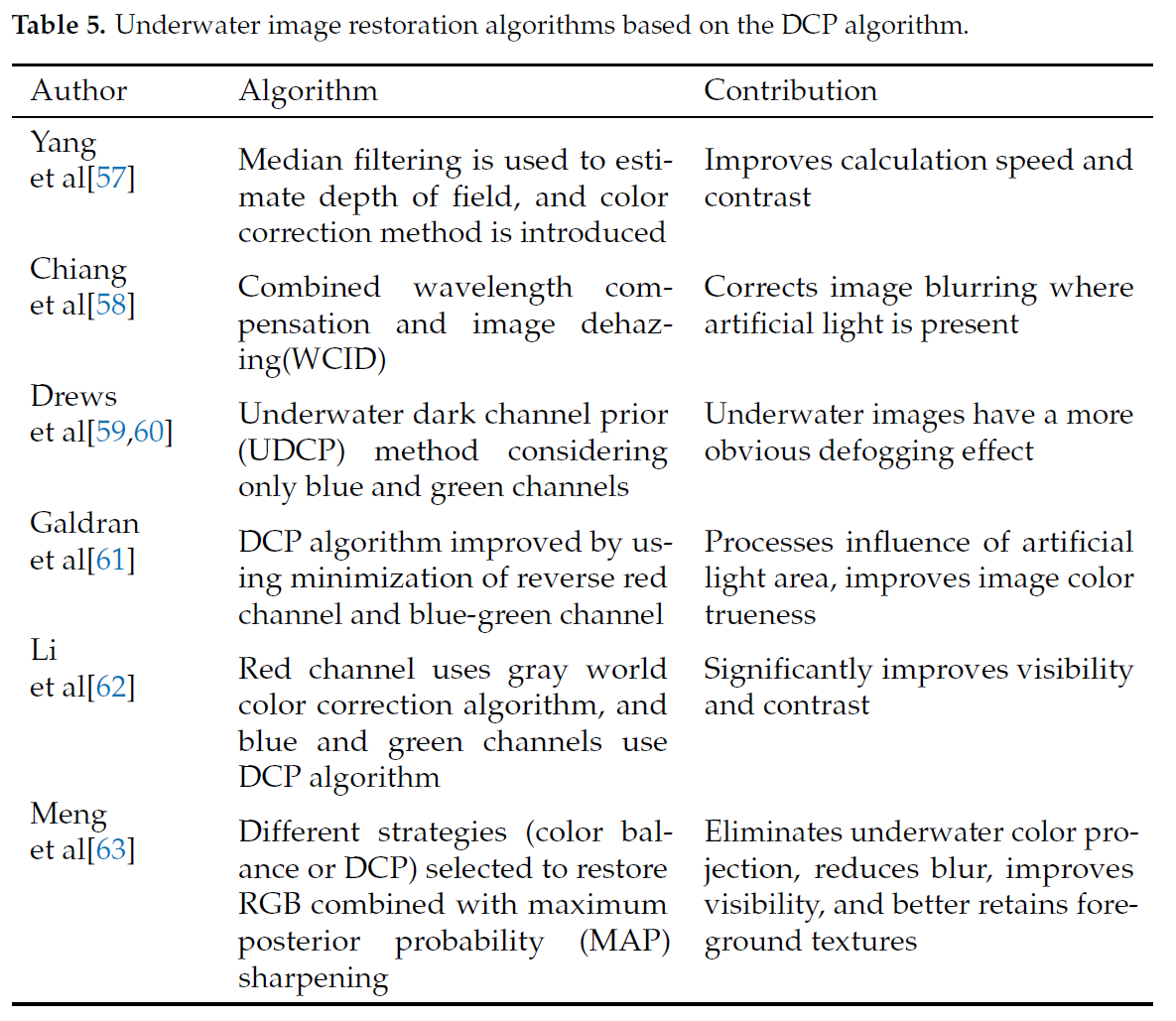

The DCP algorithm has excellent defogging performance. When it is applied to underwater images, however, the dark channel is affected because water absorbs too much red light. Underwater DCP algorithms are therefore usually modified to account for this. Table 5 lists the underwater-specific DCP algorithms.

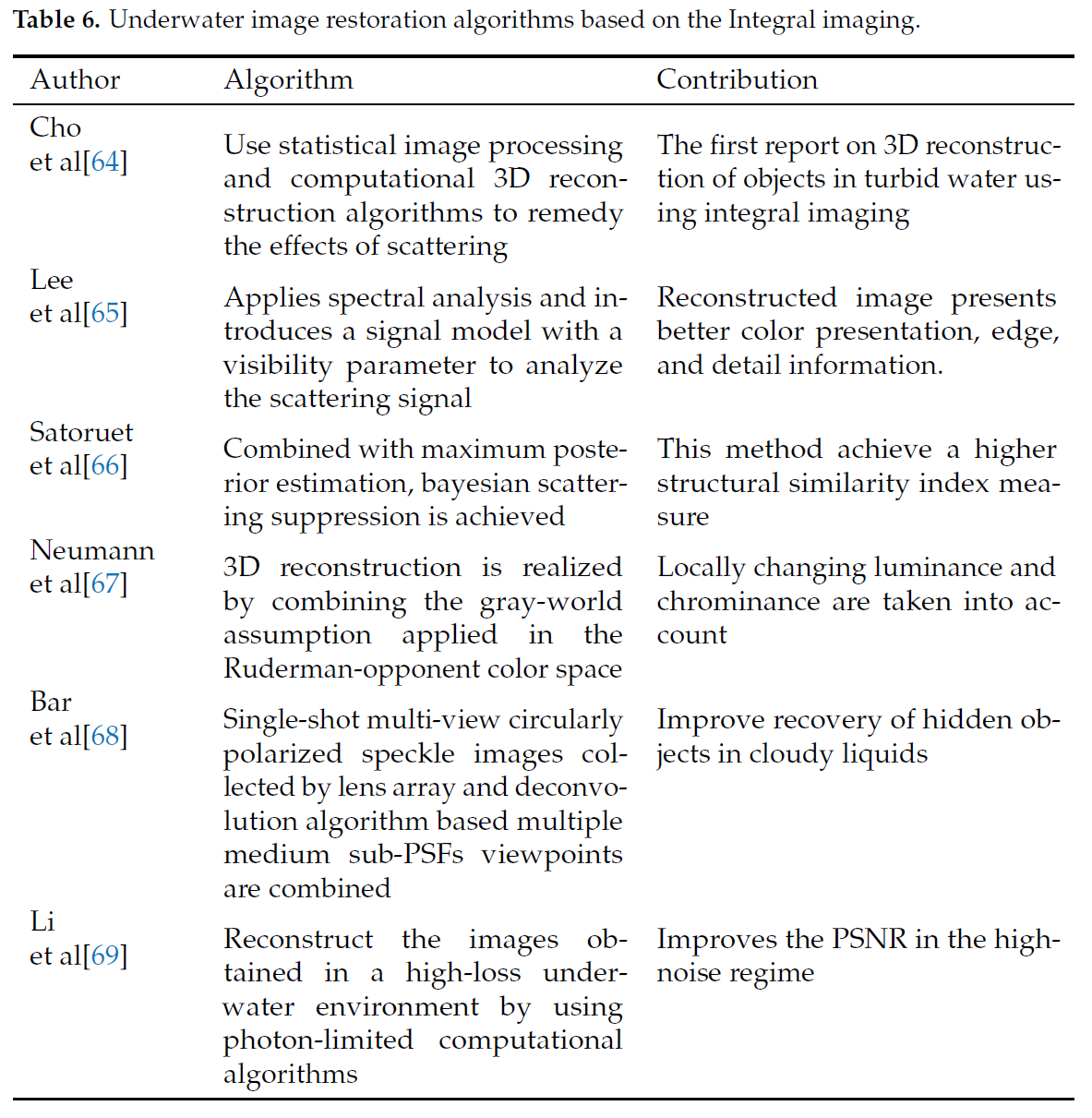

(3) Integral imaging-based methods

Integral imaging technology is based on a multi-lens stereo vision system. It uses a lens array or camera array to quickly capture the target from different perspectives and combines all element images (each image recording information from a different perspective of a three-dimensional object) into an element image array (EIA).

3. Deep learning-based underwater image enhancement methods

3.1. Convolutional neural network methods

LeCun et al. [11] first proposed the convolutional neural network structure LeNet. The convolutional neural network is a kind of deep feedforward artificial neural network. It is composed of multiple convolutional layers that can effectively extract different feature expressions, from low-level details to high-level semantics, and is widely used in computer vision. CNN-based underwater image enhancement algorithms can be divided into non-physical methods and combined physical methods, according to whether the algorithm uses a physical imaging model for restoration.

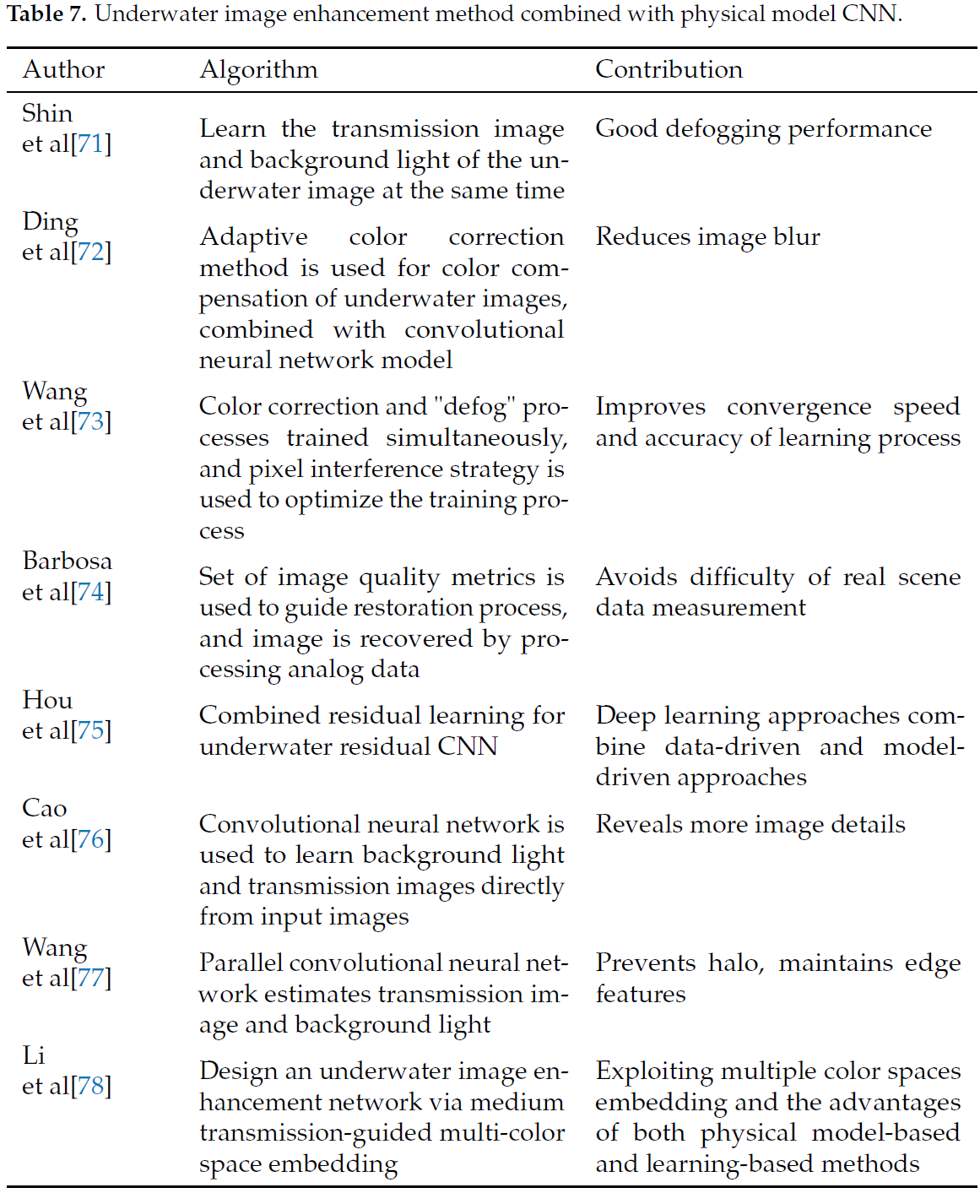

(1) Combined physical methods

Traditional model-based underwater image enhancement methods usually estimate the transmission map and parameters of the underwater image from prior knowledge and other strategies, and the estimated values therefore have poor adaptability. Methods combined with the physical model mainly use the excellent feature extraction ability of the convolutional neural network to solve for the parameter values in the imaging model, such as the transmission map. In this process, the CNN replaces the assumptions or prior knowledge used in traditional methods, such as the dark channel prior.

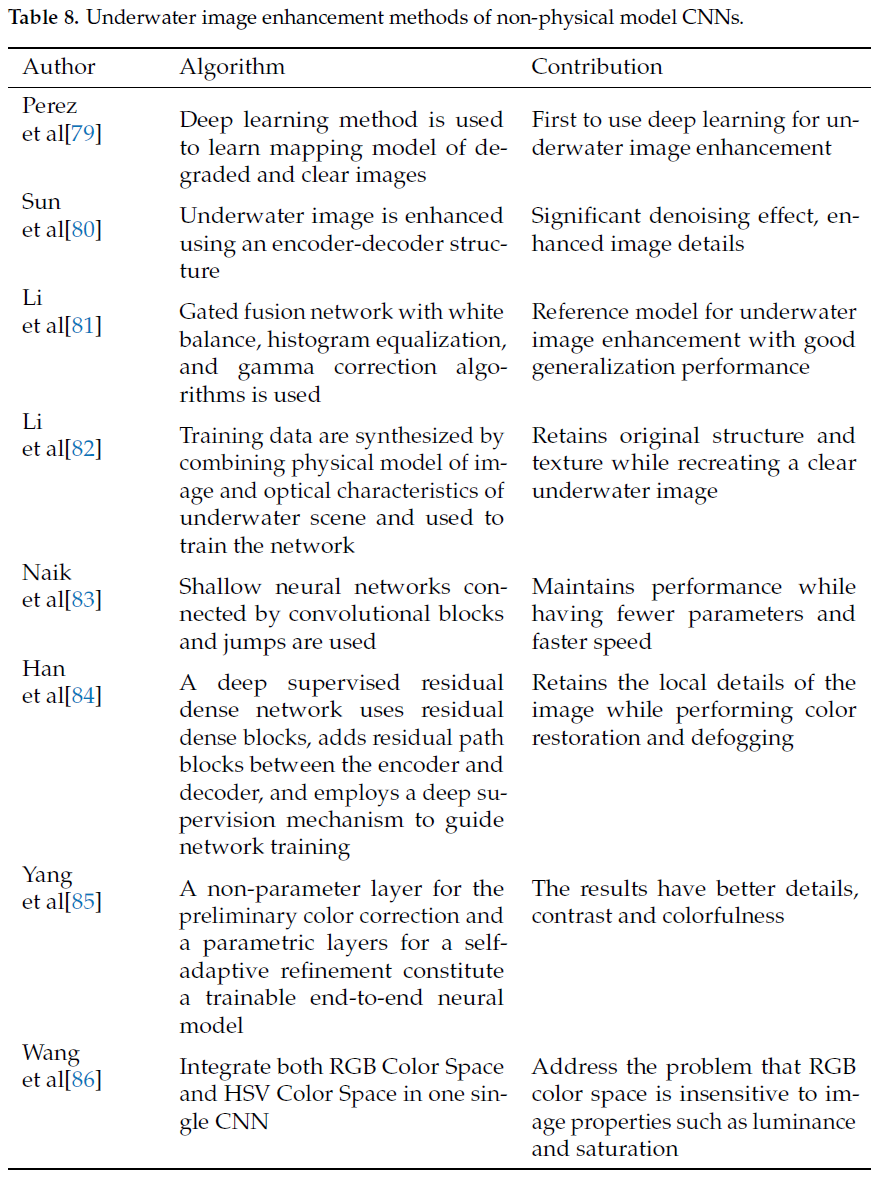

(2) Non-physical model methods

Non-physical model methods rely on the CNN's powerful learning ability: the original underwater image is fed into the network model, and the enhanced underwater image is output directly after convolution, pooling, deconvolution, and other operations.

3.2. Generative adversarial network-based methods

The generative adversarial network (GAN) was proposed by Goodfellow et al. [12]. A GAN produces better output through adversarial learning between a generator and a discriminator. Through learning, the generator produces an image as similar to the actual image as possible so that the discriminator cannot distinguish between real and generated images. The discriminator indicates whether an image is generated or real. If the discriminator cannot be fooled, the generator continues to learn. The process is shown in Figure 5. The input of the generator is a low-quality image, and the output is a generated image. The input of the discriminator network is the generated image and the actual sample, and the output is the probability that the generated image is real, a value between 0 and 1. As an excellent generative model, GAN has a wide range of applications in image generation, image enhancement and restoration, and image style transfer.
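
The adversarial game can be made concrete by writing out the two losses in terms of the discriminator's output probability. The sketch below uses binary cross-entropy and the non-saturating generator loss that is common in practice; it is an illustration of the general GAN objective, not the loss of any particular underwater enhancement network, and the function names are assumptions for the example.

```python
import math

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) close to 1 and D(generated) close to 0."""
    return bce(d_real, 1) + bce(d_fake, 0)

def generator_loss(d_fake):
    """The generator wants the discriminator to rate its output as real."""
    return bce(d_fake, 1)

# d_real / d_fake: discriminator probabilities for a real and a generated image.
print("confident discriminator:", round(discriminator_loss(0.9, 0.1), 3))
print("fooled discriminator:   ", round(discriminator_loss(0.5, 0.5), 3))
print("generator, detected:    ", round(generator_loss(0.1), 3))
print("generator, convincing:  ", round(generator_loss(0.9), 3))
```

When the discriminator outputs 0.5 for both inputs it can no longer tell real from generated images, which is the equilibrium the training aims for. Enhancement networks usually add further terms, such as a pixel-wise difference from a reference image, to this adversarial loss.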

4. Underwater video enhancement

With the development of underwater video acquisition and data communication technology, real-time underwater video transmission has become possible. Underwater video, with its spatiotemporal information and motion characteristics, has greater application potential than underwater images in ocean development. Because of the optical properties of water, underwater video shares some problems with underwater images, such as color bias, image blur, low contrast, and uneven illumination. At the same time, due to the influence of water flow on video acquisition equipment, the texture features and details of moving objects are weakened or disappear. These problems seriously affect the ability of the underwater video system to accurately collect scene and object features. Unlike atmospheric video enhancement technology, which tends to address blur and jitter, underwater video enhancement focuses more on the harmful effects of the unique optical environment on color and visibility.

Compared with underwater image enhancement technology, underwater video enhancement is more complicated, and research in this direction has not yet reached a mature stage. Most existing underwater video enhancement methods are extensions of single-image enhancement algorithms. When underwater image enhancement technology is applied directly to video, each frame is enhanced separately and the frames are then joined into a new video. Because the transmission maps and background light differ between frames, the continuity of the enhanced video is not well maintained, and temporal artifacts and interframe flicker can occur.
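
To illustrate why independent per-frame estimates cause flicker, the sketch below compares a fluctuating sequence of per-frame background-light estimates with a temporally smoothed version. Exponential smoothing is used here only as one simple, generic way to stabilize a per-frame parameter; it is not a method described in the source, and the function names, the `alpha` value, and the sample numbers are hypothetical.

```python
def smooth_over_time(per_frame_values, alpha=0.2):
    """Exponential moving average of a parameter estimated once per frame."""
    smoothed, state = [], None
    for value in per_frame_values:
        state = value if state is None else alpha * value + (1 - alpha) * state
        smoothed.append(state)
    return smoothed

def max_frame_jump(values):
    """Largest change of the parameter between two consecutive frames."""
    return max(abs(b - a) for a, b in zip(values, values[1:]))

# Hypothetical background-light estimates from five consecutive frames.
raw = [0.80, 0.90, 0.78, 0.92, 0.79]
stable = smooth_over_time(raw)

print("largest jump, per-frame estimates:", round(max_frame_jump(raw), 3))
print("largest jump, smoothed estimates: ", round(max_frame_jump(stable), 3))
```

A smaller frame-to-frame jump in the estimated parameter means the enhancement applied to neighboring frames changes less abruptly, at the cost of reacting more slowly when the scene really changes.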

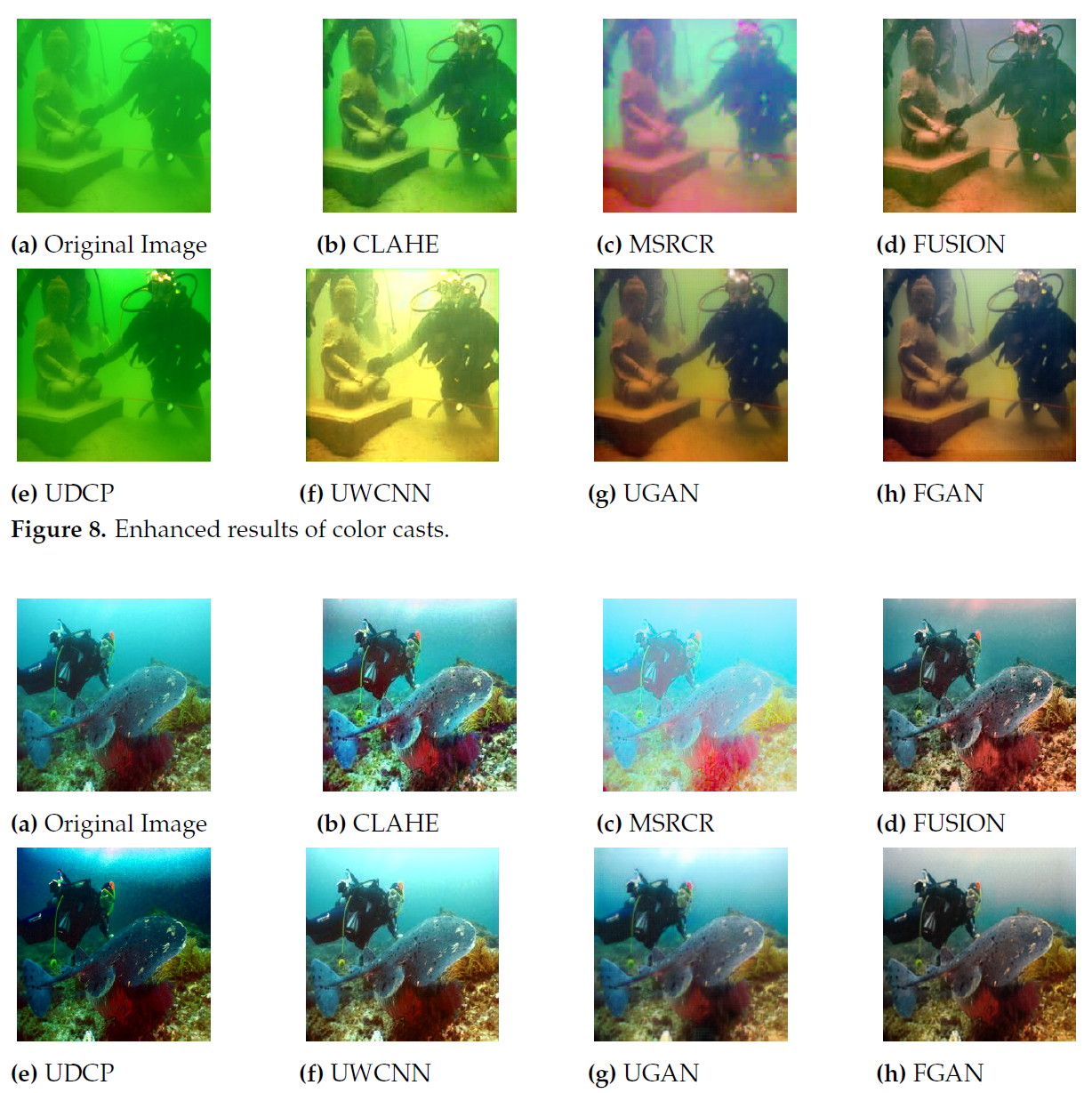

To verify the performance of these algorithms, we selected some typical algorithms from different categories, including CLAHE [21], MSRCR [34], FUSION [42], UDCP [59], UWCNN [82], UGAN [96], and FGAN [99]. We tested them on an effective, public underwater test dataset (U45) [134], which includes the color casts, low contrast, and haze-like effects of underwater degradation. These are typical features of low-quality underwater images. The results are shown in the figure below.

This entry is adapted from the peer-reviewed paper 10.3390/jmse10020241