| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Ciril Bohak | + 1943 word(s) | 1943 | 2020-05-12 05:26:51 | |
| 2 | | Camila Xu | Meta information modification | 1943 | 2020-06-02 04:24:32 | |
| 3 | | Camila Xu | -13 word(s) | 1930 | 2020-10-30 07:34:36 | |


Direct point-cloud visualisation is a common approach for visualising large datasets of aerial terrain LiDAR scans. However, because of the limitations of the acquisition technique, such visualisations often lack the desired visual appeal and quality, mostly because certain types of objects are incomplete or entirely missing (e.g., missing water surfaces, missing building walls and missing parts of the terrain). To improve the quality of direct LiDAR point-cloud rendering, we present a point-cloud processing pipeline that uses data fusion to augment the data with additional points on water surfaces, building walls and terrain through the use of vector maps of water surfaces and building outlines. In the last step of the pipeline, we also add colour information, and calculate point normals for illumination of individual points to make the final visualisation more visually appealing. We evaluate our approach on several parts of the Slovenian LiDAR dataset.

1. Introduction and background

In recent years, aerial data acquisition with LiDAR scanning systems has been used in such diverse scenarios as digital elevation model acquisition [1][2], discovery/reconstruction of archaeological remains [3][4], and estimation of vegetation density and/or height [5]. While in most scenarios the gathered LiDAR data are used for analysis and digital terrain model development, they can also be used for visualisation. This is especially true for large country-wide LiDAR datasets, which can be augmented with colour information from aerial orthophoto data (e.g., https://potree.entwine.io). The number of publicly accessible datasets is increasing; however, they are mostly available for download in raw (or partly classified) form and are rarely visualised online. Many tools have been developed for point-cloud data visualisation, both on the web (e.g., Potree [6] and Plasio, https://plas.io) and as stand-alone applications (e.g., CloudCompare, https://www.cloudcompare.org, and MeshLab, http://www.meshlab.net), but LiDAR data are rarely used for direct visualisation because their many inconsistencies and missing parts make them less appealing. LiDAR scans may be incomplete because:

- parts of the acquired objects/terrain are not in the sensor’s line of sight and thus cannot be acquired, for example vertical building walls (especially in areas with high building density) and mountain overhangs, where parts of the terrain are not visible to the acquisition sensor;

- the scanned surface does not reflect light but rather refracts, disperses, dissipates or absorbs it, for example water surfaces, where the laser beam is mostly refracted into and/or absorbed by the water, so there is little to no reflectance back to the sensor.

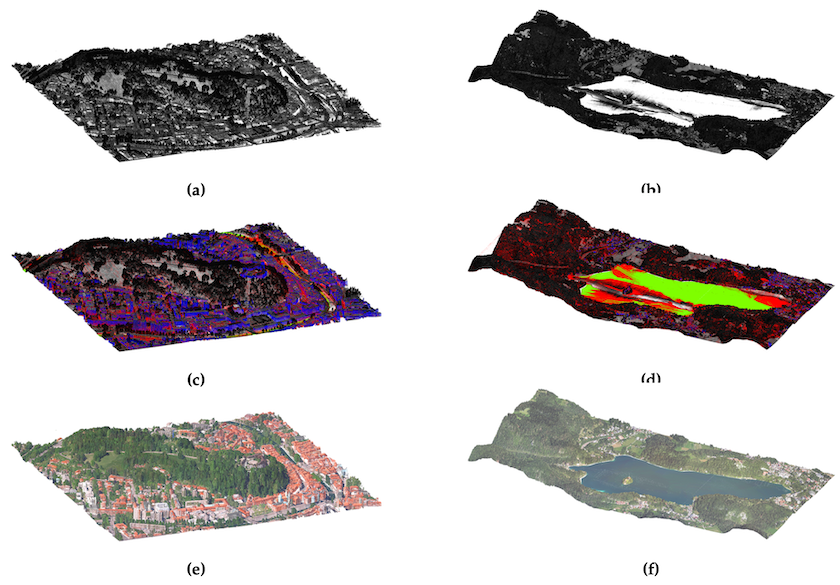

All of the above-mentioned cases are shown in Figure 1. Figure 1a shows missing points in a mountain region (visible as gray background patches within the point-cloud), while Figure 1b shows missing points on building walls, where the gray background is visible through the buildings, and on water surfaces, where the gray background appears instead of points on the river winding through the city.
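For water surfaces and steep terrain, gaps like these can be located automatically by binning the points into a regular 2D grid and flagging cells that receive too few points. The following is our own minimal sketch, not the method used in the pipeline; the function name `empty_cells` and the parameters `cell_size` and `min_points` are hypothetical. Missing building walls will not show up this way, because walls are vertical and project onto the edges of the building footprints.

```python
import numpy as np


def empty_cells(points: np.ndarray, cell_size: float, min_points: int = 1) -> np.ndarray:
    """Boolean grid that is True where a cell holds fewer than `min_points` points.

    `points` is an (N, 3) array of x, y, z coordinates; only x and y are used.
    Row index follows y, column index follows x, starting at the minimum corner.
    """
    xy = points[:, :2]
    origin = xy.min(axis=0)
    # Integer cell index of every point along x (column) and y (row).
    idx = np.floor((xy - origin) / cell_size).astype(int)
    n_cols, n_rows = idx.max(axis=0) + 1
    counts = np.zeros((n_rows, n_cols), dtype=int)
    # np.add.at accumulates correctly when several points fall into the same cell.
    np.add.at(counts, (idx[:, 1], idx[:, 0]), 1)
    return counts < min_points
```

Choosing `cell_size` a few times larger than the nominal point spacing would keep ordinary sampling gaps from being flagged; that choice is an assumption, not a value from the source.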

In the past, many researchers have addressed the problem of point-cloud reconstruction for specific domains. A method for generating a digital terrain model (DTM) from aerial LiDAR point-cloud data [7] filters out non-ground objects and provides an efficient way of processing large datasets. The approach extends a compact representation of a differential morphological profile vector fields model [8] by extracting the most contrasted connected components from the dataset and using them in a multi-criterion filter definition. It also considers areas with the most contrasted connected components and the standard deviation of the contained points’ levels. The output of the method is a high-precision DTM defined on a regular grid. Such a DTM is also used in our approach as an input for estimating the terrain slope. The need for fast terrain acquisition in disaster management led to the development of a LiDAR-based unmanned aerial vehicle (UAV) system [9] equipped with an inertial navigation system, a global navigation satellite system (GNSS) and a low-cost LiDAR system. The data acquired with this system were compared with data from a high-grade terrestrial LiDAR sensor. The results show that the system achieves metre-level accuracy and produces a dense point-cloud representation. While such systems could be used to acquire the missing data in existing datasets, the large number of hours needed to identify the problematic regions and acquire the missing data is prohibitive. Our approach addresses the problem without the need for additional data acquisition and also identifies the problematic areas for new data acquisitions.
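The terrain slope mentioned above can be derived from a regular-grid DTM with finite differences. This is a minimal sketch assuming the DTM is a NumPy array with square cells of side `cell_size` (a hypothetical parameter name); how exactly the slope is used later in the pipeline is not described here.

```python
import numpy as np


def slope_degrees(dtm: np.ndarray, cell_size: float) -> np.ndarray:
    """Slope angle in degrees for every cell of a regular-grid DTM.

    `dtm[row, col]` holds elevations; rows follow y and columns follow x.
    np.gradient uses central differences in the interior and one-sided
    differences at the borders.
    """
    dz_dy, dz_dx = np.gradient(dtm, cell_size)
    # Slope is the angle whose tangent is the gradient magnitude.
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
```

For example, `slope_degrees(dtm, 1.0) > 60` would mark cells steeper than 60°; that threshold is purely illustrative.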

While the above articles address the problem of terrain reconstruction, several works address the more specific problem of building and urban area reconstruction. In [10], the authors address the complete reconstruction of residential urban areas, where the density of vegetation is high compared with downtown areas. They present a robust classification algorithm for classifying trees, buildings and ground by adapting an energy minimisation scheme based on 2.5D characteristics of building structures. The system outputs 3D mesh models. The authors of [11] present a graph-based approach for 3D building model reconstruction from airborne LiDAR data. The approach uses graph theory to represent the topological building structure, separates the buildings into different parts according to their topological relationships and reconstructs the building model by joining the individual models using graph matching. An approach to 3D building reconstruction [12] uses adaptive 2.5D dual contouring. For each cell in a 2D grid overlaid on the LiDAR point-cloud data, vertices of the building model are estimated, and their number is reduced using quad-tree collapsing procedures. The remaining points are connected according to their grid adjacency, and the model is triangulated. An earlier approach [13] produces multi-layer rooftops with complex boundaries and vertical walls connecting roofs to the ground. A graph-cut-based method is used to segment out vegetation areas, and a novel method, hierarchical Euclidean clustering, is used to extract rooftops and ground terrain.
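The single-level form of Euclidean clustering can be sketched as region growing: points linked by a chain of neighbours no farther than `radius` apart receive the same label. This brute-force illustration uses hypothetical names and does not reproduce the hierarchical variant of [13]; practical implementations would query a spatial index such as a k-d tree instead of computing all distances.

```python
from collections import deque

import numpy as np


def euclidean_clusters(points: np.ndarray, radius: float) -> np.ndarray:
    """Label points so that neighbours within `radius` end up in the same cluster.

    Breadth-first region growing with brute-force distances (O(N^2)),
    intended only for small inputs.
    """
    n = len(points)
    labels = np.full(n, -1, dtype=int)  # -1 means "not yet assigned"
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue
        labels[seed] = current
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            dist = np.linalg.norm(points - points[i], axis=1)
            # Unlabelled points within the radius join the current cluster.
            neighbours = np.flatnonzero((dist <= radius) & (labels == -1))
            labels[neighbours] = current
            queue.extend(neighbours.tolist())
        current += 1
    return labels
```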

A more specific problem of roof reconstruction is addressed in [14][15]. Henn et al. present a supervised machine learning approach for identifying the roof type from a point-cloud representation of single and multi-plane roofs, and Chen et al. present a multi-scale grid method for detection and reconstruction of building roofs.

While processing and augmenting point-cloud data is a hard problem in its own right, there is also a growing need for real-time visualisation of large point-cloud datasets on the web. Researchers have developed several solutions for such visualisations that address the problems of multiple visualisation scales, data transfer and others. In [16], the authors present a web-based system for the visualisation of point-cloud data with progressive encoding, storage and transmission. The system was developed for integration into collaborative environments and supports WebGL-accelerated visualisation.

A multi-scale workflow for obtaining a more complete description of the captured environment is presented in [17]. The method fuses aerial LiDAR data, terrestrial laser scanner data and photogrammetry-based reconstruction data into an efficient multi-scale layered spatial representation. While the approach offers an efficient representation, it does not address the streaming problems that occur in web-based solutions.

Peters and Ledoux [18] present a point-cloud visualisation technique for LiDAR point-clouds based on a robust approximation of the Medial Axis Transform. The technique renders the points as circles with adjusted radii and orientations. In this way, an order of magnitude fewer points suffice for an accurate visualisation of the acquired terrain and buildings, which is useful when the number of rendered points must be limited to improve performance.

In recent years, several methods [19][20][21] have been developed for real-time progressive rendering of point-cloud data, which can also be used for web-based visualisation. The first approach can progressively render as many points as fit into GPU memory: the points rendered in one frame are reprojected into the next, and random points are added so that the image converges uniformly to the final render within a few consecutive frames. The second method supports progressive real-time rendering of large point-cloud datasets without any hierarchical acceleration structures. The third method optimises point-cloud rendering using compute shaders. All of these methods improve performance compared with traditional point-cloud rendering.
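The idea of adding random points every frame can be illustrated with a single random permutation: if the points are shuffled once and each frame draws the next fixed-size batch, every prefix of the drawn points is a uniform random sample of the cloud. This CPU-side sketch covers only that ordering, assuming a per-frame point budget `budget` (a hypothetical parameter); it does not model GPU buffers or the reprojection step of [19].

```python
import numpy as np


def progressive_batches(n_points: int, budget: int, seed: int = 0):
    """Yield, frame by frame, the indices of the points to draw.

    The points are shuffled once, so the union of the first k batches is a
    uniform random subset of the cloud, and every point has been drawn after
    ceil(n_points / budget) frames.
    """
    order = np.random.default_rng(seed).permutation(n_points)
    for start in range(0, n_points, budget):
        # Each frame contributes the next `budget` points of the random order.
        yield order[start:start + budget]
```

In a renderer, the points drawn in earlier frames would be kept (and reprojected after camera motion) while each new batch is added on top.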

As recent progress already makes real-time direct visualisation of large point-clouds feasible, our goal, in contrast to the reconstruction methods presented above, is not to extract 3D mesh models from the point-cloud but to augment the point-cloud data with additional points that make the visualisation more appealing. To accomplish this, we fuse the point-cloud data with additional data sources and present an aerial LiDAR data augmentation pipeline designed to address specific issues of terrain point-clouds:

- holes on water surfaces: LiDAR laser beams are mostly refracted into and/or absorbed by the water instead of being reflected back to the sensor, which creates large holes on the surfaces of lakes and rivers;

- missing vertical building walls: in places where, due to the direction of the flight and overhanging roofs, the walls are not scanned and are thus missing from the point-cloud representation; and

- holes in mountain overhangs: in places where mountains are so steep that they form overhangs, or where, due to the direction of the flight, parts of the mountains are not scanned and are thus missing from the point-cloud.
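This section does not specify how the additional water points are generated. One plausible approach, sketched here under our own assumptions, fills a water polygon from a vector map with a regular grid of points at a single flat water level. The names `points_in_polygon` and `fill_water_surface` and the parameters `water_level` and `spacing` are hypothetical; in practice the water level might be estimated from LiDAR points along the shoreline, which is not shown.

```python
import numpy as np


def points_in_polygon(xy: np.ndarray, polygon: np.ndarray) -> np.ndarray:
    """Even-odd ray-casting test; `polygon` is an (M, 2) array of vertices."""
    x, y = xy[:, 0], xy[:, 1]
    inside = np.zeros(len(xy), dtype=bool)
    m = len(polygon)
    for k in range(m):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % m]
        # Does this edge straddle the horizontal line through each point?
        crosses = (y1 > y) != (y2 > y)
        # x where the edge meets that line; horizontal edges never "cross",
        # so their division-by-zero results are masked out by `crosses`.
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
        # Toggle for every crossing to the right of the point.
        inside ^= crosses & (x < x_cross)
    return inside


def fill_water_surface(polygon: np.ndarray, water_level: float, spacing: float) -> np.ndarray:
    """Regular grid of synthetic (x, y, z) points inside a water polygon at a flat height."""
    (xmin, ymin), (xmax, ymax) = polygon.min(axis=0), polygon.max(axis=0)
    # Cell-centred grid over the polygon's bounding box.
    xs = np.arange(xmin + spacing / 2, xmax, spacing)
    ys = np.arange(ymin + spacing / 2, ymax, spacing)
    gx, gy = np.meshgrid(xs, ys)
    grid = np.column_stack([gx.ravel(), gy.ravel()])
    grid = grid[points_in_polygon(grid, polygon)]
    z = np.full((len(grid), 1), water_level)
    return np.hstack([grid, z])
```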

2. Methods

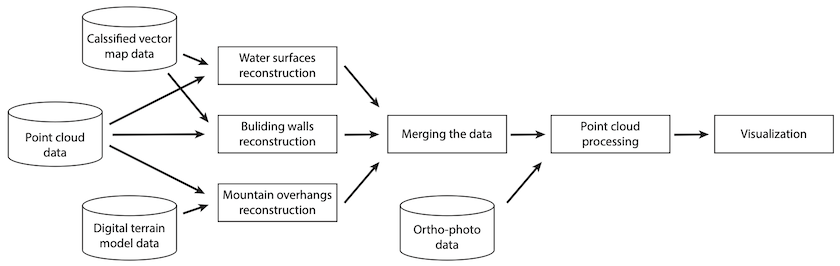

The point-cloud data augmentation pipeline presented in this paper consists of multiple stages. In the first stage, which fuses three data sources, three steps can be performed in parallel: (1) water surface reconstruction, (2) building wall reconstruction, and (3) mountain overhang reconstruction. In the next step, all of the reconstructions are merged into a seamless point-cloud. Finally, we add colour and normal information to make the point-cloud ready for visualisation. The pipeline is presented in Figure 2.
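As an illustration of the building wall step, walls could be filled in by extruding each edge of a building outline between a ground height and a roof height. This sketch assumes both heights are known per building (for example from the DTM and from the existing roof points, which is our assumption and not shown), and the names `wall_points`, `ground_z`, `roof_z` and `spacing` are hypothetical.

```python
import numpy as np


def wall_points(outline: np.ndarray, ground_z: float, roof_z: float, spacing: float) -> np.ndarray:
    """Synthetic (x, y, z) points on the vertical walls of a building footprint.

    `outline` is an (M, 2) array of footprint vertices; the ring is closed
    implicitly. Each edge is sampled every `spacing` units horizontally, and
    each sample is stacked vertically from `ground_z` up to, but excluding,
    `roof_z` (where roof points already exist).
    """
    heights = np.arange(ground_z, roof_z, spacing)
    walls = []
    for k in range(len(outline)):
        a, b = outline[k], outline[(k + 1) % len(outline)]
        length = np.linalg.norm(b - a)
        # Samples along the edge; the end vertex is the start of the next edge.
        n = max(int(np.ceil(length / spacing)), 1)
        t = np.arange(n) / n
        edge_xy = a + t[:, None] * (b - a)
        # Pair every edge position with every height.
        xy = np.repeat(edge_xy, len(heights), axis=0)
        z = np.tile(heights, n)[:, None]
        walls.append(np.hstack([xy, z]))
    return np.vstack(walls)
```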

3. Results

In Figure 3, we present the augmentation results for two areas from the Slovenian LiDAR dataset. The first example shows a 1 km² area of the Ljubljana city centre (Figures 3a, c, e), and the second a 6 km² area of Lake Bled and its surroundings (Figures 3b, d, f). The first row (Figures 3a, b) displays the input data, with points shaded according to their intensity values. The second row (Figures 3c, d) displays the augmentations (water surfaces in green, building walls in blue and terrain reconstruction in red) together with the intensity values, and the third row (Figures 3e, f) displays the final output of the proposed augmentation pipeline, where all of the points are merged, colour information is added from orthophoto images, and normals are calculated using principal component analysis on the point-cloud data.
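The two final steps can be sketched as follows. `pca_normals` estimates each normal as the eigenvector belonging to the smallest eigenvalue of the covariance matrix of the point's k nearest neighbours, which is the standard PCA normal estimate; `sample_orthophoto` reads the colour of the orthophoto pixel under each point. The function names, the neighbourhood size `k`, the upward orientation of normals and the north-up image layout with an upper-left origin are our assumptions, and a brute-force neighbour search stands in for a spatial index.

```python
import numpy as np


def pca_normals(points: np.ndarray, k: int = 8) -> np.ndarray:
    """Unit normal per point from PCA of its k nearest neighbours (point included).

    The normal is the eigenvector of the neighbourhood covariance matrix with
    the smallest eigenvalue, flipped if needed so that its z component is >= 0.
    """
    # Brute-force k-nearest neighbours, O(N^2) memory; use a k-d tree for real data.
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    knn = np.argsort(d2, axis=1)[:, :k]
    normals = np.empty(points.shape, dtype=float)
    for i, neighbours in enumerate(knn):
        cov = np.cov(points[neighbours], rowvar=False)
        # eigh returns eigenvalues in ascending order, eigenvectors as columns.
        _, eigvecs = np.linalg.eigh(cov)
        n = eigvecs[:, 0]
        normals[i] = n if n[2] >= 0 else -n
    return normals


def sample_orthophoto(points: np.ndarray, image: np.ndarray,
                      origin_x: float, origin_y: float, pixel_size: float) -> np.ndarray:
    """Colour of the orthophoto pixel under each point.

    Assumes a north-up image indexed as image[row, col, channel] whose
    upper-left corner lies at (origin_x, origin_y), with square pixels of
    `pixel_size`; rows grow southwards. Points outside the image are clamped
    to the nearest border pixel.
    """
    col = np.floor((points[:, 0] - origin_x) / pixel_size).astype(int)
    row = np.floor((origin_y - points[:, 1]) / pixel_size).astype(int)
    col = np.clip(col, 0, image.shape[1] - 1)
    row = np.clip(row, 0, image.shape[0] - 1)
    return image[row, col]
```

Orienting normals towards +z suits roofs and terrain seen from an aerial sensor, but for the added wall points the z component is close to zero, so their orientation would have to come from another cue, such as the outward direction of the building outline.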

4. Conclusions

The main goal was to augment the parts of the dataset where the acquisition process fails to obtain enough points for direct point-cloud visualisation. We addressed three problematic domains: (1) missing points on water surfaces, (2) missing points on building walls, and (3) missing points in mountain overhang regions. Additionally, we added colour information to the point-cloud from aerial orthophoto images and estimated point normals for calculating illumination. The proposed pipeline allows for fast and easy augmentation of the point-cloud data and outputs a denser point-cloud that is more suitable for direct visualisation. To the best of our knowledge, this is the first augmentation pipeline that addresses the weaknesses of raw LiDAR point-cloud data for direct visualisation.

As part of future work, it is possible to (1) automatically adapt some of the parameters of the proposed algorithms to their context (e.g., in wall reconstruction) in order to avoid reconstruction errors, (2) develop augmentation algorithms for other problematic features (e.g., bridges, river banks beneath bridges, and roofs), and (3) preprocess the orthophoto images to remove lighting information and shadows, which currently interfere with our illumination calculations.

References

- Qiusheng Wu; Chengbin Deng; Zuoqi Chen; Automated delineation of karst sinkholes from LiDAR-derived digital elevation models. Geomorphology 2016, 266, 1-10, 10.1016/j.geomorph.2016.05.006.

- Christine Hladik; Merryl Alber; Accuracy assessment and correction of a LIDAR-derived salt marsh digital elevation model. Remote Sensing of Environment 2012, 121, 224-235, 10.1016/j.rse.2012.01.018.

- Juan C. Fernandez-Diaz; William E. Carter; Ramesh L. Shrestha; Craig Glennie; Now You See It… Now You Don’t: Understanding Airborne Mapping LiDAR Collection and Data Product Generation for Archaeological Research in Mesoamerica. Remote Sensing 2014, 6, 9951-10001, 10.3390/rs6109951.

- James Schindling; Cerian Gibbes; LiDAR as a tool for archaeological research: a case study. Archaeological and Anthropological Sciences 2014, 6, 411-423, 10.1007/s12520-014-0178-3.

- Hooman Latifi; Marco Heurich; Florian Hartig; Jörg Müller; Peter Krzystek; Hans Jehl; Stefan Dech; Estimating over- and understorey canopy density of temperate mixed stands by airborne LiDAR data. Forestry: An International Journal of Forest Research 2015, 89, 69-81, 10.1093/forestry/cpv032.

- M. Schütz; Potree: Rendering Large Point Clouds in Web Browsers. Master’s Thesis, Institute of Computer Graphics and Algorithms, Vienna University of Technology, Favoritenstrasse, Vienna, Austria, 2016.

- Domen Mongus; Borut Žalik; Computationally Efficient Method for the Generation of a Digital Terrain Model From Airborne LiDAR Data Using Connected Operators. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2013, 7, 340-351, 10.1109/JSTARS.2013.2262996.

- M. Pesaresi; Georgios K. Ouzounis; Lionel Gueguen; A new compact representation of morphological profiles: report on first massive VHR image processing at the JRC. SPIE Defense, Security, and Sensing 2012, 8390, 839025-839025, 10.1117/12.920291.

- Kai-Wei Chiang; Guang-Je Tsai; Yu-Hua Li; Naser El-Sheimy; Development of LiDAR-Based UAV System for Environment Reconstruction. IEEE Geoscience and Remote Sensing Letters 2017, 14, 1790-1794, 10.1109/LGRS.2017.2736013.

- Qian-Yi Zhou; Ulrich Neumann; Complete residential urban area reconstruction from dense aerial LiDAR point clouds. Graphical Models 2013, 75, 118-125, 10.1016/j.gmod.2012.09.001.

- Bin Wu; Bailang Yu; Qiusheng Wu; Shenjun Yao; Feng Zhao; Weiqing Mao; Jianping Wu; A Graph-Based Approach for 3D Building Model Reconstruction from Airborne LiDAR Point Clouds. Remote Sensing 2017, 9, 92, 10.3390/rs9010092.

- E. Orthuber; J. Avbelj; 3D Building Reconstruction from LiDAR Point Clouds by Adaptive Dual Contouring. ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences 2015, II-3/W4, 157-164, 10.5194/isprsannals-ii-3-w4-157-2015.

- Shaohui Sun; Carl Salvaggio; Aerial 3D Building Detection and Modeling From Airborne LiDAR Point Clouds. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2013, 6, 1440-1449, 10.1109/JSTARS.2013.2251457.

- André Henn; Gerhard Gröger; Viktor Stroh; Lutz Plümer; Model driven reconstruction of roofs from sparse LIDAR point clouds. ISPRS Journal of Photogrammetry and Remote Sensing 2013, 76, 17-29, 10.1016/j.isprsjprs.2012.11.004.

- Yanming Chen; Liang Cheng; Manchun Li; Jiechen Wang; Lihua Tong; Kang Yang; Multiscale Grid Method for Detection and Reconstruction of Building Roofs from Airborne LiDAR Data. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2014, 7, 4081-4094, 10.1109/JSTARS.2014.2306003.

- Alun Evans; Javi Agenjo; Josep Blat; Web-based visualisation of on-set point cloud data. Proceedings of the 11th European Conference on Visual Media Production (CVMP '14) 2014, Article 10, 10.1145/2668904.2668937.

- F. Poux; R. Neuville; P. Hallot; R. Billen; Point Clouds as an Efficient Multiscale Layered Spatial Representation. In Eurographics Workshop on Urban Data Modelling and Visualisation (V. Tourre, F. Biljecki, Eds.); The Eurographics Association: Norrköping, Sweden, 2016.

- Ravi Peters; Hugo Ledoux; Robust approximation of the Medial Axis Transform of LiDAR point clouds as a tool for visualisation. Computers & Geosciences 2016, 90, 123-133, 10.1016/j.cageo.2016.02.019.

- Markus Schütz; Michael Wimmer; Progressive real-time rendering of unprocessed point clouds. ACM SIGGRAPH 2018 Posters 2018, Article 41, 10.1145/3230744.3230816.

- M. Schütz; G. Mandlburger; J. Otepka; M. Wimmer; Progressive Real-Time Rendering of One Billion Points Without Hierarchical Acceleration Structures. 2019. Available online: https://www.researchgate.net/publication/336279053_Progressive_Real-Time_Rendering_of_One_Billion_Points_Without_Hierarchical_Acceleration_Structures (accessed on 7 April 2020).

- Markus Schütz; Michael Wimmer; Rendering Point Clouds with Compute Shaders. SIGGRAPH Asia 2019 Posters 2019, Article 32, 10.1145/3355056.3364554.