| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Prasanna Kolar | + 5849 word(s) | 5849 | 2020-04-22 11:42:18 | |
| 2 | | Camila Xu | -2615 word(s) | 3234 | 2020-10-30 09:48:07 | |
| 3 | | Camila Xu | -2615 word(s) | 3234 | 2020-10-30 09:48:46 | |


Autonomous navigation is a very important area in the broad domain of mobile autonomous vehicles, and sensor integration is critical to implementing navigation successfully. In this publication, we review the integration of laser sensors such as LiDAR with vision sensors such as cameras. The past decade has witnessed a surge in the application of sensor integration in smart autonomous mobility systems. Such systems can be used in many areas of life. Examples include safe mobility for people with disabilities, disinfecting hospitals after coronavirus treatments, driverless vehicles, sanitizing public areas, and smart systems that detect deformation of road surfaces. These smart systems depend on accurate sensor information to function optimally. This information may come from a single sensor or from a suite of sensors with the same or different modalities. We review various types of sensors and their data. We also review the need to integrate these data with each other to produce the best data for the task at hand, which in this case is autonomous navigation. Obtaining such accurate data requires optimal technology to read the sensor data, process it, eliminate or at least reduce the noise, and then use it for the required tasks. We present a survey of current data processing techniques that integrate multimodal data from different types of sensors. These include LiDAR, which uses light-scanning technology, and various types of Red Green Blue (RGB) cameras, which use optical technology. We also review the efficiency of using fused data from multiple sensors rather than a single sensor in autonomous navigation tasks such as mapping, obstacle detection and avoidance, and localization. This survey provides sensor information to researchers who intend to accomplish motion control of a robot, and it details the use of LiDAR and cameras for robot navigation.

1. Introduction

Autonomous systems can play a vital role in assisting humans in a variety of problem areas. Potential applications include driverless cars, humanoid robots, assistive systems, domestic systems, military systems, and manipulator systems, to name a few. The world is presently at the leading edge of technologies that can bring such systems into our daily lives. Assistive robotics is a crucial area of autonomous systems that helps persons who require medical, mobility, domestic, physical, and mental assistance. This research area is gaining popularity in applications such as the following:

- autonomous wheelchair systems [1,2]
- autonomous walkers [3]
- lawn mowers [4,5]
- vacuum cleaners [6]
- intelligent canes [7]
- surveillance systems in places such as assisted living facilities [8,9,10,11]

Data are one of the most important components for optimally starting, continuing, or completing any task. Often, these data are obtained from the environment in which the autonomous system operates. Examples include:

- the system's position and location coordinates in the environment
- the static objects
- the speed, velocity, or acceleration of the system, its peers, or any moving object in its vicinity
- the vehicle heading
- air pressure

Since these data are obtained directly from the operational environment, the information is up to date and can be accessed through built-in or connected sensing devices. This survey focuses on the navigation of autonomous vehicles. We review past and present research using Light Imaging Detection and Ranging (LiDAR) and imaging systems such as cameras, which are laser-based and vision-based sensors, respectively. Autonomous systems use sensor data for tasks such as object detection, obstacle avoidance, mapping, and localization. As the following sections show, these two sensors can complement each other and are therefore used extensively for detection in autonomous systems.
The LiDAR market alone is expected to reach $52.5 billion by 2032, according to a recent survey by the Yole group documented by the "First Sensors" group [12].

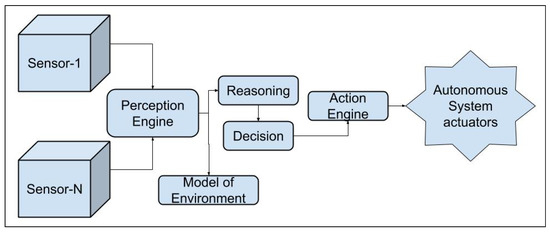

In a typical autonomous system, a perception module provides the control module with the best available information about the environment (see Figure 1). Crowley and Demazeau [13] define perception as "The process of maintaining an internal description of the external environment."

Figure 1. High-level Perception Framework.

2. Data Fusion

Data fusion entails combining information to accomplish something. This ‘something’ is usually to sense the state of some aspect of the universe [14]. The applications of this ‘state sensing’ are versatile, to say the least. Some high-level areas are neurology, biology, sociology, engineering, and physics [15,16,17,18,19,20,21]. Because data fusion is applied so widely, throughout this manuscript we limit our review to data fusion of LiDAR and camera data for autonomous navigation. Kolar et al. [118] performed an exhaustive survey of data fusion and documented many details of data fusion using these two sensors.

3. Sensors and their Input to Perception

A sensor is an electronic device that measures physical aspects of an environment and outputs machine-readable (digital) data. Sensors provide a direct perception of the environment in which they are deployed. A suite of sensors is typically used because an individual sensor inherently captures only a single aspect of the environment. Using a suite not only makes the data more complete but also improves the accuracy with which the environment is measured.

The Merriam-Webster dictionary defines a sensor [22] as "a device that responds to a physical stimulus (such as heat, light, sound, pressure, magnetism, or a particular motion) and transmits a resulting impulse (as for measurement or operating a control)."

The initial step is raw data capture using the sensors. The data are then filtered and an appropriate fusion technique is applied. The result is fed into localization and mapping techniques such as simultaneous localization and mapping (SLAM). The same data can be used to identify static or moving objects in the environment and to classify them. The classification information is then used to finalize a model of the environment, which in turn can be fed into the control algorithm [27]. The classification information could give details of pedestrians, furniture, vehicles, buildings, etc. Such classification is useful in both pre-mapped (i.e., known) and unknown environments, since it increases the system's ability to explore and navigate its environment.

4. Multiple Sensors vs. Single Sensor

It is well known that most autonomous systems require multiple sensors to function optimally. But why use multiple sensors? Relying on any individual sensor can impair the system because of that sensor's limitations. Hence, to get acceptable results, one may use a suite of different sensors and exploit the benefits of each. The diversity offered by the suite of sensors contributes positively to the perception of the sensed data [38,39].

Another reason is the risk of system failure if a single sensor fails [21,27,40], so a level of redundancy should be introduced. For instance, suppose the camera is the only installed sensor and it fails while the obstacle avoidance module is executing; the result could be catastrophic. However, if the system has an additional camera or a LiDAR, it can avoid the obstacle and navigate itself to a safe place, provided that logic for such a failure is built in.

Many researchers have studied high-level decision data fusion and concluded that using multiple sensors with data fusion is better than using individual sensors without data fusion. The research community has found that every sensor provides a different, sometimes unique, type of information about the selected environment. This information covers the tracked object, the avoided object, the autonomous vehicle itself, and the world in which it operates, and it comes with differing accuracy and detail [27,39,44,45,46].

Using multiple sensors also has disadvantages. One is the additional level of complexity, although an optimal technique for fusing the data can mitigate this challenge efficiently. There may also be uncertainty in the functioning and accuracy of the sensors and in the appropriateness of the sensed raw data [47]. Because of these challenges, the system must be able to diagnose accurately when a failure occurs. It must also identify the failed component(s) so that they can be mitigated appropriately.
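The camera-failure scenario above can be illustrated with a minimal Python sketch of built-in failure logic. It assumes each sensor reports a distance to the nearest obstacle together with a timestamp. A sensor whose latest reading is missing or stale is treated as failed. The names `Reading`, `nearest_obstacle`, and `drive_command`, the 0.2 s staleness timeout, and the command strings are all hypothetical illustrations, not part of any cited system.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Reading:
    distance_m: float  # distance to the nearest obstacle reported by one sensor
    stamp_s: float     # time at which the reading was taken


def nearest_obstacle(readings: Dict[str, Optional[Reading]], now_s: float,
                     timeout_s: float = 0.2) -> Tuple[Optional[float], List[str]]:
    """Treat a sensor as failed if it has no reading or its reading is older than
    timeout_s (hypothetical threshold); return the closest obstacle any healthy
    sensor reports, plus the names of the healthy sensors."""
    healthy = {name: r for name, r in readings.items()
               if r is not None and now_s - r.stamp_s <= timeout_s}
    if not healthy:
        return None, []
    # Conservative choice: trust the closest obstacle reported by any healthy sensor.
    return min(r.distance_m for r in healthy.values()), sorted(healthy)


def drive_command(readings: Dict[str, Optional[Reading]], now_s: float,
                  safe_distance_m: float = 1.0) -> str:
    distance, healthy = nearest_obstacle(readings, now_s)
    if distance is None:
        return "STOP"          # total loss of perception: fail safe
    if distance < safe_distance_m:
        return "AVOID"
    # With only one healthy sensor left there is no redundancy, so slow down.
    return "PROCEED" if len(healthy) > 1 else "PROCEED_SLOW"


# The camera has stopped updating; the LiDAR alone keeps the system moving safely.
readings = {"camera": Reading(3.0, stamp_s=9.5), "lidar": Reading(2.8, stamp_s=9.95)}
print(drive_command(readings, now_s=10.0))  # PROCEED_SLOW
```

Taking the minimum distance is a deliberately conservative fusion rule for safety-critical avoidance. A weighted estimate (for example, inverse-variance weighting) would be a reasonable alternative when sensor noise levels are known.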

5. Need for Sensor Data Fusion

Some of the limitations of single sensor unit systems are as follows:

Deprivation: If a sensor stops functioning, the system in which it is incorporated loses perception.

Uncertainty: Inaccuracies arise when features are missing, because of ambiguities, or when not all required aspects can be measured.

Imprecision: The measurements of a single sensor may be neither precise nor accurate.

Limited temporal coverage: A sensor needs initialization/setup time to reach its maximum performance and transmit a measurement, which limits the maximum measurement frequency.

Limited spatial coverage: An individual sensor normally covers only a limited region of the entire environment. For example, a reading from an ambient thermometer on a drone estimates the temperature near the thermometer and may fail to represent the average temperature of the entire environment.

Some of the advantages of using multiple sensors or a sensor suite [38,44,46,50,51] are as follows:

Extended Spatial Coverage: Multiple sensors can measure across a wider range of space and sense where a single sensor cannot.

Extended Temporal Coverage: Time-based coverage increases when multiple sensors are used.

Improved resolution: When multiple independent measurements of the same property are combined, the resolution is better than that of a single sensor measurement.

Reduced Uncertainty: When the sensor suite is considered as a whole, uncertainty decreases, since the combined information reduces the set of ambiguous interpretations of the sensed value.

Increased robustness against interference: Increasing the dimensionality of the sensor space (e.g., measuring with both a LiDAR and stereo vision cameras) makes the system less vulnerable to interference.

Increased robustness: The redundancy provided by multiple sensors makes the system more robust, even during a partial failure when one of the sensors is down.

Increased reliability: Due to the increased robustness, the system becomes more reliable.

Increased confidence: When the same domain or property is measured by multiple sensors, one sensor can confirm the accuracy of the others; this re-verification increases confidence.

Reduced complexity: The output of multi-sensor fusion is better: it is less uncertain, less noisy, and more complete.
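The "Reduced Uncertainty" and "Increased confidence" points can be made concrete with inverse-variance weighting. This is a standard way to fuse independent, unbiased measurements of the same quantity. The sketch below assumes Gaussian noise with known variances; the LiDAR and camera readings are invented for illustration. The fused variance is always smaller than the smallest input variance, which is the reduction in uncertainty described above.

```python
def fuse_independent(measurements, variances):
    """Inverse-variance weighted fusion of independent, unbiased measurements
    of the same quantity. Returns (fused_value, fused_variance)."""
    if not measurements or len(measurements) != len(variances):
        raise ValueError("need one variance per measurement")
    weights = [1.0 / v for v in variances]   # more precise sensors get more weight
    fused_variance = 1.0 / sum(weights)      # never larger than the smallest input variance
    fused_value = fused_variance * sum(w * m for w, m in zip(weights, measurements))
    return fused_value, fused_variance


# Hypothetical readings of the same obstacle distance (metres).
lidar_m, lidar_var = 10.02, 0.1 ** 2
camera_m, camera_var = 10.30, 0.5 ** 2
value, var = fuse_independent([lidar_m, camera_m], [lidar_var, camera_var])
print(f"fused distance {value:.3f} m, variance {var:.5f} m^2")
# fused distance 10.031 m, variance 0.00962 m^2
```

The result leans toward the more precise LiDAR reading while still using the camera. If the sensors' errors are correlated, this formula becomes overconfident.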

6. Data Fusion Techniques

Over the years, scientists and engineers have taken concepts of sensing that occur in nature and applied them to their own research areas. In doing so, they have developed new disciplines and technologies that span several fields. In the early 1980s, researchers used aerial sensor data to obtain passive sensor fusion of stereo vision imagery. Crowley et al. performed fundamental research in data fusion, perception, and world-model development that is vital for robot navigation [57,58,59]. They developed systems with multiple sensors, also known as a 'suite of sensors'. They also devised mechanisms and techniques to combine the data from all the sensors and obtain the 'best' output from the suite. In short, this integration of data from multiple sensors is termed multi-sensor data fusion. In this survey, we discuss the following integration techniques.

6.1. K-Means

K-means is a popular and widely employed clustering algorithm. It generalizes well across data clustering problems and is guaranteed to converge, although only to a local optimum that depends on the initial centroids.
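A minimal NumPy sketch of K-means (Lloyd's algorithm) is shown below, applied to hypothetical 2D LiDAR returns from two obstacles. For reproducibility it uses a deterministic farthest-point seeding, which is an assumption of this sketch. In practice, k-means++ seeding or multiple random restarts are common because the result depends on the initial centroids.

```python
import numpy as np


def farthest_point_init(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add the
    point that is farthest from every centroid chosen so far."""
    centroids = [points[0]]
    for _ in range(1, k):
        chosen = np.array(centroids)
        # Distance from each point to its nearest already-chosen centroid.
        d = np.linalg.norm(points[:, None, :] - chosen[None, :, :], axis=2).min(axis=1)
        centroids.append(points[np.argmax(d)])
    return np.array(centroids, dtype=float)


def kmeans(points, k, max_iter=100, tol=1e-9):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    points = np.asarray(points, dtype=float)
    centroids = farthest_point_init(points, k)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its members.
        new_centroids = centroids.copy()
        for j in range(k):
            members = points[labels == j]
            if len(members):  # keep the old centroid if a cluster empties
                new_centroids[j] = members.mean(axis=0)
        shift = np.linalg.norm(new_centroids - centroids)
        centroids = new_centroids
        if shift < tol:
            break
    return labels, centroids


# Hypothetical 2D LiDAR returns (x, y in metres) from two separate obstacles.
scan = np.array([[0.0, 0.0], [0.0, 0.1], [0.1, 0.0],
                 [2.0, 2.0], [2.0, 2.1], [2.1, 2.0]])
labels, centers = kmeans(scan, k=2)
print(labels)  # [0 0 0 1 1 1]
```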

6.2. Probabilistic Data Association

Probabilistic data association (PDA) was proposed by Bar-Shalom and Tse and is also known as the "modified filter of all neighbors" [86]. It assigns an association probability to each hypothesis that a validated measurement originated from the target. The measurements are then processed using these probabilities as weights. PDA is mainly suited to tracking targets that do not make abrupt changes in their movement pattern.
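The association probabilities can be sketched with the standard parametric PDA weights from the tracking literature. For validated innovations ν_i with innovation covariance S, the weights are:

- e_i = exp(-0.5 ν_iᵀ S⁻¹ ν_i)
- b = λ |2πS|^(1/2) (1 - P_D P_G) / P_D
- β_i = e_i / (b + Σ e_j)
- β_0 = b / (b + Σ e_j), the probability that no measurement came from the target

The detection probability P_D, the gate probability P_G, and the clutter density λ below are assumed values. The full PDA covariance update, including the spread-of-innovations term, is omitted from this sketch.

```python
import numpy as np


def pda_weights(innovations, S, p_detect=0.9, p_gate=0.99, clutter_density=0.01):
    """Association probabilities of parametric PDA.

    innovations: n innovations nu_i = z_i - z_pred (shape (n, d), or n scalars).
    S: (d, d) innovation covariance. clutter_density: expected false returns
    per unit volume (assumed). Returns (beta_0, betas).
    """
    nu = np.asarray(innovations, dtype=float).reshape(len(innovations), -1)
    S = np.atleast_2d(np.asarray(S, dtype=float))
    S_inv = np.linalg.inv(S)
    d2 = np.einsum("ij,jk,ik->i", nu, S_inv, nu)   # squared Mahalanobis distances
    e = np.exp(-0.5 * d2)
    b = (clutter_density * np.sqrt(np.linalg.det(2.0 * np.pi * S))
         * (1.0 - p_detect * p_gate) / p_detect)
    total = b + e.sum()
    return b / total, e / total


def pda_combined_innovation(innovations, betas):
    """Probability-weighted innovation used in the PDA state update."""
    nu = np.asarray(innovations, dtype=float).reshape(len(innovations), -1)
    return (np.asarray(betas)[:, None] * nu).sum(axis=0)


S = np.eye(2) * 0.25                      # hypothetical innovation covariance
innovations = [[0.1, 0.0], [0.9, 0.6]]    # two validated returns near the prediction
beta0, betas = pda_weights(innovations, S)
nu = pda_combined_innovation(innovations, betas)
```

The state is then updated as in a Kalman filter, x = x_pred + K ν. The return closer to the prediction dominates the combined innovation, but it does not completely override the other return.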

6.3. Distributed Multiple Hypothesis Test

This technique is well suited to distributed and decentralized systems [90]. It is an extension of the multiple hypothesis test (MHT) and is efficient at tracking multiple targets in cluttered environments [91]. It can be used as both an estimation technique and a tracking technique [90]. Its main disadvantage is its high computational cost, which grows exponentially.

6.4. State Estimation

State estimation methods, also known as tracking techniques, calculate a moving target's state from given measurements, which are obtained by the sensors [87]. State estimation is a common technique in data fusion for two main reasons: (1) measurements are usually obtained from multiple sensors, and (2) the measurements may be noisy. Examples include Kalman filters, extended Kalman filters, and particle filters.
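The sketch below illustrates the Kalman filter named above. It tracks a target moving along one axis with a constant-velocity model. It fuses two position sensors by applying their measurement updates one after the other. The "LiDAR" and "camera" noise levels, the time step, and the process noise are assumptions chosen for the demo, not values from the surveyed work.

```python
import numpy as np


class ConstantVelocityKF:
    """1D constant-velocity Kalman filter; state x = [position, velocity]."""

    def __init__(self, dt, accel_var):
        self.F = np.array([[1.0, dt], [0.0, 1.0]])
        # Process noise for a white-noise acceleration model.
        self.Q = accel_var * np.array([[dt**4 / 4, dt**3 / 2],
                                       [dt**3 / 2, dt**2]])
        self.H = np.array([[1.0, 0.0]])  # every sensor here measures position only
        self.x = np.zeros(2)
        self.P = np.eye(2) * 1e3         # large initial uncertainty

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, z, meas_var):
        S = self.H @ self.P @ self.H.T + meas_var   # innovation covariance (1x1)
        K = self.P @ self.H.T @ np.linalg.inv(S)    # Kalman gain (2x1)
        y = np.array([z]) - self.H @ self.x         # innovation
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P


def run_demo(steps=50, dt=0.1, seed=0, use_camera=True):
    """Track a target moving at 1 m/s with a precise 'LiDAR' and a noisier 'camera'."""
    rng = np.random.default_rng(seed)
    kf = ConstantVelocityKF(dt, accel_var=0.01)
    lidar_var, camera_var = 0.1**2, 0.5**2   # hypothetical sensor noise levels
    true_pos, true_vel = 0.0, 1.0
    for _ in range(steps):
        true_pos += true_vel * dt
        kf.predict()
        # Sequential updates: each sensor's measurement is fused as it arrives.
        kf.update(true_pos + rng.normal(0.0, np.sqrt(lidar_var)), lidar_var)
        if use_camera:
            kf.update(true_pos + rng.normal(0.0, np.sqrt(camera_var)), camera_var)
    return kf, true_pos


fused, truth = run_demo()
lidar_only, _ = run_demo(use_camera=False)
print(fused.P[0, 0] < lidar_only.P[0, 0])  # True
```

Applying independent measurements sequentially is equivalent to a single joint update. Each additional sensor update can only shrink the covariance, which is why the fused position variance ends lower than with the LiDAR alone.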

6.5. Covariance Consistency Methods

These methods were initially proposed by Uhlmann [84,87]. They are distributed estimation-fusion techniques that maintain consistent mean and covariance estimates across a distributed system.
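Covariance intersection is one well-known covariance consistency method associated with Uhlmann's work. It fuses two estimates whose cross-correlation is unknown without becoming overconfident. This matters in distributed systems, where the same information may reach a node along several paths. The NumPy sketch below chooses the weight w by a grid search that minimizes trace(P). That choice is a simplifying assumption; a scalar optimizer or a determinant criterion are common alternatives.

```python
import numpy as np


def covariance_intersection(a, Pa, b, Pb, n_grid=101):
    """Fuse estimates (a, Pa) and (b, Pb) whose cross-correlation is unknown.

    P^-1 = w Pa^-1 + (1 - w) Pb^-1 and x = P (w Pa^-1 a + (1 - w) Pb^-1 b);
    w in [0, 1] is chosen here by a grid search minimising trace(P).
    Returns (x, P, w).
    """
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    Pa_inv, Pb_inv = np.linalg.inv(Pa), np.linalg.inv(Pb)
    best = None
    for w in np.linspace(0.0, 1.0, n_grid):
        P = np.linalg.inv(w * Pa_inv + (1.0 - w) * Pb_inv)
        if best is None or np.trace(P) < np.trace(best[1]):
            x = P @ (w * Pa_inv @ a + (1.0 - w) * Pb_inv @ b)
            best = (x, P, w)
    return best


# Hypothetical position estimates of the same landmark from two robots:
# each is precise along a different axis.
x, P, w = covariance_intersection([0.0, 0.0], np.diag([1.0, 4.0]),
                                  [1.0, 1.0], np.diag([4.0, 1.0]))
```

Unlike naive inverse-variance weighting, which assumes independent errors, covariance intersection yields a covariance that remains consistent for any actual correlation between the two inputs.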

6.6. Distributed Data Fusion

As the name suggests, distributed data fusion is performed by a distributed system. It is often used in multi-agent, multisensor, and multimodal systems [84,94,95], and it is efficient when distributed and decentralized systems are present. An optimum fusion can be achieved by adjusting the decision rules. However, resolving the uncertainties in the final decisions is difficult.

Decision Fusion Techniques

These techniques can be used once a target has been successfully detected [87,92,93], and they enable high-level inference about such events. When multiple classifiers are present, this technique combines their outputs into a single decision. Doing so requires a priori probabilities, which are difficult to obtain.
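The need for a priori probabilities can be seen in a minimal Bayesian decision-fusion sketch. It is one illustrative scheme, not the method of the cited works. It assumes that the classifiers' inputs are conditionally independent given the class and that every classifier used the same prior P(c). Under those assumptions, P(c | x_1, ..., x_n) is proportional to P(c)^(1-n) multiplied by the product of the individual posteriors P(c | x_i). The class names and posterior values below are hypothetical.

```python
import numpy as np


def bayes_decision_fusion(posteriors, prior):
    """Fuse per-classifier class posteriors P(c | x_i) into one decision.

    Assumes conditionally independent inputs given the class and a shared
    prior P(c); posteriors must be strictly positive.
    """
    posteriors = np.asarray(posteriors, dtype=float)   # (n_classifiers, n_classes)
    prior = np.asarray(prior, dtype=float)             # (n_classes,)
    n = posteriors.shape[0]
    # Work in log space to avoid underflow when many classifiers are fused.
    log_score = (1 - n) * np.log(prior) + np.log(posteriors).sum(axis=0)
    fused = np.exp(log_score - log_score.max())
    fused /= fused.sum()
    return int(np.argmax(fused)), fused


classes = ["pedestrian", "vehicle", "furniture"]
lidar_post = [0.6, 0.3, 0.1]    # hypothetical LiDAR-based classifier output
camera_post = [0.7, 0.2, 0.1]   # hypothetical camera-based classifier output
decision, fused = bayes_decision_fusion([lidar_post, camera_post],
                                        prior=[1 / 3, 1 / 3, 1 / 3])
print(classes[decision], fused.round(3))
```

With a uniform prior, the prior term cancels. With a non-uniform prior, dividing out the repeated prior is exactly where the a priori probabilities enter.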

6.7. Hardware

LiDAR

Light Detection and Ranging (LiDAR) is a technology used in many autonomous tasks. It works as follows: a light source illuminates an area, objects in the scene scatter the light, and a photodetector detects the returned light. The LiDAR measures the distance to an object from the time the light takes to travel to the object and back [104,105,106,107,108,109].

Camera

The types of cameras include:

- conventional color cameras, such as USB/web cameras (RGB) [115]
- RGB-mono cameras
- RGB cameras with depth information (RGB-Depth, RGB-D)
- 360-degree cameras [28,116,117,118]
- Time-of-Flight (TOF) cameras [119,120,121]

Implementation of Data Fusion Using a LiDAR and Camera

We review an input-output type of fusion as described by Dasarathy [96], who proposes a classification strategy based on the input and output of entities such as data, architecture, features, and decisions. Raw data are fused in the first layer, features in the second, and decisions in the final layer. In the case of LiDAR and camera data fusion, two distinct steps effectively integrate the data:

- Geometric Alignment of the Sensor Data
- Resolution Match between the Sensor Data
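The two steps listed above can be sketched in NumPy under simple assumptions:

- Geometric alignment uses a pinhole camera with known intrinsics `K` and known LiDAR-to-camera extrinsics `R`, `t`.
- Resolution matching uses plain Gaussian Process Regression with an RBF kernel over pixel coordinates. It predicts depth at pixels that received no LiDAR return, in the spirit of the GPR-based resolution match of De Silva et al. [28].

The kernel, its hyperparameters, the image size, and all function names are illustrative assumptions, not the cited authors' implementation.

```python
import numpy as np


def project_lidar_to_image(points, R, t, K, width, height):
    """Geometric alignment: map LiDAR points into camera pixels (pinhole model).

    points: (N, 3) in the LiDAR frame; R (3x3), t (3,): LiDAR->camera extrinsics;
    K (3x3): camera intrinsics. Returns integer pixels (M, 2) as (u, v) and depths (M,).
    """
    cam = np.asarray(points, dtype=float) @ R.T + t
    cam = cam[cam[:, 2] > 0]                       # keep points in front of the camera
    uvw = cam @ K.T
    px = np.round(uvw[:, :2] / uvw[:, 2:3]).astype(int)
    inside = ((px[:, 0] >= 0) & (px[:, 0] < width) &
              (px[:, 1] >= 0) & (px[:, 1] < height))
    return px[inside], cam[inside, 2]


def rbf(A, B, length_scale, signal_var):
    """Squared-exponential kernel between two sets of pixel coordinates."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)
    return signal_var * np.exp(-0.5 * d2 / length_scale**2)


def gpr_fill_depth(px, depth, query_px, length_scale=5.0, signal_var=1.0, noise_var=1e-4):
    """Resolution match: GP-regression posterior mean of depth at pixels that
    have no LiDAR return, given the sparse projected depths."""
    X, Xq = px.astype(float), np.asarray(query_px, dtype=float)
    mean = depth.mean()                            # constant prior mean
    K_xx = rbf(X, X, length_scale, signal_var) + noise_var * np.eye(len(X))
    alpha = np.linalg.solve(K_xx, depth - mean)
    return mean + rbf(Xq, X, length_scale, signal_var) @ alpha


# Hypothetical setup: identity extrinsics, 64x48 image, a flat wall 5 m away.
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 24.0], [0.0, 0.0, 1.0]])
wall = [[x, y, 5.0] for x in np.linspace(-1, 1, 5) for y in np.linspace(-0.8, 0.8, 5)]
px, depth = project_lidar_to_image(wall, np.eye(3), np.zeros(3), K, 64, 48)
dense = gpr_fill_depth(px, depth, [[30, 20], [45, 33]])   # pixels without LiDAR returns
```

A real pipeline would also need calibrated intrinsics and extrinsics and occlusion handling. It would also need a sparse or approximate GP, because exact GPR scales cubically with the number of projected points.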

Geometric Alignment of the Sensor Data

The first and foremost step in the data fusion methodology is the alignment of the sensor data. In this step, the logic finds the LiDAR data points that correspond to each pixel of the optical image, which ensures the geometric alignment of the two sensors [28].

Resolution Match between the Sensor Data

Once the data are geometrically aligned, the resolutions of the two sensors must be matched. The optical camera has the highest resolution, 1920 × 1080 at 30 fps. It is followed by the depth camera output at 1280 × 720 pixels at 90 fps, and the LiDAR data have the lowest resolution. This step performs an extrinsic calibration of the data. Madden et al. [126] performed sensor alignment of a LiDAR and a 3D depth camera using a probabilistic approach. De Silva et al. [28] performed a resolution match by estimating distance values for the image pixels that have no measured distance. They treat this as a regression-based missing-value prediction problem. They estimate the missing values from the relationship between the measured data points, using a multi-modal technique called Gaussian Process Regression (GPR), as discussed by Lahat et al. [39]. The resolution matching of two different sensors can be performed through extrinsic sensor calibration. Considering the depth information of a LiDAR and a stereo vision camera, 3D depth boards can be developed from simple 2D images.

Challenges with Sensor Data Fusion

Several challenges have been observed while implementing multisensor data fusion. Some are data related, such as complex data and conflicting or contradictory data. Others are technical, such as resolution differences and misalignment between the sensors.

We review two fundamental challenges of sensor data fusion. The first is the resolution difference between heterogeneous sensors. The second is understanding and using heterogeneous sensor data streams while accounting for the many uncertainties in the data sources [28,39]. We focus on how the fused information is used in autonomous navigation. This is challenging because many autonomous systems operate in complex environments, whether at home or at work. For example, systems that help persons with severe motor disabilities meet their navigational needs face demanding safety, efficiency, and accuracy requirements for decision-making. For reliable operation, the system must make decisions by considering the entire set of multi-modal sensor data it acquires, with a complete solution in mind. The decisions must also account for the uncertainties associated with both the data acquisition methods and the pre-processing algorithms. Our focus in this review is therefore on data fusion techniques that account for uncertainty in the fusion algorithm.

Some researchers used mathematical and/or statistical techniques for data fusion. Others used reinforcement learning to implement multisensor data fusion in the presence of conflicting data [70]. In that study, smart mobile systems were fitted with sensors that made them sensitive to the environments in which they operated. The challenge was to map the multiple streams of raw sensory data received by the smart agents to their tasks. In their environment, the tasks were different and conflicting, which complicated the problem. Their system therefore learned to translate the multiple inputs into the appropriate tasks or sequences of system actions. Crowley et al. developed mathematical tools to counter uncertainties in fusion and perception [133]. Other implementations include adaptive learning techniques [134]. There, the authors use deep convolutional neural networks (D-CNNs) in a multisensor environment for fault diagnostics in planetary gearboxes.

Sensor Data Noise and Rectification

Noise filtering techniques include the Kalman filter and its many variations.

7. Conclusion

LiDAR and cameras are two of the most important sensors for providing situational awareness to an autonomous system. This awareness can be applied to tasks such as mapping, visual localization, path planning, and obstacle avoidance. We are currently integrating these two sensors for autonomous tasks on a power wheelchair. Our results agree with past observations that accurate integration provides better information for all of the above tasks. The improvement arises because the two sensors complement each other: we have successfully combined the speed of the LiDAR with the data richness of the camera.

We integrated a Velodyne 3D LiDAR with an Intel RealSense D435. In our results, combining the millisecond response time of the LiDAR with the high-resolution data of the camera gave us accurate information for three tasks: detecting static or moving targets, performing accurate path planning, and implementing SLAM functionality. We plan to extend this research to develop an autonomous wheelchair controlled through a brain-computer interface driven by the user's thoughts.

References

- Kolar, P.; Benavidez, P.; Jamshidi, M. Survey of Datafusion Techniques for Laser and Vision Based Sensor Integration for Autonomous Navigation. Sensors 2020, 20, 2180.

- 2, Fehr, L.; Langbein, W.E.; Skaar, S.B. Adequacy of power wheelchair control interfaces for persons with severe disabilities: A clinical survey. J. Rehabil. Res. Dev. 2000, 37, 353–360.

- 3, Martins, M.M.; Santos, C.P.; Frizera-Neto, A.; Ceres, R. Assistive mobility devices focusing on smart walkers: Classification and review. Robot. Auton. Syst. 2012, 60, 548–562.

- 4, Noonan, T.H.; Fisher, J.; Bryant, B. Autonomous Lawn Mower. U.S. Patent 5,204,814, 20 April 1993.

- 5, Bernini, F. Autonomous Lawn Mower with Recharge Base. U.S. Patent 7,668,631, 23 February 2010.

- 6, Ulrich, I.; Mondada, F.; Nicoud, J. Autonomous Vacuum Cleaner. Robot. Auton. Syst. 1997, 19.

- 7, Mutiara, G.; Hapsari, G.; Rijalul, R. Smart guide extension for blind cane. In Proceedings of the 4th International Conference on Information and Communication Technology, Bandung, Indonesia, 25–27 May 2016; pp. 1–6.

- 8, Bharucha, A.J.; Anand, V.; Forlizzi, J.; Dew, M.A.; Reynolds, C.F., III; Stevens, S.; Wactlar, H. Intelligent assistive technology applications to dementia care: current capabilities, limitations, and future challenges. Am. J. Geriatr. Psychiatry 2009, 17, 88–104.

- 9, Cahill, S.; Macijauskiene, J.; Nygård, A.M.; Faulkner, J.P.; Hagen, I. Technology in dementia care. Technol. Disabil. 2007, 19, 55–60.

- 10, Furness, B.W.; Beach, M.J.; Roberts, J.M. Giardiasis surveillance–United States, 1992–1997. MMWR CDC Surveill. Summ. 2000, 49, 1–13.

- 11, Topo, P. Technology studies to meet the needs of people with dementia and their caregivers: A literature review. J. Appl. Gerontol. 2009, 28, 5–37.

- 12, First Sensors. Impact of LiDAR by 2032, 1. Available online: https://www.first-sensor.com/cms/upload/investor_relations/publications/First_Sensors_LiDAR_and_Camera_Strategy.pdf (accessed on 1 August 2019).

- 13, Crowley, J.L.; Demazeau, Y. Principles and techniques for sensor data fusion. Signal Process. 1993, 32, 5–27.

- 14, Steinberg, A.N.; Bowman, C.L. Revisions to the JDL data fusion model. In Handbook of Multisensor Data Fusion; CRC Press: Boca Raton, FL, USA, 2017; pp. 65–88.

- 15, McLaughlin, D. An integrated approach to hydrologic data assimilation: interpolation, smoothing, and filtering. Adv. Water Resour. 2002, 25, 1275–1286.

- 16, Van Mechelen, I.; Smilde, A.K. A generic linked-mode decomposition model for data fusion. Chemom. Intell. Lab. Syst. 2010, 104, 83–94.

- 17, McGurk, H.; MacDonald, J. Hearing lips and seeing voices. Nature 1976, 264, 746–748.

- 18, Caputo, M.; Denker, K.; Dums, B.; Umlauf, G.; Konstanz, H. 3D Hand Gesture Recognition Based on Sensor Fusion of Commodity Hardware. In Mensch & Computer; Oldenbourg Verlag: München, Germany, 2012.

- 19, Lanckriet, G.R.; De Bie, T.; Cristianini, N.; Jordan, M.I.; Noble, W.S. A statistical framework for genomic data fusion. Bioinformatics 2004, 20, 2626–2635.

- 20, Aerts, S.; Lambrechts, D.; Maity, S.; Van Loo, P.; Coessens, B.; De Smet, F.; Tranchevent, L.C.; De Moor, B.; Marynen, P.; Hassan, B.; et al. Gene prioritization through genomic data fusion. Nat. Biotechnol. 2006, 24, 537–544.

- 21, Hall, D.L.; Llinas, J. An introduction to multisensor data fusion. Proc. IEEE 1997, 85, 6–23.

- 22, Webster Sensor Definition. Merriam-Webster Definition of a Sensor. Available online: https://www.merriam-webster.com/dictionary/sensor (accessed on 9 November 2019).

- 27, Chavez-Garcia, R.O. Multiple Sensor Fusion for Detection, Classification and Tracking of Moving Objects in Driving Environments. Ph.D. Thesis, Université de Grenoble, Grenoble, France, 2014.

- 28, De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and camera sensor data for environment sensing in driverless vehicles. arXiv 2018, arXiv:1710.06230v2.

- 30, Rövid, A.; Remeli, V. Towards Raw Sensor Fusion in 3D Object Detection. In Proceedings of the 2019 IEEE 17th World Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 24–26 January 2019; pp. 293–298.

- 32, Wu, B.; Nevatia, R. Detection of multiple, partially occluded humans in a single image by bayesian combination of edgelet part detectors. In Proceedings of the Tenth IEEE International Conference on Computer Vision, ICCV 2005, Beijing, China, 17–20 October 2005; Volume 1, pp. 90–97.

- 33, Borenstein, J.; Koren, Y. Obstacle avoidance with ultrasonic sensors. IEEE J. Robot. Autom. 1988, 4, 213–218.

- 35, Chavez-Garcia, R.O.; Aycard, O. Multiple Sensor Fusion and Classification for Moving Object Detection and Tracking. IEEE Trans. Intell. Transp. Syst. 2016, 17, 525–534.

- 37, Baltzakis, H.; Argyros, A.; Trahanias, P. Fusion of laser and visual data for robot motion planning and collision avoidance. Mach. Vis. Appl. 2003, 15, 92–100.

- 38, Luo, R.C.; Yih, C.C.; Su, K.L. Multisensor fusion and integration: Approaches, applications, and future research directions. IEEE Sens. J. 2002, 2, 107–119.

- 39, Lahat, D.; Adali, T.; Jutten, C. Multimodal Data Fusion: An Overview of Methods, Challenges, and Prospects. Proc. IEEE 2015, 103, 1449–1477.

- 40, Shafer, S.; Stentz, A.; Thorpe, C. An architecture for sensor fusion in a mobile robot. In Proceedings of the 1986 IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 7–10 April 1986; Volume 3, pp. 2002–2011.

- 44, Waltz, E.; Llinas, J. Multisensor Data Fusion; Artech House: Boston, MA, USA, 1990; Volume 685.

- 45, Hackett, J.K.; Shah, M. Multi-sensor fusion: A perspective. In Proceedings of the 1990 IEEE International Conference on Robotics and Automation, Cincinnati, OH, USA, 13–18 May 1990; pp. 1324–1330.

- 46, Grossmann, P. Multisensor data fusion. GEC J. Technol. 1998, 15, 27–37.

- 47, Brooks, R.R.; Rao, N.S.; Iyengar, S.S. Resolution of Contradictory Sensor Data. Intell. Autom. Soft Comput. 1997, 3, 287–299.

- 48, Vu, T.D. Vehicle Perception: Localization, Mapping with dEtection, Classification and Tracking of Moving Objects. Ph.D. Thesis, Institut National Polytechnique de Grenoble-INPG, Grenoble, France, 2009.

- 50, Bosse, E.; Roy, J.; Grenier, D. Data fusion concepts applied to a suite of dissimilar sensors. In Proceedings of the 1996 Canadian Conference on Electrical and Computer Engineering, Calgary, AL, Canada, 26–29 May 1996; Volume 2, pp. 692–695.

- 51, Jeon, D.; Choi, H. Multi-sensor fusion for vehicle localization in real environment. In Proceedings of the 2015 15th International Conference on Control, Automation and Systems (ICCAS), Busan, Korea, 13–16 October 2015; pp. 411–415.

- 57, Crowley, J.L. A Computational Paradigm for Three Dimensional Scene Analysis; Technical Report CMU-RI-TR-84-11; Carnegie Mellon University: Pittsburgh, PA, USA, 1984.

- 58, Crowley, J. Navigation for an intelligent mobile robot. IEEE J. Robot. Autom. 1985, 1, 31–41.

- 59, Herman, M.; Kanade, T. Incremental reconstruction of 3D scenes from multiple, complex images. Artif. Intell. 1986, 30, 289–341.

- 70, Ou, S.; Fagg, A.H.; Shenoy, P.; Chen, L. Application of reinforcement learning in multisensor fusion problems with conflicting control objectives. Intell. Autom. Soft Comput. 2009, 15, 223–235.

- 84, Uhlmann, J.K. Covariance consistency methods for fault-tolerant distributed data fusion. Inf. Fusion 2003, 4, 201–215.

- 86, Bar-Shalom, Y.; Willett, P.K.; Tian, X. Tracking and Data Fusion; YBS Publishing: Storrs, CT, USA, 2011; Volume 11.

- 87, Castanedo, F. A review of data fusion techniques. Sci. World J. 2013, 2013.

- 90, Goeman, J.J.; Meijer, R.J.; Krebs, T.J.P.; Solari, A. Simultaneous control of all false discovery proportions in large-scale multiple hypothesis testing. Biometrika 2019, 106, 841–856.

- 91, Olfati-Saber, R. Distributed Kalman filtering for sensor networks. In Proceedings of the 2007 46th IEEE Conference on Decision and Control, New Orleans, LA, USA, 12–14 December 2007; pp. 5492–5498.

- 92, Zhang, Y.; Huang, Q.; Zhao, K. Hybridizing association rules with adaptive weighted decision fusion for personal credit assessment. Syst. Sci. Control Eng. 2019, 7, 135–142.

- 93, Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR–camera fusion for road detection using fully convolutional neural networks. Robot. Auton. Syst. 2019, 111, 125–131.

- 94, Chen, L.; Cetin, M.; Willsky, A.S. Distributed data association for multi-target tracking in sensor networks. In Proceedings of the IEEE Conference on Decision and Control, Plaza de España Seville, Spain, 12–15 December 2005.

- 95, Dwivedi, R.; Dey, S. A novel hybrid score level and decision level fusion scheme for cancelable multi-biometric verification. Appl. Intell. 2019, 49, 1016–1035.

- 96, Dasarathy, B.V. Sensor fusion potential exploitation-innovative architectures and illustrative applications. Proc. IEEE 1997, 85, 24–38.

- 104, NOAA. What Is LiDAR? Available online: https://oceanservice.noaa.gov/facts/lidar.html (accessed on 19 March 2020).

- 105, Yole Developpement, W. Impact of LiDAR by 2032, 1. The Automotive LiDAR Market. Available online: http://www.woodsidecap.com/wp-content/uploads/2018/04/Yole_WCP-LiDAR-Report_April-2018-FINAL.pdf (accessed on 23 March 2020).

- 106, Kim, W.; Tanaka, M.; Okutomi, M.; Sasaki, Y. Automatic labeled LiDAR data generation based on precise human model. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 43–49.

- 107, Miltiadou, M.; Michael, G.; Campbell, N.D.; Warren, M.; Clewley, D.; Hadjimitsis, D.G. Open source software DASOS: Efficient accumulation, analysis, and visualisation of full-waveform lidar. In Proceedings of the Seventh International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2019), International Society for Optics and Photonics, Paphos, Cyprus, 18–21 March 2019; Volume 11174, p. 111741.

- 108, Hu, P.; Huang, H.; Chen, Y.; Qi, J.; Li, W.; Jiang, C.; Wu, H.; Tian, W.; Hyyppä, J. Analyzing the Angle Effect of Leaf Reflectance Measured by Indoor Hyperspectral Light Detection and Ranging (LiDAR). Remote Sens. 2020, 12, 919.

- 109, Warren, M.E. Automotive LIDAR technology. In Proceedings of the 2019 Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; pp. C254–C255.

- 115, IGI Global. RGB Camera Details. Available online: https://www.igi-global.com/dictionary/mobile-applications-for-automatic-object-recognition/60647 (accessed on 2 February 2020).

- 116, Sigel, K.; DeAngelis, D.; Ciholas, M. Camera with Object Recognition/data Output. U.S. Patent 6,545,705, 8 April 2003.

- 117, De Silva, V.; Roche, J.; Kondoz, A. Robust fusion of LiDAR and wide-angle camera data for autonomous mobile robots. Sensors 2018, 18, 2730.

- 118, Guy, T. Benefits and Advantages of 360° Cameras. Available online: https://www.threesixtycameras.com/pros-cons-every-360-camera/ (accessed on 10 January 2020).

- 119, Myllylä, R.; Marszalec, J.; Kostamovaara, J.; Mäntyniemi, A.; Ulbrich, G.J. Imaging distance measurements using TOF lidar. J. Opt. 1998, 29, 188–193.

- 120, Nair, R.; Lenzen, F.; Meister, S.; Schäfer, H.; Garbe, C.; Kondermann, D. High accuracy TOF and stereo sensor fusion at interactive rates. In Proceedings of the ECCV: European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Volume 7584, pp. 1–11. [Google Scholar] [CrossRef]

- 121, Hewitt, R.A.; Marshall, J.A. Towards intensity-augmented SLAM with LiDAR and ToF sensors. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1956–1961. [Google Scholar] [CrossRef]

- 126, Maddern, W.; Newman, P. Real-time probabilistic fusion of sparse 3d lidar and dense stereo. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2181–2188. [Google Scholar]

- 133, Crowley, J.; Ramparany, F. Mathematical tools for manipulating uncertainty in perception. In Proceedings of the AAAI Workshop on Spatial Reasoning and Multi-Sensor Fusion, St. Charles, IL, USA, 5–7 October 1987. [Google Scholar]

- 134, Jing, L.; Wang, T.; Zhao, M.; Wang, P. An adaptive multi-sensor data fusion method based on deep convolutional neural networks for fault diagnosis of planetary gearbox. Sensors 2017, 17, 414. [Google Scholar] [CrossRef]

- 161, Waxman, A.; Moigne, J.; Srinivasan, B. Visual navigation of roadways. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation, Louis, MO, USA, 25–28 March 1985; Volume 2, pp. 862–867. [Google Scholar]

- 162, Delahoche, L.; Pégard, C.; Marhic, B.; Vasseur, P. A navigation system based on an ominidirectional vision sensor. In Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robot and Systems, Innovative Robotics for Real-World Applications, IROS’97, Grenoble, France, 11 September1997; Volume 2, pp. 718–724. [Google Scholar]

- 163, Zingaretti, P.; Carbonaro, A. Route following based on adaptive visual landmark matching. Robot. Auton. Syst. 1998, 25, 177–184. [Google Scholar] [CrossRef]

- 164, Research, B. Global Vision and Navigation for Autonomous Vehicle.

- 165, Thrun, S. Robotic Mapping: A Survey; CMU-CS-02–111; Morgan Kaufmann Publishers: Burlington, MA, USA, 2002. [Google Scholar]

- 166, Thorpe, C.; Hebert, M.H.; Kanade, T.; Shafer, S.A. Vision and navigation for the Carnegie-Mellon Navlab. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 362–373. [Google Scholar] [CrossRef]

- 167, Zimmer, U.R. Robust world-modelling and navigation in a real world. Neurocomputing 1996, 13, 247–260. [Google Scholar] [CrossRef]

- 170, Danescu, R.G. Obstacle detection using dynamic Particle-Based occupancy grids. In Proceedings of the 2011 International Conference on Digital Image Computing: Techniques and Applications, Noosa, QLD, Australia, 6–8 December 2011; pp. 585–590. [Google Scholar]

- 171, Leibe, B.; Seemann, E.; Schiele, B. Pedestrian detection in crowded scenes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 878–885. [Google Scholar]

- 172, Lwowski, J.; Kolar, P.; Benavidez, P.; Rad, P.; Prevost, J.J.; Jamshidi, M. Pedestrian detection system for smart communities using deep Convolutional Neural Networks. In Proceedings of the 2017 12th System of Systems Engineering Conference (SoSE), Waikoloa, HI, USA, 18–21 June 2017; pp. 1–6. [Google Scholar]

- 173, Kortenkamp, D.; Weymouth, T. Topological mapping for mobile robots using a combination of sonar and vision sensing. Proc. AAAI 1994, 94, 979–984. [Google Scholar]

- 176, Thrun, S.; Bücken, A. Integrating grid-based and topological maps for mobile robot navigation. In Proceedings of the National Conference on Artificial Intelligence, Oregon, Portland, 4–8 August 1996; pp. 944–951. [Google Scholar]

- 180, Borenstein, J.; Koren, Y. The vector field histogram-fast obstacle avoidance for mobile robots. IEEE Trans. Robot. Autom. 1991, 7, 278–288. [Google Scholar] [CrossRef]

- 188, Fernández-Madrigal, J.A. Simultaneous Localization and Mapping for Mobile Robots: Introduction and Methods: Introduction and Methods; IGI Global: Philadelphia, PA, USA, 2012. [Google Scholar]

- 190, Leonard, J.J.; Durrant-Whyte, H.F.; Cox, I.J. Dynamic map building for an autonomous mobile robot. Int. J. Robot. Res. 1992, 11, 286–298. [Google Scholar] [CrossRef]

- 198, Huang, S.; Dissanayake, G. Robot Localization: An Introduction. In Wiley Encyclopedia of Electrical and Electronics Engineering; John Wiley & Sons: New York, NY, USA, 1999; pp. 1–10. [Google Scholar]

- 199, Huang, S.; Dissanayake, G. Convergence and consistency analysis for extended Kalman filter based SLAM. IEEE Trans. Robot. 2007, 23, 1036–1049. [Google Scholar] [CrossRef]

- 200, Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of wireless indoor positioning techniques and systems. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 1067–1080. [Google Scholar] [CrossRef]

- 201, Leonard, J.J.; Durrant-Whyte, H.F. Mobile robot localization by tracking geometric beacons. IEEE Trans. Robot. Autom. 1991, 7, 376–382. [Google Scholar] [CrossRef]

- 202, Betke, M.; Gurvits, L. Mobile robot localization using landmarks. IEEE Trans. Robot. Autom. 1997, 13, 251–263. [Google Scholar] [CrossRef]

- 205, Ojeda, L.; Borenstein, J. Personal dead-reckoning system for GPS-denied environments. In Proceedings of the IEEE International Workshop on Safety, Security and Rescue Robotics, SSRR 2007, Rome, Italy, 27–29 September 2007; pp. 1–6. [Google Scholar]

- 206, Levi, R.W.; Judd, T. Dead Reckoning Navigational System Using Accelerometer to Measure Foot Impacts. U.S. Patent 5,583,776, 1996. [Google Scholar]

- 207, Elnahrawy, E.; Li, X.; Martin, R.P. The limits of localization using signal strength: A comparative study. In Proceedings of the 2004 First Annual IEEE Communications Society Conference on Sensor and Ad Hoc Communications and Networks, Santa Clara, CA, USA, 4–7 October 2004; pp. 406–414. [Google Scholar]

- 212, Howell, E.; NAV Star. Navstar: GPS Satellite Network. Available online: https://www.space.com/19794-navstar.html (accessed on 1 August 2019).

- 213, Robotics, A. Experience the New Mobius. Available online: https://www.asirobots.com/platforms/mobius/ (accessed on 1 August 2019).

- 214, Choi, B.S.; Lee, J.J. Sensor network based localization algorithm using fusion sensor-agent for indoor service robot. IEEE Trans. Consum. Electron. 2010, 56, 1457–1465. [Google Scholar] [CrossRef]

- 215, Ramer, C.; Sessner, J.; Scholz, M.; Zhang, X.; Franke, J. Fusing low-cost sensor data for localization and mapping of automated guided vehicle fleets in indoor applications. In Proceedings of the 2015 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), San Diego, CA, USA, 14–16 September 2015; pp. 65–70. [Google Scholar]

- 217, Wan, K.; Ma, L.; Tan, X. An improvement algorithm on RANSAC for image-based indoor localization. In Proceedings of the 2016 International Conference on Wireless Communications and Mobile Computing Conference (IWCMC), An improvement algorithm on RANSAC for image-based indoor localization, Paphos, Cyprus, 5–9 September 2016; pp. 842–845. [Google Scholar]

- 218, Biswas, J.; Veloso, M. Depth camera based indoor mobile robot localization and navigation. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 1697–1702. [Google Scholar]

- 219, Vive, W.H. HTC Vive Details. Available online: https://en.wikipedia.org/wiki/HTC_Vive (accessed on 1 August 2019).

- 220, Buniyamin, N.; Ngah, W.W.; Sariff, N.; Mohamad, Z. A simple local path planning algorithm for autonomous mobile robots. Int. J. Syst. Appl. Eng. Dev. 2011, 5, 151–159. [Google Scholar]

- 221, Popović, M.; Vidal-Calleja, T.; Hitz, G.; Sa, I.; Siegwart, R.; Nieto, J. Multiresolution mapping and informative path planning for UAV-based terrain monitoring. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1382–1388. [Google Scholar]

- 222, Laghmara, H.; Boudali, M.; Laurain, T.; Ledy, J.; Orjuela, R.; Lauffenburger, J.; Basset, M. Obstacle Avoidance, Path Planning and Control for Autonomous Vehicles. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 529–534. [Google Scholar]

- 223, Rashid, A.T.; Ali, A.A.; Frasca, M.; Fortuna, L. Path planning with obstacle avoidance based on visibility binary tree algorithm. Robot. Auton. Syst. 2013, 61, 1440–1449. [Google Scholar] [CrossRef]

- 244, Wang, C.C.; Thorpe, C.; Thrun, S.; Hebert, M.; Durrant-Whyte, H. Simultaneous localization, mapping and moving object tracking. Int. J. Robot. Res. 2007, 26, 889–916. [Google Scholar] [CrossRef]

- 245, Saunders, J.; Call, B.; Curtis, A.; Beard, R.; McLain, T. Static and dynamic obstacle avoidance in miniature air vehicles. In Infotech@ Aerospace; BYU ScholarsArchive; BYU: Provo, UT, USA, 2005; p. 6950. [Google Scholar]

- 246, Chu, K.; Lee, M.; Sunwoo, M. Local path planning for off-road autonomous driving with avoidance of static obstacles. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1599–1616. [Google Scholar] [CrossRef]

- 257, Zhang, X.; Rad, A.B.; Wong, Y.K. A robust regression model for simultaneous localization and mapping in autonomous mobile robot. J. Intell. Robot. Syst. 2008, 53, 183–202. [Google Scholar] [CrossRef]

- 260, Wang, X. A Driverless Vehicle Vision Path Planning Algorithm for Sensor Fusion. In Proceedings of the 2019 IEEE 2nd International Conference on Automation, Electronics and Electrical Engineering (AUTEEE), Shenyang, China, 22–24 November 2019; pp. 214–218. [Google Scholar]

- 261, Ali, M.A.; Mailah, M. Path planning and control of mobile robot in road environments using sensor fusion and active force control. IEEE Trans. Veh. Technol. 2019, 68, 2176–2195. [Google Scholar] [CrossRef]

- 264, Sabe, K.; Fukuchi, M.; Gutmann, J.S.; Ohashi, T.; Kawamoto, K.; Yoshigahara, T. Obstacle avoidance and path planning for humanoid robots using stereo vision. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 1, pp. 592–597. [Google Scholar]

- 268, Dan, B.K.; Kim, Y.S.; Jung, J.Y.; Ko, S.J.; et al. Robust people counting system based on sensor fusion. IEEE Trans. Consum. Electron. 2012, 58, 1013–1021. [Google Scholar] [CrossRef]

- 269, Pacha, A. Sensor Fusion for Robust Outdoor Augmented Reality Tracking on Mobile Devices; GRIN Verlag: München, Germany, 2013. [Google Scholar]

- 270, Breitenstein, M.D.; Reichlin, F.; Leibe, B.; Koller-Meier, E.; Van Gool, L. Online multiperson tracking-by-detection from a single, uncalibrated camera. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 1820–1833. [Google Scholar] [CrossRef]

- 271, Stein, G. Barrier and Guardrail Detection Using a Single Camera. U.S. Patent 9,280,711, 8 March 2016. [Google Scholar]

- 272, Boreczky, J.S.; Rowe, L.A. Comparison of video shot boundary detection techniques. J. Electron. Imag. 1996, 5, 122–129. [Google Scholar] [CrossRef]

- 273, Sheikh, Y.; Shah, M. Bayesian modeling of dynamic scenes for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1778–1792. [Google Scholar] [CrossRef] [PubMed]

- 274, John, V.; Long, Q.; Xu, Y.; Liu, Z.; Mita, S. Sensor Fusion and Registration of Lidar and Stereo Camera without Calibration Objects. IEICE TRANSACTIONS Fundam. Electron. Commun. Comput. Sci. 2017, 100, 499–509. [Google Scholar] [CrossRef]

- Kolar, P.; Benavidez, P.; Jamshidi, M. Survey of Datafusion Techniques for Laser and Vision Based Sensor Integration for Autonomous Navigation. Sensors 2020, 20, 2180.