| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | Haolan Zhang | -- | 2226 | 2023-01-05 13:14:20 |
| 2 | | Haolan Zhang | + 7377 word(s) | 9603 | 2023-01-05 13:28:51 |
| 3 | | Haolan Zhang | + 8 word(s) | 9611 | 2023-01-07 03:41:24 |
| 4 | | Sirius Huang | -5981 word(s) | 3630 | 2023-01-09 02:20:32 |
| 5 | | Haolan Zhang | Meta information modification | 3630 | 2024-02-05 14:12:15 |


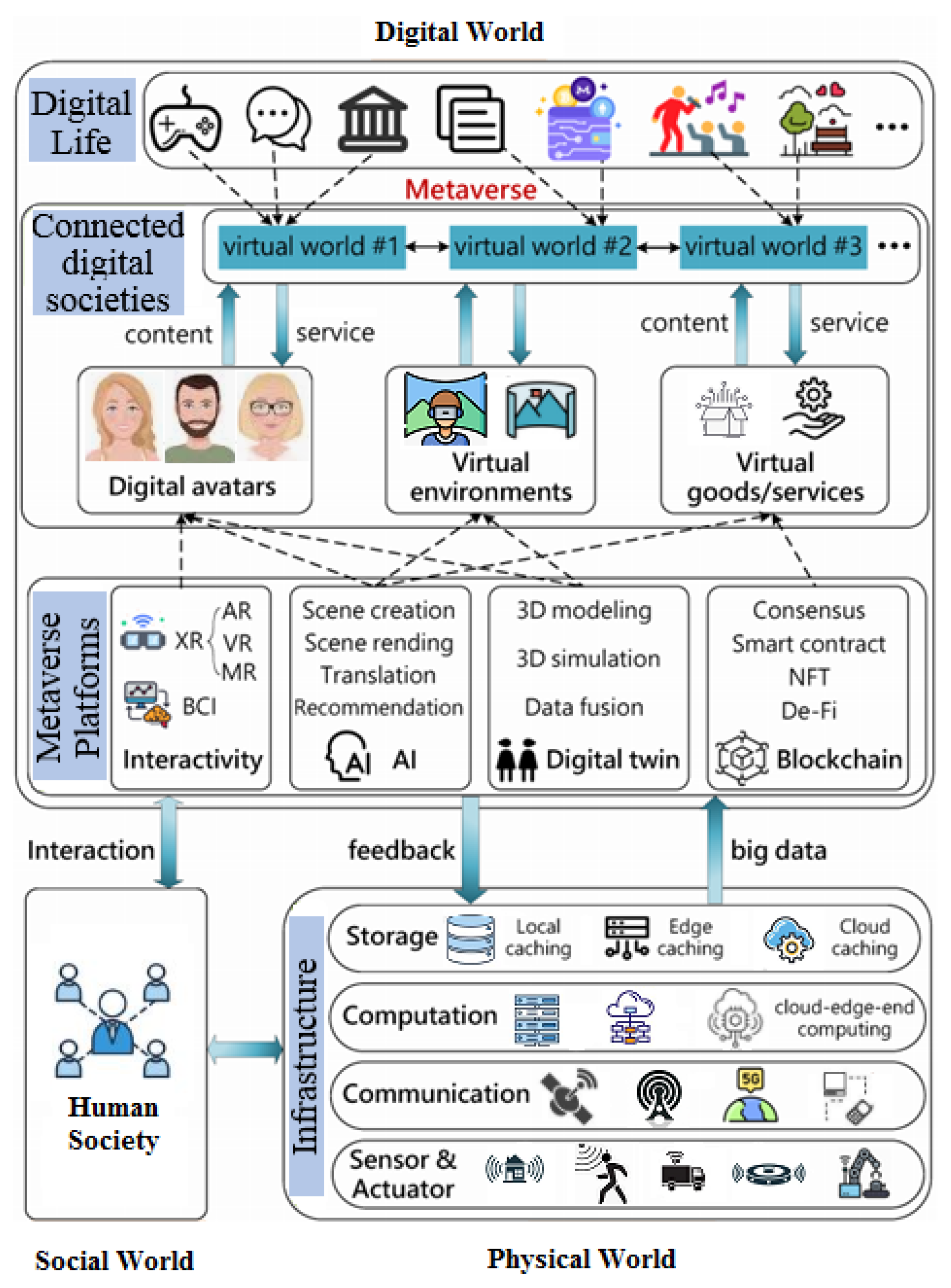

The Metaverse is defined as a virtual space in which users interact with one another and with their environment through 3D digital objects and virtual avatars, in a complex manner that mimics the real world and that contains objects developed using artificial intelligence techniques. Creating digital humans is therefore essential to the development of the Metaverse and of other Virtual Reality (VR), Augmented Reality (AR), and Extended Reality (XR) applications.

1. Digital Human Reconstruction

2. Review of Human Body Modeling

3. Optimization-Based Paradigm

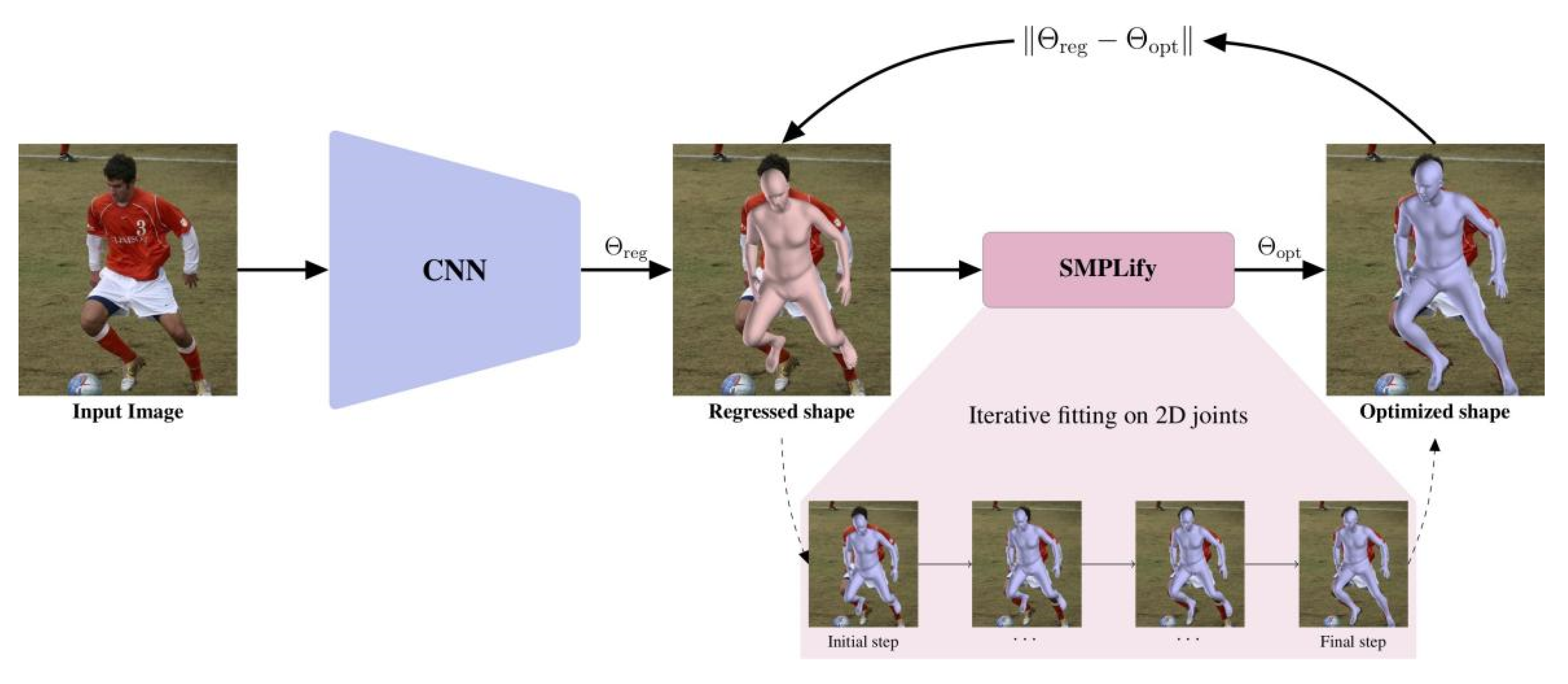

4. Regression-Based Paradigm

5. Technologies in AR/VR/XR Platforms and the Metaverse: Future Trends

The cold start problem is not a persistent problem in VR platforms, because it resolves itself once enough data has accumulated, whereas the virtual data explosion problem is a persistent challenge for VR platforms such as the Metaverse. The wide range of data sources in the Metaverse will grow exponentially, because the Metaverse is digital by nature. Some studies have suggested adopting the Data as a Service (DaaS) framework [54] as a solution to the data explosion problem in the digital world, including the Metaverse. Several other solutions, including tensor networks and sentiment analysis, have also been proposed. The future trends of technical development in the Metaverse and other VR platforms can be summarized as follows:

- Digital human reconstruction is becoming a crucial area for the Metaverse and other VR platforms: this is a core technology that can accelerate the development of the Metaverse, so as to truly realize human–machine interaction in virtual worlds, as mentioned in the previous sections;

- Digital twin-related methods are the foundation for creating digital worlds that can mimic the physical world. The digital twin is defined as the effortless integration of data between a physical and a virtual environment, in either direction [167]. VR development tools such as Unreal Engine, Unity, 3DS Max, Maya, and SketchUp will be the major developer toolkits for digital twin models in the coming decades. Future work on digital twins will focus on the following: enabling a conformance relationship between the digital twin and the real world; digital world autonomy, runtime self-adaptation and self-management; and integration and cooperation, to achieve common goals or provide services [168]. A number of digital twin applications have been developed based on Microsoft Kinect sensors and the Oculus VR headset;

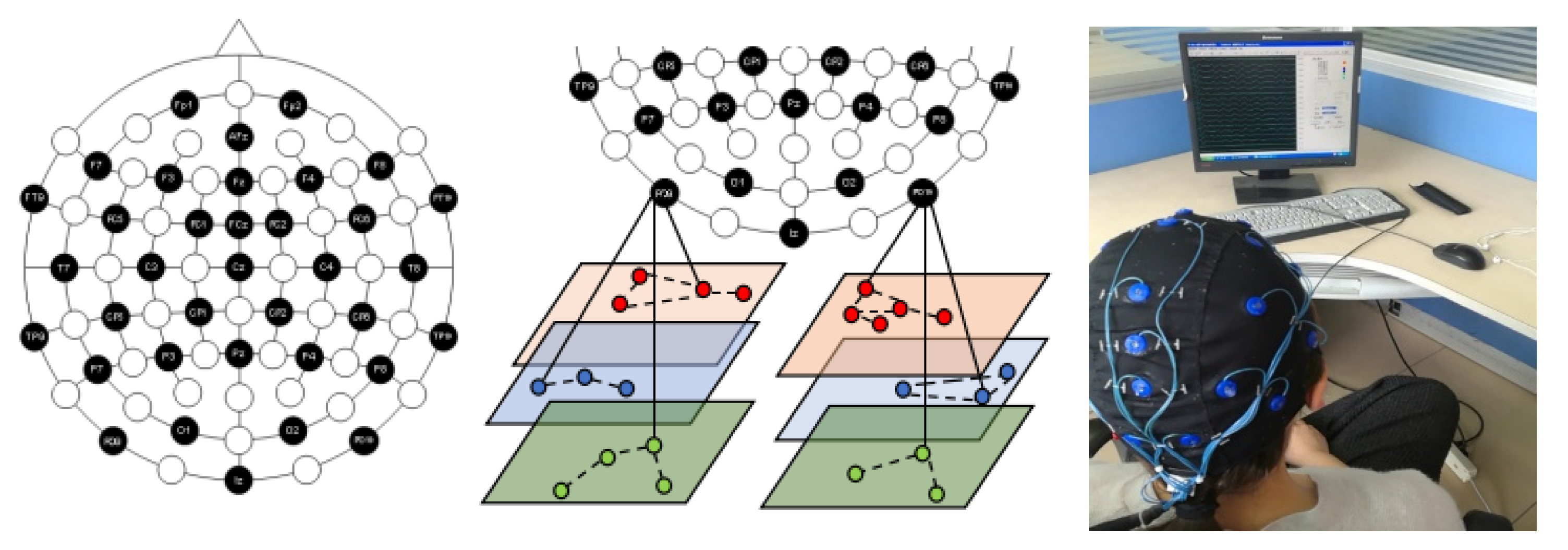

- Brain–Computer Interface (BCI) technology will become a very important area for the Metaverse and for VR platforms. Previous research indicates that non-invasive BCI technology has been applied extensively in various areas in recent years, because of its minimal potential risks and time precision [55]. Figure 5 shows the high-performance EEG BCI method (left), and EEG BCI experiments (right) [55][56].

Figure 5. Segmented EEG time window (left), source: [55]; EEG experiment (right), source: [56].

NDA/PDA-based methods are adopted to make EEG data analysis efficient enough for real-time interaction in the Metaverse and VR platforms [74]. The NDA method is defined as follows: for S[a, b] ⊆ A[1, k], if x ∈ [a, b] satisfies:

where mr is the adjusting parameter, and S[a, b] is an NDA set. The ND-based method derives the data values using the ksdensity function to generate a probability distribution [56]. The PDA method is defined as follows: the PDA model takes one of the calculated σ and λ values as λ × t, as indicated in the following equations, 11 and 12. Assuming the original data set has σ, then Mean(λ) is the event rate. If Mean(λ) − λ = ∆, then λ × t lies between Mean(λ) and λ. With |y − λ × t| = a, a1/2+a = ∆ is satisfied.

where N(t) is the sample data in the t time window. The Gamma function is utilized in the PDA method for processing complex numbers, which is expressed in (5) below [57]:
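For reference, the standard integral definition of the Gamma function for a complex argument z with positive real part is sketched below, together with its link to the factorial. It is given here as a well-known identity and as an assumption about the form intended by (5), not as a quotation from [57]; the symbols z and n are introduced only for this illustration.

$$
\Gamma(z) = \int_{0}^{\infty} t^{\,z-1} e^{-t}\,\mathrm{d}t, \qquad \operatorname{Re}(z) > 0,
\qquad\text{with}\qquad \Gamma(n+1) = n! \quad (n = 0, 1, 2, \dots)
$$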

The ∆ parameter is used to regulate the size of the sample data sets, to get the nearest λ and σ values. The ∆ parameter in the PDA plays the same role that it plays in the NDA method. The PDA model employs a PDA benchmark point selection method [55][56][57].
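The ksdensity step mentioned above for the ND-based method is a form of kernel density estimation (the name matches MATLAB's kernel smoothing density function). The following is a minimal Python sketch of that general technique, assuming a Gaussian kernel and Silverman's rule-of-thumb bandwidth. The function name `gaussian_kde`, the bandwidth rule and the sample values are illustrative assumptions and are not taken from [56]; the NDA set selection and the mr parameter are not modeled.

```python
import math
import statistics


def gaussian_kde(samples, bandwidth=None):
    """Return a function that estimates the probability density of `samples`."""
    n = len(samples)
    if bandwidth is None:
        # Silverman's rule of thumb: an assumed default, not specified by the source.
        bandwidth = 1.06 * statistics.stdev(samples) * n ** (-1 / 5)

    def density(x):
        # Average of Gaussian kernels centered on each sample.
        total = sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )
        return total / (n * bandwidth * math.sqrt(2 * math.pi))

    return density


# Hypothetical amplitudes standing in for one EEG time window.
window = [1.2, 0.8, 1.1, 0.9, 1.0, 1.3, 0.7, 1.05]
pdf = gaussian_kde(window)
```

Calling `pdf(x)` returns the estimated density at `x`; values near the bulk of the window score higher than values far from it, which is the kind of probability distribution the ND-based step relies on.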

- Blockchain technology is an efficient and secure solution for digital worlds such as the Metaverse. In the blockchain model, a new transaction is verified and added to the existing records, i.e., blocks, by linking it to previous transactions through a cryptographic hash operation [58]. Each block contains a cryptographic hash of the previous block, a timestamp, and transaction data [59]. Blockchain technology is secure, decentralized, digitized, collaborative and immutable, and these characteristics make it well suited to digital virtual worlds such as the Metaverse. Currently, the most successful security technology for blockchain employs Public Key Infrastructure (PKI)-based methods [60], and researchers in the field have started to search for more efficient solutions. Future blockchain development for the Metaverse is expected to focus on more autonomous, intelligent and scalable models, such as intelligence-agent-based blockchain [61], Self-Sovereign Identity (SSI) blockchain [62], non-fungible tokens (NFTs) [63] and bio-identity-based blockchain.

- Artificial intelligence (AI) is essential to almost all areas of the modern world, and particularly to future virtual worlds such as the Metaverse. AI can accelerate analytical efficiency, enhance security and privacy, improve interoperability, and provide better solutions for human–machine interaction and collaboration. The growing application of Natural Language Processing (NLP), sentiment analysis and brain informatics technologies to digital worlds is stimulating the development of AI in these areas. The success stories of AI in image recognition, voice recognition, and human–machine interaction and intuition reveal the promising future of AI in the Metaverse and other virtual worlds. A recent survey showed that a majority of studies had focused on exploring efficient integration and collaboration between Edge AI architecture and the Metaverse [64].
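The block structure described in the blockchain item above (a hash of the previous block, a timestamp, and transaction data) can be sketched in a few lines of Python. This is only an illustration of hash linking under simplifying assumptions: there is no consensus, signing, or PKI, and the helper names `block_hash`, `make_block`, `append_block` and `is_valid`, as well as the sample transactions, are hypothetical.

```python
import hashlib
import json
import time


def block_hash(block):
    """Return the SHA-256 hex digest of a block's canonical JSON form."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_block(prev_hash, transactions, timestamp=None):
    """Build a block with the previous block's hash, a timestamp, and transaction data."""
    return {
        "prev_hash": prev_hash,
        "timestamp": time.time() if timestamp is None else timestamp,
        "transactions": transactions,
    }


def append_block(chain, transactions, timestamp=None):
    """Link a new block to the current tip of the chain and return it."""
    # The first block has no predecessor, so a placeholder of zeros is used.
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    block = make_block(prev_hash, transactions, timestamp)
    chain.append(block)
    return block


def is_valid(chain):
    """Check that every block stores the hash of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


chain = []
append_block(chain, ["genesis"], timestamp=0)
append_block(chain, ["alice pays bob 5"], timestamp=1)
append_block(chain, ["bob pays carol 2"], timestamp=2)
```

Because each block commits to the hash of its predecessor, editing the transactions of an earlier block changes that block's hash and makes `is_valid(chain)` fail at the next block, which is a small-scale view of the immutability property listed above.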

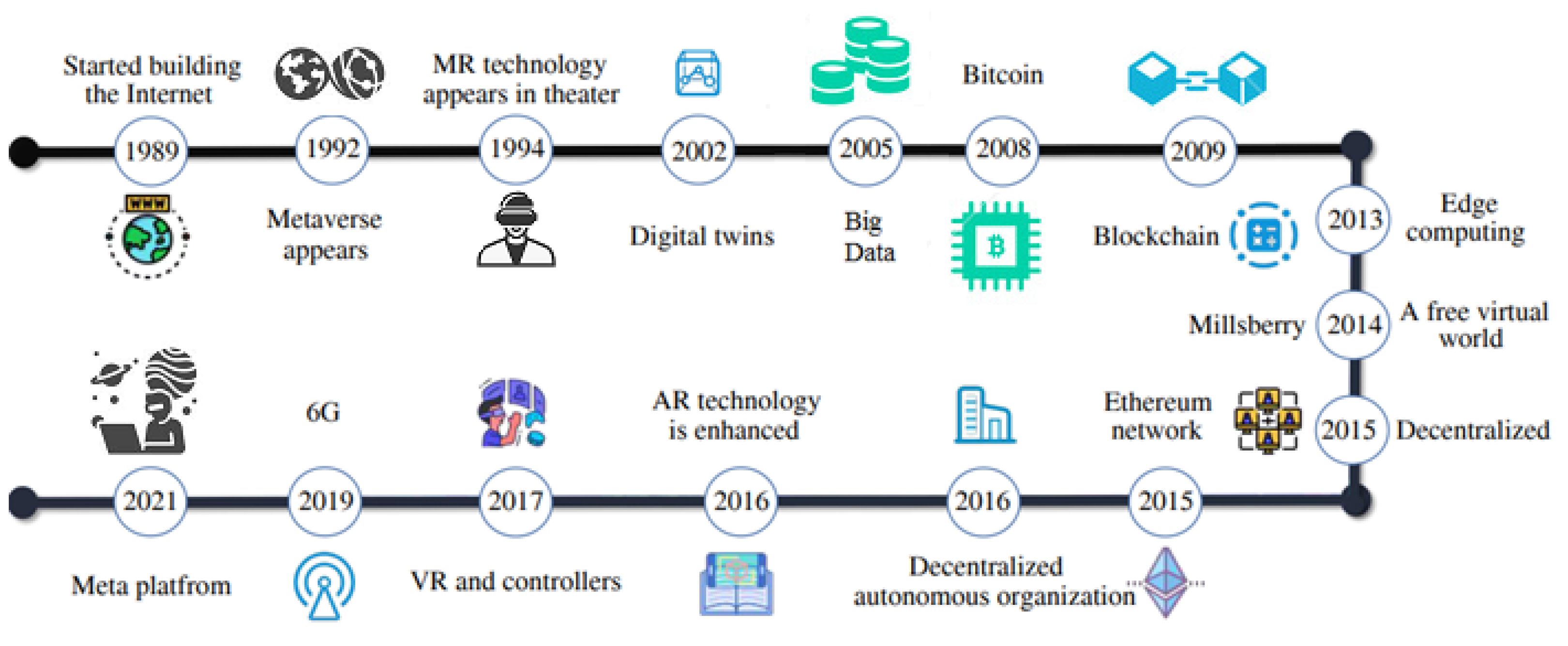

Figure 6 shows how the Metaverse and its related technologies, including big data, have evolved and developed [64].

Figure 6. A chronicle of the Metaverse and its related techniques, modified based on [64].

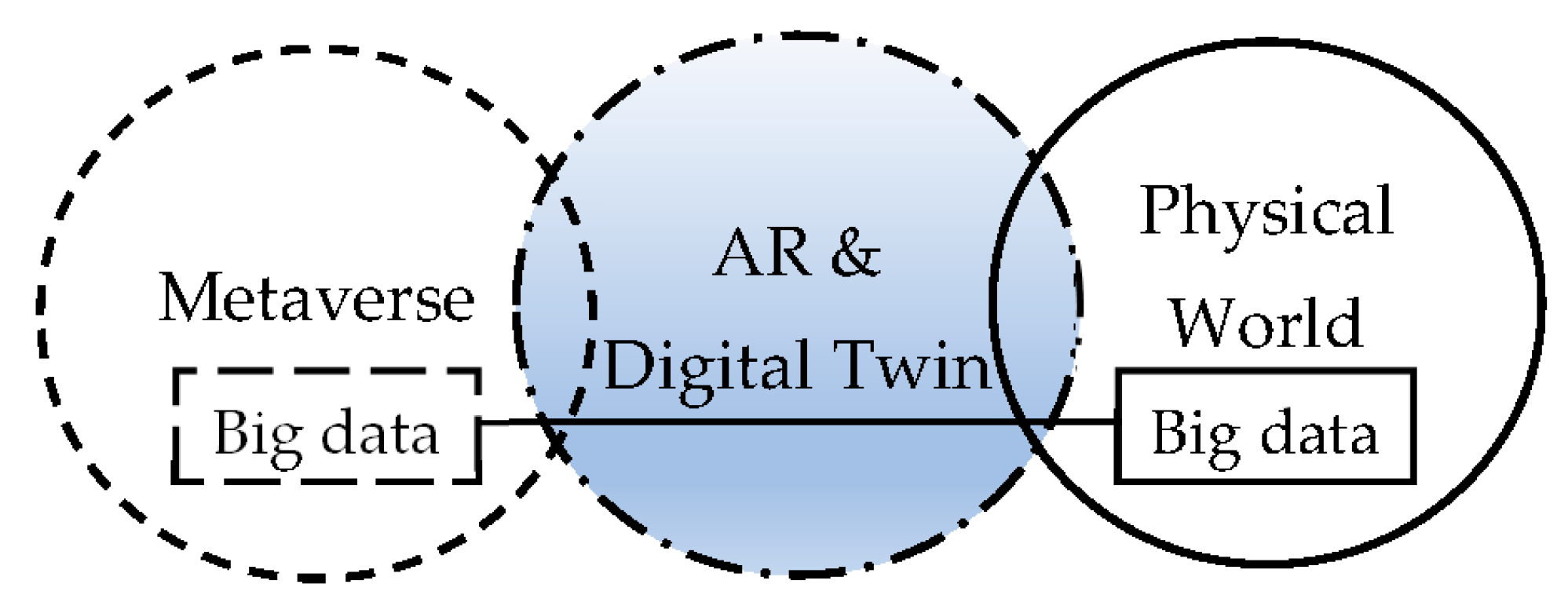

Data sources in the Metaverse and other virtual platforms are growing exponentially. Big data technologies are therefore crucial if the Metaverse is to manage its digital world efficiently and provide users with real-time analytical services, and they are fundamental tools for making virtual platforms such as the Metaverse feasible for users. In other words, big data is a fundamental component of the Metaverse, and the Metaverse in turn accelerates the development of big data technologies. Big data is not only crucial in the virtual world, however: it is also an important component of the real physical world, as evidenced in various areas. Figure 7 shows the relationship between big data and the Metaverse.

Figure 7. Big data is a key component of both the physical world and virtual worlds. The Metaverse is a virtual world parallel to the real physical world; the two are sometimes connected by augmented reality and digital twins.

Definitions of the Metaverse vary across studies; however, many researchers share the view that the Metaverse imitates our physical world. In this work, the researchers believe that future virtual worlds, including the Metaverse, will develop into worlds entirely different from our physical world, going beyond our current social structure and civil life. Table 1 shows example applications of the Metaverse and big data in several key sectors.

Table 1. A brief review of example applications of big data and the Metaverse in major sectors.

| Sectors | Big Data | Metaverse |
|---|---|---|
| Healthcare | | |
| Finance and Economy | | |
| Education | | |
| Entertainment and Social | | |

6. Conclusion and Discussion

The Metaverse and other virtual platforms have grown rapidly in recent years. PwC predicts that VR and AR platforms will boost global GDP by USD 1.5 trillion by 2030 [85]. To date, applications of the Metaverse have included online shopping, virtual social media, video games, virtual tours, and online museums and arts [86][87][88]. Many large technology companies have announced plans to launch Metaverse products, such as Facebook Horizon, Nvidia Omniverse, and Amazon Metaverse. The future trends in technical development in the Metaverse and other VR platforms can be grouped into five main areas: digital human, digital twin, brain–computer interface (BCI), blockchain, and artificial intelligence. Notably, brain–computer interface technologies have become increasingly important to Metaverse development in recent years, as immersive interactions provided by BCI can enhance user experience [89][90][91][92][93].

References

- de Gérase, N. Nicomachi Geraseni Pythagorei Introductionis Arithmeticae Libri II; Bibliotheca Scriptorum Graecorum et Romanorum Teubneriana; Aedibvs B.G. Teubneri: Stuttgart, Germany, 1866; pp. 1–198. Available online: https://openlibrary.org/works/OL3947510W/Nicomachi_Geraseni_Pythagorei_introductionis_arithmeticae_libri_II (accessed on 30 November 2022).

- Rivest, R.L.; Shamir, A.; Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126.

- Kolotouros, N.; Pavlakos, G.; Black, M.; Daniilidis, K. Learning to Reconstruct 3D Human Pose and Shape via Model-Fitting in the Loop. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

- Lin, S.; Yang, L.; Saleemi, I.; Sengupta, S. Robust high-resolution video matting with temporal guidance. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 238–247.

- Bhatia, S.; Sigal, L.; Isard, M.; Black, M. 3D Human Limb Detection using Space Carving and Multi-View Eigen Models. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 17.

- Agarwal, A.; Triggs, B. Recovering 3D Human Pose from Monocular Images. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 44–58.

- Martinez, J.; Hossain, R.; Romero, J.; Little, J.J. A Simple Yet Effective Baseline for 3D Human Pose Estimation. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; pp. 2659–2668.

- Mehta, D.; Sotnychenko, O.; Mueller, F.; Xu, W.; Elgharib, M.; Fua, P.; Seidel, H.P.; Rhodin, H.; Pons-Moll, G.; Theobalt, C. XNect: Real-time multi-person 3D motion capture with a single RGB camera. ACM Trans. Graph. 2020, 39, 82:1–82:17.

- Bogo, F.; Kanazawa, A.; Lassner, C.; Gehler, P.; Romero, J.; Black, M.J. Keep it SMPL: Automatic estimation of 3D human pose and shape from a single image. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 561–578.

- Kanazawa, A.; Black, M.J.; Jacobs, D.W.; Malik, J. End-to-end Recovery of Human Shape and Pose. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), San Juan, PR, USA, 17–19 June 2018; pp. 1–10.

- Pavlakos, G.; Zhu, L.; Zhou, X.; Daniilidis, K. Learning to Estimate 3D Human Pose and Shape from a Single Color Image. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 459–468.

- Pavlakos, G.; Choutas, V.; Ghorbani, N.; Bolkart, T.; Osman, A.A.; Tzionas, D.; Black, M.J. Expressive body capture: 3d hands, face, and body from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10975–10985.

- Choutas, V.; Pavlakos, G.; Bolkart, T.; Tzionas, D.; Black, M.J. Monocular Expressive Body Regression Through Body-Driven Attention. In Proceedings of the European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 20–40.

- Zhang, Y.; Li, Z.; An, L.; Li, M.; Yu, T.; Liu, Y. Lightweight multi-person total motion capture using sparse multi-view cameras. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 5560–5569.

- Zheng, Z.; Yu, T.; Liu, Y.; Dai, Q. Pamir: Parametric model-conditioned implicit representation for image-based human reconstruction. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3170–3184.

- Huang, Z.; Xu, Y.; Lassner, C.; Li, H.; Tung, T. Arch: Animatable reconstruction of clothed humans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3093–3102.

- Ma, Q.; Yang, J.; Tang, S.; Black, M.J. The power of points for modeling humans in clothing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10974–10984.

- Peng, S.; Zhang, Y.; Xu, Y.; Wang, Q.; Shuai, Q.; Bao, H.; Zhou, X. Neural Body: Implicit Neural Representations with Structured Latent Codes for Novel View Synthesis of Dynamic Humans. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9050–9059.

- Mir, A.; Alldieck, T.; Pons-Moll, G. Learning to transfer texture from clothing images to 3d humans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7023–7034.

- Yang, F.; Li, R.; Georgakis, G.; Karanam, S.; Chen, T.; Ling, H.; Wu, Z. Robust multi-modal 3d patient body modeling. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: New York, NY, USA, 2020; pp. 86–95.

- Lee, H.-J.; Chen, Z. Determination of 3D human body postures from a single view. Comput. Vis. Graph. Image Process. 1985, 30, 148–168.

- Nevatia, R.; Binford, T.O. Description and recognition of curved objects. Artif. Intell. 1977, 8, 77–98.

- Ju, S.X.; Black, M.J.; Yacoob, Y. Cardboard people: A parameterized model of articulated image motion. In Proceedings of the Second International Conference on Automatic Face and Gesture Recognition, Killington, VT, USA, 14–16 October 1996; pp. 38–44.

- Wang, M.; Qiu, F.; Liu, W.; Qian, C.; Zhou, X.; Ma, L. Monocular Human Pose and Shape Reconstruction using Part Differentiable Rendering. Comput. Graph. Forum 2020, 39, 351–362.

- Robinette, K.M.; Blackwell, S.; Daanen, H.; Boehmer, M.; Fleming, S. Civilian American and European Surface Anthropometry Resource (CAESAR), Final Report. Volume 1. Summary. 2002. Available online: https://www.humanics-es.com/CAESARvol1.pdf (accessed on 30 November 2022).

- Anguelov, D.; Srinivasan, P.; Koller, D.; Thrun, S.; Rodgers, J.; Davis, J. SCAPE: Shape completion and animation of people. ACM Trans. Graph. 2005, 24, 408–416.

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A Skinned Multi-Person Linear Model. ACM Trans. Graphics 2015, 34, 248.

- Romero, J.; Tzionas, D.; Black, M.J. Embodied hands: Modeling and capturing hands and bodies together. arXiv 2022, arXiv:2201.02610.

- Blanz, V.; Vetter, T. A morphable model for the synthesis of 3D faces. In Siggraph 1999, Computer Graphics Proceedings; Rockwood, A., Ed.; Addison Wesley Longman: Los Angeles, CA, USA, 1999; pp. 187–194.

- Osman, A.A.A.; Bolkart, T.; Black, M.J. STAR: A Sparse Trained Articulated Human Body Regressor. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 598–613.

- Saito, S.; Huang, Z.; Natsume, R.; Morishima, S.; Li, H.; Kanazawa, A. PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2304–2314.

- Saito, S.; Simon, T.; Saragih, J.; Joo, H. PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization. In Proceedings of the 2020 IEEE/CVF Conference on CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 81–90.

- Balan, A.O.; Sigal, L.; Black, M.J.; Davis, J.E.; Haussecker, H.W. Detailed Human Shape and Pose from Images. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Guan, P.; Weiss, A.; Balan, A.O.; Black, M.J. Estimating human shape and pose from a single image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1381–1388.

- Pishchulin, L.; Insafutdinov, E.; Tang, S.; Andres, B.; Andriluka, M.; Gehler, P.V.; Schiele, B. DeepCut: Joint Subset Partition and Labeling for Multi Person Pose Estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 4929–4937.

- Zanfir, A.; Marinoiu, E.; Sminchisescu, C. Monocular 3D Pose and Shape Estimation of Multiple People in Natural Scenes: The Importance of Multiple Scene Constraints. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2148–2157.

- Lassner, C.; Romero, J.; Kiefel, M.; Bogo, F.; Black, M.J.; Gehler, P.V. Unite the people: Closing the loop between 3d and 2d human representations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6050–6059.

- Xiang, D.; Joo, H.; Sheikh, Y. Monocular total capture: Posing face, body, and hands in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Joo, H.; Neverova, N.; Vedaldi, A. Exemplar Fine-Tuning for 3D Human Model Fitting Towards In-the-Wild 3D Human Pose Estimation. In Proceedings of the 2021 International Conference on 3D Vision (3DV), Virtual, 1–3 December 2021; pp. 42–52.

- Song, J.; Chen, X.; Hilliges, O. Human body model fitting by learned gradient descent. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 744–760.

- Iqbal, U.; Xie, K.; Guo, Y.; Kautz, J.; Molchanov, P. KAMA: 3D Keypoint Aware Body Mesh Articulation. In Proceedings of the 2021 International Conference on 3D Vision (3DV), Virtual, 1–3 December 2021; pp. 689–699.

- Li, J.; Xu, C.; Chen, Z.; Bian, S.; Yang, L.; Lu, C. HybrIK: A Hybrid Analytical-Neural Inverse Kinematics Solution for 3D Human Pose and Shape Estimation. In Proceedings of the IEEE/CVF CVPR, Virtual, 20–25 June 2021; pp. 3382–3392.

- Jiang, W.; Kolotouros, N.; Pavlakos, G.; Zhou, X.; Daniilidis, K. Coherent reconstruction of multiple humans from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5579–5588.

- Kolotouros, N.; Pavlakos, G.; Daniilidis, K. Convolutional mesh regression for single-image human shape reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4501–4510.

- Kocabas, M.; Athanasiou, N.; Black, M.J. VIBE: Video Inference for Human Body Pose and Shape Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5252–5262.

- Mahmood, N.; Ghorbani, N.; Troje, N.F.; Pons-Moll, G.; Black, M.J. AMASS: Archive of Motion Capture as Surface Shapes. In Proceedings of the International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 5442–5451.

- Feng, Y.; Choutas, V.; Bolkart, T.; Tzionas, D.; Black, M.J. Collaborative regression of expressive bodies using moderation. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 792–804.

- Zanfir, A.; Bazavan, E.G.; Zanfir, M.; Freeman, W.T.; Sukthankar, R.; Sminchisescu, C. Neural descent for visual 3d human pose and shape. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14484–14493.

- Zhou, Y.; Habermann, M.; Habibie, I.; Tewari, A.; Theobalt, C.; Xu, F. Monocular Real-Time Full Body Capture with Inter-Part Correlations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4811–4822.

- Moon, G.; Lee, K.M. Pose2pose: 3d positional pose-guided 3d rotational pose prediction for expressive 3d human pose and mesh estimation. arXiv 2020, arXiv:2011.11534.

- Wang, Y.; Su, Z.; Zhang, N.; Xing, R.; Liu, D.; Luan, T.H.; Shen, X. A Survey on Metaverse: Fundamentals, Security, and Privacy. In IEEE Communications Surveys & Tutorials 2022; IEEE Press: New York, NY, USA, 2022; pp. 1–32.

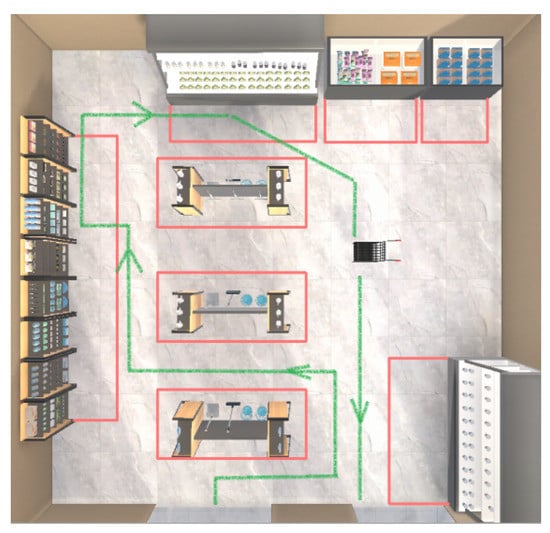

- Huang, J.; Zhang, H.L.; Lu, H.; Xin, Y.; Li, S. A Novel Position-based VR Online Shopping Recommendation System based on Optimized Collaborative Filtering Algorithm. In Proceedings of the Web Intelligence Workshops (WI), Melbourne, VIC, Canada, 14–17 December 2022; pp. 1–7.

- Zheng, Z.; Zhu, J.; Lyu, M.R. Service-Generated Big Data and Big Data-as-a-Service: An Overview. In Proceedings of the 2013 IEEE International Congress on Big Data, Silicon Valley, CA, USA, 6–9 October 2013; pp. 403–410.

- Zhang, H.L.; Liu, J.; Dowens, M.G. Complex brain activity analysis and recognition based on multiagent methods. Concurr. Comput. Pr. Exp. 2020, 34, e5855.

- Zhang, H.; Zhao, Q.; Lee, S.; Dowens, M.G. EEG-Based Driver Drowsiness Detection Using the Dynamic Time Dependency Method. In Proceedings of the Brain Informatics, Haikou, China, 13–15 December 2019; pp. 39–47.

- Zhang, H.L.; Lee, S.; Li, X.; He, J. EEG Self-Adjusting Data Analysis Based on Optimized Sampling for Robot Control. Electronics 2020, 9, 925.

- Xu, H.; Li, Z.; Li, Z.; Zhang, X.; Sun, Y.; Zhang, L. Metaverse Native Communication: A Blockchain and Spectrum Prospective. In Proceedings of the IEEE International Conference on Communications Workshops, Seoul, Korea, 16–20 May 2022; pp. 7–12.

- Narayanan, A.; Bonneau, J.; Felten, E.; Miller, A.; Goldfeder, S. Bitcoin and Cryptocurrency Technologies: A Comprehensive Introduction; Princeton University Press: Princeton, NJ, USA, 2016.

- Talamo, M.; Arcieri, F.; Dimitri, A.; Schunck, H.C. A blockchain based PKI validation system based on rare events management. Future Internet 2020, 12, 40.

- Badruddoja, S.; Dantu, R.; He, Y.; Thompson, M.; Salau, A.; Upadhyay, K. Trusted AI with Blockchain to Empower Metaverse. International Conference on Blockchain Computing and Applications (BCCA); IEEE Press: New York, NY, USA, 2022; pp. 237–245.

- Mühle, A.; Grüner, A.; Gayvoronskaya, T.; Meinel, C. A survey on essential components of a self-sovereign identity. Comput. Sci. Rev. 2018, 30, 80–86.

- Heimes, A.; Zenkert, J.; Fathi, M. Current State and Latest Trends in Blockchain Technology and its Usage and the Effects on Business Use Cases. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 3311–3316.

- Chang, L.; Zhang, Z.; Li, P.; Xi, S.; Guo, W.; Shen, Y.; Xiong, Z.; Kang, J.; Niyato, D.; Qiao, X.; et al. 6G-Enabled Edge AI for Metaverse: Challenges, Methods, and Future Research Directions. J. Commun. Inf. Netw. 2022, 7, 107–121.

- Prayitno; Shyu, C.-R.; Putra, K.T.; Chen, H.-C.; Tsai, Y.-Y.; Hossain, K.S.M.T.; Jiang, W.; Shae, Z.-Y. A Systematic Review of Federated Learning in the Healthcare Area: From the Perspective of Data Properties and Applications. Appl. Sci. 2021, 11, 11191.

- Bhugaonkar, K.; Bhugaonkar, R.; Masne, N. The Trend of Metaverse and Augmented & Virtual Reality Extending to the Healthcare System. Cureus 2022, 14, 29071.

- Han, J.; Pei, J.; Tong, H. Data Mining Concepts and Techniques, 4th ed.; Elsevier: Amsterdam, The Netherlands, 2022.

- Willis, G.; Tranos, E. Using ‘Big Data’ to understand the impacts of Uber on taxis in New York City. Travel Behav. Soc. 2021, 22, 94–107.

- Zhang, H.L.; Zhao, Y.; Pang, C.; He, J. Splitting Large Medical Data Sets Based on Normal Distribution in Cloud Environment. IEEE Trans. Cloud Comput. 2015, 8, 518–531.

- Lyko, K.; Nitzschke, M.; Ngomo, A.N. Big Data Acquisition. In New Horizons for a Data-Driven Economy; Cavanillas, J.M., Ed.; Springer Press: New York, NY, USA, 2016; pp. 39–61.

- Coda, F.A.; Filho, D.J.S.; Junqueira, F.; Miyagi, P.E. Big Data Acquisition Architecture: An Industry 4.0 Approach. In Technological Innovation for Life Improvement; Camarinha-Matos, L., Farhadi, N., Lopes, F., Pereira, H., Eds.; Springer: New York, NY, USA, 2020; pp. 222–229.

- Chang, W.; Boyd, D.; Levin, O. NIST Big Data Interoperability Framework: Volume 6, Reference Architecture; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2019. Available online: https://www.nist.gov/publications/nist-big-data-interoperability-framework-volume-6-reference-architecture?pub_id=918936 (accessed on 19 October 2022).

- Jain, P.; Gyanchandani, M.; Khare, N. Big data privacy: A technological perspective and review. J. Big Data 2016, 3, 25.

- Rafiq, F.; Awan, M.J.; Yasin, A.; Nobanee, H.; Zain, A.M.; Bahaj, S.A. Privacy Prevention of Big Data Applications: A Systematic Literature Review. SAGE Open 2022, 12.

- Sun, H.; Rabbani, M.R.; Sial, M.S.; Yu, S.; Filipe, J.A.; Cherian, J. Identifying Big Data’s Opportunities, Challenges, and Implications in Finance. Mathematics 2020, 8, 1738.

- Musamih, A.; Dirir, A.; Yaqoob, I.; Salah, K.; Jayaraman, R.; Puthal, D. NFTs in Smart Cities: Vision, Applications, and Challenges. IEEE Consum. Electron. Mag. 2022, 1–14.

- Edwards, C. Are NFTs Key to Accessing the Metaverse? Eng. Technol. 2022, 17, 1–8.

- Shabihi, N.; Kim, M.S. Big Data Analytics in Education: A Data-Driven Literature Review. In Proceedings of the International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 12–15 July 2021; pp. 154–156.

- Qureshi, H.; Sagar, A.K.; Astya, R.; Shrivastava, G. Big Data Analytics for Smart Education. In Proceedings of the IEEE 6th International Conference on Computing, Communication and Automation (ICCCA), Arad, Romania, 17–19 December 2021; pp. 650–658.

- Gim, G.; Bae, H.; Kang, S. Metaverse Learning: The Relationship among Quality of VR-Based Education, Self-Determination, and Learner Satisfaction. In Proceedings of the IEEE/ACIS 7th International Conference on Big Data, Cloud Computing, and Data Science, Danang, Vietnam, 4–6 October 2022; pp. 279–284.

- Agrawal, D.; Budak, C.; El Abbadi, A.; Georgiou, T.; Yan, X. Big Data in Online Social Networks: User Interaction Analysis to Model User Behavior in Social Networks. In Proceedings of the Databases in Networked Information Systems, Aizu-Wakamatsu, Japan, 24–26 March 2014; Madaan, A., Kikuchi, S., Bhalla, S., Eds.; Lecture Notes in Computer Science. pp. 1–16.

- Britto, L.F.S.; Pacifico, L.D.S. Evaluating Video Game Acceptance in Game Reviews using Sentiment Analysis Techniques. In Proceedings of the SBGames, Virtual, 7–10 November 2020; pp. 399–402.

- Mirza-Babaei, P.; Robinson, R.; Mandryk, R.; Pirker, J.; Kang, C.; Fletcher, A. Games and the Metaverse. In Proceedings of the Annual Symposium on Computer-Human Interaction in Play, Bremen, Germany, 2–5 November 2022; pp. 318–319.

- Cheng, R.; Wu, N.; Varvello, M.; Chen, S.; Han, B. Are we ready for Metaverse: A measurement study of social virtual reality platforms. In Proceedings of the 22nd ACM Internet Measurement Conference, Nice, France, 25–27 October 2022; pp. 504–518.

- Hobson, D. How Banks Can Make Money in the Metaverse. Future of Finance, 17 June 2022; 1–4.

- Suzuki, S.-N.; Kanematsu, H.; Barry, D.M.; Ogawa, N.; Yajima, K.; Nakahira, K.T.; Shirai, T.; Kawaguchi, M.; Kobayashi, T.; Yoshitake, M. Virtual Experiments in Metaverse and their Applications to Collaborative Projects: The framework and its significance. Procedia Comput. Sci. 2020, 176, 2125–2132.

- Gogolin, G.; Gogolin, E.; Kam, H.-J. Virtual worlds and social media: Security and privacy concerns, implications, and practices. Int. J. Artif. Life Res. 2014, 4, 30–42.

- Falchuk, B.; Loeb, S.; Neff, R. The Social Metaverse: Battle for Privacy. IEEE Technol. Soc. Mag. 2018, 37, 52–61.

- Hu, X.; Liu, Y.; Zhang, H.L.; Wang, W.; Li, Y.; Meng, C.; Fu, Z. Noninvasive Human-Computer Interface Methods and Applications for Robotic Control: Past, Current, and Future. Comput. Intell. Neurosci. 2022, 2022, 1635672.

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain-computer interface paradigms. J. Neural Eng. 2019, 16, 1–21.

- Oudeyer, P.Y.; Gottlieb, J.; Lopes, M. Intrinsic motivation, curiosity, and learning: Theory and applications in educational technologies. Prog. Brain Res. 2016, 229, 257–284.

- Fahimi, F.; Dosen, S.; Ang, K.K.; Mrachacz-Kersting, N.; Guan, C. Generative Adversarial Networks-Based Data Augmentation for Brain–Computer Interface. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4039–4051.

- Chen, X.; Huang, X.; Wang, Y.; Gao, X. Combination of Augmented Reality Based Brain-Computer Interface and Computer Vision for High-Level Control of a Robotic Arm. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 3140–3147.