| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Jason Zhu | -- | 2079 | 2022-11-07 01:42:30 |


The face inversion effect is a phenomenon in which turning a face upside down impairs its recognition far more than turning a non-facial object upside down does. A typical study examining the face inversion effect presents participants with inverted and upright images of an object and times how long it takes them to recognise that object as what it actually is (i.e. a picture of a face as a face). The face inversion effect occurs when, compared to other objects, it takes disproportionately longer to recognise faces when they are inverted than when they are upright.

Faces are normally processed in the special face-selective regions of the brain, such as the fusiform face area. However, processing inverted faces involves both face-selective regions and the scene and object recognition regions of the parahippocampal place area and lateral occipital cortex. There seems to be something different about inverted faces that requires them to also involve these scene and object processing mechanisms.

The most supported explanation for why faces take longer to recognise when they are inverted is the configural information hypothesis. This hypothesis states that faces are processed with the use of configural information to form a holistic (whole) representation of a face. Objects, however, are not processed in this configural way. Instead, they are processed featurally (in parts). Inverting a face disrupts configural processing, forcing the face to be processed featurally like other objects. This causes a delay, since it takes longer to form a representation of a face with only local information.
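The comparison described above can be illustrated with a small calculation. The sketch below is only an assumption about how such a measure might be computed, not the analysis used in any cited study: it treats the effect as the inversion cost for faces (inverted minus upright recognition time) minus the same cost for objects. The function names and the reaction times are hypothetical and invented purely for illustration.

```python
from statistics import mean


def inversion_cost(upright_rts, inverted_rts):
    """Mean slowdown (in ms) caused by inversion for one stimulus category."""
    return mean(inverted_rts) - mean(upright_rts)


def face_inversion_effect(face_upright, face_inverted,
                          object_upright, object_inverted):
    """Inversion cost for faces minus inversion cost for objects.

    A clearly positive value means inversion slows face recognition
    disproportionately, which is the pattern the effect describes.
    """
    face_cost = inversion_cost(face_upright, face_inverted)
    object_cost = inversion_cost(object_upright, object_inverted)
    return face_cost - object_cost


# Hypothetical recognition times in milliseconds (not real data).
face_upright = [500, 520, 510]
face_inverted = [640, 660, 650]
object_upright = [550, 560, 570]
object_inverted = [580, 590, 600]

effect = face_inversion_effect(face_upright, face_inverted,
                               object_upright, object_inverted)
print(f"Disproportionate inversion cost for faces: {effect} ms")
```

Subtracting the object cost matters because inversion may slow recognition of any stimulus slightly; the effect refers only to the extra slowdown that is specific to faces.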

1. Neural Systems of Face Recognition

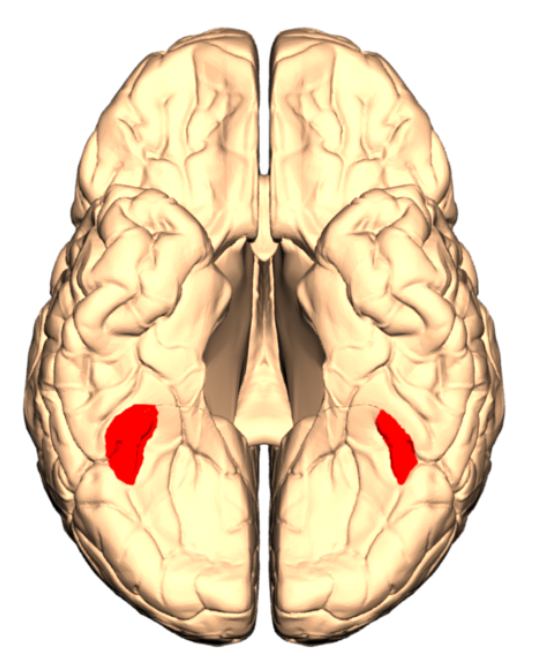

Faces are processed in separate areas of the brain to other stimuli, such as scenes or non-facial objects. For example, the fusiform face area (FFA) is a face-selective region in the brain that is only used for facial processing.[1] The FFA responds more strongly to upright faces than to inverted faces, demonstrating that inverted faces are not detected in the same way as upright faces.[2]

The scene-selective parahippocampal place area (PPA) processes places, or scenes of the visual environment.[3] The object recognition area in the lateral occipital cortex (LOC) is involved in the processing of objects.[4] Together, these regions are used to process inverted, but not upright, faces. This suggests that there is something special about inverted faces, compared to upright faces, that requires them to involve object and scene processing regions.[5]

There is still some activity in face recognition regions when viewing inverted faces.[6] Studies have found that a face-selective region in the brain known as the occipital face area (OFA) is involved in the processing of both upright and inverted faces.[2][5]

Overall, face and object processing mechanisms seem to be separate in the brain. Recognising upright faces involves special facial recognition regions, but recognising inverted faces involves both face and non-facial stimuli recognition regions.

2. Face Vs. Object Recognition Processes

2.1. Face Recognition

Configural information

Configural information, also known as relational information, helps people to quickly recognise faces. It involves the arrangement of facial features, such as the eyes and nose. There are two types of configural information: first-order relational information and second-order relational information.[7]

First-order relational information consists of the spatial relationships between different features of the face. These relationships between facial features are common to most people, for example, having the mouth located under the nose. First-order relational information therefore helps to identify a face as a face and not some other object.[7]

Second-order relational information concerns the size of the spatial relationships between the features of the face, relative to a prototype (a model of what a face should look like). This type of information helps to distinguish one face from another because it differs from face to face.[7]

Holistic processing

The holistic processing of faces describes the perception of faces as wholes, rather than as the sum of their parts. This means that facial features (such as the eyes or nose) are not explicitly represented in the brain on their own; rather, the entire face is represented.[8]

According to the configural information hypothesis of face recognition, recognising faces involves two stages that use configural information to form holistic representations of faces.[9]

A study demonstrated that face-selective activity in the brain was delayed when the configural information of faces was disrupted (for example, when faces were inverted).[10] This means that it took longer for the participants to recognise the faces they were viewing as faces and not other (non-facial) objects. The configural information explanation for facial recognition is therefore supported by the presence of the face inversion effect (a delay when faces are inverted).

Stages of face recognition

The first stage of recognising faces in the configural information hypothesis is first-level information processing. This stage uses first-order relational information to detect a face (i.e. to determine that a face is actually a face and not another object). Building a holistic representation of a face occurs at this early stage of face processing, to allow faces to be detected quickly.[9]

The next stage, second-level information processing, distinguishes one face from another with the use of second-order relational information.[9]

2.2. Object Recognition

An inversion effect does not seem to occur for non-facial objects, suggesting that faces and other objects are not processed in the same way.

Face recognition involves configural information to process faces holistically. However, object recognition does not use configural information to form a holistic representation. Instead, each part of the object is processed independently to allow it to be recognised. This is known as a featural recognition method.[7] Additionally, an explicit representation of each part of the object is made, rather than a representation of the object as a whole.[8]

3. Theories

3.1. Configural Information Hypothesis

According to the configural information hypothesis, the face inversion effect occurs because configural information can no longer be used to build a holistic representation of a face. Inverted faces are instead processed like objects, using local information (i.e. the individual features of the face) instead of configural information.

Inverted faces take longer to process than upright faces. This is because the specific holistic mechanism (see holistic processing) that allows faces to be quickly detected is absent when processing inverted faces. Only local information is available when viewing inverted faces, disrupting this early recognition stage and therefore preventing faces from being detected as quickly. Instead, independent features are put together piece by piece to form a representation of the object (a face) and allow the viewer to recognise what it is.[11]

3.2. Alternate Hypotheses

Although the configural information hypothesis is a popular explanation for the face inversion effect, there have been some challenges to this theory. In particular, it has been suggested that faces and objects are both recognised using featural processing mechanisms, instead of holistic processing for faces and featural processing for objects.[12] On this view, the face inversion effect is not caused by a delay from faces being processed as objects. Instead, another element is involved. Two potential explanations follow.

Perceptual learning

Perceptual learning is a common alternative to the configural information hypothesis as an explanation for the face inversion effect. According to the perceptual learning theory, being presented with a stimulus (for example, faces or cars) more often makes that stimulus easier to recognise in the future.[13]

Most people are highly familiar with viewing upright faces. It follows that highly efficient mechanisms have been able to develop for the quick detection and identification of upright faces.[14] On this account, the face inversion effect is caused by greater experience with perceiving and recognising upright faces than inverted faces.[15]

Face-scheme incompatibility

The face-scheme incompatibility model has been proposed in order to explain some of the missing elements of the configural information hypothesis. According to the model, faces are processed and assigned meaning by the use of schemes and prototypes.[16]

The model defines a scheme as an abstract representation of the general structure of a face, including characteristics common to most faces (i.e. the structure of and relationships between facial features). A prototype refers to an image of what an average face would look like for a particular group (e.g. humans or monkeys). After being recognised as a face with the use of a scheme, new faces are added to a group by being evaluated for their similarity to that group's prototype.[16]

There are different schemes for upright and inverted faces: upright faces are more frequently viewed and thus have more efficient schemes than inverted faces. The face inversion effect is thus partly caused by less efficient schemes for processing the less familiar inverted form of faces.[16] This makes the face-scheme incompatibility model similar to the perceptual learning theory, because both consider the role of experience important in the quick recognition of faces.[15][16]

3.3. Integration of Theories

Instead of just one explanation for the face inversion effect, it is more likely that aspects of different theories apply. For example, faces could be processed with configural information but the role of experience may be important for quickly recognising a particular type of face (i.e. human or dog) by building schemes of this facial type.[12]

4. Development

The ability to quickly detect and recognise faces was important in early human life, and is still useful today. For example, facial expressions can provide various signals important for communication.[17][18] Highly efficient facial recognition mechanisms have therefore developed to support this ability.[13]

As humans get older, they become more familiar with upright human faces and continuously refine the mechanisms used to recognise them.[19] This process allows people to quickly detect faces around them, which helps with social interaction.[17]

By about the first year of life, infants are familiar with faces in their upright form and are thus more prone to experiencing the face inversion effect. As they age, they get better at recognising faces and so the face inversion effect becomes stronger.[19] The increased strength of the face inversion effect over time supports the perceptual learning hypothesis, since more experience with faces results in increased susceptibility to the effect.[15]

The more familiar a particular type of face (e.g. human or dog) is, the more susceptible one is to the face inversion effect for that face. This applies to both humans and other species. For example, older chimpanzees familiar with human faces experienced the face inversion effect when viewing human faces, but the same result did not occur for younger chimpanzees familiar with chimpanzee faces.[20] The face inversion effect was also stronger for dog faces when they were viewed by dog experts.[7] This evidence demonstrates that familiarity with a particular type of face develops over time and appears to be necessary for the face inversion effect to occur.

5. Exceptions

There are a number of conditions that may reduce or even eliminate the face inversion effect. In these conditions, the mechanism used to recognise faces by forming holistic representations is absent or disrupted. This can cause faces to be processed in the same way as other (non-facial) objects.

5.1. Prosopagnosia

Prosopagnosia is a condition marked by an inability to recognise faces.[21] When those with prosopagnosia view faces, the fusiform gyrus (a facial recognition area of the brain) activates differently to how it would in someone without the condition.[22] Additionally, non-facial object recognition areas (such as the ventral occipitotemporal extrastriate cortex) are activated when viewing faces, suggesting that faces and objects are processed similarly.[6]

Individuals with prosopagnosia can be unaffected by, or even benefit from, face inversion in facial recognition tasks.[21][23] Normally, they process upright faces featurally, like objects. Inverted faces are also processed featurally rather than holistically.[24] This demonstrates that there is no difference between the processing of upright and inverted faces, which explains why there is no disproportionate delay in recognising inverted faces.[6]

5.2. Autism Spectrum Disorder

Like those with prosopagnosia, individuals with autism spectrum disorder (ASD) do not use a configural processing mechanism to form a holistic representation of a face.[25] Instead, they tend to process faces with the use of local or featural information.[26] This means that the same featural mechanisms are used for processing upright faces, inverted faces, and objects. Consequently, the face inversion effect is less likely to occur in those with ASD.[27]

However, there is some evidence that the development of a holistic facial recognition mechanism in those with ASD is simply delayed, rather than missing. If so, upright and inverted faces would come to be processed differently, and those with ASD may therefore eventually become susceptible to the face inversion effect.[28]

References

- Kanwisher, Nancy; Yovel, Galit (2006-12-29). "The fusiform face area: a cortical region specialized for the perception of faces". Philosophical Transactions of the Royal Society of London B: Biological Sciences 361 (1476): 2109–2128. doi:10.1098/rstb.2006.1934. PMID 17118927. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=1857737

- Yovel, Galit; Kanwisher, Nancy (2005). "The Neural Basis of the Behavioral Face-Inversion Effect". Current Biology 15 (24): 2256–2262. doi:10.1016/j.cub.2005.10.072. ISSN 0960-9822. PMID 16360687. https://dx.doi.org/10.1016%2Fj.cub.2005.10.072

- Epstein, Russell; Kanwisher, Nancy (1998). "A cortical representation of the local visual environment". Nature 392 (6676): 598–601. doi:10.1038/33402. ISSN 0028-0836. PMID 9560155. https://dx.doi.org/10.1038%2F33402

- Malach, R; Reppas, J B; Benson, R R; Kwong, K K; Jiang, H; Kennedy, W A; Ledden, P J; Brady, T J et al. (1995-08-29). "Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex". Proceedings of the National Academy of Sciences of the United States of America 92 (18): 8135–8139. doi:10.1073/pnas.92.18.8135. ISSN 0027-8424. PMID 7667258. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=41110

- Pitcher, David; Duchaine, Bradley; Walsh, Vincent; Yovel, Galit; Kanwisher, Nancy (2011). "The role of lateral occipital face and object areas in the face inversion effect". Neuropsychologia 49 (12): 3448–3453. doi:10.1016/j.neuropsychologia.2011.08.020. ISSN 0028-3932. PMID 21896279. https://dx.doi.org/10.1016%2Fj.neuropsychologia.2011.08.020

- Haxby, James V; Ungerleider, Leslie G; Clark, Vincent P; Schouten, Jennifer L; Hoffman, Elizabeth A; Martin, Alex (1999). "The Effect of Face Inversion on Activity in Human Neural Systems for Face and Object Perception". Neuron 22 (1): 189–199. doi:10.1016/s0896-6273(00)80690-x. ISSN 0896-6273. PMID 10027301. https://dx.doi.org/10.1016%2Fs0896-6273%2800%2980690-x

- Civile, Ciro; McLaren, Rossy; McLaren, Ian P. L. (2016). "The Face Inversion Effect: Roles of First and Second-Order Configural Information". The American Journal of Psychology 129 (1): 23–35. doi:10.5406/amerjpsyc.129.1.0023. PMID 27029104. https://dx.doi.org/10.5406%2Famerjpsyc.129.1.0023

- Tanaka, James W.; Farah, Martha J. (1993). "Parts and wholes in face recognition". The Quarterly Journal of Experimental Psychology 46 (2): 225–245. doi:10.1080/14640749308401045. PMID 8316637. https://dx.doi.org/10.1080%2F14640749308401045

- Taubert, Jessica; Apthorp, Deborah; Aagten-Murphy, David; Alais, David (2011). "The role of holistic processing in face perception: Evidence from the face inversion effect". Vision Research 51 (11): 1273–1278. doi:10.1016/j.visres.2011.04.002. ISSN 0042-6989. PMID 21496463. https://dx.doi.org/10.1016%2Fj.visres.2011.04.002

- Rossion, B (1999-07-01). "Spatio-temporal localization of the face inversion effect: an event-related potentials study". Biological Psychology 50 (3): 173–189. doi:10.1016/S0301-0511(99)00013-7. ISSN 0301-0511. PMID 10461804. https://dx.doi.org/10.1016%2FS0301-0511%2899%2900013-7

- Freire, Alejo; Lee, Kang; Symons, Lawrence A (2000). "The Face-Inversion Effect as a Deficit in the Encoding of Configural Information: Direct Evidence". Perception 29 (2): 159–170. doi:10.1068/p3012. ISSN 0301-0066. PMID 10820599. https://dx.doi.org/10.1068%2Fp3012

- Matsuyoshi, Daisuke; Morita, Tomoyo; Kochiyama, Takanori; Tanabe, Hiroki C.; Sadato, Norihiro; Kakigi, Ryusuke (2015-03-11). "Dissociable Cortical Pathways for Qualitative and Quantitative Mechanisms in the Face Inversion Effect". Journal of Neuroscience 35 (10): 4268–4279. doi:10.1523/JNEUROSCI.3960-14.2015. ISSN 0270-6474. PMID 25762673. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=6605288

- Wallis, Guy (2013). "Toward a unified model of face and object recognition in the human visual system" (in English). Frontiers in Psychology 4: 497. doi:10.3389/fpsyg.2013.00497. ISSN 1664-1078. PMID 23966963. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=3744012

- Gold, J.; Bennett, P. J.; Sekuler, A. B. (1999). "Signal but not noise changes with perceptual learning". Nature 402 (6758): 176–178. doi:10.1038/46027. ISSN 0028-0836. PMID 10647007. https://dx.doi.org/10.1038%2F46027

- Sekuler, Allison B; Gaspar, Carl M; Gold, Jason M; Bennett, Patrick J (2004). "Inversion Leads to Quantitative, Not Qualitative, Changes in Face Processing". Current Biology 14 (5): 391–396. doi:10.1016/j.cub.2004.02.028. ISSN 0960-9822. PMID 15028214. https://dx.doi.org/10.1016%2Fj.cub.2004.02.028

- Rakover, Sam S. (2013-08-01). "Explaining the face-inversion effect: the face–scheme incompatibility (FSI) model". Psychonomic Bulletin & Review 20 (4): 665–692. doi:10.3758/s13423-013-0388-1. ISSN 1069-9384. PMID 23381811. https://dx.doi.org/10.3758%2Fs13423-013-0388-1

- Schmidt, Karen L.; Cohn, Jeffrey F. (2001). "Human Facial Expressions as Adaptations: Evolutionary Questions in Facial Expression Research". American Journal of Physical Anthropology Suppl 33: 3–24. doi:10.1002/ajpa.20001. ISSN 0002-9483. PMID 11786989. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=2238342

- Goldman, Alvin I.; Sripada, Chandra Sekhar (2005). "Simulationist models of face-based emotion recognition". Cognition 94 (3): 193–213. doi:10.1016/j.cognition.2004.01.005. ISSN 0010-0277. PMID 15617671. https://dx.doi.org/10.1016%2Fj.cognition.2004.01.005

- Passarotti, A.M.; Smith, J.; DeLano, M.; Huang, J. (2007). "Developmental differences in the neural bases of the face inversion effect show progressive tuning of face-selective regions to the upright orientation". NeuroImage 34 (4): 1708–1722. doi:10.1016/j.neuroimage.2006.07.045. ISSN 1053-8119. PMID 17188904. https://dx.doi.org/10.1016%2Fj.neuroimage.2006.07.045

- Dahl, Christoph D.; Rasch, Malte J.; Tomonaga, Masaki; Adachi, Ikuma (2013-08-27). "The face inversion effect in non-human primates revisited - an investigation in chimpanzees (Pan troglodytes)". Scientific Reports 3 (1): 2504. doi:10.1038/srep02504. ISSN 2045-2322. PMID 23978930. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=3753590

- Busigny, Thomas; Rossion, Bruno (2010). "Acquired prosopagnosia abolishes the face inversion effect". Cortex 46 (8): 965–981. doi:10.1016/j.cortex.2009.07.004. ISSN 0010-9452. PMID 19683710. https://dx.doi.org/10.1016%2Fj.cortex.2009.07.004

- Grüter, Thomas; Grüter, Martina; Carbon, Claus-Christian (2008). "Neural and genetic foundations of face recognition and prosopagnosia". Journal of Neuropsychology 2 (1): 79–97. doi:10.1348/174866407x231001. ISSN 1748-6645. PMID 19334306. https://dx.doi.org/10.1348%2F174866407x231001

- Farah, Martha J; Wilson, Kevin D; Maxwell Drain, H; Tanaka, James R (1995-07-01). "The inverted face inversion effect in prosopagnosia: Evidence for mandatory, face-specific perceptual mechanisms". Vision Research 35 (14): 2089–2093. doi:10.1016/0042-6989(94)00273-O. ISSN 0042-6989. PMID 7660612. https://dx.doi.org/10.1016%2F0042-6989%2894%2900273-O

- Avidan, Galia; Tanzer, Michal; Behrmann, Marlene (2011). "Impaired holistic processing in congenital prosopagnosia". Neuropsychologia 49 (9): 2541–2552. doi:10.1016/j.neuropsychologia.2011.05.002. PMID 21601583. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=3137703

- van der Geest, J.N.; Kemner, C.; Verbaten, M.N.; van Engeland, H. (2002). "Gaze behavior of children with pervasive developmental disorder toward human faces: a fixation time study". Journal of Child Psychology and Psychiatry 43 (5): 669–678. doi:10.1111/1469-7610.00055. ISSN 0021-9630. https://dx.doi.org/10.1111%2F1469-7610.00055

- Behrmann, Marlene; Avidan, Galia; Leonard, Grace Lee; Kimchi, Rutie; Luna, Beatriz; Humphreys, Kate; Minshew, Nancy (2006). "Configural processing in autism and its relationship to face processing". Neuropsychologia 44 (1): 110–129. doi:10.1016/j.neuropsychologia.2005.04.002. PMID 15907952. http://repository.cmu.edu/cgi/viewcontent.cgi?article=1114&context=psychology

- Falck-Ytter, Terje (2008). "Face inversion effects in autism: a combined looking time and pupillometric study". Autism Research 1 (5): 297–306. doi:10.1002/aur.45. PMID 19360681. https://dx.doi.org/10.1002%2Faur.45

- Hedley, Darren; Brewer, Neil; Young, Robyn (2014-10-31). "The Effect of Inversion on Face Recognition in Adults with Autism Spectrum Disorder". Journal of Autism and Developmental Disorders 45 (5): 1368–1379. doi:10.1007/s10803-014-2297-1. ISSN 0162-3257. PMID 25358250. https://dx.doi.org/10.1007%2Fs10803-014-2297-1