| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | NURSYAZYLA SULAIMAN | + 2237 word(s) | 2237 | 2022-03-08 09:54:09 |
| 2 | | Peter Tang | Meta information modification | 2237 | 2022-03-18 03:31:47 |


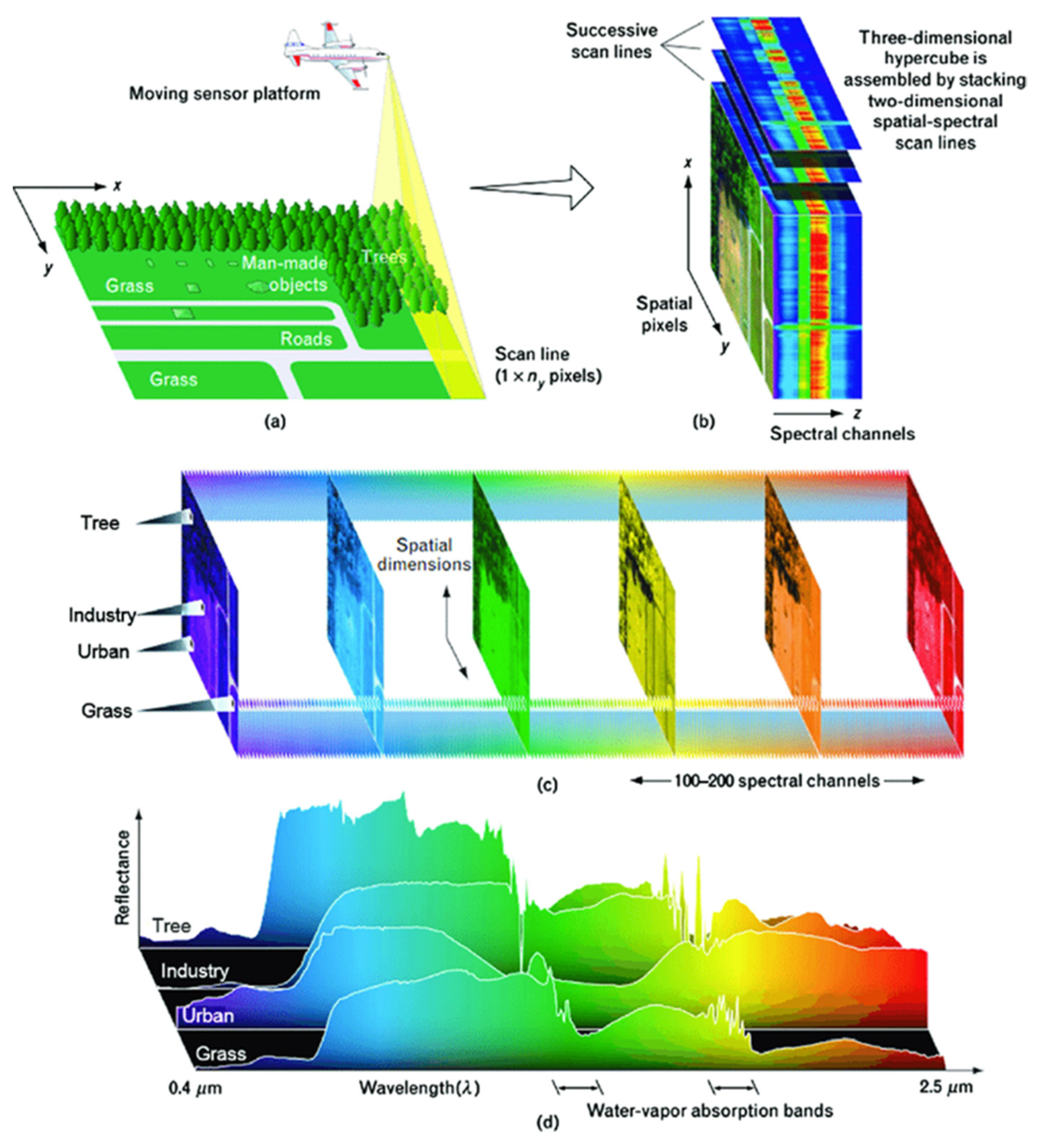

Hyperspectral imaging combines a modern imaging system with traditional spectroscopy. Unmanned aerial vehicle (UAV) hyperspectral imaging has recently emerged as a valuable tool in agricultural remote sensing, with considerable promise for applications such as weed detection and species separation.

1. Hyperspectral Remote Sensing: A Brief Overview

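| Parameters | Satellites | Airplanes | Helicopters | Fixed-Wing UAVs | Multi-Rotor UAVs | Close-Range Platforms |
|---|---|---|---|---|---|---|
| Operational altitude | 400–700 km | 1–20 km | 100 m–2 km | <150 m | <150 m | <10 m |
| Spatial coverage | 42 km × 7.7 km | ~100 km² | ~10 km² | ~5 km² | ~0.5 km² | ~0.005 km² |
| Spatial resolution | 20–60 m | 1–20 m | 0.1–1 m | 0.01–0.5 m | 0.01–0.5 m | 0.0001–0.01 m |
| Temporal resolution | Days to weeks | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) |
| Flexibility | Low (fixed revisit cycles) | Medium (depends on availability of an aviation company) | Medium (depends on availability of an aviation company) | High | High | High |
| Operational complexity | Low (final data provided to users) | Medium (depends on users or vendors) | Medium (depends on users or vendors) | High (operated by users, who set up the hardware and software) | High (operated by users, who set up the hardware and software) | High (operated by users, who set up the hardware and software) |
| Applicable scales | Regional–global | Landscape–regional | Landscape–regional | Canopy–landscape | Canopy–landscape | Leaf–canopy |
| Major limiting factors | Weather | Unfavourable flight height/speed, unstable illumination conditions | Unfavourable flight height/speed, unstable illumination conditions | Short battery endurance, flight regulations | Short battery endurance, flight regulations | Platform design and operation |
| Image acquisition cost | Low to medium | High (typically requires hiring an aviation company to fly) | High (typically requires hiring an aviation company to fly) | Large (due to area coverage) | Large (due to area coverage) | Large (due to area coverage) |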
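The altitude and spatial-resolution rows above are linked by the ground sampling distance (GSD) of a nadir-looking camera, GSD = H · p / f, where H is the flying height, p the detector pixel pitch and f the focal length; the across-track swath is roughly the number of pixels per line times the GSD. The Python sketch below evaluates both under a simple pinhole-camera assumption. The 7.4 μm pixel pitch, 17 mm lens and 640-pixel line used in the example are hypothetical values chosen for illustration, not specifications of any sensor discussed here.

```python
def ground_sampling_distance(altitude_m: float, pixel_pitch_um: float,
                             focal_length_mm: float) -> float:
    """Ground footprint (m) of one detector pixel for a nadir pinhole camera."""
    if min(altitude_m, pixel_pitch_um, focal_length_mm) <= 0:
        raise ValueError("altitude, pixel pitch and focal length must be positive")
    # Convert pitch (um) and focal length (mm) to metres; GSD grows linearly with height.
    return altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)


def swath_width(altitude_m: float, pixels_across: int, pixel_pitch_um: float,
                focal_length_mm: float) -> float:
    """Approximate across-track swath (m) of a line of `pixels_across` pixels (small-angle)."""
    return pixels_across * ground_sampling_distance(altitude_m, pixel_pitch_um, focal_length_mm)


# Hypothetical camera: 7.4 um pixels, 17 mm lens, 640 pixels per line.
for height in (50, 100, 150):  # UAV flying heights at or below the ~150 m ceiling in the table
    gsd = ground_sampling_distance(height, 7.4, 17)
    swath = swath_width(height, 640, 7.4, 17)
    print(f"{height:>4} m: GSD {gsd * 100:.1f} cm, swath {swath:.1f} m")
```

Because GSD scales linearly with H, low-flying UAVs reach centimetre-scale pixels, whereas platforms hundreds of kilometres up deliver pixels tens of metres across even with much longer focal lengths.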

| Sensor | Producer | Number of Bands | Spectral Range (μm) |
|---|---|---|---|
| Satellite-mounted hyperspectral sensors | | | |
| FTHSI on MightySat II | Air Force Research Laboratory (OH, USA) | 256 | 0.35–1.05 |
| Hyperion on EO- | NASA Goddard Space Flight Center (Greenbelt, MD, USA) | 242 | 0.40–2.50 |
| Aircraft-mounted hyperspectral sensors | | | |
| AVIRIS (Airborne Visible/Infrared Imaging Spectrometer) | NASA Jet Propulsion Laboratory (Pasadena, CA, USA) | 224 | 0.40–2.50 |
| HYDICE (Hyperspectral Digital Imagery Collection Experiment) | Naval Research Laboratory (Washington, DC, USA) | 210 | 0.40–2.50 |
| PROBE-1 | Earth Search Sciences Inc. (Kalispell, MT, USA) | 128 | 0.40–2.50 |
| CASI (Compact Airborne Spectrographic Imager) | ITRES Research Limited (Calgary, AB, Canada) | Over 22 | 0.40–1.00 |
| HyMap | Integrated Spectronics | 100 to 200 | Visible to thermal infrared |
| EPS-H (Environmental Protection System) | GER Corporation | VIS/NIR (76), SWIR1 (32), SWIR2 (32), TIR (12) | VIS/NIR (0.43–1.05), SWIR1 (1.50–1.80), SWIR2 (2.00–2.50), TIR (8.00–12.50) |
| DAIS 7915 (Digital Airborne Imaging Spectrometer) | GER Corporation (Geophysical and Environmental Research) | VIS/NIR (32), SWIR1 (8), SWIR2 (32), MIR (1), TIR (6) | VIS/NIR (0.43–1.05), SWIR1 (1.50–1.80), SWIR2 (2.00–2.50), MIR (3.00–5.00), TIR (8.70–12.30) |
| DAIS 21115 (Digital Airborne Imaging Spectrometer) | GER Corporation | VIS/NIR (76), SWIR1 (64), SWIR2 (64), MIR (1), TIR (6) | VIS/NIR (0.40–1.00), SWIR1 (1.00–1.80), SWIR2 (2.00–2.50), MIR (3.00–5.00), TIR (8.00–12.00) |
| AISA (Airborne Imaging Spectrometer) | Spectral Imaging | Over 288 | 0.43–1.00 |
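Dividing a sensor's spectral range by its number of bands gives a rough average band spacing, Δλ ≈ (λmax − λmin)/N, which makes the differences in spectral sampling between instruments easier to compare. The Python sketch below applies this to four single-range rows of the sensor table; it assumes evenly spaced, non-overlapping bands, which real instruments only approximate, so the results are indicative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    bands: int
    start_um: float  # lower edge of the spectral range (micrometres)
    end_um: float    # upper edge of the spectral range (micrometres)

    def mean_band_spacing_nm(self) -> float:
        """Average spectral sampling interval (nm), assuming evenly spaced bands."""
        if self.bands <= 0 or self.end_um <= self.start_um:
            raise ValueError(f"invalid band count or range for {self.name}")
        return (self.end_um - self.start_um) * 1000.0 / self.bands


# Band counts and spectral ranges as listed in the sensor table.
SENSORS = [
    Sensor("FTHSI", 256, 0.35, 1.05),
    Sensor("AVIRIS", 224, 0.40, 2.50),
    Sensor("HYDICE", 210, 0.40, 2.50),
    Sensor("PROBE-1", 128, 0.40, 2.50),
]

for s in SENSORS:
    print(f"{s.name:<8} ~{s.mean_band_spacing_nm():.1f} nm per band")
```

On this crude measure AVIRIS and HYDICE sample roughly every 10 nm, PROBE-1 about every 16 nm, and FTHSI, which covers only the visible and near-infrared, under every 3 nm.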

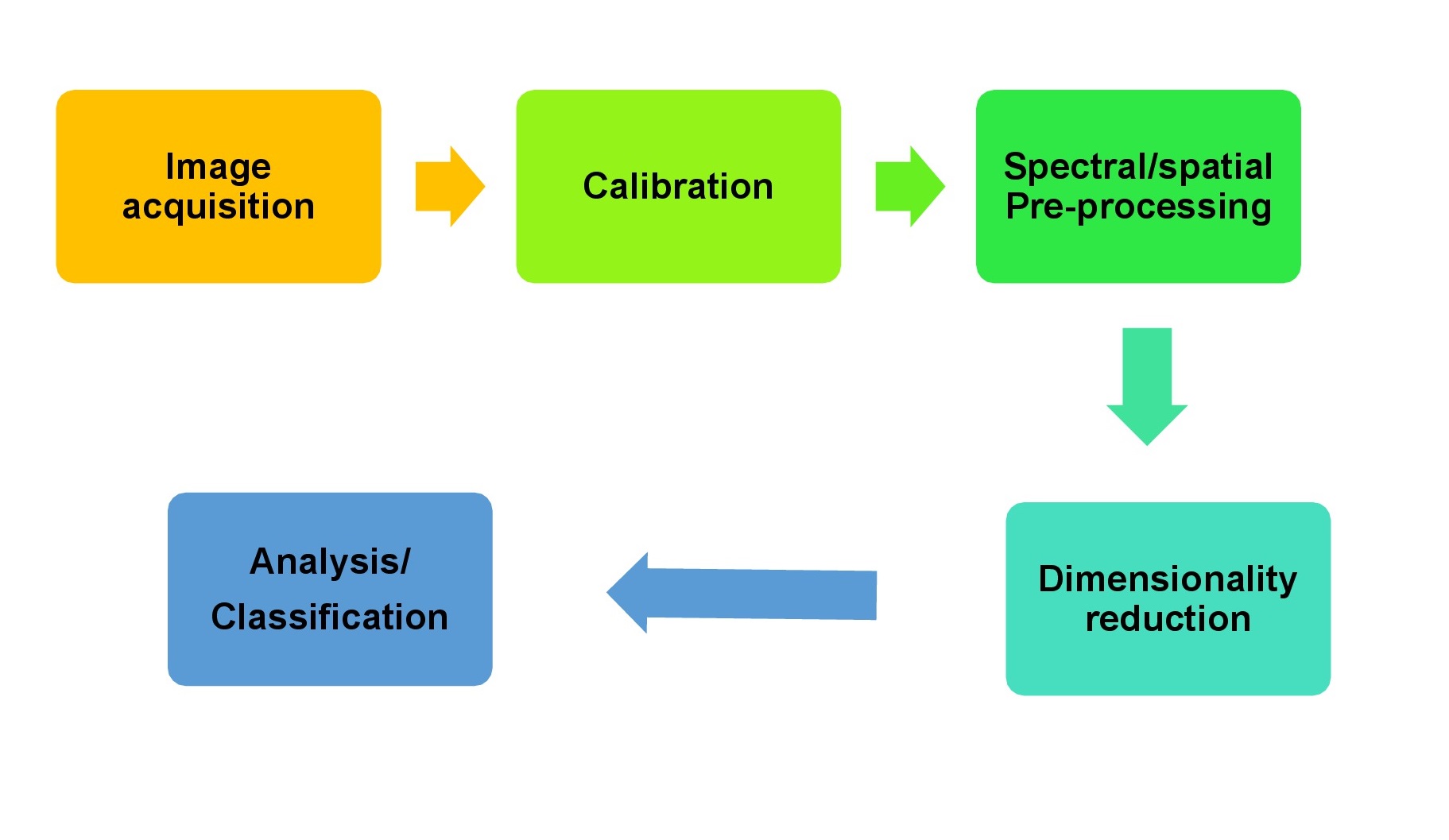

2. Hyperspectral Remote Sensing Imagery (HRSI) Data Processing and Analysis

2.1. Data Preprocessing
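A standard first preprocessing step is radiometric calibration of the raw image I to relative reflectance, using a dark-current image D (shutter closed) and a white-reference image W of a calibration panel recorded under the same illumination: R = ρ_panel · (I − D) / (W − D), applied independently to every pixel and band, as treated in calibration work such as Burger and Geladi in the reference list. The Python sketch below implements that formula on nested lists indexed [row][column][band]. The function name, the cube layout and the panel_reflectance parameter (the panel's nominal reflectance, 1.0 for an ideal white reference) are illustrative assumptions, not the interface of any particular software package.

```python
import math
from typing import List

Cube = List[List[List[float]]]  # indexed [row][column][band]


def to_reflectance(raw: Cube, white: Cube, dark: Cube,
                   panel_reflectance: float = 1.0, eps: float = 1e-9) -> Cube:
    """Flat-field calibration: R = panel_reflectance * (raw - dark) / (white - dark)."""
    if not (len(raw) == len(white) == len(dark)):
        raise ValueError("raw, white and dark cubes must have the same number of rows")
    cube: Cube = []
    for raw_row, white_row, dark_row in zip(raw, white, dark):
        if not (len(raw_row) == len(white_row) == len(dark_row)):
            raise ValueError("row lengths differ between raw, white and dark cubes")
        out_row = []
        for raw_px, white_px, dark_px in zip(raw_row, white_row, dark_row):
            if not (len(raw_px) == len(white_px) == len(dark_px)):
                raise ValueError("band counts differ between raw, white and dark cubes")
            spectrum = []
            for counts, w, d in zip(raw_px, white_px, dark_px):
                span = w - d
                # A detector element with no white/dark contrast (dead or saturated) cannot be calibrated.
                spectrum.append(panel_reflectance * (counts - d) / span if abs(span) > eps else math.nan)
            out_row.append(spectrum)
        cube.append(out_row)
    return cube


# One pixel, two bands: raw counts, white-panel counts and dark-current counts.
print(to_reflectance([[[600, 1200]]], [[[1100, 2100]]], [[[100, 100]]]))  # [[[0.5, 0.55]]]
```

In practice the white and dark frames are often averaged over many scan lines before use, to reduce noise in the reference spectra.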

2.2. Hyperspectral Image Classification

| No. | Crop | Weed | Model | Optimal Accuracy | Reference |
|---|---|---|---|---|---|
| 1 | Rice | Barnyard grass, weedy rice | RF, SVM | 100% | Zhang et al. (2019) |
| 2 | Maize | Caltrop, curly dock, barnyard grass, *Ipomoea* spp., *Polymeria* spp. | SVM, LDA | >98.35% | Wendel et al. (2016) |
| 3 | Soybean, cotton | Ryegrass | LDA | >90% | Huang et al. (2016) |
| 4 | Wheat | Broadleaf weeds, grass weeds | PLSDA | 85% | Hermann et al. (2013) |
| 5 | Broad bean, wheat | Cruciferous weeds | ANN | 100% | De Castro et al. (2012) |
| 6 | Sugar beet | Wild buckwheat, field horsetail, green foxtail, chickweed | LDA | 97.3% | Okamoto et al. (2007) |
| 7 | Wheat | Musk thistle | SVM | 91% | Mirik et al. (2013) |
| 8 | Maize | *C. arvensis* | RF | >90% | Gao et al. (2018) |

RF: random forest; SVM: support vector machine; LDA: linear discriminant analysis; ANN: artificial neural network; PLSDA: partial least squares discriminant analysis.
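Most of the studies in the table label individual pixel spectra as crop or weed. As a rough illustration of the LDA entries, the Python sketch below fits a two-class Fisher linear discriminant, w = S_w⁻¹(m₁ − m₀), where m₀ and m₁ are the class mean spectra and S_w is the pooled within-class scatter matrix. A pixel is assigned to the weed class when its projection w·x exceeds the projection of the midpoint between the class means (equal priors assumed), and overall accuracy is the fraction of correctly labelled pixels. The two-band (red, NIR) reflectance values are invented toy numbers, not data from any cited study, and the small Gaussian-elimination solver stands in for a linear-algebra library so the example runs with the standard library alone.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def mean(rows: Sequence[Sequence[float]]) -> Vector:
    """Per-band mean of a list of spectra."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def solve(a: List[Vector], b: Vector) -> Vector:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-15:
            raise ValueError("scatter matrix is singular; increase the ridge term")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_fisher_lda(class0: Sequence[Sequence[float]], class1: Sequence[Sequence[float]],
                   ridge: float = 1e-6) -> Tuple[Vector, float]:
    """Return (w, threshold) of a two-class Fisher discriminant with equal priors."""
    m0, m1 = mean(class0), mean(class1)
    d = len(m0)
    sw = [[0.0] * d for _ in range(d)]  # pooled within-class scatter S_w
    for rows, mu in ((class0, m0), (class1, m1)):
        for x in rows:
            diff = [xi - mi for xi, mi in zip(x, mu)]
            for i in range(d):
                for j in range(d):
                    sw[i][j] += diff[i] * diff[j]
    for i in range(d):
        sw[i][i] += ridge  # small ridge keeps S_w invertible for strongly correlated bands
    w = solve(sw, [b - a for a, b in zip(m0, m1)])
    threshold = sum(wi * (a + b) / 2 for wi, a, b in zip(w, m0, m1))
    return w, threshold


def predict(w: Vector, threshold: float, spectrum: Sequence[float]) -> int:
    """1 (weed) if the projected spectrum lies on the class-1 side of the threshold, else 0 (crop)."""
    return int(sum(wi * xi for wi, xi in zip(w, spectrum)) > threshold)


def overall_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy (red, NIR) reflectance spectra, invented for illustration only.
crop = [[0.05, 0.45], [0.06, 0.48], [0.05, 0.42], [0.04, 0.45]]
weed = [[0.08, 0.37], [0.09, 0.40], [0.08, 0.34], [0.07, 0.37]]

w, threshold = fit_fisher_lda(crop, weed)
labels = [0] * len(crop) + [1] * len(weed)
predictions = [predict(w, threshold, s) for s in crop + weed]
print(f"w = {w}, overall accuracy on training pixels = {overall_accuracy(labels, predictions):.0%}")
```

Accuracy measured on the training pixels, as printed here, is optimistic; a held-out validation set or cross-validation is needed for a realistic estimate. With many bands, a tested library implementation such as scikit-learn's `LinearDiscriminantAnalysis` would normally replace the hand-written solver.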

References

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Campbell, J.B.; Wynne, R.H. Introduction to Remote Sensing; Guilford Press: New York, NY, USA, 2011.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.M.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Camps-Valls, G.; Tuia, D.; Gómez-Chova, L.; Jiménez, S.; Malo, J. Remote sensing image processing. Synth. Lect. Image Video Multimed. Process. 2011, 5, 1–192.

- Qian, S.-E. Optical Satellite Signal Processing and Enhancement; SPIE Press: Bellingham, WA, USA; Cardiff, Wales, 2013.

- Qian, S.E. Hyperspectral Satellites and System Design; CRC Press: Boca Raton, FL, USA, 2020.

- Govender, M.; Chetty, K.; Naiken, V.; Bulcock, H. A comparison of satellite hyperspectral and multispectral remote sensing imagery for improved classification and mapping of vegetation. Water SA 2008, 34, 147.

- Wendel, A. Hyperspectral Imaging from Ground Based Mobile Platforms and Applications in Precision Agriculture; School of Aerospace, Mechanical and Mechatronic Engineering, The University of Sydney: Sydney, Australia, 2018.

- Chen, Y.; Guerschman, J.; Cheng, Z.; Guo, L. Remote sensing for vegetation monitoring in carbon capture storage regions: A review. Appl. Energy 2019, 240, 312–326.

- Shaw, G.A.; Burke, H.K. Spectral imaging for remote sensing. Linc. Lab. J. 2003, 14, 3–28.

- Lu, B.; Dao, P.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- He, K.S.; Rocchini, D.; Neteler, M.; Nagendra, H. Benefits of hyperspectral remote sensing for tracking plant invasions. Divers. Distrib. 2011, 17, 381–392.

- Vorovencii, I. The hyperspectral sensors used in satellite and aerial remote sensing. Bull. Transilv. Univ. Brasov. For. Wood Ind. Agric. Food Engineering. Ser. II 2009, 2, 51.

- Lv, W.; Wang, X. Overview of Hyperspectral Image Classification. J. Sens. 2020, 2020, 4817234.

- Rosle, R.; Che’Ya, N.N.; Ang, Y.; Rahmat, F.; Wayayok, A.; Berahim, Z.; Omar, M.H. Weed Detection in Rice Fields Using Remote Sensing Technique: A Review. Appl. Sci. 2021, 11, 10701.

- Chang, C.I. Hyperspectral Data Processing: Algorithm Design and Analysis; Wiley-Interscience: Hoboken, NJ, USA, 2013.

- Park, B.; Lu, R. (Eds.) Hyperspectral Imaging Technology in Food and Agriculture; Springer: New York, NY, USA, 2015.

- Vidal, M.; Amigo, J.M. Pre-processing of hyperspectral images. Essential steps before image analysis. Chemom. Intell. Lab. Syst. 2012, 117, 138–148.

- Karim, R.S.; Man, A.B.; Sahid, I.B. Weed problems and their management in rice fields of Malaysia: An overview. Weed Biol. Manag. 2004, 4, 177–186.

- Burger, J.; Geladi, P. Hyperspectral NIR image regression part I: Calibration and correction. J. Chemom. 2005, 19, 355–363.

- Singh, M.; Nagargade, M.; Tyagi, V. Ecologically sustainable integrated weed management in dry and irrigated direct-seeded rice. Adv. Plants Agric. Res. 2018, 8, 319–331.

- Ahmad, M.; Shabbir, S.; Raza, R.A.; Mazzara, M.; Distefano, S.; Khan, A.M. Hyperspectral Image Classification: Artifacts of Dimension Reduction on Hybrid CNN. arXiv 2021, arXiv:2101.10532, preprint.

- Basantia, N.C.; Nollet, L.M.; Kamruzzaman, M. (Eds.) Hyperspectral Imaging Analysis and Applications for Food Quality; CRC Press: Boca Raton, FL, USA, 2018.

- Tamilarasi, R.; Prabu, S. Application of Machine Learning Techniques for Hyperspectral Image Dimensionality: A Review. J. Crit. Rev. 2020, 7, 3499–3516.

- Sawant, S.S.; Prabukumar, M. Semi-supervised techniques based hyper-spectral image classification: A survey. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–8.

- Freitas, S.; Almeida, C.; Silva, H.; Almeida, J.; Silva, E. Supervised classification for hyperspectral imaging in UAV maritime target detection. In Proceedings of the 2018 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Torres Vedras, Portugal, 25–27 April 2018; pp. 84–90.

- Romaszewski, M.; Głomb, P.; Cholewa, M. Semi-supervised hyperspectral classification from a small number of training samples using a co-training approach. ISPRS J. Photogramm. Remote Sens. 2016, 121, 60–76.

- Su, W.H. Advanced Machine Learning in Point Spectroscopy, RGB- and Hyperspectral-Imaging for Automatic Discriminations of Crops and Weeds: A Review. Smart Cities 2020, 3, 767–792.