| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Vlad Ungureanu | + 2557 word(s) | 2557 | 2021-05-22 06:45:26 | |
| 2 | | Lily Guo | Meta information modification | 2557 | 2021-05-28 06:02:25 | |


In mobile systems, fog, rain, snow, haze, and sun glare are natural phenomena that can be very dangerous for drivers. Besides coping with reduced visibility, the driver must also choose an appropriate speed. The main effects of fog are a loss of contrast and a fading of color. Rain and snow also disturb the driver considerably, while glare from the sun or from other traffic participants can be very dangerous even when it lasts only a short time. In the field of autonomous vehicles, visibility is of the utmost importance. Researchers have proposed a variety of solutions and methods to address this problem. This entry focuses on what the scientific literature has presented on these concerns over the past ten years.

1. Introduction

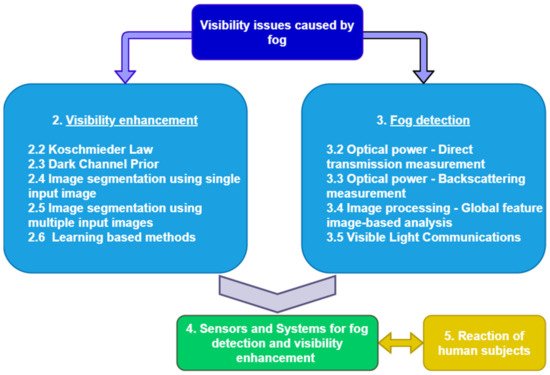

2. Visibility Enhancement Methods

3. Fog Detection and Visibility Estimation Methods

4. Sensors and Systems for Fog Detection and Visibility Enhancement

5. Conclusions

| Methods | | Evaluation Criteria | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | Computation Complexity | Availability on Vehicles | Data Processing Speed | Day/Night Use | Real-Time Use | Result Distribution | Reliable | Link to Visual Accuracy |
| Image dehazing | Koschmieder’s law [28][29][30][31][32][33][34][35][36] | Medium/High | Partial (camera) | Medium | Daytime only | Yes | Local for 1 user | No (not for all inputs) | Yes |
| | Dark channel prior [24][37][38][39][40][41][42][43][44][45][46][20][47][48][49][50][51][52] | High | Partial (camera) | Medium | Daytime only | Yes | Local for 1 user | No (not for all inputs) | Yes |
| | Dark channel prior integrated in SIDE [53] | High | Partial (camera) | Medium | Both | Yes | Local for 1 user | Yes | Yes |
| | Image segmentation using single input image [54][55][56][57] | High | Partial (camera) | Low | Daytime only | No | Local for 1 user | No | Yes |
| | Image segmentation using multiple input images [58][59][60] | High | Partial (camera) | Medium | Daytime only | Yes (notify drivers) | Local for many users (highways) | No (not for all cases) | Yes |
| | Learning-based methods I [61][62][63][64] | High | Partial (camera) | Medium | Daytime only | No | Local for many users (highways) | Depends on the training data | No |
| | Learning-based methods II [65] | High | No | Medium | Daytime only | No | Large area | Depends on the training data | Yes |
| | Learning-based methods III [66][67] | High | Partial (camera) | Medium | Daytime only | No | Local for 1 user | Depends on the training data | Yes |
| | Learning-based methods IV [68] | High | Partial (camera + extra hardware) | High | Daytime only | Yes | Local for 1 user | Depends on the training data | Yes |
| | Learning-based methods V [69] | High | Partial (camera) | High | Both | Yes | Local for 1 user | Depends on the training data | Yes |

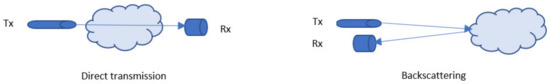

| Methods | | Evaluation Criteria | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | Computation Complexity | Availability on Vehicles | Data Processing Speed | Day/Night Use | Real-Time Use | Result Distribution | Reliable | Link to Visual Accuracy |
| Fog detection and visibility estimation | Direct transmission measurement [8][70][71][72] | Low | No | High | Both | Yes | Local for many users (highways) | Yes | No (still to be proven) |
| | Backscattering measurement I [9][10][11][12][73][74] | Low | Partial (LIDAR) | High | Both | Yes | Local for 1 or many users | Yes | No (still to be proven) |
| | Backscattering measurement II [75] | Medium | No | Medium | Both | Yes | Local for 1 or many users | No | Yes |
| | Global feature image-based analysis [76][77][78][79][80][81][82][83][84][85][86] | Medium | Partial (camera) | Low | Both | No | Local for 1 user | No | Yes |
| Sensors and Systems | Camera + LIDAR [12] | High | Partial (high-end vehicles) | High | Both | Yes | Local for 1 or many users | Yes | Yes |
| | Learning-based methods + LIDAR [87] | High | Partial (LIDAR) | Medium | Both | Yes | Local for 1 user | Depends on the training data | Yes |
| | Radar [81] | Medium | Partial (high-end vehicles) | High | Both | Yes | Local for 1 or many users | No (needs to be proven in complex scenarios) | Yes |
| | Highway static system (laser) [88] | Medium | No (static system) | Medium | Both | Yes | Local (can be extended to a larger area) | Yes | No (still to be proven) |
| | Motion detection static system [89] | Medium | No (static system) | Medium | Day | Yes | Local for 1 or many users | No (not for all cases) | Yes |
| | Camera-based static system [90][91][92] | High | No (static system) | Medium | Both | Yes | Local for 1 or many users | Depends on the training data | Yes |
| | Satellite-based system I [93] | High | No (satellite-based system) | Medium | Night | Yes | Large area | Yes | Yes |
| | Satellite-based system II [94] | High | No (satellite-based system) | Medium | Both | Yes | Large area | Yes | Yes |
| | Wireless sensor network [95] | High | No (static system) | Medium | Both | Yes | Large area | No (not tested in real conditions) | No |
| | Visibility meter (camera) [70][71] | Medium | - | Medium | Daytime only | No | Local for many users (highways) | No (not tested in real conditions) | No |
| | Fog sensor (LWC, particle surface, visibility) [72] | Medium | No (PVM-100) | Medium | Both | - | Local for many users (highways) | No (error rate ~20%) | No |
| | Fog sensor (density, temperature, humidity) [9][73] | Medium | No | Low | Both | No | Local for many users (highways) | No | No |
| | Fog sensor (particle size, laser and camera) [96][97] | High | Partial (high-end vehicles) | High | Daytime only | No | Local for many users (highways) | No | No |
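
As a rough illustration of the optical model behind the image-dehazing rows above, the sketch below applies Koschmieder's law, `I = J*t + A*(1 - t)` with `t = exp(-beta*d)`, to a single grey-level value. It shows why fog lowers contrast, how a known transmission can be inverted to restore the scene value, and how an extinction coefficient maps to a meteorological visibility distance under the common 5% contrast threshold. The function names, the `t_min` floor, and the sample values are illustrative assumptions and are not taken from the surveyed papers; methods such as the dark channel prior differ mainly in how they estimate `t` and `A` from the image.

```python
import math

# Contrast threshold commonly used to define meteorological visibility (5%).
CONTRAST_THRESHOLD = 0.05


def transmission(beta, distance_m):
    """Fraction of scene radiance that survives the path: t = exp(-beta * d)."""
    return math.exp(-beta * distance_m)


def hazy_intensity(scene, airlight, t):
    """Koschmieder's law: observed value = attenuated scene + scattered airlight."""
    return scene * t + airlight * (1.0 - t)


def restore_intensity(hazy, airlight, t, t_min=0.1):
    """Invert Koschmieder's law; t_min (an assumed floor) avoids dividing by ~0."""
    return (hazy - airlight) / max(t, t_min) + airlight


def contrast_against_sky(intensity, airlight):
    """Contrast of an object seen against the horizon sky (the airlight)."""
    return (intensity - airlight) / airlight


def visibility_distance(beta):
    """Distance at which a black object's contrast falls to the 5% threshold."""
    return -math.log(CONTRAST_THRESHOLD) / beta


if __name__ == "__main__":
    beta = 0.03      # assumed extinction coefficient, in 1/m
    airlight = 1.0   # assumed normalized sky intensity
    scene = 0.2      # assumed dark object, normalized intensity
    t = transmission(beta, 50.0)
    hazy = hazy_intensity(scene, airlight, t)
    print("transmission at 50 m:", round(t, 3))
    print("contrast without fog:", contrast_against_sky(scene, airlight))
    print("contrast through fog:", round(contrast_against_sky(hazy, airlight), 3))
    print("restored intensity:", round(restore_intensity(hazy, airlight, t), 3))
    print("visibility distance (m):", round(visibility_distance(beta), 1))
```

Under this model the apparent contrast is the intrinsic contrast multiplied by `t`, so it decays exponentially with distance; that decay is the loss of contrast attributed to fog in the summary at the top of this entry.
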

References

- U.S. Department of Transportation. Traffic Safety Facts—Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey; National Highway Traffic Safety Administration (NHTSA): Washington, DC, USA, 2015.

- OSRAM Automotive. Available online: (accessed on 30 June 2020).

- The Car Connection. 12 October 2018. Available online: (accessed on 30 June 2020).

- Aubert, D.; Boucher, V.; Bremond, R.; Charbonnier, P.; Cord, A.; Dumont, E.; Foucher, P.; Fournela, F.; Greffier, F.; Gruyer, D.; et al. Digital Imaging for Assessing and Improving Highway Visibility; Transport Research Arena: Paris, France, 2014.

- Rajagopalan, A.N.; Chellappa, R. (Eds.) Motion Deblurring Algorithms and Systems; Cambridge University Press: Cambridge, UK, 2014.

- Palvanov, A.; Giyenko, A.; Cho, Y.I. Development of Visibility Expectation System Based on Machine Learning. In Computer Information Systems and Industrial Management; Springer: Berlin/Heidelberg, Germany, 2018; pp. 140–153.

- Yang, L.; Muresan, R.; Al-Dweik, A.; Hadjileontiadis, L.J. Image-Based Visibility Estimation Algorithm for Intelligent Transportation Systems. IEEE Access 2018, 6, 76728–76740.

- Ioan, S.; Razvan-Catalin, M.; Florin, A. System for Visibility Distance Estimation in Fog Conditions based on Light Sources and Visual Acuity. In Proceedings of the 2016 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 19–21 May 2016.

- Ovseník, Ľ.; Turán, J.; Mišenčík, P.; Bitó, J.; Csurgai-Horváth, L. Fog density measuring system. Acta Electrotech. Inf. 2012, 12, 67–71.

- Gruyer, D.; Cord, A.; Belaroussi, R. Vehicle detection and tracking by collaborative fusion between laser scanner and camera. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 5207–5214.

- Gruyer, D.; Cord, A.; Belaroussi, R. Target-to-track collaborative association combining a laser scanner and a camera. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), The Hague, The Netherlands, 6–9 October 2013.

- Dannheim, C.; Icking, C.; Mäder, M.; Sallis, P. Weather Detection in Vehicles by Means of Camera and LIDAR Systems. In Proceedings of the 2014 Sixth International Conference on Computational Intelligence, Communication Systems and Networks, Tetova, Macedonia, 27–29 May 2014.

- Chaurasia, S.; Gohil, B.S. Detection of Day Time Fog over India Using INSAT-3D Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4524–4530.

- Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.Z.; Langer, D.; Pink, O.; Pratt, V.; et al. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 163–168.

- Jegham, I.; Khalifa, A.B. Pedestrian Detection in Poor Weather Conditions Using Moving Camera. In Proceedings of the IEEE/ACS 14th International Conference on Computer Systems and Applications (AICCSA), Hammamet, Tunisia, 30 October–3 November 2017.

- Dai, X.; Yuan, X.; Zhang, J.; Zhang, L. Improving the performance of vehicle detection system in bad weathers. In Proceedings of the 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Xi’an, China, 3–5 October 2016.

- Miclea, R.-C.; Silea, I.; Sandru, F. Digital Sunshade Using Head-up Display. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2017; Volume 633, pp. 3–11.

- Tarel, J.-P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 27 September–4 October 2009; pp. 2201–2208.

- Narasimhan, S.G.; Nayar, S.K. Contrast restoration of weather degraded images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 713–724.

- Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

- Middleton, W.E.K.; Twersky, V. Vision through the Atmosphere. Phys. Today 1954, 7, 21.

- McCartney, E.J.; Hall, F.F. Optics of the Atmosphere: Scattering by Molecules and Particles. Phys. Today 1977, 30, 76–77.

- Martínez-Domingo, M.Á.; Valero, E.M.; Nieves, J.L.; Molina-Fuentes, P.J.; Romero, J.; Hernández-Andrés, J. Single Image Dehazing Algorithm Analysis with Hyperspectral Images in the Visible Range. Sensors 2020, 20, 6690.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An End-to-End System for Single Image Haze Removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Berman, D.; Treibitz, T.; Avidan, S. Non-local Image Dehazing. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

- Hautière, N.; Tarel, J.P.; Aubert, D. Towards Fog-Free In-Vehicle Vision Systems through Contrast Restoration. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 18–23 June 2007.

- Tarel, J.-P.; Hautiere, N.; Caraffa, L.; Cord, A.; Halmaoui, H.; Gruyer, D. Vision Enhancement in Homogeneous and Heterogeneous Fog. IEEE Intell. Transp. Syst. Mag. 2012, 4, 6–20.

- Hautière, N.; Tarel, J.P.; Halmaoui, H.; Brémond, R.; Aubert, D. Enhanced fog detection and free-space segmentation for car navigation. Mach. Vis. Appl. 2014, 25, 667–679.

- Negru, M.; Nedevschi, S. Image based fog detection and visibility estimation for driving assistance systems. In Proceedings of the 2013 IEEE 9th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 5–7 September 2013; pp. 163–168.

- Negru, M.; Nedevschi, S. Assisting Navigation in Homogenous Fog. In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014.

- Negru, M.; Nedevschi, S.; Peter, R.I. Exponential Contrast Restoration in Fog Conditions for Driving Assistance. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2257–2268.

- Abbaspour, M.J.; Yazdi, M.; Masnadi-Shirazi, M. A new fast method for foggy image enhancement. In Proceedings of the 2016 24th Iranian Conference on Electrical Engineering (ICEE), Shiraz, Iran, 10–12 May 2016.

- Liao, Y.Y.; Tai, S.C.; Lin, J.S.; Liu, P.J. Degradation of turbid images based on the adaptive logarithmic algorithm. Comput. Math. Appl. 2012, 64, 1259–1269.

- Halmaoui, H.; Joulan, K.; Hautière, N.; Cord, A.; Brémond, R. Quantitative model of the driver’s reaction time during daytime fog—Application to a head up display-based advanced driver assistance system. IET Intell. Transp. Syst. 2015, 9, 375–381.

- Yeh, C.H.; Kang, L.W.; Lin, C.Y.; Lin, C.Y. Efficient image/video dehazing through haze density analysis based on pixel-based dark channel prior. In Proceedings of the 2012 International Conference on Information Security and Intelligent Control, Yunlin, Taiwan, 14–16 August 2012.

- Yeh, C.H.; Kang, L.W.; Lee, M.S.; Lin, C.Y. Haze Effect Removal from Image via Haze Density estimation in Optical Model. Opt. Express 2013, 21, 27127–27141.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9.

- Huang, S.-C.; Chen, B.-H.; Wang, W.-J. Visibility Restoration of Single Hazy Images Captured in Real-World Weather Conditions. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 1814–1824.

- Wang, Z.; Feng, Y. Fast single haze image enhancement. Comput. Electr. Eng. 2014, 40, 785–795.

- Zhang, Y.-Q.; Ding, Y.; Xiao, J.-S.; Liu, J.; Guo, Z. Visibility enhancement using an image filtering approach. EURASIP J. Adv. Signal Process. 2012, 2012, 220.

- Tarel, J.-P.; Hautiere, N.; Cord, A.; Gruyer, D.; Halmaoui, H. Improved visibility of road scene images under heterogeneous fog. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010.

- Wang, R.; Yang, X. A fast method of foggy image enhancement. In Proceedings of the 2012 International Conference on Measurement, Information and Control, Harbin, China, 18–20 May 2012.

- Kim, J.-H.; Jang, W.-D.; Sim, J.-Y.; Kim, C.-S. Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 2013, 24, 410–425.

- Peli, E. Contrast in complex images. J. Opt. Soc. Am. A 1990, 7, 2032–2040.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice-Hall: Hoboken, NJ, USA, 2007.

- Su, C.; Wang, W.; Zhang, X.; Jin, L. Dehazing with Offset Correction and a Weighted Residual Map. Electronics 2020, 9, 1419.

- Wu, X.; Wang, K.; Li, Y.; Liu, K.; Huang, B. Accelerating Haze Removal Algorithm Using CUDA. Remote Sens. 2020, 13, 85.

- Ngo, D.; Lee, S.; Nguyen, Q.H.; Ngo, T.M.; Lee, G.D.; Kang, B. Single Image Haze Removal from Image Enhancement Perspective for Real-Time Vision-Based Systems. Sensors 2020, 20, 5170.

- He, R.; Guo, X.; Shi, Z. SIDE—A Unified Framework for Simultaneously Dehazing and Enhancement of Nighttime Hazy Images. Sensors 2020, 20, 5300.

- Zhu, Q.; Mai, J.; Song, Z.; Wu, D.; Wang, J.; Wang, L. Mean shift-based single image dehazing with re-refined transmission map. In Proceedings of the 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC), San Diego, CA, USA, 5–8 October 2014.

- Das, D.; Roy, K.; Basak, S.; Chaudhury, S.S. Visibility Enhancement in a Foggy Road Along with Road Boundary Detection. In Proceedings of the Blockchain Technology and Innovations in Business Processes, New Delhi, India, 8 October 2015; pp. 125–135.

- Yuan, H.; Liu, C.; Guo, Z.; Sun, Z. A Region-Wised Medium Transmission Based Image Dehazing Method. IEEE Access 2017, 5, 1735–1742.

- Zhu, Y.-B.; Liu, J.-M.; Hao, Y.-G. An single image dehazing algorithm using sky detection and segmentation. In Proceedings of the 2014 7th International Congress on Image and Signal Processing, Hainan, China, 20–23 December 2014; pp. 248–252.

- Gangodkar, D.; Kumar, P.; Mittal, A. Robust Segmentation of Moving Vehicles under Complex Outdoor Conditions. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1738–1752.

- Yuan, Z.; Xie, X.; Hu, J.; Zhang, Y.; Yao, D. An Effective Method for Fog-degraded Traffic Image Enhancement. In Proceedings of the 2014 IEEE International Conference on Service Operations and Logistics, and Informatics, Qingdao, China, 8–10 October 2014.

- Wu, B.-F.; Juang, J.-H. Adaptive Vehicle Detector Approach for Complex Environments. IEEE Trans. Intell. Transp. Syst. 2012, 13, 817–827.

- Cireşan, D.; Meier, U.; Masci, J.; Schmidhuber, J. Multi-column deep neural network for traffic sign classification. Neural Netw. 2012, 32, 333–338.

- Hussain, F.; Jeong, J. Visibility Enhancement of Scene Images Degraded by Foggy Weather Conditions with Deep Neural Networks. J. Sens. 2015, 2016, 1–9.

- Singh, G.; Singh, A. Object Detection in Fog Degraded Images. Int. J. Comput. Sci. Inf. Secur. 2018, 16, 174–182.

- Cho, Y.I.; Palvanov, A. A New Machine Learning Algorithm for Weather Visibility and Food Recognition. J. Robot. Netw. Artif. Life 2019, 6, 12.

- Hu, A.; Xie, Z.; Xu, Y.; Xie, M.; Wu, L.; Qiu, Q. Unsupervised Haze Removal for High-Resolution Optical Remote-Sensing Images Based on Improved Generative Adversarial Networks. Remote Sens. 2020, 12, 4162.

- Ha, E.; Shin, J.; Paik, J. Gated Dehazing Network via Least Square Adversarial Learning. Sensors 2020, 20, 6311.

- Chen, J.; Wu, C.; Chen, H.; Cheng, P. Unsupervised Dark-Channel Attention-Guided CycleGAN for Single-Image Dehazing. Sensors 2020, 20, 6000.

- Ngo, D.; Lee, S.; Lee, G.-D.; Kang, B.; Ngo, D. Single-Image Visibility Restoration: A Machine Learning Approach and Its 4K-Capable Hardware Accelerator. Sensors 2020, 20, 5795.

- Feng, M.; Yu, T.; Jing, M.; Yang, G. Learning a Convolutional Autoencoder for Nighttime Image Dehazing. Information 2020, 11, 424.

- Pesek, J.; Fiser, O. Automatically low clouds or fog detection, based on two visibility meters and FSO. In Proceedings of the 2013 Conference on Microwave Techniques (COMITE), Pardubice, Czech Republic, 17–18 April 2013; pp. 83–85.

- Brazda, V.; Fiser, O.; Rejfek, L. Development of system for measuring visibility along the free space optical link using digital camera. In Proceedings of the 2014 24th International Conference Radioelektronika, Bratislava, Slovakia, 15–16 April 2014; pp. 1–4.

- Brazda, V.; Fiser, O. Estimation of fog drop size distribution based on meteorological measurement. In Proceedings of the 2015 Conference on Microwave Techniques (COMITE), Pardubice, Czech Republic, 23–24 April 2008; pp. 1–4.

- Ovseník, Ľ.; Turán, J.; Tatarko, M.; Turan, M.; Vásárhelyi, J. Fog sensor system: Design and measurement. In Proceedings of the 13th International Carpathian Control Conference (ICCC), High Tatras, Slovakia, 28–31 May 2012; pp. 529–532.

- Sallis, P.; Dannheim, C.; Icking, C.; Maeder, M. Air Pollution and Fog Detection through Vehicular Sensors. In Proceedings of the 2014 8th Asia Modelling Symposium, Taipei, Taiwan, 23–25 September 2014; pp. 181–186.

- Kim, Y.-H.; Moon, S.-H.; Yoon, Y. Detection of Precipitation and Fog Using Machine Learning on Backscatter Data from Lidar Ceilometer. Appl. Sci. 2020, 10, 6452.

- Pavlic, M.; Belzner, H.; Rigoll, G.; Ilic, S. Image based fog detection in vehicles. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Madrid, Spain, 3–7 June 2012; pp. 1132–1137.

- Pavlic, M.; Rigoll, G.; Ilic, S. Classification of images in fog and fog-free scenes for use in vehicles. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast City, Australia, 23–26 June 2013.

- Spinneker, R.; Koch, C.; Park, S.B.; Yoon, J.J. Fast Fog Detection for Camera Based Advanced Driver Assistance Systems. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), The Hague, The Netherlands, 24–26 September 2014.

- Asery, R.; Sunkaria, R.K.; Sharma, L.D.; Kumar, A. Fog detection using GLCM based features and SVM. In Proceedings of the 2016 Conference on Advances in Signal Processing (CASP), Pune, India, 9–11 June 2016; pp. 72–76.

- Zhang, D.; Sullivan, T.; O’Connor, N.E.; Gillespie, R.; Regan, F. Coastal fog detection using visual sensing. In Proceedings of the OCEANS 2015, Genova, Italy, 18–21 May 2015.

- Alami, S.; Ezzine, A.; Elhassouni, F. Local Fog Detection Based on Saturation and RGB-Correlation. In Proceedings of the 2016 13th International Conference on Computer Graphics, Imaging and Visualization (CGiV), Beni Mellal, Morocco, 29 March–1 April 2016; pp. 1–5.

- Gallen, R.; Cord, A.; Hautière, N.; Aubert, D. Method and Device for Detecting Fog at Night. Versailles. France Patent WO 2 012 042 171 A2, 5 April 2012.

- Gallen, R.; Cord, A.; Hautière, N.; Dumont, É.; Aubert, D. Night time visibility analysis and estimation method in the presence of dense fog. IEEE Trans. Intell. Transp. Syst. 2015, 16, 310–320.

- Pagani, G.A.; Noteboom, J.W.; Wauben, W. Deep Neural Network Approach for Automatic Fog Detection. In Proceedings of the CIMO TECO, Amsterdam, The Netherlands, 8–16 October 2018.

- Li, S.; Fu, H.; Lo, W.-L. Meteorological Visibility Evaluation on Webcam Weather Image Using Deep Learning Features. Int. J. Comput. Theory Eng. 2017, 9, 455–461.

- Chaabani, H.; Kamoun, F.; Bargaoui, H.; Outay, F.; Yasar, A.-U.-H. A Neural network approach to visibility range estimation under foggy weather conditions. Procedia Comput. Sci. 2017, 113, 466–471.

- Liang, X.; Huang, Z.; Lu, L.; Tao, Z.; Yang, B.; Li, Y. Deep Learning Method on Target Echo Signal Recognition for Obscurant Penetrating Lidar Detection in Degraded Visual Environments. Sensors 2020, 20, 3424.

- Miclea, R.-C.; Silea, I. Visibility Detection in Foggy Environment. In Proceedings of the 2015 20th International Conference on Control Systems and Computer Science, Bucharest, Romania, 27–29 May 2015; pp. 959–964.

- Kumar, T.S.; Pavya, S. Segmentation of visual images under complex outdoor conditions. In Proceedings of the 2014 International Conference on Communication and Signal Processing, Chennai, India, 3–5 April 2014; pp. 100–104.

- Han, Y.; Hu, D. Multispectral Fusion Approach for Traffic Target Detection in Bad Weather. Algorithms 2020, 13, 271.

- Ibrahim, M.R.; Haworth, J.; Cheng, T. WeatherNet: Recognising Weather and Visual Conditions from Street-Level Images Using Deep Residual Learning. ISPRS Int. J. Geo-Inf. 2019, 8, 549.

- Qin, H.; Qin, H. Image-Based Dedicated Methods of Night Traffic Visibility Estimation. Appl. Sci. 2020, 10, 440.

- Weston, M.; Temimi, M. Application of a Nighttime Fog Detection Method Using SEVIRI Over an Arid Environment. Remote Sens. 2020, 12, 2281.

- Han, J.-H.; Suh, M.-S.; Yu, H.-Y.; Roh, N.-Y. Development of Fog Detection Algorithm Using GK2A/AMI and Ground Data. Remote Sens. 2020, 12, 3181.

- Li, L.; Zhang, H.; Zhao, C.; Ding, X. Radiation fog detection and warning system of highway based on wireless sensor networks. In Proceedings of the 2014 IEEE 7th Joint International Information Technology and Artificial Intelligence Conference, Chongqing, China, 20–21 December 2014; pp. 148–152.

- Miclea, R.-C.; Dughir, C.; Alexa, F.; Sandru, F.; Silea, A. Laser and LIDAR in A System for Visibility Distance Estimation in Fog Conditions. Sensors 2020, 20, 6322.

- Tóth, J.; Ovseník, Ľ.; Turán, J. Free Space Optics—Monitoring Setup for Experimental Link. Carpathian J. Electron. Comput. Eng. 2015, 8, 27–30.