In mobile systems, fog, rain, snow, haze, and sun glare are natural phenomena that can be very dangerous for drivers. In addition to the visibility problem, the driver must also choose an appropriate speed. The main effects of fog are a decrease in contrast and a fading of color. Rain and snow also strongly disturb the driver, while glare caused by the sun or by other traffic participants can be very dangerous even for a short period. In the field of autonomous vehicles, visibility is of the utmost importance. Researchers have approached this problem from different directions and proposed a variety of solutions and methods. It is useful to review what has been presented in the scientific literature over the past ten years on these concerns.

1. Introduction

Adapting vehicle speed to environmental conditions is the main way to reduce the number of accidents on public roads [1]. Poor visibility caused by weather conditions has proved to be one of the main factors in accidents [1]. Research over the last decade has produced several features to help drivers, such as headlights redesigned around LED or laser devices, or real-time improvement of beam directivity; with these technologies, the emitted light is closer to natural light [2]. Auto-dimming technologies have also been introduced and are already installed on most high-end vehicles [3]. In fog, unfortunately, this is not enough, and so far no reliable and robust system has been developed for installation on a commercial vehicle. Some approaches rely on image processing: detecting lane markings, traffic signs, or hazards such as obstacles [4]; image dehazing and deblurring [5]; image segmentation; or machine learning methods [6,7]. Other methods evaluate the optical power of a light source in direct transmission or backscattering by analyzing the scattering and dispersion of the beam [8,9]. Further approaches use systems already installed on the vehicle, such as ADAS (Advanced Driver Assistance Systems), LIDAR (LIght Detection And Ranging), radar, cameras, or other sensors [10,11,12], and there are even geostationary satellite approaches [13]. While imaging sensors deliver reliable results in good weather, their performance degrades in bad weather conditions such as fog, rain, snow, or sun glare.

The biggest companies around the world are currently working to develop a technology that will completely change driving: the autonomous vehicle [14]. Once it is rolled out on public roads, crashes are expected to decrease considerably. However, consider how an autonomous vehicle will behave in bad weather conditions: loss of adherence, problems with vehicle stability, and, perhaps most important, the decrease or lack of visibility: non-visible traffic signs and lane markings, non-identifiable pedestrians [15], objects or vehicles in its path [16], lack of visibility due to sun glare [17], etc. One example is the autonomous vehicle developed by Google, which failed tests in bad weather conditions in 2014. The deadline for rolling out the autonomous vehicle is now very close; many companies have already announced 2020, and they must find a proper solution to these problems, because these vehicles will make decisions based exclusively on the inputs obtained from cameras and sensors or, in case of doubt, will hand over control of the vehicle to the driver.

In the coming decades, there will be a transition period in which autonomous vehicles share public roads with vehicles controlled by drivers; because drivers’ reactions are unpredictable, these systems will need extremely short evaluation and reaction times to avoid possible accidents. Following this reasoning, visibility estimation and the general improvement of visibility remain viable fields of study. We therefore surveyed the state of research in papers that use image processing to estimate visibility in fog conditions, with the aim of increasing general traffic safety.

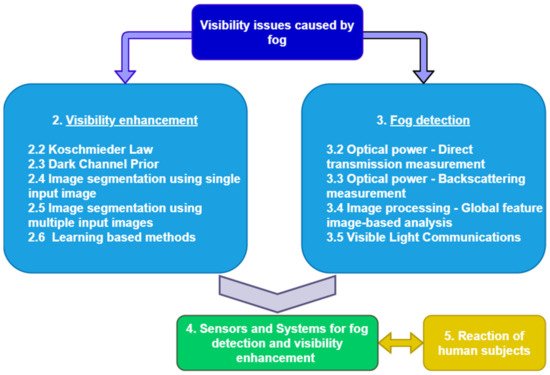

presents an overview of the field, starting from the main methods from the state of the art, visibility enhancement (2), and fog detection (3), following by systems and sensors (4) that use the methods proposed in the first two subsections to detect visibility in adverse weather conditions and ending by presenting the human observer’s reactions in such conditions (5).

Figure 1.

Overall structure.

In the first category (visibility enhancement), the methods are based on image processing, while in the second (fog detection), they are based on optical power measurements or image processing. The next sections detail the best-known and most widely used methods from these two broad categories. The goal of this work is to present the advantages and the weaknesses of each method in order to identify new avenues for improvement. Afterwards, as indicated in Figure 1, we propose a combination of methods, so that the shortcomings of one method are counterbalanced by the other. The final step is to check whether the results obtained from such a system are valid for human beings and also usable by autonomous vehicles.

2. Visibility Enhancement Methods

In the last decade, there has been great interest in improving visibility in bad weather conditions, especially in fog. The methods are based on image processing algorithms and fall into two categories: those using a single input image (one of the first approaches was presented by Tarel and Hautière in [18]) and those using multiple input images [19]. Taking multiple images of the same scene is usually impractical in real applications, which is why single-image haze removal has recently received much attention.
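To make the single-image setting concrete, the following minimal Python sketch uses the atmospheric scattering model commonly associated with Koschmieder's law, I = J·t + A·(1 − t) with t = exp(−β·d), on which many single-image dehazing methods rest. It is an illustration only, not the implementation of any cited work: the pixel values, the airlight A, the fog density β, and the distance d are hypothetical, and the transmission t is assumed to be known. Estimating t and A from a single image is precisely the difficult part that the surveyed methods address.

```python
import math


def transmission(beta: float, depth_m: float) -> float:
    """Fraction of scene radiance that survives a path of depth_m metres."""
    return math.exp(-beta * depth_m)


def add_haze(pixel, airlight, t):
    """Scattering model applied per channel: I = J * t + A * (1 - t)."""
    return tuple(j * t + a * (1.0 - t) for j, a in zip(pixel, airlight))


def remove_haze(hazy, airlight, t, t_min=0.1):
    """Invert the model: J = (I - A) / t + A.

    t is clamped to t_min (an assumed value) so that noise is not
    amplified when almost no scene radiance reaches the camera.
    """
    t = max(t, t_min)
    return tuple(min(1.0, max(0.0, (i - a) / t + a)) for i, a in zip(hazy, airlight))


# Hypothetical inputs, RGB values in [0, 1].
scene = (0.80, 0.20, 0.10)       # saturated red object
airlight = (0.90, 0.90, 0.90)    # grey-white atmospheric light
t = transmission(beta=0.03, depth_m=50.0)   # assumed fog density and distance

hazy = add_haze(scene, airlight, t)
restored = remove_haze(hazy, airlight, t)

# Fog compresses the spread between channels (loss of contrast and color).
print("channel spread, clear:", max(scene) - min(scene))
print("channel spread, hazy: ", max(hazy) - min(hazy))
print("restored pixel:", restored)
```

The hazy pixel is pulled toward the airlight, which reproduces the two effects of fog named earlier: lower contrast and faded color. With the true t and A the inversion recovers the scene pixel, which is why the quality of a dehazing method depends largely on how well it estimates these two quantities.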

3. Fog Detection and Visibility Estimation Methods

In the previous section, we mentioned Hautière and He as pioneers in the field of image dehazing; one of the most relevant works on vision through the atmosphere is that of Nayar and Narasimhan [20], which builds on the well-regarded research of Middleton [21] and McCartney [22].

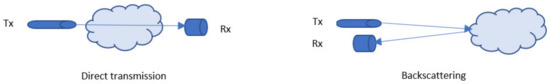

Most of the approaches for detecting fog and determining its density for visibility estimation are based on optical power measurements (OPM), but there are also image processing approaches. The basic principle of the methods from the first category is the fact that infrared or light pulses emitted in the atmosphere are scattered and absorbed by the fog particles and molecules, resulting in an attenuation of the optical power. Methods of detecting the attenuation degree are by measuring the optical power after the light beam passed a layer of fog (direct transmission) or by measuring the reflected light when the light beam is backscattered by the fog layer. provides an overview of optical power measurement methods.

Figure 25.

Optical power measurement methods.
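The direct-transmission principle can be sketched numerically. The example below is an illustration under stated assumptions rather than a method taken from the cited works: it assumes the Beer-Lambert attenuation law P = P0·exp(−β·d) for a beam crossing a homogeneous fog layer, and it converts the extinction coefficient β into a visibility distance with Koschmieder's relation V = −ln(ε)/β, using the 5% contrast threshold ε of the meteorological optical range convention. The function names and the sample power readings are hypothetical.

```python
import math


def extinction_coefficient(p_emitted: float, p_received: float, path_m: float) -> float:
    """Beer-Lambert law: P = P0 * exp(-beta * d)  =>  beta = ln(P0 / P) / d (per metre)."""
    if path_m <= 0:
        raise ValueError("path length must be positive")
    if not 0 < p_received <= p_emitted:
        raise ValueError("received power must be positive and not exceed emitted power")
    return math.log(p_emitted / p_received) / path_m


def meteorological_visibility(beta: float, contrast_threshold: float = 0.05) -> float:
    """Distance at which contrast drops to the threshold: V = -ln(eps) / beta."""
    if beta <= 0:
        return math.inf  # no measurable attenuation
    return -math.log(contrast_threshold) / beta


# Hypothetical reading: half of the emitted power arrives after a 10 m path.
beta = extinction_coefficient(p_emitted=1.0, p_received=0.5, path_m=10.0)
visibility_m = meteorological_visibility(beta)
print(f"beta = {beta:.4f} 1/m, visibility = {visibility_m:.1f} m")
```

Because the computation reduces to one logarithm and one division per reading, this kind of estimate is cheap to update continuously. A backscattering setup would need a different model, since the detector then sees light returned by the fog instead of light transmitted through it.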

4. Sensors and Systems for Fog Detection and Visibility Enhancement

Nowadays, vehicles are equipped with many cameras and sensors designed for specific functionalities, which might also be used for fog detection and visibility improvement. For example, the Tesla Model S has, for the Autopilot functionality alone, 8 surround cameras, 12 ultrasonic sensors, and a forward-facing radar with enhanced processing capabilities.

5. Conclusions

This paper presented methods and systems from the scientific literature of the past ten years related to fog detection and visibility enhancement in foggy conditions. In the coming period, the main focus of automotive companies will be the development of autonomous vehicles, and visibility requirements in bad weather conditions will be of high importance. The current state-of-the-art methods are based on image processing, optical power measurements, or various sensors, some of which are already available on current commercial vehicles but used for other functionalities. Image processing methods rely on cameras, which offer many advantages, such as the freedom to implement different algorithms, versatility, and cost; on the other hand, the results obtained from such a system can be erroneous due to blinding caused by other traffic participants, the environment, or the weather. Methods based on image processing can be applied in light fog; if the fog becomes denser, the system cannot give any valid output. Some methods presented in the literature work only in daytime conditions, making them unsuitable for automotive applications, which require systems able to offer reliable results in real time and in complex scenarios 24 h/day.

Images are degraded in foggy or hazy conditions, and the degradation depends on the distance, the density of the atmospheric particles, and the wavelength. The authors in [23] tested multiple single-image dehazing algorithms and evaluated them using two strategies: one based on the analysis of state-of-the-art metrics and the other based on psychophysical experiments. The results of the study suggest that the longer the wavelength within the visible range, the higher the quality of the dehazed images. The methods tested were dark channel prior [24], the Tarel method [18], the Meng method [25], the DehazeNet method [26], and the Berman method [27]. The work emphasizes that no method is superior on every metric; the best algorithm therefore varies with the selected metric. The subjective analysis revealed that observers preferred the output of the Berman algorithm. The main conclusion is that it is very important to set the correct expectations first; these lead to a selection of metrics, on the basis of which a dehazing algorithm can then be preferred.

Systems based on optical power measurement, by direct transmission or backscattering, address some of the drawbacks described above for cameras: the result is not influenced by day or night conditions, very dense fog can also be measured, and the computational complexity is lower than in the previous category, which makes these systems more responsive to very quick changes in the environment, an important property in real-time applications. The results obtained using such systems can also be erroneous, due to environmental conditions (bridges, road curves) or traffic participants; that is why our conclusion, after gathering all these methods and systems in a single paper, is that at least two different systems should be interconnected so that each validates the results of the other.

One big challenge for the coming years in this field, from our point of view, is to prove that the results obtained from the systems presented above are valid for a human being. The validity of the results is also a relevant topic for autonomous vehicles, which need to identify the road, objects, other vehicles, and traffic signs in bad weather conditions; automotive companies will have to define the visibility limit for these vehicles.

The evaluation of the state-of-the-art methods is presented in Table 1.

Table 1. Evaluation of the state-of-the-art methods.

| Category | Methods | Computation Complexity | Availability on Vehicles | Data Processing Speed | Day/Night Use | Real-Time Use | Result Distribution | Reliable | Link to Visual Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| Image dehazing | Koschmieder’s law [28,29,30,31,32,33,34,35,36] | Medium/High | Partial (camera) | Medium | Daytime only | Yes | Local for 1 user | No (not for all inputs) | Yes |
| Image dehazing | Dark channel prior [24,37,38,39,40,41,42,43,44,45,46,20,47,48,49,50,51,52] | High | Partial (camera) | Medium | Daytime only | Yes | Local for 1 user | No (not for all inputs) | Yes |
| Image dehazing | Dark channel prior integrated in SIDE [53] | High | Partial (camera) | Medium | Both | Yes | Local for 1 user | Yes | Yes |
| Image dehazing | Image segmentation using single input image [54,55,56,57] | High | Partial (camera) | Low | Daytime only | No | Local for 1 user | No | Yes |
| Image dehazing | Image segmentation using multiple input images [58,59,60] | High | Partial (camera) | Medium | Daytime only | Yes (notify drivers) | Local for many users (highways) | No (not for all cases) | Yes |
| Image dehazing | Learning-based methods I [61,62,63,64] | High | Partial (camera) | Medium | Daytime only | No | Local for many users (highways) | Depends on the training data | No |
| Image dehazing | Learning-based methods II [65] | High | No | Medium | Daytime only | No | Large area | Depends on the training data | Yes |
| Image dehazing | Learning-based methods III [66,67] | High | Partial (camera) | Medium | Daytime only | No | Local for 1 user | Depends on the training data | Yes |
| Image dehazing | Learning-based methods IV [68] | High | Partial (camera + extra hardware) | High | Daytime only | Yes | Local for 1 user | Depends on the training data | Yes |
| Image dehazing | Learning-based methods V [69] | High | Partial (camera) | High | Both | Yes | Local for 1 user | Depends on the training data | Yes |
| Fog detection and visibility estimation | Direct transmission measurement [8,70,71,72] | Low | No | High | Both | Yes | Local for many users (highways) | Yes | No (still to be proven) |
| Fog detection and visibility estimation | Backscattering measurement I [9,10,11,12,73,74] | Low | Partial (LIDAR) | High | Both | Yes | Local for 1 or many users | Yes | No (still to be proven) |
| Fog detection and visibility estimation | Backscattering measurement II [75] | Medium | No | Medium | Both | Yes | Local for 1 or many users | No | Yes |
| Fog detection and visibility estimation | Global feature image-based analysis [76,77,78,79,80,81,82,83,84,85,86] | Medium | Partial (camera) | Low | Both | No | Local for 1 user | No | Yes |
| Sensors and Systems | Camera + LIDAR [12] | High | Partial (high-end vehicles) | High | Both | Yes | Local for 1 or many users | Yes | Yes |
| Sensors and Systems | Learning-based methods + LIDAR [87] | High | Partial (LIDAR) | Medium | Both | Yes | Local for 1 user | Depends on the training data | Yes |
| Sensors and Systems | Radar [81] | Medium | Partial (high-end vehicles) | High | Both | Yes | Local for 1 or many users | No (needs to be proven in complex scenarios) | Yes |
| Sensors and Systems | Highway static system (laser) [88] | Medium | No (static system) | Medium | Both | Yes | Local (can be extended to a larger area) | Yes | No (still to be proven) |
| Sensors and Systems | Motion detection static system [89] | Medium | No (static system) | Medium | Daytime only | Yes | Local for 1 or many users | No (not for all cases) | Yes |
| Sensors and Systems | Camera-based static system [90,91,92] | High | No (static system) | Medium | Both | Yes | Local for 1 or many users | Depends on the training data | Yes |
| Sensors and Systems | Satellite-based system I [93] | High | No (satellite-based system) | Medium | Night | Yes | Large area | Yes | Yes |
| Sensors and Systems | Satellite-based system II [94] | High | No (satellite-based system) | Medium | Both | Yes | Large area | Yes | Yes |
| Sensors and Systems | Wireless sensor network [95] | High | No (static system) | Medium | Both | Yes | Large area | No (not tested in real conditions) | No |
| Sensors and Systems | Visibility Meter (camera) [70,71] | Medium | - | Medium | Daytime only | No | Local for many users (highways) | No (not tested in real conditions) | No |
| Sensors and Systems | Fog sensor (LWC, particle surface, visibility) [72] | Medium | No (PVM-100) | Medium | Both | - | Local for many users (highways) | No (error rate ~20%) | No |
| Sensors and Systems | Fog sensor (density, temperature, humidity) [9,73] | Medium | No | Low | Both | No | Local for many users (highways) | No | No |
| Sensors and Systems | Fog sensor (particle size, laser and camera) [96,97] | High | Partial (high-end vehicles) | High | Daytime only | No | Local for many users (highways) | No | No |

Based on the evaluation criteria listed in Table 1, we can conclude that a system able to determine and improve visibility in a foggy environment should include a camera and a device able to make optical measurements in the atmosphere. Both categories have their drawbacks, but combined, they cover most of the gaps; each subsystem can work as a backup and can validate the result offered by the other. One example is a system composed of a camera and a LIDAR, as in [12]; both are already available on today's high-end vehicles, offering reliable results in real time, 24 h/day. The results obtained by one vehicle can be shared with other traffic participants in the area, thereby creating a network of systems. Directions of improvement for such a system would be to increase the detection range of LIDARs and to use infrared cameras, which can offer reliable results at night and validate the results obtained from the LIDAR.
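The idea of two subsystems that back each other up and validate each other's results can be sketched as a simple decision rule. The Python example below is hypothetical and is not the system described in [12]: the `Estimate` type, the `fuse` function, the 25% agreement tolerance, and the choice to fall back to the shorter distance on disagreement are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Estimate:
    visibility_m: float  # estimated visibility distance in metres
    valid: bool          # False when the subsystem cannot measure (e.g. blinded camera)


def fuse(camera: Estimate, lidar: Estimate, rel_tol: float = 0.25) -> Optional[float]:
    """Cross-validate two visibility estimates.

    Both valid and in agreement -> their mean.
    Both valid but in conflict  -> the shorter (more cautious) distance.
    Only one valid              -> that one, used as a backup.
    None valid                  -> None, so the caller can escalate.
    """
    if camera.valid and lidar.valid:
        low, high = sorted((camera.visibility_m, lidar.visibility_m))
        if high - low <= rel_tol * high:
            return (low + high) / 2
        return low
    if camera.valid:
        return camera.visibility_m
    if lidar.valid:
        return lidar.visibility_m
    return None


# Hypothetical readings.
print(fuse(Estimate(100.0, True), Estimate(90.0, True)))   # agreement
print(fuse(Estimate(200.0, True), Estimate(60.0, True)))   # conflict
print(fuse(Estimate(0.0, False), Estimate(80.0, True)))    # camera blinded
```

Returning the shorter distance on conflict errs on the side of caution for speed adaptation; a real system would more likely weight each sensor by its known failure modes, for example by trusting the camera less at night or in dense fog.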

This synthesis can be a starting point for developing a reliable system for fog detection and visibility improvement: it presents the weaknesses of the state-of-the-art methods (the referenced articles have more than 30,000 citations in Google Scholar), which can lead to new ideas for improving them. Additionally, we described ways of interconnecting these systems to obtain more robust and reliable results.