| Version | Summary | Created by | Modification | Content Size | Created at | Operation |

|---|---|---|---|---|---|---|

| 1 | Sunusi Bala Abdullahi | -- | 1364 | 2024-04-09 07:37:09 | | | |

| 2 | Rita Xu | Meta information modification | 1364 | 2024-04-09 08:39:14 | | | | |

| 3 | Sunusi Bala Abdullahi | + 334 word(s) | 1698 | 2025-12-03 23:38:16 | | | | |

| 4 | Sunusi Bala Abdullahi | Meta information modification | 1698 | 2025-12-03 23:44:35 | | | | |

| 5 | Rita Xu | -15 word(s) | 1683 | 2025-12-04 02:19:46 | | |

Video Upload Options

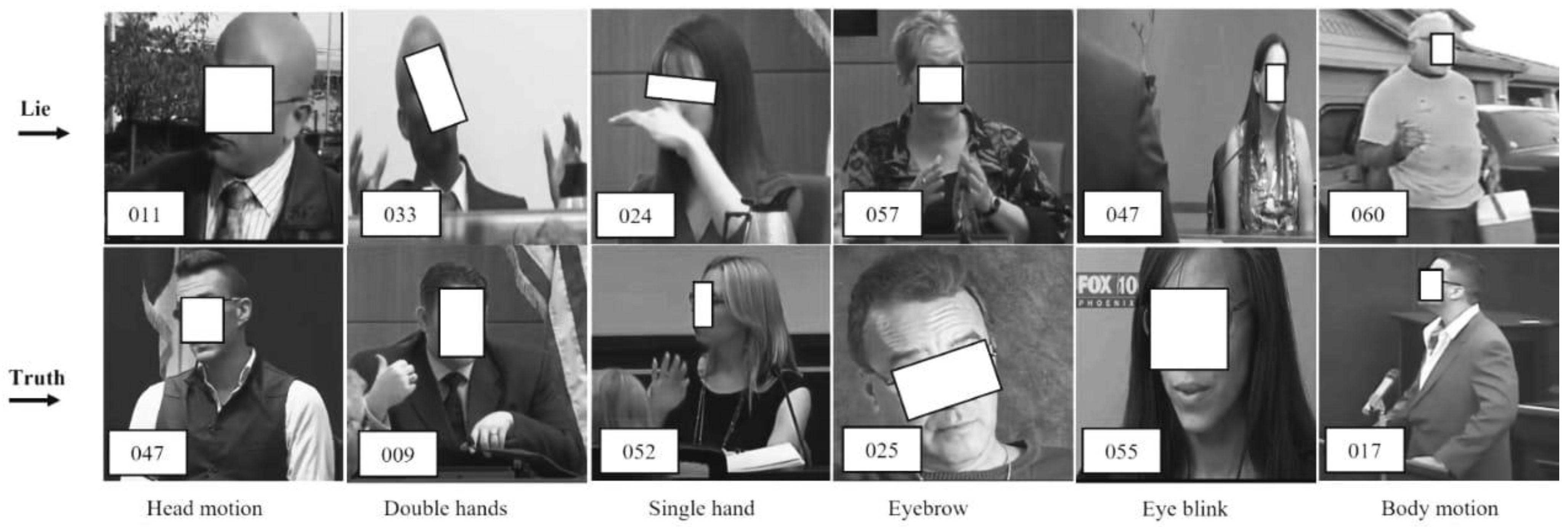

Recognition of lying is a more complex cognitive process than truth-telling because of the presence of involuntary cognitive cues that are useful to lie recognition. Researchers have proposed different approaches in the literature to solve the problem of lie recognition from either handcrafted and/or automatic lie features during court trials and police interrogations.

1. Introduction

2. Eye Blinking Approach

3. Multi-Modal Cue Approaches

4. The Hybrid CNN-BiLSTM Architecture: Mechanisms and Advantages

The architectural gap identified in prior methods, specifically, the inability to jointly model salient spatial features and their long-range temporal dependencies in involuntary cue data, is addressed by hybrid Convolutional Neural Network-Bidirectional Long Short-Term Memory (CNN-BiLSTM) models. This paradigm operates on a principled division of labor: the CNN backbone acts as a hierarchical spatial feature extractor, transforming raw video frames or optical flow fields into compact, discriminative representations of local patterns (e.g., micro-expressions, hand contours). These sequential feature vectors are then processed by the BiLSTM layer, which models their temporal evolution by learning contextual dependencies in both forward and backward directions. This bidirectional context is critical for interpreting cues like the progression of a gesture or the dynamics of a facial action unit. The comparative advantage of this hybrid approach lies in its end-to-end learning capability, which supersedes the need for manual feature engineering. It directly optimizes the integration of spatial and temporal information, thereby mitigating information redundancy and enhancing model stability for the complex, variable-length sequences characteristic of real-world behavioral data.

5. Conclusion and Future Research Trajectories

In conclusion, the application of deep learning, particularly hybrid spatial-temporal models like CNN-BiLSTM, represents a significant advance in automated lie recognition from visual cues. These systems move beyond isolated cue analysis toward a more holistic integration of multi-modal behavioral signals. Future research must navigate several key challenges to transition from constrained experimental settings to robust, real-world deployment. These include: (i) the curation of large-scale, ecologically valid video datasets that reflect diverse populations, lighting conditions, and cultural nuances in nonverbal behavior; (ii) the development of explainable AI (XAI) frameworks to render model decisions interpretable to forensic experts and legal professionals, ensuring adherence to evidentiary standards; and (iii) the exploration of efficient, lightweight architectures suitable for potential real-time analysis scenarios. Addressing these challenges will be pivotal in developing principled, reliable tools for assistive forensic analysis.

References

- Avola, D.; Cinque, L.; Maria, D.; Alessio, F.; Foresti, G. LieToMe: Preliminary study on hand gestures for deception detection via Fisher-LSTM. Pattern Recognit. Lett. 2020, 138, 455–461.

- Al-jarrah, O.; Halawan, A. Recognition of gestures in Arabic sign language using neuro-fuzzy systems. Artif. Intell. 2001, 133, 117–138.

- Abdullahi, S.B.; Khunpanuk, C.; Bature, Z.A.; Chroma, H.; Pakkaranang, N.; Abubakar, A.B.; Ibrahm, A.H. Biometric Information Recognition Using Artificial Intelligence Algorithms: A Performance Comparison. IEEE Access 2022, 10, 49167–49183.

- Avola, D.; Cascio, M.; Cinque, L.; Fagioli, A.; Foresti, G. LieToMe: An Ensemble Approach for Deception Detection from Facial Cues. Int. J. Neural Syst. 2021, 31, 2050068.

- Sen, U.; Perez, V.; Yanikoglu, B.; Abouelenien, M.; Burzo, M.; Mihalcea, R. Multimodal deception detection using real-life trial data. IEEE Trans. Affect. Comput. 2022, 2022, 2050068.

- Ding, M.; Zhao, A.; Lu, Z.; Xiang, T.; Wen, J. Face-focused cross-stream network for deception detection in videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019.

- Abdullahi, S.B.; Chamnongthai, K. American sign language words recognition using spatio-temporal prosodic and angle features: A sequential learning approach. IEEE Access 2022, 10, 15911–15923.

- Bhaskaran, N.; Nwogu, I.; Frank, M.G.; Govindaraju, V. Lie to me: Deceit detection via online behavioral learning. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), Santa Barbara, CA, USA, 21–23 March 2011; IEEE: Piscataway, NJ, USA, 2011.

- Jeffry, G.; Jenkins, J.L.; Burgoon, J.K.; Judee, K.; Nunamaker, J.F. Deception is in the eye of the communicator: Investigating pupil diameter variations in automated deception detection interviews. In Proceedings of the 2015 IEEE International Conference on Intelligence and Security Informatics (ISI), Baltimore, MD, USA, 27–29 May 2015; IEEE: Piscataway, NJ, USA, 2015.

- Thakar, M.K.; Kaur, P.; Sharma, T. Validation studies on gender determination from fingerprints with special emphasis on ridge characteristics. Egypt. J. Forensic Sci. 2022, 8, 20.

- Meservy, T.O.; Jensen, M.L.; Kruse, J.; Burgoon, J.K.; Nunamaker, J.F.; Twitchell, D.P.; Tsechpenakis, G.; Metaxas, D.N. Deception detection through automatic, unobtrusive analysis of nonverbal behavior. IEEE Intell. Syst. 2005, 20, 36–43.

- Pérez-Rosas, V.; Abouelenien, M.; Mihalcea, R.; Burzo, M. Deception detection using real-life trial data. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, DC, USA, 9–13 November 2015; ACM: New York, NY, USA, 2015.

- Abouelenien, M.; Pérez-Rosas, V.; Mihalcea, R.; Burzo, M. Detecting deceptive behavior via integration of discriminative features from multiple modalities. IEEE Trans. Inf. Forensics Secur. 2017, 5, 1042–1055.

- Karimi, H.; Tang, J.; Li, Y. Toward end-to-end deception detection in videos. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; IEEE: Piscataway, NJ, USA, 2018.

- Wu, Z.; Singh, B.; Davis, L.; Subrahmanian, V. Deception detection in videos. In Proceedings of the AAAI conference on artificial intelligence, New Orleans, LA, USA, 2–7 February 2018; AAAI: Washington, DC, USA, 2018.

- Rill-García, R.; Jair, E.H.; Villasenor-Pineda, L.; Reyes-Meza, V. High-level features for multimodal deception detection in videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019.

- Krishnamurthy, G.; Majumder, N.; Poria, S.; Cambria, E. A deep learning approach for multimodal deception detection. arXiv 2018, arXiv:1803.00344.

- Abdullahi, S.B.; Ibrahim, A.H.; Abubakar, A.B.; Kambheera, A. Optimizing Hammerstein-Wiener Model for Forecasting Confirmed Cases of COVID-19. IAENG Int. J. Appl. Math. 2022, 52, 101–115.

- Abdullahi, S.B.; Muangchoo, K. Semantic parsing for automatic retail food image recognition. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 53, 7808–7816.

- Lu, S.; Tsechpenakis, G.; Metaxas, D.N.; Jensen, M.L.; Kruse, J. Blob analysis of the head and hands: A method for deception detection. In Proceedings of the 38th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 3–6 January 2005; IEEE: Piscataway, NJ, USA, 2005.