Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is an old version of this entry, which may differ significantly from the current revision.

Subjects:

Others

Recognition of lying is a more complex cognitive process than truth-telling because of the presence of involuntary cognitive cues that are useful to lie recognition. Researchers have proposed different approaches in the literature to solve the problem of lie recognition from either handcrafted and/or automatic lie features during court trials and police interrogations.

- artificial intelligence

- bidirectional long short-term memory

- convolutional neural network

1. Introduction

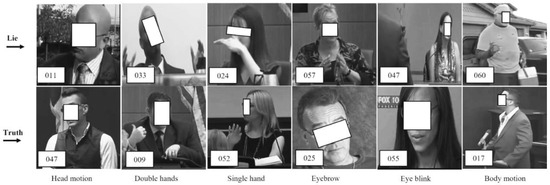

On average, every person tells lies at least twice a day [1]. More aggravating is lies presented against others during court trials, police interrogations, interviews, etc., which change the outcome of relevant facts and may lead to wrong judgments or convictions. These problems have inspired the development of computer engineering systems, such as electroencephalography (EEG). Despite the benefits of computer engineering systems for lie recognition, some restrictions exist, such as being cumbersome, which allows a liar to understand that they are being monitored, thus resulting in the presence of deliberate behavioral attitude that can confuse the interviewers. Such deliberate behavioral attitude affects involuntary cues, which mislead the actual results. These involuntary cues comprise facial expression, body language, eye motion, and hand motion, as shown in Figure 1. Each subfigure contains a scene from a court trial video. The scene contains a label in the white box corresponding to the number from the video clip of the original court trial video data set. The scenes from the top left corner to the right show the behavioral attitudes of lying people, while the scenes from the bottom left corner to the right show the behavioral attitudes of truth-telling people. Addressing the problem of learning human involuntary cues, recent research in the field of image processing/CV and machine learning reshapes computer engineering systems into machine learning-based (ML) systems [2,3,4]. ML-based systems can learn tiny facial marks [4] and behaviors in connection with body motion, as well as hand gestures [5], therefore making lie recognition suitable via CV and ML techniques. However, a combination of two or more human involuntary actions (known as cognitive cues) provides good results at some expenses [6]. Therefore, deep learning with CV features, such as bidirectional long short-term memory (BLSTM), has advanced with appreciable performance, although only a few examples have appeared in the literature [1]. However, the weights of BLSTM do not highlight the key information in the context, which leads to information redundancy when learning long video sequences [7], as well as insufficient recognition accuracy and model instability [1].

Figure 1. Sample of real-life court trial video data set with involuntary cognitive cues. From the top left corner: (1) lying during the court trial with a forward head motion, (2) lying with double-hand motions, (3) lying with a single hand motion, (4) lying with an eyebrow, (5) lying with an eye blink, and (6) lying with a body motion. From the left bottom corner to the right: (7) truth-telling during the court trial with a forward head motion, (8) truth-telling with double-hand motions, (9) truth-telling with a single hand motion, (10) truth-telling with an eyebrow, (11) truth-telling with an eye blink, and (12) truth-telling with a body motion.

The recognition accuracy of these methods [1,4] is low because some multi-modal involuntary cues and their complementary information are missing; thus, their accuracy needs to be improved since involuntary cues, such as those shown in Figure 1, are significant factors in determining people’s behaviors while giving testimony during court trials or investigations. These involuntary cues are difficult to capture by using classical technique. Thus, deep learning methods are the suitable choice. However, deep learning methods provide a huge amount of information that is sometimes irrelevant to lie recognition. Uncertainty about the type of multi-modal information to be used for lying recognition remains a key factor. Thus, we improve this process by highlighting the key information of multi-modal features by proposing multi-modal spatial–temporal state transition patterns (STSTP). It is found that the highlighted multi-modal STSTP information provides a sound basis for lie recognition under real-life court trial videos and paves the way for the development of explainable and principled tools. Inspired by these results, we propose spatial–temporal state transition patterns based on involuntary actions of lying and truth-telling persons.

2. Eye Blinking Approach

Eye blinking is an involuntary cue during lying or truth-telling actions; however, it is a valuable index to enhance effective recognition. The eye-blinking cues of a lie are hard to learn during a cross-examination or a court trial. Although complex techniques are in use to record eye blinking, such as eye trackers, these techniques need a biomarker and complex data interpretation. Therefore, RGB videos from computer vision (CV) provide a flexible data set for the recognition of lies. CV allows an algorithm to be built without the need for a biomarker and/or complex data interpretation support. Eye-gaze lie systems, such as that of Bhaskaran et al. [8], propose eye-gaze features based on dynamic Bayesian learning. This method was reported to achieve an accuracy of 82.5% in learning distinct features between deceit and non-deceit cues. The major limitation of this work includes failure to reflect real-life scenarios, such as a suspect or witness wearing glasses or showing flicking an eyebrow motion. Proudfoot et al. [9] proposed eye pupil diameter using a latent growth curve modeling technique to capture changes in the eyes of the suspect and complainant, while George et al. [10] evaluated the number of eyeblink counts and their duration among lying and truth-telling persons. The former study finds that significant changes occur when a person is telling lies, while the latter study can conclude when a lying person is pressurized. The advantage of the work by Avola et al. [4] is that it highlights the benefits of extracting macro- and micro-expressions (MME) during police interrogation, cross-examination, and court trials. Macro- and micro-expressions of the face are built in an ensemble fashion. Therefore, it can be observed that a truth-telling or lie-telling person employs various body cues (multi-modal cues) to express themselves, as shown in Figure 1; thus, single-body cues are not sufficient to discriminate lies from facts.

3. Multi-Modal Cue Approaches

An automated multi-modal lie recognition system can allow the building of a system with potential behavioral cues to distinguish a lie from the truth [11]. The work by PrezRosas et al. [12] exploited verbal and non-verbal indices to detect court verdicts with decision trees and random forests. Abouelenien et al. [13] demonstrated the performance of cross-referencing physiological information with a decision tree and majority voting strategy, while Karimi et al. [14] exploited visual and acoustic cues using large margin nearest neighbor learning. Wu et al. [15] considered visual, audio, and text information in unison to compare and select the best classifier among decision trees, random forests, and linear SVM. Rill-Garcia et al. [16] jointly combined visual, acoustical, and textual indices using SVM to evaluate the effectiveness of the combined information. Krishnamurthy et al. [17] utilized a 3D CNN for feature extraction, and classification was conducted using multi-layer perceptron.

Furthermore, hand features are very stable cues for identifying human actions and intentions, as reported in the literature [3,18,19]. Lu et al. [20] extracted hand and facial features using color 3-D LUT, which are further utilized with blob analysis to track head and hand motions (behavioral state). Their method needs to be improved to avoid complex segmentation and long processing time. Meservy et al. [11] extracted hand and facial features using color analysis, eigenspace-based shape segmentation, and Kalman filters. The major limitation of this method is user invariability. Avola et al. [1] extracted hand features from RGB videos using OpenPose. In their method, the hand is represented using 21 finger joint coordinates per frame along with acceleration and velocity. In addition, their method calculates hand elasticity and openness to observe hand behavior while lying or speaking the truth. Mut Sen et al. [5] proposed visual, acoustic, and linguistic modalities. This method designs automatic and manually annotated features using a random seed, and the features are validated using different classifiers in semi-automatic and automatic modes. The best results are obtained from the semi-automatic system with artificial neural network classifiers. The work in [5] proposes a multi-feature approach based on subject-level analysis. The features are detected manually, which affects the performance. Most of the current best works achieve the best result via deep learning methods. However, eyebrow, eye blinking, and optical flow of involuntary information are not utilized by those methods; thus, the current challenges have not been properly addressed.

This entry is adapted from the peer-reviewed paper 10.3390/brainsci13040555

This entry is offline, you can click here to edit this entry!