Adiuku, N.; Avdelidis, N.P.; Tang, G.; Plastropoulos, A. Robotic Applications in Maintenance, Repair, and Overhaul Hangar. Encyclopedia. Available online: https://encyclopedia.pub/entry/55876 (accessed on 03 March 2026).

Adiuku, N., Avdelidis, N.P., Tang, G., & Plastropoulos, A. (2024, March 05). Robotic Applications in Maintenance, Repair, and Overhaul Hangar. In Encyclopedia. https://encyclopedia.pub/entry/55876

The aerospace industry has continually evolved to guarantee the safety and reliability of aircraft to make air travel one of the safest and most reliable means of transportation. Mobile robots encompass comprehensive system structures that work together through perception, detection, motion planning, and control. The subject of autonomous robot navigation entails mapping, localisation, obstacle detection, avoidance, and achieving an optimal path from a starting point to a predefined target location efficiently.

robotics

MRO hangar

robot navigation

1. Introduction

In recent years, the aviation sector has made significant strides in the periodic inspection and maintenance of aircraft, aiming to keep pace with increasing global air traffic demand. This focus is driven by a commitment to safety and the goal of reducing maintenance costs, which currently represent 10–15% of airlines’ operational costs and are projected to rise from $67.6 billion in 2016 to $100.6 billion in 2026 [1]. This has heightened interest in automated visual aircraft inspection, with the aim of reducing reliance on conventional assessments conducted by human operators, which are often time-intensive and susceptible to transcription errors, especially when accessing complex and hazardous areas within the aircraft [2]. To overcome these limitations and improve the effectiveness of the aircraft visual inspection process, the aerospace industry is actively exploring the integration of unmanned robotic systems, including mobile robots and drones. The fundamental focus lies on the capacity of robots to perceive and navigate through their surroundings while avoiding collisions with obstacles. This requires an understanding of dynamic and unstructured environments, such as aircraft hangars, where accurate, real-time detection and avoidance of obstacles are of paramount importance [3]. The hangar environment is unpredictably complex, with diverse object irregularities and light variations that contribute to environmental uncertainties and navigational difficulties. Consequently, there is a need to equip autonomous vehicles with reliable obstacle detection and avoidance mechanisms to improve their ability to safely navigate the surrounding environment.

Traditionally, mobile robots have utilised technologies such as Radar and GPS, along with various other sensors for navigation purposes. However, in comparison to these sensors, RGBD (red, green, blue—depth) cameras and LiDAR (light detection and ranging) systems, although more expensive, offer significantly broader range and higher resolution. These advanced sensors enable the capture of a more detailed representation of the environment. RGBD cameras provide a rich visual and depth perception, while LiDAR systems offer more precise environmental mapping, making them superior for complex navigation tasks [4]. The data collected by these sensors undergo algorithmic processing to create comprehensive models of the environments that enable the implementation of obstacle avoidance strategies. The use of mobile robots to perceive, detect, navigate through environments, and enhance inspection processes has gained considerable attention in this field [5]. However, the principal challenge extensively investigated is accomplishing a navigational task that ensures an optimal, collision-free, and shortest path to the designated target. This challenge is amplified by the inherently complex and unstructured nature of the changing environments, which complicates the real-time decision-making process and impacts the robot’s autonomy. Consequently, the robots struggle to navigate, avoid obstacles, and identify the most suitable path in changing environments.

2. Robotic Applications in Maintenance, Repair, and Overhaul Hangar

2.1. MRO Hangar in Aviation

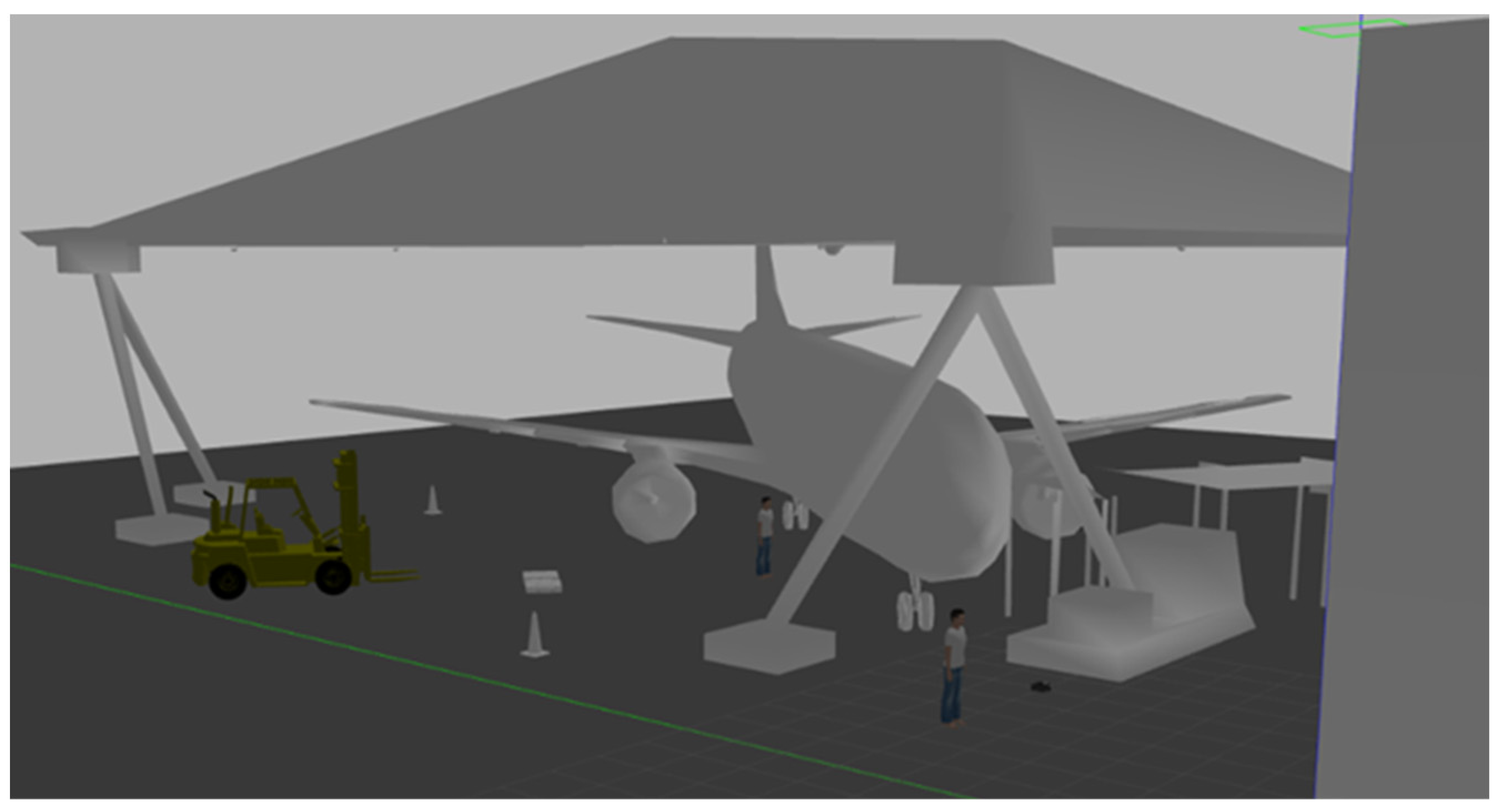

The aerospace industry has continually evolved to guarantee the safety and reliability of aircraft, making air travel one of the safest and most reliable means of transportation. The traditional approach to aircraft maintenance and inspection involves semiautomated systems with human control to execute tasks. Sensing and navigation systems are usually preprogrammed to follow predefined inspection paths and do not adapt to unexpected conditions or obstacles. These limitations make the process time-consuming and increase the overall operation cost. There is a growing need for more advanced and automated systems that could reduce cost and enhance safety. The aviation industry has embraced the integration of robotics to improve the MRO processes of aircraft as part of the “Hangar of the Future” initiative. The MRO hangar, represented by the simulation model shown in Figure 1, is a major part of the aviation sector in which Industry 4.0 (I4.0) technologies [6] have gained wide adoption and are instrumental in improving safety and operational efficiency. Robotics and artificial intelligence are among the key enablers of I4.0, as illustrated in Figure 2. These technologies have been effectively harnessed using unmanned vehicles, including intelligent ground robots, for autonomous navigation in a busy and changing hangar environment, particularly for inspection, maintenance, and repair tasks. A comparison of intelligent robotics applications with the conventional method is shown in Table 1. Robots have emerged as a promising cutting-edge technology, enabling efficient and precise operations in various tasks, including assembly, drilling, painting, and inspections. Intelligent robots are machines built and programmed to perform a specific task, combined with artificial intelligence techniques that instil and optimise intelligence through automated, data-driven learning capabilities.
The integration of these technologies has spurred a digitalisation drive within the sector, promoting the concept of the “Hangar of the Future”. This is where intelligent robots play major roles by improving aircraft inspection efficiency and reducing aircraft-on-ground (AOG) time and overall operation cost.

Figure 1. Simulated Cranfield University MRO hangar.

Figure 2. Industry 4.0 technologies.

Table 1. Comparing traditional and intelligent robotics applications in MRO hangar.

| Task | Traditional Approach | Intelligent Robotics Application |
|---|---|---|
| Inspection accuracy | Dependent on programme quality and human interaction | Enhanced accuracy facilitated by learning and data analysis |
| Automation level | Manual–semiautomated with human oversight | Fully automated with limited human input |
| Algorithms | Predefined algorithms combined with basic sensor input | Sensor fusion, advanced navigation algorithms, and machine learning models |
| Obstacle detection and navigation | Basic, simple path planning algorithms | Using real-time and advanced deep learning models to enhance path planning |
| Task performance | Best suited for repetitive and defined tasks | Able to manage varied and complex tasks |
| Cost | Higher cost for longer maintenance time and error management | Lower maintenance engagements and cost |

A typical hangar environment is characterised by a highly complex configuration space due to the presence of unstructured and dynamic objects that vary in shape, size, and colour. Additionally, the low-light conditions prevalent in such environments can impair visibility in certain areas. These factors pose a challenge for robots, as their ability to navigate from the starting point to the target location is constrained by objects and changing environmental structures [7]. Mobile robots must proactively engage with their surroundings, interacting with and exploring the aircraft environment to navigate efficiently [6]. In this process, they generate valuable information using various sensors that facilitate the detection of environmental features, including the positions of obstacles, which are essential for environment modelling and safe navigation to their destinations [8]. Different machine-learning-based functionalities have been developed that leverage environmental information for robotic applications [9]. These have been demonstrated on various robotic platforms, such as the human-like robots from Boston Dynamics, the crawling inspection robot by Cranfield University [2], and others. These robot systems follow a standard robot architecture comprising sensory data acquisition, environmental perception, decision making, and execution of actions. This architecture is embedded within the robot’s hardware framework to effectively learn the robot’s orientation relative to a set of state space variables for optimal navigation in complex and dynamic environments.

2.2. Intelligent Robotics in MRO Hangar

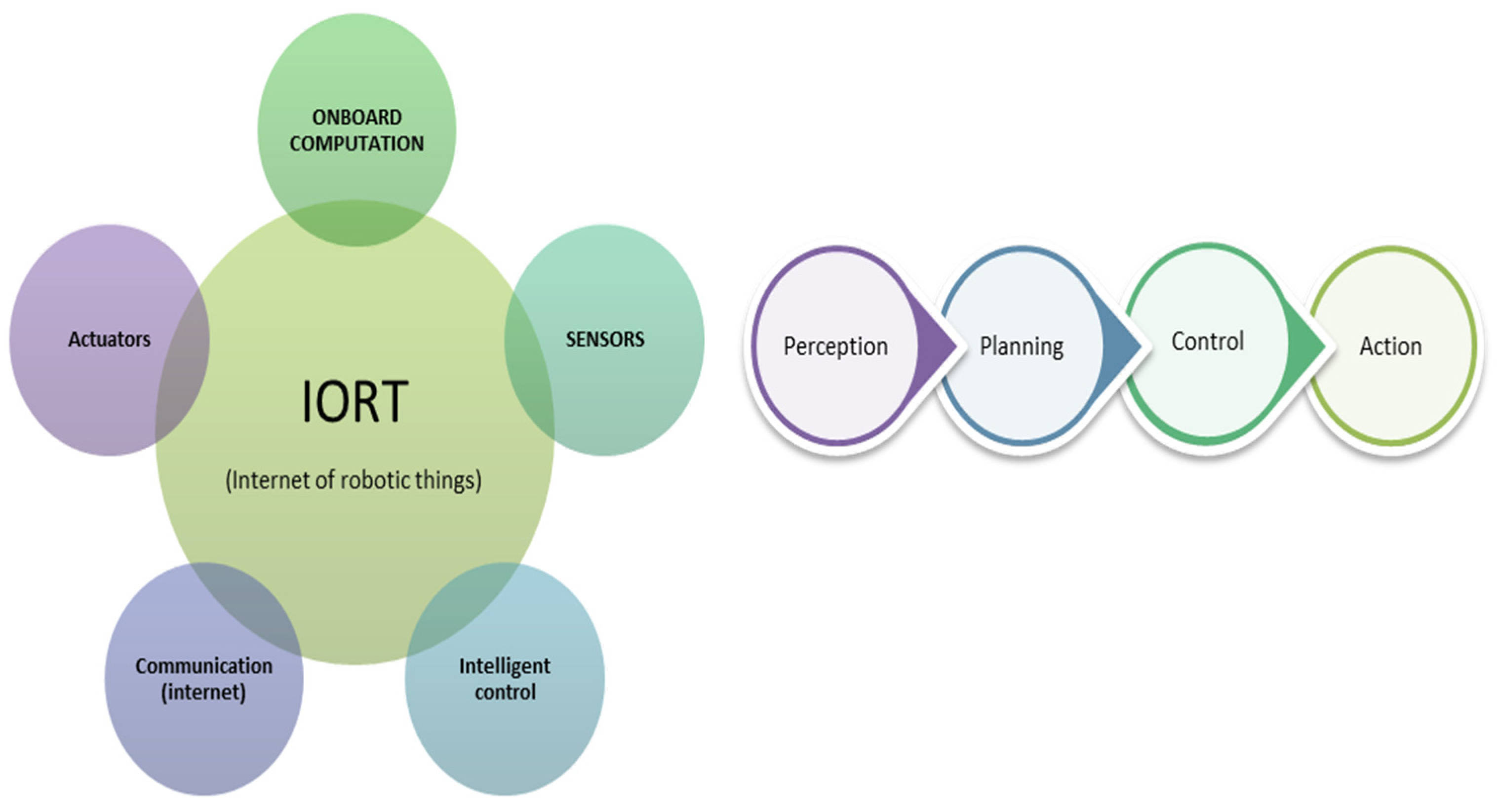

Mobile robots encompass comprehensive system structures that work together through perception, detection, motion planning, and control, as illustrated in Figure 3, to perform a series of navigation tasks. Researchers in this field have proposed many intelligent technologies that are integrated to form an Internet of Robotic Things (IoRT), which can interact with the environment and learn from sensor information or real-time observation without the need for human intervention [2]. This technology empowers robots to operate more independently and make decisions based on the information they gather from their environments. Machine learning (ML), an artificial intelligence approach, is at the core of these enabling technologies, has seen widespread adoption [2], and has become an essential component in accomplishing many intelligent tasks in robotics. ML techniques incorporate sensor information fusion, object detection [10], collision avoidance mechanisms [11], pathfinding [12], path tracking [13], and control systems [14] to solve robot autonomous navigation problems [15]. Diverse arrays of sensors, including laser scanners, cameras, LiDAR, and others, are leveraged for information gathering, mapping, and obstacle detection, as well as for estimating robot positions and velocities. The fusion of information from these multiple sensors has brought a paradigm shift in the development of more robust and accurate models of robotic systems. Multisensor fusion augments the capabilities of each individual sensor, thereby enhancing the overall system’s visual perception and its efficacy in obstacle detection and avoidance under a variety of operational conditions [16]. ML methods have revolutionised robot navigation, especially in unstructured and complex environments, by offering highly accurate and robust capabilities [17]: models are trained on data so that they can adapt to the various types of obstacles encountered during navigation.

Figure 3. Structure of robotic intelligence.
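
As a minimal sketch of why fusing two sensors can outperform either one alone, the snippet below combines two independent range readings of the same obstacle using inverse-variance weighting. This is a textbook technique chosen for illustration and is not taken from the works cited above; the function name `fuse_ranges` and the noise values are hypothetical, and independent Gaussian noise is assumed.

```python
def fuse_ranges(z_a, var_a, z_b, var_b):
    """Fuse two independent range estimates (metres) by inverse-variance weighting.

    Assumption for illustration: both sensors observe the same obstacle and
    their errors are independent and roughly Gaussian.
    """
    w_a = 1.0 / var_a  # a less noisy sensor gets a larger weight
    w_b = 1.0 / var_b
    fused = (w_a * z_a + w_b * z_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)  # never larger than the smaller input variance
    return fused, fused_var


# Hypothetical readings: a depth camera (noisier) and a LiDAR (more precise).
distance, variance = fuse_ranges(2.0, 0.04, 2.2, 0.01)
```

The fused variance is smaller than that of either input, which is the sense in which fusion "augments" the individual sensors under these assumptions.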

2.3. Robotic Navigation

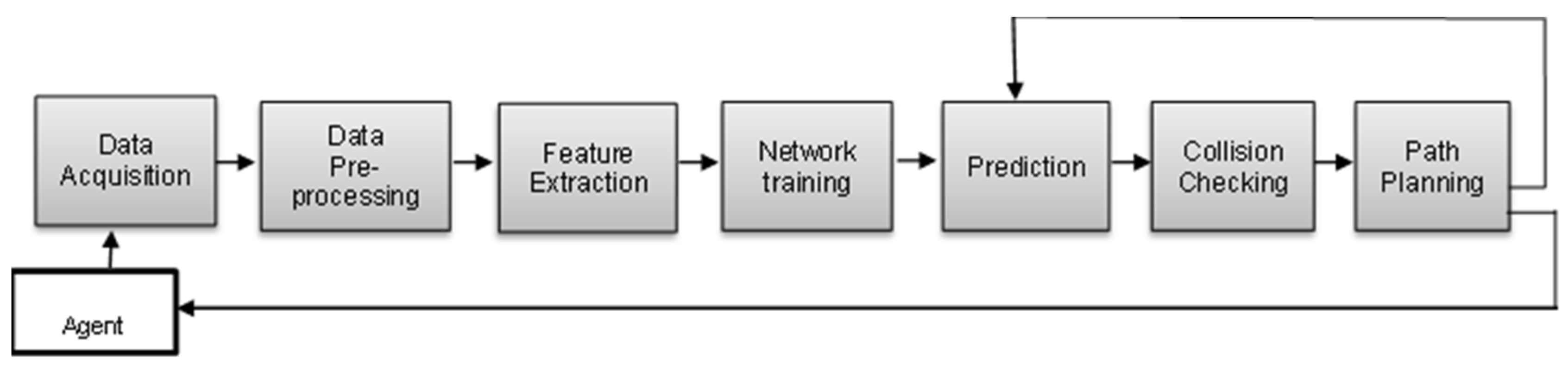

The subject of autonomous robot navigation entails mapping, localisation, obstacle detection, avoidance, and achieving an optimal path from a starting point to a predefined target location efficiently [18]. The obstacles encountered can be static or dynamic, depending on the structure of the environment. Navigation through such an environment can be challenging because it relies on sensing and on the capability to analyse vast amounts of environmental data in real time. Robot navigation problems include the need to accurately perceive, identify, and respond to the geometry of the environment, the shape of the robot, obstacle types, and obstacle positions using a suitable model. However, improper navigation processes often result in inaccuracies in perception, the development of flawed models of the environment, and the emergence of learning complexities, which significantly limit the robot's ability to achieve its navigational goal. The application of advanced computational techniques like parallel processing and deep neural network (DNN) algorithms has significantly improved the navigation experience. In a neural-network-enabled approach to obstacle avoidance and path planning, the architecture encompasses several interconnected modules, each contributing uniquely to overall system efficiency. As illustrated in Figure 4, the modules collaboratively contribute to achieving optimal path planning, adapting to changing scenarios, and minimising obstacle collisions in complex environments.

Figure 4. Learning-based path planning framework.

For many applications, various researchers have added specialist knowledge and undertaken studies to improve these modules to solve navigation problems. In most cases, the agents learn from data or through trial and error to master navigational skills and facilitate the generalisation of learned skills in similar settings in simulation environments, which is valuable for reducing training time and real-world difficulties. The virtual platform helps to manage environmental factors and task structure that can influence the efficiency, adaptability, and reusability of these models before transferring to the real-world environment. In the context of the MRO hangar environment, the robotic systems are subject to complexities and uncertainties due to the unstructured nature of the settings, variability in object types, and sensor capacity. This demands robust solutions capable of perceiving, responding and adapting to real-time changes.

2.4. Vision Sensors

To effectively perform robotics tasks, mobile robots require a thorough understanding of their environment. To achieve this, robots are equipped with sensors that enable them to perceive and gather relevant information from the surroundings. Vision-based sensors, including LiDAR, cameras, and depth cameras, have become the most used equipment for unmanned vehicle (UV) detection and navigation tasks [19]. LiDAR is extensively used in detection and tracking for autonomous mobile robots (AMRs), even though it may be more costly than some alternatives. The sensor can obtain reliable information, including basic shape, precise distance measurements, and position of the obstacle, and is more efficient in different weather and lighting conditions [20]. However, its ability to capture the texture and colour of objects for accurate obstacle detection is limited compared with cameras [21][22]. This limitation can result in challenges when attempting to accurately track fast-moving objects in real time. RGBD cameras have also shown great capabilities, including high resolution and the generation of rich and detailed environment information. Although they operate within a limited range, they are highly efficient in object position estimation using depth information [23][24]. However, their performance is highly susceptible to lighting conditions, which can be an issue in certain areas of the hangar environment. The hangar environment has a significant influence on the choice of appropriate perception sensors for operational use within the space. Obstacle detection sensors are designed to interact with the environment and generate environmental data through sensor devices. They then use algorithms based on computer vision and object recognition for obstacle detection, tracking, and avoidance in a navigation system. To complement the capabilities of the RGB camera, depth sensing was combined in [25] to provide an accurate distance between obstacles and the robot position based on operational range and resolution.
The authors in [26] employed depth camera information to estimate robots’ poses for an efficient navigation experience. Depth cameras like Microsoft Kinect, Intel RealSense, and OAK-D offer valuable 3D spatial data that can enhance robots’ understanding of their environment with precision. The integration generally facilitates obstacle sensing and state estimation for robust obstacle avoidance and path planning. As with RGB cameras, variable lighting conditions and environmental factors can affect the accuracy of the perceived obstacles and positions. This perception constraint is part of the obstacle avoidance and path planning challenges in complex settings.
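
To illustrate how depth information can be turned into an obstacle distance, the sketch below back-projects a single depth pixel into a 3D point using the standard pinhole camera model. It is not the method of [25] or [26]; the intrinsics (`fx`, `fy`, `cx`, `cy`) and pixel values are hypothetical, and lens distortion is ignored.

```python
import math


def pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres into the camera frame.

    Pinhole model: x points right, y points down, z points forward.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


def obstacle_range(point):
    """Euclidean distance from the camera origin to a 3D point."""
    return math.sqrt(sum(c * c for c in point))


# Hypothetical intrinsics for a 640x480 depth image.
fx = fy = 600.0
cx, cy = 320.0, 240.0
point = pixel_to_point(620, 240, 2.0, fx, fy, cx, cy)
distance = obstacle_range(point)
```

In practice the intrinsics would come from the camera's calibration, and the point would then be transformed into the robot's base frame before being used for avoidance.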

Recent research has made significant contributions to intelligent obstacle detection and avoidance solutions based on sensor usage and algorithm improvement. The work in [27] presents different configurations and capabilities of vision sensors relevant across diverse domains. Manzoor et al. [28] analysed vision sensor modalities as key factors in understanding the environmental features used in deep learning models for real-world mobile robot obstacle detection and navigation. Xie et al. [29] improved obstacle detection and avoidance techniques through the utilisation of 3D LiDAR. Their study highlights the proficiency of LiDAR in detecting basic shapes and identifying obstacles at extended ranges. The integration of sensor data for more comprehensive environmental perception in learning-based models has been a notable development in the field of robotic navigation. This translates raw sensor data into usable information that enhances the system’s capability, from environmental perception to more efficient obstacle detection and effective decision making for obstacle avoidance and path planning.

2.5. Obstacle Detection

Obstacle perception and identification for robot navigation involves locating potential obstacles that could influence a robot’s ability to navigate in its surroundings. The mobile robot utilises its sensory systems, which may include LiDARs or cameras, to perceive and understand its environment, enabling it to plan a safe and collision-free path to its intended destination. Deep learning has gained wide adoption in research and industry, leading to the development of numerous navigation models that leverage different object detection models and sensor inputs for robot obstacle detection and avoidance systems. Most recent object detection methods are based on convolutional neural networks (CNNs), such as YOLO [30], Faster R-CNN [31], and the single-shot multibox detector (SSD) [32]. Faster R-CNN is renowned for its high detection accuracy and employs a two-stage deep learning framework. This two-stage structure comes at a cost in computational efficiency and speed, which are crucial factors for real-time applications [33]. The YOLO model, on the other hand, is a one-stage object detection approach that is known for its speed and real-time performance. This makes it well suited for autonomous mobile robot navigation, in which prompt decision making is important for obstacle avoidance and motion control.
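
Detectors of this kind typically output many overlapping candidate boxes that must be reduced to one box per obstacle. The sketch below shows intersection-over-union (IoU) and greedy non-maximum suppression, a common post-processing step; it is a generic illustration and not the exact procedure of any detector cited above. The box format `(x1, y1, x2, y2)` and the threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the boxes to keep."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two overlapping boxes on one obstacle, one elsewhere.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```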

2.6. Obstacle Avoidance

Ensuring the safety of the working environment is a primary priority when deploying mobile robots for navigation tasks in complex environments. The safety solution should be able to perceive the environment and take proactive actions to avoid obstacle collisions using reliable sensors [11]. The mobile robot should have the capability to identify a safe and efficient path to the target destination within its operational environment, which may contain static and dynamic obstacles. Different learning-based obstacle avoidance algorithms have been developed to enable robots to effectively and precisely complete intended tasks. Some are integrated with local and global planners to efficiently adjust the direction and speed of robot motion in response to detected obstacles within static and dynamic environments and to generate an improved path to the target location [34]. According to recent review studies, learning-based models in robotic navigation have demonstrated notable success by learning and generating obstacle data from environment sensors. These models extract obstacle features from images and video streams, allowing them to classify and locate different obstacles within the given environment. The integration of these models into robot operating system (ROS)-based planners has shown improved performance in robotic navigation. Planning algorithms like the dynamic window approach (DWA) [35] perform well in dynamic and complex environments and have been widely combined with learning algorithms for more capable, efficient, and intelligent path planning [36].
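
The following is a heavily simplified sketch of one DWA-style planning step, included only to make the idea concrete. It samples velocity commands reachable within one control period, rolls each forward with a unicycle model, discards colliding trajectories, and scores the rest. The cost weights, limits, and function names are hypothetical choices for illustration, not values from [35].

```python
import math


def simulate(pose, v, w, dt=0.1, horizon=2.0):
    """Roll a unicycle model forward; return the visited (x, y) positions."""
    x, y, theta = pose
    positions = []
    for _ in range(int(round(horizon / dt))):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
        positions.append((x, y))
    return positions


def dwa_step(pose, vel, goal, obstacles, v_max=1.0, w_max=1.5,
             a_max=0.5, alpha_max=2.0, dt=0.1, robot_radius=0.3, samples=11):
    """Pick (v, w) from the dynamic window; (0, 0) if every sample collides."""
    v0, w0 = vel
    # Dynamic window: velocities reachable in one period under acceleration limits.
    v_lo, v_hi = max(0.0, v0 - a_max * dt), min(v_max, v0 + a_max * dt)
    w_lo, w_hi = max(-w_max, w0 - alpha_max * dt), min(w_max, w0 + alpha_max * dt)
    best, best_cost = (0.0, 0.0), math.inf
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            traj = simulate(pose, v, w, dt)
            clearance = min((math.hypot(px - ox, py - oy)
                             for px, py in traj for ox, oy in obstacles),
                            default=math.inf)
            if clearance <= robot_radius:
                continue  # trajectory would hit an obstacle
            end_x, end_y = traj[-1]
            goal_cost = math.hypot(goal[0] - end_x, goal[1] - end_y)
            # Illustrative weights: approach the goal, keep clearance, prefer speed.
            cost = goal_cost + 0.2 / clearance - 0.1 * v
            if cost < best_cost:
                best, best_cost = (v, w), cost
    return best


command = dwa_step((0.0, 0.0, 0.0), (0.5, 0.0), (5.0, 0.0), [(1.5, 0.3)])
```

Returning a stop command when all samples collide is a simplification; a real planner would also respect deceleration limits and typically hand over to a recovery behaviour.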

2.7. Path Planning

Autonomous learning in path planning has made significant progress in recent times, with technologies such as CNNs and deep reinforcement learning being increasingly adopted. Path planning entails a sequence of configurations, based on robot types and environment models, that enables robots to navigate from a starting point to a target location [37]. The approach can be either map-based, where the environment is mapped to represent its geometric information and the connectivity between different nodes, or mapless. The map-based method enables robotic solutions to use the robot’s dynamics and the environment representation to compute an optimal global path to the goal [38]. Local path planning relies on real-time sensory information to navigate safely in the presence of static and dynamic obstacles. The mapless model requires no predefined map of the environment but instead capitalises on frameworks like deep learning models to learn and enhance optimal navigation strategies. Path planning in an MRO hangar can be challenging, as the environment is often changing and complex, with a high density of obstacles. To ensure a robust obstacle-free path, ongoing research is focusing on path tracking [39], advanced deep learning [40], and hybrid approaches for more autonomous and intelligent robot path planning to target locations [36].
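
As a concrete instance of map-based global planning, the sketch below runs A* search on a small occupancy grid. A* is used here only as a familiar example and is not claimed to be the planner used in the cited works; the grid, the 4-connected motion model, and the unit step cost are assumptions.

```python
import heapq
import math


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid (0 = free, 1 = occupied), or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > g_cost[current]:
            continue  # stale heap entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (current[0] + dr, current[1] + dc)
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols and grid[nxt[0]][nxt[1]] == 0:
                new_cost = cost + 1
                if new_cost < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


# Hypothetical map: a wall with a single gap on the right-hand side.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
```

A mapless approach would replace this explicit search with a learned policy that maps sensor observations directly to motion commands.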

2.8. Path Tracking

Safe and efficient robot navigation requires a path tracking system that guides mobile robots along the planned trajectory to a target location, managing and minimising deviation from the planned route. This involves continuous monitoring and updating of the planned route based on sensor feedback and the changing environment. The work in [39] reviewed path tracking algorithms for high and low speeds. In high-speed applications, the reaction time available for robots to perceive, process, and respond to obstacles is significantly reduced, making it harder to execute quick and sharp manoeuvres without compromising stability or safety. In low-speed use cases, such as the application of robotic systems in MRO hangars, precise path tracking is required in complex settings. The low-speed movement of these robots can lead to path tracking errors, especially when dealing with sharp turns and frequent changes in direction. Accurate modelling of low-speed dynamics is essential to adjust the robot’s behaviour for optimal path tracking. The combination of adaptive control systems, sensor technologies [41], and advanced deep learning techniques has been shown to enhance robust real-time path tracking capability for robot navigation in such scenarios. According to the study in [42], the most widely applied path tracking algorithms include pure pursuit (PP) [43], model predictive control (MPC) [44][45], and learning-based models that generate control laws by leveraging training data and experience from a variety of scenarios [46].
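
To make the pure pursuit idea concrete, the sketch below computes the curvature of the circular arc that takes the robot from its current pose through a lookahead point on the path. It uses the standard geometric relation (curvature equals twice the lateral offset divided by the squared lookahead distance) and is not the improved variant of [43]; the function name and the example values are hypothetical.

```python
import math


def pure_pursuit_curvature(pose, lookahead_point):
    """Curvature (1/m) of the arc from pose (x, y, theta) through the target."""
    x, y, theta = pose
    dx = lookahead_point[0] - x
    dy = lookahead_point[1] - y
    # Lateral offset of the target in the robot frame (positive = left).
    y_local = -math.sin(theta) * dx + math.cos(theta) * dy
    dist_sq = dx * dx + dy * dy
    if dist_sq == 0.0:
        return 0.0  # already at the target; no steering needed
    return 2.0 * y_local / dist_sq


# Hypothetical use: turn the curvature into an angular velocity command.
linear_speed = 0.4  # m/s, a low speed as might be used inside a hangar
curvature = pure_pursuit_curvature((0.0, 0.0, 0.0), (1.0, 1.0))
angular_speed = linear_speed * curvature
```

A shorter lookahead distance tracks the path more tightly but can oscillate, while a longer one is smoother but cuts corners, which is one reason lookahead tuning matters for sharp turns at low speed.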

References

- Sprong, J.P.; Jiang, X.; Polinder, H. Deployment of Prognostics to Optimize Aircraft Maintenance—A Literature Review. J. Int. Bus. Res. Mark. 2020, 5, 26–37.

- Dhoot, M.K.; Fan, I.-S.; Skaf, Z. Review of Robotic Systems for Aircraft Inspection. SSRN Electron. J. 2020.

- Lakrouf, M.; Larnier, S.; Devy, M.; Achour, N. Moving obstacles detection and camera pointing for mobile robot applications. In ICMRE 2017, Proceedings of the 3rd International Conference on Mechatronics and Robotics Engineering, Paris, France, 8–12 February 2017; ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2017; pp. 57–62.

- Mugunthan, N.; Balaji, S.B.; Harini, C.; Naresh, V.H.; Prasannaa Venkatesh, V. Comparison Review on LiDAR vs. Camera in Autonomous Vehicle. Int. Res. J. Eng. Technol. 2020, 7, 4242–4246.

- Papa, U.; Ponte, S. Preliminary Design of an Unmanned Aircraft System for Aircraft General Visual Inspection. Electronics 2018, 7, 435.

- Jovančević, I.; Orteu, J.-J.; Sentenac, T.; Jovančević, R.G.I.; Gilblas, R.; Jovančevi, I. Automated visual inspection of an airplane exterior. In Proceedings of the Twelfth International Conference on Quality Control by Artificial Vision, Le Creusot, France, 3–5 June 2015; Volume 9534, pp. 247–255.

- Kurzer, K. Path Planning in Unstructured Environments: A Real-time Hybrid A* Implementation for Fast and Deterministic Path Generation for the KTH Research Concept Vehicle Situation Assessment and Semantic Maneuver Planning under Consideration of Uncertainties for Cooperative Vehicles Project. Master’s Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, 2016.

- Sevastopoulos, C.; Konstantopoulos, S. A Survey of Traversability Estimation For Mobile Robots. IEEE Access 2022, 10, 96331–96347.

- Otte, M.W. A Survey of Machine Learning Approaches to Robotic Path-Planning; University of Colorado at Boulder: Boulder, CO, USA, 2009.

- Valenti, F.; Giaquinto, D.; Musto, L.; Zinelli, A.; Bertozzi, M.; Broggi, A. Enabling Computer Vision-Based Autonomous Navigation for Unmanned Aerial Vehicles in Cluttered GPS-Denied Environments. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Proceedings, ITSC, Maui, HI, USA, 4–7 November 2018; Volume 2018-Novem, pp. 3886–3891.

- Rill, R.A.; Faragó, K.B. Collision Avoidance Using Deep Learning-Based Monocular Vision. SN Comput. Sci. 2021, 2, 375.

- Zhou, C.; Huang, B.; Fränti, P. A review of motion planning algorithms for intelligent robots. J. Intell. Manuf. 2022, 33, 387–424.

- Sezer, V. An Optimized Path Tracking Approach Considering Obstacle Avoidance and Comfort. J. Intell. Robot. Syst. Theory Appl. 2022, 105, 21.

- Kuutti, S.; Bowden, R.; Jin, Y.; Barber, P.; Fallah, S. A Survey of Deep Learning Applications to Autonomous Vehicle Control. arXiv 2019, arXiv:1912.10773.

- Song, X.; Fang, H.; Jiao, X.; Wang, Y. Autonomous mobile robot navigation using machine learning. In Proceedings of the 2012 IEEE 6th International Conference on Information and Automation for Sustainability, Beijing, China, 27–29 September 2012; pp. 135–140.

- Vermesan, O.; Bahr, R.; Ottella, M.; Serrano, M.; Karlsen, T.; Wahlstrøm, T.; Sand, H.E.; Ashwathnarayan, M.; Gamba, M.T. Internet of Robotic Things Intelligent Connectivity and Platforms. Front. Robot. AI 2020, 7, 104.

- Masita, K.L.; Hasan, A.N.; Shongwe, T. Deep Learning in Object Detection: A Review. In Proceedings of the International Conference on Artificial Intelligence, Big Data, Computing and Data Communication Systems (icABCD), Durban, South Africa, 6–7 August 2020; pp. 1–11.

- Li, T.; Xu, W.; Wang, W.; Zhang, X. Obstacle detection in a field environment based on a convolutional neural network security. Enterp. Inf. Syst. 2022, 16, 472–493.

- Nowakowski, M.; Kurylo, J. Usability of Perception Sensors to Determine the Obstacles of Unmanned Ground Vehicles Operating in Off-Road Environments. Appl. Sci. 2023, 13, 4892.

- Ennajar, A.; Khouja, N.; Boutteau, R.; Tlili, F. Deep Multi-modal Object Detection for Autonomous Driving. In Proceedings of the 2021 18th International Multi-Conference on Systems, Signals & Devices (SSD), Monastir, Tunisia, 22–25 March 2021; p. 10.

- Dang, X.; Rong, Z.; Liang, X. Sensor Fusion-Based Approach to Eliminating Moving Objects for SLAM in Dynamic Environments. Sensors 2021, 21, 230.

- Feng, Z.; Jing, L.; Yin, P.; Tian, Y.; Li, B. Advancing Self-supervised Monocular Depth Learning with Sparse LiDAR. In Proceedings of the 5th Conference on Robot Learning, London, UK, 8–11 November 2021.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet++ for Object Detection. arXiv 2022, arXiv:2204.08394.

- Huang, P.; Huang, P.; Wang, Z.; Wu, X.; Liu, J.; Zhu, L. Deep-Learning-Based Trunk Perception with Depth Estimation and DWA for Robust Navigation of Robotics in Orchards. Agronomy 2023, 13, 1084.

- Chen, C.Y.; Chiang, S.Y.; Wu, C.T. Path planning and obstacle avoidance for omnidirectional mobile robot based on Kinect depth sensor. Int. J. Embed. Syst. 2016, 8, 343.

- Maier, D.; Hornung, A.; Bennewitz, M. Real-Time Navigation in 3D Environments Based on Depth Camera Data. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Humanoids), Osaka, Japan, 29 November–1 December 2012; pp. 692–697.

- Reinoso, O.; Payá, L. Special Issue on Visual Sensors. Sensors 2020, 20, 910.

- Manzoor, S.; Joo, S.; Kim, E.; Bae, S.; In, G.; Pyo, J.; Kuc, T. 3D Recognition Based on Sensor Modalities for Robotic Systems: A Survey. Sensors 2021, 21, 7120.

- Xie, D.; Xu, Y.; Wang, R. Obstacle detection and tracking method for autonomous vehicle based on three-dimensional LiDAR. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419831587.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. Lect. Notes Comput. Sci. 2016, 9905, 21–37.

- Mahendrakar, T.; Ekblad, A.; Fischer, N.; White, R.; Wilde, M.; Kish, B.; Silver, I. Performance Study of YOLOv5 and Faster R-CNN for Autonomous Navigation around Non-Cooperative Targets. In Proceedings of the IEEE Aerospace Conference Proceedings, Big Sky, MT, USA, 5–12 March 2022; pp. 1–12.

- Nguyen, A.-T.; Vu, C.-T. Obstacle Avoidance for Autonomous Mobile Robots Based on Mapping Method. In Proceedings of the International Conference on Advanced Mechanical Engineering, Automation, and Sustainable Development, Ha Long, Vietnam, 4–7 November 2021.

- Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

- Pausti, N.M.; Jkuat, R.N.; Cdta, N.O.; Adika, C.O. Multi-object detection for autonomous motion planning based on Convolutional Neural Networks. Int. J. Eng. Res. Technol. 2019, 12, 1881–1889.

- Sabiha, A.D.; Kamel, M.A.; Said, E.; Hussein, W.M. Real-time path planning for autonomous vehicle based on teaching–learning-based optimization. Intell. Serv. Robot. 2022, 15, 381–398.

- Sánchez-Ibáñez, J.R.; Pérez-Del-pulgar, C.J.; García-Cerezo, A. Path Planning for Autonomous Mobile Robots: A Review. Sensors 2021, 21, 7898.

- Chen, Y.; Zheng, Y. A Review of Autonomous Vehicle Path Tracking Algorithm Research. Authorea 2022.

- Quiñones-Ramírez, M.; Ríos-Martínez, J.; Uc-Cetina, V. Robot path planning using deep reinforcement learning. arXiv 2023, arXiv:2302.09120.

- Geng, K.; Liu, S. Robust Path Tracking Control for Autonomous Vehicle Based on a Novel Fault Tolerant Adaptive Model Predictive Control Algorithm. Appl. Sci. 2020, 10, 6249.

- Rokonuzzaman, M.; Mohajer, N.; Nahavandi, S.; Mohamed, S. Review and performance evaluation of path tracking controllers of autonomous vehicles. IET Intell. Transp. Syst. 2021, 15, 646–670.

- Wang, L.; Chen, Z.L.; Zhu, W. An improved pure pursuit path tracking control method based on heading error rate. Ind. Robot. 2022, 49, 973–980.

- Wang, M.; Chen, J.; Yang, H.; Wu, X.; Ye, L. Path Tracking Method Based on Model Predictive Control and Genetic Algorithm for Autonomous Vehicle. Math. Probl. Eng. 2022, 2022, 4661401.

- Huang, Z.; Li, H.; Li, W.; Liu, J.; Huang, C.; Yang, Z.; Fang, W. A New Trajectory Tracking Algorithm for Autonomous Vehicles Based on Model Predictive Control. Sensors 2021, 21, 7165.

- Martinsen, A.B.; Lekkas, A.M.; Gros, S. Reinforcement learning-based NMPC for tracking control of ASVs: Theory and experiments. Control. Eng. Pract. 2022, 120, 105024.
