
Chirayil Nandakumar, S.; Mitchell, D.; Erden, M.S.; Flynn, D.; Lim, T. Anomaly Detection in Autonomous Robotic Missions. Encyclopedia. Available online: https://encyclopedia.pub/entry/55506 (accessed on 25 December 2025).


Chirayil Nandakumar, S., Mitchell, D., Erden, M.S., Flynn, D., & Lim, T. (2024, February 27). Anomaly Detection in Autonomous Robotic Missions. In Encyclopedia. https://encyclopedia.pub/entry/55506


An anomaly in an autonomous robotic mission (ARM) is a deviation from the expected behaviour, performance, or state of the robotic system and its environment that may affect the mission’s objectives, safety, or efficiency. Such an anomaly can be caused either by system faults or by a change in the environmental dynamics of interaction. A nuanced understanding of anomaly categories supports a more strategic approach, so that detection methods can be matched to the specific nature of the anomaly.

anomaly

autonomous robots

autonomous missions

1. Introduction

Autonomous robotic missions (ARMs) have become increasingly important in various fields, including manufacturing [1], logistics [2], search and rescue operations [3], and even space exploration [4]. As the complexity of these missions grows, ensuring the reliability and safety of the robots becomes paramount [5]. One critical aspect of ensuring the safety and reliability of ARMs is the timely and accurate detection of anomalies [6], which can arise from various sources such as hardware faults [7], software faults [8], environmental change [9], or unexpected interactions with other systems [10][11][12][13].

2. What Are Anomalies and How Do They Differ from Faults?

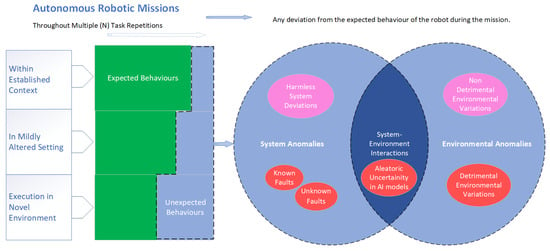

The relationship between anomalies and faults is complex, and researchers hold differing views on it [14][15]. Figure 1 clarifies the two concepts and their relationship in the context of ARMs. When the ARM is repeated N times, the green section on the left of the bar represents the number of runs in which the hypothetical autonomous robot completes the mission with the expected behaviours. The blue section on the right represents the number of runs in which unexpected behaviour was observed during execution. A Venn diagram is used to differentiate these unexpected behaviours and their interrelations. As the Venn diagram shows, not all anomalies are as harmful as commonly perceived [16]. If an observed anomaly is diagnosed as harmful or disruptive to mission continuity, it may be due to either a system anomaly or an environmental anomaly. System anomalies can be caused by faults, which can be classified as known or unknown. Known faults are system errors whose cause is known and which mostly have a known solution, while unknown or unanticipated faults are system errors that have not yet been experienced or solved [14][15][17].

Figure 1. The relationship of anomalies and faults in the context of autonomous robotic missions.

Khalastchi and Kalech [14] suggest that the commonly used term “unknown fault” in fault detection indicates that there is no clear term for such faults. Unknown faults are usually interpreted as “anomalies” in the context of ARMs. However, Graabæk et al. [18] suggest that anomalies can be symptoms of faults, implying that both known and unknown faults can cause an anomaly. Moreover, researchers propose that the term anomaly has a meaning beyond undesired system behaviour caused by faults: it also encapsulates environmental impacts that are independent of the system and hence cannot be called faults. Furthermore, as indicated in Figure 1, anomalies in autonomous missions can also result from the aleatoric uncertainty of the AI models used for perception and control tasks [19], which becomes predominant when the robot interacts with specific and usually unforeseen environmental conditions and manifests as unexpected behaviour, hence an anomaly.

The two coloured bars in Figure 1 highlight that the probability of the robot executing the same behaviours depends on its environment. Usually, the training of AI systems happens in a specific controlled environment. Thus, the missions will be highly repeatable in those environments; however, in new environments, there is a higher possibility that the robot will perform unexpected or wrong executions due to the probabilistic nature of certain AI models such as deep reinforcement learning and Bayesian Models [20][21][22]. Moreover, the irreducible error or aleatoric uncertainty [23], intrinsic to any AI model, is one of the reasons for anomalies in autonomous robots and is exacerbated when the robot is in a new environment [19].

The consequences of these anomalies are not always harmful to a mission, as illustrated in Figure 1. An anomaly can sometimes be inconsequential to the mission continuity. For example, a robot that uses deep reinforcement learning for motion planning can move from a start position to an end goal via different trajectories. In the presence of an environmental anomaly, and due to its interaction with the probabilistic nature of AI models, it may randomly plan a totally new path which was not experienced before; yet, the new trajectory may result in achieving the goal more or less in the same duration, hence overall it might not result in any failure or underperformance. Such deviations are considered a harmless or inconsequential anomaly.

In summary, there are different types of anomalies and different reasons for them in autonomous missions. How do we know what an anomalous behaviour is? Are there any fundamental features that define anomalous behaviours in ARMs? This is explored in the next section in reference to the reviewed literature.

3. Classification of Anomalies in ARMs

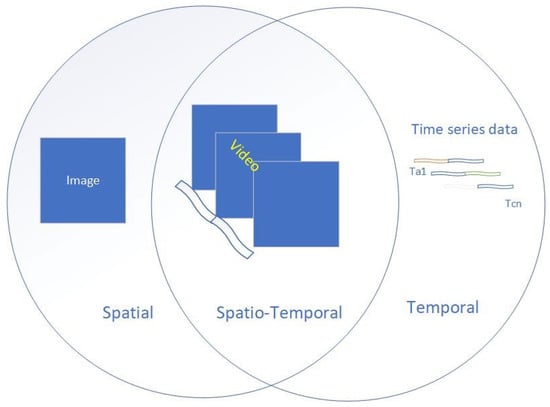

Anomalies in ARMs can manifest in various forms, with different levels of complexity and implications for the mission’s success and safety. To better understand and address these anomalies, it is essential to classify them according to their fundamental characteristics. In analysing the strategy, methods, and models within the reviewed articles, three distinct frameworks for anomalies in ARMs were identified: spatial, temporal, and spatiotemporal, as shown in Figure 2.

Figure 2. Spatial, temporal, and their correlations. For example, an image of a car with its background is purely spatial, and similarly, the time-series data showing variation in the car’s horsepower are purely temporal. However, researchers demonstrate the spatiotemporal correlation when the images are stacked to create a sequence of motions or when horsepower data links with the appropriate image in the sequence.

The spatial, temporal, and spatiotemporal classification refers primarily to the nature of the observation that defines the anomaly and its classification and, hence, the data used for its detection. For example, spatial anomalies may include hardware faults affecting the physical structure of a robot or environmental obstacles that hinder a robot’s movement. Temporal anomalies, on the other hand, may include unexpected changes in a robot’s speed, acceleration, or energy consumed over time. Spatiotemporal anomalies, which involve both spatial and temporal aspects, can be more complex and challenging to detect, as they may involve interactions between multiple robots or between a robot and its environment over time.

The distinction between these types of anomalies has implications for the methods used to detect them and the challenges faced in ARMs. For instance, spatial anomalies may be more effectively detected using image-based or distance-based algorithms, while temporal anomalies may require time-series analysis or signal-processing techniques. Spatiotemporal anomalies, due to their complexity, may require more advanced methods, such as machine learning algorithms that can capture the intricate relationships between spatial and temporal features.

Table 1 presents a summary of the key characteristics of each category of anomalies, along with representative examples of anomalies from the literature. It lists articles selected based on their relevance to the specific anomaly types, their methodological rigour, and their contributions to the understanding and detection of anomalies in ARMs.

Table 1. Features of anomalies observed in ARMs.

| Title | Year | “Anomaly” Determinants | Data Used to Capture “Anomalies” | Anomaly Class |
|---|---|---|---|---|
| Compressive change retrieval for moving object detection [24] | 2016 | The difference in the given image and the previous similar images retrieved from a search. | GPS data, two cameras, and LiDAR data | Spatial |
| Anomaly detection and cognisant path planning for surveillance operations using aerial robots [25] | 2019 | Car, blanket | Camera of a DJI Matrice 100 | Spatial |
| Safe robot navigation [26] | 2020 | Variation in environmental conditions (Sunlight, Fire, Rain, Wet) | RGB-D, Gravity Aligned Depth, Gravity Aligned Surface Normals | Spatial |
| An anomaly detection system via moving surveillance robots with human collaboration [27] | 2021 | Variation of the position of objects in the reference images to that of the observed image. | RGB data from a camera attached to the robot | Spatial |
| An anomaly detection approach to monitor the structure-based navigation in agricultural robotics [28] | 2021 | Low light, shadows, leaf-covering sensors, and unrealistic basic assumptions in the tracking algorithms | 16-channel 3D LiDAR sensor | Spatial |
| Curiosity MSL [29] | 2018 | Variation in the individual telemetry data. | Telemetry data for a specific time frame (5 days) nearby an anomaly | Temporal |
| Robot health estimation through unsupervised anomaly detection using gaussian mixture models [30] | 2020 | Robot immobilised or unstable due to external influences such as extra payload | Current from the motor | Temporal |
| Anomaly detection in industrial robots [31] | 2021 | Overload, parts breaking, environmental effects, maloperation, program exceptions, transmission errors | Power factor. Loop current. Reactive power. Active power. Current, Voltage. Incoming frequency. | Temporal |
| Anomaly detection in cobots [32] | 2021 | Increase in temperature due to load and speed of the cobot during human-robot interaction. | Joint values, Speed, Current, Voltage Power | Temporal |
| Robot-assisted feeding [33] | 2017 | Touch by a user, aggressive eating, utensil collision by a user, sound from a user, face occlusion, utensil miss by a user | RGB-D, Joint torque, Sound energy | Spatio-temporal |
| Human-care rounds robot with contactless breathing measurement [34] | 2019 | A breathing Human on the floor | RGB-D and Thermal Point Cloud (TPC) | Spatio-temporal |
| Anomaly detection using IoT sensor-assisted ConvLSTM models for connected vehicles [35] | 2021 | Unusual Variations in autonomous vehicle navigation and powertrain data | Temperature sensors, pressure sensors, location and orientation sensors | Spatio-temporal |
| Traffic accident detection via self-supervised consistency learning in driving scenarios [36] | 2022 | Inconsistent movements of humans and other vehicles | RGB | Spatio-temporal |
| Proactive anomaly detection for robot navigation with multi-sensor fusion [37] | 2022 | Weeds and low-hanging leaves block the sensory signals. | RGB, Lidar point cloud, and the planned path. | Spatio-temporal |

4. Methods of Anomaly Detection in ARMs

This section provides an overview of the methods and techniques employed for anomaly detection in ARMs, considering the diverse types of anomalies outlined in the previous section. The discussion on anomaly detection is centred around model-based techniques and data-driven methods, emphasising the latter due to its increased adoption and scalability.

4.1. Model-Based Techniques

To detect anomalies, model-based techniques rely on a priori knowledge of the system’s underlying kinematics, dynamics, or other physical properties. These methods can be useful when the system’s behaviour can be accurately modelled using theoretical principles and the amount of available data is limited.

4.1.1. Kinematic and Dynamic Modeling

Anomalies in the kinematic or dynamic behaviour of a robot can be detected by comparing the observed motion with the expected motion based on the system’s kinematic or dynamic models [38]. Deviations from the expected behaviour can indicate the presence of an anomaly [39].
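The comparison of observed and expected motion can be illustrated with a minimal sketch. It assumes a planar two-link arm with unit link lengths, an externally measured end-effector position, and a fixed residual threshold of 0.05; none of these values come from the cited works, and all function names are hypothetical.

```python
import math

def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Expected end-effector (x, y) of a planar two-link arm (assumed link lengths)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def kinematic_residual(joint_angles, observed_xy):
    """Distance between the position predicted by the model and the observed one."""
    expected_x, expected_y = forward_kinematics(*joint_angles)
    return math.hypot(observed_xy[0] - expected_x, observed_xy[1] - expected_y)

def is_anomalous(joint_angles, observed_xy, threshold=0.05):
    """Flag an anomaly when the residual exceeds an assumed threshold."""
    return kinematic_residual(joint_angles, observed_xy) > threshold

angles = (math.pi / 2, 0.0)  # arm pointing straight up, expected tip near (0, 2)
print(is_anomalous(angles, (0.01, 1.99)))  # small measurement noise
print(is_anomalous(angles, (0.40, 1.80)))  # large deviation from the model
```

In practice the threshold would have to be tuned against sensor noise and model error, which is one reason such residual checks depend on an accurate model.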

4.1.2. Model Predictive Control

Model Predictive Control (MPC) is a control strategy that uses a system model to predict future behaviour and determine the optimal control inputs. Anomalies can be identified and addressed in real-time by comparing the predicted behaviour with the actual system response [40]. However, this method needs an accurate system model, which is hard to formulate, especially when considering ARMs. Methods such as learning local linear models online have been introduced to reduce these limitations of MPC [41].
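The prediction-versus-response comparison can be sketched as follows. The sketch covers only the monitoring side and omits the optimisation step of MPC; the scalar linear model, its coefficients `a` and `b`, and the tolerance are hypothetical values chosen for illustration.

```python
def predict_next(x, u, a=0.9, b=0.5):
    """One-step prediction from an assumed scalar model x[k+1] = a*x[k] + b*u[k]."""
    return a * x + b * u

def flag_anomalies(states, inputs, tolerance=0.2):
    """Return the time indices at which the measured state departs from the prediction."""
    flagged = []
    for k in range(len(inputs)):
        predicted = predict_next(states[k], inputs[k])
        if abs(states[k + 1] - predicted) > tolerance:
            flagged.append(k + 1)
    return flagged

inputs = [1.0, 1.0, 1.0, 1.0]
states = [0.0, 0.5, 0.95, 2.0, 2.3]  # the jump to 2.0 is not explained by the model
print(flag_anomalies(states, inputs))
```

Only the step at which the response departs from the model is flagged; once the measured state is fed back into the predictor, the following step is consistent again.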

4.1.3. Observer-Based Methods

Observer-based methods, such as Kalman or particle filters, have been employed to estimate a system’s internal state based on the available measurements and a system model. By comparing the estimated state with the actual state, anomalies can be detected and mitigated [42]. Similarly, hidden Markov models (HMMs) are widely used in the anomaly detection of autonomous robotic missions. Here, HMMs are used to model the normal state of the robot or different phases of the task. Once the HMM is trained, it can be used to analyze new sequences of sensor data. A low likelihood score indicates that the observed sequence is unlikely under normal operating conditions and is considered an anomaly [43]. Further, sliding mode observers have been employed for fault identification in robotic vision systems, underscoring the utility of precise, model-informed diagnostics in safeguarding the fidelity and operational efficacy of autonomous robotic missions [44].
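The HMM likelihood check can be sketched with the scaled forward algorithm. The two-state model below, its probabilities, the three discretised observation symbols, and the per-step threshold of -1.5 are all hypothetical; a real system would learn the parameters from data recorded during normal operation.

```python
import math

def sequence_log_likelihood(observations, start, transition, emission):
    """Scaled forward algorithm: log P(observations | HMM)."""
    n_states = len(start)
    alpha = [start[s] * emission[s][observations[0]] for s in range(n_states)]
    log_likelihood = 0.0
    for t in range(len(observations)):
        if t > 0:
            # Propagate the state belief one step, then weight by the emission.
            alpha = [
                sum(alpha[p] * transition[p][s] for p in range(n_states))
                * emission[s][observations[t]]
                for s in range(n_states)
            ]
        scale = sum(alpha)
        log_likelihood += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
    return log_likelihood

def is_anomalous(observations, model, per_step_threshold=-1.5):
    """Flag sequences whose average log-likelihood per step is below the threshold."""
    return sequence_log_likelihood(observations, *model) / len(observations) < per_step_threshold

# Hypothetical model: states (cruising, turning); symbols 0/1/2 = low/medium/high current.
model = (
    [0.8, 0.2],                              # start probabilities
    [[0.9, 0.1], [0.3, 0.7]],                # transition matrix
    [[0.7, 0.25, 0.05], [0.2, 0.6, 0.2]],    # emission matrix
)
normal_run = [0, 0, 1, 0, 0, 1]
odd_run = [2, 2, 2, 2, 2, 2]
print(is_anomalous(normal_run, model), is_anomalous(odd_run, model))
```

Normalising by sequence length keeps the threshold comparable across sequences of different duration, which is a design choice of this sketch and not something prescribed by the source.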

The above methods heavily rely on the accurate model of either the system process or system behaviour, which is usually not available and tedious to develop due to the complexity of the system. For example, with a walking autonomous robot equipped with cameras, these approaches would require an extensive model of the behaviour of all the actuators of the robot in almost all possible states and all relevant visual information on the environmental conditions. Therefore, these methods have the limitation of not being scalable to be applied on more complex systems and to detect more complex phenomena [45].

Emerging hybrid techniques in ARMs, such as Adaptive Neural Tracking Control [46] and Generative-Model-Based Autonomous Intelligent Unmanned Systems [47], exemplify the cutting-edge integration of model-based frameworks with adaptive, data-driven technologies. While the former leverages neural networks for enhancing anomaly detection and system performance, the latter employs generative models to boost system adaptability and intelligence in dynamic environments. These approaches underscore the hybrid paradigm’s capability to transcend traditional boundaries, offering sophisticated solutions for complex challenges in autonomous system development.

4.2. Data-Driven Techniques

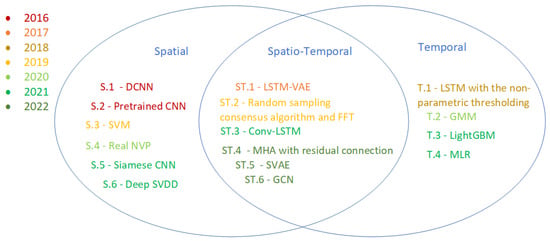

Data-driven techniques have been extensively used for anomaly detection in ARMs due to their minimal reliance on prior system knowledge and adaptability to data variations. In this discussion, the data-driven anomaly detection techniques are categorised according to their focus on spatial, temporal and spatiotemporal features. The review has indicated an increased utilisation of data-driven methods to detect anomalies in the recent decade. Figure 3 provides examples of various algorithms presently applied to detect spatial, temporal, and spatiotemporal anomalies in autonomous missions and with the year of the initial use of these algorithms.

The breakthrough in spatial anomaly detection came with the progress and adoption of convolutional neural networks (CNNs) [48] and their variants for object recognition in images. Spatial anomaly detection research mainly focuses on comparing a normal image with a new image and detecting any new pattern or object relative to the previous one. Various algorithms are used for spatial anomaly detection: deep convolutional neural networks (DCNNs) [49], which are convolutional neural networks with multiple hidden layers; LibSVM, a library for support vector machine algorithms; and autoencoders, which encode the data (in this case, an image) into a latent space and detect variations in new images using the reconstruction error. More recently, SiamNet [50] and Deep Support Vector Data Description (SVDD) [17] have been implemented. SiamNet contains two symmetric convolutional neural networks, which enable the comparison of different images with minimal training data, while Deep SVDD maps the training data or images into a hypersphere and treats data falling outside its boundary as anomalies.
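The hypersphere idea behind Deep SVDD can be illustrated with a deliberately simplified, non-deep stand-in. Real Deep SVDD learns a neural feature mapping; here the feature vectors are assumed to be already extracted from images, the centre is simply their mean, and the radius is an assumed quantile of the training distances. All names and numbers are hypothetical.

```python
import math

def fit_hypersphere(normal_features, quantile=0.95):
    """Centre = mean of normal features; radius = chosen quantile of their distances."""
    dim = len(normal_features[0])
    count = len(normal_features)
    centre = [sum(f[i] for f in normal_features) / count for i in range(dim)]
    distances = sorted(math.dist(f, centre) for f in normal_features)
    index = min(count - 1, int(quantile * count))
    return centre, distances[index]

def is_anomalous(feature, centre, radius):
    """A sample outside the hypersphere boundary is treated as an anomaly."""
    return math.dist(feature, centre) > radius

# Hypothetical 2-D features of images recorded under normal conditions.
normal_features = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.0, 1.2), (1.2, 1.0), (0.8, 0.9)]
centre, radius = fit_hypersphere(normal_features)
print(is_anomalous((1.05, 1.0), centre, radius))  # close to the normal cluster
print(is_anomalous((3.0, 3.0), centre, radius))   # far outside the boundary
```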

Figure 3. Methods to find anomalies in spatial, temporal and Spatio-temporal elements. Where S.1 [24], S.2 [25], S.3 [49], S.4 [26], S.5 [50], S.6 [17] represent spatial anomaly detection methods, ST.1 [33], ST.2 [34], ST.3 [35], ST.4 [37], ST.5 [51], ST.6 [36] represent Spatio-temporal anomaly detection methods and T.1 [29], T.2 [30], T.3 [31], T.4 [32] represent Temporal anomaly detection methods. The colour variation represents the year when the method was first used in Robotics for anomaly detection.

The main challenge in temporal anomaly detection is developing models or systems that can remember past experiences or quantify patterns from long and complex time-series data. The temporal anomaly detection problem is not equivalent to sensor noise prediction problems. The noise, or any sudden amplitude or frequency change in the signal, can be easily captured or filtered out using already established digital signal processing methods such as Fast Fourier Transform (FFT) [52] and Radon Fourier Transform (RFT) [53], or cancelled out via the feedback controllers inside the autonomous robots. The main breakthrough leading to temporal anomaly detection through solving and capturing patterns in highly complex time series data in robotic systems emerged with the development of recurrent neural networks (RNNs) [54]. RNNs are neural networks capable of remembering past experiences or storing helpful information from past data, and LSTM [55] is a type of RNN widely used in time series forecasting.

Moreover, the Gaussian mixture model (GMM) [56] is used for health monitoring and detecting anomalies from the sensors in the robots, wherein the healthy robot data is used for training the model that is then able to detect any anomalies in the time series data without any previous knowledge of the anomalies. Light Gradient Boosting Machine or LightGBM, a fast and high-performing gradient boosting algorithm, has recently been used for time series data analysis [30]. It is similar to the multiple linear regression (MLR) method used in time series forecasting in robotics systems [31].
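Training a GMM on healthy data only and scoring new samples by likelihood can be sketched as below. This is a minimal one-dimensional, two-component EM fit written from scratch; the motor-current values, the initialisation at the data extremes, and the threshold margin of 3.0 are assumptions made for illustration, not values from the cited work.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_gmm_1d(data, n_iter=50, min_var=1e-4):
    """Fit a two-component 1-D Gaussian mixture with plain EM."""
    means = [min(data), max(data)]  # simple deterministic initialisation
    overall_mean = sum(data) / len(data)
    overall_var = sum((x - overall_mean) ** 2 for x in data) / len(data)
    variances = [max(overall_var, min_var)] * 2
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample.
        resp = []
        for x in data:
            joint = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(joint)
            resp.append([j / total for j in joint])
        # M-step: re-estimate weights, means and variances.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var_k, min_var)
    return weights, means, variances

def log_likelihood(x, model):
    weights, means, variances = model
    density = sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))
    return math.log(max(density, 1e-300))  # floor avoids log(0) for far outliers

# Hypothetical healthy motor currents (A): idle near 0.5, loaded near 2.0.
healthy = [0.48, 0.52, 0.50, 0.47, 0.53, 0.51, 1.95, 2.05, 2.00, 1.98, 2.02, 2.10]
model = fit_gmm_1d(healthy)
threshold = min(log_likelihood(x, model) for x in healthy) - 3.0  # assumed margin
for current in (0.5, 2.0, 1.2):
    print(current, log_likelihood(current, model) < threshold)
```

Because the threshold is derived from the healthy data alone, no labelled examples of anomalies are needed, which matches the unsupervised use described above.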

Spatio-temporal anomaly detection targets anomalies with spatial and temporal correlations and is hence more challenging, owing to the complexity of finding the spatial correlations, the temporal correlations, and the correlations between the two. Recent advancements in spatio-temporal anomaly detection have stemmed from integrating convolutional networks into RNNs to create convolutional LSTMs (Conv-LSTM) [11] and from integrating variational autoencoders with LSTMs. Both frameworks are able to capture spatial variations, temporal variations, and their correlations. For example, capturing anomalies by collecting various sensor data, such as data from the Global Navigation Satellite System (spatial) and the location, position, and orientation of an autonomous car in a specific time interval (temporal), is representative of spatio-temporal anomaly detection [35]. In Figure 3, ST.2 mentions using FFT in anomaly detection. Here, the spatial anomaly (a human in a specific location) is captured via a random sample consensus (RANSAC) algorithm, and FFT is then used specifically to detect the variation in the chest movement of the detected human. Thus, the overall system can detect spatiotemporal anomalies. Kaeli and Singh focus on a data-driven approach that combines spatial and temporal aspects of anomaly detection through semantic mapping in the context of autonomous underwater vehicles [57]. Given its emphasis on real-time processing of diverse data types, such as optical and acoustical imagery for dynamic environmental interpretation and anomaly identification, it naturally extends into spatiotemporal anomaly detection.
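The frequency-domain step of the ST.2 example can be sketched as follows. The sketch uses a naive discrete Fourier transform, which yields the same spectrum an FFT would compute, only more slowly; the 10 Hz sampling rate, the synthetic chest-displacement signal, and the 0.1 to 0.5 Hz breathing band are assumptions and are not taken from the cited work.

```python
import cmath
import math

def dominant_frequency(signal, sample_rate):
    """Frequency (Hz) of the strongest non-DC component, via a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    centred = [s - mean for s in signal]  # remove the constant offset
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centred))
        if abs(coeff) > best_magnitude:
            best_bin, best_magnitude = k, abs(coeff)
    return best_bin * sample_rate / n

def shows_breathing(signal, sample_rate, band=(0.1, 0.5)):
    """True when the dominant frequency lies inside the assumed breathing band."""
    return band[0] <= dominant_frequency(signal, sample_rate) <= band[1]

sample_rate = 10.0  # Hz, assumed
breathing = [math.sin(2 * math.pi * 0.25 * t / sample_rate) for t in range(200)]
still = [1.0] * 200  # no chest movement
print(dominant_frequency(breathing, sample_rate))
print(shows_breathing(breathing, sample_rate), shows_breathing(still, sample_rate))
```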

In summary, this section has provided an overview of the model-based and data-driven techniques employed for anomaly detection in ARMs. It has focused specifically on the data-driven detection methods targeting spatial, temporal, and spatio-temporal anomalies, which are mainly based on the application of deep neural networks.

References

- Liaqat, A. Autonomous mobile robots in manufacturing: Highway Code development, simulation, and testing. Int. J. Adv. Manuf. Technol. 2019, 104, 4617–4628.

- Shamout, M.; Ben-Abdallah, R.; Alshurideh, M.; Alzoubi, H.; Kurdi, B.A.; Hamadneh, S. A conceptual model for the adoption of autonomous robots in supply chain and logistics industry. Uncertain Supply Chain Manag. 2022, 10, 577–592.

- Arnold, R.D.; Yamaguchi, H.; Tanaka, T. Search and rescue with autonomous flying robots through behavior-based cooperative intelligence. J. Int. Humanit. Action 2018, 3, 18.

- Gao, Y. Contemporary Planetary Robotics: An Approach toward Autonomous Systems; Wiley-VCH: Berlin, Germany, 2016; pp. 1–410.

- Emaminejad, N.; Akhavian, R. Trustworthy AI and robotics: Implications for the AEC industry. Autom. Constr. 2022, 139, 104298.

- Washburn, A.; Adeleye, A.; An, T.; Riek, L.D. Robot Errors in Proximate HRI. ACM Trans. Hum.-Robot Interact. 2020, 9, 1–21.

- Sabri, N.; Tlemçani, A.; Chouder, A. Battery internal fault monitoring based on anomaly detection algorithm. Advanced Statistical Modeling, Forecasting, and Fault Detection in Renewable Energy Systems; IntechOpen: London, UK, 2020; Volume 187.

- Steinbauer-Wagner, G.; Wotawa, F. Detecting and locating faults in the control software of autonomous mobile robots. In Proceedings of the 16th International Workshop on Principles of Diagnosis, Monterey, CA, USA, 1–3 June 2005; pp. 1742–1743.

- Fang, X. Sewer Pipeline Fault Identification Using Anomaly Detection Algorithms on Video Sequences. IEEE Access 2020, 8, 39574–39586.

- Gombolay, M.C.; Wilcox, R.J.; Shah, J.A. Fast Scheduling of Robot Teams Performing Tasks With Temporospatial Constraints. IEEE Trans. Robot. 2018, 34, 220–239.

- Inoue, M.; Yamashita, T.; Nishida, T. Robot path planning by LSTM network under changing environment. In Advances in Computer Communication and Computational Sciences: Proceedings of IC4S 2017; Springer: Singapore, 2019; Volume 1, pp. 317–329.

- Ahmed, U.; Srivastava, G.; Djenouri, Y.; Lin, J.C.W. Deviation Point Curriculum Learning for Trajectory Outlier Detection in Cooperative Intelligent Transport Systems. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16514–16523.

- Bossens, D.M.; Ramchurn, S.; Tarapore, D. Resilient Robot Teams: A Review Integrating Decentralised Control, Change-Detection, and Learning. Curr. Robot. Rep. 2022, 3, 85–95.

- Khalastchi, E.; Kalech, M. On Fault Detection and Diagnosis in Robotic Systems. ACM Comput. Surv. 2019, 51, 1–24.

- Author, I.S.C. Anomaly detection: A robust approach to detection of unanticipated faults. In Proceedings of the 2008 International Conference on Prognostics and Health Management, Denver, CO, USA, 6–9 October 2008; pp. 1–8.

- Rabeyron, T.; Loose, T. Anomalous Experiences, Trauma, and Symbolisation Processes at the Frontiers between Psychoanalysis and Cognitive Neurosciences. Front. Psychol. 2015, 6, 1926.

- Theissler, A. Detecting known and unknown faults in automotive systems using ensemble-based anomaly detection. Knowl.-Based Syst. 2017, 123, 163–173.

- Graabæk, S.G.; Ancker, E.V.; Christensen, A.L.; Fugl, A.R. An Experimental Comparison of Anomaly Detection Methods for Collaborative Robot Manipulators. IEEE Access 2022, 11, 65834–65848.

- Fisher, M.; Cardoso, R.C.; Collins, E.C.; Dadswell, C.; Dennis, L.A.; Dixon, C.; Farrell, M.; Ferrando, A.; Huang, X.; Jump, M.; et al. An Overview of Verification and Validation Challenges for Inspection Robots. Robotics 2021, 10, 67.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep Reinforcement Learning: A Brief Survey. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Besada-Portas, E.; Lopez-Orozco, J.A.; de la Cruz, J.M. Unified fusion system based on Bayesian networks for autonomous mobile robots. In Proceedings of the Fifth International Conference on Information Fusion. FUSION 2002. (IEEE Cat.No.02EX5997), Annapolis, MD, USA, 8–11 July 2002; Volume 2.

- Blanzeisky, W.; Cunningham, P. Algorithmic Factors Influencing Bias in Machine Learning. In Machine Learning and Principles and Practice of Knowledge Discovery in Databases; Kamp, M., Koprinska, I., Bibal, A., Bouadi, T., Frénay, B., Galárraga, L., Oramas, J., Adilova, L., Krishnamurthy, Y., Kang, B., et al., Eds.; Springer: Cham, Switzerland, 2021; pp. 559–574.

- Hüllermeier, E.; Waegeman, W. Aleatoric and epistemic uncertainty in machine learning: An introduction to concepts and methods. Mach. Learn. 2021, 110, 457–506.

- Tomoya, M.; Kanji, T. Compressive change retrieval for moving object detection. In Proceedings of the 2016 IEEE/SICE International Symposium on System Integration (SII), Sapporo, Japan, 13–15 December 2016; pp. 780–785.

- Dang, T.; Khattak, S.; Papachristos, C.; Alexis, K. Anomaly detection and cognizant path planning for surveillance operations using aerial robots. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems, ICUAS 2019, Atlanta, GA, USA, 11–14 June 2019; pp. 667–673.

- Wellhausen, L.; Ranftl, R.; Hutter, M. Safe Robot Navigation Via Multi-Modal Anomaly Detection. IEEE Robot. Autom. Lett. 2020, 5, 1325–1332.

- Zaheer, M.Z.; Mahmood, A.; Khan, M.H.; Astrid, M.; Lee, S.I. An Anomaly Detection System via Moving Surveillance Robots with Human Collaboration. In Proceedings of the IEEE International Conference on Computer Vision, 2021, Montreal, BC, Canada, 11–17 October 2021; pp. 2595–2601.

- Nehme, H.; Aubry, C.; Rossi, R.; Boutteau, R. An Anomaly Detection Approach to Monitor the Structured-Based Navigation in Agricultural Robotics. In Proceedings of the IEEE International Conference on Automation Science and Engineering, Lyon, France, 23–27 August 2021; pp. 1111–1117.

- Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Soderstrom, T. Detecting Spacecraft Anomalies Using LSTMs and Nonparametric Dynamic Thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018.

- Schnell, T. Robot Health Estimation through Unsupervised Anomaly Detection using Gaussian Mixture Models. In Proceedings of the IEEE International Conference on Automation Science and Engineering, Hong Kong, China, 20–21 August 2020; pp. 1037–1042.

- Lu, H.; Du, M.; Qian, K.; He, X.; Sun, Y.; Wang, K. GAN-based Data Augmentation Strategy for Sensor Anomaly Detection in Industrial Robots. IEEE Sens. J. 2021, 22, 17464–17474.

- Aliev, K.; Antonelli, D. Proposal of a Monitoring System for Collaborative Robots to Predict Outages and to Assess Reliability Factors Exploiting Machine Learning. Appl. Sci. 2021, 11, 1621.

- Park, D.; Hoshi, Y.; Kemp, C.C. A Multimodal Anomaly Detector for Robot-Assisted Feeding Using an LSTM-Based Variational Autoencoder. IEEE Robot. Autom. Lett. 2018, 3, 1544–1551.

- Saegusa, R.; Ito, H.; Duong, D.M. Human-care rounds robot with contactless breathing measurement. In Proceedings of the International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 6172–6177.

- Zekry, A.; Sayed, A.; Moussa, M.; Elhabiby, M. Anomaly Detection using IoT Sensor-Assisted ConvLSTM Models for Connected Vehicles. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 25–28 April 2021; pp. 1–6.

- Fang, J.; Qiao, J.; Bai, J.; Yu, H.; Xue, J. Traffic Accident Detection via Self-Supervised Consistency Learning in Driving Scenarios. IEEE Trans. Intell. Transp. Syst. 2022, 23, 9601–9614.

- Ji, T.; Sivakumar, A.N.; Chowdhary, G.; Driggs-Campbell, K. Proactive Anomaly Detection for Robot Navigation with Multi-Sensor Fusion. IEEE Robot. Autom. Lett. Artic. 2022, 7, 4975–4982.

- Wescoat, E.; Kerner, S.; Mears, L. A comparative study of different algorithms using contrived failure data to detect robot anomalies. Procedia Comput. Sci. 2022, 200, 669–678.

- Xinjilefu, X.; Feng, S.; Atkeson, C.G. Center of mass estimator for humanoids and its application in modelling error compensation, fall detection and prevention. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Republic of Korea, 3–5 November 2015; pp. 67–73.

- Saputra, R.P.; Rakicevic, N.; Chappell, D.; Wang, K.; Kormushev, P. Hierarchical Decomposed-Objective Model Predictive Control for Autonomous Casualty Extraction. IEEE Access 2021, 9, 39656–39679.

- Alattar, A.; Chappell, D.; Kormushev, P. Kinematic-Model-Free Predictive Control for Robotic Manipulator Target Reaching With Obstacle Avoidance. Front. Robot. AI 2022, 9, 809114.

- Amoozgar, M.H.; Chamseddine, A.; Zhang, Y. Experimental Test of a Two-Stage Kalman Filter for Actuator Fault Detection and Diagnosis of an Unmanned Quadrotor Helicopter. J. Intell. Robot. Syst. 2013, 70, 107–117.

- Azzalini, D.; Castellini, A.; Luperto, M.; Farinelli, A.; Amigoni, F. HMMs for Anomaly Detection in Autonomous Robots. In Proceedings of the 19th International Conference on Autonomous Agents and MultiAgent Systems, Auckland, New Zealand, 9–13 May 2020; pp. 105–113.

- Sergiyenko, O.; Tyrsa, V.; Zhirabok, A.; Zuev, A. Sliding mode observer based fault identification in automatic vision system of robot. Control Eng. Pract. 2023, 139, 105614.

- Rabiner, R. A tutorial on hidden Markov models and selected applications in speech recognition. Proc. IEEE 1989, 77, 257–286.

- Sun, Y.; Liu, J.; Gao, Y.; Liu, Z.; Zhao, Y. Adaptive Neural Tracking Control for Manipulators With Prescribed Performance Under Input Saturation. IEEE/ASME Trans. Mechatron. 2023, 28, 1037–1046.

- Zhang, Z.; Wu, Z.; Ge, R. Generative-Model-Based Autonomous Intelligent Unmanned Systems. In Proceedings of the 2023 International Annual Conference on Complex Systems and Intelligent Science (CSIS-IAC), Shenzhen, China, 20–22 October 2023; pp. 766–772.

- Gu, J. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Aloysius, N.; Geetha, M. A review on deep convolutional neural networks. In Proceedings of the 2017 International Conference on Communication and Signal Processing, Chennai, India, 6–8 April 2017; pp. 0588–0592.

- Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese neural networks for one-shot image recognition. In Proceedings of the ICML Deep Learning Workshop, Lille, France, 6–11 July 2015; Volume 2.

- Shekhar, S.; Evans, M.R.; Kang, J.M.; Mohan, P. Identifying patterns in spatial information: A survey of methods. WIREs Data Min. Knowl. Discov. 2011, 1, 193–214.

- Planat, M. Ramanujan sums for signal processing of low frequency noise. Phys. Rev. E 2002, 66, 056128.

- Longman, O.; Bilik, I. Spectral Radon-Fourier Transform for Automotive Radar Applications. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 1046–1056.

- Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Reynolds, D.A. Gaussian Mixture Models. Encycl. Biom. 2009, 741, 659–663.

- Kaeli, J.W.; Singh, H. Online data summaries for semantic mapping and anomaly detection with autonomous underwater vehicles. In Proceedings of the OCEANS 2015-Genova, Genova, Italy, 18–21 May 2015; pp. 1–7.


Update Date: 11 Mar 2024
