| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Vitaliy Kinakh | -- | 1124 | 2024-02-23 16:15:40 | |
| 2 | | Vitaliy Kinakh | + 7 word(s) | 1131 | 2024-02-23 16:17:01 | |
| 3 | | Rita Xu | -389 word(s) | 742 | 2024-02-26 03:32:45 | |


Kinakh, V.; Belousov, Y.; Quétant, G.; Drozdova, M.; Holotyak, T.; Schaerer, D.; Voloshynovskiy, S. Hubble Meets Webb. Encyclopedia. Available online: https://encyclopedia.pub/entry/55398 (accessed on 02 March 2026).


Researchers explore the generation of James Webb Space Telescope (JWST) imagery via image-to-image translation from available Hubble Space Telescope (HST) data.

Keywords: image-to-image translation; denoising diffusion probabilistic models; uncertainty estimation

1. Introduction

Researchers explore the problem of predicting the images of the visible sky captured by the James Webb Space Telescope (JWST), hereafter ‘Webb’ [1], from the available data of the Hubble Space Telescope (HST), hereafter ‘Hubble’ [2]. Problems of this type attract considerable interest in astrophysics, astronomy, and cosmology, and they span a variety of data types and sources, including the translation of galaxy observations in visible light [3] and predictions of dark matter [4]. Data registered from different sources may be acquired at different times, by different sensors, and in different bands, with different resolutions, sensitivities, and noise levels. The exact mathematical model for transforming data between these sources is very complex and largely unknown.

Despite the great success of image-to-image translation in computer vision, its adoption in the astrophysics community has been limited, even though a large amount of data is available for tasks such as sensor-to-sensor translation, conversion between spectral bands, and adaptation among satellite systems.

Before the launch of missions such as Euclid [5], the Square Kilometre Array radio telescope [6], and others, there has been significant interest in advancing image-to-image translation techniques for astronomical data in order to: (i) enable efficient mission planning, given the high complexity and cost of exhaustive space exploration, by allowing specific regions of space to be prioritized using existing data; and (ii) generate sufficient synthetic data for machine learning (ML) analysis as soon as the first real images from new imaging missions are available in adequate quantities.

2. Comparison between Webb and Hubble Telescopes

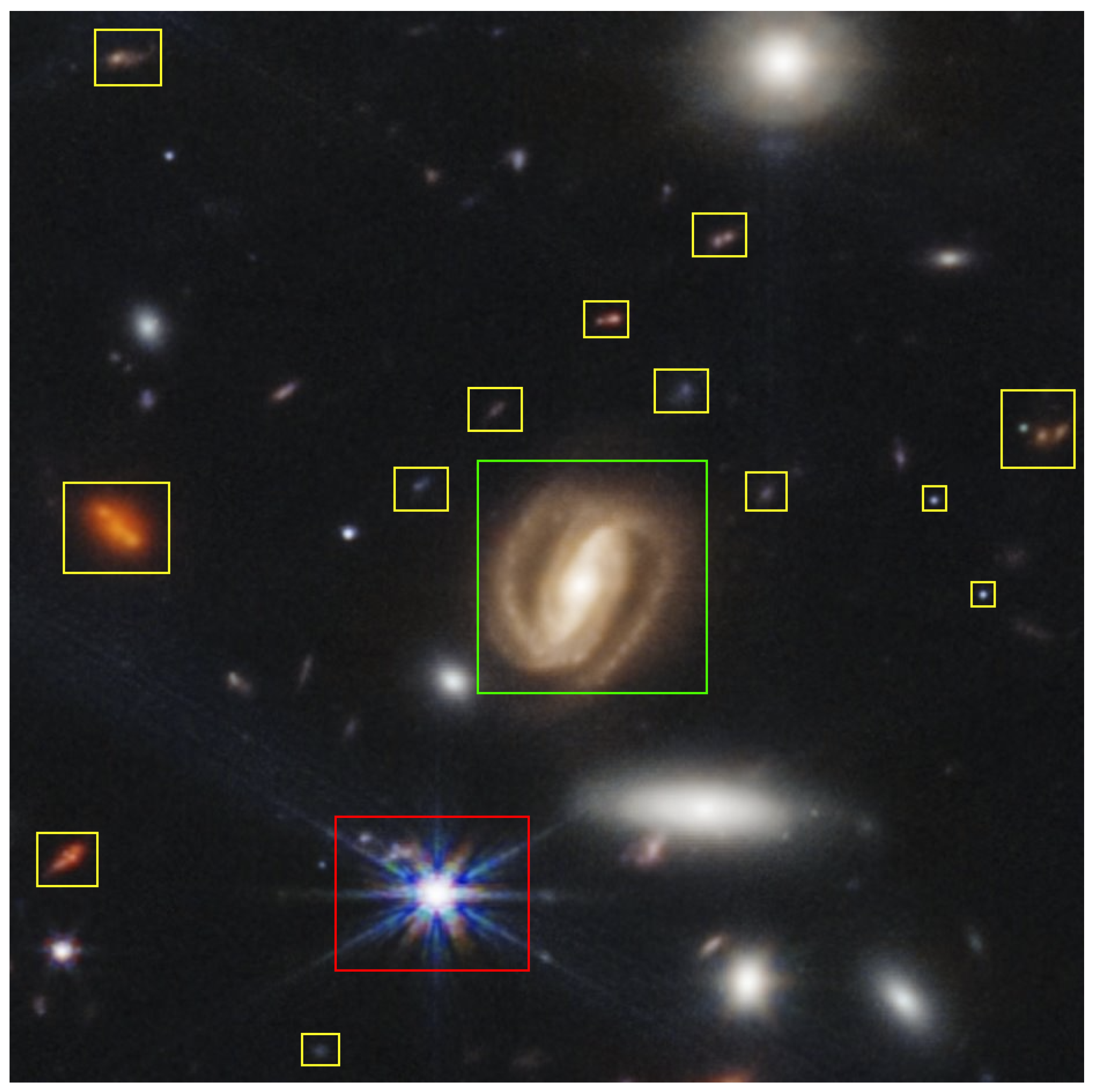

Figure 1. Hubble photo of Galaxy Cluster SMACS 0723.

Figure 2. Webb image of Galaxy Cluster SMACS 0723.

Figure 1 and Figure 2 show the same part of the sky, captured by the Hubble and Webb telescopes, in RGB format. The main differences between the two telescopes are:

(i) Spatial resolution: Webb’s 6.5-m primary mirror offers higher resolution than Hubble’s 2.4-m mirror, which is particularly noticeable in infrared observations [7]. Webb can capture images of objects up to 100 times fainter than Hubble can, as is evident in the central spiral galaxy in Figure 2.

(ii) Wavelength coverage: Hubble is optimized for ultraviolet and visible light, with coverage extending into the near infrared (0.1 to 2.5 microns), whereas Webb focuses on infrared wavelengths (0.6 to 28.5 microns) [8]. This allows Webb to observe more distant and fainter celestial objects, including the earliest stars and galaxies.

(iii) Light-collecting capacity: Webb’s substantially larger mirror provides over six times the light-collecting area of Hubble’s, which is essential for studying the longer, dimmer wavelengths of light from distant, redshifted objects [7]. Webb’s images accordingly reveal smaller galaxies and structures that are not visible in Hubble’s observations, highlighted in yellow in Figure 2.

It is important to note that the infrared (IR) emission captured by Webb differs inherently from the ultraviolet (UV) or visible light observed by Hubble. The distinction between the two telescopes’ images lies not only in resolution and sensitivity but also in the varying absorption of light by dust within different galaxy types. The proposed image-to-image translation method does not aim to analyze these observational differences. Instead, it explores whether image-to-image translation can effectively simulate Webb imagery from existing Hubble data. The approach leverages the available Hubble data to anticipate and interpret the observations that Webb might deliver, without directly analyzing the spectral and compositional differences between the images captured by the two telescopes.
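The mirror diameters quoted in this section can be turned into rough numbers. The sketch below is a back-of-the-envelope illustration and not part of the researchers' method. It assumes idealized, unobstructed circular apertures and the Rayleigh criterion, θ ≈ 1.22 λ/D, for the diffraction limit. The function names and the 2-micron example wavelength are chosen here purely for illustration.

```python
import math

WEBB_DIAMETER_M = 6.5    # primary mirror diameter quoted in the text
HUBBLE_DIAMETER_M = 2.4  # primary mirror diameter quoted in the text
ARCSEC_PER_RADIAN = 180.0 * 3600.0 / math.pi


def collecting_area_ratio(d_large_m: float, d_small_m: float) -> float:
    """Area ratio of two idealized filled circular mirrors (assumption)."""
    return (d_large_m / d_small_m) ** 2


def rayleigh_limit_arcsec(wavelength_m: float, diameter_m: float) -> float:
    """Diffraction-limited angular resolution via the Rayleigh criterion."""
    return 1.22 * wavelength_m / diameter_m * ARCSEC_PER_RADIAN


if __name__ == "__main__":
    ratio = collecting_area_ratio(WEBB_DIAMETER_M, HUBBLE_DIAMETER_M)
    print(f"Idealized collecting-area ratio: {ratio:.2f}")

    wavelength_m = 2.0e-6  # 2 microns, inside both quoted wavelength ranges
    for name, diameter in (("Webb", WEBB_DIAMETER_M), ("Hubble", HUBBLE_DIAMETER_M)):
        limit = rayleigh_limit_arcsec(wavelength_m, diameter)
        print(f"{name}: ~{limit:.3f} arcsec at 2 microns")
```

Under these assumptions the area ratio comes out at roughly 7.3, somewhat above the "over six times" figure cited from [7]. The gap is expected, because real mirrors are not filled circles (Webb's is built from hexagonal segments), so the idealized value is only a rough estimate. At any shared wavelength the larger aperture gives the smaller diffraction limit, which is the resolution advantage described in item (i).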

3. Image-to-Image Translation

Image-to-image translation [9] is the task of transforming an image from one domain to another, where the goal is to learn the mapping between an input image and an output image. Image-to-image translation methods have shown great success in computer vision tasks, including style transfer [10], colorization [11], super-resolution [12], visible-to-infrared translation [13], and many others [14]. There are two types of image-to-image translation methods: unpaired [15] (sometimes called unsupervised) and paired [16]. Paired setups require fixed pairs of corresponding images from the two domains, while unpaired setups do not.
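The difference between the two setups shows up most clearly in what the training objective can compare. The toy sketch below illustrates this with plain Python lists standing in for images and simple functions standing in for trained networks; all names are hypothetical. For the unpaired case it uses a cycle-consistency loss, which is one common choice and an assumption of this sketch, since the entry does not state which objective the cited unpaired methods use.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[float]                    # a flattened toy "image"
Translator = Callable[[Image], Image]  # stand-in for a trained network


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute per-pixel difference between two images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def paired_loss(g: Translator, pairs: Sequence[Tuple[Image, Image]]) -> float:
    """Paired setup: every source x has a known target y, so g(x) is compared with y."""
    return sum(l1_distance(g(x), y) for x, y in pairs) / len(pairs)


def cycle_loss(g: Translator, f: Translator,
               sources: Sequence[Image], targets: Sequence[Image]) -> float:
    """Unpaired setup: no correspondences, so each image is translated
    to the other domain and back, then compared with itself."""
    forward = sum(l1_distance(f(g(x)), x) for x in sources) / len(sources)
    backward = sum(l1_distance(g(f(y)), y) for y in targets) / len(targets)
    return forward + backward


def to_target(img: Image) -> Image:
    """Hypothetical source-to-target translator."""
    return [2.0 * p + 1.0 for p in img]


def to_source(img: Image) -> Image:
    """Hypothetical target-to-source translator (exact inverse of to_target)."""
    return [(p - 1.0) / 2.0 for p in img]


if __name__ == "__main__":
    sources = [[0.0, 0.5], [1.0, 0.25]]
    targets = [[3.0, 1.5], [1.0, 2.0]]  # deliberately not matched to sources
    pairs = [(x, to_target(x)) for x in sources]
    print("paired loss:", paired_loss(to_target, pairs))
    print("cycle loss:", cycle_loss(to_target, to_source, sources, targets))
```

The paired loss needs the `pairs` list, so it is only available when corresponding images of the same scene exist in both domains. The cycle loss needs only two independent collections, at the cost of a weaker training signal.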

4. Image-to-Image Translation in Astrophysics

Image-to-image translation has been applied in astrophysics to galaxy simulation [3], but it has mostly been used for denoising [17] optical and radio astrophysical data [18]. Predicting the images of one telescope from those of another using image-to-image translation remains largely under-researched.

References

1. Gardner, J.P.; Mather, J.C.; Clampin, M.; Doyon, R.; Greenhouse, M.A.; Hammel, H.B.; Hutchings, J.B.; Jakobsen, P.; Lilly, S.J.; Long, K.S.; et al. The James Webb space telescope. Space Sci. Rev. 2006, 123, 485–606.

2. Lallo, M.D. Experience with the Hubble Space Telescope: 20 years of an archetype. Opt. Eng. 2012, 51, 011011.

3. Lin, Q.; Fouchez, D.; Pasquet, J. Galaxy Image Translation with Semi-supervised Noise-reconstructed Generative Adversarial Networks. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 5634–5641.

4. Schaurecker, D.; Li, Y.; Tinker, J.; Ho, S.; Refregier, A. Super-resolving Dark Matter Halos using Generative Deep Learning. arXiv 2021, arXiv:2111.06393.

5. Racca, G.D.; Laureijs, R.; Stagnaro, L.; Salvignol, J.C.; Alvarez, J.L.; Criado, G.S.; Venancio, L.G.; Short, A.; Strada, P.; Bönke, T.; et al. The Euclid mission design. In Proceedings of the Space Telescopes and Instrumentation 2016: Optical, Infrared, and Millimeter Wave, Edinburgh, UK, 19 July 2016; Volume 9904, pp. 235–257.

6. Hall, P.; Schillizzi, R.; Dewdney, P.; Lazio, J. The square kilometer array (SKA) radio telescope: Progress and technical directions. Int. Union Radio Sci. URSI 2008, 236, 4–19.

7. NASA. Webb vs Hubble Telescope. Available online: https://www.jwst.nasa.gov/content/about/comparisonWebbVsHubble.html (accessed on 6 January 2024).

8. NASA Science. Hubble vs. Webb. Available online: https://science.nasa.gov/science-red/s3fs-public/atoms/files/HSF-Hubble-vs-Webb-v3.pdf (accessed on 6 January 2024).

9. Pang, Y.; Lin, J.; Qin, T.; Chen, Z. Image-to-image translation: Methods and applications. IEEE Trans. Multimed. 2021, 24, 3859–3881.

10. Liu, M.Y.; Huang, X.; Mallya, A.; Karras, T.; Aila, T.; Lehtinen, J.; Kautz, J. Few-shot unsupervised image-to-image translation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10551–10560.

11. Zhang, R.; Isola, P.; Efros, A.A. Colorful image colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 649–666.

12. Hui, Z.; Gao, X.; Yang, Y.; Wang, X. Lightweight image super-resolution with information multi-distillation network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2024–2032.

13. Patel, D.; Patel, S.; Patel, M. Application of Image-To-Image Translation in Improving Pedestrian Detection. In Artificial Intelligence and Sustainable Computing; Pandit, M., Gaur, M.K., Kumar, S., Eds.; Springer Nature: Singapore, 2023; pp. 471–482.

14. Kaji, S.; Kida, S. Overview of image-to-image translation by use of deep neural networks: Denoising, super-resolution, modality conversion, and reconstruction in medical imaging. Radiol. Phys. Technol. 2019, 12, 235–248.

15. Liu, M.-Y.; Breuel, T.; Kautz, J. Unsupervised image-to-image translation networks. Adv. Neural Inf. Process. Syst. 2017, 30. Available online: https://proceedings.neurips.cc/paper_files/paper/2017/file/dc6a6489640ca02b0d42dabeb8e46bb7-Paper.pdf (accessed on 3 February 2024).

16. Tripathy, S.; Kannala, J.; Rahtu, E. Learning image-to-image translation using paired and unpaired training samples. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 51–66.

17. Vojtekova, A.; Lieu, M.; Valtchanov, I.; Altieri, B.; Old, L.; Chen, Q.; Hroch, F. Learning to denoise astronomical images with U-nets. Mon. Not. R. Astron. Soc. 2021, 503, 3204–3215.

18. Liu, T.; Quan, Y.; Su, Y.; Guo, Y.; Liu, S.; Ji, H.; Hao, Q.; Gao, Y. Denoising Astronomical Images with an Unsupervised Deep Learning Based Method. arXiv 2023.
