Researchers explore the generation of James Webb Space Telescope (JWST) imagery via image-to-image translation from the available Hubble Space Telescope (HST) data.

1. Introduction

Researchers explore the problem of predicting the visible sky images captured by the James Webb Space Telescope (JWST), hereafter referred to as ‘Webb’ [1], using the available data from the Hubble Space Telescope (HST), hereinafter called ‘Hubble’ [2]. There is much interest in this type of problem in fields such as astrophysics, astronomy, and cosmology, encompassing a variety of data types and sources. This includes the translation of observations of galaxies in visible light [3] and predictions of dark matter [4]. The data registered from different sources may be acquired at different times, by different sensors, in different bands, with different resolutions, sensitivities, and levels of noise. The exact underlying mathematical model for transforming data between these sources is very complex and largely unknown.

Despite the great success of image-to-image translation in computer vision, its adoption in the astrophysics community has been limited, even though there is a lot of data available for such tasks that might enable sensor-to-sensor translation, conversion between different spectral bands, and adaptation among various satellite systems.

Before the launch of missions such as Euclid [5], the radio telescope Square Kilometre Array [6], and others, there has been significant interest in advancing image-to-image translation techniques for astronomical data to: (i) enable efficient mission planning, given the high complexity and cost of exhaustive space exploration, by allowing specific space regions to be prioritized using existing data; and (ii) generate sufficient synthetic data for machine learning (ML) analysis as soon as the first real images from new imaging missions are available in adequate quantities.

2. Comparison between Webb and Hubble Telescopes

In

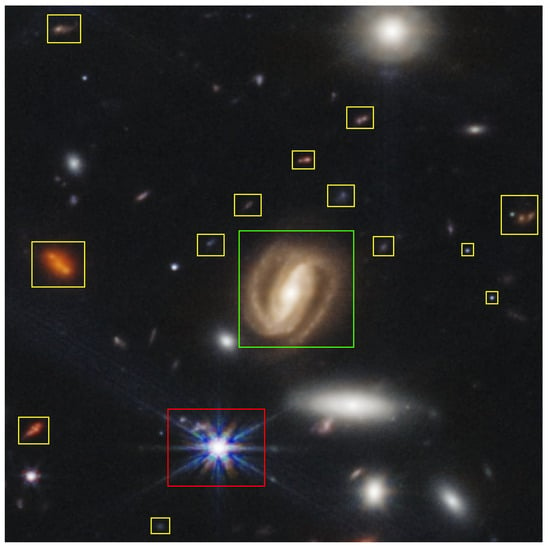

Figure 1 and

Figure 2, the same part of the sky captured by the Hubble and Webb telescopes is shown in the RGB format. The main differences between the Hubble and Webb telescopes are: (i)

Spatial resolution—The Webb telescope, featuring a 6.5-m primary mirror, offers superior resolution compared to Hubble’s 2.4-m mirror, which is particularly noticeable in infrared observations [

15]. This enables Webb to capture images of objects up to 100 times fainter than Hubble, as evident in the central spiral galaxy in

Figure 2.

(ii) Wavelength coverage: Hubble, optimized for ultraviolet and visible light (0.1 to 2.5 microns), contrasts with Webb’s focus on infrared wavelengths (0.6 to 28.5 microns) [16]. While this difference allows Webb to observe more distant and fainter celestial objects, including the earliest stars and galaxies, it is crucial to note that the IR emission captured by Webb differs inherently from the UV or visible light observed by Hubble. The two telescopes differ not only in resolution and sensitivity but also in how strongly the light they observe is absorbed by dust within different galaxy types.

However, our proposed image-to-image translation method does not aim to model these observational differences. Instead, our focus is to explore whether image-to-image translation can effectively simulate Webb telescope imagery based on the existing data from Hubble. This approach seeks to leverage the available Hubble data to anticipate and interpret the observations that Webb might deliver, without directly analyzing the spectral and compositional differences between the images captured by the two telescopes.

Figure 1. Hubble photo of Galaxy Cluster SMACS 0723.

Figure 2. Webb image of Galaxy Cluster SMACS 0723.

(iii) Light-collecting capacity: Webb’s substantially larger mirror provides over six times the light-collecting area compared to Hubble, essential for studying longer, dimmer wavelengths of light from distant, redshifted objects [15]. This is exemplified in Webb’s images, which reveal smaller galaxies and structures not visible in Hubble’s observations, highlighted in yellow in Figure 2.
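To make the link between mirror diameter and spatial resolution concrete, the following minimal sketch evaluates the Rayleigh criterion, θ ≈ 1.22 λ / D, for the two mirror diameters quoted above. It is an idealization and not part of the study: it assumes a filled circular aperture (Webb's mirror is segmented), ignores detector sampling and optical aberrations, and the function name and the sample wavelengths are chosen here purely for illustration.

```python
import math

RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0  # conversion factor: radians -> arcseconds

HUBBLE_D_M = 2.4  # primary mirror diameter in metres (value quoted in the text)
WEBB_D_M = 6.5    # primary mirror diameter in metres (value quoted in the text)


def diffraction_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh criterion for an ideal circular aperture: 1.22 * lambda / D."""
    if wavelength_m <= 0 or aperture_m <= 0:
        raise ValueError("wavelength and aperture must be positive")
    return 1.22 * wavelength_m / aperture_m * RAD_TO_ARCSEC


# Illustrative wavelengths (in microns) inside the range both telescopes cover.
for wavelength_um in (0.6, 1.0, 2.0):
    wavelength_m = wavelength_um * 1e-6
    hubble = diffraction_limit_arcsec(wavelength_m, HUBBLE_D_M)
    webb = diffraction_limit_arcsec(wavelength_m, WEBB_D_M)
    print(f"{wavelength_um:.1f} um: Hubble {hubble:.3f} arcsec, Webb {webb:.3f} arcsec")
```

At any fixed wavelength the idealized limit is smaller for Webb by the ratio of the diameters, 6.5 / 2.4, or about 2.7. Because the limit also grows with wavelength, this advantage matters most in the infrared, which matches the statement in item (i).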

3. Image-to-Image Translation

Image-to-image translation [19] is the task of transforming an image from one domain to another, where the goal is to understand the mapping between an input image and an output image. Image-to-image translation methods have shown great success in computer vision tasks, including transferring different styles [20], colorization [21], super-resolution [22], visible to infrared translation [23], and many others [24]. There are two types of image-to-image translation methods: unpaired [25] (sometimes called unsupervised) and paired [26]. Unpaired setups do not require fixed pairs of corresponding images, while paired setups do.
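The practical difference between the two setups shows up in the training objective. The sketch below uses the loss terms commonly associated with the paired Pix2Pix and unpaired CycleGAN formulations. The notation is an assumption made here for illustration: x denotes a source-domain (Hubble) image, y a target-domain (Webb) image, G the source-to-target generator, F the target-to-source generator, D a discriminator, and λ a weighting coefficient. It does not describe the exact objectives or weights used in the study.

```latex
% Paired setup (Pix2Pix-style): the L1 term compares G(x) with the
% aligned target y, so every x must come with its corresponding y.
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\left[ \lVert y - G(x) \rVert_1 \right],
\qquad
G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\, \mathcal{L}_{L1}(G)

% Unpaired setup (CycleGAN-style): no aligned (x, y) pairs are needed;
% instead, translating to the other domain and back should recover the input.
\mathcal{L}_{\mathrm{cyc}}(G, F) =
  \mathbb{E}_{x}\left[ \lVert F(G(x)) - x \rVert_1 \right]
  + \mathbb{E}_{y}\left[ \lVert G(F(y)) - y \rVert_1 \right]
```

The first expectation is taken over aligned pairs (x, y), while the cycle-consistency terms are taken over x and y separately. This is why only the paired setup depends on accurately registered image pairs.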

4. Image-to-Image Translation in Astrophysics

Image-to-image translation has been used in astrophysics for galaxy simulation [3], but these methods have mostly been used for denoising [27] optical and radio astrophysical data [28]. The task of predicting the images of one telescope from another using image-to-image translation remains largely under-researched.

5. Method

The study investigates image translation between the Hubble and Webb telescopes using Pix2Pix [26], CycleGAN [25], TURBO [9], and Palette [11], an image-to-image approach based on Denoising Diffusion Probabilistic Models (DDPMs). The methods are evaluated with MSE (Mean Squared Error), PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index Measure), LPIPS (Learned Perceptual Image Patch Similarity) [13], and FID (Fréchet Inception Distance) [14]. Using several models and metrics gives a fuller picture of the potential and limitations of deep learning for astronomical image translation, where the goal is high-fidelity, scientifically useful output.

The study also addresses synchronization, that is, the challenge of aligning images from the Hubble and Webb telescopes given their differences in acquisition times, sensors, spectral bands, and other variables. Precise synchronization is crucial for accurate image translation and comparison, because it ensures that the images from both telescopes correspond to the same cosmic region and conditions. Without it, the deep learning models cannot produce translations that are reliable enough for astronomical analysis and research.
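As a rough illustration of the two simplest metrics named above, the following sketch computes MSE and PSNR using their standard definitions. It is not the study's evaluation code. It assumes pixel intensities scaled to [0, 1] and stored as flat sequences of floats, and the function names and toy values are hypothetical. SSIM, LPIPS, and FID are omitted because they require windowed statistics or pretrained networks.

```python
import math
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equally sized pixel sequences."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def psnr(pred: Sequence[float], target: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    err = mse(pred, target)
    if err == 0.0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / err)


# Toy 2x2 "images" flattened to four pixels (made-up values, not study data).
target_pixels = [0.0, 0.5, 1.0, 0.25]
predicted_pixels = [0.1, 0.5, 0.9, 0.25]

print(f"MSE:  {mse(predicted_pixels, target_pixels):.4f}")
print(f"PSNR: {psnr(predicted_pixels, target_pixels):.2f} dB")
```

Lower MSE and higher PSNR both indicate a closer pixel-wise match. Under this definition, an MSE of 0.001 on data scaled to [0, 1] corresponds to a PSNR of 30 dB. Averaging PSNR over individual images generally gives a slightly different value than converting an averaged MSE.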

6. Results

The study's findings indicate that the DDPM-based approach (Palette) outperforms GAN-based methods such as Pix2Pix and CycleGAN in translating Hubble images into Webb-like images. The results are evaluated quantitatively in Table 1: among the listed methods, DDPM (Palette) achieves the best MSE, SSIM, PSNR, and FID, indicating higher fidelity in the image translation process, while TURBO achieves the lowest LPIPS.

| Method | MSE | SSIM | PSNR | LPIPS | FID |
|---|---|---|---|---|---|
| Pix2Pix | 0.002 | 0.93 | 26.78 | 0.44 | 54.58 |
| TURBO | 0.003 | 0.92 | 25.88 | **0.41** | 43.36 |
| DDPM (Palette) | **0.001** | **0.95** | **29.12** | 0.44 | **30.08** |

Table 1. Hubble-to-Webb results. All results are obtained on a validation set of Galaxy Cluster SMACS 0723 with the local synchronization approach. The best results are highlighted in bold.

7. Conclusions

This paper applies image-to-image translation techniques to bridge the gap between Hubble and Webb imagery. By leveraging existing Hubble data, the study explores the potential of simulating Webb telescope observations, with a focus on supporting mission planning and generating synthetic data for machine learning analysis. The comparison of the telescopes' capabilities, including spatial resolution, wavelength coverage, and light-collecting capacity, provides a foundation for understanding the complexities involved in translating images across different domains. The research highlights the untapped potential of image-to-image translation in astrophysics and suggests avenues for future exploration and development. This approach promises to make better use of existing astronomical data and opens new possibilities for interpreting observations from newer telescopes.