| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Martin George Wynn | -- | 2813 | 2024-01-24 08:45:23 |
| 2 | Jessie Wu | -2 word(s) | 2811 | 2024-01-24 09:19:24 |
| 3 | Bilgin Metin | -5 word(s) | 2899 | 2024-02-02 16:51:41 |
| 4 | Jessie Wu | Meta information modification | 2899 | 2024-02-04 02:11:43 |


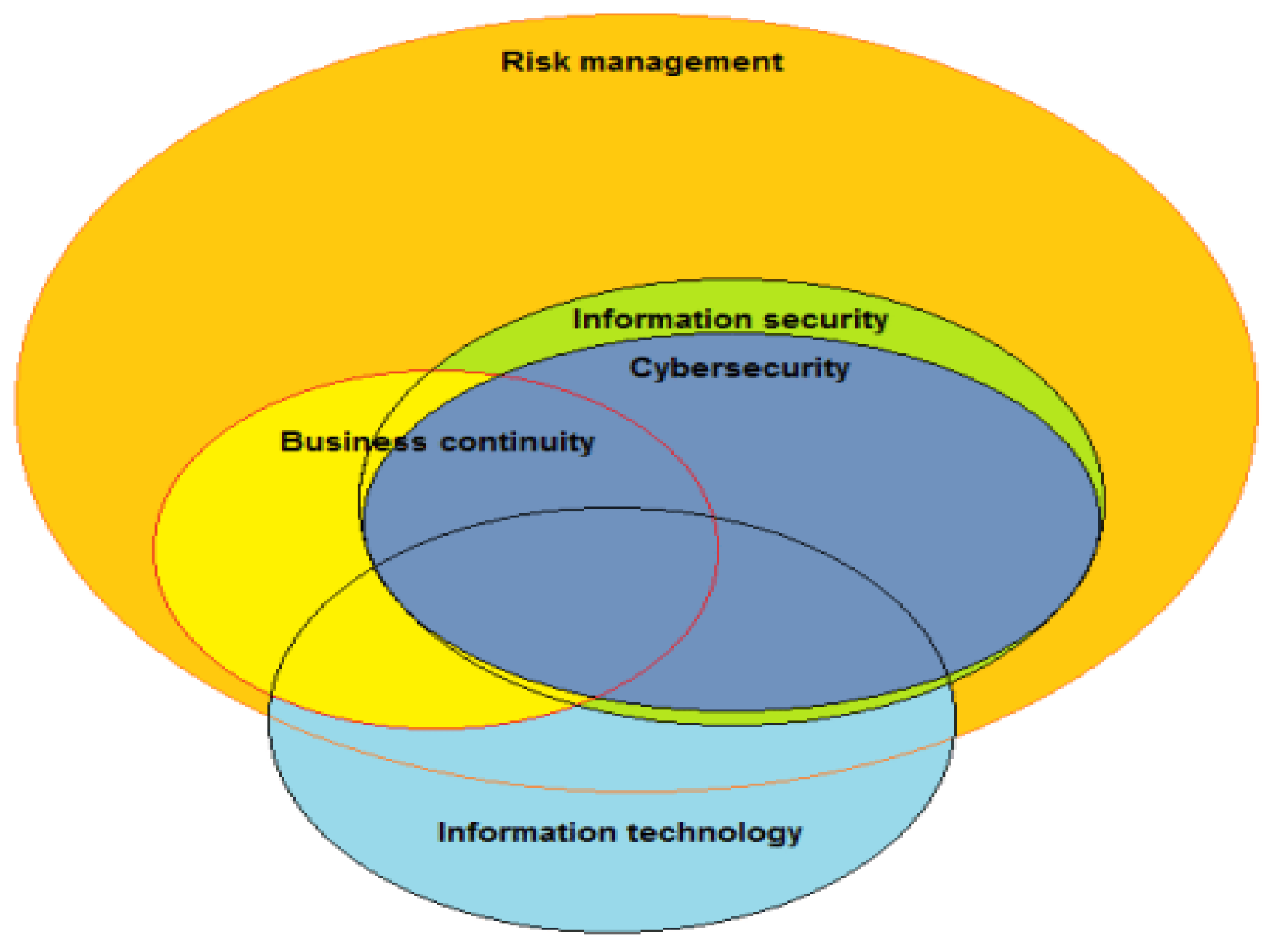

Cybersecurity culture, at both the organizational and individual levels, shapes an organization's values, behaviors, and practices. Its core objective is to protect information technology (IT) assets, sensitive data, and technology infrastructures against cyber threats and to reduce IT risks. In today's technology-centric business environment, where organizations encounter numerous cyber threats, effective IT risk management is crucial. An objective risk assessment, based on information relating to business requirements, human elements, and the security culture within an organization, can provide a sound basis for informed decision making, effective risk prioritization, and the implementation of suitable security measures. An established security culture should therefore include asset valuation with enhanced objectivity. Mitigating subjectivity in IT risk assessments reduces the influence of personal biases and presumptions, gives a more transparent and accurate understanding of the real risks involved, and in turn enhances cybersecurity culture.

1. Concepts and Methods: Risk Assessment

2. Asset Valuation in Risk Assessment

3. Third-Party and Supply Chain Cybersecurity

4. Cyber Security Culture Framework and IT Governance

5. Information Security Regulations, Legislation, and International Standards
