Yousefpour Shahrivar, R.; Karami, F.; Karami, E. Machine Learning in Fetal Anomaly Detection. Encyclopedia. Available online: https://encyclopedia.pub/entry/52775 (accessed on 07 February 2026).

Yousefpour Shahrivar, R., Karami, F., & Karami, E. (2023, December 14). Machine Learning in Fetal Anomaly Detection. In Encyclopedia. https://encyclopedia.pub/entry/52775

Fetal development is a critical phase in prenatal care, demanding the timely identification of anomalies in ultrasound images to safeguard the well-being of both the unborn child and the mother. Medical imaging has played a pivotal role in detecting fetal abnormalities and malformations. However, despite significant advances in ultrasound technology, the accurate identification of irregularities in prenatal images continues to pose considerable challenges, often necessitating substantial time and expertise from medical professionals.

fetal anomaly

prenatal diagnosis

machine learning

deep learning

ultrasonography imaging

1. Introduction

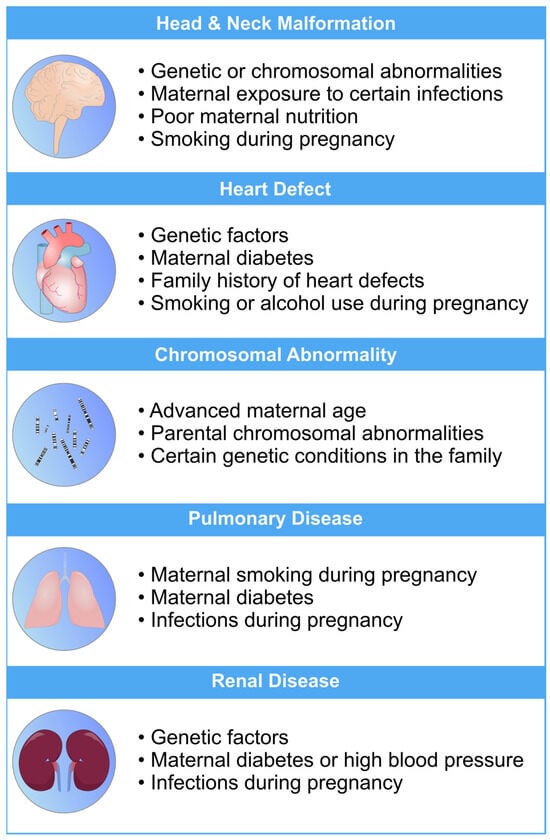

Fetal development is a critical phase in human growth, in which any abnormality can lead to significant health complications. Subjective and inaccurate interpretation of ultrasonography images by sonographers and technicians often results in misdiagnoses [1][2][3]. Fetal anomalies can be defined as structural abnormalities in prenatal development that manifest in several critical anatomical sites, such as the fetal heart, central nervous system (CNS), lungs, and kidneys [4][5]. These anomalies can arise at various stages of pregnancy and can be caused by genetic factors, environmental factors, or a combination of both, in which case they are called multifactorial disorders (Figure 1) [6][7]. Ultrasound and genetic testing are two examples of prenatal screening and diagnostic tools that can help find these abnormalities at an earlier gestational age. The impact of fetal abnormalities on a child’s health varies widely, from conditions that are easily treatable to those that result in death during pregnancy or shortly after birth [8]. The occurrence of fetal anomalies differs across populations; structural anomalies can be detected in approximately 3% of all pregnancies [9]. Ultrasound (US) is still the most commonly used method to safely screen for fetal anomalies during pregnancy, but it depends heavily on sonographer expertise and is therefore error-prone. In addition, US images are sometimes of low quality and lack distinct edges, which can lead to inaccurate diagnosis [10][11]. Because fetal development is complex, abnormalities can significantly affect the child’s health and sometimes the mother’s [12].

In this regard, progress in computer science has produced a wide variety of methods within the broader field of artificial intelligence (AI), such as machine learning (ML), deep learning (DL), and neural networks (NN), that have gained notable popularity in the medical field [13][14][15][16][17][18]. Their applications include, but are not limited to, image classification, segmentation, detection of specific objects within images, and regression analysis. Consequently, numerous studies have developed DL- and ML-based models for the accurate recognition of various types of prenatal abnormalities, including heart defects, CNS malformations, respiratory diseases, and renal anomalies, either in the context of chromosomal disorders or in isolated form.

Figure 1. An overview of the most common risk factors associated with fetal abnormalities of the heart, brain, lung, and kidneys. These risk factors can have a profound impact on the health and well-being of newborns. Limiting the exposure to these risk factors can mitigate the risk of fetal defects [19][20][21][22][23].

2. Methods in Machine Learning for Fetal Anomaly Detection

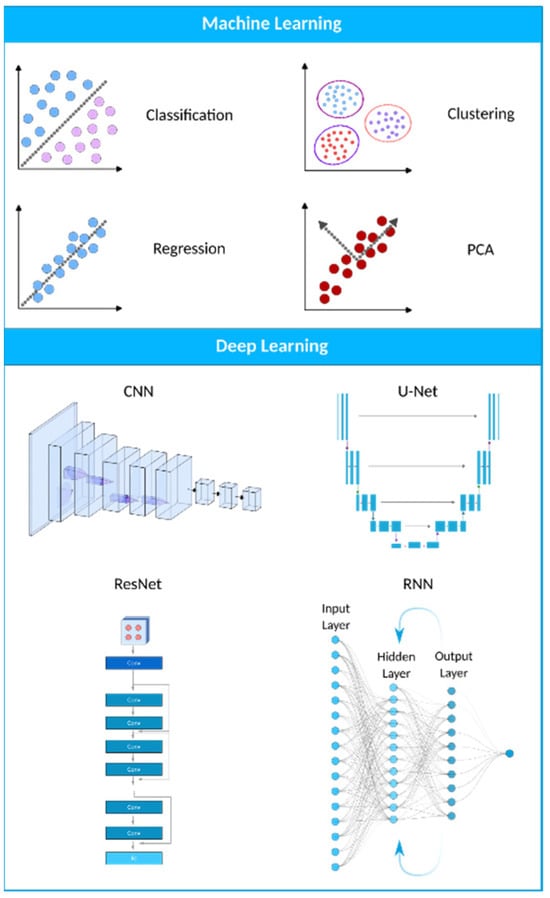

Machine learning (ML) is a computational technique that originated in the field of computer science. In recent years, ML has been used extensively in various fields, such as medical image analysis, and has provided many valuable methods and approaches for more accurate and specific diagnoses. The field of medical image analysis is rapidly evolving, and new models and techniques are constantly emerging (Figure 2). One of the more widely used techniques in this field is deep learning (DL). A recent study evaluated the practicality of DL-based models within clinics and found that AI-driven technologies can significantly help sonographers by performing disruptive tasks automatically, thus allowing technicians to focus mainly on interpreting images [24]. AI-based tools have great potential to bring about a paradigm shift in how medicine is practiced. Many researchers have now constructed ML- and DL-based models for applications ranging from estimating gestational age [25] to the simultaneous detection of anomalies in several fetal organs, which will be discussed in more detail in the following sections.

Figure 2. A visual representation of the AI landscape with three primary subsections: artificial intelligence, machine learning, and deep learning. The figure highlights four deep learning models (CNN, U-Net, ResNet, and RNN) and four machine learning algorithms (classification, clustering, PCA, and regression) as key components within these domains.

3. Applications of Machine Learning in Fetal Anomaly Detection

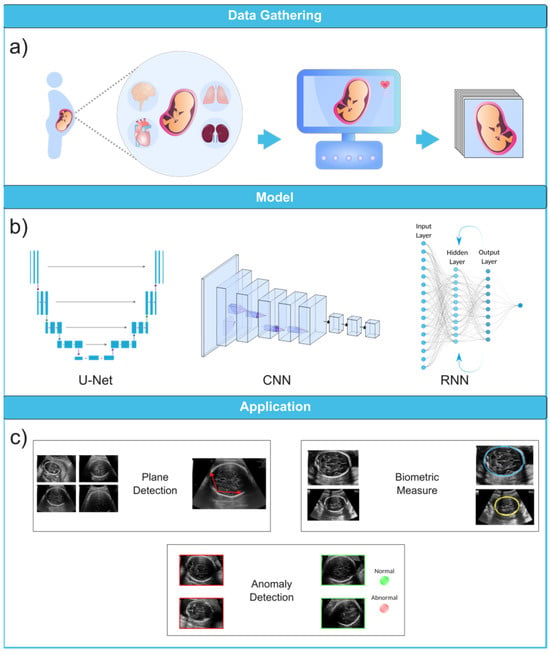

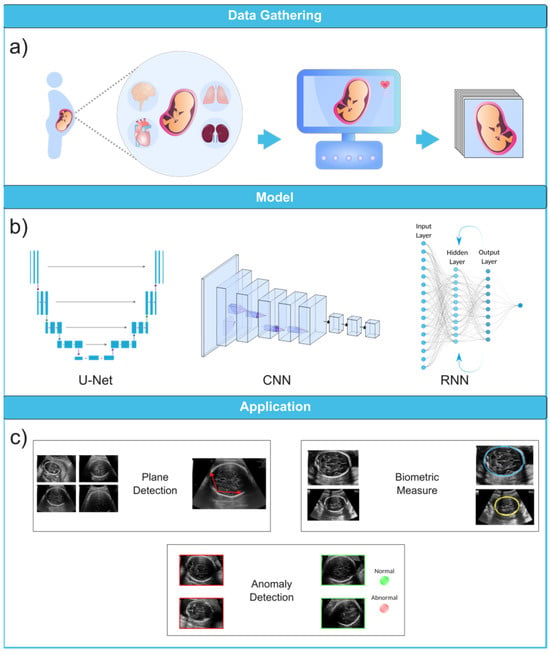

To fully appreciate the role of machine learning in the diagnosis of fetal abnormalities, it is necessary to first become familiar with the standard imaging technique that serves as the foundation of this diagnostic procedure. In comparison to computed tomography (CT) and magnetic resonance imaging (MRI), US imaging is the preferable method since it allows for real-time, cost-effective prenatal examination without the use of ionizing radiation. The standard procedure for fetal anomaly detection is typically a multi-step process, starting with the identification and interpretation of the sonographic images (Figure 3a). The initial scans are obtained in the first trimester, followed by a detailed anatomic survey in the second trimester. This survey involves the examination of multiple fetal organ systems and structures like the heart, brain, lungs, and kidneys, among others. Following this, the images are analyzed, pre-processed for any potential noise and errors, and finally fed into ML-based models for the detection of abnormalities or deviations from the normal developmental patterns (Figure 3b,c). ML can significantly streamline this process by automating the initial analysis and potentially identifying abnormalities with greater accuracy and speed than traditional manual interpretation.

Figure 3. Ultrasound image analysis pipeline. (a) In this initial phase, ultrasound imaging is performed on a pregnant woman to identify potential fetal organ abnormalities. (b) This section presents a variety of deep learning models designed for different ultrasound image analysis tasks, such as CNN, U-Net, and RNN. (c) This section demonstrates the wide-ranging applications facilitated by deep learning models, including biometric measurements (e.g., head circumference), standard plane identification, and detection of fetal anomalies. Ultrasound images were obtained from the following dataset on Kaggle (https://www.kaggle.com/datasets/rahimalargo/fetalultrasoundbrain, accessed on 1 August 2023).

3.1. Ultrasound Imaging

US imaging provides a real-time, low-cost prenatal evaluation with the additional advantages of being radiation-free and noninvasive in comparison to CT and MRI [26]. During a US exam, a transducer probe is placed against the mother’s abdomen and moved to visualize fetal structures. The probe transmits high-frequency sound waves, which are reflected by tissue interfaces to produce two-dimensional grayscale images representing tissue planes. The US machine measures the time interval between transmitted and reflected waves to localize anatomical structures. Repeated pulses and reflections generate real-time visualization of the fetus. US can capture standard views such as the four-chamber heart, profile, lips, brain, spine, and extremities [27][28][29]. Fetal standard planes in US imaging are specific anatomical views used to assess fetal development. They provide a standardized orientation for evaluating different structures and measurements in the fetus, aiding in diagnosing potential abnormalities or monitoring the growth and well-being of the developing baby during pregnancy. Thus, the automatic recognition of standard planes in fetal US images is an important step toward the automated diagnosis of fetal anomalies.
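The time-of-flight localization described above can be illustrated with a short calculation. The sketch below is only illustrative: it assumes a single average speed of sound of 1540 m/s, the value conventionally used for soft tissue, and the function name `echo_depth_mm` is hypothetical. Because the pulse travels to the reflector and back, the depth is half of the speed multiplied by the round-trip time.

```python
# Assumed average speed of sound in soft tissue (conventional value, m/s).
SPEED_OF_SOUND_TISSUE_M_S = 1540.0


def echo_depth_mm(round_trip_time_us: float,
                  speed_m_s: float = SPEED_OF_SOUND_TISSUE_M_S) -> float:
    """Estimate reflector depth (mm) from the round-trip echo time (microseconds).

    The pulse covers the probe-to-reflector distance twice, so the
    one-way depth is speed * time / 2.
    """
    if round_trip_time_us < 0:
        raise ValueError("round-trip time must be non-negative")
    round_trip_time_s = round_trip_time_us * 1e-6
    depth_m = speed_m_s * round_trip_time_s / 2.0
    return depth_m * 1000.0


if __name__ == "__main__":
    # An echo arriving 130 microseconds after transmission.
    print(f"{echo_depth_mm(130.0):.1f} mm")
```

Under these assumptions, an echo received 130 µs after transmission corresponds to a reflector roughly 10 cm from the probe. Real scanners combine many such echoes across transducer elements to form each image line.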

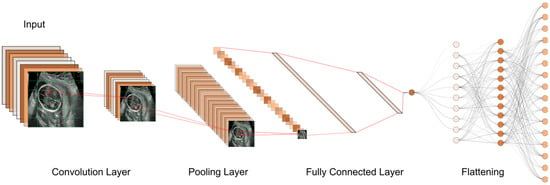

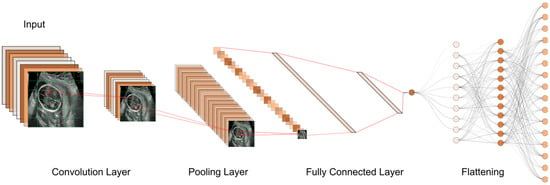

To date, numerous studies have been conducted to find the best models and approaches for reliable US image and video segmentation [30][31][32][33]. The evaluation of fetal health is one of the most common applications of ultrasound technology. In particular, ultrasound is used to monitor the development of a fetus and detect any abnormalities early on. Placental anomalies, growth restrictions, and structural defects all fall into this category. Owing to their strong pattern recognition capabilities, DL models such as CNNs have proven effective in the detection of abnormalities (Figure 4).

Figure 4. The typical workflow of a CNN for ultrasound image analysis. Convolution Layer: the initial layer, where input images are processed using convolution operations to extract features. Pooling Layer: the subsequent layer, where pooling operations (e.g., max-pooling) are applied to reduce spatial dimensions while retaining important information. Flattening: the conversion of the output from the previous layers into a one-dimensional vector for further processing. Fully Connected Layer: the layer that combines the extracted features to make classification decisions or predictions.
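The stages named in Figure 4 can be traced in a few lines of NumPy. This is a minimal sketch of a forward pass only, with random untrained weights and a random array standing in for a grayscale US patch; the layer sizes, the two output classes, and all function names are assumptions chosen for illustration, not the architecture of any model discussed here.

```python
import numpy as np


def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation of one channel with one kernel."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool2d(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = (x.shape[0] // size) * size
    w = (x.shape[1] // size) * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


def softmax(z):
    z = z - np.max(z)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def tiny_cnn_forward(image, kernels, dense_w, dense_b):
    # Convolution -> ReLU -> pooling, once per filter.
    feature_maps = [max_pool2d(relu(conv2d(image, k))) for k in kernels]
    # Flattening: concatenate all feature maps into one vector.
    flat = np.concatenate([fm.ravel() for fm in feature_maps])
    # Fully connected layer followed by softmax class probabilities.
    return softmax(dense_w @ flat + dense_b)


rng = np.random.default_rng(0)
image = rng.random((28, 28))              # stand-in for a grayscale patch
kernels = rng.normal(size=(4, 3, 3))      # four untrained 3x3 filters
# 28x28 -> conv 26x26 -> pool 13x13, times four filters = 676 features
dense_w = rng.normal(size=(2, 4 * 13 * 13)) * 0.01
dense_b = np.zeros(2)
probs = tiny_cnn_forward(image, kernels, dense_w, dense_b)
print(probs.shape, probs.sum())
```

In practice these layers are stacked several times and the weights are learned from labeled images with a deep learning framework; the sketch only shows how the data shape changes from image to class probabilities.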

3.1.1. First Trimester

First-trimester US imaging is typically performed between 11 and 13 weeks of gestation [34]. Its primary uses are to confirm pregnancy viability, determine gestational age, evaluate multiple gestations, and screen for significant fetal anomalies such as neural tube defects, abdominal wall defects, cardiac anomalies, increased nuchal translucency (NT), and some significant fetal brain abnormalities [35][36]. An abnormal NT measurement (≥3.5 mm, above the 99th percentile) during the first-trimester US can strongly predict the risk of chromosomal abnormalities and even congenital heart defects [37][38][39].

3.1.2. Second Trimester

Second-trimester US imaging is commonly performed between 18 and 22 weeks of gestation. The primary aim is a detailed anatomical survey that evaluates fetal growth, screens fully for structural abnormalities, and assesses placental growth and status. The fetal anatomy scan assesses the brain, face, spine, heart, lungs, abdomen, kidneys, and extremities [40]. The second-trimester US has high detection rates for major fetal anomalies if performed by a qualified expert. The appropriateness criteria provide screening recommendations for fetuses in the second and third trimesters with varying risk levels [41].

3.1.3. Third Trimester

Third-trimester US imaging is often performed at around 28–32 weeks of gestation to re-confirm fetal growth and position, screen for anomalies that may have developed since the prior scan, and further assess placental location and growth. Fetal anomalies have been reported in about 1 in 300 pregnancies during routine third-trimester ultrasounds [42]. While US is valuable for prenatal screening, it does have limitations. Image quality can be impaired by maternal body habitus, fetal position, shadowing from bones, and low amniotic fluid volume [43][44]. Interpretation requires extensive training and is subject to human error. Computerized analysis of US images using ML offers the potential to overcome some of these human limitations. ML methods aim to improve screening accuracy and standardize interpretation by applying AI to analyze US data. These models can be trained to identify anomalies in poor-quality scans and detect subtle or complex patterns that technicians may miss. However, further research is still needed to fully integrate ML into clinics and medical workflows.

3.2. Diagnosis of Fetal Abnormalities

3.2.1. Congenital Heart Diseases

Congenital heart diseases (CHDs) are common and severe congenital malformations in fetuses, occurring in approximately 6 to 13 out of every 1000 cases [45]. Although CHDs may have no prenatal symptoms, they may result in significant morbidity, and even death, later in life. Since heart defects are the most common fetal anomalies, research interest in them is correspondingly higher than in other types of defects. Evaluating the cardiac function of a fetus is challenging due to factors such as the fetus’s constant movement, rapid heart rate, small size, limited access, and insufficient expertise in fetal echocardiography among some sonographers, all of which make the identification of complex abnormal heart structures difficult and prone to errors [46][47][48]. Fetal echocardiography was introduced about 25 years ago and now needs to incorporate advanced technologies.

The failure to identify CHD during prenatal screening is more strongly influenced by a deficiency in adaptation skills when performing the second-trimester standard anomaly scan (SAS) than by situational variables such as body mass index or fetal position. Cardiac images were of insufficient quality considerably more often in undetected cases than in detected ones. Even so, CHD went unrecognized in 31% of instances despite satisfactory image quality. Furthermore, in 20% of the instances in which CHD went undetected, the condition was not visually apparent even though the images were of high quality [49].

Echocardiography, a specialized US technique, remains the primary and essential method for the early detection of fetal cardiac abnormalities and mortality risk, with the aim of identifying congenital heart defects before birth. It is extensively employed during pregnancy, and the obtained images can be used to train DL models such as CNNs to automate and enhance the identification of abnormalities [50]. A fetal echocardiogram is a detailed US examination of the fetal heart performed prenatally; using AI to analyze echocardiograms holds promise for advancing prenatal diagnosis and improving heart defect screening [51].

Although generative adversarial networks (GANs) have demonstrated their effectiveness in anomaly detection and generative modeling, analytical performance on intricate tasks such as fetal echocardiography assessment can be enhanced further by training an ensemble of multiple neural networks and integrating their predictions. An ensemble combines several different neural networks to address the same machine-learning objective. The key concept is that the combined prediction typically outperforms that of any individual network.
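One simple way to integrate the predictions of several networks is soft voting, in which the class probabilities of the individual models are averaged before a label is chosen. The sketch below assumes this averaging scheme; the three probability arrays are invented outputs for three images and two classes (normal, abnormal), not results from any study.

```python
import numpy as np


def ensemble_average(prob_list):
    """Soft voting: average class probabilities from several models.

    prob_list holds one array per model, each of shape (n_samples, n_classes).
    Returns the averaged probabilities and the predicted class indices.
    """
    stacked = np.stack(prob_list, axis=0)   # (n_models, n_samples, n_classes)
    mean_probs = stacked.mean(axis=0)
    return mean_probs, mean_probs.argmax(axis=1)


# Hypothetical outputs of three networks; columns are [normal, abnormal].
model_a = np.array([[0.9, 0.1], [0.4, 0.6], [0.55, 0.45]])
model_b = np.array([[0.8, 0.2], [0.3, 0.7], [0.40, 0.60]])
model_c = np.array([[0.7, 0.3], [0.6, 0.4], [0.35, 0.65]])

mean_probs, labels = ensemble_average([model_a, model_b, model_c])
print(labels)
```

For the third image, the first model alone would predict "normal", while the averaged probabilities favor "abnormal"; this is how an ensemble can smooth out the errors of a single member. Weighted averaging and majority voting are common alternatives.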

The four-chamber view facilitates the assessment of cardiac chamber size and the septum. In contrast, the left ventricular outflow tract view offers a visualization of the aortic valve and root. The right ventricular outflow tract view provides insight into the pulmonary valve and artery, and the three-vessel view confirms normal anatomy by showcasing the pulmonary artery, aorta, and superior vena cava.

Zhou et al. [52] introduced a category attention network aimed at simultaneous image segmentation for the four-chamber view. They modified the SOLOv2 model for object instance segmentation. However, SOLOv2 encounters a potential misclassification issue with grids within divisions containing pixels from different instance categories. This discrepancy arises because the category score of a grid might erroneously surpass that of surrounding grids, which affects the final quality of instance segmentation. Certain image portions would become intertwined, leading to challenges in accurate object classification. To address this, the researchers integrated a “category attention module” (CAM) into SOLOv2, creating CA-ISNet. The CAM analyzes various image sections, aiding in accurately determining object categories. The proposed CA-ISNet model underwent training using a dataset of 319 images encompassing the four cardiac chambers of the fetuses.

3.2.2. Head and Neck Anomalies

The development of the fetal brain is one of the most essential processes taking place during weeks 18–21 of pregnancy. Any abnormality in the fetal brain can have severe effects on various brain functions, such as cognitive function, motor skills, language development, cortical maturation, and learning capabilities [53][54]. Thus, a precise anomaly detection method is of the utmost importance. Currently, US is still the most commonly used method for the initial examination of fetal brain development during pregnancy. During the 18- to 21-week period, US imaging is used to measure the cerebrum, midbrain, cerebellum, brainstem, and other regions of the brain as part of the screening for fetal abnormalities [55][56]. To detect fetal brain abnormalities, Sreelakshmy et al. developed a model (ReU-Net) based on U-Net and ResNet for the segmentation of the fetal cerebellum using 740 fetal brain US images [57].

The cerebellum is an essential part of the brain that plays a crucial role in motor control, coordination, and balance. The fetal cerebellum can be seen and distinguished from other parts of the brain in US images, which makes it relatively easy for technicians to examine during scans and, consequently, for researchers to employ DL-based models to segment the obtained images. Moreover, ResNet is a popular model frequently used for medical image segmentation, and it uses skip connections to address the vanishing gradient problem. More specifically, in deep networks, the gradients that guide the weight updates of each layer can become smaller and smaller as they are multiplied from layer to layer, eventually approaching zero. This makes it hard for the network to learn complex patterns from images, which is essential in medical image processing. Besides using ResNets, Sreelakshmy et al. also employed the Wiener filter, which reduces unwanted noise in US images. As a result, their ReU-Net model achieved a precision of 94% and a Dice coefficient of 91%. Singh et al. also used the ResNet model in conjunction with U-Net to automate the cerebellum segmentation procedure. In their study, however, including residual blocks and using dilated convolution in the last two layers improved cerebellar segmentation from noisy US images [58].
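The two segmentation metrics reported above have simple pixel-wise definitions: the Dice coefficient is twice the overlap between the predicted and reference masks divided by the sum of their sizes, and precision is the fraction of predicted pixels that are correct. The sketch below computes both on a toy pair of binary masks; the masks and the handling of empty masks are illustrative assumptions, not the evaluation code of the cited studies.

```python
import numpy as np


def dice_coefficient(pred, target):
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, target).sum() / denom)


def precision(pred, target):
    """Fraction of predicted foreground pixels that are truly foreground."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    predicted_positive = pred.sum()
    if predicted_positive == 0:
        return 0.0  # convention assumed here: no prediction, no precision
    return float(np.logical_and(pred, target).sum() / predicted_positive)


# Toy masks: the reference covers 4 pixels, the prediction 6, overlapping in 4.
target = np.zeros((4, 4), dtype=bool)
target[1:3, 1:3] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True

print(dice_coefficient(pred, target), precision(pred, target))
```

Here the prediction over-segments by two pixels, giving a Dice coefficient of 0.8 and a precision of about 0.67.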

The development of subcortical volume in a fetus is a crucial aspect to monitor during pregnancy. Hesse et al. constructed a CNN-based model for the automated segmentation of subcortical structures in 537 3D US images [59]. One important aspect of this research is the use of few-shot learning to train the CNN with very few manually annotated examples (in this case, only nine). Few-shot learning is a machine learning paradigm in which a model is trained to perform a task using a very restricted amount of data, often significantly less than conventional machine learning approaches require. Its basic goal is to make models flexible enough to handle tasks that would otherwise need extensive labeled data collection, which can be time-consuming or expensive.

Cystic hygroma is an abnormal growth that frequently occurs in the fetal nuchal area, within the posterior triangle of the neck. This growth originates from a lymphatic system abnormality, which develops from jugular-lymphatic blockage in 1 in every 285 fetuses [60]. Cystic hygroma is diagnosed by evaluating the NT thickness. Studies have also shown a connection between cystic hygroma and chromosomal abnormalities in first-trimester screenings [61]. In this regard, Walker et al. trained a CNN model called DenseNet on a dataset of 289 sagittal fetal US images (129 from cystic hygroma cases and 160 from normal NT controls) in order to diagnose cystic hygroma in first-trimester US images. The model classified images as either “normal” or “cystic hygroma”, with an overall accuracy of 93% [62].

When US is used to look for abnormalities in the fetal brain, the standard planes of the fetal brain are commonly used. However, fetal head plane detection is a subjective procedure and is consequently prone to errors by technicians. Recently, a study was conducted to automate fetal head plane detection by constructing a multi-task learning framework with regional CNNs (R-CNN). This MF R-CNN model was able to accurately locate six fetal anatomical structures and perform a quality assessment of US images [63].

Based on the same dataset provided by Xie et al. [64], another study was conducted to develop a computer-aided framework for diagnosing fetal brain anomalies. Craniocerebral regions of fetal head images were first extracted using a DCNN with U-Net and a VGG-Net network, and then classified into normal and abnormal categories. On small datasets, VGG networks can overfit because of their large number of parameters. However, the researchers applied this model to a large dataset of US images and achieved an overall accuracy of 91.5%. In addition, they implemented class activation mapping (CAM, not to be confused with the category attention module described earlier) to localize lesions and provide visual evidence for diagnosing abnormal cases, which can make the results visually comprehensible for non-expert technicians. However, the intersection over union (IoU) of the predicted lesions was too low, and thus more advanced object detection techniques are required for more precise localization [65].
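IoU, the localization measure mentioned above, is the area shared by the predicted and reference regions divided by the area covered by either of them. The following sketch computes it for two axis-aligned bounding boxes; the `(x_min, y_min, x_max, y_max)` box format and the example coordinates are assumptions made for illustration.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (1, 1, 3, 3)   # hypothetical predicted lesion box
    reference = (0, 0, 2, 2)   # hypothetical annotated lesion box
    print(round(box_iou(predicted, reference), 3))
```

Two 2×2 boxes that overlap in a single unit square share only one seventh of their combined area, which illustrates how quickly IoU drops when a predicted region is even moderately displaced.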

3.2.3. Respiratory Diseases

The development and function of the lungs are crucial for the well-being and survival of fetuses. Malformations caused by underdevelopment or abnormalities inside the lung structure can lead to serious health issues and even death in newborns. For example, neonatal respiratory morbidity (NRM), such as respiratory distress syndrome or transient tachypnea of the newborn, is often seen when a fetus’s lungs are not fully developed, and it is still a major cause of morbidity and death [66]. Immature fetal lungs are closely linked to the respiratory complications experienced by newborns [67]. In addition, fetal lung lesions are estimated to manifest in around 1 in 15,000 live births and are believed to originate from a range of abnormalities associated with fetal lung airway malformation [68]. To predict NRM, a model based on random undersampling with AdaBoost (RUSBoost) was developed using features extracted from fetal lung images. This model was able to accurately predict NRM in fetal lung images. However, the regions of interest within the included images were located manually, which is time-consuming and should be automated for use in clinics. Small sample sizes and single-source datasets were further limitations [69].
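RUSBoost addresses class imbalance by randomly discarding majority-class samples before each boosting round, so that each weak learner is trained on a balanced subset. The sketch below shows only that undersampling step in NumPy, not the boosting itself and not the cited study's pipeline; the function name, the synthetic feature matrix, and the 8-to-2 class split are hypothetical.

```python
import numpy as np


def random_undersample(X, y, rng=None):
    """Randomly drop samples so every class has as many samples as the rarest class.

    rng may be None, an integer seed, or a numpy Generator.
    """
    rng = np.random.default_rng(rng)
    X = np.asarray(X)
    y = np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    n_keep = counts.min()
    # Pick n_keep indices per class without replacement.
    keep = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_keep, replace=False)
        for c in classes
    ])
    rng.shuffle(keep)
    return X[keep], y[keep]


# Synthetic example: 8 majority-class samples and 2 minority-class samples.
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)
X_bal, y_bal = random_undersample(X, y, rng=0)
print(np.bincount(y_bal))
```

In a full RUSBoost model this resampling is repeated inside every boosting iteration, so different majority-class samples are seen by different weak learners.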

3.2.4. Chromosomal Abnormalities

Chromosomal disorders are frequently occurring genetic conditions that contribute to congenital disabilities. These disorders arise from abnormalities in the structure or number of chromosomes in an individual’s cells, leading to significant health challenges and impairments present from birth. Several US-based assessments, however, can help detect them early in pregnancy:

- NT measurement, which measures the thickness of the fluid-filled space at the back of the fetus’s neck.

- Detailed anomaly scan, a thorough US examination that checks for any structural abnormalities in fetuses.

- Fetal echocardiography, which focuses on evaluating the fetal heart structure and function to detect cardiac anomalies.

- Nasal bone (NB), whose absence is a valuable biomarker of Down syndrome in the first trimester of pregnancy.

In addition to the mentioned procedures, another technique that can be used to detect chromosomal disorders from US images is the measurement of fetal facial structure. Certain facial features can indicate the presence of certain genetic conditions [70].

Tang et al. developed a two-stage ensemble learning model named Fgds-EL that combines CNN and random forest (RF) models to diagnose genetic diseases based on the facial features of fetuses. This study used 932 images (680 were labeled normal, and 252 were from fetuses diagnosed with various genetic disorders). To detect anomalies, the researchers extracted key features of the fetal facial structure, such as the nasal bone, frontal bone, and jaw. These are specific locations where genetic disorders such as trisomy 21, 18, 13, and others can be identified. The CNN was trained to extract high-level features from the facial images, while the RF was used to classify the extracted features and make the final diagnosis. The proposed model achieved a sensitivity of 0.92 and a specificity of 0.97 on the test set [71].
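Sensitivity and specificity, the two figures reported above, are derived from the confusion matrix of a binary classifier: sensitivity is the share of affected cases that are flagged, and specificity is the share of unaffected cases that are correctly cleared. The sketch below shows the arithmetic; the counts are invented for illustration and are not the counts of the cited study.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts.

    tp/fn: affected cases classified as abnormal/normal.
    tn/fp: unaffected cases classified as normal/abnormal.
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one affected and one unaffected case")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


if __name__ == "__main__":
    # Hypothetical test set: 50 affected and 100 unaffected fetuses.
    sens, spec, acc = diagnostic_metrics(tp=45, fn=5, tn=90, fp=10)
    print(sens, spec, acc)
```

Reporting both values matters on imbalanced test sets, where a model can reach high accuracy while still missing many affected cases.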

4. Conclusions

In conclusion, the field of medical image analysis has advanced significantly in recent years with the advent of DL models and data processing techniques that can substantially improve the quality of final models. Eventually, the developed models should be able to outperform sonographers and technicians in terms of accuracy and efficiency. These AI-driven models will not simply enhance the diagnostic process but also enable more personalized treatment plans based on individual patient data. Furthermore, the use of such models can reduce the workload of healthcare professionals, ultimately leading to a more streamlined healthcare system globally. However, several challenges still slow down progress in this area of research, including the difficulty of training accurate models for diagnosing evolving fetal brain abnormalities and the lack of labeled ultrasound images for certain conditions. Nevertheless, ongoing research and the advent of newer, more robust algorithms provide hope for the future.

References

- Di Serafino, M.; Iacobellis, F.; Schillirò, M.L.; D’auria, D.; Verde, F.; Grimaldi, D.; Orabona, G.D.; Caruso, M.; Sabatino, V.; Rinaldo, C.; et al. Common and Uncommon Errors in Emergency Ultrasound. Diagnostics 2022, 12, 631.

- Krispin, E.; Dreyfuss, E.; Fischer, O.; Wiznitzer, A.; Hadar, E.; Bardin, R. Significant deviations in sonographic fetal weight estimation: Causes and implications. Arch. Gynecol. Obstet. 2020, 302, 1339–1344.

- Cate, O.T.; Regehr, G. The Power of Subjectivity in the Assessment of Medical Trainees. Acad. Med. 2019, 94, 333–337.

- Feygin, T.; Khalek, N.; Moldenhauer, J.S. Fetal brain, head, and neck tumors: Prenatal imaging and management. Prenat. Diagn. 2020, 40, 1203–1219.

- Sileo, F.G.; Curado, J.; D’Antonio, F.; Benlioglu, C.; Khalil, A. Incidence and outcome of prenatal brain abnormality in twin-to-twin transfusion syndrome: Systematic review and meta-analysis. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2022, 60, 176–184.

- Bagherzadeh, R.; Gharibi, T.; Safavi, B.; Mohammadi, S.Z.; Karami, F.; Keshavarz, S. Pregnancy; an opportunity to return to a healthy lifestyle: A qualitative study. BMC Pregnancy Childbirth 2021, 21, 751.

- Flierman, S.; Tijsterman, M.; Rousian, M.; de Bakker, B.S. Discrepancies in Embryonic Staging: Towards a Gold Standard. Life 2023, 13, 1084.

- Horgan, R.; Nehme, L.; Abuhamad, A. Artificial intelligence in obstetric ultrasound: A scoping review. Prenat. Diagn. 2023, 43, 1176–1219.

- Edwards, L.; Hui, L. First and second trimester screening for fetal structural anomalies. Semin. Fetal Neonatal Med. 2018, 23, 102–111.

- Drukker, L.; Sharma, H.; Karim, J.N.; Droste, R.; Noble, J.A.; Papageorghiou, A.T. Clinical workflow of sonographers performing fetal anomaly ultrasound scans: Deep-learning-based analysis. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2022, 60, 759–765.

- Dawood, Y.; Buijtendijk, M.F.; Shah, H.; Smit, J.A.; Jacobs, K.; Hagoort, J.; Oostra, R.-J.; Bourne, T.; van den Hoff, M.J.; de Bakker, B.S. Imaging fetal anatomy. Semin. Cell Dev. Biol. 2022, 131, 78–92.

- Demirci, O.; Selçuk, S.; Kumru, P.; Asoğlu, M.R.; Mahmutoğlu, D.; Boza, B.; Türkyılmaz, G.; Bütün, Z.; Arısoy, R.; Tandoğan, B. Maternal and fetal risk factors affecting perinatal mortality in early and late fetal growth restriction. Taiwan. J. Obstet. Gynecol. 2015, 54, 700–704.

- Habehh, H.; Gohel, S. Machine Learning in Healthcare. Curr. Genom. 2021, 22, 291–300.

- van der Velden, B.H.; Kuijf, H.J.; Gilhuijs, K.G.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

- Hanchard, S.E.L.; Dwyer, M.C.; Liu, S.; Hu, P.; Tekendo-Ngongang, C.; Waikel, R.L.; Duong, D.; Solomon, B.D. Scoping review and classification of deep learning in medical genetics. Genet. Med. Off. J. Am. Coll. Med. Genet. 2022, 24, 1593–1603.

- Jiang, H.; Diao, Z.; Shi, T.; Zhou, Y.; Wang, F.; Hu, W.; Zhu, X.; Luo, S.; Tong, G.; Yao, Y.-D. A review of deep learning-based multiple-lesion recognition from medical images: Classification, detection and segmentation. Comput. Biol. Med. 2023, 157, 106726.

- Alzubaidi, M.; Agus, M.; Alyafei, K.; Althelaya, K.A.; Shah, U.; Abd-Alrazaq, A.; Anbar, M.; Makhlouf, M.; Househ, M. Toward deep observation: A systematic survey on artificial intelligence techniques to monitor fetus via ultrasound images. iScience 2022, 25, 104713.

- Yang, X.; Yu, L.; Li, S.; Wen, H.; Luo, D.; Bian, C.; Qin, J.; Ni, D.; Heng, P.-A. Towards Automated Semantic Segmentation in Prenatal Volumetric Ultrasound. IEEE Trans. Med. Imaging 2019, 38, 180–193.

- Massalska, D.; Bijok, J.; Kucińska-Chahwan, A.; Zimowski, J.G.; Ozdarska, K.; Panek, G.; Roszkowski, T. Triploid pregnancy–Clinical implications. Clin. Genet. 2021, 100, 368–375.

- Lee, K.-S.; Choi, Y.-J.; Cho, J.; Lee, H.; Lee, H.; Park, S.J.; Park, J.S.; Hong, Y.-C. Environmental and Genetic Risk Factors of Congenital Anomalies: An Umbrella Review of Systematic Reviews and Meta-Analyses. J. Korean Med. Sci. 2021, 36, e183.

- Harris, B.S.; Bishop, K.C.; Kemeny, H.R.; Walker, J.S.; Rhee, E.; Kuller, J.A. Risk Factors for Birth Defects. Obstet. Gynecol. Surv. 2017, 72, 123–135.

- Abebe, S.; Gebru, G.; Amenu, D.; Mekonnen, Z.; Dube, L. Risk factors associated with congenital anomalies among newborns in southwestern Ethiopia: A case-control study. PLoS ONE 2021, 16, e0245915.

- Helle, E.; Priest, J.R. Maternal Obesity and Diabetes Mellitus as Risk Factors for Congenital Heart Disease in the Offspring. J. Am. Heart Assoc. 2020, 9, e011541.

- Matthew, J.; Skelton, E.; Day, T.G.; Zimmer, V.A.; Gomez, A.; Wheeler, G.; Toussaint, N.; Liu, T.; Budd, S.; Lloyd, K.; et al. Exploring a new paradigm for the fetal anomaly ultrasound scan: Artificial intelligence in real time. Prenat. Diagn. 2022, 42, 49–59.

- Dan, T.; Chen, X.; He, M.; Guo, H.; He, X.; Chen, J.; Xian, J.; Hu, Y.; Zhang, B.; Wang, N.; et al. DeepGA for automatically estimating fetal gestational age through ultrasound imaging. Artif. Intell. Med. 2023, 135, 102453.

- Torres, H.R.; Morais, P.; Oliveira, B.; Birdir, C.; Rüdiger, M.; Fonseca, J.C.; Vilaça, J.L. A review of image processing methods for fetal head and brain analysis in ultrasound images. Comput. Methods Programs Biomed. 2022, 215, 106629.

- Sotiriadis, A.; Figueras, F.; Eleftheriades, M.; Papaioannou, G.K.; Chorozoglou, G.; Dinas, K.; Papantoniou, N. First-trimester and combined first- and second-trimester prediction of small-for-gestational age and late fetal growth restriction. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2018, 53, 55–61.

- Femina, M.A.; Raajagopalan, S.P. Anatomical structure segmentation from early fetal ultrasound sequences using global pollination CAT swarm optimizer–based Chan–Vese model. Med. Biol. Eng. Comput. 2019, 57, 1763–1782.

- Pertl, B.; Eder, S.; Stern, C.; Verheyen, S. The Fetal Posterior Fossa on Prenatal Ultrasound Imaging: Normal Longitudinal Development and Posterior Fossa Anomalies. Ultraschall Der Med. Eur. J. Ultrasound 2019, 40, 692–721.

- Karami, E.; Shehata, M.S.; Smith, A. Estimation and tracking of AP-diameter of the inferior vena cava in ultrasound images using a novel active circle algorithm. Comput. Biol. Med. 2018, 98, 16–25.

- Karami, E.; Shehata, M.; Smith, A. Segmentation and tracking of inferior vena cava in ultrasound images using a novel polar active contour algorithm. In Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, QC, USA, 14–16 November 2017; pp. 745–749.

- Jafari, Z.; Karami, E. Breast Cancer Detection in Mammography Images: A CNN-Based Approach with Feature Selection. Information 2023, 14, 410.

- Karami, E.; Shehata, M.S.; Smith, A. Adaptive Polar Active Contour for Segmentation and Tracking in Ultrasound Videos. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 1209–1222.

- Yasrab, R.; Fu, Z.; Zhao, H.; Lee, L.H.; Sharma, H.; Drukker, L.; Papageorghiou, A.T.; Noble, J.A. A Machine Learning Method for Automated Description and Workflow Analysis of First Trimester Ultrasound Scans. IEEE Trans. Med. Imaging 2022, 42, 1301–1313.

- Volpe, N.; Dall’Asta, A.; Di Pasquo, E.; Frusca, T.; Ghi, T. First-trimester fetal neurosonography: Technique and diagnostic potential. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2021, 57, 204–214.

- Mahdavi, S.; Karami, F.; Sabbaghi, S. Non-invasive prenatal diagnosis of foetal gender through maternal circulation in first trimester of pregnancy. J. Obstet. Gynaecol. J. Inst. Obstet. Gynaecol. 2019, 39, 1071–1074.

- Brown, I.; Rolnik, D.L.; Fernando, S.; Menezes, M.; Ramkrishna, J.; Costa, F.d.S.; Meagher, S. Ultrasound findings and detection of fetal abnormalities before 11 weeks of gestation. Prenat. Diagn. 2021, 41, 1675–1684.

- Kristensen, R.; Omann, C.; Gaynor, J.W.; Rode, L.; Ekelund, C.K.; Hjortdal, V.E. Increased nuchal translucency in children with congenital heart defects and normal karyotype—Is there a correlation with mortality? Front. Pediatr. 2023, 11, 1104179.

- Minnella, G.P.; Crupano, F.M.; Syngelaki, A.; Zidere, V.; Akolekar, R.; Nicolaides, K.H. Diagnosis of major heart defects by routine first-trimester ultrasound examination: Association with increased nuchal translucency, tricuspid regurgitation and abnormal flow in ductus venosus. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2020, 55, 637–644.

- Shi, B.; Han, Z.; Zhang, W.B.; Li, W. The clinical value of color ultrasound screening for fetal cardiovascular abnormalities during the second trimester: A systematic review and meta-analysis. Medicine 2023, 102, e34211.

- Expert Panel on GYN and OB Imaging; Sussman, B.L.; Chopra, P.; Poder, L.; Bulas, D.I.; Burger, I.; Feldstein, V.A.; Laifer-Narin, S.L.; Oliver, E.R.; Strachowski, L.M.; et al. ACR Appropriateness Criteria® Second and Third Trimester Screening for Fetal Anomaly. J. Am. Coll. Radiol. 2021, 18, S189–S198.

- Drukker, L.; Bradburn, E.; Rodriguez, G.B.; Roberts, N.W.; Impey, L.; Papageorghiou, A.T. How often do we identify fetal abnormalities during routine third-trimester ultrasound? A systematic review and meta-analysis. BJOG Int. J. Obstet. Gynaecol. 2021, 128, 259–269.

- Kerr, R.; Liebling, R. The fetal anomaly scan. Obstet. Gynaecol. Reprod. Med. 2021, 31, 72–76.

- Chaoui, R.; Abuhamad, A.; Martins, J.; Heling, K.S. Recent Development in Three and Four Dimension Fetal Echocardiography. Fetal Diagn. Ther. 2020, 47, 345–353.

- Xiao, S.; Zhang, J.; Zhu, Y.; Zhang, Z.; Cao, H.; Xie, M.; Zhang, L. Application and Progress of Artificial Intelligence in Fetal Ultrasound. J. Clin. Med. 2023, 12, 3298.

- Mennickent, D.; Rodríguez, A.; Opazo, M.C.; Riedel, C.A.; Castro, E.; Eriz-Salinas, A.; Appel-Rubio, J.; Aguayo, C.; Damiano, A.E.; Guzmán-Gutiérrez, E.; et al. Machine learning applied in maternal and fetal health: A narrative review focused on pregnancy diseases and complications. Front. Endocrinol. 2023, 14, 1130139.

- Karim, J.N.; Bradburn, E.; Roberts, N.; Papageorghiou, A.T.; for the ACCEPTS study. First-trimester ultrasound detection of fetal heart anomalies: Systematic review and meta-analysis. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2022, 59, 11–25.

- Haxel, C.S.; Johnson, J.N.; Hintz, S.; Renno, M.S.; Ruano, R.; Zyblewski, S.C.; Glickstein, J.; Donofrio, M.T. Care of the Fetus with Congenital Cardiovascular Disease: From Diagnosis to Delivery. Pediatrics 2022, 150.

- van Nisselrooij, A.E.L.; Teunissen, A.K.K.; Clur, S.A.; Rozendaal, L.; Pajkrt, E.; Linskens, I.H.; Rammeloo, L.; van Lith, J.M.M.; Blom, N.A.; Haak, M.C. Why are congenital heart defects being missed? Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2020, 55, 747–757.

- Reddy, C.D.; Eynde, J.V.D.; Kutty, S. Artificial intelligence in perinatal diagnosis and management of congenital heart disease. Semin. Perinatol. 2022, 46, 151588.

- Arain, Z.; Iliodromiti, S.; Slabaugh, G.; David, A.L.; Chowdhury, T.T. Machine learning and disease prediction in obstetrics. Curr. Res. Physiol. 2023, 6, 100099.

- An, S.; Zhu, H.; Wang, Y.; Zhou, F.; Zhou, X.; Yang, X.; Zhang, Y.; Liu, X.; Jiao, Z.; He, Y. A category attention instance segmentation network for four cardiac chambers segmentation in fetal echocardiography. Comput. Med. Imaging Graph. Off. J. Comput. Med. Imaging Soc. 2021, 93, 101983.

- Leibovitz, Z.; Lerman-Sagie, T.; Haddad, L. Fetal Brain Development: Regulating Processes and Related Malformations. Life 2022, 12, 809.

- Beckers, K.; Faes, J.; Deprest, J.; Delaere, P.R.; Hens, G.; De Catte, L.; Naulaers, G.; Claus, F.; Hermans, R.; Poorten, V.L.V. Long-term outcome of pre- and perinatal management of congenital head and neck tumors and malformations. Int. J. Pediatr. Otorhinolaryngol. 2019, 121, 164–172.

- Hu, Y.; Sun, L.; Feng, L.; Wang, J.; Zhu, Y.; Wu, Q. The role of routine first-trimester ultrasound screening for central nervous system abnormalities: A longitudinal single-center study using an unselected cohort with 3-year experience. BMC Pregnancy Childbirth 2023, 23, 312.

- Cater, S.W.; Boyd, B.K.; Ghate, S.V. Abnormalities of the Fetal Central Nervous System: Prenatal US Diagnosis with Postnatal Correlation. In Proceedings of the 105th Scientific Assembly and Annual Meeting of the Radiological-Society-of-North-America (RSNA), Chicago, IL, USA, 7 December 2019; Volume 40, pp. 1458–1472.

- Sreelakshmy, R.; Titus, A.; Sasirekha, N.; Logashanmugam, E.; Begam, R.B.; Ramkumar, G.; Raju, R. An Automated Deep Learning Model for the Cerebellum Segmentation from Fetal Brain Images. BioMed Res. Int. 2022, 2022, 8342767.

- Singh, V.; Sridar, P.; Kim, J.; Nanan, R.; Poornima, N.; Priya, S.; Reddy, G.S.; Chandrasekaran, S.; Krishnakumar, R. Semantic Segmentation of Cerebellum in 2D Fetal Ultrasound Brain Images Using Convolutional Neural Networks. IEEE Access 2021, 9, 85864–85873.

- Hesse, L.S.; Aliasi, M.; Moser, F.; INTERGROWTH-21(st) Consortium; Haak, M.C.; Xie, W.; Jenkinson, M.; Namburete, A.I. Subcortical segmentation of the fetal brain in 3D ultrasound using deep learning. NeuroImage 2022, 254, 119117.

- Mastromoro, G.; Guadagnolo, D.; Hashemian, N.K.; Bernardini, L.; Giancotti, A.; Piacentini, G.; De Luca, A.; Pizzuti, A. A Pain in the Neck: Lessons Learnt from Genetic Testing in Fetuses Detected with Nuchal Fluid Collections, Increased Nuchal Translucency versus Cystic Hygroma—Systematic Review of the Literature, Meta-Analysis and Case Series. Diagnostics 2022, 13, 48.

- Scholl, J.; Durfee, S.M.; Russell, M.A.; Heard, A.J.; Iyer, C.; Alammari, R.; Coletta, J.; Craigo, S.D.; Fuchs, K.M.; D’alton, M.; et al. First-Trimester Cystic Hygroma: Relationship of nuchal translucency thickness and outcomes. Obstet. Gynecol. 2012, 120, 551–559.

- Walker, M.C.; Willner, I.; Miguel, O.X.; Murphy, M.S.Q.; El-Chaâr, D.; Moretti, F.; Harvey, A.L.J.D.; White, R.R.; Muldoon, K.A.; Carrington, A.M.; et al. Using deep-learning in fetal ultrasound analysis for diagnosis of cystic hygroma in the first trimester. PLoS ONE 2022, 17, e0269323.

- Lin, Z.; Li, S.; Ni, D.; Liao, Y.; Wen, H.; Du, J.; Chen, S.; Wang, T.; Lei, B. Multi-task learning for quality assessment of fetal head ultrasound images. Med. Image Anal. 2019, 58, 101548.

- Xie, H.N.; Wang, N.; He, M.; Zhang, L.H.; Cai, H.M.; Xian, J.B.; Lin, M.F.; Zheng, J.; Yang, Y.Z. Using deep-learning algorithms to classify fetal brain ultrasound images as normal or abnormal. Ultrasound Obstet. Gynecol. Off. J. Int. Soc. Ultrasound Obstet. Gynecol. 2020, 56, 579–587.

- Xie, B.; Lei, T.; Wang, N.; Cai, H.; Xian, J.; He, M.; Zhang, L.; Xie, H. Computer-aided diagnosis for fetal brain ultrasound images using deep convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1303–1312.

- Khalifa, Y.E.A.; Aboulghar, M.M.; Hamed, S.T.; Tomerak, R.H.; Asfour, A.M.; Kamal, E.F. Prenatal prediction of respiratory distress syndrome by multimodality approach using 3D lung ultrasound, lung-to-liver intensity ratio tissue histogram and pulmonary artery Doppler assessment of fetal lung maturity. Br. J. Radiol. 2021, 94, 20210577.

- Ahmed, B.; Konje, J.C. Fetal lung maturity assessment: A historic perspective and Non-invasive assessment using an automatic quantitative ultrasound analysis (a potentially useful clinical tool). Eur. J. Obstet. Gynecol. Reprod. Biol. 2021, 258, 343–347.

- Adams, N.C.; Victoria, T.; Oliver, E.R.; Moldenhauer, J.S.; Adzick, N.S.; Colleran, G.C. Fetal ultrasound and magnetic resonance imaging: A primer on how to interpret prenatal lung lesions. Pediatr. Radiol. 2020, 50, 1839–1854.

- Du, Y.; Jiao, J.; Ji, C.; Li, M.; Guo, Y.; Wang, Y.; Zhou, J.; Ren, Y. Ultrasound-based radiomics technology in fetal lung texture analysis prediction of neonatal respiratory morbidity. Sci. Rep. 2022, 12, 12747.

- Lord, J.; McMullan, D.J.; Eberhardt, R.Y.; Rinck, G.; Hamilton, S.J.; Quinlan-Jones, E.; Prigmore, E.; Keelagher, R.; Best, S.K.; Carey, G.K.; et al. Prenatal exome sequencing analysis in fetal structural anomalies detected by ultrasonography (PAGE): A cohort study. Lancet 2019, 393, 747–757.

- Tang, J.; Han, J.; Xie, B.; Xue, J.; Zhou, H.; Jiang, Y.; Hu, L.; Chen, C.; Zhang, K.; Zhu, F.; et al. The Two-Stage Ensemble Learning Model Based on Aggregated Facial Features in Screening for Fetal Genetic Diseases. Int. J. Environ. Res. Public Health 2023, 20, 2377.
