
Pavlatos, C.; Makris, E.; Fotis, G.; Vita, V.; Mladenov, V. Theoretical Concepts Underlying LSTM and BiLSTM Networks. Encyclopedia. Available online: https://encyclopedia.pub/entry/52744 (accessed on 08 February 2026).


Pavlatos, C., Makris, E., Fotis, G., Vita, V., & Mladenov, V. (2023, December 14). Theoretical Concepts Underlying LSTM and BiLSTM Networks. In Encyclopedia. https://encyclopedia.pub/entry/52744


Accurate forecasting of electrical demand is crucial for the optimal operation of power systems and the effective management of energy markets within the domain of energy planning. The primary aim of the bidirectional Long Short-Term Memory (BiLSTM) network is to enhance predictive performance by capturing intricate temporal patterns and interdependencies within time series data.

Keywords: forecasting electricity demand; bidirectional LSTM; short-term prediction; medium-term prediction

1. Introduction

Electric load forecasting (ELF) is a vital area of research within the field of power systems, owing to its pivotal role in system operation planning and the escalating scholarly attention it has attracted [1]. The precise prediction of demand factors, such as hourly load, peak load, and aggregate energy consumption, is a critical prerequisite for the efficient management and planning of power systems. ELF is commonly divided into three categories, listed below, that serve different application requirements:

- Long-term forecasting (LTF): Encompassing a time frame of 1 to 20 years, LTF plays a pivotal part in assimilating new-generation units into the system and cultivating transmission infrastructure.

- Medium-term forecasting (MTF): Encompassing the span of 1 week to 12 months, MTF assumes a central role in determining tariffs, orchestrating system maintenance, financial administration, and harmonizing fuel supply.

- Short-term forecasting (STF): Encompassing the temporal span of 1 h to 1 week, STF holds fundamental significance in scheduling the initiation and cessation times of generation units, preparing spinning reserves, dissecting constraints within the transmission system, and evaluating the security of the power system.

Different forecasting methodologies are employed depending on the temporal horizon. While MTF and LTF often rely on trend analysis [2][3], end-use analysis [4], neural network (NN) techniques [5][6][7], and multiple linear regression [8], STF calls for approaches such as regression [9], time series analysis [10], artificial NNs [11][12][13][14], expert systems [15], fuzzy logic [16][17], and support vector machines [18][19]. STF is particularly critical for both transmission system operators (TSOs), for whom it helps guarantee the reliability of system operations during adverse weather conditions [20][21], and distribution system operators (DSOs), given the increasing impact of microgrids on aggregate load [22][23], along with the challenge of integrating variable renewable energy sources to meet demand. Whereas proficiency in data analysis and a profound comprehension of power systems and deregulated markets are prerequisites for successful performance in MTF and LTF, STF primarily emphasizes the use of data modeling to match data with suitable models, rather than requiring an extensive understanding of power system operations [24].

Accurate day-ahead load forecasts (STF) are imperative for the operational planning department of every TSO year-round. The precision of these forecasts determines which units take part in energy generation to satisfy the system's load requirements on the following day. Several factors, including load patterns, weather conditions, air temperature, wind speed, calendar information, economic events, and geographical factors, have an impact on load forecasting [25]. Prudent load projections profoundly influence strategic decisions taken by entities such as power generation companies, retailers, and aggregators, given the deregulated and competitive environment of modern power markets.

Furthermore, resilient predictive models offer advantages to prosumers, helping them improve the management of resources, including energy generation, control, and storage. Nonetheless, the rise of "active consumers" [26] and the increasing integration of renewable energy sources (RES) from 2029 to 2049 [27] will bring about novel planning and operational complexities. As uncertainties stem from RES energy outputs and power consumption, sophisticated forecasting techniques [28][29][30] become imperative. Consequently, a deep learning (DL) forecasting model was developed to assess and predict future electricity consumption, with the intent of mitigating potential power crises and harnessing opportunities in the evolving energy landscape.

Electrical load prediction has evolved into a critical research field due to the rising demand for effective energy management and resource allocation. Numerous machine learning techniques have been employed in this domain, including traditional time series methods [10] and neural and deep learning models [11][12]. Among these methods, bidirectional LSTM (BiLSTM) networks have emerged as a prominent and promising approach for achieving accurate and robust electrical load prediction.

2. LSTM Networks

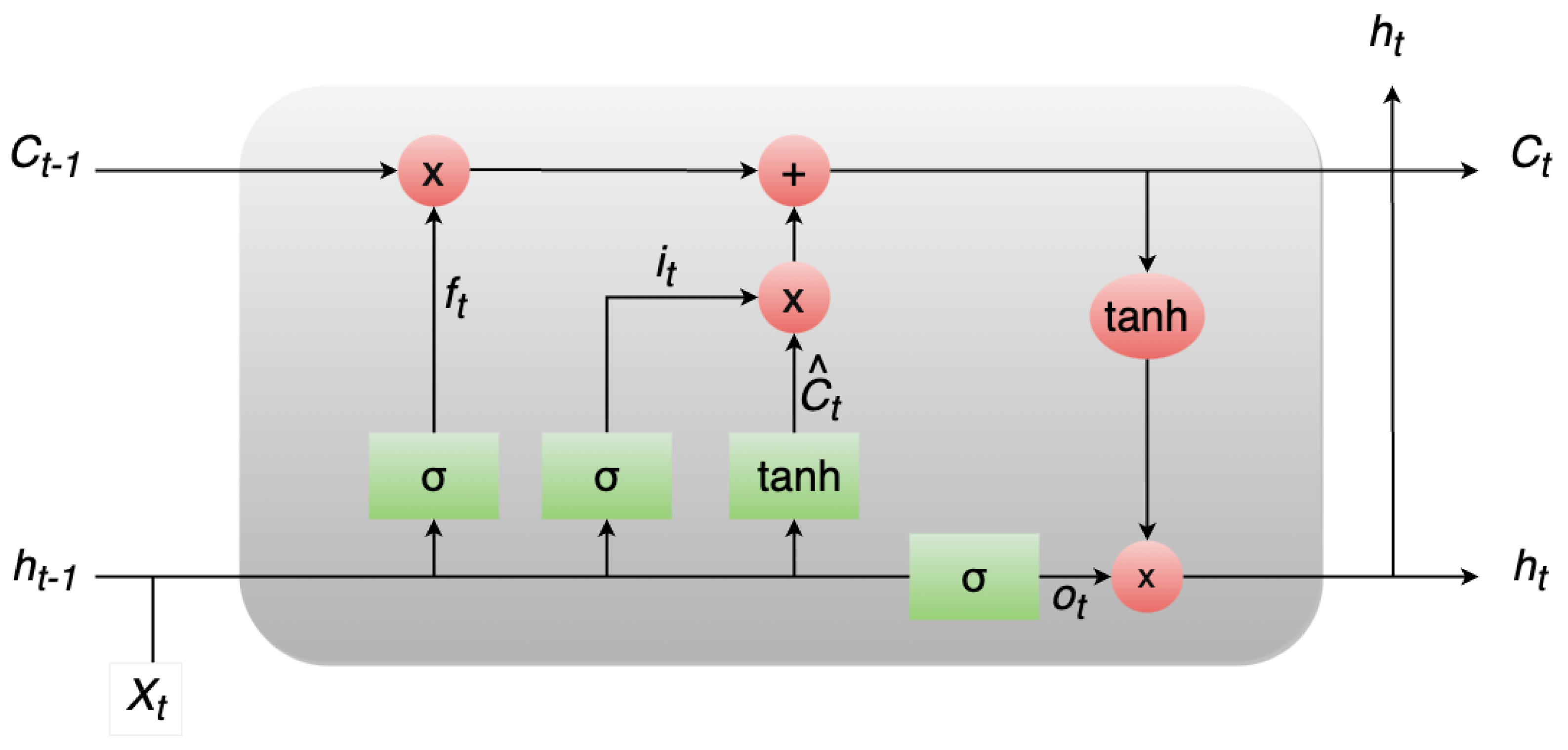

In 1997, Hochreiter and Schmidhuber introduced LSTM networks [31] as a specialized variant of recurrent neural networks (RNNs) designed to learn long-term dependencies in data. Their widespread adoption and demonstrated success across a range of problem domains have brought LSTMs to prominence. Unlike traditional RNNs, LSTMs are explicitly engineered to handle long-term dependencies; retaining information over long spans is an inherent feature of their design. Like all RNNs, LSTMs are built from a chain of repeating modules, but the structure of the repeating module sets them apart: instead of a single neural network layer, each module contains four interacting layers. The key element is the cell state, a horizontal conduit that runs through the modules and lets information propagate along the sequence. The flow of data within the cell state is governed by gates, each of which consists of a sigmoid neural network layer followed by a pointwise multiplication. The sigmoid layer outputs values between 0 and 1 that determine how much of each component is let through: a value of 0 blocks it entirely, and a value of 1 passes it unchanged. The fundamental architecture of the LSTM model is depicted in Figure 1.

Figure 1. The LSTM model architecture.
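The gating mechanism described above (a sigmoid output multiplied pointwise with the cell state) can be illustrated with a minimal sketch. The function names `sigmoid` and `apply_gate` and the numeric values are hypothetical and chosen only for illustration; in a trained network the gate pre-activations would come from a learned layer rather than being set by hand.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function; maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def apply_gate(gate_preactivations, cell_state):
    """Scale each cell-state entry by its sigmoid gate value (pointwise product)."""
    gate = [sigmoid(a) for a in gate_preactivations]
    return [g * c for g, c in zip(gate, cell_state)]


# Illustrative values only (assumption, not taken from the source).
cell_state = [2.0, -1.0, 0.5]
# A strongly negative pre-activation closes the gate, a strongly positive one
# opens it, and a pre-activation of 0 lets exactly half of the value through.
gated = apply_gate([-10.0, 10.0, 0.0], cell_state)
```

In this sketch the first entry is almost completely blocked, the second passes nearly unchanged, and the third is halved.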

To regulate the cell state, LSTM networks employ three distinct gates: the forget gate, the input gate, and the output gate. The forget gate uses a sigmoid layer to determine which elements of information should be discarded from the current cell state. The input gate is composed of two key elements: a sigmoid layer, which decides which values to update, and a hyperbolic tangent (tanh) layer, which generates new candidate values. These candidate values are then combined with the existing cell state to produce the updated state. The output gate uses a sigmoid layer to select the parts of the cell state that are relevant to the final output. The cell state is passed through a tanh activation function and multiplied by the output of this sigmoid layer, which yields the final output. Here, $X_t$ represents the input at a given time step, $h_t$ signifies the output, $C_t$ represents the cell state, $f_t$ corresponds to the forget gate, $i_t$ denotes the input gate, $O_t$ represents the output gate, and $\hat{C}_t$ signifies the internal (candidate) cell state.
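The source describes the gates in words only. For reference, the formulation commonly given in the literature can be written with the symbols defined above. The weight matrices $W_f, W_i, W_C, W_O$, the bias vectors $b_f, b_i, b_C, b_O$, the logistic sigmoid $\sigma$, the pointwise product $\odot$, and the concatenation $[h_{t-1}, X_t]$ are notation assumed here; they do not appear in the original text.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, X_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, X_t] + b_i\right) \\
\hat{C}_t &= \tanh\left(W_C\,[h_{t-1}, X_t] + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \hat{C}_t \\
O_t &= \sigma\left(W_O\,[h_{t-1}, X_t] + b_O\right) \\
h_t &= O_t \odot \tanh\left(C_t\right)
\end{aligned}
$$

The fourth line is the cell-state update: the forget gate scales the previous state, and the input gate scales the candidate values before they are added.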

3. BiLSTM

The BiLSTM network [32] is an extension of the LSTM architecture. LSTM itself is a specialized form of Recurrent Neural Network (RNN) that has proven effective in handling sequential data, such as text, speech, and time-series data. However, a standard LSTM processes a sequence in one direction only, so its state at a given time step carries no information about later elements. BiLSTM overcomes this constraint by processing the input sequence both forward and backward through two separate LSTM layers. The model can therefore exploit context from past and future information simultaneously, leading to a more comprehensive understanding of the data. This makes it especially well suited to tasks that depend on the context on both sides of each element, such as sentiment analysis, named entity recognition, machine translation, and related tasks. The key advantages of bidirectional LSTMs are:

- Enhanced Contextual Understanding: By considering both past and future information, bidirectional LSTMs better understand the context surrounding each time step in a sequence. This is particularly useful for tasks where the meaning of a word or a data point is influenced by its surrounding elements.

- Long-Range Dependencies: Bidirectional LSTMs can capture long-range dependencies more effectively than unidirectional LSTMs. Information from the future can provide valuable insights into the context of earlier parts of the sequence.

- Improved Performance: In tasks like sequence labeling, sentiment analysis, and machine translation, bidirectional LSTMs often outperform unidirectional LSTMs because they can better capture nuanced relationships between elements in a sequence.

However, bidirectional LSTMs also come with certain considerations:

- Computational Complexity: Since bidirectional LSTMs process data in two directions, they are computationally more intensive than their unidirectional counterparts. This can result in longer training times and higher memory demands.

- Real-Time Applications: In real-time applications where future information is not available, bidirectional LSTMs might not be suitable, as they inherently use both past and future context.

- Causal Relationships: Bidirectional LSTMs may introduce causality violations when used in scenarios where future information is not realistically available at prediction time.
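The computational cost noted above can be made concrete by counting trainable parameters. The sketch below assumes a single LSTM layer with four weight blocks (forget, input, candidate, output), each acting on the concatenation of the input and the previous hidden state and carrying one bias vector. The function names and the sizes 8 and 64 are hypothetical; some frameworks store two bias vectors per block, so their reported counts can differ slightly.

```python
def lstm_param_count(input_size: int, hidden_size: int) -> int:
    """Parameters of one unidirectional LSTM layer under the stated assumptions."""
    # Each block: a (hidden x (input + hidden)) weight matrix plus a bias vector.
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return 4 * per_block  # forget, input, candidate, and output blocks


def bilstm_param_count(input_size: int, hidden_size: int) -> int:
    """A BiLSTM layer holds two independent LSTMs (forward and backward)."""
    return 2 * lstm_param_count(input_size, hidden_size)


# Illustrative sizes only (assumption): 8 input features, 64 hidden units.
uni = lstm_param_count(input_size=8, hidden_size=64)
bi = bilstm_param_count(input_size=8, hidden_size=64)
print(uni, bi)  # 18688 37376
```

Under these assumptions the bidirectional layer has exactly twice the parameters of the unidirectional one, and its concatenated output per time step is twice as wide, which also enlarges whatever layer follows it.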

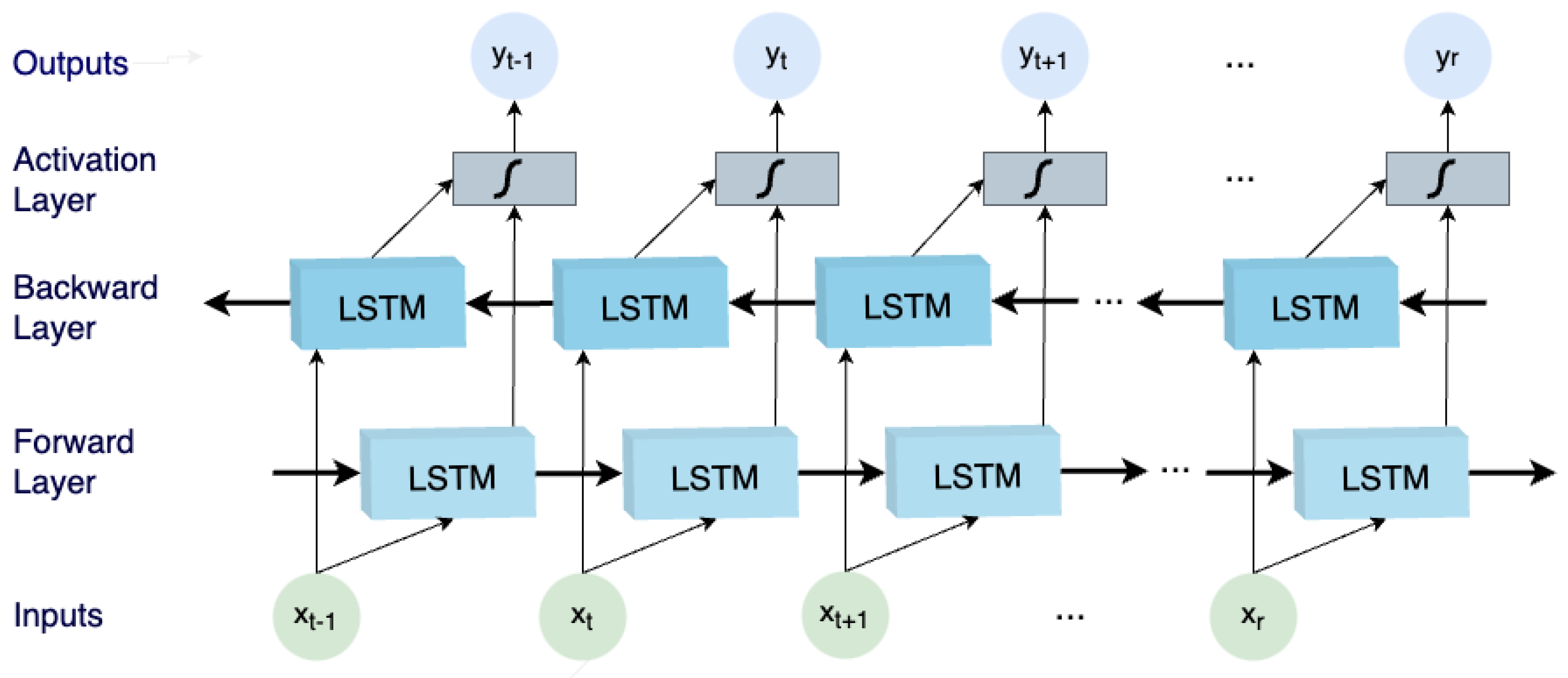

Analyzing each component of a BiLSTM network provides valuable insights into its inner workings and how it overcomes the limitations of traditional LSTMs. A BiLSTM network comprises four main components, i.e., input layer, LSTM layers (both forward and backward), merging layer, and output layer. Next, a detailed analysis of the components is provided:

- Input Layer: The input layer is the first step in the BiLSTM network and serves as the entry point for sequential data. It accepts the input sequence, which could be a sequence of words in natural language processing tasks or time-series data in other applications. The data is typically encoded using word embeddings or other numerical representations to enable the network to process it effectively. The input layer is responsible for transforming the raw input into a structure that the subsequent layers can process.

- LSTM Layers (Forward and Backward): The core of the BiLSTM network consists of two LSTM layers: one processes the input sequence in the forward direction, while the other processes it in the reverse direction. These LSTM layers play a pivotal role in capturing temporal correlations and extensive contextual information embedded within the data. In the forward LSTM layer, the input sequence is processed from its beginning to its end, whereas in the backward LSTM layer, the sequence is processed from its end to its beginning. By processing the data in both directions, the BiLSTM captures information from both the past and the future of each position in the input, enabling it to model bidirectional relationships. Each LSTM cell within these layers incorporates a set of gating mechanisms (input, output, and forget gates) that govern the flow of information within the cell. This allows the network to retain crucial information over extended time spans, thereby mitigating the issue of vanishing gradients and substantially improving gradient propagation during training.

- Merging/Activation Layer: After the forward and backward LSTM layers have processed the sequence, the merging layer combines the information obtained from both directions. This is typically accomplished by concatenating the hidden states of the forward and backward LSTMs at each time step. The concatenated representation thus carries knowledge of both the preceding and the following context for every element of the input sequence, and it serves as the basis on which the subsequent layers make their decisions.

- Output Layer: As the final stage of the BiLSTM network, the output layer processes the concatenated representation obtained from the merging layer to yield the intended output. Its design depends on the specific task for which the BiLSTM is used. For example, in sentiment analysis, this layer might comprise a single node with a sigmoid activation function to predict sentiment polarity (positive or negative). In other applications, such as machine translation, the output layer could use a softmax activation function to predict the probability distribution of target words within the translation sequence. The output layer is responsible for mapping the bidirectional context assimilated by the BiLSTM into the final predictions or representations required by the task.

The basic architecture of the BiLSTM model including the above-mentioned layers is visually depicted in Figure 2.

Figure 2. The BiLSTM basic architecture.
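The forward layer, backward layer, and merging step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the weights are random and untrained, the output layer is omitted, and all names (`make_params`, `lstm_step`, `run_lstm`, `bilstm`) and the short `load` sequence are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_params(input_size, hidden_size, rng):
    """Random weights for the four blocks: forget (f), input (i), candidate (g), output (o)."""
    def block():
        rows = [[rng.uniform(-0.5, 0.5) for _ in range(input_size + hidden_size)]
                for _ in range(hidden_size)]
        return rows, [0.0] * hidden_size  # weight matrix, zero bias
    return {name: block() for name in ("f", "i", "g", "o")}


def affine(block, z):
    rows, bias = block
    return [sum(w * v for w, v in zip(row, z)) + b for row, b in zip(rows, bias)]


def lstm_step(params, x, h_prev, c_prev):
    """One LSTM time step: returns the new hidden state and cell state."""
    z = h_prev + x  # list concatenation of previous hidden state and input
    f = [sigmoid(a) for a in affine(params["f"], z)]    # forget gate
    i = [sigmoid(a) for a in affine(params["i"], z)]    # input gate
    g = [math.tanh(a) for a in affine(params["g"], z)]  # candidate values
    o = [sigmoid(a) for a in affine(params["o"], z)]    # output gate
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def run_lstm(params, sequence, hidden_size):
    """Run one LSTM over a sequence and collect the hidden state at every step."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    outputs = []
    for x in sequence:
        h, c = lstm_step(params, x, h, c)
        outputs.append(h)
    return outputs


def bilstm(fwd_params, bwd_params, sequence, hidden_size):
    """Merge by concatenating forward and backward hidden states per time step."""
    forward = run_lstm(fwd_params, sequence, hidden_size)
    # Process the reversed sequence, then re-align the outputs with the original order.
    backward = run_lstm(bwd_params, sequence[::-1], hidden_size)[::-1]
    return [hf + hb for hf, hb in zip(forward, backward)]


rng = random.Random(0)
input_size, hidden_size = 1, 3
fwd = make_params(input_size, hidden_size, rng)
bwd = make_params(input_size, hidden_size, rng)

load = [[0.2], [0.5], [0.9], [0.4]]  # illustrative normalized load values
merged = bilstm(fwd, bwd, load, hidden_size)

# Change only the last input and recompute.
changed = load[:-1] + [[-0.9]]
merged_changed = bilstm(fwd, bwd, changed, hidden_size)
```

Each merged vector has `2 * hidden_size` entries. Comparing `merged[0]` with `merged_changed[0]` shows the bidirectional dependence: the forward half at the first time step is unaffected by a change to the last input, while the backward half changes. This is the property that makes the architecture unsuitable when future values are not available.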

In summary, a bidirectional LSTM stands as an extension of the conventional LSTM architecture, adeptly harnessing information originating from both past and future time steps. This augmentation substantially enhances the comprehension of sequential data, capturing intricate patterns and interrelationships. As such, bidirectional LSTMs emerge as a potent instrument for diverse sequence-oriented tasks within the realms of machine learning and deep learning.

References

1. Kang, C.; Xia, Q.; Zhang, B. Review of power system load forecasting and its development. Autom. Electr. Power Syst. 2004, 28, 1–11.

2. Zhang, K.; Feng, X.; Tian, X.; Hu, Z.; Guo, N. Partial Least Squares regression load forecasting model based on the combination of grey Verhulst and equal-dimension and new-information model. In Proceedings of the 7th International Forum on Electrical Engineering And Automation (IFEEA), Hefei, China, 25–27 September 2020; pp. 915–919.

3. Liu, Z.; Sun, X.; Wang, S.; Pan, M.; Zhang, Y.; Ji, Z. Midterm Power Load Forecasting Model Based on Kernel Principal Component Analysis. Big Data 2019, 7, 130–138.

4. Al-Hamadi, H.M.; Soliman, S.A. Long-term/mid-term electric load forecasting based on short-term correlation and annual growth. Electr. Power Syst. Res. 2005, 74, 353–361.

5. Baek, S. Mid-term Load Pattern Forecasting with Recurrent Artificial Neural Network. IEEE Access 2019, 7, 172830–172838.

6. Nalcaci, G.; Özmen, A.; Weber, G.W. Long-term load forecasting: Models based on MARS, ANN and LR methods. Cent. Eur. J. Oper. Res. 2019, 27, 1033–1049.

7. Adhiswara, R.; Abdullah, A.G.; Mulyadi, Y. Long-term electrical consumption forecasting using Artificial Neural Network (ANN). J. Phys. Conf. Ser. 2019, 1402, 033081.

8. Abu-Shikhah, N.; Aloquili, F.; Linear, O.; Regression, N. Medium-Term Electric Load Forecasting Using Multivariable Linear and Non-Linear Regression. Smart Grid Renew. Energy 2011, 2, 126–135.

9. Krstonijević, S. Adaptive Load Forecasting Methodology Based on Generalized Additive Model with Automatic Variable Selection. Sensors 2022, 22, 7247.

10. Ono, M.; Topcu, U.; Yo, M.; Adachi, S. Risk-limiting power grid control with an ARMA-based prediction model. In Proceedings of the 2013 IEEE 52nd Annual Conference on Decision and Control, CDC 2013, Firenze, Italy, 10–13 December 2013; pp. 4949–4956.

11. Shi, T.; Lu, F.; Lu, J.; Pan, J.; Zhou, Y.; Wu, C.; Zheng, J. Phase Space Reconstruction Algorithm and Deep Learning-Based Very Short-Term Bus Load Forecasting. Energies 2019, 12, 4349.

12. Román-Portabales, A.; López-Nores, M.; Pazos-Arias, J.J. Systematic Review of Electricity Demand Forecast Using ANN-Based Machine Learning Algorithms. Sensors 2021, 21, 4544.

13. Ekonomou, L.; Christodoulou, C.A.; Mladenov, V. A short-term load forecasting method using artificial neural networks and wavelet analysis. Int. J. Power Syst. 2016, 1, 64–68.

14. Karampelas, P.; Pavlatos, C.; Mladenov, V.; Ekonomou, L. Design of artificial neural network models for the prediction of the Hellenic energy consumption. In Proceedings of the 10th Symposium on Neural Network Applications in Electrical Engineering, Belgrade, Serbia, 23–25 September 2010.

15. Hwan, K.J.; Kim, G.W. A short-term load forecasting expert system. In Proceedings of the 5th Korea-Russia International Symposium On Science And Technology, Tomsk, Russia, 26 June–3 July 2001; Volume 1, pp. 112–116.

16. Ali, M.; Adnan, M.; Tariq, M.; Poor, H.V. Load Forecasting Through Estimated Parametrized Based Fuzzy Inference System in Smart Grids. IEEE Trans. Fuzzy Syst. 2021, 29, 156–165.

17. Bhotto, M.Z.A.; Jones, R.; Makonin, S.; Bajić, I.V. Short-Term Demand Prediction Using an Ensemble of Linearly-Constrained Estimators. IEEE Trans. Power Syst. 2021, 36, 3163–3175.

18. Jiang, H.; Zhang, Y.; Muljadi, E.; Zhang, J.J.; Gao, D.W. A Short-Term and High-Resolution Distribution System Load Forecasting Approach Using Support Vector Regression with Hybrid Parameters Optimization. IEEE Trans. Smart Grid 2018, 9, 3341–3350.

19. Li, G.; Li, Y.; Roozitalab, F. Midterm Load Forecasting: A Multistep Approach Based on Phase Space Reconstruction and Support Vector Machine. IEEE Syst. J. 2020, 14, 4967–4977.

20. Zafeiropoulou, M.; Mentis, I.; Sijakovic, N.; Terzic, A.; Fotis, G.; Maris, T.I.; Vita, V.; Zoulias, E.; Ristic, V.; Ekonomou, L. Forecasting Transmission and Distribution System Flexibility Needs for Severe Weather Condition Resilience and Outage Management. Appl. Sci. 2022, 12, 7334.

21. Fotis, G.; Vita, V.; Maris, I.T. Risks in the European Transmission System and a Novel Restoration Strategy for a Power System after a Major Blackout. Appl. Sci. 2023, 13, 83.

22. Zheng, C.; Eskandari, M.; Li, M.; Sun, Z. GA-Reinforced Deep Neural Network for Net Electric Load Forecasting in Microgrids with Renewable Energy Resources for Scheduling Battery Energy Storage Systems. Algorithms 2022, 15, 338.

23. Sambhi, S.; Bhadoria, H.; Kumar, V.; Chaurasia, P.; Chaurasia, G.S.; Fotis, G.; Vita, G.; Ekonomou, V.; Pavlatos, C. Economic Feasibility of a Renewable Integrated Hybrid Power Generation System for a Rural Village of Ladakh. Energies 2022, 15, 9126.

24. Khuntia, S.; Rueda, J.; Meijden, M. Forecasting the load of electrical power systems in mid- and long-term horizons: A review. IET Gener. Transm. Distrib. 2016, 10, 3971–3977.

25. THong, A.; Pinson, P.; Fan, S.; Zareipour, H.; Troccoli, A. Electricity Load Forecasting: A Survey. IEEE Trans. Smart Grid 2016, 7, 1040–1071.

26. IRENA. Innovation Landscape Brief: Market Integration of Distributed Energy Resources; International Renewable Energy Agency: Abu Dhabi, United Arab Emirates, 2019.

27. Commission, M.; Company, D. Integrating Renewables into Lower Michigan Electric Grid. Available online: https://www.brattle.com/wp-content/uploads/2021/05/15955_integrating_renewables_into_lower_michigans_electricity_grid.pdf (accessed on 14 November 2023).

28. Wang, F.C.; Hsiao, Y.S.; Yang, Y.Z. The Optimization Of Hybrid Power Systems With Renewable Energy And Hydrogen Generation. Energies 2018, 11, 1948.

29. Wang, F.; Lin, K.-M. Impacts Of Load Profiles On The Optimization Of Power Management Of A Green Building Employing Fuel Cells. Energies 2019, 12, 57.

30. Sun, W.; Zhang, C. A Hybrid BA-ELM Model Based on Factor Analysis and Similar-Day Approach for Short-Term Load Forecasting. Energies 2018, 11, 1282.

31. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

32. Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures, JCNN’05. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; pp. 2047–2052.


Subjects: Engineering, Electrical & Electronic


Update Date: 15 Dec 2023
