| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | Liman Wang | -- | 1604 | 2023-11-15 11:53:13 |
| 2 | | Lindsay Dong | + 3 word(s) | 1607 | 2023-11-17 02:09:39 |


The growing importance of deformable object manipulation (DOM) in caregiving settings can be attributed to various factors. One significant driving force is the aging population, which has led to increasing demand for caregiving services. In addition to supporting the elderly, deformable object manipulation technologies can assist individuals with disabilities and special needs, improving their quality of life and promoting independence. Another essential aspect of deformable object manipulation in caregiving scenarios is the potential to enhance precision and safety in medical procedures. These technologies can reduce the physical strain experienced by human caregivers, preventing injuries and enabling them to provide better patient care.

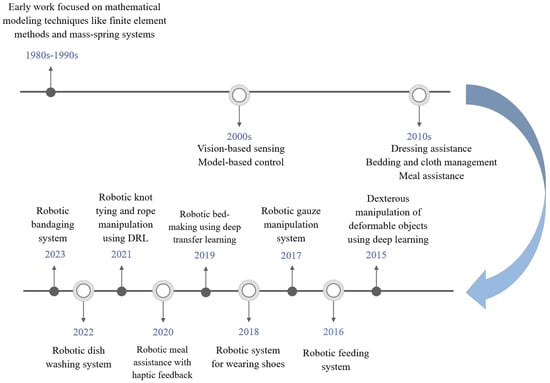

1. An Outlook by Timeline

2. Classification of Applications

- Dressing assistance is an essential application of DOM in caregiving scenarios, particularly for users with limited mobility or dexterity. Researchers have developed robots capable of assisting users in putting on various clothing items, including T-shirts [4], pants [22], and footwear [23]. These studies have demonstrated the potential of DOM in addressing the challenges faced by individuals with disabilities and the elderly in performing daily dressing tasks.

- Bedding and cloth management is another crucial application of DOM in caregiving scenarios, as it involves handling large, deformable objects and ensuring user comfort and hygiene. Researchers have developed robotic bed-making systems that grasp, tension, and smooth fitted sheets [5]. Robots capable of managing blankets [6] and pillows [7] have also been developed. The success of these works highlights both the potential of DOM for bedsheet management and the need for continued research and development in this area. Cloth folding is also essential in caregiving settings, particularly for maintaining order and cleanliness. Researchers have developed robots capable of folding or arranging deformable items such as towels, shirts, and ropes [4][19][24][25][26][27][28].

- Personal hygiene support is another critical application of DOM in caregiving scenarios. Researchers have developed robots that handle soft materials such as gauze [12] and diapers [29]. These studies have highlighted the importance of integrating various sensory modalities and control techniques for effective soft material handling in caregiving scenarios.

- Meal assistance is another important application of DOM in caregiving scenarios, particularly for users with limited mobility or dexterity. Researchers have developed robots capable of manipulating deformable objects such as food items and utensils [8][9][10][11]. These studies have demonstrated the potential of DOM in helping individuals with disabilities and the elderly with daily meals.

- Daily medical care. In bandaging, deformable object manipulation systems offer improved precision and control, enabling more effective and efficient wound-dressing procedures. These systems can adapt to the varying shapes and contours of the human body, as well as the patient’s involuntary swaying, ensuring proper bandage placement and tension for optimal healing [13]. In rehabilitation, deformable object manipulation technologies assist patients during therapeutic exercises and activities. They provide real-time feedback, support, and guidance, which can aid repair and promote faster recovery [30][31][32].

3. A New Method to Classify and Analyse

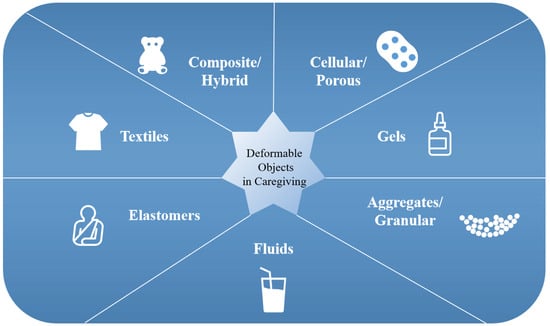

3.1. Common Types of Deformable Objects in Caregiving Scenarios

- Textiles: Cloth and fabric objects such as clothing, sheets, and towels. Key properties are flexibility, drape, and shear.

- Elastomers: Stretchable or elastic materials such as bandages, tubing, and exercise bands. Key properties are elongation and elasticity.

- Fluids: Materials such as water, shampoo, and creams that flow and conform to containers. Key behaviors are pourability and viscosity.

- Aggregates/Granular: Aggregated materials such as rice, beans, and tablets. They flow but maintain a loose particulate structure.

- Gels: Highly viscous or elastic fluids such as food gels, slime, and putty. They resist flow because of their cross-linked molecular structure.

- Cellular/Porous: Materials with internal voids such as sponges and soft foams. They are compressible and exhibit springback.

- Composite/Hybrid: Combinations of the above categories, such as stuffed animals and packaged goods. They display complex interactions of properties.
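The taxonomy above can be held in a small lookup structure, for example to tag objects observed in a caregiving workflow. The following is only a minimal sketch: the names `ObjectCategory`, `TAXONOMY`, and `category_of` are hypothetical, and the entries simply restate the examples and properties listed above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ObjectCategory:
    """One category of deformable object (hypothetical helper type)."""
    name: str
    examples: Tuple[str, ...]
    key_properties: Tuple[str, ...]


# Entries restate the list above; nothing here is measured data.
TAXONOMY = (
    ObjectCategory("Textiles", ("clothing", "sheets", "towels"),
                   ("flexibility", "drape", "shear")),
    ObjectCategory("Elastomers", ("bandages", "tubing", "exercise bands"),
                   ("elongation", "elasticity")),
    ObjectCategory("Fluids", ("water", "shampoo", "creams"),
                   ("pourability", "viscosity")),
    ObjectCategory("Aggregates/Granular", ("rice", "beans", "tablets"),
                   ("flows", "loose particulate structure")),
    ObjectCategory("Gels", ("food gels", "slime", "putty"),
                   ("resists flow",)),
    ObjectCategory("Cellular/Porous", ("sponges", "soft foams"),
                   ("compressible", "springback")),
    ObjectCategory("Composite/Hybrid", ("stuffed animals", "packaged goods"),
                   ("complex interactions of properties",)),
)


def category_of(example: str) -> Optional[ObjectCategory]:
    """Return the category that lists `example`, or None if it is not listed."""
    for category in TAXONOMY:
        if example in category.examples:
            return category
    return None
```

A call such as `category_of("towels")` returns the Textiles entry, while an object that is not listed returns `None` and would need to be classified by hand.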

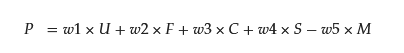

3.2. A Multi-Factor Analysis Method

The method scores each deformable object on five factors:

- Application Utility (U): potential to reduce caregiver burden by automating tasks

- Object Frequency (F): how often the object occurs in caregiving activities

- Task Complexity (C): technical challenges posed by physical properties and handling difficulties

- Safety Criticality (S): risks of injury or harm during object manipulation

- Research Maturity (M): existing state of manipulation methods for the object

Data for each factor can be gathered from the following sources:

- Surveys, interviews, and activity logging (for Application Utility)

- Workflow observations and activity logging (for Object Frequency)

- Material testing, caregiver surveys, and interviews (for Task Complexity)

- Incident data and healthcare professional feedback (for Safety Criticality)

- Literature review (for Research Maturity)

The factors are then combined into a priority score as follows:

- Each metric is normalised to a 0–1 scale by dividing by the maximum value observed, which puts all metrics on a common scale.

- Criteria weights are assigned to each factor based on the caregiving context. For example, Safety Criticality may be weighted higher for hospital settings than for home care.

- Each normalised metric is multiplied by its criteria weight.

- The weighted terms are summed to derive an overall priority score P for each deformable object:

  $$P = w_1 U + w_2 F + w_3 C + w_4 S + w_5 M$$

  where U, F, C, S, and M are the normalised metrics and w_1 to w_5 are the criteria weights.

- Objects are ranked by priority score P, identifying high-impact areas needing research innovations.
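The scoring steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the object names, raw scores, and weights below are invented for the example and are not data from the studies cited here, and the function names (`normalise`, `priority_scores`, `rank`) are hypothetical.

```python
FACTORS = ("U", "F", "C", "S", "M")


def normalise(raw):
    """Scale each factor to 0-1 by dividing by the maximum value observed."""
    maxima = {f: max(scores[f] for scores in raw.values()) for f in FACTORS}
    return {
        name: {f: (scores[f] / maxima[f] if maxima[f] else 0.0) for f in FACTORS}
        for name, scores in raw.items()
    }


def priority_scores(raw, weights):
    """Compute P = w1*U + w2*F + w3*C + w4*S + w5*M for every object."""
    normalised = normalise(raw)
    return {
        name: sum(weights[f] * metrics[f] for f in FACTORS)
        for name, metrics in normalised.items()
    }


def rank(raw, weights):
    """Return (object, P) pairs sorted from highest to lowest priority."""
    scores = priority_scores(raw, weights)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical raw scores on an arbitrary 0-10 scale (illustrative only).
raw_scores = {
    "bedsheet": {"U": 8, "F": 9, "C": 6, "S": 3, "M": 5},
    "bandage": {"U": 6, "F": 4, "C": 8, "S": 9, "M": 2},
    "sponge": {"U": 3, "F": 6, "C": 2, "S": 1, "M": 4},
}

# Hypothetical weights for a hospital setting, with Safety Criticality
# weighted highest as in the example given in the text.
hospital_weights = {"U": 0.2, "F": 0.2, "C": 0.2, "S": 0.3, "M": 0.1}

if __name__ == "__main__":
    for name, score in rank(raw_scores, hospital_weights):
        print(f"{name}: {score:.3f}")
```

Changing only the weights, for example lowering the weight on S for a home-care setting, can reorder the ranking without touching the underlying measurements, which is the point of keeping normalisation and weighting as separate steps.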

4. Challenges of DOM in Caregiving

References

- Jalon, J.G.; Cuadrado, J.; Avello, A.; Jimenez, J.M. Kinematic and Dynamic Simulation of Rigid and Flexible Systems with Fully Cartesian Coordinates. In Computer-Aided Analysis of Rigid and Flexible Mechanical Systems; Springer: Dordrecht, The Netherlands, 1994; pp. 285–323.

- Spong, M.W.; Hutchinson, S.; Vidyasagar, M. Robot Modeling and Control; John Wiley and Sons: Hoboken, NJ, USA, 2020.

- Bohg, J.; Morales, A.; Asfour, T.; Kragic, D. Data-Driven Grasp Synthesis—A Survey. IEEE Trans. Robot. 2014, 30, 289–309.

- Koganti, N.; Tamei, T.; Matsubara, T.; Shibata, T. Real-time estimation of Human-Cloth topological relationship using depth sensor for robotic clothing assistance. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014.

- Goldberg, K. Deep Transfer Learning of Pick Points on Fabric for Robot Bed-Making. In Proceedings of the Robotics Research: The 19th International Symposium ISRR; Springer Nature: Cham, Switzerland, 2022; Volume 20, p. 275.

- Avigal, Y.; Berscheid, L.; Asfour, T.; Kroger, T.; Goldberg, K. SpeedFolding: Learning Efficient Bimanual Folding of Garments. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022.

- Yang, P.C.; Sasaki, K.; Suzuki, K.; Kase, K.; Sugano, S.; Ogata, T. Repeatable Folding Task by Humanoid Robot Worker Using Deep Learning. IEEE Robot. Autom. Lett. 2017, 2, 397–403.

- Park, D.; Hoshi, Y.; Mahajan, H.P.; Kim, H.K.; Erickson, Z.; Rogers, W.A.; Kemp, C.C. Active robot-assisted feeding with a general-purpose mobile manipulator: Design, evaluation, and lessons learned. Robot. Auton. Syst. 2020, 124, 103344.

- Bhattacharjee, T.; Lee, G.; Song, H.; Srinivasa, S.S. Towards Robotic Feeding: Role of Haptics in Fork-Based Food Manipulation. IEEE Robot. Autom. Lett. 2019, 4, 1485–1492.

- Feng, R.; Kim, Y.; Lee, G.; Gordon, E.K.; Schmittle, M.; Kumar, S.; Bhattacharjee, T.; Srinivasa, S.S. Robot-Assisted Feeding: Generalizing Skewering Strategies Across Food Items on a Plate. In Springer Proceedings in Advanced Robotics; Springer International Publishing: Cham, Switzerland, 2022; pp. 427–442.

- Hai, N.D.X.; Thinh, N.T. Self-Feeding Robot for Elder People and Parkinson’s Patients in Meal Supporting. Int. J. Mech. Eng. Robot. Res. 2022, 11, 241–247.

- Sanchez, J.; Corrales, J.A.; Bouzgarrou, B.C.; Mezouar, Y. Robotic manipulation and sensing of deformable objects in domestic and industrial applications: A survey. Int. J. Robot. Res. 2018, 37, 688–716.

- Li, J.; Sun, W.; Gu, X.; Guo, J.; Ota, J.; Huang, Z.; Zhang, Y. A Method for a Compliant Robot Arm to Perform a Bandaging Task on a Swaying Arm: A Proposed Approach. IEEE Robot. Autom. Mag. 2023, 30, 50–61.

- Park, F.; Bobrow, J.; Ploen, S. A Lie Group Formulation of Robot Dynamics. Int. J. Robot. Res. 1995, 14, 609–618.

- Mergler, H. Introduction to robotics. IEEE J. Robot. Autom. 1985, 1, 215.

- Lévesque, V.; Pasquero, J.; Hayward, V.; Legault, M. Display of virtual braille dots by lateral skin deformation: Feasibility study. ACM Trans. Appl. Percept. 2005, 2, 132–149.

- Yang, Y.; Li, Y.; Fermuller, C.; Aloimonos, Y. Robot Learning Manipulation Action Plans by “Watching” Unconstrained Videos from the World Wide Web. Proc. AAAI Conf. Artif. Intell. 2015, 29, 3692–3696.

- Li, S.; Ma, X.; Liang, H.; Gorner, M.; Ruppel, P.; Fang, B.; Sun, F.; Zhang, J. Vision-based Teleoperation of Shadow Dexterous Hand using End-to-End Deep Neural Network. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), IEEE, Montreal, QC, Canada, 20–24 May 2019.

- Wu, Y.; Yan, W.; Kurutach, T.; Pinto, L.; Abbeel, P. Learning to Manipulate Deformable Objects without Demonstrations. In Proceedings of the Robotics: Science and Systems XVI. Robotics: Science and Systems Foundation, Corvallis, OR, USA, 12–16 July 2020.

- Teng, Y.; Lu, H.; Li, Y.; Kamiya, T.; Nakatoh, Y.; Serikawa, S.; Gao, P. Multidimensional Deformable Object Manipulation Based on DN-Transporter Networks. IEEE Trans. Intell. Transp. Syst. 2023, 24, 4532–4540.

- Bedaf, S.; Draper, H.; Gelderblom, G.J.; Sorell, T.; de Witte, L. Can a Service Robot Which Supports Independent Living of Older People Disobey a Command? The Views of Older People, Informal Carers and Professional Caregivers on the Acceptability of Robots. Int. J. Soc. Robot. 2016, 8, 409–420.

- Yamazaki, K.; Oya, R.; Nagahama, K.; Okada, K.; Inaba, M. Bottom dressing by a life-sized humanoid robot provided failure detection and recovery functions. In Proceedings of the 2014 IEEE/SICE International Symposium on System Integration, Tokyo, Japan, 13–15 December 2014.

- Li, Y.; Xiao, A.; Feng, Q.; Zou, T.; Tian, C. Design of Service Robot for Wearing and Taking off Footwear. E3S Web Conf. 2020, 189, 03024.

- Jia, B.; Pan, Z.; Hu, Z.; Pan, J.; Manocha, D. Cloth Manipulation Using Random-Forest-Based Imitation Learning. IEEE Robot. Autom. Lett. 2019, 4, 2086–2093.

- Tsurumine, Y.; Matsubara, T. Goal-aware generative adversarial imitation learning from imperfect demonstration for robotic cloth manipulation. Robot. Auton. Syst. 2022, 158, 104264.

- Verleysen, A.; Holvoet, T.; Proesmans, R.; Den Haese, C.; Wyffels, F. Simpler Learning of Robotic Manipulation of Clothing by Utilizing DIY Smart Textile Technology. Appl. Sci. 2020, 10, 4088.

- Jia, B.; Hu, Z.; Pan, Z.; Manocha, D.; Pan, J. Learning-based feedback controller for deformable object manipulation. arXiv 2018, arXiv:1806.09618.

- Deng, Y.; Xia, C.; Wang, X.; Chen, L. Graph-Transporter: A Graph-based Learning Method for Goal-Conditioned Deformable Object Rearranging Task. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022.

- Baek, J. Smart predictive analytics care monitoring model based on multi sensor IoT system: Management of diaper and attitude for the bedridden elderly. Sensors Int. 2023, 4, 100213.

- Torrisi, M.; Maggio, M.G.; De Cola, M.C.; Zichittella, C.; Carmela, C.; Porcari, B.; la Rosa, G.; De Luca, R.; Naro, A.; Calabrò, R.S. Beyond motor recovery after stroke: The role of hand robotic rehabilitation plus virtual reality in improving cognitive function. J. Clin. Neurosci. 2021, 92, 11–16.

- Vaida, C.; Birlescu, I.; Pisla, A.; Ulinici, I.M.; Tarnita, D.; Carbone, G.; Pisla, D. Systematic Design of a Parallel Robotic System for Lower Limb Rehabilitation. IEEE Access 2020, 8, 34522–34537.

- Chockalingam, M.; Vasanthan, L.T.; Balasubramanian, S.; Sriram, V. Experiences of patients who had a stroke and rehabilitation professionals with upper limb rehabilitation robots: A qualitative systematic review protocol. BMJ Open 2022, 12, e065177.

- Frei, J.; Ziltener, A.; Wüst, M.; Havelka, A.; Lohan, K. Iterative Development of a Service Robot for Laundry Transport in Nursing Homes. In Social Robotics; Springer Nature: Cham, Switzerland, 2022; pp. 359–370.

- Hussin, E.; Jie Jian, W.; Sahar, N.; Zakariya, A.; Ridzuan, A.; Suhana, C.; Mohamed Juhari, R.; Wei Hong, L. A Healthcare Laundry Management System using RFID System. Proc. Int. Conf. Artif. Life Robot. 2022, 27, 875–880.

- Zhang, A.; Yao, Y.; Hu, Y. Analyzing the Design of Windows Cleaning Robots. In Proceedings of the 2022 3rd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), IEEE, Xi’an, China, 15–17 July 2022.

- Lo, W.S.; Yamamoto, C.; Pattar, S.P.; Tsukamoto, K.; Takahashi, S.; Sawanobori, T.; Mizuuchi, I. Developing a Collaborative Robotic Dishwasher Cell System for Restaurants. In Lecture Notes in Networks and Systems; Springer International Publishing: Cham, Switzerland, 2022; pp. 261–275.

- Lin, X.; Wang, Y.; Olkin, J.; Held, D. SoftGym: Benchmarking Deep Reinforcement Learning for Deformable Object Manipulation. Proc. Mach. Learn. Res. 2021, 155, 432–448.

- Scheikl, P.M.; Tagliabue, E.; Gyenes, B.; Wagner, M.; Dall’Alba, D.; Fiorini, P.; Mathis-Ullrich, F. Sim-to-Real Transfer for Visual Reinforcement Learning of Deformable Object Manipulation for Robot-Assisted Surgery. IEEE Robot. Autom. Lett. 2023, 8, 560–567.

- Thach, B.; Kuntz, A.; Hermans, T. DeformerNet: A Deep Learning Approach to 3D Deformable Object Manipulation. arXiv 2021, arXiv:2107.08067v1.

- Yin, H.; Varava, A.; Kragic, D. Modeling, learning, perception, and control methods for deformable object manipulation. Sci. Robot. 2021, 6, eabd8803.

- Delgado, A.; Corrales, J.; Mezouar, Y.; Lequievre, L.; Jara, C.; Torres, F. Tactile control based on Gaussian images and its application in bi-manual manipulation of deformable objects. Robot. Auton. Syst. 2017, 94, 148–161.

- Frank, B.; Stachniss, C.; Abdo, N.; Burgard, W. Using Gaussian Process Regression for Efficient Motion Planning in Environments with Deformable Objects. In Proceedings of the AAAIWS’11-09: Proceedings of the 9th AAAI Conference on Automated Action Planning for Autonomous Mobile Robots, San Francisco, CA, USA, 7 August 2011.

- Hu, Z.; Sun, P.; Pan, J. Three-Dimensional Deformable Object Manipulation Using Fast Online Gaussian Process Regression. IEEE Robot. Autom. Lett. 2018, 3, 979–986.

- Antonova, R.; Yang, J.; Sundaresan, P.; Fox, D.; Ramos, F.; Bohg, J. A Bayesian Treatment of Real-to-Sim for Deformable Object Manipulation. IEEE Robot. Autom. Lett. 2022, 7, 5819–5826.

- Zheng, C.X.; Colomé, A.; Sentis, L.; Torras, C. Mixtures of Controlled Gaussian Processes for Dynamical Modeling of Deformable Objects. In Proceedings of the 4th Annual Learning for Dynamics and Control Conference, PMLR, Stanford, CA, USA, 23–24 June 2022; Volume 168, pp. 415–426.

- Li, R.; Platt, R.; Yuan, W.; ten Pas, A.; Roscup, N.; Srinivasan, M.A.; Adelson, E. Localization and manipulation of small parts using GelSight tactile sensing. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014.

- Cui, S.; Wang, R.; Wei, J.; Li, F.; Wang, S. Grasp State Assessment of Deformable Objects Using Visual-Tactile Fusion Perception. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

- Khalil, F.F.; Payeur, P. Robotic Interaction with Deformable Objects under Vision and Tactile Guidance—A Review. In Proceedings of the 2007 International Workshop on Robotic and Sensors Environments, IEEE, Kyoto, Japan, 23–27 October 2007.

- Yamaguchi, A.; Atkeson, C.G. Tactile Behaviors with the Vision-Based Tactile Sensor FingerVision. Int. J. Humanoid Robot. 2019, 16, 1940002.

- Liang, L.; Liu, M.; Martin, C.; Sun, W. A deep learning approach to estimate stress distribution: A fast and accurate surrogate of finite-element analysis. J. R. Soc. Interface 2018, 15, 20170844.

- Kapitanyuk, Y.A.; Proskurnikov, A.V.; Cao, M. A Guiding Vector-Field Algorithm for Path-Following Control of Nonholonomic Mobile Robots. IEEE Trans. Control Syst. Technol. 2018, 26, 1372–1385.

- Kalashnikov, D.; Irpan, A.; Pastor, P.; Ibarz, J.; Herzog, A.; Jang, E.; Quillen, D.; Holly, E.; Kalakrishnan, M.; Vanhoucke, V.; et al. QT-Opt: Scalable Deep Reinforcement Learning for Vision-Based Robotic Manipulation. arXiv 2018, arXiv:1806.10293.

- Hogan, N. Impedance Control: An Approach to Manipulation. In Proceedings of the 1984 American Control Conference, IEEE, San Diego, CA, USA, 6–8 June 1984.

- Edsinger, A.; Kemp, C.C. Human-Robot Interaction for Cooperative Manipulation: Handing Objects to One Another. In Proceedings of the RO-MAN 2007—The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Republic of Korea, 26–29 August 2007.

- Kruse, D.; Radke, R.J.; Wen, J.T. Collaborative human-robot manipulation of highly deformable materials. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015.

- Sirintuna, D.; Giammarino, A.; Ajoudani, A. Human-Robot Collaborative Carrying of Objects with Unknown Deformation Characteristics. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022.