| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Yew Kwang Hooi | -- | 2377 | 2023-10-31 10:37:15 |
| 2 | Catherine Yang | Meta information modification | 2377 | 2023-11-01 01:46:31 |

Bhanbhro, H.; Kwang Hooi, Y.; Kusakunniran, W.; Amur, Z.H. A Symbol Recognition System for Single-Line Diagrams Developed. Encyclopedia. Available online: https://encyclopedia.pub/entry/50969 (accessed on 04 March 2026).


Single-line diagrams (SLDs) are widely used in electrical power distribution systems and other engineering contexts. Digitizing these drawings is increasingly important, primarily because areas such as equipment maintenance, asset management, and safety demand better engineering practices. Processing and analyzing the drawings, however, is difficult. Given enough annotated training data, deep neural networks perform well in many object detection applications. Based on deep-learning techniques, a dataset can be used to assess the overall quality of a visual system.

DCGAN

LSGAN

synthetic images

single-line diagrams

symbol spotting

image analytics

CNN

1. Introduction

There are numerous industries in which engineering documents continue to exist in paper format due to a lack of digitalization for automated systems [1]. Among such documents, single-line diagrams (SLDs) pose a significant challenge in terms of interpretation and comprehension, as exemplified in Figure 1. These technical documents play a crucial role in diverse fields, including electrical systems, power distribution systems, hazardous area layouts, and other structural layouts. Deciphering these drawings often requires a considerable amount of time and the expertise of highly skilled engineers and professionals [2][3].

Figure 1. A single-line diagram.

The digitization of engineering drawings has gained significant importance in recent years. This is partially due to the pressing need to enhance business procedures, such as equipment monitoring, risk analysis, safety checks, and other operations. It is also driven by the remarkable advancements in computer vision and image understanding, particularly in the fields of gaming and AI [4], NLP [5], health [6], and others. Machine learning and deep learning (DL) [7] have significantly improved performance in various domains. Machine vision, in particular, has greatly benefited from DL [8][9].

Convolutional neural networks (CNNs) have made remarkable progress in recent years and are widely used in various image-related tasks, including biometric-based authentication [10], image classification, handwriting recognition, and object recognition [11]. The advancements in image segmentation, classification, and object recognition prior to CNNs were incremental and limited. However, the introduction of CNNs has completely transformed this field [11]. For example, Taigman et al. introduced the DeepFace facial recognition system, which was first deployed at Facebook in 2014 and achieved an accuracy of 97.35%, reducing the error of the previous state of the art by more than 27% [12].

Despite notable advancements in image processing and analysis, the digitization of single-line diagrams (SLDs) and the automated interpretation of these drawings continue to pose significant challenges [13]. Presently, most methods rely on traditional image processing techniques that necessitate manual feature extraction. These approaches are highly domain-specific, susceptible to noise and data distribution variations, and tend to focus on addressing specific aspects of the problem, such as symbol detection or text separation. The performance of these models is greatly influenced by the quality of the provided training data.

Even in challenging and less-controlled environments, fundamental image segmentation and other processing tasks, such as object detection and tracking, have become considerably less difficult. Recent methods, such as faster region-based convolutional neural networks (Faster R-CNN) [13], the single-shot detector (SSD) [14], region-based fully convolutional networks (R-FCN) [15], and You Only Look Once (YOLO) [16], have demonstrated high performance in object recognition and classification applications. These methods, along with their extensions, have addressed major obstacles, such as noise, orientation, and image quality, leading to significant advancements in this field of study [17].

Insufficient training data poses another significant challenge in the digitization of engineering documents [18]. Deep-learning models require large amounts of data for effective training. Because the industry relies heavily on manual interpretation of these documents, little effort has gone into generating the drawings automatically, which makes it difficult for researchers to acquire labeled data. To tackle this issue, data augmentation techniques have been introduced in recent years [19].

Generative models have also advanced significantly and have been used effectively in numerous applications. Generative adversarial networks (GANs), first presented by Ian Goodfellow in 2014 [20], have emerged as some of the best-known and most frequently employed tools for producing content. Another difficult issue that affects a wide range of fields, including engineering diagrams, is class imbalance: several classes of symbols in the drawings are either over-represented or under-represented in the dataset [21].
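To make the class-imbalance problem concrete, the following minimal Python sketch counts symbol labels and reports how many additional samples (for example, GAN-generated ones) each class would need to match the largest class. The class names and counts are hypothetical and are not taken from any dataset discussed here.

```python
from collections import Counter


def synthetic_samples_needed(labels):
    """Return, per class, how many extra samples would equalize class sizes."""
    counts = Counter(labels)
    target = max(counts.values())  # size of the most frequent class
    return {cls: target - n for cls, n in counts.items()}


# Hypothetical symbol annotations collected from a set of diagrams.
labels = ["breaker"] * 120 + ["transformer"] * 45 + ["fuse"] * 8
deficit = synthetic_samples_needed(labels)
print(deficit)
```

Balancing to the largest class is only one possible target; a smaller target combined with undersampling of the frequent classes would be an equally valid choice.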

2. Digitization of Engineering Documents

Engineering drawings commonly incorporate diverse forms, symbolic shapes, solid or dashed lines, and text to depict intricate engineering processes in a condensed and comprehensive manner. These technical drawings find extensive application across multiple disciplines. Over the past decade, considerable research efforts have been dedicated to machine vision with the aim of digitizing these sketches [22][23][24][25]. With remarkable progress in computer vision and machine learning, coupled with the existence of large volumes of undigitized legacy data, the need for a fully automated framework to digitize these drawings has become more pressing than ever.

One primary limitation of traditional learning methods is their heavy reliance on hand-crafted feature extraction: performance depends strongly on the quality of the extracted features and often fails to generalize to unobserved instances [26]. The existing literature in this domain has primarily focused on addressing specific aspects of digitizing engineering diagrams, rather than providing a comprehensive and fully automated framework, as indicated by a recent comprehensive review [27]. Some studies have concentrated on the identification and categorization of common symbols in engineering drawings, as well as the separation of text from other graphical elements in diagrams, employing image processing techniques for line identification and deep-learning methods for symbol detection [28]. Alternatively, heuristic strategies have been employed in other studies to locate and classify components in drawings using approaches such as random forests, achieving precision levels exceeding 90% [29], or to discern and differentiate text from graphic elements [30][31][32][33]. However, these approaches may require the modification of heuristic rules or the development of new ones when there are changes to the schematic or symbol representations [34]. Additionally, the effectiveness of these approaches heavily relies on a balanced distribution of data across the dataset.

In recent years, attempts have been made to apply deep-learning-based techniques to tasks similar to the digitization of engineering drawings [35]. Some studies have utilized techniques based on the single-shot detector (SSD) to identify doors, windows, and furniture objects in floor-plan diagrams, yielding positive results [36]. However, these studies used small datasets with an insufficient number of furniture items in each drawing [37]. The unbalanced distribution of object classes within these datasets leads to a decline in performance [38][39].

Symbol recognition in piping and instrumentation diagrams (P&IDs) is a closely related field. The complexity of P&IDs introduces various challenges in symbol recognition, including adjacent lines, overlapping symbols, unclear regions, and similarity between symbols [40]. A study evaluated four classification tools, namely, multi-stage deep neural networks, hidden Markov models, K-nearest neighbors, and support vector machines (SVMs), using both synthetic and original drawing sheet datasets [41]. Although the SVM model demonstrated the best performance, all techniques involved grouping symbols before classification and removing lines for clearer observation. Additionally, a Faster R-CNN was employed to detect and classify handwritten symbols in technical diagrams, with a focus on specific document types, such as process flow diagrams (PFDs) and flowcharts, achieving favorable results compared to traditional methods.

Various frameworks have been proposed for interpreting engineering sheets and P&ID drawings using deep-learning models. The combination of heuristic-based methods with deep-learning techniques has shown promising results in component detection for engineering drawings. A two-stage process was employed, involving Euclidean metrics to connect pipeline tags and symbols, as well as the probabilistic Hough transform for pipeline detection, to localize symbols and text. Another approach utilized a fully linked convolutional neural network to develop symbol localization techniques [42]. A dataset comprising 672 process flow drawings was used to automate engineering drawings, resulting in improved performance compared to conventional methods. However, accurate detection of all components was not achieved, and the class accuracy for different components in the drawings was around 64.0%.
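The idea of using a Euclidean metric to connect tags with symbols can be illustrated with a minimal Python sketch that assigns each detected text tag to its nearest detected symbol. This is an assumed simplification, not the cited framework's implementation; the function name, the detection names, and the coordinates are all hypothetical.

```python
import math


def link_tags_to_symbols(tags, symbols):
    """Map each tag name to the name of the symbol with the closest centre."""
    links = {}
    for tag_name, tag_xy in tags.items():
        # math.dist computes the Euclidean distance between two points.
        links[tag_name] = min(symbols, key=lambda s: math.dist(tag_xy, symbols[s]))
    return links


# Hypothetical detections: name -> (x, y) centre in pixels.
symbols = {"valve_1": (100, 200), "pump_1": (400, 220)}
tags = {"V-101": (110, 180), "P-201": (390, 250)}
links = link_tags_to_symbols(tags, symbols)
print(links)
```

A nearest-neighbour rule like this can mislabel tags in crowded regions, so a practical system would likely add a maximum distance threshold or a one-to-one assignment step.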

Hidden Markov models (HMMs) were utilized to capture the time-varying signal produced by pen movements while an electrical circuit diagram is sketched by hand online [43]. A dataset containing 100 hand-drawn sketches was examined for this purpose. The HMM-based approach yielded promising outcomes, correctly classifying more than 83% of the points associated with the connector and symbol categories.

A circuit-diagram recognition system was developed to address sketch recognition as a dynamic programming problem, incorporating a novel technique called 2D-DP [44]. The 2D-DP technique successfully identified interspersed symbols within the sketches. The method introduced a tolerant connectivity cost function, which proved effective in recognizing free-form sketches. The experiment involved analyzing 130 sketches containing ten different types of electrical symbols. Point-level measurements revealed that the approach achieved an accuracy of over 90 percent. A grammar-based parsing strategy for the online recognition of hand-drawn diagrams was also introduced and evaluated on UML use-case diagrams, showing improved recognition accuracy [45]. However, more rigorous and formal user studies are necessary to understand the strategy's limitations and explore potential enhancements.

The adoption of advanced deep-learning methodologies has resulted in significant improvements in the detection and recognition of notations and symbols in musical documents [46]. Techniques such as Faster R-CNNs, R-FCNs, YOLO, and SSD have been successfully applied to identify handwritten musical symbols, demonstrating superior performance compared to traditional structured image processing methods for symbol recognition and detection [21][47].

In summary, the existing research highlights a notable gap between the current state of machine learning and technical image comprehension. This discrepancy arises from the rapid progress in the field juxtaposed with the uneven and incremental advancements in a critical application area that has implications across various sectors.

3. GAN Networks

Generative adversarial networks (GANs) were first presented by Ian Goodfellow in 2014 [48]. GANs are generative models that can create new content [21]. A GAN consists of two competing models (for example, CNNs or other neural networks): the generator (G) and the discriminator (D). The discriminator is a classifier that receives both fake samples from the generator and authentic samples from the training set. During training, it learns to differentiate between real and fake input samples. The generator, in turn, is trained to create samples that accurately reflect the fundamental properties of the original content [49].

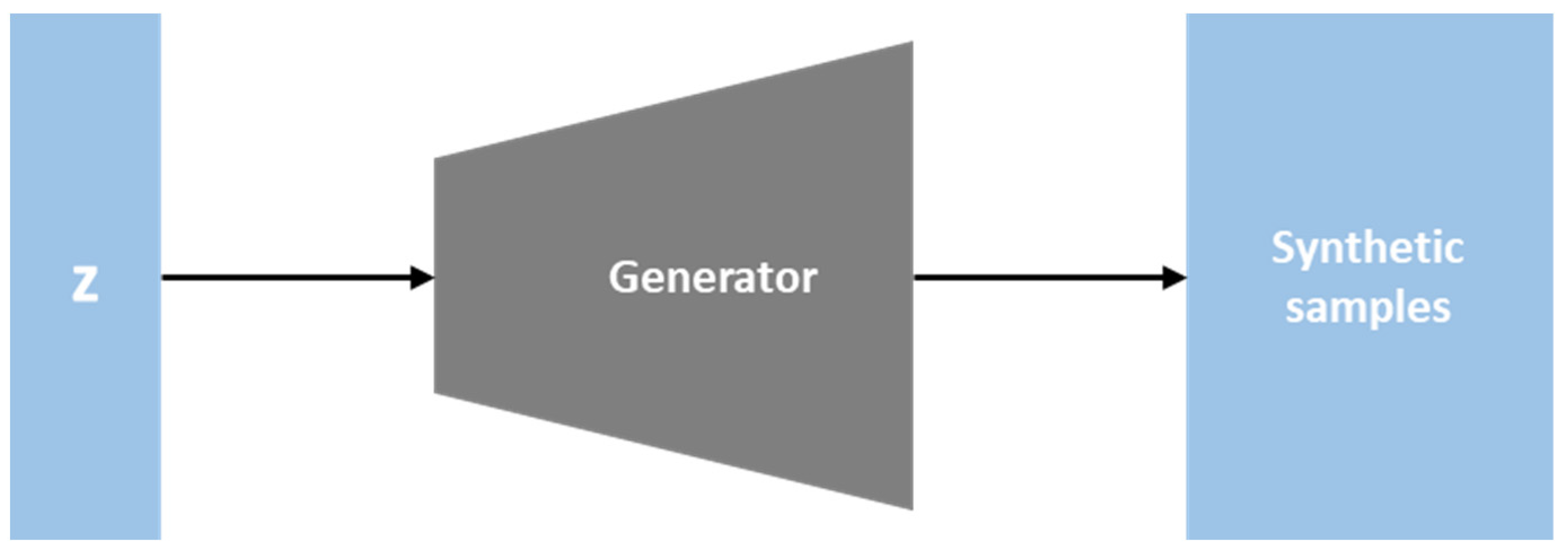

As depicted in Figure 2, the generator is a network that produces new, realistic images resembling the existing data. An image is created from random noise z. The generator's objective is to deceive the discriminator into believing that the fake image it produces is genuine [48]. Equation (1) is one way to express this: when the discriminator evaluates a sample produced by the generator, the generator tries to reduce the discriminator's accuracy as much as possible [50].

$$\min_G V(G) = \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{1}$$

Figure 2. The generator.
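The generator objective can be estimated numerically by averaging log(1 − D(G(z))) over sampled noise. The sketch below is a toy one-dimensional illustration under stated assumptions: the affine generator, the sigmoid discriminator, and all parameter values are hypothetical stand-ins for real networks.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def generator(z, scale=1.0, shift=0.0):
    """Toy 1-D generator G(z): an affine map of the noise sample."""
    return scale * z + shift


def discriminator(x, weight=1.0, bias=0.0):
    """Toy discriminator D(x): estimated probability that x is real."""
    return sigmoid(weight * x + bias)


def generator_objective(noise, **g_params):
    """Monte Carlo estimate of E_z[log(1 - D(G(z)))]."""
    terms = [math.log(1.0 - discriminator(generator(z, **g_params))) for z in noise]
    return sum(terms) / len(terms)


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(1000)]
v_low = generator_objective(noise, shift=-2.0)   # samples the toy D scores as fake
v_high = generator_objective(noise, shift=2.0)   # samples the toy D scores as real
print(v_low, v_high)
```

Because this toy discriminator assigns higher scores to larger inputs, shifting the generated samples upward lowers the objective, which is the direction the generator prefers.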

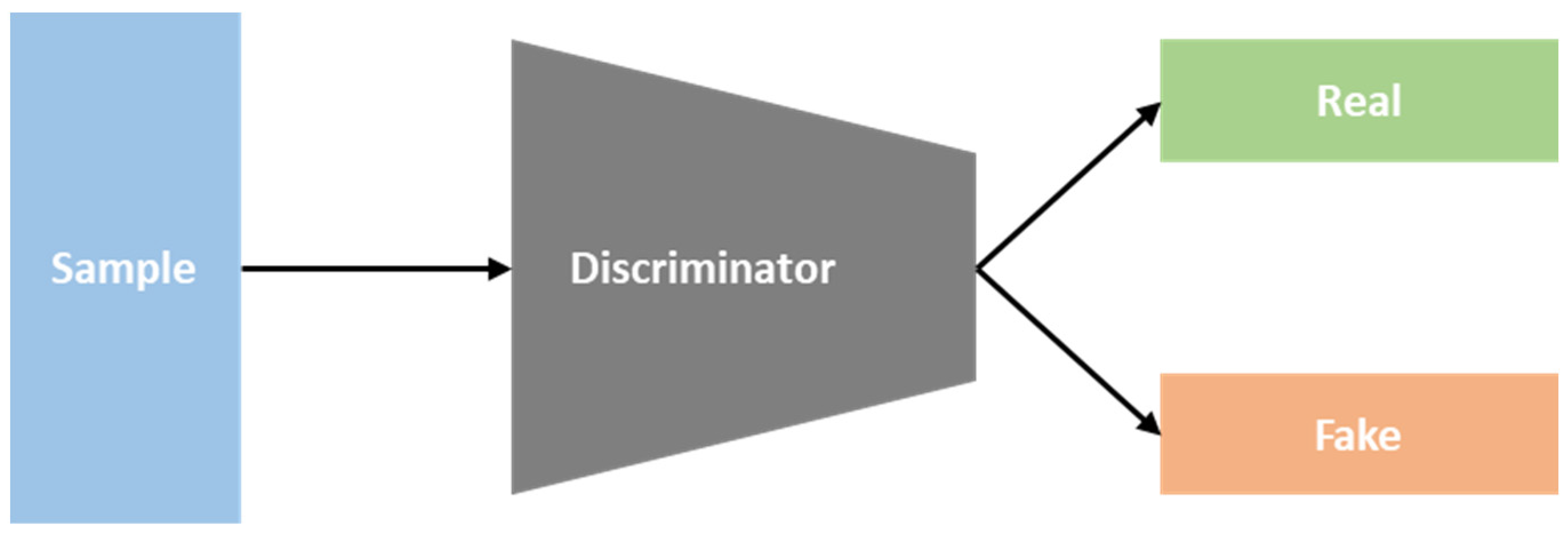

As seen in Figure 3, the discriminator is a network for differentiating images. It determines whether an input image is a fake image created by the generator or a genuine image that already exists. The discriminator's job is to highlight differences between the real image and the fake image produced by the generator. Equation (2) is one way to express this. The main goal of GAN training is to minimize the difference between the real data distribution $p_x$ and the distribution of the generated samples $G(z)$, where the noise $z$ is drawn from $p_z$. When evaluating a real sample, the discriminator seeks to maximize D(x), and when evaluating a generated sample, it seeks to maximize 1 − D(G(z)) [50].

$$\max_D V(D) = \mathbb{E}_{x \sim p_x(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{2}$$

Figure 3. The discriminator.
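As a small worked example of the discriminator objective, the sketch below evaluates it on two batches of discriminator outputs. The probability values are made up for illustration; the function name is hypothetical.

```python
import math


def discriminator_objective(d_real, d_fake):
    """V(D) = mean(log D(x)) + mean(log(1 - D(G(z)))) over two batches."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that always answers 0.5 cannot tell real from fake.
chance = discriminator_objective([0.5, 0.5], [0.5, 0.5])
# A discriminator that scores real samples high and fake samples low.
confident = discriminator_objective([0.9, 0.95], [0.1, 0.05])
print(chance, confident)
```

The chance-level discriminator yields −2 log 2, while a discriminator that separates the two batches well pushes the value toward its upper bound of 0.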

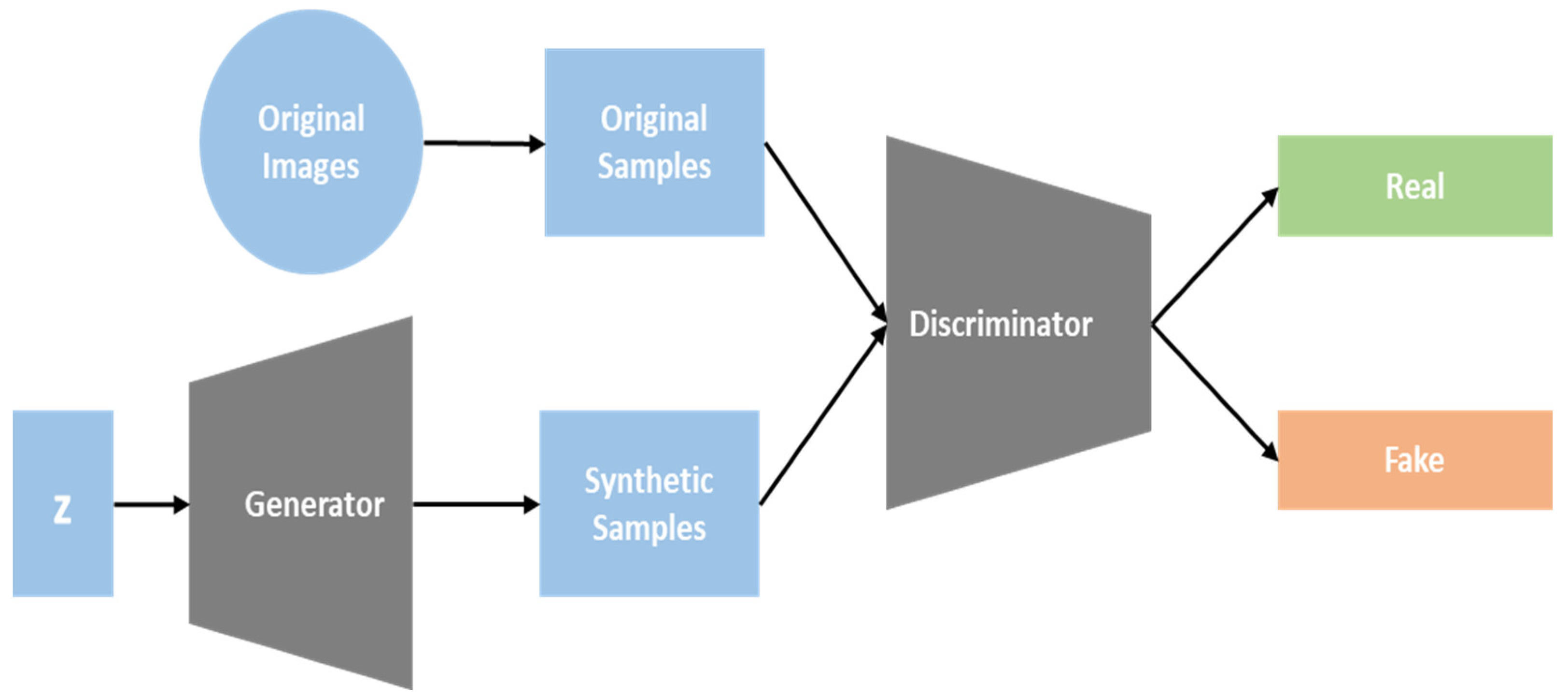

The GAN architecture, comprising the generator and the discriminator, is depicted in Figure 4. The generator is responsible for producing fake images. The discriminator receives either a fake image created by the generator or an existing real image and must determine which is which. Over thousands of iterations, the generator and discriminator evolve in an adversarial manner. As a consequence, the generator becomes able to produce images that resemble real ones. This can be stated using the objective function of the GAN (Equation (3)): the discriminator seeks to increase the value V, while the generator seeks to reduce it [51].

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_x(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{3}$$

Figure 4. Generative adversarial networks.
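The adversarial procedure can be sketched as alternating gradient steps: ascent on V for the discriminator, then descent on the generator term. The toy example below uses scalar data, an affine generator, and a logistic discriminator with hand-derived gradients. These models, the target distribution N(3, 1), and the hyperparameters are assumptions chosen for brevity, and convergence is not guaranteed.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def mean(values):
    return sum(values) / len(values)


def train_toy_gan(steps=200, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator G(z) = a*z + b
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        real = [rng.gauss(3.0, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * g + c) for g in fake]
        # Discriminator step: gradient ascent on V(D, G).
        grad_w = (mean([(1 - d) * x for d, x in zip(d_real, real)])
                  - mean([d * g for d, g in zip(d_fake, fake)]))
        grad_c = mean([1 - d for d in d_real]) - mean(d_fake)
        w += lr * grad_w
        c += lr * grad_c
        # Generator step: gradient descent on E_z[log(1 - D(G(z)))].
        d_fake = [sigmoid(w * (a * z + b) + c) for z in noise]
        grad_a = mean([-d * w * z for d, z in zip(d_fake, noise)])
        grad_b = mean([-d * w for d in d_fake])
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"G(z) = {a:.2f}*z + {b:.2f}, D(x) = sigmoid({w:.2f}*x + {c:.2f})")
```

In practice, both players are deep networks trained with automatic differentiation, but the alternating update pattern is the same.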

To enhance the overall performance of GANs, numerous research efforts have focused on GAN variants. Brock et al. [52] developed BigGAN models to generate distinctive, high-resolution images from various datasets; the approach can handle both the challenging samples of the ImageNet dataset and high-resolution images. Karras et al. [53] proposed an alternative generator design called StyleGAN. Its generator architecture allows the style of the generated image to be adjusted at every convolutional layer based on the latent code. This helps to steer the complete synthesis process, which starts with low-resolution images and progresses to high-resolution ones.

Effective texture synthesis tools called Markovian generative adversarial networks (MGANs) were developed by Wang and Wang [32]. These networks can decode brown noise directly into a realistic texture; they can also decode photographs into artwork, improving texture quality. Bergmann et al. [34] developed a GAN-based architecture called spatial GAN (SGAN), which is well suited to texture generation. This method can combine multiple diverse source images to produce varied, high-quality textures.

To overcome the GAN's limitations, the research in [53] explored the use of deep convolutional GANs (DCGANs). DCGANs address these limitations by replacing max-pooling layers with strided convolutions in the discriminator and fractionally strided convolutions in the generator. This approach allows a unified architectural framework to serve multiple objectives. In the DCGAN model, the generator competes with the discriminator, which pushes the generator to produce visually appealing images. In certain situations, however, training instability can make the model difficult to apply in various domains due to the structure of the fully connected network.
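The effect of replacing pooling with strided and fractionally strided (transposed) convolutions can be checked with simple size arithmetic. The sketch below uses the standard output-size formulas for these layers; the kernel size 4, stride 2, and padding 1 are commonly used values assumed here for illustration, not settings stated in this entry.

```python
def transposed_conv_size(size, kernel=4, stride=2, padding=1):
    """Output side length of a fractionally strided (transposed) convolution."""
    return (size - 1) * stride - 2 * padding + kernel


def strided_conv_size(size, kernel=4, stride=2, padding=1):
    """Output side length of an ordinary strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1


# Generator path: upsample a 4x4 feature map four times.
generator_sizes = [4]
for _ in range(4):
    generator_sizes.append(transposed_conv_size(generator_sizes[-1]))

# Discriminator path: downsample a 64x64 image four times.
discriminator_sizes = [64]
for _ in range(4):
    discriminator_sizes.append(strided_conv_size(discriminator_sizes[-1]))

print(generator_sizes)      # [4, 8, 16, 32, 64]
print(discriminator_sizes)  # [64, 32, 16, 8, 4]
```

With these settings each layer exactly doubles or halves the spatial resolution, so the two networks mirror each other without any pooling layers.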

Another GAN variant, the least-squares generative adversarial network (LSGAN), has two advantages over traditional GANs. Firstly, LSGANs can generate clearer images than regular GANs. Secondly, LSGANs are more stable during the learning process. Traditional GANs are unstable during learning, making it difficult to use them in practice [54]. Several recent studies have investigated how the objective function affects the instability of GAN learning.
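The least-squares objective can be sketched as follows. This entry does not state the LSGAN formulas, so the code assumes the commonly used formulation in which the discriminator output is an unbounded score, real samples are regressed toward the label 1, fake samples toward 0, and the generator pushes fake scores toward 1. The function names and label defaults are illustrative.

```python
def mean(values):
    return sum(values) / len(values)


def lsgan_discriminator_loss(d_real, d_fake, real_label=1.0, fake_label=0.0):
    """Least-squares loss: real scores go to real_label, fake scores to fake_label."""
    real_term = mean([(d - real_label) ** 2 for d in d_real])
    fake_term = mean([(d - fake_label) ** 2 for d in d_fake])
    return 0.5 * real_term + 0.5 * fake_term


def lsgan_generator_loss(d_fake, target=1.0):
    """Least-squares loss: the generator wants fake scores to reach target."""
    return 0.5 * mean([(d - target) ** 2 for d in d_fake])


# Hypothetical discriminator scores for a batch of real and generated samples.
d_loss = lsgan_discriminator_loss([0.9, 1.1], [0.2, -0.1])
g_loss = lsgan_generator_loss([0.2, -0.1])
print(d_loss, g_loss)
```

Unlike the logarithmic loss, the squared error keeps growing with the distance between a score and its target, so generated samples that are far from the target still produce a useful gradient.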

References

- Moreno-García, C.F.; Elyan, E.; Jayne, C. Heuristics-Based Detection to Improve Text/Graphics Segmentation in Complex Engineering Drawings. In Proceedings of the Engineering Applications of Neural Networks: 18th International Conference (EANN 2017), Athens, Greece, 25–27 August 2017; pp. 87–98.

- Bhanbhro, H.; Hassan, S.R.; Nizamani, S.Z.; Bakhsh, S.T.; Alassafi, M.O. Enhanced Textual Password Scheme for Better Security and Memorability. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 1–8.

- Ali-Gombe, A.; Elyan, E. MFC-GAN: Class-imbalanced dataset classification using Multiple Fake Class Generative Adversarial Network. Neurocomputing 2019, 361, 212–221.

- Elyan, E.; Jamieson, L.; Ali-Gombe, A. Deep learning for symbols detection and classification in engineering drawings. Neural Netw. 2020, 129, 91–102.

- Huang, R.; Gu, J.; Sun, X.; Hou, Y.; Uddin, S. A Rapid Recognition Method for Electronic Components Based on the Improved YOLO-V3 Network. Electronics 2019, 8, 825.

- Jamieson, L.; Moreno-Garcia, C.F.; Elyan, E. Deep Learning for Text Detection and Recognition in Complex Engineering Diagrams. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

- Karthi, M.; Muthulakshmi, V.; Priscilla, R.; Praveen, P.; Vanisri, K. Evolution of YOLO-V5 Algorithm for Object Detection: Automated Detection of Library Books and Performace validation of Dataset. In Proceedings of the 2021 International Conference on Innovative Computing, Intelligent Communication and Smart Electrical Systems (ICSES), Chennai, India, 24–25 September 2021; pp. 1–6.

- Lee, H.; Lee, J.; Kim, H.; Mun, D. Dataset and method for deep learning-based reconstruction of 3D CAD models containing machining features for mechanical parts. J. Comput. Des. Eng. 2021, 9, 114–127.

- Naosekpam, V.; Sahu, N. Text detection, recognition, and script identification in natural scene images: A Review. Int. J. Multimedia Inf. Retr. 2022, 11, 291–314.

- Theisen, M.F.; Flores, K.N.; Balhorn, L.S.; Schweidtmann, A.M. Digitization of chemical process flow diagrams using deep convolutional neural networks. Digit. Chem. Eng. 2023, 6, 100072.

- Wang, J.; Chen, Y.; Dong, Z.; Gao, M. Improved YOLOv5 network for real-time multi-scale traffic sign detection. Neural Comput. Appl. 2022, 35, 7853–7865.

- Whang, S.E.; Roh, Y.; Song, H.; Lee, J.-G. Data collection and quality challenges in deep learning: A data-centric AI perspective. VLDB J. 2023, 32, 791–813.

- Gupta, M.; Wei, C.; Czerniawski, T. Automated Valve Detection in Piping and Instrumentation (P&ID) Diagrams. In Proceedings of the 39th International Symposium on Automation and Robotics in Construction (ISARC 2022), Bogota, Colombia, 13–15 July 2022; pp. 630–637.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimedia Tools Appl. 2022, 82, 9243–9275.

- Lee, J.; Hwang, K.-I. YOLO with adaptive frame control for real-time object detection applications. Multimed. Tools Appl. 2022, 81, 36375–36396.

- Gada, M. Object Detection for P&ID Images using various Deep Learning Techniques. In Proceedings of the 2021 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 27–29 January 2021; pp. 1–5.

- Zhang, Q.; Zhang, M.; Chen, T.; Sun, Z.; Ma, Y.; Yu, B. Recent advances in convolutional neural network acceleration. Neurocomputing 2018, 323, 37–51.

- Hong, J.; Li, Y.; Xu, Y.; Yuan, C.; Fan, H.; Liu, G.; Dai, R. Substation One-Line Diagram Automatic Generation and Visualization. In Proceedings of the 2019 IEEE Innovative Smart Grid Technologies-Asia (ISGT Asia), Chengdu, China, 21–24 May 2019; pp. 1086–1091.

- Ismail, M.H.A.; Tailakov, D. Identification of Objects in Oilfield Infrastructure Using Engineering Diagram and Machine Learning Methods. In Proceedings of the 2021 IEEE Symposium on Computers & Informatics (ISCI), Kuala Lumpur, Malaysia, 16 October 2021; pp. 19–24.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Liu, X.; Meng, G.; Pan, C. Scene text detection and recognition with advances in deep learning: A survey. Int. J. Doc. Anal. Recognit. (IJDAR) 2019, 22, 143–162.

- Mani, S.; Haddad, M.A.; Constantini, D.; Douhard, W.; Li, Q.; Poirier, L. Automatic Digitization of Engineering Diagrams using Deep Learning and Graph Search. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 673–679.

- Moreno-García, C.F.; Elyan, E.; Jayne, C. New trends on digitisation of complex engineering drawings. Neural Comput. Appl. 2018, 31, 1695–1712.

- Nguyen, T.; Van Pham, L.; Nguyen, C.; Van Nguyen, V. Object Detection and Text Recognition in Large-scale Technical Drawings. In Proceedings of the 10th International Conference on Pattern Recognition Applications and Methods (Icpram), Vienna, Austria, 17 December 2021; pp. 612–619.

- Nurminen, J.K.; Rainio, K.; Numminen, J.-P.; Syrjänen, T.; Paganus, N.; Honkoila, K. Object detection in design diagrams with machine learning. In Proceedings of the International Conference on Computer Recognition Systems, Polanica Zdroj, Poland, 20–22 May 2019; pp. 27–36.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Rezvanifar, A.; Cote, M.; Albu, A.B. Symbol Spotting on Digital Architectural Floor Plans Using a Deep Learning-based Framework. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 2419–2428.

- Sarkar, S.; Pandey, P.; Kar, S. Automatic Detection and Classification of Symbols in Engineering Drawings. arXiv 2022, arXiv:2204.13277.

- Shetty, A.K.; Saha, I.; Sanghvi, R.M.; Save, S.A.; Patel, Y.J. A review: Object detection models. In Proceedings of the 2021 6th International Conference for Convergence in Technology (I2CT), Maharashtra, India, 2–4 April 2021; pp. 1–8.

- Shin, H.-J.; Jeon, E.-M.; Kwon, D.-k.; Kwon, J.-S.; Lee, C.-J. Automatic Recognition of Symbol Objects in P&IDs using Artificial Intelligence. Plant J. 2021, 17, 37–41.

- Wang, Q.S.; Wang, F.S.; Chen, J.G.; Liu, F.R. Faster R-CNN Target-Detection Algorithm Fused with Adaptive Attention Mechanism. Laser Optoelectron P 2022, 12, 59.

- Wen, L.; Jo, K.-H. Fast LiDAR R-CNN: Residual Relation-Aware Region Proposal Networks for Multiclass 3-D Object Detection. IEEE Sens. J. 2022, 22, 12323–12331.

- Yu, E.-S.; Cha, J.-M.; Lee, T.; Kim, J.; Mun, D. Features Recognition from Piping and Instrumentation Diagrams in Image Format Using a Deep Learning Network. Energies 2019, 12, 4425.

- Denton, E.L.; Chintala, S.; Fergus, R. Deep generative image models using a laplacian pyramid of adversarial networks. Adv. Neural Inf. Process. Syst. 2015.

- Dong, Q.; Gong, S.; Zhu, X. Class rectification hard mining for imbalanced deep learning. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1851–1860.

- Dosovitskiy, A.; Springenberg, J.T.; Riedmiller, M.; Brox, T. Discriminative unsupervised feature learning with convolutional neural networks. Adv. Neural Inf. Process. Syst. 2014.

- Fernández, A.; López, V.; Galar, M.; del Jesus, M.J.; Herrera, F. Analysing the classification of imbalanced data-sets with multiple classes: Binarization techniques and ad-hoc approaches. Knowledge-Based Syst. 2013, 42, 97–110.

- Frid-Adar, M.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. Synthetic data augmentation using GAN for improved liver lesion classification. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 289–293.

- Yun, D.-Y.; Seo, S.-K.; Zahid, U.; Lee, C.-J. Deep Neural Network for Automatic Image Recognition of Engineering Diagrams. Appl. Sci. 2020, 10, 4005.

- Zhang, Z.; Xia, S.; Cai, Y.; Yang, C.; Zeng, S. A Soft-YoloV4 for High-Performance Head Detection and Counting. Mathematics 2021, 9, 3096.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Costagliola, G.; Deufemia, V.; Risi, M. A Multi-layer Parsing Strategy for On-line Recognition of Hand-drawn Diagrams. In Proceedings of the Visual Languages and Human-Centric Computing (VL/HCC’06), Brighton, UK, 4–8 September 2006; pp. 103–110.

- Feng, G.; Viard-Gaudin, C.; Sun, Z. On-line hand-drawn electric circuit diagram recognition using 2D dynamic programming. Pattern Recognit. 2009, 42, 3215–3223.

- Zhang, Y.; Viard-Gaudin, C.; Wu, L. An Online Hand-Drawn Electric Circuit Diagram Recognition System Using Hidden Markov Models. In Proceedings of the 2008 International Symposium on Information Science and Engineering, Shanghai, China, 20–22 December 2008; Volume 2, pp. 143–148.

- Luque, A.; Carrasco, A.; Martín, A.; de Las Heras, A. The impact of class imbalance in classification performance metrics based on the binary confusion matrix. Pattern Recognit. 2019, 91, 216–231.

- Douzas, G.; Bacao, F. Effective data generation for imbalanced learning using conditional generative adversarial networks. Expert Syst. Appl. 2018, 91, 464–471.

- Baur, C.; Albarqouni, S.; Navab, N. MelanoGANs: High resolution skin lesion synthesis with GANs. arXiv 2018, arXiv:1804.04338.

- Antoniou, A.; Storkey, A.; Edwards, H. Data Augmentation Generative Adversarial Networks. arXiv 2017, arXiv:1711.04340.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, C.; Li, Y.; Loy, C.C.; Tang, X. Learning deep representation for imbalanced classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5375–5384.

- Inoue, H. Data augmentation by pairing samples for images classification. arXiv 2018, arXiv:1801.02929.

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of gans for improved quality, stability, and variation. arXiv 2017, arXiv:1710.10196.

- Mariani, G.; Scheidegger, F.; Istrate, R.; Bekas, C.; Malossi, C. Bagan: Data augmentation with balancing gan. arXiv 2018, arXiv:1803.09655.

Update Date: 01 Nov 2023
