| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Qing Li | -- | 1972 | 2023-10-13 11:05:37 |
| 2 | Wendy Huang | Meta information modification | 1972 | 2023-10-16 05:52:53 |


Li, Q.; Hu, S.; Shimasaki, K.; Ishii, I. Multiple Object Tracking and High-Speed Vision. Encyclopedia. Available online: https://encyclopedia.pub/entry/50255 (accessed on 22 December 2025).


Multiple object tracking detects and tracks multiple targets in videos, such as pedestrians, vehicles, and animals. It is an important research direction in the field of computer vision, and has been widely applied in intelligent surveillance and behavior recognition. High-speed vision is a computer vision technology that aims to realize real-time image recognition and analysis through the use of fast and efficient image processing algorithms at high frame rates of 1000 fps or more. It is an important direction in the field of computer vision and is used in a variety of applications, such as intelligent transportation, security monitoring, and industrial automation.

object detection

computer vision

multiple object tracking

high-speed vision

deep learning

1. Introduction

Multi-target tracking and high-definition image acquisition are important issues in the field of computer vision [1]. High-definition images of multiple targets provide more detail, which helps object recognition and improves the accuracy of image analysis. These techniques have been widely used in traffic management [2], security monitoring [3], intelligent transportation systems [4], robot navigation [5], autopilot [6], and video surveillance [7].

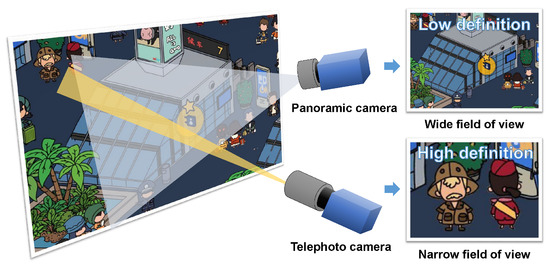

However, there is a contradiction between a wide field of view and high-definition resolution, as shown in Figure 1. The discovery and tracking of multiple targets depends on a wide field of view. A panoramic camera with a short focal length provides a wide field of view, but the definition of the image is low; a telephoto camera has the opposite characteristics. Using a panoramic camera with a higher resolution is a feasible solution; however, it increases both the cost and the size of the camera [8]. With the rapid development of deep learning in the image field, super-resolution reconstruction methods based on autoencoding have become mainstream, and their reconstruction accuracy is significantly better than that of traditional methods [9]. However, because deep learning-based super-resolution requires large network models and extensive training, these methods are limited in reconstruction speed and model flexibility [10].

Figure 1. Contradiction between wide field of view and high-definition images.

Therefore, researchers have tried to use telephoto cameras to obtain a larger field of view and track multiple targets. A feasible solution is to stitch the images obtained from a telephoto camera array together into high-resolution images and track multiple targets [11]. Again, this results in greater expenditure and an increase in device size. Another research method is to make the telephoto camera an active system by mounting it on a gimbal. Through the horizontal and vertical movement of the gimbal, the field of view of a pan-tilt-zoom (PTZ) camera can be changed to obtain a wide field of view [12]. However, the original design of such a gimbal camera is not intended for multi-target tracking. Due to the limited movement speed of the gimbal and the size of the telephoto lens, it is difficult for gimbal-based PTZ cameras to move at high speeds and observe multiple objects [13]. Compared to traditional camera systems operating at 30 or 60 fps, high-speed vision systems can work at 1000 fps or more [14]. The high-speed vision system acquires and processes image information with extremely low latency and interacts with the environment through visual feedback control [15]. In recent years, a galvanometer-based reflective camera system has been developed that can switch the perspective of a telephoto camera at hundreds of frames per second [16]. This reflective PTZ camera system is able to virtualize multiple virtual cameras in a time-division multiplexing manner in order to observe multiple objects [17]. Compared with traditional gimbal-based and panoramic cameras, galvanometer-based reflective PTZ cameras have the advantages of low cost, high speed, and high stability [18], and are suitable for multi-target tracking and high-definition capture.
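The time-division multiplexing idea can be illustrated with a small sketch. It assumes an idealized mirror that switches viewpoints instantly and a simple round-robin order; the function names and the 500 fps figure are hypothetical and are not taken from the cited systems.

```python
from itertools import cycle, islice


def virtual_camera_fps(physical_fps, num_targets):
    """Frame rate seen by each virtual camera when one physical camera
    is shared equally among num_targets (ideal switching assumed)."""
    if num_targets <= 0:
        raise ValueError("num_targets must be positive")
    return physical_fps / num_targets


def round_robin_schedule(target_ids, num_frames):
    """Which target the mirror points at for each physical frame."""
    return list(islice(cycle(target_ids), num_frames))


if __name__ == "__main__":
    # Hypothetical numbers: a 500 fps camera shared among 5 targets.
    print(virtual_camera_fps(500, 5))
    print(round_robin_schedule(["A", "B", "C"], 7))
```

In a real system the mirror settling time and exposure time would reduce the achievable rate per virtual camera, so the value computed here is an upper bound.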

However, current galvanometer-based PTZ cameras rarely perform active visual control while capturing multiple targets. Instead, they mainly rely on panoramic cameras, laser radars, and photoelectric sensors to obtain the positions of multiple targets, and then use the reflective PTZ camera for multi-angle capture [19]. Because of detection delay and limited accuracy, it is difficult to track multiple objects smoothly. Since the victory of AlexNet in the ImageNet visual recognition competition, CNN-based detectors have continued to develop, and can now detect various objects in an image at dozens of frames per second [20]. For high-speed vision at hundreds of frames per second, however, it is difficult to achieve real-time detection with deep learning.

2. Object Detection

Object detection is a computer vision task that involves detecting instances of semantic objects of a certain class (such as a person, bicycle, or car) in digital images and videos [21]. The earliest research in the field of object detection can be traced back to the Eigenface method for face detection proposed by researchers at MIT [22]. Over the past few decades, object detection has received great attention and achieved significant progress. The development of object detection algorithms can be roughly divided into two periods, namely, traditional object detection algorithms and object detection algorithms based on deep learning [23].

Due to the limitations of computing speed, traditional object detection algorithms focus mainly on pixel information in images. They can be divided into two categories: sliding window-based methods [24] and region proposal-based methods [25]. The sliding window-based approach achieves object detection by sliding windows of different sizes over an image and classifying the contents of each window. Many feature extraction methods have been proposed for this purpose, such as histogram of oriented gradients (HOG), local binary patterns (LBP), and Haar-like features. These features, in conjunction with traditional machine learning methods such as support vector machines (SVM) and boosting, have been widely applied in pedestrian detection [26] and face recognition [27]. Region proposal-based methods achieve object detection by first proposing regions of the image in which objects may exist, then classifying and regressing these regions [28].
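The sliding window procedure can be sketched as follows. This is a minimal illustration that only enumerates candidate windows; the `classify` callback stands in for a feature extractor plus classifier (for example HOG with an SVM) and is a hypothetical placeholder, as are the window sizes and stride.

```python
def sliding_windows(image_w, image_h, window_sizes, stride):
    """Yield (x, y, w, h) for every window that fits inside the image."""
    for w, h in window_sizes:
        for y in range(0, image_h - h + 1, stride):
            for x in range(0, image_w - w + 1, stride):
                yield (x, y, w, h)


def detect(image_w, image_h, window_sizes, stride, classify):
    """Keep the windows that the (hypothetical) classifier accepts."""
    return [box
            for box in sliding_windows(image_w, image_h, window_sizes, stride)
            if classify(box)]


if __name__ == "__main__":
    # Toy classifier: accept only windows whose top-left corner is the origin.
    hits = detect(8, 8, [(4, 4), (8, 8)], 4, lambda box: box[:2] == (0, 0))
    print(hits)
```

The number of windows grows with the number of sizes and shrinks with the stride, which is why exhaustive sliding window search is computationally expensive on large images.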

Traditional algorithms have proven effective; however, with continuous improvements in computing power and dataset availability, object detection technologies based on deep learning have gradually replaced traditional manual feature extraction methods and become the main research direction. Convolutional neural networks (CNNs) have evolved into a series of high-performance structural models such as AlexNet [29], VGG [30], GoogLeNet [31], ResNet [32], ResNeXt [33], CSPNet [34], and EfficientNet [35]. These network models have been widely employed as backbone architectures in various CNN-based object detectors. According to differences in the detection process, object detection algorithms based on deep learning can be divided into two research directions, one-stage and two-stage [36].

Two-stage object detection algorithms transform the detection problem into a classification problem for local region images generated from region proposals. Such algorithms generate region proposals in the first stage, then classify and regress the content in each region of interest in the second stage. There are many efficient object detection algorithms that use a two-stage detection process, such as R-CNN [37], SPP-Net [38], Fast R-CNN [39], Faster R-CNN [40], FPN [41], R-FCN [42], and DetectoRS [43]. R-CNN was the earliest method to apply deep learning to object detection, reaching a mean average precision (mAP) of 58.5% on the VOC2007 dataset. Subsequently, SPP-Net, Fast R-CNN, and Faster R-CNN increased the running speed of the algorithm while maintaining detection accuracy.

One-stage object detection algorithms, on the other hand, are based on regression: they convert the object detection task into a regression problem over the entire image [44]. Among the one-stage object detection algorithms, the most famous are single shot multibox detector (SSD) [45], YOLO [46], RetinaNet [47], CenterNet [48], and Transformer [49]-based detectors [50]. YOLO was the earliest one-stage target detection algorithm applied to actual scenes, obtaining stable and high-speed detection results [51]. The YOLO algorithm divides the input image into an S × S grid, predicts B bounding boxes for each grid cell, and predicts the objects in each cell separately. The result of each prediction includes the location, size, and confidence of the bounding box, and the probability that the object in the bounding box belongs to each category. This method of dividing the grid avoids a large number of repeated calculations, helping the YOLO algorithm to achieve a faster detection speed. In follow-up studies, algorithms such as YOLOv2 [52], YOLOv3 [53], YOLOv4 [54], YOLOv5 [55], and YOLOv6 [56] have been proposed. Owing to its high stability and detection speed, YOLOv4 is used as the AI detector in this research.
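The grid division can be made concrete with a short sketch. It assumes the layout of the original YOLO formulation, in which each cell predicts B boxes of five values (x, y, w, h, confidence) and one set of C class probabilities shared by the cell; later YOLO versions differ. The values S = 7, B = 2, and C = 20 are illustrative, and the function names are hypothetical.

```python
def responsible_cell(cx, cy, image_w, image_h, S):
    """Return (row, col) of the grid cell that contains the box center."""
    col = min(int(cx / image_w * S), S - 1)  # clamp centers on the right edge
    row = min(int(cy / image_h * S), S - 1)  # clamp centers on the bottom edge
    return row, col


def output_size(S, B, C):
    """Number of values predicted per image: S*S cells, each with
    B boxes of 5 values plus C class probabilities (assumed layout)."""
    return S * S * (B * 5 + C)


if __name__ == "__main__":
    print(responsible_cell(320, 240, 640, 480, 7))
    print(output_size(7, 2, 20))
```

Because every cell is predicted in a single forward pass, the cost does not depend on the number of candidate regions, in contrast to the two-stage pipeline.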

3. Multiple Object Tracking (MOT)

Currently, there are three popular research directions in multi-object tracking: detection-based MOT, joint detection and tracking-based MOT, and attention-based MOT. In detection-based MOT algorithms, object detection is performed on each frame to obtain image patches of all detected objects. A similarity matrix is then constructed based on the intersection over union (IoU) and appearance between all objects across adjacent frames, and the best matching result is obtained using the Hungarian algorithm or a greedy algorithm; representative algorithms include SORT [57] and DeepSORT [58]. In joint detection and tracking-based MOT algorithms, detection and tracking are integrated into a single process. Based on CNN detection, multiple targets are fed into the feature extraction network to extract features and directly output the tracking results for the current frame. Representative algorithms include JDE [59], MOTDT [60], Tracktor++ [61], and FFT [62]. Attention-based MOT is inspired by the powerful processing ability of the Transformer model in natural language processing. Representative algorithms include TransTrack [63] and TrackFormer [64]. TransTrack takes the feature map of the current frame as the key, and uses both the target feature queries from the previous frame and a set of learned target feature queries for the current frame as the input queries of the whole network.
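The association step of detection-based MOT can be sketched as follows. This is a simplified illustration, not the SORT or DeepSORT implementation: it uses IoU only (no appearance features or motion prediction) and greedy matching rather than the Hungarian algorithm, and the `min_iou` threshold of 0.3 is an assumed value.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def greedy_match(tracks, detections, min_iou=0.3):
    """Pair track boxes with detection boxes, highest IoU first.

    Returns a list of (track_index, detection_index) pairs."""
    scored = sorted(
        ((iou(t, d), ti, di)
         for ti, t in enumerate(tracks)
         for di, d in enumerate(detections)),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), []
    for score, ti, di in scored:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if ti in used_tracks or di in used_dets:
            continue
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    return matches


if __name__ == "__main__":
    tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
    detections = [(21, 21, 31, 31), (1, 1, 11, 11)]
    print(sorted(greedy_match(tracks, detections)))
```

Tracks left unmatched would typically be aged out, and unmatched detections would start new tracks; that bookkeeping is omitted here.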

4. High-Speed Vision

High-speed vision has two properties: (1) the image displacement from frame to frame is small, and (2) the time interval between frames is extremely short. To realize vision-based high-speed feedback control, a massive number of images must be processed in a short time. Unfortunately, most current image processing algorithms, such as noise reduction, tracking, and recognition, are designed for conventional image data at dozens of frames per second. An important idea in high-speed image processing is to exploit the small displacement between consecutive high-speed frames to reduce the amount of computation required per frame. Field-programmable gate arrays (FPGAs) and graphics processing units (GPUs), which support massively parallel operations, are well suited to processing two-dimensional data such as images. In [65], the authors presented a high-speed vision platform called H3 vision. This platform employs dedicated FPGA hardware to implement image processing algorithms and enables simultaneous processing of 256 × 256 pixel images at 10,000 fps. The hardware implementation of image processing algorithms on an FPGA board provides high performance and low latency, making it suitable for real-time applications that require high-speed image processing. In [66], a super-high-speed vision platform (HSVP) was introduced that is capable of processing 12-bit 1024 × 1024 grayscale images at 12,500 frames per second using an FPGA platform. While the fast computing speed of FPGAs is ideal for high-speed image processing, their programming complexity and limited memory capacity can pose significant challenges. Compared with FPGAs, GPU platforms can realize high-frame-rate image processing with lower programming difficulty. A GPU-based real-time full-pixel optical flow analysis method was proposed in [67].
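The first property, small displacement between frames, is what allows the per-frame workload to be reduced. The sketch below is one possible illustration of that idea and is not drawn from the cited platforms: instead of scanning the whole image, it searches only a small window around the previous target position. The frame is a plain list of rows, and `radius` and `threshold` are hypothetical parameters.

```python
def local_centroid(frame, prev_xy, radius, threshold):
    """Centroid of pixels brighter than threshold inside a square window
    centered on the previous position; None if no such pixel is found."""
    h, w = len(frame), len(frame[0])
    px, py = int(prev_xy[0]), int(prev_xy[1])
    x0, x1 = max(0, px - radius), min(w, px + radius + 1)
    y0, y1 = max(0, py - radius), min(h, py + radius + 1)
    sum_x = sum_y = count = 0
    for y in range(y0, y1):
        row = frame[y]
        for x in range(x0, x1):
            if row[x] > threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # target left the window; fall back to a full search
    return (sum_x / count, sum_y / count)


if __name__ == "__main__":
    # 16 x 16 toy frame with a 3 x 3 bright blob centered at (x=7, y=6).
    frame = [[0] * 16 for _ in range(16)]
    for y in range(5, 8):
        for x in range(6, 9):
            frame[y][x] = 255
    print(local_centroid(frame, (5, 5), 4, 128))
```

With a window of radius r, only (2r + 1)² pixels are visited per frame rather than the full image, and r can stay small precisely because the target moves little between frames at high frame rates.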

In addition to high-speed image processing, high-speed vision feedback control is very important. Gimbal-based camera systems are specifically designed for image streams with dozens of frames per second. Due to the limited size and movement speed of the camera, it is often difficult to track objects at high speeds while simultaneously observing multiple objects. Recently, a high-speed galvanometer-based reflective PTZ camera system was proposed in [68]. The PTZ camera system can acquire images from multiple angles in an extremely short time and virtualize multiple cameras from a large number of acquired image streams. In [69], a novel dual-camera system was proposed which is capable of simultaneously capturing zoomed-in images using an ultrafast pan-tilt camera and wide-view images using a separate camera. The proposed system provides a unique capability for capturing both wide-field and detailed views of a scene simultaneously. To enable the tracking of specific objects in complex backgrounds, a hybrid tracking method that combines convolutional neural networks (CNN) and traditional object tracking techniques was proposed in [70]. This hybrid method achieves high-speed tracking performance of up to 500 fps and has shown promising results in various applications, such as robotics and surveillance.

References

- Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T.K. Multiple object tracking: A literature review. Artif. Intell. 2021, 293, 103448.

- Mwamba, I.C.; Morshedi, M.; Padhye, S.; Davatgari, A.; Yoon, S.; Labi, S.; Hastak, M. Synthesis Study of Best Practices for Mapping and Coordinating Detours for Maintenance of Traffic (MOT) and Risk Assessment for Duration of Traffic Control Activities; Technical Report; Joint Transportation Research Program; Purdue University: West Lafayette, IN, USA, 2021.

- Ghafir, I.; Prenosil, V.; Svoboda, J.; Hammoudeh, M. A survey on network security monitoring systems. In Proceedings of the 2016 IEEE 4th International Conference on Future Internet of Things and Cloud Workshops (FiCloudW), Vienna, Austria, 22–24 August 2016; pp. 77–82.

- Qureshi, K.N.; Abdullah, A.H. A survey on intelligent transportation systems. Middle-East J. Sci. Res. 2013, 15, 629–642.

- Weng, X.; Wang, J.; Held, D.; Kitani, K. 3d multi-object tracking: A baseline and new evaluation metrics. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, USA, 24–30 October 2020; pp. 10359–10366.

- Rzadca, K.; Findeisen, P.; Swiderski, J.; Zych, P.; Broniek, P.; Kusmierek, J.; Nowak, P.; Strack, B.; Witusowski, P.; Hand, S.; et al. Autopilot: Workload autoscaling at Google. In Proceedings of the Fifteenth European Conference on Computer Systems, New York, NY, USA, 8–12 May 2023; pp. 1–16.

- Bohush, R.; Zakharava, I. Robust person tracking algorithm based on convolutional neural network for indoor video surveillance systems. In Proceedings of the Pattern Recognition and Information Processing: 14th International Conference, PRIP 2019, Minsk, Belarus, 21–23 May 2019; Revised Selected Papers 14. pp. 289–300.

- Kaiser, R.; Thaler, M.; Kriechbaum, A.; Fassold, H.; Bailer, W.; Rosner, J. Real-time Person Tracking in High-resolution Panoramic Video for Automated Broadcast Production. In Proceedings of the 2011 Conference for Visual Media Production, Washington, DC, USA, 16–17 November 2011; pp. 21–29.

- Fukami, K.; Fukagata, K.; Taira, K. Super-resolution reconstruction of turbulent flows with machine learning. J. Fluid Mech. 2019, 870, 106–120.

- Anwar, S.; Khan, S.; Barnes, N. A deep journey into super-resolution: A survey. ACM Comput. Surv. (CSUR) 2020, 53, 1–34.

- Carles, G.; Downing, J.; Harvey, A.R. Super-resolution imaging using a camera array. Opt. Lett. 2014, 39, 1889–1892.

- Kang, S.; Paik, J.K.; Koschan, A.; Abidi, B.R.; Abidi, M.A. Real-time video tracking using PTZ cameras. In Proceedings of the Sixth International Conference on Quality Control by Artificial Vision, Gatlinburg, TN, USA, 19–22 May 2003; Volume 5132, pp. 103–111.

- Ding, C.; Song, B.; Morye, A.; Farrell, J.A.; Roy-Chowdhury, A.K. Collaborative sensing in a distributed PTZ camera network. IEEE Trans. Image Process. 2012, 21, 3282–3295.

- Li, Q.; Chen, M.; Gu, Q.; Ishii, I. A Flexible Calibration Algorithm for High-speed Bionic Vision System based on Galvanometer. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 4222–4227.

- Gu, Q.Y.; Ishii, I. Review of some advances and applications in real-time high-speed vision: Our views and experiences. Int. J. Autom. Comput. 2016, 13, 305–318.

- Okumura, K.; Oku, H.; Ishikawa, M. High-speed gaze controller for millisecond-order pan/tilt camera. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 6186–6191.

- Aoyama, T.; Li, L.; Jiang, M.; Inoue, K.; Takaki, T.; Ishii, I.; Yang, H.; Umemoto, C.; Matsuda, H.; Chikaraishi, M.; et al. Vibration sensing of a bridge model using a multithread active vision system. IEEE/ASME Trans. Mechatron. 2017, 23, 179–189.

- Hu, S.; Shimasaki, K.; Jiang, M.; Takaki, T.; Ishii, I. A dual-camera-based ultrafast tracking system for simultaneous multi-target zooming. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; pp. 521–526.

- Jiang, M.; Sogabe, R.; Shimasaki, K.; Hu, S.; Senoo, T.; Ishii, I. 500-fps omnidirectional visual tracking using three-axis active vision system. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.

- Chua, L.O.; Roska, T. The CNN paradigm. IEEE Trans. Circuits Syst. Fundam. Theory Appl. 1993, 40, 147–156.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Turk, M.; Pentland, A. Eigenfaces for Recognition. J. Cogn. Neurosci. 1991, 3, 71–86.

- Amit, Y.; Felzenszwalb, P.; Girshick, R. Object detection. In Computer Vision: A Reference Guide; Springer International Publishing: Cham, Switzerland, 2020; pp. 1–9.

- Li, G.; Xu, D.; Cheng, X.; Si, L.; Zheng, C. Simvit: Exploring a simple vision transformer with sliding windows. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

- Ghasemi, Y.; Jeong, H.; Choi, S.H.; Park, K.B.; Lee, J.Y. Deep learning-based object detection in augmented reality: A systematic review. Comput. Ind. 2022, 139, 103661.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I.

- Nguyen, V.D.; Tran, D.T.; Byun, J.Y.; Jeon, J.W. Real-time vehicle detection using an effective region proposal-based depth and 3-channel pattern. IEEE Trans. Intell. Transp. Syst. 2018, 20, 3634–3646.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Purkait, P.; Zhao, C.; Zach, C. SPP-Net: Deep absolute pose regression with synthetic views. arXiv 2017, arXiv:1712.03452.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29.

- Qiao, S.; Chen, L.C.; Yuille, A. Detectors: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10213–10224.

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14, pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint Triplets for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6568–6577.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Sang, J.; Wu, Z.; Guo, P.; Hu, H.; Xiang, H.; Zhang, Q.; Cai, B. An improved YOLOv2 for vehicle detection. Sensors 2018, 18, 4272.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Yu, J.; Zhang, W. Face mask wearing detection algorithm based on improved YOLO-v4. Sensors 2021, 21, 3263.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XI 16, pp. 107–122.

- Chen, L.; Ai, H.; Zhuang, Z.; Shang, C. Real-time multiple people tracking with deeply learned candidate selection and person re-identification. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2019; pp. 941–951.

- Zhang, J.; Zhou, S.; Chang, X.; Wan, F.; Wang, J.; Wu, Y.; Huang, D. Multiple object tracking by flowing and fusing. arXiv 2020, arXiv:2001.11180.

- Sun, P.; Cao, J.; Jiang, Y.; Zhang, R.; Xie, E.; Yuan, Z.; Wang, C.; Luo, P. Transtrack: Multiple object tracking with transformer. arXiv 2020, arXiv:2012.15460.

- Meinhardt, T.; Kirillov, A.; Leal-Taixe, L.; Feichtenhofer, C. Trackformer: Multi-object tracking with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8844–8854.

- Ishii, I.; Taniguchi, T.; Sukenobe, R.; Yamamoto, K. Development of high-speed and real-time vision platform, H3 vision. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 3671–3678.

- Sharma, A.; Shimasaki, K.; Gu, Q.; Chen, J.; Aoyama, T.; Takaki, T.; Ishii, I.; Tamura, K.; Tajima, K. Super high-speed vision platform for processing 1024 × 1024 images in real time at 12500 fps. In Proceedings of the 2016 IEEE/SICE International Symposium on System Integration (SII), Shiroishi, Japan, 13–15 December 2016; pp. 544–549.

- Gu, Q.; Nakamura, N.; Aoyama, T.; Takaki, T.; Ishii, I. A Full-Pixel Optical Flow System Using a GPU-Based High-Frame-Rate Vision. In Proceedings of the 2015 Conference on Advances In Robotics, Goa, India, 2–4 July 2015; Association for Computing Machinery: New York, NY, USA, 2015.

- Kobayashi-Kirschvink, K.; Oku, H. Design principles of a high-speed omni-scannable gaze controller. IEEE Robot. Autom. Lett. 2016, 1, 836–843.

- Hu, S.; Shimasaki, K.; Jiang, M.; Senoo, T.; Ishii, I. A simultaneous multi-object zooming system using an ultrafast pan-tilt camera. IEEE Sens. J. 2021, 21, 9436–9448.

- Jiang, M.; Shimasaki, K.; Hu, S.; Senoo, T.; Ishii, I. A 500-fps pan-tilt tracking system with deep-learning-based object detection. IEEE Robot. Autom. Lett. 2021, 6, 691–698.


Subjects: Engineering, Electrical & Electronic
