| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Manuel Mazzara | -- | 1795 | 2023-09-27 08:23:35 | |
| 2 | | Sirius Huang | + 2 word(s) | 1797 | 2023-09-27 09:30:15 | |
Olabanjo, Olusola, Ashiribo Wusu, Mauton Asokere, Oseni Afisi, Basheerat Okugbesan, Olufemi Olabanjo, Olusegun Folorunso, Manuel Mazzara. "Deep Learning Models in Prostate Cancer Diagnosis" Encyclopedia, https://encyclopedia.pub/entry/49697 (accessed February 07, 2026).

Olabanjo, O., Wusu, A., Asokere, M., Afisi, O., Okugbesan, B., Olabanjo, O., Folorunso, O., & Mazzara, M. (2023, September 27). Deep Learning Models in Prostate Cancer Diagnosis. In Encyclopedia. https://encyclopedia.pub/entry/49697

Prostate imaging refers to various techniques and procedures used to visualize the prostate gland for diagnostic and treatment purposes. Deep learning (DL) architectures have shown promising effectiveness and relative efficiency in prostate cancer (PCa) diagnosis due to their ability to analyze complex patterns and extract features from medical imaging data.

Keywords: machine learning; deep learning; prostate cancer

1. Introduction

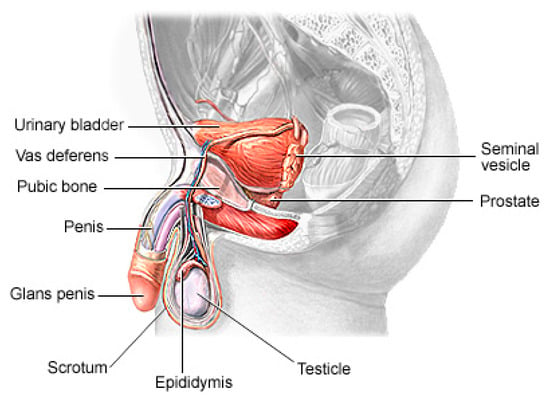

Prostate cancer (PCa) is the second most lethal and prevalent non-cutaneous tumor in males globally [1]. Statistics published by the American Cancer Society (ACS) show that it is the most common cancer in American men after skin cancer, with about 288,300 new cases and about 34,700 deaths in 2023. By 2030, cancer deaths are anticipated to reach 11 million, which would be a record high [2]. Worldwide, PCa affects many males, with prevalence and mortality rates higher in developing and underdeveloped countries [3]. PCa develops in the prostate, a small walnut-shaped gland of the male reproductive system located below the bladder and in front of the rectum [4]. The prostate surrounds the urethra, the tube that carries urine from the bladder out of the body. Its primary function (Figure 1) is to produce and secrete a fluid that forms part of semen, the fluid that carries sperm during ejaculation [5]. Several factors can contribute to the development of PCa, including age (older men are more likely to develop it), family history (having a close relative with PCa increases the risk), race (African American males are more likely to develop it) and specific genetic mutations [6][7].

Figure 1. The physiology of a human prostate.

Advances in computing hardware and algorithms over recent decades have paved the way for improved PCa diagnosis and treatment [8]. Computer-aided diagnosis (CAD) refers to the use of computer algorithms and technology to assist healthcare professionals in the prognosis and diagnosis of patients [9]. CAD systems are designed to serve as Decision Support (or Expert) Systems, which analyze medical data, such as images or test results, and provide experts with additional information or suggestions to aid in the interpretation and diagnosis of various medical conditions. They are commonly used in medical imaging to detect anomalies or to assist in the interpretation and analysis of medical images such as X-rays, Computed Tomography (CT) scans, Magnetic Resonance Imaging (MRI) scans and mammograms [10]. These systems use pattern recognition, machine learning algorithms and deep learning algorithms to identify specific features or patterns that may indicate the presence or absence of a disease or condition [11]. They can also help radiologists by highlighting regions of interest (ROI) or by providing quantitative measurements for further analysis. Soft computing techniques play a major role in decision making across several areas of medical image analysis [12][13]. Deep learning, a branch of artificial intelligence, has shown promising performance in the identification of patterns and the classification of medical images [14][15].

2. Imaging Modalities

Prostate imaging refers to various techniques and procedures used to visualize the prostate gland for diagnostic and treatment purposes. These imaging methods help in evaluating the size, shape and structure of the prostate, as well as in detecting abnormalities or diseases such as prostate cancer [16][17]. They include Transrectal Ultrasound (TRUS) [18], Magnetic Resonance Imaging (MRI) [19], Computed Tomography (CT) [20], Prostate-Specific Antigen (PSA) density mapping [21], Prostate-Specific Membrane Antigen (PSMA) PET/CT [22] and bone scans [23]. TRUS involves inserting a small probe into the rectum; the probe emits high-frequency sound waves to create real-time images of the prostate gland. TRUS is commonly used to guide prostate biopsies and assess the size of the prostate [18][24]. MRI, one of the most common prostate imaging methods, uses a powerful magnetic field and radio waves to generate detailed images of the prostate gland. It can provide information about the size, location and extent of tumors or other abnormalities. Multiparametric MRI (mpMRI) combines different imaging sequences to improve the accuracy of prostate cancer detection [25][26]. A CT scan uses X-ray technology to produce cross-sectional images of the prostate gland. It may be used to evaluate the spread of prostate cancer to nearby lymph nodes or other structures. PSMA PET/CT imaging is a relatively new technique that uses a radioactive tracer targeting PSMA, a protein that is highly expressed in prostate cancer cells [27]. It provides detailed information about the location and extent of prostate cancer, including metastases. Bone scans are often performed in cases where prostate cancer is suspected to have spread to the bones. A small amount of radioactive material is injected into the bloodstream and then detected by a scanner [23]. The scan can help to identify areas of bone affected by cancer.
PSA density mapping combines the results of PSA blood tests with transrectal ultrasound measurements to estimate the risk of prostate cancer. It helps to assess the likelihood of cancer based on the size of the prostate and the PSA level [28]. The choice of imaging technique depends on various factors, including the specific clinical scenario, the availability of resources and the goals of the evaluation [29][30].
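As a rough illustration of how a PSA level and ultrasound measurements can be combined, the sketch below computes PSA density as the serum PSA divided by the prostate volume, with the volume approximated from three diameters by the ellipsoid formula. The function names and the measurement values are hypothetical and chosen only for illustration; this is not a clinical tool and does not reproduce the method of any cited study.

```python
import math


def ellipsoid_volume_ml(length_cm: float, width_cm: float, height_cm: float) -> float:
    """Approximate prostate volume (mL) from three diameters (cm) with the ellipsoid formula."""
    return math.pi / 6.0 * length_cm * width_cm * height_cm


def psa_density(psa_ng_per_ml: float, volume_ml: float) -> float:
    """PSA density: serum PSA (ng/mL) divided by prostate volume (mL)."""
    if volume_ml <= 0:
        raise ValueError("prostate volume must be positive")
    return psa_ng_per_ml / volume_ml


# Hypothetical measurements, for illustration only.
volume = ellipsoid_volume_ml(4.0, 5.0, 3.0)
density = psa_density(6.0, volume)
print(f"volume = {volume:.1f} mL, PSA density = {density:.3f} ng/mL per mL")
```

How a given density is interpreted depends on clinical guidance and is deliberately left out of the sketch.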

3. Risks of PCa

The risk of PCa varies among men depending on several factors. Identifying these factors can support prevention and early detection, personalized healthcare, research and public health policy, genetic counseling and testing, and lifestyle modification. The most common clinically and scientifically verified risk factors include age, obesity and family history [31][32]. In low-risk vulnerable populations, the risk factors include benign prostatic hyperplasia (BPH), smoking, diet and alcohol consumption [33]. Although PCa is rare in people below 40 years of age, an autopsy study covering China, Israel, Germany, Jamaica, Sweden and Uganda showed that 30% of men in their fifties and 80% of men in their seventies had PCa [34]. Studies have also found that genetic factors, a lack of exercise and sedentary lifestyles, along with obesity and an elevated blood testosterone level, are significant risk factors for PCa [35][36][37][38]. The consumption of fruits and vegetables, the frequency of high-fat meat consumption, the level of vitamin D in the bloodstream, cholesterol level, infections and other environmental factors are also deemed to contribute to PCa occurrence in men [39][40].

4. Generic Overview of Deep Learning Architecture for PCa Diagnosis

Deep learning (DL) architectures have shown promising effectiveness and relative efficiency in PCa diagnosis due to their ability to analyze complex patterns and extract features from medical imaging data [13]. One commonly used deep learning architecture for cancer diagnosis is the Convolutional Neural Network (CNN). CNNs are particularly effective in image analysis tasks, including medical image classification, segmentation, prognosis and detection [41]. Deep learning, with its continually evolving variants, has brought significant advances in the analysis of cancer images, including histopathology slides, mammograms, CT scans and other medical imaging modalities. DL models can automatically learn hierarchical representations of images, enabling them to detect patterns and features that are indicative of cancer. They can also be trained to classify PCa images into different categories or subtypes. By learning from labeled training data, these models can classify new images, aiding in cancer diagnosis and subtyping [42].

Transfer learning is often employed in PCa image analysis. Pre-trained models, such as CNNs trained on large-scale datasets like ImageNet, are fine-tuned or used as feature extractors for PCa-related tasks. This approach leverages the features learned during pre-training, improving performance even with limited annotated medical image data. Training data can also be augmented with a Generative Adversarial Network (GAN). GANs can generate realistic synthetic images, which can be used to supplement training data, enhance model generalization and improve the performance of cancer image analysis models. The performance and effectiveness of deep learning models for PCa image analysis, however, depend on various factors, including the quantity and quality of labeled data, the choice of architecture, the training methodology and careful validation on diverse datasets.
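The feature-extractor variant of transfer learning can be sketched as follows: a backbone is kept frozen and only a small classification head is trained on its outputs. In this minimal sketch the backbone is a hand-written stand-in (`frozen_backbone`, hypothetical), not a real pre-trained CNN, and the four tiny "images" and their labels are invented for illustration; in practice the backbone would be loaded from a deep learning framework.

```python
import math


def frozen_backbone(image):
    """Stand-in for a pre-trained CNN used as a fixed feature extractor.

    Hypothetical: returns two hand-made features (mean intensity and mean
    horizontal contrast). Nothing in this function is updated during training.
    """
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    contrast = sum(abs(row[i + 1] - row[i]) for row in image for i in range(len(row) - 1))
    contrast /= len(image) * (len(image[0]) - 1)
    return [mean, contrast]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, lr=0.5, epochs=500):
    """Fit a logistic-regression head on frozen features with batch gradient descent."""
    w = [0.0] * len(features[0])
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j, xj in enumerate(x):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(image, w, b):
    """Probability of the positive class for one image."""
    x = frozen_backbone(image)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Invented toy data: two uniform patches (label 0) and two textured patches (label 1).
images = [
    [[0.2, 0.2], [0.2, 0.2]],
    [[0.3, 0.3], [0.3, 0.3]],
    [[0.0, 1.0], [1.0, 0.0]],
    [[0.1, 0.9], [0.9, 0.1]],
]
labels = [0, 0, 1, 1]

features = [frozen_backbone(img) for img in images]  # backbone runs once, unchanged
w, b = train_head(features, labels)                   # only the head is trained
print([round(predict(img, w, b), 2) for img in images])
```

Fine-tuning differs in that some or all backbone weights are also updated, usually with a smaller learning rate.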

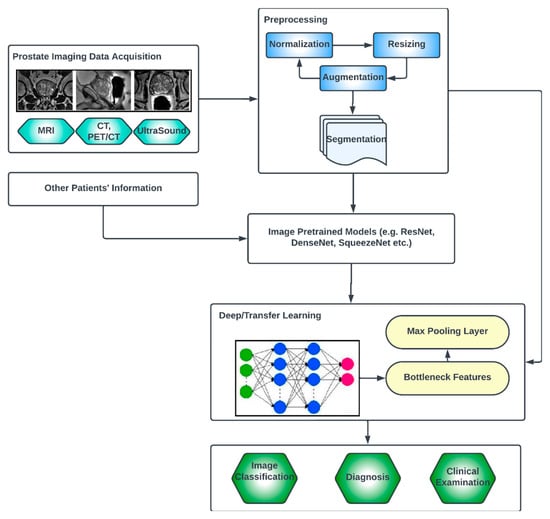

The key components in a typical deep CNN model for PCa diagnosis, as shown in Figure 2, include the convolutional layers, the pooling layers, the fully connected layers, the activation functions, the data augmentation and the attention mechanisms [43][44]. The convolutional layers are the fundamental building blocks of CNNs. They apply filters or kernels to input images to extract relevant features. These filters detect patterns at different scales and orientations, allowing the network to learn meaningful representations from the input data. The pooling layers downsample feature maps, reducing the spatial dimensions while retaining important features. Max pooling is a commonly used pooling technique, where the maximum value in each pooling window is selected as the representative value [45]. The fully connected layers are used at the end of CNN architectures to make predictions based on the extracted features. These layers connect all the neurons from the previous layer to the subsequent layer, allowing the network to learn complex relationships and make accurate classifications. Activation functions introduce non-linearity into the CNN architecture, enabling the network to model more complex relationships. Common activation functions include ReLU (Rectified Linear Unit), sigmoid and tanh [46][47]. Transfer learning involves taking CNN models pre-trained on large datasets such as ImageNet (for example ResNet, ResNet-50, VGG-16, VGG-19, Inception-v3, ShuffleNet, EfficientNet, GoogleNet and SqueezeNet) and adapting them to specific medical imaging tasks. Because pre-trained models have already learned general features from extensive data, using them saves model construction time and computational resources and can achieve good performance even on smaller medical datasets. Data augmentation techniques, such as rotation, scaling and flipping, can be employed to artificially increase the diversity of the training data.
Data augmentation helps to improve the generalization of a CNN model by exposing it to variations and reducing overfitting. Attention mechanisms allow the network to focus on relevant regions or features within the image. These mechanisms assign weights or importance to different parts of the input, enabling the network to selectively attend to salient information [48][49].
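The convolution, activation and pooling steps described above can be sketched in a few lines of plain Python. This is a toy forward pass on a hand-made 5×5 "image" with a fixed, hypothetical edge-detecting kernel; real CNNs learn their kernels from data and are built with a deep learning framework rather than nested lists.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j] for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy input with a vertical edge between columns 1 and 2 (values are invented).
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
kernel = [[-1, 1], [-1, 1]]  # hypothetical filter that responds to dark-to-bright vertical edges

features = conv2d(image, kernel)   # 4x4 feature map
activated = relu(features)         # non-linearity
pooled = max_pool2d(activated, 2)  # 2x2 map after downsampling
print(pooled)
```

The pooled map keeps the strong edge response while halving each spatial dimension, which is the behavior the pooling description refers to.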

Figure 2. Generic deep learning architecture for PCa image analysis.
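The flipping and rotation augmentations mentioned in the text can be illustrated with a small sketch that produces several geometric variants of one image. The helper names are hypothetical, the image is a toy 2×2 array, and whether a given transform is anatomically sensible for prostate images is an assumption that should be checked per dataset.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus flipped and rotated copies."""
    rot90 = rotate90_clockwise(image)
    rot180 = rotate90_clockwise(rot90)
    rot270 = rotate90_clockwise(rot180)
    return [image, flip_horizontal(image), flip_vertical(image), rot90, rot180, rot270]


sample = [[1, 2], [3, 4]]
variants = augment(sample)
for v in variants:
    print(v)
```

For segmentation tasks the same transform would also have to be applied to the label mask so that image and annotation stay aligned.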

Vision Transformers (ViTs) [50][51][52] are a deep learning architecture that adapts the transformer, originally designed for natural language processing (NLP) tasks, to images, and they have shown promising performance in medical image processing. They consist of an encoder, which typically comprises multiple transformer layers. The authors of [53] studied the use of ViTs for prostate cancer prediction from Whole Slide Images (WSIs). Patches were first extracted from the detected region of interest (ROI), and the reported results were promising. A transformer U-Net was proposed in a recent study [54]. The authors found that inserting a Vision Transformer block between the encoder and decoder of the U-Net architecture gave the lowest loss value, an indicator of better performance. In another study [55], a 3D ViT stacking ensemble model was presented for assessing PCa aggressiveness from T2w images, with state-of-the-art results in terms of AUC and precision. Similar work was presented by other authors [56][57].
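To make the patch-based input of a ViT concrete, the sketch below splits a toy image into non-overlapping patches, flattens each patch into a token, and applies one simplified self-attention step. It assumes identity query, key and value projections and a single head, which real transformer layers replace with learned projections; it is not the pipeline of any study cited above.

```python
import math


def extract_patches(image, patch_size):
    """Split a 2D image into non-overlapping patches, each flattened row-major into a token."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patches.append(
                [image[top + i][left + j] for i in range(patch_size) for j in range(patch_size)]
            )
    return patches


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity Q/K/V (a simplification)."""
    d = len(tokens[0])
    attended = []
    for query in tokens:
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d) for key in tokens]
        weights = softmax(scores)  # how much this token attends to every token
        attended.append([sum(wt * value[j] for wt, value in zip(weights, tokens)) for j in range(d)])
    return attended


# Toy 4x4 image with pixel values scaled to [0, 1]; values are invented.
image = [[(r * 4 + c) / 15.0 for c in range(4)] for r in range(4)]
tokens = extract_patches(image, 2)  # four tokens of length four
attended = self_attention(tokens)
print(len(tokens), len(tokens[0]))
```

Each output token is a weighted average of all input tokens, which is how attention lets one image region draw on information from the others.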

References

- Litwin, M.S.; Tan, H.-J. The diagnosis and treatment of prostate cancer: A review. JAMA 2017, 317, 2532–2542.

- Akinnuwesi, B.A.; Olayanju, K.A.; Aribisala, B.S.; Fashoto, S.G.; Mbunge, E.; Okpeku, M.; Owate, P. Application of support vector machine algorithm for early differential diagnosis of prostate cancer. Data Sci. Manag. 2023, 6, 1–12.

- Ayenigbara, I.O. Risk-Reducing Measures for Cancer Prevention. Korean J. Fam. Med. 2023, 44, 76.

- Musekiwa, A.; Moyo, M.; Mohammed, M.; Matsena-Zingoni, Z.; Twabi, H.S.; Batidzirai, J.M.; Singini, G.C.; Kgarosi, K.; Mchunu, N.; Nevhungoni, P. Mapping evidence on the burden of breast, cervical, and prostate cancers in Sub-Saharan Africa: A scoping review. Front. Public Health 2022, 10, 908302.

- Walsh, P.C.; Worthington, J.F. Dr. Patrick Walsh’s Guide to Surviving Prostate Cancer; Grand Central Life & Style: New York, NY, USA, 2010.

- Hayes, R.; Pottern, L.; Strickler, H.; Rabkin, C.; Pope, V.; Swanson, G.; Greenberg, R.; Schoenberg, J.; Liff, J.; Schwartz, A. Sexual behaviour, STDs and risks for prostate cancer. Br. J. Cancer 2000, 82, 718–725.

- Plym, A.; Zhang, Y.; Stopsack, K.H.; Delcoigne, B.; Wiklund, F.; Haiman, C.; Kenfield, S.A.; Kibel, A.S.; Giovannucci, E.; Penney, K.L. A healthy lifestyle in men at increased genetic risk for prostate cancer. Eur. Urol. 2023, 83, 343–351.

- Alkadi, R.; Taher, F.; El-Baz, A.; Werghi, N. A deep learning-based approach for the detection and localization of prostate cancer in T2 magnetic resonance images. J. Digit. Imaging 2019, 32, 793–807.

- Ishioka, J.; Matsuoka, Y.; Uehara, S.; Yasuda, Y.; Kijima, T.; Yoshida, S.; Yokoyama, M.; Saito, K.; Kihara, K.; Numao, N. Computer-aided diagnosis of prostate cancer on magnetic resonance imaging using a convolutional neural network algorithm. BJU Int. 2018, 122, 411–417.

- Reda, I.; Shalaby, A.; Abou El-Ghar, M.; Khalifa, F.; Elmogy, M.; Aboulfotouh, A.; Hosseini-Asl, E.; El-Baz, A.; Keynton, R. A new NMF-autoencoder based CAD system for early diagnosis of prostate cancer. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016.

- Wildeboer, R.R.; van Sloun, R.J.; Wijkstra, H.; Mischi, M. Artificial intelligence in multiparametric prostate cancer imaging with focus on deep-learning methods. Comput. Methods Programs Biomed. 2020, 189, 105316.

- Aribisala, B.; Olabanjo, O. Medical image processor and repository–mipar. Inform. Med. Unlocked 2018, 12, 75–80.

- Shen, D.; Wu, G.; Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Liu, Y.; An, X. A classification model for the prostate cancer based on deep learning. In Proceedings of the 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 13–16 April 2016.

- Wang, X.; Yang, W.; Weinreb, J.; Han, J.; Li, Q.; Kong, X.; Yan, Y.; Ke, Z.; Luo, B.; Liu, T. Searching for prostate cancer by fully automated magnetic resonance imaging classification: Deep learning versus non-deep learning. Sci. Rep. 2017, 7, 15415.

- Hricak, H.; Choyke, P.L.; Eberhardt, S.C.; Leibel, S.A.; Scardino, P.T. Imaging prostate cancer: A multidisciplinary perspective. Radiology 2007, 243, 28–53.

- Kyle, K.Y.; Hricak, H. Imaging prostate cancer. Radiol. Clin. North Am. 2000, 38, 59–85.

- Cornud, F.; Brolis, L.; Delongchamps, N.B.; Portalez, D.; Malavaud, B.; Renard-Penna, R.; Mozer, P. TRUS–MRI image registration: A paradigm shift in the diagnosis of significant prostate cancer. Abdom. Imaging 2013, 38, 1447–1463.

- Reynier, C.; Troccaz, J.; Fourneret, P.; Dusserre, A.; Gay-Jeune, C.; Descotes, J.L.; Bolla, M.; Giraud, J.Y. MRI/TRUS data fusion for prostate brachytherapy. Preliminary results. Med. Phys. 2004, 31, 1568–1575.

- Rasch, C.; Barillot, I.; Remeijer, P.; Touw, A.; van Herk, M.; Lebesque, J.V. Definition of the prostate in CT and MRI: A multi-observer study. Int. J. Radiat. Oncol. 1999, 43, 57–66.

- Pezaro, C.; Woo, H.H.; Davis, I.D. Prostate cancer: Measuring PSA. Intern. Med. J. 2014, 44, 433–440.

- Takahashi, N.; Inoue, T.; Lee, J.; Yamaguchi, T.; Shizukuishi, K. The roles of PET and PET/CT in the diagnosis and management of prostate cancer. Oncology 2008, 72, 226–233.

- Sturge, J.; Caley, M.P.; Waxman, J. Bone metastasis in prostate cancer: Emerging therapeutic strategies. Nat. Rev. Clin. Oncol. 2011, 8, 357.

- Raja, J.; Ramachandran, N.; Munneke, G.; Patel, U. Current status of transrectal ultrasound-guided prostate biopsy in the diagnosis of prostate cancer. Clin. Radiol. 2006, 61, 142–153.

- Bai, H.; Xia, W.; Ji, X.; He, D.; Zhao, X.; Bao, J.; Zhou, J.; Wei, X.; Huang, Y.; Li, Q. Multiparametric magnetic resonance imaging-based peritumoral radiomics for preoperative prediction of the presence of extracapsular extension with prostate cancer. J. Magn. Reson. Imaging 2021, 54, 1222–1230.

- Jansen, B.H.; Nieuwenhuijzen, J.A.; Oprea-Lager, D.E.; Yska, M.J.; Lont, A.P.; van Moorselaar, R.J.; Vis, A.N. Adding multiparametric MRI to the MSKCC and Partin nomograms for primary prostate cancer: Improving local tumor staging? In Urologic Oncology: Seminars and Original Investigations; Elsevier: Amsterdam, The Netherlands, 2019.

- Maurer, T.; Eiber, M.; Schwaiger, M.; Gschwend, J.E. Current use of PSMA–PET in prostate cancer management. Nat. Rev. Urol. 2016, 13, 226–235.

- Stavrinides, V.; Papageorgiou, G.; Danks, D.; Giganti, F.; Pashayan, N.; Trock, B.; Freeman, A.; Hu, Y.; Whitaker, H.; Allen, C. Mapping PSA density to outcome of MRI-based active surveillance for prostate cancer through joint longitudinal-survival models. Prostate Cancer Prostatic Dis. 2021, 24, 1028–1031.

- Fuchsjäger, M.; Shukla-Dave, A.; Akin, O.; Barentsz, J.; Hricak, H. Prostate cancer imaging. Acta Radiol. 2008, 49, 107–120.

- Ghafoor, S.; Burger, I.A.; Vargas, A.H. Multimodality imaging of prostate cancer. J. Nucl. Med. 2019, 60, 1350–1358.

- Rohrmann, S.; Roberts, W.W.; Walsh, P.C.; Platz, E.A. Family history of prostate cancer and obesity in relation to high-grade disease and extraprostatic extension in young men with prostate cancer. Prostate 2003, 55, 140–146.

- Porter, M.P.; Stanford, J.L. Obesity and the risk of prostate cancer. Prostate 2005, 62, 316–321.

- Gann, P.H. Risk factors for prostate cancer. Rev. Urol. 2002, 4 (Suppl. 5), S3.

- Tian, W.; Osawa, M. Prevalent latent adenocarcinoma of the prostate in forensic autopsies. J. Clin. Pathol. Forensic Med. 2015, 6, 11–13.

- Marley, A.R.; Nan, H. Epidemiology of colorectal cancer. Int. J. Mol. Epidemiol. Genet. 2016, 7, 105.

- Kumagai, H.; Zempo-Miyaki, A.; Yoshikawa, T.; Tsujimoto, T.; Tanaka, K.; Maeda, S. Lifestyle modification increases serum testosterone level and decrease central blood pressure in overweight and obese men. Endocr. J. 2015, 62, 423–430.

- Moyad, M.A. Is obesity a risk factor for prostate cancer, and does it even matter? A hypothesis and different perspective. Urology 2002, 59, 41–50.

- Parikesit, D.; Mochtar, C.A.; Umbas, R.; Hamid, A.R.A.H. The impact of obesity towards prostate diseases. Prostate Int. 2016, 4, 1–6.

- Tse, L.A.; Lee, P.M.Y.; Ho, W.M.; Lam, A.T.; Lee, M.K.; Ng, S.S.M.; He, Y.; Leung, K.-S.; Hartle, J.C.; Hu, H. Bisphenol A and other environmental risk factors for prostate cancer in Hong Kong. Environ. Int. 2017, 107, 1–7.

- Vaidyanathan, V.; Naidu, V.; Kao, C.H.-J.; Karunasinghe, N.; Bishop, K.S.; Wang, A.; Pallati, R.; Shepherd, P.; Masters, J.; Zhu, S. Environmental factors and risk of aggressive prostate cancer among a population of New Zealand men–a genotypic approach. Mol. BioSystems 2017, 13, 681–698.

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3523–3542.

- Zhang, X.; Li, H.; Wang, C.; Cheng, W.; Zhu, Y.; Li, D.; Jing, H.; Li, S.; Hou, J.; Li, J. Evaluating the accuracy of breast cancer and molecular subtype diagnosis by ultrasound image deep learning model. Front. Oncol. 2021, 11, 623506.

- Tammina, S. Transfer learning using vgg-16 with deep convolutional neural network for classifying images. Int. J. Sci. Res. Publ. (IJSRP) 2019, 9, 143–150.

- Abbas, A.; Abdelsamea, M.M.; Gaber, M.M. Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Appl. Intell. 2021, 51, 854–864.

- Christlein, V.; Spranger, L.; Seuret, M.; Nicolaou, A.; Král, P.; Maier, A. Deep generalized max pooling. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019.

- Sharma, S.; Sharma, S.; Athaiya, A. Activation functions in neural networks. Towards Data Sci. 2017, 6, 310–316.

- Sibi, P.; Jones, S.A.; Siddarth, P. Analysis of different activation functions using back propagation neural networks. J. Theor. Appl. Inf. Technol. 2013, 47, 1264–1268.

- Fu, J.; Zheng, H.; Mei, T. Look closer to see better: Recurrent attention convolutional neural network for fine-grained image recognition. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Yin, W.; Schütze, H.; Xiang, B.; Zhou, B. Abcnn: Attention-based convolutional neural network for modeling sentence pairs. Trans. Assoc. Comput. Linguist. 2016, 4, 259–272.

- Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision transformers for dense prediction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

- Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- Ikromjanov, K.; Bhattacharjee, S.; Hwang, Y.-B.; Sumon, R.I.; Kim, H.-C.; Choi, H.-K. Whole slide image analysis and detection of prostate cancer using vision transformers. In Proceedings of the 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Republic of Korea, 21–24 February 2022.

- Singla, D.; Cimen, F.; Narasimhulu, C.A. Novel artificial intelligent transformer U-NET for better identification and management of prostate cancer. Mol. Cell. Biochem. 2023, 478, 1439–1445.

- Pachetti, E.; Colantonio, S. 3D-Vision-Transformer Stacking Ensemble for Assessing Prostate Cancer Aggressiveness from T2w Images. Bioengineering 2023, 10, 1015.

- Pachetti, E.; Colantonio, S.; Pascali, M.A. On the effectiveness of 3D vision transformers for the prediction of prostate cancer aggressiveness. In Image Analysis and Processing; Springer: Cham, Switzerland, 2022.

- Li, C.; Deng, M.; Zhong, X.; Ren, J.; Chen, X.; Chen, J.; Xiao, F.; Xu, H. Multi-view radiomics and deep learning modeling for prostate cancer detection based on multi-parametric MRI. Front. Oncol. 2023, 13, 1198899.
