
Huang, Q.; Xie, W.; Li, C.; Wang, Y.; Liu, Y. Sensor-Based Human Action Recognition. Encyclopedia. Available online: https://encyclopedia.pub/entry/49695 (accessed on 07 February 2026).


Sensor-based Human Action Recognition (HAR) is a fundamental component in human–robot interaction and pervasive computing. It recognizes human actions by acquiring sequence data from embedded sensors of multiple modalities (accelerometers, magnetometers, gyroscopes, etc.) worn at different body locations, and then processing and analyzing these data.

human action recognition

machine learning

HAR

Sensor

1. Introduction

Human Action Recognition (HAR) is attracting growing attention and is widely used in human–robot interaction, elderly care, healthcare, and sports [1][2][3]. It also plays an important role in biometrics, entertainment, and intelligent assisted living. Examples include fall detection for elderly people living at home, rehabilitation exercise training for patients, and movement assessment for athletes [4][5]. HAR can be performed on both visual and non-visual modalities [6][7][8]. Visual modalities mainly include RGB video, depth, skeleton, and point cloud data; non-visual modalities mainly include signals from wearable sensors, radar, magnetic fields, and Wi-Fi [9]. These modalities encode different sources of information, and each has its own advantages and characteristics in different application scenarios.

Visual-modality approaches extract features from video streams captured by cameras. Although they capture the appearance of human actions directly, their performance is affected by the viewing angle, occlusion, and background and illumination conditions, and they may raise privacy concerns. In contrast, non-visual approaches, which acquire sensor data of human actions through wearable devices, raise fewer privacy concerns, produce relatively small amounts of data, are not affected by occlusion, and adapt well to different environments. Processing and analyzing sensor data is therefore a promising route to accurate HAR.

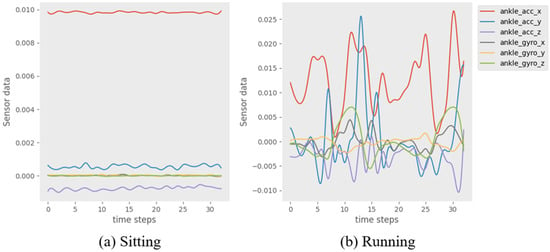

Sensor-based HAR is a fundamental component in human–robot interaction and pervasive computing [10]. It recognizes actions by acquiring and analyzing sequence data from embedded sensors (accelerometers, magnetometers, gyroscopes, etc.) of multiple modalities worn at different body locations. The data collected by the sensors in a HAR system generally form a time series. After noise reduction and normalization, the sequence is segmented into windows by a sliding window with a fixed window size and overlap rate, and the HAR method then classifies each window as an action. Figure 1 shows example windows from the PAMAP2 dataset. Everyday human actions include not only simple actions but also complex actions composed of multiple fine-grained phases. For example, running includes phases such as starting, accelerating, maintaining speed, sprinting, and decelerating.

Figure 1. Example windows of the “Sitting” (a) and “Running” (b) actions from the PAMAP2 dataset, timestep = 1 s.
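The preprocessing pipeline described above (normalization, then fixed-size windows with a fixed overlap rate) can be sketched in Python with NumPy. This is a minimal illustration, not the authors' implementation: the function names, the per-channel z-score normalization, and the choice to drop trailing samples that cannot fill a window are assumptions, and noise reduction is omitted.

```python
import numpy as np


def zscore_normalize(signal: np.ndarray) -> np.ndarray:
    """Scale each sensor channel (column) of a (T, C) array to zero mean, unit variance."""
    mean = signal.mean(axis=0)
    std = signal.std(axis=0)
    std[std == 0] = 1.0  # leave constant channels centered instead of dividing by zero
    return (signal - mean) / std


def sliding_windows(signal: np.ndarray, window_size: int, overlap: float) -> np.ndarray:
    """Cut a (T, C) sequence into windows of shape (N, window_size, C).

    `overlap` is the fraction of samples shared by consecutive windows
    (0.5 means 50%). Trailing samples that cannot fill a full window are dropped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step = max(1, int(round(window_size * (1.0 - overlap))))
    starts = range(0, signal.shape[0] - window_size + 1, step)
    if len(starts) == 0:
        # Sequence shorter than one window: return an empty batch.
        return np.empty((0, window_size, signal.shape[1]), dtype=signal.dtype)
    return np.stack([signal[s:s + window_size] for s in starts])


# Hypothetical recording: 10 s of 9-channel IMU data, assuming a 100 Hz sampling rate.
recording = np.random.default_rng(0).normal(size=(1000, 9))
windows = sliding_windows(zscore_normalize(recording), window_size=100, overlap=0.5)
print(windows.shape)  # (19, 100, 9): 1 s windows advancing by 0.5 s
```

Each of the 19 windows would then receive one action label from the classifier. A larger overlap yields more training windows, at the cost of those windows being more strongly correlated with each other.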

Traditional machine learning methods [11][12] rely heavily on hand-crafted features and expert knowledge [13] and capture only shallow features, which makes accurate HAR difficult. Recently, deep learning methods have produced promising results in HAR [14]. They learn feature representations for the classification task without requiring domain-specific knowledge, which yields more accurate recognition. Many researchers have therefore applied convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to HAR, learning feature representations automatically and removing the need for hand-crafted features [15][16][17]. However, because action recognition is a time-series classification problem, CNNs may have difficulty capturing information along the time dimension. Long Short-Term Memory (LSTM) networks can effectively capture the temporal context and long-term dependencies of sequence data, and several works have applied LSTMs to HAR successfully [18][19][20].

In addition, because CNNs extract local spatial features and LSTMs capture temporal context, hybrid models can effectively capture spatio-temporal motion patterns in sensor signals, and recent work combining CNNs and RNNs has shown promising results [21][22][23][24]. However, an LSTM compresses all of its input into its hidden state, so noise introduced during sensor data acquisition is incorporated into the extracted features and degrades recognition. Several works address this problem by introducing an attention mechanism [25][26][27][28][29], which lets the model focus on the parts of the input most relevant to the current recognition and thereby improves accuracy. Other work jointly optimizes action recognition and window segmentation through multi-task learning [30].
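To make the attention idea concrete, here is a minimal NumPy sketch of attention pooling over the hidden states of a recurrent layer: each time step receives a relevance score, the scores are normalized with a softmax, and the weighted sum of hidden states becomes the feature vector passed to the classifier. The scoring vector `w` is a hypothetical stand-in for learned parameters, and the plain dot-product score is one simple choice among several; it is not necessarily the formulation used in the cited works.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


def attention_pool(hidden: np.ndarray, w: np.ndarray):
    """Collapse hidden states of shape (T, H) into one context vector of shape (H,).

    `w` (shape (H,)) is a hypothetical learned scoring vector.
    Returns the context vector and the per-step attention weights.
    """
    scores = hidden @ w          # (T,): one relevance score per time step
    weights = softmax(scores)    # (T,): non-negative, sums to 1
    context = weights @ hidden   # (H,): weighted average of the hidden states
    return context, weights


# Three time steps of 2-D hidden states; the middle step scores low under w.
hidden = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
context, weights = attention_pool(hidden, np.array([10.0, 0.0]))
# weights is close to [0.5, 0.0, 0.5]: the middle step is nearly ignored
```

Because the weights are recomputed for every input window, the model can down-weight time steps that look irrelevant or noisy for that particular window rather than treating all steps equally.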

2. Sensor-Based Human Action Recognition

Research on sensor-based HAR falls into two categories: machine learning methods and deep learning methods. Early work relied mainly on traditional machine learning methods such as Random Forest (RF), Support Vector Machine (SVM), and Hidden Markov Model (HMM). Gomes et al. [31] compared the performance of three classifiers: SVM, RF, and k-nearest neighbors (KNN). Van Kasteren et al. [32] proposed a sensor-based system for automatic action recognition and data annotation, and demonstrated the performance of an HMM in recognizing actions. Tran et al. [33] built an SVM-based HAR system that recognized six human actions from 248 extracted features. However, traditional machine learning methods rely heavily on hand-crafted features such as the mean, maximum, variance, and fast Fourier transform (FFT) coefficients [34]. Because designing such features depends on human experience and expert knowledge, and the features capture only shallow patterns, accuracy is limited.
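For contrast with learned features, the hand-crafted features listed above can be computed per window in a few lines of NumPy. The particular set below (mean, maximum, variance, and the magnitudes of the first few FFT coefficients, skipping the DC term) is an illustrative assumption; practical feature sets, such as the 248 features used by Tran et al. [33], are larger and chosen with expert knowledge.

```python
import numpy as np


def handcrafted_features(window: np.ndarray, n_fft: int = 3) -> np.ndarray:
    """Flat feature vector for one (T, C) sensor window.

    Per channel: mean, maximum, variance, and the magnitudes of FFT
    coefficients 1..n_fft. Coefficient 0 (DC) is skipped because its
    magnitude is just T times the absolute mean. Output length is C * (3 + n_fft).
    """
    mean = window.mean(axis=0)
    maximum = window.max(axis=0)
    variance = window.var(axis=0)
    spectrum = np.abs(np.fft.rfft(window, axis=0))  # (T // 2 + 1, C)
    fft_coeffs = spectrum[1:1 + n_fft]              # (n_fft, C)
    return np.concatenate([mean, maximum, variance, fft_coeffs.ravel()])


# A 100-sample window containing a pure 5-cycle sine on a single channel.
t = np.arange(100)
window = np.sin(2 * np.pi * 5 * t / 100)[:, None]
features = handcrafted_features(window, n_fft=6)
# mean ~ 0, max ~ 1, variance ~ 0.5, and the 5th FFT magnitude (index 7) ~ 50
print(np.round(features, 3))
```

A classical classifier such as an SVM or RF would then be trained on one such vector per window. Every feature here was fixed by hand, which is exactly the dependence on expert design that the paragraph above identifies as a limitation.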

Unlike traditional machine learning methods, deep learning learns feature representations for the classification task without requiring domain-specific knowledge, so HAR can be achieved without extracting hand-crafted features. Yang et al. [15] showed that CNNs can effectively capture salient features in the spatial dimension and outperform traditional machine learning methods. Jiang et al. [35] proposed a CNN model that arranges raw sensor signals into signal images as model inputs and learns low-level to high-level features from these action images to achieve effective HAR.
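The core operation a CNN applies to a sensor window can be sketched as a 1-D convolution along the time axis, with the same kernels reused at every position, followed by a ReLU. This NumPy version is an illustrative assumption: it uses a plain temporal convolution on the (T, C) window rather than the signal-image arrangement of Jiang et al. [35], and, like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped). The random kernels stand in for learned weights.

```python
import numpy as np


def conv1d_relu(x: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """'Valid' 1-D convolution over time followed by ReLU.

    x:       (T, C_in)          one sensor window
    kernels: (K, C_in, C_out)   K time steps wide, mixing all input channels
    bias:    (C_out,)
    returns: (T - K + 1, C_out) feature maps
    """
    K, _, c_out = kernels.shape
    T = x.shape[0]
    if T < K:
        raise ValueError("window shorter than kernel")
    out = np.empty((T - K + 1, c_out))
    for t in range(T - K + 1):
        patch = x[t:t + K]  # (K, C_in) local slice of the signal
        out[t] = np.tensordot(patch, kernels, axes=([0, 1], [0, 1])) + bias
    return np.maximum(out, 0.0)  # ReLU


rng = np.random.default_rng(0)
window = rng.normal(size=(100, 9))                # one normalized 100-sample, 9-channel window
kernels = rng.normal(scale=0.1, size=(5, 9, 16))  # hypothetical: 16 filters, 5 samples wide
feature_maps = conv1d_relu(window, kernels, np.zeros(16))
print(feature_maps.shape)  # (96, 16)
```

Each filter only sees 5 consecutive samples, which is why stacked convolutions capture local patterns well but need many layers (or a recurrent layer on top) to relate events far apart in time.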

Meanwhile, because action recognition is a time-series classification problem, CNNs may have difficulty capturing information along the time dimension. In contrast, Hammerla et al. [18] and Dua et al. [19] used recurrent networks for HAR, which can effectively capture the context and long-term dependencies along the temporal dimension of sensor sequences. Ullah et al. [36] proposed a stacked LSTM network that recognizes six types of human actions from smartphone data with 93.13% accuracy. Mohsen [37] used a gated recurrent unit (GRU) network to classify human actions, achieving 97% accuracy on the WISDM dataset. Gaur and Kumar Dubey [38] achieved high accuracy in classifying repetitive and non-repetitive actions over time using LSTM–RNN networks. Although these methods recognize simple actions (e.g., cycling, walking) well, complex actions (e.g., going up/down stairs, opening/closing a door) remain challenging, because a single CNN or RNN has difficulty capturing the spatio-temporal correlations of sensor signals.
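How an LSTM carries temporal context through a window can be seen in a single-layer forward pass. The NumPy sketch below follows the standard LSTM cell equations; the gate ordering [input, forget, candidate, output] and the parameter shapes are conventions chosen here, and the random weights are hypothetical stand-ins for trained ones. A GRU, as used by Mohsen [37], follows the same step-by-step pattern but with two gates and no separate cell state.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(x: np.ndarray, W: np.ndarray, U: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Run a single-layer LSTM over a (T, D) sequence; return all hidden states (T, H).

    W: (D, 4H) input weights, U: (H, 4H) recurrent weights, b: (4H,) bias,
    with gate blocks ordered [input, forget, candidate, output].
    """
    T, H = x.shape[0], U.shape[0]
    h = np.zeros(H)  # hidden state (short-term output)
    c = np.zeros(H)  # cell state (long-term memory)
    hs = np.empty((T, H))
    for t in range(T):
        z = x[t] @ W + h @ U + b
        i = sigmoid(z[:H])           # how much new information to write
        f = sigmoid(z[H:2 * H])      # how much old memory to keep
        g = np.tanh(z[2 * H:3 * H])  # candidate values to write
        o = sigmoid(z[3 * H:])       # how much memory to expose as output
        c = f * c + i * g
        h = o * np.tanh(c)
        hs[t] = h
    return hs


rng = np.random.default_rng(0)
D, H = 9, 32
window = rng.normal(size=(100, D))  # one normalized 100-sample, 9-channel window
hs = lstm_forward(window,
                  rng.normal(scale=0.1, size=(D, 4 * H)),
                  rng.normal(scale=0.1, size=(H, 4 * H)),
                  np.zeros(4 * H))
last_hidden = hs[-1]  # (32,): the summary a basic LSTM classifier typically feeds to its output layer
```

The forget gate is what lets information from early in the window survive to the last step; a plain feed-forward or convolutional layer has no such state.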

Recently, much HAR research has focused on hybrid models that combine CNNs and RNNs. Ordóñez and Roggen [21] combined a CNN with an LSTM and achieved significant results in capturing spatio-temporal features from sensor signals. Yao et al. [22] built separate CNNs for the different types of sensor input, merged their outputs into global feature information, and then extracted temporal relationships with an RNN to achieve HAR. Nafea et al. [39] used CNNs with varying kernel sizes together with a BiLSTM to capture features at different resolutions, effectively extracting spatio-temporal features from sensor data with high accuracy.
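The multi-resolution idea behind using several kernel sizes can be sketched as parallel convolution branches whose outputs are concatenated along the channel axis, producing a single sequence that a recurrent layer (for example a BiLSTM) would then read step by step. In this NumPy illustration the kernels are fixed moving averages standing in for learned filters, and "same" padding keeps every branch at the original length so the branches line up; both are simplifying assumptions, not the configuration used by Nafea et al. [39].

```python
import numpy as np


def multiscale_features(x: np.ndarray, kernel_sizes=(3, 5, 9)) -> np.ndarray:
    """Filter each channel of a (T, C) window at several temporal scales.

    Returns a (T, C * len(kernel_sizes)) sequence: one block of C channels per
    kernel size, concatenated along the channel axis.
    """
    if x.shape[0] < max(kernel_sizes):
        raise ValueError("window shorter than the largest kernel")
    branches = []
    for k in kernel_sizes:
        kernel = np.ones(k) / k  # fixed moving average standing in for learned weights
        # mode="same" keeps the output length equal to T (implicit zeros at the edges)
        smoothed = np.stack(
            [np.convolve(x[:, ch], kernel, mode="same") for ch in range(x.shape[1])],
            axis=1,
        )
        branches.append(smoothed)
    return np.concatenate(branches, axis=1)


window = np.random.default_rng(0).normal(size=(100, 9))
sequence = multiscale_features(window)
print(sequence.shape)  # (100, 27): 100 time steps, 9 channels x 3 scales
```

Short kernels preserve fast changes such as foot impacts, while long kernels emphasize slower trends such as posture; feeding all scales to the recurrent layer lets it weigh both when modeling temporal dependencies.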

In addition, some works address the problem that LSTMs may compress the noise in sensor data into the network. They introduce attention mechanisms to avoid incorporating noisy and irrelevant parts when extracting features, thus improving the effectiveness of HAR. Murahari and Plötz [27] added an attention layer to the DeepConvLSTM architecture proposed by Ordóñez and Roggen [21]; the layer learns weights over the hidden-state outputs of the LSTM layer and combines them into a context vector, instead of directly using only the last hidden state. Ma et al. [25] proposed an architecture based on attention-enhanced CNNs and GRUs, which uses attention to weight the contributions of the sensor modalities and to capture the temporal correlations and temporal context of specific sensor signal features. In contrast, Mahmud et al. [26] discarded the recurrent structure entirely and adapted the transformer architecture [40], originally proposed for machine translation, into a self-attention-based model that generates feature representations for classification, better recognizing human actions. Zhang et al. [41] proposed ConvTransformer, a hybrid model for HAR that extracts both local and global information from sensor signals and uses attention to strengthen its feature representation. Xiao et al. [42] proposed a two-stream transformer network that extracts sensor features along temporal and spatial channels, effectively modeling the spatio-temporal dependencies of sensor signals.
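The self-attention operation at the heart of the transformer [40] can be written compactly. The single-head NumPy sketch below computes softmax(Q K^T / sqrt(d_k)) V over the time steps of one sensor window, so every output step is a weighted mix of all input steps; this is how a transformer relates distant parts of an action without recurrence. Multi-head attention, positional encodings, and the feed-forward layers of a full transformer are omitted, and the projection matrices are hypothetical random stand-ins for learned weights.

```python
import numpy as np


def self_attention(x: np.ndarray, Wq: np.ndarray, Wk: np.ndarray, Wv: np.ndarray):
    """Single-head scaled dot-product self-attention over a (T, D) sequence.

    Returns the (T, d_v) outputs and the (T, T) attention weights, where
    row t says how strongly step t attends to every step in the window.
    """
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    scores = q @ k.T / np.sqrt(k.shape[1])         # (T, T) pairwise similarities
    scores -= scores.max(axis=1, keepdims=True)    # stabilize the exponentials
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # row-wise softmax
    return weights @ v, weights


rng = np.random.default_rng(0)
T, D, d = 100, 9, 16
window = rng.normal(size=(T, D))  # one normalized 100-sample, 9-channel window
Wq, Wk, Wv = (rng.normal(scale=0.1, size=(D, d)) for _ in range(3))
out, attn = self_attention(window, Wq, Wk, Wv)
print(out.shape, attn.shape)  # (100, 16) (100, 100)
```

Unlike the attention pooling over LSTM outputs used by Murahari and Plötz [27], which produces one context vector per window, self-attention produces a new representation for every time step, so several such layers can be stacked before the final classifier.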

References

1. Anagnostis, A.; Benos, L.; Tsaopoulos, D.; Tagarakis, A.; Tsolakis, N.; Bochtis, D. Human activity recognition through recurrent neural networks for human–robot interaction in agriculture. Appl. Sci. 2021, 11, 2188.

2. Asghari, P.; Soleimani, E.; Nazerfard, E. Online human activity recognition employing hierarchical hidden Markov models. J. Ambient Intell. Humaniz. Comput. 2020, 11, 1141–1152.

3. Ramos, R.G.; Domingo, J.D.; Zalama, E.; Gómez-García-Bermejo, J.; López, J. SDHAR-HOME: A sensor dataset for human activity recognition at home. Sensors 2022, 22, 8109.

4. Khan, W.Z.; Xiang, Y.; Aalsalem, M.Y.; Arshad, Q. Mobile phone sensing systems: A survey. IEEE Commun. Surv. Tutor. 2012, 15, 402–427.

5. Taylor, K.; Abdulla, U.A.; Helmer, R.J.; Lee, J.; Blanchonette, I. Activity classification with smart phones for sports activities. Procedia Eng. 2011, 13, 428–433.

6. Zhang, S.; Wei, Z.; Nie, J.; Huang, L.; Wang, S.; Li, Z. A review on human activity recognition using vision-based method. J. Healthc. Eng. 2017, 2017, 3090343.

7. Dang, L.M.; Min, K.; Wang, H.; Piran, M.J.; Lee, C.H.; Moon, H. Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognit. 2020, 108, 107561.

8. Abdel-Salam, R.; Mostafa, R.; Hadhood, M. Human activity recognition using wearable sensors: Review, challenges, evaluation benchmark. In Proceedings of the International Workshop on Deep Learning for Human Activity Recognition, Montreal, QC, Canada, 21–26 August 2021; pp. 1–15.

9. Sun, Z.; Ke, Q.; Rahmani, H.; Bennamoun, M.; Wang, G.; Liu, J. Human action recognition from various data modalities: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3200–3225.

10. Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. (CSUR) 2014, 46, 1–33.

11. Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Proceedings of the International Conference on Pervasive Computing, Nottingham, UK, 7–10 September 2004; pp. 1–17.

12. Plötz, T.; Hammerla, N.Y.; Olivier, P.L. Feature learning for activity recognition in ubiquitous computing. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011.

13. Bengio, Y. Deep learning of representations: Looking forward. In Proceedings of the Statistical Language and Speech Processing, Tarragona, Spain, 29–31 July 2013; pp. 1–37.

14. Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.

15. Yang, J.; Nguyen, M.N.; San, P.P.; Li, X.; Krishnaswamy, S. Deep convolutional neural networks on multichannel time series for human activity recognition. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; pp. 3995–4001.

16. Ha, S.; Yun, J.-M.; Choi, S. Multi-modal convolutional neural networks for activity recognition. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 3017–3022.

17. Guan, Y.; Plötz, T. Ensembles of deep LSTM learners for activity recognition using wearables. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–28.

18. Hammerla, N.Y.; Halloran, S.; Plötz, T. Deep, convolutional, and recurrent models for human activity recognition using wearables. arXiv 2016, arXiv:1604.08880.

19. Dua, N.; Singh, S.N.; Semwal, V.B. Multi-input CNN-GRU based human activity recognition using wearable sensors. Computing 2021, 103, 1461–1478.

20. Zhao, Y.; Yang, R.; Chevalier, G.; Xu, X.; Zhang, Z. Deep residual bidir-LSTM for human activity recognition using wearable sensors. Math. Probl. Eng. 2018, 2018, 7316954.

21. Ordóñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.

22. Yao, S.; Hu, S.; Zhao, Y.; Zhang, A.; Abdelzaher, T. DeepSense: A unified deep learning framework for time-series mobile sensing data processing. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 351–360.

23. Nan, Y.; Lovell, N.H.; Redmond, S.J.; Wang, K.; Delbaere, K.; van Schooten, K.S. Deep learning for activity recognition in older people using a pocket-worn smartphone. Sensors 2020, 20, 7195.

24. Radu, V.; Tong, C.; Bhattacharya, S.; Lane, N.D.; Mascolo, C.; Marina, M.K.; Kawsar, F. Multimodal deep learning for activity and context recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–27.

25. Ma, H.; Li, W.; Zhang, X.; Gao, S.; Lu, S. AttnSense: Multi-level attention mechanism for multimodal human activity recognition. In Proceedings of the International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 3109–3115.

26. Mahmud, S.; Tonmoy, M.; Bhaumik, K.K.; Rahman, A.M.; Amin, M.A.; Shoyaib, M.; Khan, M.A.H.; Ali, A.A. Human activity recognition from wearable sensor data using self-attention. arXiv 2020, arXiv:2003.09018.

27. Murahari, V.S.; Plötz, T. On attention models for human activity recognition. In Proceedings of the 2018 ACM International Symposium on Wearable Computers, Singapore, 8–12 October 2018; pp. 100–103.

28. Haque, M.N.; Tonmoy, M.T.H.; Mahmud, S.; Ali, A.A.; Khan, M.A.H.; Shoyaib, M. GRU-based attention mechanism for human activity recognition. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–6.

29. Al-qaness, M.A.; Dahou, A.; Abd Elaziz, M.; Helmi, A. Multi-ResAtt: Multilevel residual network with attention for human activity recognition using wearable sensors. IEEE Trans. Ind. Inform. 2022, 19, 144–152.

30. Duan, F.; Zhu, T.; Wang, J.; Chen, L.; Ning, H.; Wan, Y. A multi-task deep learning approach for sensor-based human activity recognition and segmentation. IEEE Trans. Instrum. Meas. 2023, 72, 2514012.

31. Gomes, E.; Bertini, L.; Campos, W.R.; Sobral, A.P.; Mocaiber, I.; Copetti, A. Machine learning algorithms for activity-intensity recognition using accelerometer data. Sensors 2021, 21, 1214.

32. Van Kasteren, T.; Noulas, A.; Englebienne, G.; Kröse, B. Accurate activity recognition in a home setting. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Republic of Korea, 21–24 September 2008; pp. 1–9.

33. Tran, D.N.; Phan, D.D. Human activities recognition in Android smartphone using support vector machine. In Proceedings of the 2016 7th International Conference on Intelligent Systems, Modelling and Simulation (ISMS), Bangkok, Thailand, 25–27 January 2016; pp. 64–68.

34. Figo, D.; Diniz, P.C.; Ferreira, D.R.; Cardoso, J.M. Preprocessing techniques for context recognition from accelerometer data. Pers. Ubiquitous Comput. 2010, 14, 645–662.

35. Jiang, W.; Yin, Z. Human activity recognition using wearable sensors by deep convolutional neural networks. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 1307–1310.

36. Ullah, M.; Ullah, H.; Khan, S.D.; Cheikh, F.A. Stacked LSTM network for human activity recognition using smartphone data. In Proceedings of the 8th European Workshop on Visual Information Processing (EUVIP), Roma, Italy, 28–31 October 2019; pp. 175–180.

37. Mohsen, S. Recognition of human activity using GRU deep learning algorithm. Multimed. Tools Appl. 2023, 1–17.

38. Gaur, D.; Kumar Dubey, S. Development of activity recognition model using LSTM-RNN deep learning algorithm. J. Inf. Organ. Sci. 2022, 46, 277–291.

39. Nafea, O.; Abdul, W.; Muhammad, G.; Alsulaiman, M. Sensor-based human activity recognition with spatio-temporal deep learning. Sensors 2021, 21, 2141.

40. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

41. Zhang, Z.; Wang, W.; An, A.; Qin, Y.; Yang, F. A human activity recognition method using wearable sensors based on ConvTransformer model. Evol. Syst. 2023, 1–17.

42. Xiao, S.; Wang, S.; Huang, Z.; Wang, Y.; Jiang, H. Two-stream transformer network for sensor-based human activity recognition. Neurocomputing 2022, 512, 253–268.


Update Date: 28 Sep 2023
