| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Georgios Fotis | -- | 1844 | 2023-09-26 06:55:38 |
| 2 | Rita Xu | Meta information modification | 1844 | 2023-09-26 07:19:38 |


Cite


Pavlatos, C.; Makris, E.; Fotis, G.; Vita, V.; Mladenov, V. Precise Electrical Load Prediction. Encyclopedia. Available online: https://encyclopedia.pub/entry/49621 (accessed on 07 February 2026).

Pavlatos C, Makris E, Fotis G, Vita V, Mladenov V. Precise Electrical Load Prediction. Encyclopedia. Available at: https://encyclopedia.pub/entry/49621. Accessed February 07, 2026.

Pavlatos, Christos, Evangelos Makris, Georgios Fotis, Vasiliki Vita, Valeri Mladenov. "Precise Electrical Load Prediction" Encyclopedia, https://encyclopedia.pub/entry/49621 (accessed February 07, 2026).

Pavlatos, C., Makris, E., Fotis, G., Vita, V., & Mladenov, V. (2023, September 26). Precise Electrical Load Prediction. In Encyclopedia. https://encyclopedia.pub/entry/49621

Pavlatos, Christos, et al. "Precise Electrical Load Prediction." Encyclopedia. Web. 26 September, 2023.


In the energy-planning sector, the precise prediction of electrical load is a critical matter for the functional operation of power systems and the efficient management of markets.

electrical load

recurrent neural network

short-term forecasting

1. Introduction

Electric load forecasting (ELF) is crucial for the operational planning of a power system and is attracting increasing academic interest [1]. Managing and planning the power system requires forecasts of demand factors such as the hourly load, the maximum (peak) load, and the total amount of energy. Forecasts are therefore made over different time horizons to meet the needs of each application, as indicated in Table 1. ELF is divided into three groups [2]:

Table 1. Applications of the forecasting processes based on time horizons.

| Time Horizon | Area of Application |
|---|---|
| 12 months–20 years | Planning of the power system |
| 1 week–12 months | Scheduling the maintenance of the power system elements |
| 1 min–1 week | Commitment analysis of the power units; Automatic Generation Control (AGC); Economic load dispatch (ELD) |
| ms–s | Power system dynamic analysis |
| ns–ms | Power system transient analysis |

- Long-term forecasting (LTF): 1–20 years. The LTF is crucial for the inclusion of new-generation units in the system and the development of the transmission system.

- Medium-term forecasting (MTF): 1 week–12 months. The MTF is most helpful for the setting of tariffs, the planning of the system maintenance, financial planning, and the scheduling of fuel supply.

- Short-term forecasting (STF): 1 h–1 week. The STF is necessary for the data supply to the generation units to schedule their start-up and shutdown time, to prepare the spinning reserves, and to conduct an in-depth analysis of the restrictions in the transmission system. STF is also crucial for the evaluation of power system security.
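The three horizon groups can be expressed as a small lookup, shown here as a minimal sketch. The function name `classify_horizon`, the use of hours as the unit, the 365-day year, and the way boundary values are assigned are all assumptions made for illustration; the source only gives the ranges themselves.

```python
from typing import Optional

HOURS_PER_WEEK = 7 * 24
HOURS_PER_YEAR = 365 * 24  # assumption: 12 months = one 365-day year


def classify_horizon(hours: float) -> Optional[str]:
    """Map a forecast horizon in hours to STF, MTF, or LTF.

    Boundary values go to the shorter-horizon group (an assumption).
    """
    if hours < 1 or hours > 20 * HOURS_PER_YEAR:
        return None  # outside the ranges listed in the text
    if hours <= HOURS_PER_WEEK:
        return "STF"  # 1 h to 1 week
    if hours <= HOURS_PER_YEAR:
        return "MTF"  # 1 week to 12 months
    return "LTF"      # 1 to 20 years
```

For example, a day-ahead forecast (`classify_horizon(24)`) falls into the STF group.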

The choice of forecasting approach depends on the horizon. While MTF and LTF rely on techniques such as trend analysis [3][4], end-use analysis [5], neural networks [6][7][8][9][10], and multiple linear regression [11], STF requires approaches based on regression [12], time-series analysis [13], artificial neural networks [14][15][16], expert systems [17], fuzzy logic [18][19], and support vector machines [20][21]. STF is important both for the Transmission System Operators (TSOs), which must maintain reliable system operation under extreme weather conditions [22][23], and for the Distribution System Operators (DSOs), because of the constant increase of microgrids affecting the total load [24][25] and the difficulty of matching variable renewable generation to demand with diminishing margins.

MTF and LTF require both expertise in data analysis and experience of how power systems and liberalized markets function, whereas STF relies more on data modeling (fitting data to models) than on in-depth knowledge of how a power system operates [26]. The day-ahead load forecast (STF) is a task that the operational planning department of every TSO must carry out every day of the year. The forecast must be as accurate as possible because it determines which units will participate in energy production the next day to cover the requested system load. Historical load patterns, the weather, air temperature, wind speed, calendar and seasonal data, economic events, and geographical data are only a few of the many variables that influence load forecasting.

As a result of the liberalization and increased competitiveness of contemporary power markets, the strategic actions of several entities, including power generation companies, retailers, and aggregators, also depend on precise load projections. Additionally, a robust forecasting model would give prosumers the best management of resources such as energy generation and storage.

The growing number of “active consumers” [27] and the penetration of renewable energy sources (RES) by 2029–2049 [28] will result in very different planning and operation challenges in this new scenario [29]. Toolboxes from the past will not function in the future the way they do now. For instance, it becomes increasingly difficult for the future energy mix to match load demands, while intriguing prospects are created for new players on both the supply and demand sides of a power system. The literature raises questions about how to handle the availability of energy outputs from RES and the adaptability of customers in light of the uncertainty and unpredictability associated with power consumption. An imperfect solution to this problem can be found in new and sophisticated forecasting techniques [30][31][32]. To assess and predict future electricity consumption, a deep learning (DL) forecasting model was developed, investigating electricity-load strategies in an attempt to prevent future electricity crises.

2. RNN for Variable Inputs/Outputs

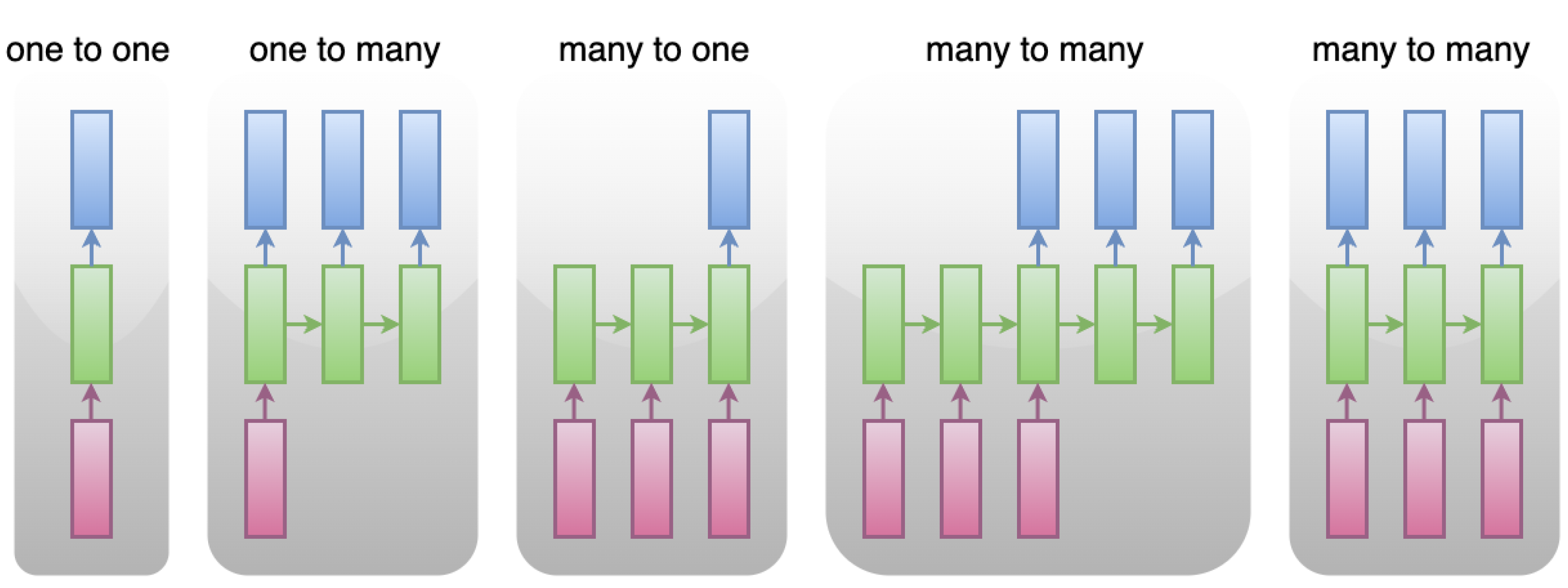

Handling inputs and outputs of varied sizes is challenging for standard neural networks. Some of the use cases that standard neural networks cannot handle are shown in Figure 1, where “many” does not stand for a fixed number for each input or output of the model.

Figure 1. RNN for Variable Inputs/Outputs.

The cases presented in Figure 1 may be summarized in the following three categories.

- One to Many, applied in fields of image captioning, text generation

- Many to One, applied in fields of sentiment analysis, text classification

- Many to Many, applied in fields of machine translation, voice recognition

An RNN, however, can deal with the above-mentioned cases because it includes a recursive processing unit (with single or multiple layers of cells) plus a hidden state extracted from past data.

The use of a recursive processing unit has advantages and disadvantages. The main advantage is that the network can handle inputs and outputs of varied sizes. The main disadvantages are the difficulty of retaining information from past data and the problem of vanishing or exploding gradients.
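The vanishing/exploding gradient problem can be illustrated numerically. The sketch below is a simplified illustration, not a full RNN: it assumes a purely linear recurrence h_t = W h_{t-1} with W equal to a scaled orthogonal matrix, so the gradient of the last state with respect to the first is simply W multiplied by itself once per time step. The function name `backprop_factor_norm` and all parameter values are hypothetical.

```python
import numpy as np


def backprop_factor_norm(scale: float, steps: int, size: int = 4, seed: int = 0) -> float:
    """Spectral norm of d h_T / d h_0 for the linear recurrence h_t = W h_{t-1}."""
    rng = np.random.default_rng(seed)
    # QR of a random matrix gives an orthogonal Q, so every singular
    # value of W = scale * Q equals `scale` (an illustrative choice).
    q, _ = np.linalg.qr(rng.standard_normal((size, size)))
    w = scale * q
    jac = np.eye(size)
    for _ in range(steps):
        jac = w @ jac  # one more step of the chain rule through time
    return float(np.linalg.norm(jac, 2))


shrinking = backprop_factor_norm(scale=0.9, steps=50)  # equals 0.9 ** 50
growing = backprop_factor_norm(scale=1.1, steps=50)    # equals 1.1 ** 50
```

Under these assumptions the norm is exactly `scale ** steps`, so a factor slightly below 1 drives the gradient toward zero over 50 steps, while a factor slightly above 1 makes it grow by two orders of magnitude.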

3. Vanilla RNN

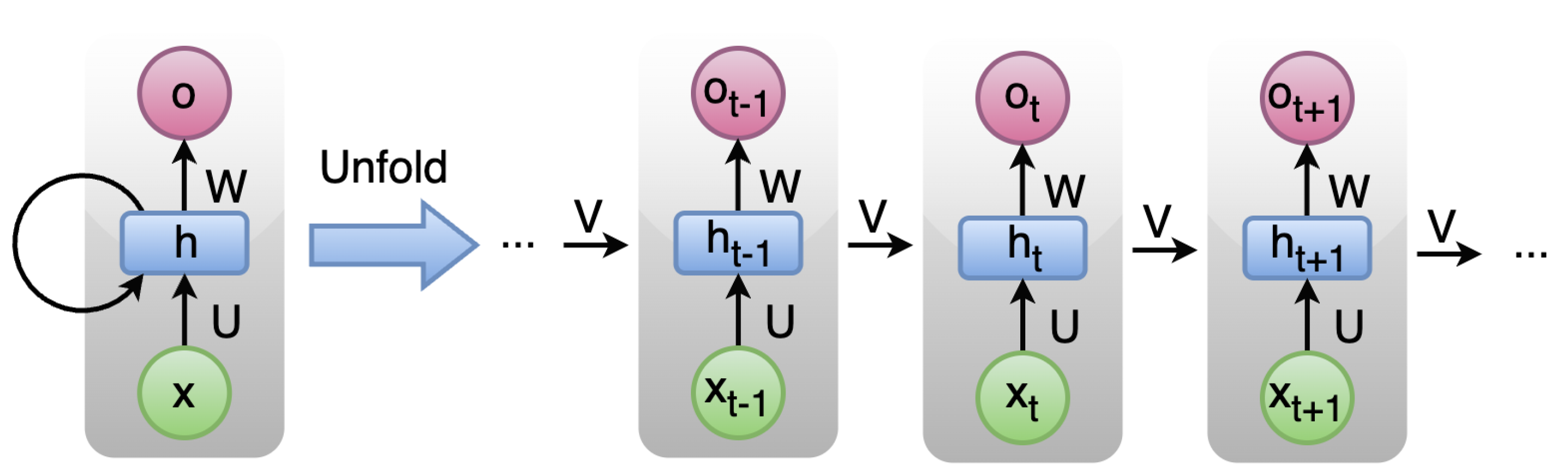

A vanilla RNN is a type of neural network that can process sequential data, such as time-series data or natural language text, by maintaining an internal state. In a vanilla RNN, the hidden state of the previous time step is fed back into the network as input for the current time step, along with the current input. This allows the network to capture information from previous inputs and use it to inform the processing of future inputs. The term “vanilla” is used to distinguish this type of RNN from more complex variants, such as LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units), which incorporate additional mechanisms to better handle long-term dependencies in sequential data. Despite their simplicity, vanilla RNNs can be effective for a range of tasks, such as language modeling, speech recognition, and sentiment analysis. However, they can struggle with long-term dependencies and suffer from vanishing or exploding gradients, which can make training difficult.

Figure 2 presents the main RNN architecture, which incorporates a self-loop (recursive pointer). Let x, o, and h denote the input, output, and hidden state, respectively, while U, W, and V are the corresponding parameters. In this architecture, an RNN cell (in green) may include one or more layers of normal neurons or other types of RNN cells.

Figure 2. Basic RNN architecture.

The main concept of RNN is the recursive pointing structure which is based on the following rules:

- Inputs and outputs are of variable size

- In each stage the hidden state from the previous stage as well as the current input is utilized to compute the current hidden state that feeds the next stage. Consequently, knowledge from past data is transmitted through the hidden states to the next stages. Hence, the hidden state is a means of connecting the past with the present as well as input with output.

- The set of parameters U, V, and W as well as the activation function are common to all RNN cells.

Unfortunately, the set of parameters (U, V, W) shared among all cells becomes a serious bottleneck when the RNN grows large enough. In this case, the network resembles a brain with only one set of memory, which may be overloaded. Therefore, vanilla RNN cells with multiple layers may enhance the performance.
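The recursive rules above can be sketched as a short forward pass. This is a minimal illustration, assuming the common convention that U maps the input to the hidden state, W maps the previous hidden state to the current one, and V maps the hidden state to the output, with tanh as the shared activation; the source names the symbols but does not spell out these roles. The function name `rnn_forward` and the layer sizes are hypothetical.

```python
import numpy as np


def rnn_forward(xs, U, W, V, h0=None):
    """Run a vanilla RNN over a sequence and return all outputs and the last hidden state."""
    h = np.zeros(W.shape[0]) if h0 is None else h0
    outputs = []
    for x in xs:
        # Current hidden state from the current input and the previous hidden state.
        h = np.tanh(U @ x + W @ h)
        # Output read from the hidden state; the same U, W, V are reused at every step.
        outputs.append(V @ h)
    return np.array(outputs), h


rng = np.random.default_rng(0)
input_size, hidden_size, output_size = 3, 5, 1  # illustrative sizes
U = rng.standard_normal((hidden_size, input_size)) * 0.1
W = rng.standard_normal((hidden_size, hidden_size)) * 0.1
V = rng.standard_normal((output_size, hidden_size)) * 0.1

# The same parameters process sequences of different lengths.
short_seq = rng.standard_normal((4, input_size))
long_seq = rng.standard_normal((24, input_size))
out_short, _ = rnn_forward(short_seq, U, W, V)
out_long, h_last = rnn_forward(long_seq, U, W, V)
```

Keeping every output gives a many-to-many mapping; keeping only the last output (or `h_last`) gives a many-to-one mapping.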

4. Long Short-Term Memory

LSTM networks are a type of RNN designed to handle long-term dependencies in data. LSTMs were first introduced by Hochreiter and Schmidhuber in 1997 and have since become widely used due to their success in solving a variety of problems. Unlike standard RNNs, LSTMs are specifically designed to address the long-term dependency problem, so retaining information over long periods is their default behavior.

LSTMs consist of a chain of repeating modules, similar to all RNNs, but the repeating module in LSTMs is structured differently. Instead of a single layer, LSTMs have four interacting layers. The key component of LSTMs is the cell state, a horizontal line that runs through the modules and enables an easy flow of information. The LSTMs regulate the flow of information in the cell state through gates, which consist of a sigmoid neural network layer and a pointwise multiplication operation. The sigmoid layer outputs numbers between 0 and 1 to determine the amount of information to let through.

LSTMs have three gates to control the cell state: the forget gate, the input gate, and the output gate. The forget gate uses a sigmoid layer to decide which information to discard from the cell state. The input gate consists of two parts: a sigmoid layer that decides which values to update, and a tanh layer that creates new candidate values. The new information is combined with the cell state to create an updated state. Finally, the output gate uses a sigmoid layer to decide which parts of the cell state to output, then passes the cell state through tanh and multiplies it by the output of the sigmoid gate to produce the final output.
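The gate description above corresponds to the following single-step sketch. It uses the widely used LSTM formulation in which each gate acts on the concatenation of the previous hidden state and the current input; the parameter names (`Wf`, `Wi`, `Wc`, `Wo` and the matching biases), the helper `init_params`, and the sizes are assumptions for illustration and do not come from the source.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step: returns the new hidden state and cell state."""
    z = np.concatenate([h_prev, x])
    f = sigmoid(params["Wf"] @ z + params["bf"])        # forget gate: what to discard
    i = sigmoid(params["Wi"] @ z + params["bi"])        # input gate: which values to update
    c_tilde = np.tanh(params["Wc"] @ z + params["bc"])  # new candidate values
    c = f * c_prev + i * c_tilde                        # updated cell state
    o = sigmoid(params["Wo"] @ z + params["bo"])        # output gate: what to expose
    h = o * np.tanh(c)                                  # final output of the step
    return h, c


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in "fico":
        params["W" + gate] = rng.standard_normal((hidden_size, hidden_size + input_size)) * 0.1
        params["b" + gate] = np.zeros(hidden_size)
    return params


params = init_params(input_size=2, hidden_size=3)
h, c = np.zeros(3), np.zeros(3)
for x in np.ones((5, 2)):  # a toy sequence of five identical inputs
    h, c = lstm_step(x, h, c, params)
```

Because the cell state is updated additively (`f * c_prev + i * c_tilde`), a forget gate close to 1 and an input gate close to 0 carry the cell state forward almost unchanged, which is how information can persist over many steps.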

5. Convolutional Neural Network

CNN [33] is a type of deep learning neural network commonly used in image recognition and computer vision tasks. It is based on the concept of convolution operation, where the network learns to extract features from the input data through filters or kernels. The filters move over the input data and detect specific features such as edges, lines, patterns, and shapes, which are then processed and passed through multiple layers of the network. These multiple layers allow the CNN to learn increasingly complex representations of the input data. The final layer of the CNN outputs predictions for the input data based on the learned features. In addition to image recognition, CNNs can be applied to various other tasks including natural language processing, audio processing, and video analysis.
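The sliding-filter idea can be shown in one dimension, which is also the form that applies to a load time series. The sketch below is a minimal illustration: the function name `conv1d_valid`, the toy load values, and the hand-picked two-tap filter are all made up for the example (in a trained CNN the filter weights are learned). As in most deep learning libraries, the operation is strictly a cross-correlation, since the kernel is not flipped.

```python
import numpy as np


def conv1d_valid(signal, kernel):
    """Slide `kernel` over `signal` and return the dot product at each position."""
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    n_out = len(signal) - len(kernel) + 1
    return np.array([signal[i:i + len(kernel)] @ kernel for i in range(n_out)])


load = [10, 10, 10, 20, 20, 20]  # toy series with a single step change
edge_filter = [-1, 1]            # responds to a change between neighbours
feature_map = conv1d_valid(load, edge_filter)
# feature_map is [0, 0, 10, 0, 0]: the filter fires only where the load jumps
```

Stacking several such layers, each with many filters, is what lets a CNN build up more complex representations from simple local features.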

6. Gated Recurrent Unit

The GRU [34] operates similarly to an RNN, but with different operations and gates in each GRU unit. To address issues faced by standard RNNs, GRUs incorporate two gate mechanisms: the Update gate and the Reset gate. The Update gate determines how much previous information to pass on to the next stage, which enables the model to copy all previous information if needed and reduces the risk of vanishing gradients. The Reset gate decides how much past information to ignore, that is, whether previous cell states are important. To store relevant past information in a new memory content, the unit multiplies the input and the previous hidden state by their weights, takes the element-wise product of the Reset gate and the previous hidden state, and applies a non-linear activation function; the result is then used to generate the next hidden state.
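A single GRU step can be sketched as follows. The equations follow the convention of Cho et al. [34], in which the Update gate weights the previous hidden state; some libraries use the opposite convention. The parameter names, the helper `init_gru`, and the sizes are assumptions for illustration.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def gru_step(x, h_prev, p):
    """One GRU time step: returns the new hidden state."""
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h_prev + p["bz"])  # update gate
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h_prev + p["br"])  # reset gate
    # New memory content: the reset gate scales the previous hidden state element-wise.
    h_tilde = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h_prev) + p["bh"])
    # Update gate near 1 copies the previous state; near 0 it takes the new content.
    return z * h_prev + (1.0 - z) * h_tilde


def init_gru(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in "zrh":
        p["W" + gate] = rng.standard_normal((hidden_size, input_size)) * 0.1
        p["U" + gate] = rng.standard_normal((hidden_size, hidden_size)) * 0.1
        p["b" + gate] = np.zeros(hidden_size)
    return p


gru_params = init_gru(input_size=2, hidden_size=3)
h_gru = np.zeros(3)
for x in np.ones((5, 2)):  # a toy sequence of five identical inputs
    h_gru = gru_step(x, h_gru, gru_params)
```

Compared with the LSTM, the GRU has no separate cell state and only two gates, so it has fewer parameters per unit.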

References

- Kang, C.; Xia, Q.; Zhang, B. Review of power system load forecasting and its development. Autom. Electr. Power Syst. 2004, 28, 1–11.

- Shi, T.; Lu, F.; Lu, J.; Pan, J.; Zhou, Y.; Wu, C.; Zheng, J. Phase Space Reconstruction Algorithm and Deep Learning-Based Very Short-Term Bus Load Forecasting. Energies 2019, 12, 4349.

- Zhang, K.; Tian, X.; Hu, X.; Guo, Z.N. Partial Least Squares regression load forecasting model based on the combination of grey Verhulst and equal-dimension and new-information model. In Proceedings of the 7th International Forum on Electrical Engineering And Automation (IFEEA), Hefei, China, 25–27 September 2020; pp. 915–919.

- Liu, Z.; Wang, X.; Pan, S.; Zhang, M.; Ji, Y.Z. Midterm Power Load Forecasting Model Based on Kernel Principal Component Analysis. Big Data 2019, 7, 130–138.

- Al-Hamadi, H.; Soliman, S.A. Long-term/mid-term electric load forecasting based on short-term correlation and annual growth. Electr. Power Syst. Res. 2005, 74, 353–361.

- Baek, S. Mid-term Load Pattern Forecasting with Recurrent Artificial Neural Network. IEEE Access 2019, 7, 172830–172838.

- Nalcaci, G.; Ozmen, A.; Weber, G.W. Long-term load forecasting: Models based on MARS, ANN and LR methods. Cent. Eur. J. Oper. Res. 2019, 27, 1033–1049.

- Adhiswara, R.; Abdullah, A.G.; Mulyadi, Y. Long-term electrical consumption forecasting using Artificial Neural Network (ANN). J. Phys. Conf. Ser. 2019, 1402, 033081.

- Tsakoumis, A.C.; Vladov, S.S.; Mladenov, V.M. Electric load forecasting with multilayer perceptron and Elman neural network. In Proceedings of the 6th Seminar on Neural Network Applications in Electrical Engineering, Belgrade, Yugoslavia, 26–28 September 2002; pp. 87–90, ISBN 0-7803-7593-9.

- Dondon, P.; Carvalho, J.; Gardere, R.; Lahalle, P.; Tsenov, G.; Mladenov, V. Implementation of a feed-forward Artificial Neural Network in VHDL on FPGA. In Proceedings of the 12th Symposium on Neural Network Applications in Electrical Engineering, Belgrade, Serbia, 25–27 November 2014; pp. 37–40, ISBN 978-147995888-7.

- Abu-Shikhah, N.; Elkarmi, F.; Aloquili, O.M. Medium-Term Electric Load Forecasting Using Multivariable Linear and Non-Linear Regression. Smart Grid Renew. Energy 2011, 2, 126–135.

- Pappas, S.; Ekonomou, L.; Moussas, V.C.; Karampelas, P.; Katsikas, S.K. Adaptive Load Forecasting Of The Hellenic Electric Grid. J. Zhejiang Univ. Sci. 2008, 2, 1724–1730.

- Pappas, S.; Ekonomou, L.; Karampelas, P.; Karamousantas, D.C.; Katsikas, S.K.; Chatzarakis, G.E.; Skafidas, P.D. Electricity Demand Load Forecasting of the Hellenic Power System Using an ARMA Model. Electr. Power Syst. Res. 2010, 80, 256–264.

- Ekonomou, L.; Oikonomou, S.D. Application and comparison of several artificial neural networks for forecasting the Hellenic daily electricity demand load. In Proceedings of the 7th WSEAS International Conference on Artificial Intelligence, Knowledge Engineering and Data Bases (AIKED’ 08), Cambridge, UK, 20–22 February 2008; pp. 67–71.

- Ekonomou, L.; Christodoulou, C.A.; Mladenov, V. A short-term load forecasting method using artificial neural networks and wavelet analysis. Int. J. Power Syst. 2016, 1, 64–68.

- Karampelas, P.; Pavlatos, C.; Mladenov, V.; Ekonomou, L. Design of artificial neural network models for the prediction of the Hellenic energy consumption. In Proceedings of the 10th Symposium on Neural Network Applications in Electrical Engineering, Belgrade, Serbia, 23–25 September 2010.

- Hwan, K.J.; Kim, G.W. A short-term load forecasting expert system. In Proceedings of the 5th Korea-Russia International Symposium on Science and Technology, Tomsk, Russia, 26 June–3 July 2001; Volume 1, pp. 112–116.

- Ali, M.; Adnan, M.; Tariq, M.; Poor, H.V. Load Forecasting through Estimated Parametrized Based Fuzzy Inference System in Smart Grids. IEEE Trans. Fuzzy Syst. 2021, 29, 156–165.

- Bhotto, M.Z.A.; Jones, R.; Makonin, S.; Bajić, I.V. Short-Term Demand Prediction Using an Ensemble of Linearly-Constrained Estimators. IEEE Trans. Power Syst. 2021, 36, 3163–3175.

- Jiang, H.; Zhang, Y.; Muljadi, E.; Zhang, J.J.; Gao, D.W. A Short-Term and High-Resolution Distribution System Load Forecasting Using Support Vector Regression with Hybrid Parameters Optimization. IEEE Trans. Smart Grid 2018, 9, 3341–3350.

- Li, G.; Li, Y.; Roozitalab, F. Midterm Load Forecasting: A Multistep Approach Based on Phase Space Reconstruction and Support Vector Machine. IEEE Syst. J. 2020, 14, 4967–4977.

- Zafeiropoulou, M.; Sijakovic, I.; Terzic, N.; Fotis, G.; Maris, T.I.; Vita, V.; Zoulias, E.; Ristic, V.; Ekonomou, L. Forecasting Transmission and Distribution System Flexibility Needs for Severe Weather Condition Resilience and Outage Management. Appl. Sci. 2022, 12, 7334.

- Fotis, G.; Vita, V.; Maris, I.T. Risks in the European Transmission System and a Novel Restoration Strategy for a Power System after a Major Blackout. Appl. Sci. 2023, 13, 83.

- Sambhi, S.; Kumar, H.; Fotis, G.; Vita, V.; Ekonomou, L. Techno-Economic Optimization of an Off-Grid Hybrid Power Generation for SRM IST Delhi-NCR Campus. Energies 2022, 15, 7880.

- Sambhi, S.; Bhadoria, H.; Kumar, V.; Chaurasia, P.; Chaurasia, G.S.; Fotis, G.; Vita, V.; Ekonomou, L.; Pavlatos, C. Economic Feasibility of a Renewable Integrated Hybrid Power Generation System for a Rural Village of Ladakh. Energies 2022, 15, 9126.

- Khuntia, S.; Rueda, J.; Meijden, M. Forecasting the load of electrical power systems in mid- and long-term horizons: A review. IET Gener. Transm. Distrib. 2016, 10, 3971–3977.

- Directive (EU) 2019/944 of the European Parliament and of the Council of 5 June 2019 on Common Rules for the Internal Market for Electricity and Amending Directive 2012/27/EU. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?qid=1570790363600&uri=CELEX:32019L0944 (accessed on 29 December 2022).

- IRENA. Innovation Landscape Brief: Market Integration of Distributed Energy Resources; International Renewable Energy Agency: Abu Dhabi, United Arab Emirates, 2019.

- Commission, M.; Company, D. Integrating Renewables into Lower Michigan Electric Grid. Available online: https://www.brattle.com/wp-content/uploads/2021/05/15955_integrating_renewables_into_lower_michigans_electricity_grid.pdf (accessed on 29 December 2022).

- Wang, F.C.; Hsiao, Y.S.; Yang, Y.Z. The Optimization of Hybrid Power Systems with Renewable Energy and Hydrogen Generation. Energies 2018, 11, 1948.

- Wang, F.; Lin, K.-M. Impacts of Load Profiles on the Optimization of Power Management of a Green Building Employing Fuel Cells. Energies 2019, 12, 57.

- Sun, W.; Zhang, C. A Hybrid BA-ELM Model Based on Factor Analysis and Similar-Day Approach for Short-Term Load Forecasting. Energies 2018, 11, 1282.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259.

Subjects: Engineering, Electrical & Electronic

Update Date: 26 Sep 2023
