

Rahman, M.M., Farahani, M.A., & Wuest, T. (2023, September 14). Industrial Drying Hopper Operations. In Encyclopedia. https://encyclopedia.pub/entry/49176


The advancement of Industry 4.0 and smart manufacturing has made a large amount of industrial process data attainable through sensors installed on machines. This holds true for industrial drying hoppers, which are used in most polymer manufacturing processes. Insights derived via AI from the collected data allow for improved processes and operations.

industry 4.0

smart manufacturing

machine learning

deep learning

1. Introduction

The advancement of smart manufacturing, which combines information technology and operational technology, has enabled the collection and processing of large amounts of industrial process data [1]. This progress has been facilitated by the installation of numerous sensors in industrial equipment and machine tools on the shop floor, leading to an increase in available data. In many cases, these sensors record the activity of manufacturing machine tools over time, thus generating time-series data [2]. The analysis of time-series data has proven valuable in extracting meaningful events in smart manufacturing systems [3]. Time-series data are also found in many other domains, such as healthcare [4], climate [5], robotics [6], ecohydrology [7], stock markets [8], and energy systems [9].

Sensors play a crucial role in the advancement of smart manufacturing systems, collecting data for various key variables over time, and forming Multivariate Time-Series (MTS) data. MTS data consists of multiple univariate time series (UTS), making MTS more complex due to the correlation between different variables. This research focuses on the analysis of time-series data for classification, which involves identifying key events and their respective classes within a dataset. Classification models aim to categorize events based on specific patterns and assign them to corresponding categories. In MTS classification, the time series is divided into segments, each belonging to a category with distinct patterns.
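The segmentation step described above can be illustrated with a minimal sketch. It assumes fixed-length sliding windows and a majority-vote label per window; the function name `segment_mts`, the window length, and the step size are hypothetical choices for illustration and are not taken from the case study.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Window = Tuple[List[List[float]], str]


def segment_mts(
    series: Sequence[Sequence[float]],
    labels: Sequence[str],
    window: int,
    step: int,
) -> List[Window]:
    """Cut an MTS (time steps x variables) into labelled fixed-length windows.

    Assumption: each window takes the most frequent label of its time steps.
    """
    if len(series) != len(labels):
        raise ValueError("series and labels must have the same length")
    segments: List[Window] = []
    for start in range(0, len(series) - window + 1, step):
        chunk = [list(row) for row in series[start:start + window]]
        window_labels = list(labels[start:start + window])
        majority = Counter(window_labels).most_common(1)[0][0]
        segments.append((chunk, majority))
    return segments


if __name__ == "__main__":
    # Six time steps of two hypothetical temperature variables.
    data = [[80.0, 60.0], [80.5, 60.2], [81.0, 60.1],
            [81.2, 75.0], [81.1, 90.0], [81.3, 95.0]]
    tags = ["normal", "normal", "normal", "normal", "fault", "fault"]
    for chunk, tag in segment_mts(data, tags, window=3, step=3):
        print(tag, chunk)
```

Overlapping windows (a step smaller than the window length) would yield more training segments at the cost of correlated samples.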

Several algorithms have been developed to analyze MTS data. Traditional approaches used prior to the evolution of smart manufacturing include simple exponential smoothing [10], dynamic time warping [11][12], and autoregressive integrated moving averages [13]. Machine learning algorithms such as K-nearest neighbor [14], decision trees [15], and Support Vector Machine (SVM) [16] have also been employed. Some authors have combined the K-nearest neighbor with distance measures like DTW [17][18] or Euclidean distance [19][20]. It has been shown that ensembling different discriminant classifiers, such as SVM and nearest neighbor, along with other machine learning classifiers like decision trees and random forests, can yield better results than using nearest neighbor with dynamic time warping [11]. Traditional methods often struggle to identify important features within time-series data and fail to capture correlations between variables, leading to the false identification of categorical events [3]. Additionally, traditional approaches and machine learning algorithms face challenges in handling the massive volume of data. Deep learning, with its ability to handle large amounts of data using deep neural networks, has emerged as a solution for extracting meaningful features from MTS data.

Deep learning techniques, including various neural network algorithms, have gained significant attention in dealing with time-series problems, particularly MTS. Deep neural networks can learn patterns in the data by capturing the correlations between variables, surpassing traditional methods such as nearest neighbor with dynamic time warping (NN-DTW). While NN-DTW may perform well for a small number of variables, it becomes more complex as the number of variables increases [21]. Since there are twelve distinct temperature measures in this case study, deep neural networks are particularly relevant. The two most widely used neural networks are Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN). CNNs are popular for computer vision tasks [22] and have been extensively applied to image recognition [23], natural language processing [24][25][26], image compression, and speech recognition [27]. CNNs have also been successful in handling MTS problems [3][21][28][29][30][31][32][33]. RNNs, on the other hand, excel in sequential learning and perform well for univariate time series, but their application to MTS classification is limited [34]. However, they show promise in dealing with time-series datasets containing missing values [35].

2. Manufacturing Process

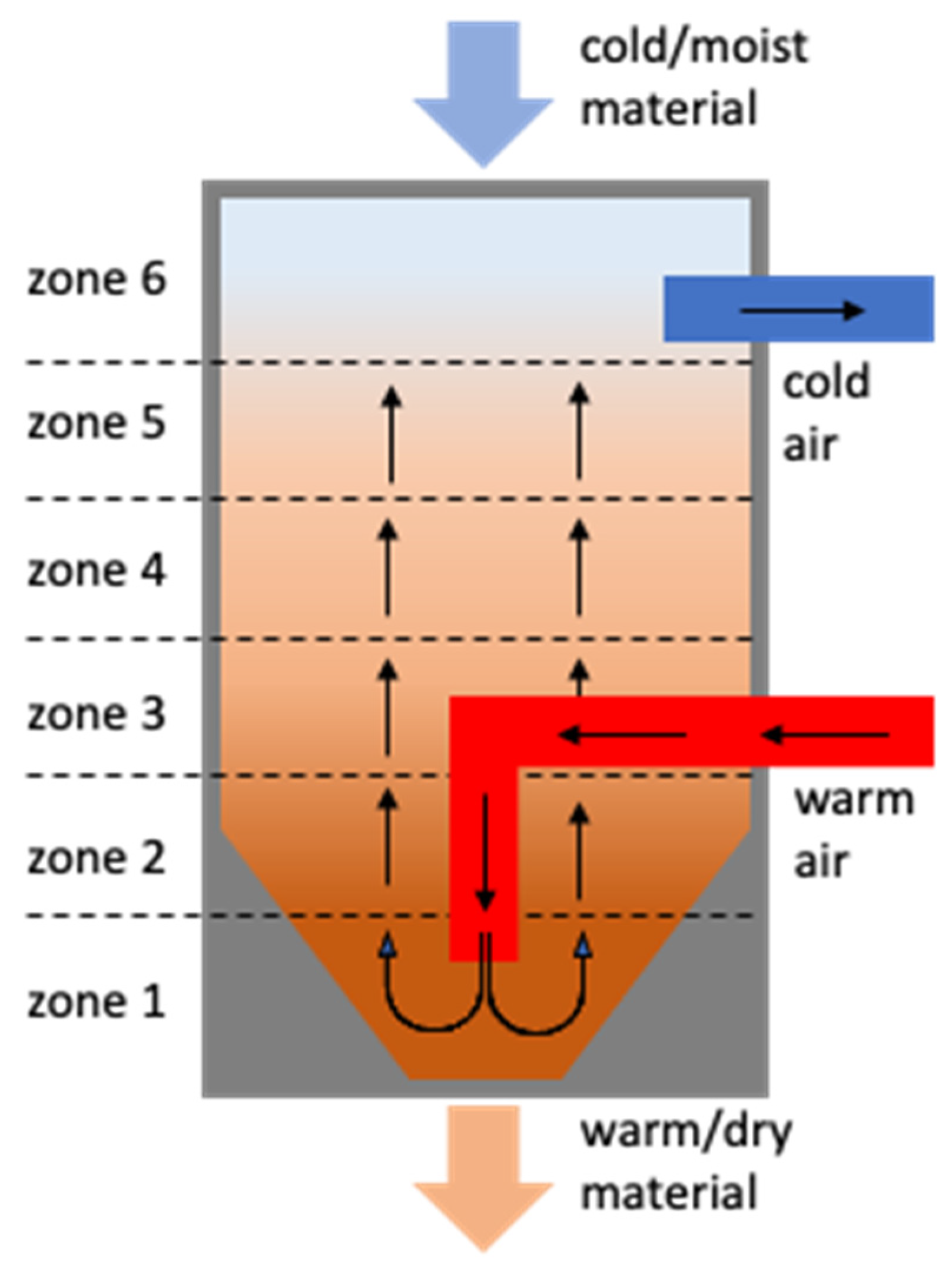

In the polymer processing industry, dryers play a crucial role in supplying dry-heated air that is blown upward through the to-be-dried material for several hours, while new undried, cold/moist material is continuously loaded on top of the dryer module, steadily moving downward through the dryer [36][37]. Modern drying hoppers are designed with a cylindrical body and a conical hole at the bottom. They ensure even temperature distribution and material flow by using spreader tubes to inject hot and dry air into the chamber. The process involves recirculating the hot air to continuously dry the material until the desired humidity level is achieved. Successful drying requires considering three main factors: drying time, drying temperature, and the dryness of circulating air. Drying time depends on the air temperature, initial dryness of the material, and target humidity. Higher air dryness and temperature accelerate the drying process, but excessively high temperatures may affect the material’s quality [38].

Monitoring the drying process is essential to avoid malfunctions. In a typical use case, a six-zoned temperature probe continuously measures the temperature at different heights within the vertically aligned drying chamber. This helps detect various disruptions in the drying process, such as over- or under-dried material and heater malfunctions [38]. The drying hopper consists of a drying hopper monitor and a regen wheel, both of which have a significant impact on polymer processing. The drying hopper monitor has eight temperature sensors, while the regen has three temperature sensors, including dew point temperature measurements for air delivery. Temperature sensors are used to measure these twelve temperatures over a period of one year for this specific case study. Figure 1 depicts a schematic of a drying hopper and sensor setup similar to the one used in this study.

Figure 1. A view of a drying hopper with different temperature zones.

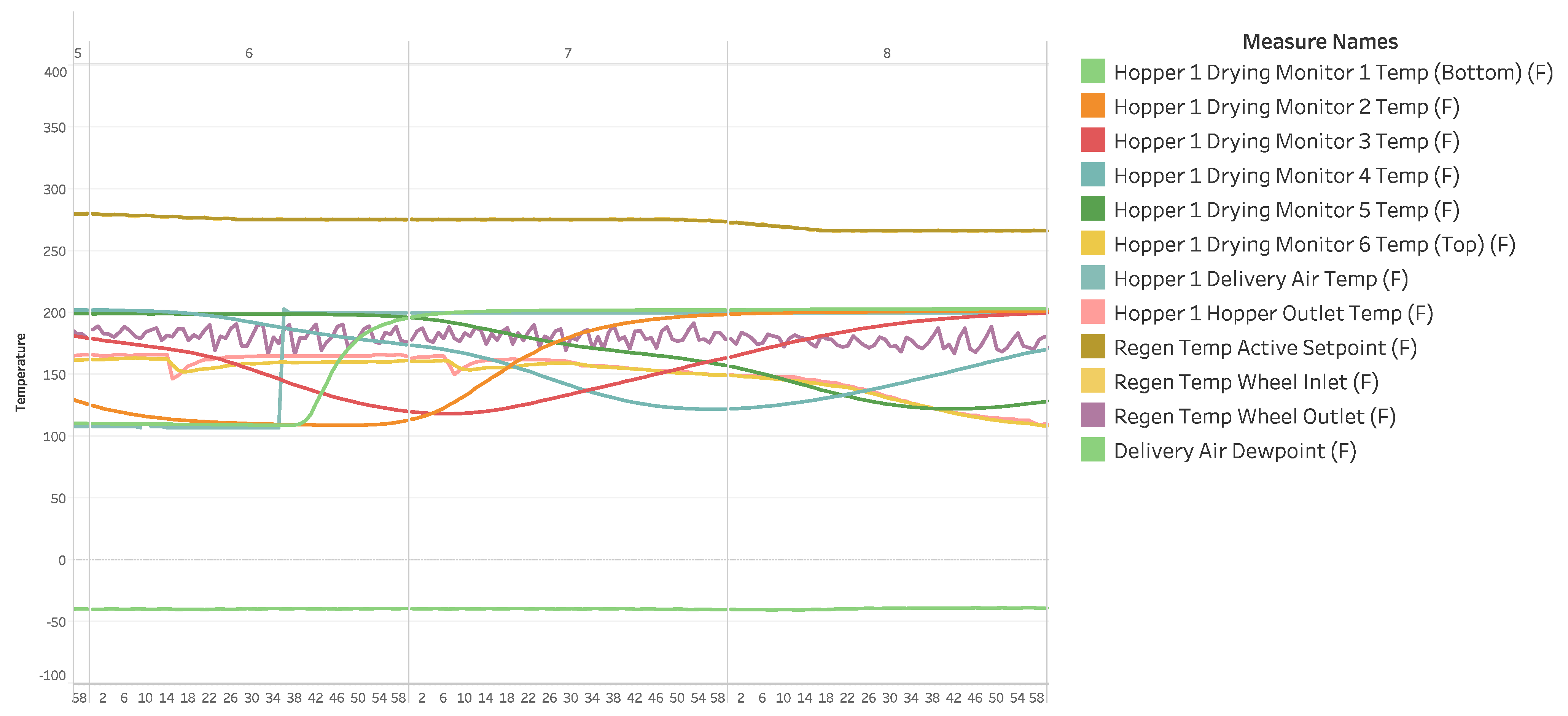

The collected data are preprocessed by handling missing values, outliers, and extraneous cases. The dataset contains temperature readings for twelve temperature measures sampled at one-minute intervals over the course of a year. With a large amount of data available for the main temperature zones in the dryer/hopper system and additional zones in the regen and dryer regions, meaningful features can be extracted via real-time analysis. By analyzing these data in real time, the production planner can gain insights into the drying hopper’s performance and determine the necessary maintenance actions. Figure 2 shows the temperature profiles obtained from the sensors.
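The text does not specify how missing values and outliers were handled, so the following is only an illustrative sketch under stated assumptions: missing readings are forward-filled from the last valid value, and outliers are clipped to a band around the median based on the median absolute deviation. The function names and the factor `k` are hypothetical.

```python
from statistics import median
from typing import List, Optional, Sequence


def forward_fill(values: Sequence[Optional[float]]) -> List[float]:
    """Replace each missing reading (None) with the last valid reading."""
    filled: List[float] = []
    last: Optional[float] = None
    for value in values:
        if value is None:
            if last is None:
                raise ValueError("series starts with a missing value")
            value = last
        filled.append(value)
        last = value
    return filled


def clip_outliers(values: Sequence[float], k: float = 3.0) -> List[float]:
    """Clip readings to median +/- k * MAD (an assumed, simple outlier rule)."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    low, high = center - k * mad, center + k * mad
    return [min(max(v, low), high) for v in values]


if __name__ == "__main__":
    raw = [80.0, None, 81.0, 79.0, 80.0, 200.0]  # one gap, one spike
    print(clip_outliers(forward_fill(raw)))
```

Other choices, such as interpolation for gaps or removing outliers instead of clipping them, may be more appropriate depending on the sensor and the failure mode.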

Figure 2. The temperature profile of the data gathered from the case study drying hopper.

3. Time-Series Classification in Manufacturing—Algorithms

MTS has gained popularity across various domains for different purposes, including clinical diagnosis, weather prediction, stock price analysis, human motion detection, and fault detection in manufacturing processes. The manufacturing industry, in particular, has seen a significant increase in the use of MTS data due to the deployment of sensor systems in shop-floor machinery and machine tools. As a result, researchers in the manufacturing domain have focused on MTS analysis, such as classification, to address the challenges posed by these data. Temporal data mining, including MTS analysis, presents complexities arising from factors like spatial structure, time dependency, and correlations among variables. Consequently, researchers have been developing a variety of algorithms to handle these challenges.

3.1. Traditional Algorithms

The K-nearest neighbor algorithm with Dynamic Time Warping (DTW) is commonly used as a benchmark for classifying MTS data. In ref. [39], the authors combined Large Margin Nearest Neighbor (LMNN) with DTW. A Mahalanobis distance-based DTW captures the relations among variables through the Mahalanobis matrix, and LMNN learns this matrix by minimizing a renewed, non-differentiable cost function with the coordinate descent method. The authors compared this method with other MTS similarity measures and claimed that it is superior. The same technique is also used in [40]. DTW multivariate prototyping has been used to evaluate scoring and assessment methods for virtual reality (VR) training simulators, classifying the VR data as novice, intermediate, or expert. In that study, 1-NN DTW performed reasonably well; the only algorithm that performed better was ResNet, a deeper CNN variant with residual connections [41]. Overall, using DTW as a dissimilarity measure among features of time series and adapting the nearest neighbor classifier in temporal data mining was very popular before the evolution of deep learning [42].
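As a rough illustration of the nearest neighbor benchmark, the sketch below implements a basic univariate DTW distance with absolute-difference local cost and uses it in a 1-NN classifier. It is a simplified stand-in, not the LMNN or Mahalanobis-based methods of [39][40]; the function names and toy data are hypothetical.

```python
from typing import List, Sequence, Tuple


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Unconstrained DTW between two univariate series (absolute-difference cost)."""
    inf = float("inf")
    acc: List[List[float]] = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    acc[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor cells.
            acc[i][j] = cost + min(acc[i - 1][j], acc[i][j - 1], acc[i - 1][j - 1])
    return acc[len(a)][len(b)]


def classify_1nn(
    query: Sequence[float],
    training: Sequence[Tuple[Sequence[float], str]],
) -> str:
    """Return the label of the training series closest to the query under DTW."""
    return min(training, key=lambda item: dtw_distance(query, item[0]))[1]


if __name__ == "__main__":
    train = [([0, 0, 1, 2, 1, 0], "peak"), ([0, 0, 0, 0, 0, 0], "flat")]
    # The query contains the same peak, shifted one step earlier.
    print(classify_1nn([0, 1, 2, 1, 0, 0], train))
```

Because DTW allows the two series to be stretched along the time axis, the shifted peak still matches its template, which a point-by-point Euclidean distance would penalize.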

According to [37], there are two ways to apply DTW to MTS data. The first, independent approach computes a DTW distance for each dimension (each UTS) of the MTS separately and sums these distances. The second, dependent approach computes a single warping path shared by all dimensions, with the local distance between two time points summed over all dimensions. The authors argue that the traditional belief that these two methods are equivalent for MTS classification is not true, and that their effectiveness varies depending on the specific case. They conducted experiments on a wide range of MTS datasets to support their claim and justify the use of different DTW approaches based on the problem at hand.
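The difference between the two multivariate DTW strategies can be made concrete with a small sketch. It assumes squared differences as the local cost and no warping window; the names `dtw_independent` and `dtw_dependent` are hypothetical labels for the per-dimension and shared-path variants.

```python
from typing import Callable, List, Sequence

Series = Sequence[Sequence[float]]  # time steps x dimensions


def _dtw(n: int, m: int, cost: Callable[[int, int], float]) -> float:
    """Dynamic-programming DTW over an n x m local cost function."""
    inf = float("inf")
    acc: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i][j] = cost(i - 1, j - 1) + min(
                acc[i - 1][j], acc[i][j - 1], acc[i - 1][j - 1]
            )
    return acc[n][m]


def dtw_independent(a: Series, b: Series) -> float:
    """Sum of univariate DTW distances: one warping path per dimension."""
    dims = len(a[0])
    return sum(
        _dtw(len(a), len(b), lambda i, j, d=d: (a[i][d] - b[j][d]) ** 2)
        for d in range(dims)
    )


def dtw_dependent(a: Series, b: Series) -> float:
    """One warping path for all dimensions; local cost sums over dimensions."""
    dims = len(a[0])
    return _dtw(
        len(a),
        len(b),
        lambda i, j: sum((a[i][d] - b[j][d]) ** 2 for d in range(dims)),
    )


if __name__ == "__main__":
    # Dimension 0 of b lags behind a by one step; dimension 1 does not.
    a = [(0, 0), (1, 1), (0, 0), (0, 0)]
    b = [(0, 0), (0, 1), (1, 0), (0, 0)]
    print(dtw_independent(a, b), dtw_dependent(a, b))
```

In this toy case each dimension can be aligned perfectly on its own, so the independent distance is zero, whereas a single shared path cannot absorb a lag that affects only one dimension. This is one way to see why the two variants are not interchangeable.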

Parametric derivative DTW is another variant of DTW used in temporal data mining. This technique combines two distances: the DTW distance between the MTS and the DTW distance between the derivatives of the MTS. The combined distance is then used for classification with the nearest neighbor rule [43]. Another approach, used for classifying MTS in [44], selects templates based on DTW so that complex feature selection and domain knowledge can be avoided. A further variant measures the DTW distance after an integral transformation: integral DTW is calculated as the value of DTW on the integrated time series. This technique combines DTW and integral DTW with the 1-nearest neighbor classifier and shows no overfitting issue [45].

The symbolic representation of MTS is a traditional technique used for classification. It involves learning symbols using supervised learning algorithms, considering all elements of the time series simultaneously. Tree-based ensembles are utilized to detect interactions between UTS columns, enabling efficient handling of nominal and missing values [46]. Another symbolic representation technique is MrSEQL, which transforms the time-series data using symbolic aggregate approximation (SAX) [47] in the time domain and symbolic Fourier approximation (SFA) [48] in the frequency domain. Discriminative subsequences are extracted from the symbolic data and used as features for training a classification model [49][50]. WEASEL + MUSE is another approach that uses SFA transformation to create sequences of words. Feature selection is performed using a chi-squared model, and logistic regression is employed to learn the selected features. These symbolic representation methods provide alternatives to traditional DTW-based approaches in MTS classification tasks [51].
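To make the SAX step more tangible, here is a minimal sketch of the standard transformation for a single univariate series: z-normalization, piecewise aggregate approximation, and mapping to letters via equiprobable breakpoints of the standard normal distribution. It is not the MrSEQL or WEASEL + MUSE pipeline; the function name `sax_word`, the alphabet, and the requirement that the length be divisible by the number of segments are simplifying assumptions.

```python
from bisect import bisect_right
from statistics import NormalDist, mean, pstdev
from typing import List, Sequence


def sax_word(series: Sequence[float], segments: int, alphabet: str = "abcd") -> str:
    """Convert one univariate series into a SAX word of `segments` letters."""
    # 1) z-normalise so that the Gaussian breakpoints are meaningful.
    mu, sigma = mean(series), pstdev(series)
    z: List[float] = [(x - mu) / sigma if sigma > 0 else 0.0 for x in series]

    # 2) Piecewise aggregate approximation: mean of equally sized chunks.
    if len(z) % segments != 0:
        raise ValueError("series length must be a multiple of `segments`")
    size = len(z) // segments
    paa = [mean(z[i * size:(i + 1) * size]) for i in range(segments)]

    # 3) Breakpoints split N(0, 1) into len(alphabet) equiprobable regions.
    k = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(i / k) for i in range(1, k)]
    return "".join(alphabet[bisect_right(breakpoints, value)] for value in paa)


if __name__ == "__main__":
    # A low plateau followed by a high plateau.
    print(sax_word([1, 1, 2, 2, 8, 8, 9, 9], segments=4))
```

For an MTS, one simple option is to apply the transformation to each variable separately and then extract subsequences of the resulting words as features.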

One of the most extensive studies of traditional methods for both MTS and UTS can be found in [11], which covers almost all of the above-mentioned traditional approaches in categories such as whole series similarity, phase-dependent intervals, phase-independent shapelets, dictionary-based classifiers, and combinations of transformations. This study is a valuable resource for gaining an overview of the traditional time-series classification methods. Another review paper that gives a brief overview of different classification approaches for MTS can be found in [50].

Machine learning algorithms, including both nonlinear techniques and ensemble learning techniques, have also been applied for time-series classification over the years. Traditional classifiers like Naïve Bayes, Decision Tree, and SVM are the most popular. Before using these algorithms, MTS data need to be converted into feature vector format. This is why the authors in [16] segmented the time series to obtain a qualitative description of each series and determined the frequent patterns. Afterward, the patterns that are highly discriminative between the classes are selected, and the data are transformed into vector format where the features are discriminative patterns.

3.2. Deep Learning Algorithms

Artificial neural networks (ANN) and deep learning, specifically Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN) such as Long Short-Term Memory (LSTM), have gained significant popularity in the field of temporal data mining, particularly for time-series classification. CNN has been widely used with a 1D filter in the convolutional layer, allowing it to automatically discover and extract meaningful internal structures in input time series via convolution and pooling operations. This eliminates the need for manual feature engineering, which is typically required in traditional feature extraction methods [52]. The combination of CNN and LSTM, leveraging the strengths of both algorithms, has also shown excellent performance in time-series classification tasks. Researchers have proposed various versions and adaptations of these algorithms, each showing promising results in different case studies. The authors of [21][53] summarized the basics and recent algorithmic advances in the use of deep learning for MTS classification.
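The convolution and pooling operations mentioned above can be sketched without a deep learning framework. The example below applies a single hand-picked 1D filter followed by ReLU and max pooling; in a real CNN the kernel weights are learned during training, and the kernel and signal used here are purely illustrative assumptions.

```python
from typing import List, Sequence


def conv1d_relu(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Valid-mode 1D cross-correlation (as in CNN layers) followed by ReLU."""
    width = len(kernel)
    out: List[float] = []
    for start in range(len(signal) - width + 1):
        activation = sum(signal[start + i] * kernel[i] for i in range(width))
        out.append(max(0.0, activation))
    return out


def max_pool1d(features: Sequence[float], size: int) -> List[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [
        max(features[i:i + size])
        for i in range(0, len(features) - size + 1, size)
    ]


if __name__ == "__main__":
    # Hypothetical temperature trace with a ramp from 20 to 30 degrees.
    trace = [20.0, 20.0, 20.0, 25.0, 30.0, 30.0, 30.0]
    rising_edge = [-1.0, 1.0]  # responds to increases between neighbours
    feature_map = conv1d_relu(trace, rising_edge)
    print(feature_map)
    print(max_pool1d(feature_map, size=2))
```

The filter responds only where the temperature rises, and pooling keeps that response while halving the length of the feature map, which is the kind of automatically extracted local structure the paragraph refers to.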

The authors in [28] used a tensor scheme with a multivariate CNN for time-series classification, where the model considers the multivariate aspect and the lag-feature characteristics simultaneously. The CNN architecture has four stages: the input tensor transformation stage, the univariate convolution stage, the multivariate convolution stage, and the fully connected stage. In this method, an image-like tensor scheme encodes the MTS data. This approach was chosen because CNNs have been highly successful in computer vision tasks such as image classification.

Deconvolution has been utilized in time-series data mining, in addition to the convolution operation. In a study [54], the authors employed a deconvolutional network combined with SAX discretization to learn the representation of MTS. This approach captured correlations using deconvolution and applied pooling operations for dimension reduction across each position of each variable. SAX discretization was used to extract a bag of features, resulting in improved classification accuracy. Another variation of CNN, called dilated CNN, treated MTS as an image and employed stacks of dilated and strided convolutions to extract features across variables [30]. Among other CNN approaches, multi-channel deep CNN is widely utilized, where the model learns features from individual time series and combines them after the convolution and pooling stages. The combined features are then fed into a multilayer perceptron (MLP) for final classification [33].

In [55], the authors performed a principal component analysis for feature extraction and reduced the number of MTS variables to two so that they could identify the two most useful components in the machine. The time series are encoded into images using the Gramian Angular Field (GAF), and the images are used as input for the CNN. A similar study can be found in [56], where three techniques for converting MTS data into images were used and tested: GAF, Gramian Angular Difference Field (GADF), and Markov Transition Field (MTF). It was found that the choice of conversion approach does not affect the classification performance, and that a simple CNN can outperform other approaches. In semiconductor manufacturing, it has been shown that MTS-CNN can successfully detect faulty wafers with high accuracy, recall, and precision [3].
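The time-series-to-image encoding can be illustrated with a short sketch of the Gramian Angular Field in its usual formulation: the series is rescaled to [-1, 1], each value is mapped to an angle via the arccosine, and the image entries are cos(phi_i + phi_j) for the summation field or sin(phi_i - phi_j) for the difference field. The function name and the min-max scaling choice are assumptions for illustration and may differ in detail from the implementations used in [55][56].

```python
import math
from typing import List, Sequence


def gramian_angular_field(
    series: Sequence[float], difference: bool = False
) -> List[List[float]]:
    """Encode a univariate series as a GAF image (summation or difference)."""
    low, high = min(series), max(series)
    if high == low:
        raise ValueError("series must not be constant")
    # Min-max rescale to [-1, 1] so that arccos is defined for every value.
    scaled = [2.0 * (x - low) / (high - low) - 1.0 for x in series]
    phi = [math.acos(max(-1.0, min(1.0, value))) for value in scaled]
    if difference:
        return [[math.sin(a - b) for b in phi] for a in phi]
    return [[math.cos(a + b) for b in phi] for a in phi]


if __name__ == "__main__":
    image = gramian_angular_field([0.0, 5.0, 10.0])
    for row in image:
        print([round(value, 3) for value in row])
```

A series of length n produces an n x n image, so long series are typically downsampled or windowed before encoding.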

Combining CNN, LSTM, and DNN has been another widely used approach over the years. In [57], the authors proposed a combined architecture abbreviated as CLDNN and applied it to large-vocabulary tasks, where it outperformed the three individual models. A similar approach named MDDNN has been used to predict the class of a subsequence with a focus on earliness and accuracy. An attention mechanism is incorporated into the deep learning framework in order to identify critical segments related to model performance [58]. The proposed framework used both the time domain and the frequency domain, obtained via the fast Fourier transform, and merged them for prediction. A similar study focusing on early classification can be found in [29].

Apart from LSTM, other recurrent network variants such as bidirectional RNN (BiRNN), bidirectional Long Short-Term Memory (BiLSTM), Gated Recurrent Unit (GRU), and Bidirectional Gated Recurrent Unit (BiGRU) have been adapted for MTS classification. In [59], the authors used MLSTM-FCN, which combines LSTM, a squeeze-and-excitation (SE) block, and CNN; the SE block is integrated within the FCN to leverage its high performance for MTS classification. A similar approach using an excitation block has also been applied in [31].

Multi-scale entropy and inception structure ideas have been used with the LSTM-FCNN model for MTS classification. The subsequences of each variable are convolved using 1D convolutional kernels with different filter sizes to extract high-level multi-scale spatial features. Afterward, LSTM is applied to further process the data and capture temporal information. Both the spatial and the temporal features are used as input to the fully connected layer [32]. In addition to CNN, the Evidence Feed Forward Hidden Markov Model (EFF-HMM) has been combined with LSTM to classify MTS. According to [60], the EFF-HMM is learned from the mistakes of the LSTM, and this combination outperformed other state-of-the-art methods in human activity recognition.

References

- Mccormick, M.R.; Wuest, T. Challenges for Smart Manufacturing and Industry 4.0 Research in Academia: A Case Study; ResearchGate: Berlin, Germany, 2023.

- Oztemel, E.; Gursev, S. Literature review of Industry 4.0 and related technologies. J. Intell. Manuf. 2020, 31, 127–182.

- Hsu, C.-Y.; Liu, W.-C. Multiple time-series convolutional neural network for fault detection and diagnosis and empirical study in semiconductor manufacturing. J. Intell. Manuf. 2021, 32, 823–836.

- Jones, S.S.; Evans, R.S.; Allen, T.L.; Thomas, A.; Haug, P.J.; Welch, S.J.; Snow, G.L. A multivariate time series approach to modeling and forecasting demand in the emergency department. J. Biomed. Inform. 2009, 42, 123–139.

- Du, Z.; Lawrence, W.R.; Zhang, W.; Zhang, D.; Yu, S.; Hao, Y. Interactions between climate factors and air pollution on daily HFMD cases: A time series study in Guangdong, China. Sci. Total Environ. 2019, 656, 1358–1364.

- Perez-D’Arpino, C.; Shah, J.A. Fast target prediction of human reaching motion for cooperative human-robot manipulation tasks using time series classification. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6175–6182.

- Farahani, M.A.; Vahid, A.; Goodwell, A.E. Evaluating Ecohydrological Model Sensitivity to Input Variability with an Information-Theory-Based Approach. Entropy 2022, 24, 994.

- Maknickienė, N.; Rutkauskas, A.V.; Maknickas, A. Investigation of financial market prediction by recurrent neural network. Innov. Technol. Sci. Bus. Educ. 2011, 2, 3–8.

- Martín, L.; Zarzalejo, L.F.; Polo, J.; Navarro, A.; Marchante, R.; Cony, M. Prediction of global solar irradiance based on time series analysis: Application to solar thermal power plants energy production planning. Sol. Energy 2010, 84, 1772–1781.

- Muth, J.F. Optimal Properties of Exponentially Weighted Forecasts. J. Am. Stat. Assoc. 1960, 55, 299–306.

- Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2017, 31, 606–660.

- Berndt, D.J.; Clifford, J. Using Dynamic Time Warping to Find Patterns in Time Series. In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 31 July–1 August 1994; pp. 359–370.

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- He, G.; Li, Y.; Zhao, W. An uncertainty and density based active semi-supervised learning scheme for positive unlabeled multivariate time series classification. Knowl.-Based Syst. 2017, 124, 80–92.

- Tuballa, M.L.; Abundo, M.L. A review of the development of Smart Grid technologies. Renew. Sustain. Energy Rev. 2016, 59, 710–725.

- Batal, I.; Sacchi, L.; Bellazzi, R.; Hauskrecht, M. Multivariate Time Series Classification with Temporal Abstractions. In Proceedings of the Twenty-Second International FLAIRS Conference, Sanibel Island, FL, USA, 19–21 May 2009.

- Yang, K.; Shahabi, C. An efficient k nearest neighbor search for multivariate time series. Inf. Comput. 2007, 205, 65–98.

- Hills, J.; Lines, J.; Baranauskas, E.; Mapp, J.; Bagnall, A. Classification of time series by shapelet transformation. Data Min. Knowl. Discov. 2014, 28, 851–881.

- Chang, Y.; Rubin, J.; Boverman, G.; Vij, S.; Rahman, A.; Natarajan, A.; Parvaneh, S. A Multi-Task Imputation and Classification Neural Architecture for Early Prediction of Sepsis from Multivariate Clinical Time Series. In Proceedings of the 2019 Computing in Cardiology Conference, Singapore, 8–11 September 2019.

- Lines, J.; Bagnall, A. Time series classification with ensembles of elastic distance measures. Data Min. Knowl. Discov. 2015, 29, 565–592.

- Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.-A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, E.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2016, arXiv:1409.0473.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. arXiv 2014, arXiv:1409.3215.

- Alayba, A.M.; Palade, V.; England, M.; Iqbal, R. A Combined CNN and LSTM Model for Arabic Sentiment Analysis. In Machine Learning and Knowledge Extraction; Holzinger, A., Kieseberg, P., Tjoa, A.M., Weippl, E., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11015, pp. 179–191. ISBN 978-3-319-99739-1.

- Sainath, T.N.; Kingsbury, B.; Mohamed, A.; Dahl, G.E.; Saon, G.; Soltau, H.; Beran, T.; Aravkin, A.Y.; Ramabhadran, B. Improvements to Deep Convolutional Neural Networks for LVCSR. In Proceedings of the 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, Olomouc, Czech Republic, 8–12 December 2013; pp. 315–320.

- Liu, C.-L.; Hsaio, W.-H.; Tu, Y.-C. Time Series Classification With Multivariate Convolutional Neural Network. IEEE Trans. Ind. Electron. 2019, 66, 4788–4797.

- Huang, H.-S.; Liu, C.-L.; Tseng, V.S. Multivariate Time Series Early Classification Using Multi-Domain Deep Neural Network. In Proceedings of the 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), Turin, Italy, 1–3 October 2018; pp. 90–98.

- Yazdanbakhsh, O.; Dick, S. Multivariate Time Series Classification using Dilated Convolutional Neural Network. arXiv 2019, arXiv:1905.01697.

- Karim, F.; Majumdar, S.; Darabi, H.; Harford, S. Multivariate LSTM-FCNs for Time Series Classification. Neural Netw. 2019, 116, 237–245.

- Guo, Z.; Liu, P.; Yang, J.; Hu, Y. Multivariate Time Series Classification Based on MCNN-LSTMs Network. In Proceedings of the 2020 12th International Conference on Machine Learning and Computing, Shenzhen, China, 15–17 February 2020; pp. 510–517.

- Zheng, Y.; Liu, Q.; Chen, E.; Ge, Y.; Zhao, J.L. Exploiting multi-channels deep convolutional neural networks for multivariate time series classification. Front. Comput. Sci. 2016, 10, 96–112.

- Lei, K.-C.; Zhang, X.D. An approach on discretizing time series using recurrent neural network. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2522–2526.

- Che, Z.; Purushotham, S.; Cho, K.; Sontag, D.; Liu, Y. Recurrent Neural Networks for Multivariate Time Series with Missing Values. Sci. Rep. 2018, 8, 6085.

- Lenz, J.; Swerdlow, S.; Landers, A.; Shaffer, R.; Geller, A.; Wuest, T. Smart Services for Polymer Processing Auxiliary Equipment: An Industrial Case Study. Smart Sustain. Manuf. Syst. 2020, 4, 20200032.

- Shokoohi-Yekta, M.; Wang, J.; Keogh, E. On the Non-Trivial Generalization of Dynamic Time Warping to the Multi-Dimensional Case. In Proceedings of the 2015 SIAM International Conference on Data Mining; Society for Industrial and Applied Mathematics, Vancouver, BC, Canada, 30 April–2 May 2015; pp. 289–297.

- Kapp, V.; May, M.C.; Lanza, G.; Wuest, T. Pattern Recognition in Multivariate Time Series: Towards an Automated Event Detection Method for Smart Manufacturing Systems. J. Manuf. Mater. Process. 2020, 4, 88.

- Shen, J.; Huang, W.; Zhu, D.; Liang, J. A Novel Similarity Measure Model for Multivariate Time Series Based on LMNN and DTW. Neural Process. Lett. 2017, 45, 925–937.

- Mei, J.; Liu, M.; Wang, Y.-F.; Gao, H. Learning a Mahalanobis Distance-Based Dynamic Time Warping Measure for Multivariate Time Series Classification. IEEE Trans. Cybern. 2016, 46, 1363–1374.

- Vaughan, N.; Gabrys, B. Scoring and assessment in medical VR training simulators with dynamic time series classification. Eng. Appl. Artif. Intell. 2020, 94, 103760.

- Ircio, J.; Lojo, A.; Mori, U.; Lozano, J.A. Mutual information based feature subset selection in multivariate time series classification. Pattern Recognit. 2020, 108, 107525.

- Górecki, T.; Łuczak, M. Multivariate time series classification with parametric derivative dynamic time warping. Expert Syst. Appl. 2015, 42, 2305–2312.

- Seto, S.; Zhang, W.; Zhou, Y. Multivariate Time Series Classification Using Dynamic Time Warping Template Selection for Human Activity Recognition. In Proceedings of the 2015 IEEE Symposium Series on Computational Intelligence, Cape Town, South Africa, 1–7 December 2015; pp. 1399–1406.

- Łuczak, M. Univariate and multivariate time series classification with parametric integral dynamic time warping. J. Intell. Fuzzy Syst. 2017, 33, 2403–2413.

- Baydogan, M.G.; Runger, G. Learning a symbolic representation for multivariate time series classification. Data Min. Knowl. Discov. 2015, 29, 400–422.

- Lin, J.; Keogh, E.; Wei, L.; Lonardi, S. Experiencing SAX: A novel symbolic representation of time series. Data Min. Knowl. Discov. 2007, 15, 107–144.

- Schäfer, P.; Högqvist, M. SFA: A symbolic fourier approximation and index for similarity search in high dimensional datasets. In Proceedings of the 15th International Conference on Extending Database Technology, Berlin, Germany, 27–30 March 2012; pp. 516–527.

- Le Nguyen, T.; Gsponer, S.; Ilie, I.; O’Reilly, M.; Ifrim, G. Interpretable time series classification using linear models and multi-resolution multi-domain symbolic representations. Data Min. Knowl. Discov. 2019, 33, 1183–1222.

- Dhariyal, B.; Le Nguyen, T.; Gsponer, S.; Ifrim, G. An Examination of the State-of-the-Art for Multivariate Time Series Classification. In Proceedings of the 2020 International Conference on Data Mining Workshops (ICDMW), Sorrento, Italy, 17–20 November 2020; pp. 243–250.

- Schäfer, P.; Leser, U. Multivariate Time Series Classification with WEASEL+MUSE 2018. arXiv 2018, arXiv:1711.11343.

- Zhao, B.; Lu, H.; Chen, S.; Liu, J.; Wu, D. Convolutional neural networks for time series classification. J. Syst. Eng. Electron. 2017, 28, 162–169.

- Ruiz, A.P.; Flynn, M.; Large, J.; Middlehurst, M.; Bagnall, A. The great multivariate time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2021, 35, 401–449.

- Song, W.; Liu, L.; Liu, M.; Wang, W.; Wang, X.; Song, Y. Representation Learning with Deconvolution for Multivariate Time Series Classification and Visualization. In Data Science; Zeng, J., Jing, W., Song, X., Lu, Z., Eds.; Springer: Singapore, 2020; Volume 1257, pp. 310–326. ISBN 9789811579806.

- Kiangala, K.S.; Wang, Z. An Effective Predictive Maintenance Framework for Conveyor Motors Using Dual Time-Series Imaging and Convolutional Neural Network in an Industry 4.0 Environment. IEEE Access 2020, 8, 121033–121049.

- Martínez-Arellano, G.; Terrazas, G.; Ratchev, S. Tool wear classification using time series imaging and deep learning. Int. J. Adv. Manuf. Technol. 2019, 104, 3647–3662.

- Sainath, T.N.; Vinyals, O.; Senior, A.; Sak, H. Convolutional, Long Short-Term Memory, fully connected Deep Neural Networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 4580–4584.

- Hsu, E.-Y.; Liu, C.-L.; Tseng, V.S. Multivariate Time Series Early Classification with Interpretability Using Deep Learning and Attention Mechanism. In Advances in Knowledge Discovery and Data Mining; Yang, Q., Zhou, Z.-H., Gong, Z., Zhang, M.-L., Huang, S.-J., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11441, pp. 541–553. ISBN 978-3-030-16141-5.

- Khan, M.; Wang, H.; Ngueilbaye, A.; Elfatyany, A. End-to-end multivariate time series classification via hybrid deep learning architectures. Pers. Ubiquitous Comput. 2023, 27, 177–191.

- Tripathi, A.M. Enhancing Multivariate Time Series Classification Using LSTM and Evidence Feed Forward HMM. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.


Subjects: Engineering, Manufacturing; Engineering, Industrial; Computer Science, Artificial Intelligence


Update Date: 15 Sep 2023
