Zhang, Z.; Zhu, L. Unmanned Aerial Vehicle Remote Sensing. Encyclopedia. Available online: https://encyclopedia.pub/entry/46196 (accessed on 08 February 2026).

Zhang, Z., & Zhu, L. (2023, June 29). Unmanned Aerial Vehicle Remote Sensing. In Encyclopedia. https://encyclopedia.pub/entry/46196


Unmanned aerial vehicle (UAV) remote sensing has been widely used in agriculture, forestry, mining, and other industries. UAVs can be flexibly equipped with various sensors, such as optical, infrared, and LIDAR sensors, and have become an essential remote sensing observation platform. With UAV remote sensing, researchers can obtain large numbers of high-resolution images in which each pixel covers a centimeter or even a millimeter of ground.

Keywords: UAV; remote sensing; land applications; UAV imagery

1. Introduction

Since the 1960s, Earth observation satellites have garnered significant attention from both military [1][2] and civilian [3][4][5] sectors, due to their unique high-altitude observation ability, which enables simultaneous monitoring of a wide range of ground targets. Since the 1970s, several countries have launched numerous Earth observation satellites, such as NASA’s Landsat [6] series; CNES’s SPOT [7] series; and commercial satellites such as IKONOS [8], QuickBird, and the WorldView series, generating an enormous volume of remote sensing data. These satellites have facilitated the development of several generations of remote sensing image analysis methods, including remote sensing index methods [9][10][11][12][13][14][15], object-based image analysis (OBIA) methods [16][17][18][19][20][21][22], and, in recent years, deep neural network methods [23][24][25][26][27], all of which rely on the multi-spectral and high-resolution images generated by these remote sensing satellites.

From the 1980s onward, remote sensing research had mainly been based on satellite data. Due to the cost of satellite launches, there were only a few remote sensing satellites available for a long time, and most satellite images required high costs to obtain limited data, except for a few satellites such as the Landsat series that were partially free. This also affected the direction of remote sensing research. During this period, many remote sensing index methods based on ground target spectral characteristics mainly used free Landsat satellite data. Other satellite data were less used, due to their high purchase costs.

Besides the high cost and limited supply, remote sensing satellite data acquisition is also constrained by several factors that affect the observation ability and direction of research:

- The observation ability of a remote sensing satellite is determined by its cameras. A satellite can only carry one or two cameras as sensors, and these cameras cannot be replaced once the satellite has been launched. Therefore, the observation performance of a satellite cannot be improved during its lifetime;
- Remote sensing satellites can only observe targets when flying over the adjacent area above the target and along the satellite’s orbit, which limits the ability to observe targets from a specific angle;
- Optical remote sensing satellites, such as panchromatic, color, multi-spectral, and hyper-spectral satellites, use visible and infrared light reflected by observation targets as a medium. For these satellites, the target illumination conditions seriously affect the observation quality. Effective remote sensing imagery can only be obtained when the satellite is flying over the observation target and the target is well illuminated;
- For optical remote sensing satellites, meteorological conditions, such as cloud cover, can also affect the observation result, which limits the selection of remote sensing images for research;
- The resolution of remote sensing imagery is limited by the distance between the satellite and the target. Since remote sensing satellites are far from ground targets, their image resolution is relatively low.

These constraints not only limit the scope of remote sensing research but also affect research directions. For instance, land cover/land use is an important aspect of remote sensing research. However, the research object of land cover/land use is limited by the spatial resolution of remote sensing image data. The panchromatic cameras currently carried by remote sensing satellites have a resolution of 31 cm/pixel, which is only sufficient to identify the type, location, and outline of ground targets 3 m [28] in size or larger, such as buildings, roads, trees, ships, and cars. Ground objects with smaller aerial projections, such as people, animals, and bicycles, cannot be distinguished in the images because the pixel size is relatively large. Change detection, which compares information in images of the same target taken in two or more periods, is another example. Since the data used in many research articles are images taken by the same remote sensing satellite at different times along its orbit and at the same spatial location, the observation angles and spatial resolution of these images are similar, making them suitable for pixel-by-pixel comparison methods. Hence, change detection has been a key direction in remote sensing research since the 1980s.

In the past decade, the emergence of multi-rotor unmanned aerial vehicles (UAVs) has gradually eased the limitations described above. This type of aircraft is pilotless, consumes no fuel, and does not require the maintenance that turboshaft engines do. Multi-copters are equipped with cheap but reliable brushless motors, which only require a small amount of electricity per flight. Users can schedule the entire flight of a multi-copter, from takeoff to landing, and edit flight parameters such as waypoints, flight speed, acceleration, and climb rate. Compared to human-crewed aircraft such as helicopters and small fixed-wing aircraft, multi-rotor drones are more stable and reliable, and have several advantages for remote sensing applications.

2. UAV Platforms and Sensors

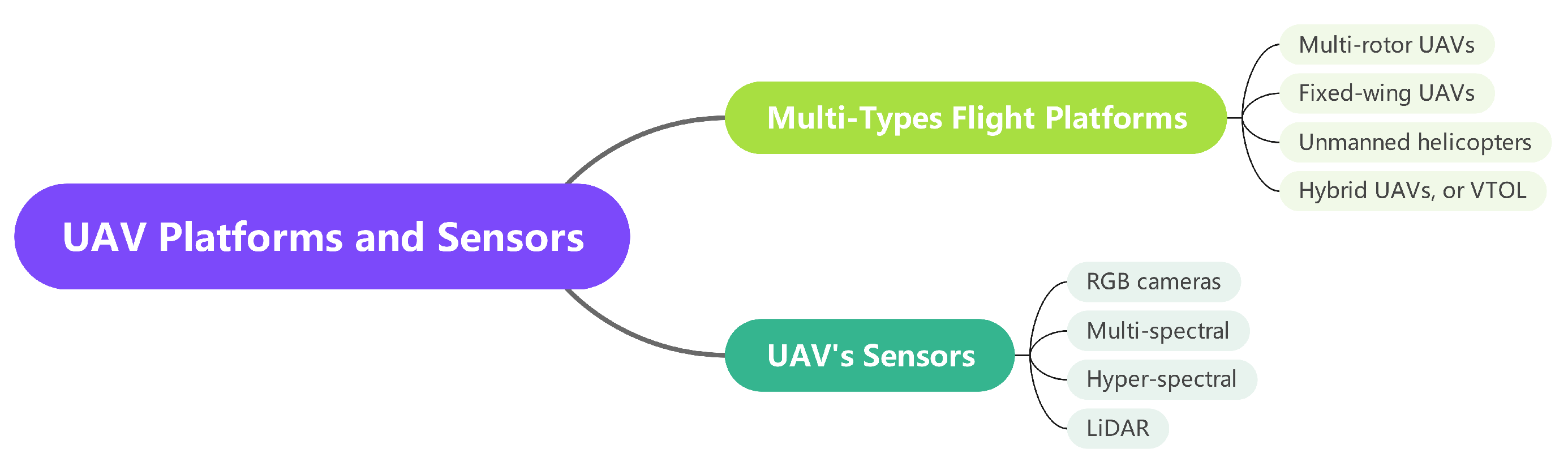

The hardware of a UAV remote sensing platform consists of two parts: the flight platform of the drone and the sensors it carries. Compared to remote sensing satellites, one of the most significant advantages of UAV remote sensing is the flexible replacement of sensors, which allows researchers to use the same drone to study the properties and characteristics of different objects by using different types of sensors. Figure 1 shows this section’s structure, including the drone’s flight platform and the different types of sensors carried.

Figure 1. UAV platforms and sensors.

2.1. UAV Platform

UAVs have been increasingly employed as a remote sensing observation platform for near-ground applications. Multi-rotor, fixed-wing, hybrid UAVs, and unmanned helicopters are the commonly used categories of UAVs. Among these, multi-rotor UAVs have gained the most popularity, owing to their numerous advantages. These UAVs, which come in various configurations, such as four-rotor, six-rotor, and eight-rotor, offer high safety during takeoff and landing and do not require a large airport or runway. They are highly controllable during flight and can easily adjust their flight altitude and speed. Additionally, some multi-rotor UAVs are equipped with obstacle detection abilities, allowing them to stop or bypass obstacles during flight. Figure 2 shows four typical UAV platforms.

Figure 2. UAV platforms: (a) Multi-rotor UAV, (b) Fixed-wing UAV, (c) Unmanned Helicopter, (d) VTOL UAV.

Multi-rotor UAVs utilize multiple rotating propellers powered by brushless motors to control lift. Each rotor can adjust its rotation speed independently and frequently, which allows quick recovery of flight altitude and attitude after disturbances. However, the power efficiency of multi-rotor UAVs is relatively low, and their flight duration is relatively short. Common consumer-grade drones, after careful optimization of weight and power, have a flight duration of about 30 min; for example, DJI’s Mavic Pro has a flight time of 27 min, the Mavic 2 has 31 min, and the Mavic Air 2 has 34 min. Despite these limitations, multi-rotor UAVs have been extensively used as remote sensing data acquisition platforms in the reviewed literature.

Fixed-wing UAVs, which are similar in structure to common aircraft, generate lift from the pressure difference between the upper and lower surfaces of their fixed wings during forward movement. These UAVs require a runway for takeoff and landing, and their landing process is more challenging to control than that of multi-rotor UAVs. Stable flight requires the wings to provide enough lift to support the weight of the aircraft, so the UAV must maintain a certain minimum speed throughout its flight. Consequently, these UAVs cannot hover, and their response to rising or falling airflow is limited. Fixed-wing UAVs fly faster than multi-rotor UAVs and also have a longer flight duration.

Unmanned helicopters, which have a structure similar to crewed helicopters, employ a large rotor to provide lift and a tail rotor to control direction. These UAVs possess excellent power efficiency and flight duration, but their mechanical blade structure is complex, leading to high vibration and high cost. As a result, only limited research using unmanned helicopters as a remote sensing platform was reported in the reviewed literature.

Hybrid UAVs, also known as vertical take-off and landing (VTOL), combine the features of both multi-rotor and fixed-wing UAVs. These UAVs take off and land in multi-rotor mode and fly in fixed-wing mode, providing the advantages of easy control during takeoff and landing and energy-saving during flight.

2.2. Sensors Carried by UAVs

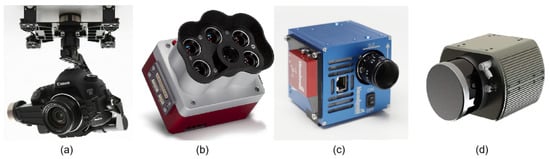

UAVs have been widely utilized as a platform for remote sensing, and the sensors carried by these aircraft play a critical role in data acquisition. Among the sensors commonly used by multi-rotor UAVs, there are two main categories: imagery sensors and three-dimensional information sensors. In addition to the two types of sensor that are commonly used, other types of sensors carried by drones include gas sensors, air particle sensors, small radars, etc. Figure 3 shows four typical UAV-carried sensors.

Figure 3. Sensors carried by UAVs: (a) RGB Camera, (b) Multi-spectral Camera, (c) Hyper-spectral Camera, (d) LIDAR.

Imagery sensors capture images of the observation targets and can be further classified into several types. RGB cameras capture images in the visible spectrum and are commonly used for vegetation mapping, land use classification, and environmental monitoring. Multi-spectral/hyper-spectral cameras capture images in multiple spectral bands, enabling the identification of specific features such as vegetation species, water quality, and mineral distribution. Thermal imagers capture infrared radiation emitted by the targets, making it possible to identify temperature differences and detect heat anomalies. These sensors can provide high-quality imagery data for various remote sensing applications.

In addition to imagery sensors, multi-rotor UAVs can also carry three-dimensional information sensors. These sensors are relatively new and have been developed in recent years with the advancement of simultaneous localization and mapping (SLAM) technology. LIDAR sensors use laser beams to measure the distance between the UAV and the target, enabling the creation of high-precision three-dimensional maps. Millimeter wave radar sensors use electromagnetic waves to measure the distance and velocity of the targets, making them suitable for applications that require long-range and all-weather sensing. Multi-camera arrays capture images from different angles, allowing the creation of 3D models of the observation targets. These sensors can provide rich spatial information, enabling the analysis of terrain elevation, structure, and volume.

2.2.1. RGB Cameras

RGB cameras are a prevalent remote sensing sensor among UAVs, and two types of RGB cameras are commonly used on UAV platforms. The first type is the UAV-integrated camera, which is mounted on the UAV using its gimbal. This camera typically has a resolution of 20 megapixels or higher, such as the 20-megapixel 4/3-inch image sensor integrated into the DJI Mavic 3 aircraft and the 20-megapixel 1-inch image sensor integrated into AUTEL’s EVO II Pro V3 UAV. These cameras can capture high-resolution images at high frame rates and are lightweight and compact, which helps preserve flight endurance. However, their lenses cannot be swapped for the telephoto or wide-angle lenses required for long-distance and wide-field observation.

The second type of camera commonly carried by UAVs is a single lens reflex (SLR) camera, which enables the replacement of lenses with different focal lengths. UAVs equipped with SLR cameras offer the advantage of lens flexibility and can be used for remote sensing or wide-angle observation, making them a valuable tool for such applications. Nonetheless, SLR cameras are heavier and require gimbals for installation, necessitating a UAV with sufficient size and load capacity to accommodate them. For example, Liu et al. [29] utilized the SONY A7R camera, which provides multiple lens options, including zoom and fixed focus lenses, to produce a high-precision digital elevation model (DEM) in their research.

2.2.2. Multi-Spectral and Hyper-Spectral Camera

Multi-spectral and hyper-spectral cameras are remote sensing instruments that collect the spectral radiation intensity of reflected sunlight at specific wavelengths. A multi-spectral camera is designed to provide data similar to that of multi-spectral remote sensing satellites, allowing for quantitative observation of the radiation intensity of light reflected by ground targets in specific bands of sunlight. In processing multi-spectral satellite remote sensing image data, the reflected light intensities of the same ground target in different spectral bands are combined into remote sensing indices, such as the widely used normalized difference vegetation index (NDVI) [9], a dimensionless index defined in Equation (1):

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{Red}}{\mathrm{NIR} + \mathrm{Red}} \tag{1}$$

In Equation (1), NIR refers to the measured intensity of reflected light in the near-infrared spectral range (700∼800 nm), while Red refers to the measured intensity of reflected light in the red spectral range (600∼700 nm). The NDVI index is used to measure vegetation density, as living green plants, algae, cyanobacteria, and other photosynthetic autotrophs absorb red and blue light but reflect near-infrared light. Thus, vegetation-rich areas have higher NDVI values.

After the launch of the Landsat-1 satellite in 1972, the multi-spectral scanner system (MSS) sensor, which observes ground-reflected light separately in several wavelength ranges, became a popular data source for research. In studies of spring vegetation green-up and subsequent degradation in the Great Plains of the central United States, the study region spanned a wide range of latitudes, so NDVI [9] was proposed as a spectral index that is insensitive to changes in latitude and solar zenith angle. NDVI ranges from 0.3 to 0.8 in densely vegetated areas; it is negative for cloud- and snow-covered areas, close to 0 for water bodies, and a small positive value for bare soil.
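As a minimal sketch of the NDVI calculation, (NIR − Red)/(NIR + Red), the following Python example computes the index per pixel for two small bands. The function names, the handling of zero-signal pixels, and the reflectance values are illustrative assumptions, not part of the reviewed work.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index for a single pixel."""
    total = nir + red
    if total == 0:
        # Assumption: a pixel with no signal in either band is reported as 0.
        return 0.0
    return (nir - red) / total


def ndvi_image(nir_band, red_band):
    """Apply ndvi() element-wise to two equally sized 2D lists."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical reflectance values in the range 0..1 (not measured data).
nir_band = [[0.50, 0.30], [0.05, 0.20]]
red_band = [[0.08, 0.10], [0.04, 0.18]]

result = ndvi_image(nir_band, red_band)
for row in result:
    print([round(value, 3) for value in row])
```

Because the index is a ratio of a difference to a sum, it always lies between −1 and 1, which is what makes values from different scenes comparable.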

In addition to the vegetation index, other common remote sensing indices include the normalized difference water index (NDWI) [12], enhanced vegetation index (EVI) [11], leaf area index (LAI) [30], modified soil adjusted vegetation index (MSAVI) [13], soil adjusted vegetation index (SAVI) [14], and other remote sensing index methods. These methods measure the spectral radiation intensity of blue light, green light, red light, red edge, near-infrared, and other object reflection bands.

Table 1 presents a comparison between the multi-spectral cameras of UAVs and the multi-spectral sensors of satellites. One notable difference is that UAV multi-spectral cameras have a specific narrow band known as the “red edge” [31], which is not present in many satellites’ multi-spectral sensors. This band has a wavelength range of 680 nm to 730 nm and marks the transition from the visible wavelengths readily absorbed by plants to the infrared band largely reflected by plant cells. From a spectral perspective, plant reflectance changes sharply across this band. A few satellites, such as the European Space Agency (ESA)’s Sentinel-2, have data available in this band. Research on satellite data has revealed a correlation between leaf area index (LAI) [30] and this band [32][33][34]. LAI [30] is a crucial variable in predicting photosynthetic productivity and evapotranspiration. Another significant difference is spatial resolution: UAV multi-spectral cameras can reach centimeter/pixel spatial resolution, which is currently unattainable by satellite sensors. Centimeter-resolution multi-spectral images have many applications in precision agriculture.

Table 1. Parameters of UAV multi-spectral cameras and several satellite multi-spectral sensors.

| Category | Device Name | Blue | Green | Red | Red Edge | Near Infrared | Spatial Resolution |
|---|---|---|---|---|---|---|---|
| Multi-spectral Camera of UAV | Parrot Sequoia+ | None | 550 ± 40 nm ¹ | 660 ± 40 nm | 735 ± 10 nm | 790 ± 40 nm | 8 cm/pixel ² |
| Multi-spectral Camera of UAV | Rededge-MX | 475 ± 32 nm | 560 ± 27 nm | 668 ± 16 nm | 717 ± 12 nm | 842 ± 57 nm | 8 cm/pixel ² |
| Multi-spectral Camera of UAV | Altum PT | 475 ± 32 nm | 560 ± 27 nm | 668 ± 16 nm | 717 ± 12 nm | 842 ± 57 nm | 2.5 cm/pixel ² |
| Multi-spectral Camera of UAV | Sentera 6X | 475 ± 30 nm | 550 ± 20 nm | 670 ± 30 nm | 715 ± 10 nm | 840 ± 20 nm | 5.2 cm/pixel ² |
| Multi-spectral Camera of UAV | DJI P4 Multi ³ | 450 ± 16 nm | 560 ± 16 nm | 650 ± 16 nm | 730 ± 16 nm | 840 ± 26 nm | |
| Multi-spectral Sensors on Satellites | Landsat-5 TM ⁴ | 485 ± 35 nm | 560 ± 40 nm | 660 ± 30 nm | None | 830 ± 70 nm | 30 m/pixel |
| Multi-spectral Sensors on Satellites | Landsat-5 MSS ⁵ | None | 550 ± 50 nm | 650 ± 50 nm | None | 750 ± 50 nm | 60 m/pixel |
| Multi-spectral Sensors on Satellites | Landsat-7 ETM+ ⁶ | 485 ± 35 nm | 560 ± 40 nm | 660 ± 30 nm | None | 835 ± 65 nm | 30 m/pixel |
| Multi-spectral Sensors on Satellites | Landsat-8 OLI ⁷ | 480 ± 30 nm | 560 ± 30 nm | 655 ± 15 nm | None | 865 ± 15 nm | 30 m/pixel |
| Multi-spectral Sensors on Satellites | IKONOS ⁸ | 480 ± 35 nm | 550 ± 45 nm | 665 ± 33 nm | None | 805 ± 48 nm | 3.28 m/pixel |
| Multi-spectral Sensors on Satellites | QuickBird ⁹ | 485 ± 35 nm | 560 ± 40 nm | 660 ± 30 nm | None | 830 ± 70 nm | 2.62 m/pixel |
| Multi-spectral Sensors on Satellites | WorldView-4 ¹⁰ | 480 ± 30 nm | 560 ± 30 nm | 673 ± 18 nm | None | 850 ± 70 nm | 1.24 m/pixel |
| Multi-spectral Sensors on Satellites | Sentinel-2A ¹¹ | 492 ± 33 nm | 560 ± 18 nm | 656 ± 16 nm | 745 ± 49 nm ¹² | 833 ± 53 nm | 10 m/pixel |

¹ nm: nanometer. ² At a flight height of 120 m. ³ DJI Phantom 4 Multispectral Camera. ⁴ Landsat 4–5 Thematic Mapper. ⁵ Landsat 1–5 Multispectral Scanner. ⁶ Landsat 7 Enhanced Thematic Mapper Plus. ⁷ Landsat 8–9 Operational Land Imager. ⁸ IKONOS Multispectral Sensor. ⁹ QuickBird Multispectral Sensor. ¹⁰ WorldView-4 Multispectral Sensor. ¹¹ Multispectral sensor of Sentinel-2A. ¹² Sentinel-2A has three red-edge spectral bands, with a spatial resolution of 20 m/pixel.
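The UAV resolutions in Table 1 are quoted for a flight height of 120 m. For a fixed camera and lens, the ground sample distance grows in proportion to the height above ground, so a quoted value can be rescaled to another altitude. The sketch below assumes this simple pinhole-camera proportionality and flat terrain; the function name and the example heights are illustrative.

```python
def gsd_at_height(gsd_ref_cm: float, height_m: float,
                  ref_height_m: float = 120.0) -> float:
    """Rescale a ground sample distance quoted at ref_height_m to height_m.

    Assumes the same camera and lens, nadir view, and flat terrain, so that
    pixel footprint is proportional to height above ground.
    """
    if height_m <= 0 or ref_height_m <= 0:
        raise ValueError("heights must be positive")
    return gsd_ref_cm * height_m / ref_height_m


# Resolutions listed in Table 1 (cm/pixel at 120 m).
table_gsd_cm = {
    "Parrot Sequoia+": 8.0,
    "Rededge-MX": 8.0,
    "Altum PT": 2.5,
    "Sentera 6X": 5.2,
}

# Hypothetical mission flown at 60 m instead of 120 m.
for name, gsd in table_gsd_cm.items():
    print(f"{name}: {gsd_at_height(gsd, 60.0):.2f} cm/pixel at 60 m")
```

Halving the flight height halves the pixel footprint, at the cost of covering a quarter of the area per image.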

Hyper-spectral and multi-spectral cameras are both imaging devices that capture data across multiple wavelengths of light. However, there are some key differences between the two. Multi-spectral cameras typically capture data across a few discrete wavelength bands, while hyper-spectral cameras capture data across many more (often hundreds of) narrow and contiguous wavelength bands. Moreover, multi-spectral cameras generally have a higher spatial resolution than hyper-spectral cameras, and hyper-spectral cameras are typically more expensive. Table 2 summarizes several hyper-spectral cameras used in the reviewed papers, together with their features.

Table 2. Parameters of Hyper-spectral Cameras.

| Camera Name | Spectral Range | Spectral Bands | Spectral Sampling | FWHM ¹ |
|---|---|---|---|---|
| Cubert S185 | 450∼950 nm | 125 bands | 4 nm | 8 nm |
| Headwall Nano-Hyperspec | 400∼1000 nm | 301 bands | 2 nm | 6 nm |
| RESONON PIKA L | 400∼1000 nm | 281 bands | 2.1 nm | 3.3 nm |
| RESONON PIKA XC2 | 400∼1000 nm | 447 bands | 1.3 nm | 1.9 nm |
| HySpex Mjolnir S-620 | 970∼2500 nm | 300 bands | 5.1 nm | unspecified |

¹ FWHM: full width at half maximum of the spectral resolution.
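The columns of Table 2 are related: dividing the spectral range by the spectral sampling interval gives a rough estimate of the number of bands. The sketch below applies this rule of thumb to the table values. It is only an approximation under the assumption of evenly spaced bands; the function name is illustrative, and manufacturers' band counts can differ from the estimate.

```python
def approx_band_count(start_nm: float, end_nm: float, sampling_nm: float) -> int:
    """Estimate band count as spectral range divided by sampling interval."""
    if sampling_nm <= 0 or end_nm <= start_nm:
        raise ValueError("need a positive range and sampling interval")
    return round((end_nm - start_nm) / sampling_nm)


# (range start, range end, sampling interval, bands listed in Table 2)
cameras = {
    "Cubert S185": (450, 950, 4.0, 125),
    "Headwall Nano-Hyperspec": (400, 1000, 2.0, 301),
    "RESONON PIKA L": (400, 1000, 2.1, 281),
    "RESONON PIKA XC2": (400, 1000, 1.3, 447),
    "HySpex Mjolnir S-620": (970, 2500, 5.1, 300),
}

for name, (start, end, sampling, listed) in cameras.items():
    estimate = approx_band_count(start, end, sampling)
    print(f"{name}: estimated {estimate}, listed {listed}")
```

The estimate matches the listed band counts closely for most cameras and deviates by a few percent for the two RESONON models.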

The data produced by hyper-spectral cameras are not only useful for investigating the reflected spectral intensity of green plants but also for analyzing the chemical properties of ground targets. Hyper-spectral data can provide information about the chemical composition and water content of soil [35], as well as the chemical composition of ground minerals [36][37]. This is because hyper-spectral cameras can capture data across many narrow and contiguous wavelength bands, allowing for detailed analysis of the unique spectral signatures of different materials. The chemical composition and water content of soil can be determined based on the unique spectral characteristics of certain chemical compounds or water molecules, while the chemical composition of minerals and artifacts can be identified based on their distinctive spectral features. As such, hyper-spectral cameras are highly versatile tools that can be utilized for a broad range of applications in various fields, including agriculture, geology, and archaeology.

2.2.3. LIDAR

LIDAR, an acronym for “laser imaging, detection, and ranging”, is a remote sensing technology that has become increasingly popular in recent years, due to its ability to generate precise and highly accurate 3D images of the Earth’s surface. LIDAR systems mounted on UAVs are capable of collecting data for a wide range of applications, including surveying [38][39], environmental monitoring [40], and infrastructure inspection [41][42][43].

One of the key advantages of using LIDAR in UAV remote sensing is its ability to provide highly accurate and detailed elevation data. By measuring the time it takes for laser pulses to bounce off the ground and return to the sensor, LIDAR can create a high-resolution digital elevation model (DEM) of the terrain. This data can be used to create detailed 3D maps of the landscape, which are useful for a variety of applications, such as flood modeling, land use planning, and urban design.
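The time-of-flight principle described above can be sketched in a few lines: the pulse travels to the ground and back, so the range is half the round-trip time multiplied by the speed of light. The elevation helper assumes a nadir-pointing beam and a known sensor altitude, which simplifies away the scan angle and the platform attitude that a real system must account for; all names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting surface from a pulse's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def ground_elevation(sensor_altitude_m: float, round_trip_s: float) -> float:
    """Elevation of the reflecting surface, assuming a nadir-pointing beam."""
    return sensor_altitude_m - range_from_round_trip(round_trip_s)


# Hypothetical pulse: sensor at 250 m altitude, echo after about 667 ns.
round_trip = 2 * 100.0 / SPEED_OF_LIGHT_M_S
print(range_from_round_trip(round_trip))    # range in metres
print(ground_elevation(250.0, round_trip))  # surface elevation in metres
```

A timing error of one nanosecond corresponds to roughly 15 cm of range, which illustrates why precise timing electronics matter for elevation accuracy.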

Another benefit of using LIDAR in UAV remote sensing is its ability to penetrate vegetation cover to some extent, allowing for the creation of detailed 3D models of forests and other vegetation types. Multiple-return LIDAR can record several echoes from a single emitted laser pulse, each with its own return time, because parts of the pulse are reflected by surfaces at different heights. By comparing these return times, information on the canopy structure of a forest can be obtained. These data can be used for ecosystem monitoring, wildlife habitat assessment, and other environmental applications.
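As a rough illustration of how return times relate to canopy structure, the sketch below converts the echoes of one pulse into ranges and takes the difference between the nearest and farthest surface as a canopy height estimate. It assumes a nadir-pointing beam and that the last return comes from the ground, which is not guaranteed under dense vegetation; the function names and echo values are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def return_ranges(return_times_s):
    """Convert the round-trip times of one pulse's echoes to sorted ranges (m)."""
    return [SPEED_OF_LIGHT_M_S * t / 2.0 for t in sorted(return_times_s)]


def canopy_height(return_times_s) -> float:
    """Height between first and last return of a single pulse.

    Assumption: the first echo is the canopy top and the last echo is the
    ground, with the beam pointing straight down.
    """
    ranges = return_ranges(return_times_s)
    if len(ranges) < 2:
        return 0.0  # a single echo carries no height difference
    return ranges[-1] - ranges[0]


def time_for_range(range_m: float) -> float:
    """Helper that builds a synthetic round-trip time for a given range."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_S


# Hypothetical pulse with echoes from canopy top, a branch, and the ground.
echo_times = [time_for_range(r) for r in (80.0, 86.5, 100.0)]
height = canopy_height(echo_times)
print(round(height, 3))
```

Intermediate returns, such as the branch echo here, are what provide information on the vertical layering inside the canopy.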

In addition to mapping and environmental monitoring, LIDAR-equipped UAVs are also used for infrastructure inspection and construction environment monitoring. By collecting high-resolution 3D scans of bridges, buildings, and other structures, LIDAR can help engineers and construction professionals identify potential problems. Figure 4 shows mechanical scanning and solid-state LIDAR.

Figure 4. LIDAR: (a) Mechanical Scanning LIDAR, (b) Solid-state LIDAR.

LIDAR technology has evolved significantly in recent years with the emergence of solid-state LIDAR, which uses an array of stationary lasers and photodetectors to scan the target area. Solid-state LIDAR offers several advantages over mechanical scanning LIDAR, which uses a rotating mirror or prism to sweep a laser beam across the target area. Solid-state LIDAR is typically more compact and lightweight, making it well suited for use on UAVs.

3. UAV Remote Sensing Data Processing

UAV remote sensing has several advantages compared with satellite remote sensing: (1) UAVs can be equipped with specific sensors for observation, as required. (2) UAVs can observe targets at any time allowed by weather and environmental conditions. (3) UAVs can follow a repeatable flight route, to observe a target multiple times from a set altitude and angle. (4) The image sensor mounted on a UAV is closer to the target, so the resulting image resolution is higher. These characteristics have not only allowed the remote sensing community to develop new techniques in land cover/land use and change detection, fields that were previously based on satellite data, but have also contributed to the growth of forest remote sensing, precision agriculture remote sensing, and other research directions.

3.1. Land Cover/Land Use

Land cover and land use are fundamental topics in satellite remote sensing research. This field aims to extract information about ground observation targets from low-resolution image data captured by early remote sensing satellites. NASA’s Landsat series satellite program is the longest-running Earth resource observation satellite program to date, with 50 years of operation since the launch of Landsat-1 [44] in 1972.

In the early days of remote sensing, land use classification methods focused on identifying and classifying the spectral information of pixels covering the target object, known as sub-pixel approaches [45]. The concept of these methods is that the spectral characteristics of a single pixel in a remote sensing image are based on the spatial average of the spectral signatures reflected from multiple object surfaces within the area covered by that pixel.
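The idea that a pixel's spectrum is a spatial average of the surfaces it covers is commonly written as a linear mixture. The sketch below builds a mixed pixel from two surface spectra and then recovers the area fraction by least squares. It assumes exactly two known surface types and noise-free linear mixing, which is a simplification of the sub-pixel methods cited above; the spectra and names are hypothetical.

```python
def mix(endmembers, fractions):
    """Area-weighted average of surface spectra within one pixel."""
    bands = len(endmembers[0])
    return [
        sum(f * spectrum[b] for f, spectrum in zip(fractions, endmembers))
        for b in range(bands)
    ]


def unmix_two(pixel, spectrum_a, spectrum_b) -> float:
    """Least-squares fraction of surface A, assuming pixel = f*A + (1-f)*B."""
    diff = [a - b for a, b in zip(spectrum_a, spectrum_b)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("the two spectra are identical")
    f = sum((p - b) * d for p, b, d in zip(pixel, spectrum_b, diff)) / denom
    return min(1.0, max(0.0, f))  # clamp to a physically valid fraction


# Hypothetical reflectances in blue, green, red, and near-infrared bands.
vegetation = [0.04, 0.08, 0.05, 0.50]
soil = [0.10, 0.15, 0.20, 0.30]

pixel = mix([vegetation, soil], [0.3, 0.7])  # 30% vegetation, 70% soil
print([round(v, 3) for v in pixel])
print(round(unmix_two(pixel, vegetation, soil), 3))
```

With more than two surface types, the same model leads to a constrained least-squares problem over all fractions rather than a single closed-form ratio.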

However, with the emergence of high-resolution satellites, such as QuickBird and IKONOS, which can capture images with meter-level or decimeter-level spatial resolution, the industry has produced a large amount of high-resolution remote sensing data with sufficient object textural features. This has led to the development of object-based image analysis (OBIA) methods for land use/land cover.

OBIA uses a super-pixel segmentation method to segment the image and then applies a classifier to the spectral features of the segmented blocks to identify the type of ground targets. In recent years, neural network methods, especially the fully convolutional network (FCN) [46], have become the mainstream methods of land use and land cover research. Semantic segmentation [23][47][48] and instance segmentation [24][49][50] neural network methods can extract the type, location, and spatial extent of ground targets end-to-end from remote sensing images.

The emergence of unmanned aerial vehicle (UAV) remote sensing has produced a new generation of data for land cover/land use research. The image sensors carried by UAVs can acquire images with decimeter-level, centimeter-level, or even millimeter-level resolution. As a result, information extraction for small ground objects that were previously difficult to study, such as people on the street, cars, animals, and plants, has become a new research interest.

Researchers have proposed various methods to address these challenges. For instance, PEMCNet [51], an encoder–decoder neural network method proposed by Zhao et al., achieved good classification results for LIDAR data taken by UAVs, with a high accuracy for ground objects such as buildings, shrubs, and trees. Harvey et al. [52] proposed a terrain matching system based on the Xception [53] network model, which uses a pretrained neural network to determine the position of the aircraft without relying on inertial measurement units (IMUs) and global navigation satellite systems (GNSS). Additionally, Zhuang et al. [54] proposed a method based on neural networks to match remote sensing images of the same location taken from different perspectives and resolutions, called multiscale block attention (MSBA). By segmenting and combining the target image and calculating the loss function separately for the local area of the image, the authors realized a matching method for complex building targets photographed from different angles.

3.2. Change Detection

Remote sensing satellites can observe the same target area multiple times. Comparing the images obtained from two observations, we can detect changes in the target area over time. Change detection using remote sensing satellite data has wide-ranging applications, such as in urban planning, agricultural surveying, disaster detection and assessment, map compilation, and more.

UAV remote sensing technology allows for data acquisition from multiple aerial photos taken at different times along a preset route. Compared to other types of remote sensing, UAV remote sensing has advantages in spatial resolution and data acquisition for change detection. Some of the key benefits include: (1) UAV remote sensing operates at a lower altitude, making it less susceptible to meteorological conditions such as clouds and rain. (2) The data obtained through UAV remote sensing are generated through structure-from-motion and multi-view stereo (SfM-MVS) and airborne laser scanning (ALS) methods, which enable the creation of a DEM for the observed target and adjacent areas, allowing us to monitor changes in three dimensions over time. (3) UAVs can acquire data at user-defined time intervals by conducting multiple flights in a short time.
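Monitoring change in three dimensions, as described in point (2), is often done by subtracting two co-registered elevation grids from different dates. The following sketch computes such a difference and flags cells whose elevation change exceeds a threshold. It assumes both DEMs share the same grid and that a fixed threshold is adequate; the grid values, the 0.1 m threshold, and the function names are hypothetical.

```python
def dem_of_difference(dem_before, dem_after):
    """Cell-wise elevation change (after minus before) for two equal grids."""
    same_shape = len(dem_before) == len(dem_after) and all(
        len(a) == len(b) for a, b in zip(dem_before, dem_after)
    )
    if not same_shape:
        raise ValueError("DEM grids must have the same shape")
    return [
        [after - before for before, after in zip(row_before, row_after)]
        for row_before, row_after in zip(dem_before, dem_after)
    ]


def changed_cells(dod, threshold_m: float):
    """List (row, col, dz) for cells whose change exceeds the threshold."""
    return [
        (r, c, dz)
        for r, row in enumerate(dod)
        for c, dz in enumerate(row)
        if abs(dz) > threshold_m
    ]


# Hypothetical 2 x 2 elevation grids in metres from two flights.
dem_t1 = [[10.0, 10.0], [10.0, 10.0]]
dem_t2 = [[10.0, 12.0], [10.0625, 9.5]]

dod = dem_of_difference(dem_t1, dem_t2)
changes = changed_cells(dod, threshold_m=0.1)
print(changes)
```

In practice the threshold would be chosen with the vertical error of the two models in mind, so that differences smaller than the measurement uncertainty are not reported as change.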

Recent research on change detection based on UAV remote sensing data has focused on identifying small and micro-targets, such as vehicles, bicycles, motorcycles, and tricycles, and tracking their movements using UAV aerial images and video data. Another area of research involves the practical application of UAV remote sensing for detecting changes in 3D models of terrain, landforms, and buildings.

For instance, Chen et al. [55] proposed a method to detect changes in buildings using RGB images obtained from UAV aerial photography and 3D reconstruction of RGB-D data. Cook et al. [56] compared 3D models generated using the SfM-MVS method and LIDAR scanning for reconstructing complex mountainous river terrain, and reported a root-mean-square error (RMSE) of 30∼40 cm. Mesquita et al. [57] developed a change detection method, which was tested on the Oil Pipes Construction Dataset (OPCD) and successfully detected construction traces from multiple pictures of the same area taken by a UAV at different times. Hastaouglu et al. [58] monitored three-dimensional displacement in a garbage dump using aerial image data and the SfM-MVS method [59] to generate a three-dimensional model. Lucieer et al. [60] proposed a method for reconstructing a three-dimensional model of landslides in mountainous areas from UAV multi-view images using the SfM-MVS method. The measured horizontal accuracy was 7 cm, and the vertical accuracy was 6 cm. Li et al. [61] monitored the deformation of a slope section of a large water conservancy project using UAV aerial photography and achieved a measurement error of less than 3 mm, which was significantly more accurate than traditional aerial photography methods. Han et al. [62] proposed a method of using UAVs to monitor road construction, which was applied to an extended road construction site and identified changed ground areas with an accuracy of 84.5∼85%. Huang et al. [63] developed a semantic detection method for changes in construction sites, based on a 3D point cloud model generated from images obtained through UAV aerial photography.
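Several of the studies above report accuracy as a root-mean-square error. As a minimal sketch, RMSE is the square root of the mean squared difference between modelled and surveyed coordinates at check points. The example also combines a horizontal and a vertical RMSE in quadrature, which is a common convention but an assumption here, since the cited studies do not state how they combine components; the check-point errors are hypothetical.

```python
import math


def rmse(errors) -> float:
    """Root-mean-square error of a list of signed residuals."""
    if not errors:
        raise ValueError("at least one residual is required")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def combined_rmse(horizontal: float, vertical: float) -> float:
    """Combine horizontal and vertical RMSE in quadrature (assumed convention)."""
    return math.hypot(horizontal, vertical)


# Hypothetical vertical residuals at four check points, in centimetres.
checkpoint_dz_cm = [3.0, -4.0, 5.0, -2.0]
vertical_rmse_cm = rmse(checkpoint_dz_cm)
print(round(vertical_rmse_cm, 2))

# Combining the 7 cm horizontal and 6 cm vertical figures quoted above.
print(round(combined_rmse(7.0, 6.0), 2))
```

Because residuals are squared before averaging, a single large outlier at one check point raises the RMSE more than several small errors do.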

3.3. Digital Elevation Model (DEM) Information

In recent years, the accurate generation of digital elevation models (DEM) has become increasingly important in remote sensing landform research. DEMs provide crucial information about ground elevation and encompass both digital terrain models (DTM) and digital surface models (DSM). A DTM represents the elevation of the natural ground surface, while a DSM also includes features on top of it, such as vegetation and artificial objects. There are two primary methods for calculating elevation information: structure-from-motion with multi-view stereo (SfM-MVS) [59] and airborne laser scanning (ALS).
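
Because a DSM contains the ground plus whatever stands on it, subtracting a co-registered DTM from it leaves the height of vegetation and artificial objects. The sketch below illustrates this relationship on a tiny grid. The function name `object_heights`, the sample values, and the choice to clamp negative differences to zero are assumptions made for illustration.

```python
from typing import List

Grid = List[List[float]]


def object_heights(dsm: Grid, dtm: Grid) -> Grid:
    """Return DSM minus DTM per cell, i.e. the height of features above the ground."""
    if len(dsm) != len(dtm) or any(len(rs) != len(rt) for rs, rt in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must have the same shape")
    # Negative differences can only come from noise or misregistration,
    # so they are clamped to zero here (an illustrative design choice).
    return [[max(s - t, 0.0) for s, t in zip(row_s, row_t)]
            for row_s, row_t in zip(dsm, dtm)]


# Hypothetical elevations in metres: one cell holds an 8 m tree or building.
dsm = [[105.0, 112.5],
       [103.0, 103.25]]
dtm = [[105.0, 104.5],
       [103.0, 103.0]]

heights = object_heights(dsm, dtm)
```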

Among the reviewed articles, the SfM-MVS method gained more attention due to its simple requirements. Sanz-Ablanedo et al. [64] conducted a comparative experiment to assess the accuracy of the SfM-MVS method when establishing a DTM in a complex mining area covering over 1200 hectares (1.2 × 10⁷ m²). The results showed that when a small number of ground control points (GCPs) were used, the root-mean-square error (RMSE) at the checkpoints was around five times the ground sample distance (GSD), or about 34 cm. In contrast, when more GCPs were used (i.e., more than 2 GCPs per 100 images), the checkpoint RMSE converged to twice the GSD, or about 13.5 cm. Increasing the number of GCPs thus had a significant impact on the accuracy of the 3D model generated by the SfM-MVS method. It is worth noting that the authors used a small fixed-wing UAV as their remote sensing platform. Rebelo et al. [65] proposed a method to generate a DTM from RGB images taken by multi-rotor UAVs. The authors used an RGB sensor carried by a DJI Phantom 4 UAV to take images within an area of 55 hectares (5.5 × 10⁵ m²) and established a 3D point cloud DTM through the SfM-MVS method. Although the GNSS receiver used was the same model, the horizontal RMSE of the DTM was 3.1 cm, the vertical RMSE was 8.3 cm, and the comprehensive RMSE was 8.8 cm. This precision was much better than that of the fixed-wing UAV method of Sanz-Ablanedo et al. [64]. In another study, Almeida et al. [66] proposed a method for qualitative detection of single trees in forest land based on UAV remote sensing RGB data. In their experiment, the authors used a 20-megapixel camera carried by a DJI Phantom 4 PRO to reconstruct a DTM in the SfM-MVS mode of Agisoft Metashape, over an area of 0.15 hectares. For the DTM obtained, the RMSE of the GCPs was 1.6 cm in the horizontal direction and 3 cm in the vertical direction. Hartwig et al. [67] reconstructed different forms of ravine using SfM-MVS based on multi-view images captured by multiple drones. Through experiments, the authors verified that, even without using GCPs for geographic registration, SfM-MVS technology alone could achieve an accuracy of 5% in the volume measurement of ravines.
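
The accuracy figures in this paragraph are checkpoint RMSE values, sometimes expressed as multiples of the GSD. The sketch below shows one common way to compute horizontal, vertical, and combined RMSE from surveyed checkpoints. The function names, the sample coordinates, and the definition of the combined value as the root sum of squares of the horizontal and vertical RMSE are assumptions, not taken from the cited papers. Under that definition, horizontal and vertical values of 3.1 cm and 8.3 cm combine to roughly 8.9 cm, close to the 8.8 cm quoted above. The GSD of 6.8 cm used in the example is only inferred from the statement that 34 cm is about five times the GSD.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def checkpoint_rmse(measured: Sequence[Point],
                    reference: Sequence[Point]) -> Tuple[float, float, float]:
    """Return (horizontal, vertical, combined) RMSE between model and surveyed points."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty point lists of equal length")
    n = len(measured)
    sum_h = 0.0  # squared horizontal (x, y) errors
    sum_v = 0.0  # squared vertical (z) errors
    for (xm, ym, zm), (xr, yr, zr) in zip(measured, reference):
        sum_h += (xm - xr) ** 2 + (ym - yr) ** 2
        sum_v += (zm - zr) ** 2
    rmse_h = math.sqrt(sum_h / n)
    rmse_v = math.sqrt(sum_v / n)
    # Assumed definition: combined RMSE as root sum of squares of both parts.
    rmse_3d = math.sqrt(rmse_h ** 2 + rmse_v ** 2)
    return rmse_h, rmse_v, rmse_3d


def rmse_in_gsd(rmse_m: float, gsd_m: float) -> float:
    """Express an RMSE as a multiple of the ground sample distance."""
    if gsd_m <= 0:
        raise ValueError("GSD must be positive")
    return rmse_m / gsd_m


# Hypothetical checkpoints: coordinates read from the model vs. surveyed values.
model_points = [(100.02, 200.01, 50.05), (150.00, 249.97, 52.10)]
survey_points = [(100.00, 200.00, 50.00), (150.01, 250.00, 52.00)]

rmse_h, rmse_v, rmse_3d = checkpoint_rmse(model_points, survey_points)
multiple = rmse_in_gsd(0.34, 0.068)  # 34 cm RMSE with an inferred 6.8 cm GSD
```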

Among airborne laser scanning (ALS) methods, Zhang et al. [40] proposed a method to detect ground height in tropical rainforests based on LIDAR data. This method involved scanning a forest area with airborne LIDAR to obtain three-dimensional point cloud data. Local minima were extracted from the point cloud data as candidate points, with some of these candidates representing the ground between trees in the forest area. The DTM generated by the method had high consistency with the ALS-based reference, with an RMSE of 2.1 m.
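
The local-minimum step described above can be illustrated with a simple grid filter: the point cloud is divided into square cells and the lowest point in each cell is kept as a ground candidate. This is a simplified sketch and not the procedure of Zhang et al. [40]. The function name `ground_candidates`, the 2 m cell size, and the sample points are assumptions for illustration.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def ground_candidates(points: Iterable[Point], cell_size: float) -> List[Point]:
    """Keep the lowest point in each cell_size x cell_size cell of a horizontal grid."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    lowest: Dict[Tuple[int, int], Point] = {}
    for x, y, z in points:
        # Grid cell index of the point in the horizontal plane.
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        if key not in lowest or z < lowest[key][2]:
            lowest[key] = (x, y, z)
    return sorted(lowest.values())


# Hypothetical returns: canopy hits near 25-30 m and two gaps reaching the ground.
cloud = [
    (0.5, 0.5, 30.0),
    (1.2, 0.8, 2.1),
    (1.9, 1.9, 25.0),
    (2.5, 0.5, 28.0),
    (3.1, 1.0, 1.8),
]

candidates = ground_candidates(cloud, cell_size=2.0)
```

Not every candidate is a true ground point: in a cell fully covered by canopy the lowest return still lies on vegetation, which is why the text treats these minima only as candidates to be filtered further.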

References

- Simonett, D.S. Future and Present Needs of Remote Sensing in Geography; Technical Report; 1966. Available online: https://ntrs.nasa.gov/citations/19670031579 (accessed on 23 May 2023).

- Hudson, R.; Hudson, J.W. The military applications of remote sensing by infrared. Proc. IEEE 1975, 63, 104–128.

- Badgley, P.C. Current Status of NASA’s Natural Resources Program. Exploring Unknown. 1960; p. 226. Available online: https://ntrs.nasa.gov/citations/19670031597 (accessed on 23 May 2023).

- Roads, B.O.P. Remote Sensing Applications to Highway Engineering. Public Roads 1968, 35, 28.

- Taylor, J.I.; Stingelin, R.W. Infrared imaging for water resources studies. J. Hydraul. Div. 1969, 95, 175–190.

- Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.

- Chevrel, M.; Courtois, M.; Weill, G. The SPOT satellite remote sensing mission. Photogramm. Eng. Remote Sens. 1981, 47, 1163–1171.

- Dial, G.; Bowen, H.; Gerlach, F.; Grodecki, J.; Oleszczuk, R. IKONOS satellite, imagery, and products. Remote Sens. Environ. 2003, 88, 23–36.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.; Deering, D. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; Technical Report; 1973. Available online: https://ntrs.nasa.gov/citations/19740022555 (accessed on 23 May 2023).

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Gao, B.C. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Blaschke, T.; Lang, S.; Lorup, E.; Strobl, J.; Zeil, P. Object-oriented image processing in an integrated GIS/remote sensing environment and perspectives for environmental applications. Environ. Inf. Plan. Politics Public 2000, 2, 555–570.

- Blaschke, T.; Strobl, J. What’s wrong with pixels? Some recent developments interfacing remote sensing and GIS. Z. Geoinformationssysteme 2001, 12–17. Available online: https://www.researchgate.net/publication/216266284_What’s_wrong_with_pixels_Some_recent_developments_interfacing_remote_sensing_and_GIS (accessed on 23 May 2023).

- Schiewe, J. Segmentation of high-resolution remotely sensed data-concepts, applications and problems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 380–385.

- Hay, G.J.; Blaschke, T.; Marceau, D.J.; Bouchard, A. A comparison of three image-object methods for the multiscale analysis of landscape structure. ISPRS J. Photogramm. Remote Sens. 2003, 57, 327–345.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

- Blaschke, T.; Burnett, C.; Pekkarinen, A. New contextual approaches using image segmentation for object-based classification. In Remote Sensing Image Analysis: Including the Spatial Domain; De Meer, F., de Jong, S., Eds.; 2004; Available online: https://courses.washington.edu/cfr530/GIS200106012.pdf (accessed on 23 May 2023).

- Zhan, Q.; Molenaar, M.; Tempfli, K.; Shi, W. Quality assessment for geo-spatial objects derived from remotely sensed data. Int. J. Remote Sens. 2005, 26, 2953–2974.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE international Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Chu, X.; Zheng, A.; Zhang, X.; Sun, J. Detection in crowded scenes: One proposal, multiple predictions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12214–12223.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Liu, Y.; Zheng, X.; Ai, G.; Zhang, Y.; Zuo, Y. Generating a high-precision true digital orthophoto map based on UAV images. ISPRS Int. J. Geo-Inf. 2018, 7, 333.

- Watson, D.J. Comparative physiological studies on the growth of field crops: I. Variation in net assimilation rate and leaf area between species and varieties, and within and between years. Ann. Bot. 1947, 11, 41–76.

- Seager, S.; Turner, E.L.; Schafer, J.; Ford, E.B. Vegetation’s red edge: A possible spectroscopic biosignature of extraterrestrial plants. Astrobiology 2005, 5, 372–390.

- Delegido, J.; Verrelst, J.; Meza, C.; Rivera, J.; Alonso, L.; Moreno, J. A red-edge spectral index for remote sensing estimation of green LAI over agroecosystems. Eur. J. Agron. 2013, 46, 42–52.

- Lin, S.; Li, J.; Liu, Q.; Li, L.; Zhao, J.; Yu, W. Evaluating the effectiveness of using vegetation indices based on red-edge reflectance from Sentinel-2 to estimate gross primary productivity. Remote Sens. 2019, 11, 1303.

- Imran, H.A.; Gianelle, D.; Rocchini, D.; Dalponte, M.; Martín, M.P.; Sakowska, K.; Wohlfahrt, G.; Vescovo, L. VIS-NIR, red-edge and NIR-shoulder based normalized vegetation indices response to co-varying leaf and Canopy structural traits in heterogeneous grasslands. Remote Sens. 2020, 12, 2254.

- Datta, D.; Paul, M.; Murshed, M.; Teng, S.W.; Schmidtke, L. Soil Moisture, Organic Carbon, and Nitrogen Content Prediction with Hyperspectral Data Using Regression Models. Sensors 2022, 22, 7998.

- Jackisch, R.; Madriz, Y.; Zimmermann, R.; Pirttijärvi, M.; Saartenoja, A.; Heincke, B.H.; Salmirinne, H.; Kujasalo, J.P.; Andreani, L.; Gloaguen, R. Drone-borne hyperspectral and magnetic data integration: Otanmäki Fe-Ti-V deposit in Finland. Remote Sens. 2019, 11, 2084.

- Thiele, S.T.; Bnoulkacem, Z.; Lorenz, S.; Bordenave, A.; Menegoni, N.; Madriz, Y.; Dujoncquoy, E.; Gloaguen, R.; Kenter, J. Mineralogical mapping with accurately corrected shortwave infrared hyperspectral data acquired obliquely from UAVs. Remote Sens. 2021, 14, 5.

- Krause, S.; Sanders, T.G.; Mund, J.P.; Greve, K. UAV-based photogrammetric tree height measurement for intensive forest monitoring. Remote Sens. 2019, 11, 758.

- Yu, J.W.; Yoon, Y.W.; Baek, W.K.; Jung, H.S. Forest Vertical Structure Mapping Using Two-Seasonal Optic Images and LiDAR DSM Acquired from UAV Platform through Random Forest, XGBoost, and Support Vector Machine Approaches. Remote Sens. 2021, 13, 4282.

- Zhang, H.; Bauters, M.; Boeckx, P.; Van Oost, K. Mapping canopy heights in dense tropical forests using low-cost UAV-derived photogrammetric point clouds and machine learning approaches. Remote Sens. 2021, 13, 3777.

- Chen, C.; Yang, B.; Song, S.; Peng, X.; Huang, R. Automatic clearance anomaly detection for transmission line corridors utilizing UAV-Borne LIDAR data. Remote Sens. 2018, 10, 613.

- Zhang, R.; Yang, B.; Xiao, W.; Liang, F.; Liu, Y.; Wang, Z. Automatic extraction of high-voltage power transmission objects from UAV lidar point clouds. Remote Sens. 2019, 11, 2600.

- Alshawabkeh, Y.; Baik, A.; Fallatah, A. As-Textured As-Built BIM Using Sensor Fusion, Zee Ain Historical Village as a Case Study. Remote Sens. 2021, 13, 5135.

- Short, N.M. The Landsat Tutorial Workbook: Basics of Satellite Remote Sensing; National Aeronautics and Space Administration, Scientific and Technical Information Branch: Washington, DC, USA, 1982; Volume 1078.

- Schowengerdt, R.A. Soft classification and spatial-spectral mixing. In Proceedings of the International Workshop on Soft Computing in Remote Sensing Data Analysis, Milan, Italy, 4–5 December 1995; pp. 4–5.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Wang, X.; Kong, T.; Shen, C.; Jiang, Y.; Li, L. Solo: Segmenting objects by locations. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XVIII 16. Springer: Cham, Switzerland, 2020; pp. 649–665.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. Yolact: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9157–9166.

- Zhao, G.; Zhang, W.; Peng, Y.; Wu, H.; Wang, Z.; Cheng, L. PEMCNet: An Efficient Multi-Scale Point Feature Fusion Network for 3D LiDAR Point Cloud Classification. Remote Sens. 2021, 13, 4312.

- Harvey, W.; Rainwater, C.; Cothren, J. Direct Aerial Visual Geolocalization Using Deep Neural Networks. Remote Sens. 2021, 13, 4017.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Zhuang, J.; Dai, M.; Chen, X.; Zheng, E. A Faster and More Effective Cross-View Matching Method of UAV and Satellite Images for UAV Geolocalization. Remote Sens. 2021, 13, 3979.

- Chen, B.; Chen, Z.; Deng, L.; Duan, Y.; Zhou, J. Building change detection with RGB-D map generated from UAV images. Neurocomputing 2016, 208, 350–364.

- Cook, K.L. An evaluation of the effectiveness of low-cost UAVs and structure from motion for geomorphic change detection. Geomorphology 2017, 278, 195–208.

- Mesquita, D.B.; dos Santos, R.F.; Macharet, D.G.; Campos, M.F.; Nascimento, E.R. Fully convolutional siamese autoencoder for change detection in UAV aerial images. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1455–1459.

- Hastaoğlu, K.Ö.; Gül, Y.; Poyraz, F.; Kara, B.C. Monitoring 3D areal displacements by a new methodology and software using UAV photogrammetry. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101916.

- Carrivick, J.L.; Smith, M.W.; Quincey, D.J. Structure from Motion in the Geosciences; John Wiley & Sons: Hoboken, NJ, USA, 2016.

- Lucieer, A.; Jong, S.M.d.; Turner, D. Mapping landslide displacements using Structure from Motion (SfM) and image correlation of multi-temporal UAV photography. Prog. Phys. Geogr. 2014, 38, 97–116.

- Li, M.; Cheng, D.; Yang, X.; Luo, G.; Liu, N.; Meng, C.; Peng, Q. High precision slope deformation monitoring by uav with industrial photogrammetry. IOP Conf. Ser. Earth Environ. Sci. 2021, 636, 012015.

- Han, D.; Lee, S.B.; Song, M.; Cho, J.S. Change detection in unmanned aerial vehicle images for progress monitoring of road construction. Buildings 2021, 11, 150.

- Huang, R.; Xu, Y.; Hoegner, L.; Stilla, U. Semantics-aided 3D change detection on construction sites using UAV-based photogrammetric point clouds. Autom. Constr. 2022, 134, 104057.

- Sanz-Ablanedo, E.; Chandler, J.H.; Rodríguez-Pérez, J.R.; Ordóñez, C. Accuracy of unmanned aerial vehicle (UAV) and SfM photogrammetry survey as a function of the number and location of ground control points used. Remote Sens. 2018, 10, 1606.

- Rebelo, C.; Nascimento, J. Measurement of Soil Tillage Using UAV High-Resolution 3D Data. Remote Sens. 2021, 13, 4336.

- Almeida, A.; Gonçalves, F.; Silva, G.; Mendonça, A.; Gonzaga, M.; Silva, J.; Souza, R.; Leite, I.; Neves, K.; Boeno, M.; et al. Individual Tree Detection and Qualitative Inventory of a Eucalyptus sp. Stand Using UAV Photogrammetry Data. Remote Sens. 2021, 13, 3655.

- Hartwig, M.E.; Ribeiro, L.P. Gully evolution assessment from structure-from-motion, southeastern Brazil. Environ. Earth Sci. 2021, 80, 548.


Subjects: Remote Sensing


Update Date: 30 Jun 2023
