Please note this is a comparison between Version 1 by zhengxin zhang and Version 2 by Sirius Huang.

Unmanned aerial vehicle (UAV) remote sensing has been widely used in agriculture, forestry, mining, and other industries. UAVs can be flexibly equipped with various sensors, such as optical, infrared, and LIDAR sensors, and have become an essential remote sensing observation platform. With UAV remote sensing, researchers can obtain large numbers of high-resolution images in which each pixel covers a centimeter or even a millimeter on the ground.

- UAV

- remote sensing

- land applications

- UAV imagery

1. Introduction

Since the 1960s, Earth observation satellites have attracted significant attention from both the military [1,2] and civilian [3,4,5] sectors. Their high-altitude vantage point allows ground targets over a wide area to be monitored simultaneously. Since the 1970s, several countries have launched numerous Earth observation satellites. Examples include NASA's Landsat [6] series, the SPOT [7] series of the French space agency CNES, and commercial satellites such as IKONOS [8], QuickBird, and the WorldView series. Together they have generated an enormous volume of remote sensing data. These satellites have fostered several generations of remote sensing image analysis methods: remote sensing index methods [9,10,11,12,13,14,15], object-based image analysis (OBIA) methods [16,17,18,19,20,21,22], and, in recent years, deep neural network methods [23,24,25,26,27]. All of these rely on the multi-spectral and high-resolution images generated by these satellites.

From the 1980s onward, remote sensing research was mainly based on satellite data. Because satellite launches are expensive, only a few remote sensing satellites were available for a long time. Most satellite imagery was also costly to obtain, except for data from a few satellites, such as the Landsat series, that were partially free. This shaped the direction of remote sensing research. During this period, many remote sensing index methods based on the spectral characteristics of ground targets mainly used free Landsat data, while other satellite data were used less because of their high purchase costs.

Besides their high cost and limited supply, remote sensing satellite data are also constrained by several factors that affect observation ability and research directions:

- The observation ability of a remote sensing satellite is determined by its cameras. A satellite can carry only one or two cameras as sensors, and these cannot be replaced once the satellite has been launched. Therefore, a satellite's observation performance cannot be improved during its lifetime;

- Remote sensing satellites can only observe a target when passing over or near it along their orbits, which limits the angles from which the target can be observed;

Table 1.

Parameters of UAV multi-spectral cameras and several satellite multi-spectral sensors.

| Device Name | Multi-Spectral Bandwidth and Spatial Resolution | ||||||

|---|---|---|---|---|---|---|---|

| Blue | Green | Red | Red Edge | Near Infrared I | Spatial Resolution | ||

| Multi-spectral Camera of UAV | Parrot Sequoia+ | None | 550±40550±40 nm 1 | 660±40660±40 nm | 735±10735±10 nm | 790±40790±40 nm | 8 cm/pixel 2 |

| Rededge-MX | 475±32475±32 nm | 560±27560±27 | |||||

| 830 | |||||||

| ± | |||||||

| 70 | 830±70 | nm | 30 m/pixel | ||||

| Multi-spectral Sensors on Satellites | Landsat-5 MSS 5 | None | 550±50550±50 nm | 650±50650±50 nm | None | 750±50750±50 nm | 60 m/pixel |

| Landsat-7 ETM+ 6 | 485±35485±35 nm | 560±40560±40 nm | 660±30660±30 nm | None | 835±65835±65 nm | 30 m/pixel | |

| Landsat-8 OLI 7 | 480±30480±30 nm | 560±30560±30 nm | 655±15655±15 nm | None | 865±15865±15 nm | 30 m/pixel | |

| IKONOS 8 | 480±35480±35 nm | 550±45550±45 nm | 665±33665±33 nm | None | 805±48805±48 nm | 3.28 m/pixel | |

| QuickBird 9 | 485±35485±35 nm | 560±40560±40 nm | 660±30660±30 nm | None | 830±70830±70 nm | 2.62 m/pixel | |

| WorldView-4 10 | 480±30480±30 nm | 560±30560±30 nm | 673±18673±18 nm | None | 850±70850±70 nm | 1.24 m/pixel | |

| Sentinel-2A 11 | 492±33492±33 nm | 560±18560±18 nm | 656±16656±16 nm | 745±49745±49 nm 12 | 833±53833±53 nm | 10 m/pixel | |

1 nm—nanometer. 2 Flight height at 120 m. 3 DJI Phantom 4 Multispectral Camera. 4 Landsat 4–5 Thematic Mapper. 5 Landsat 1–5 Multispectral Scanner. 6 Landsat 7 Enhanced Thematic Mapper Plus. 7 Landsat 8–9 Operational Land Imager. 8 IKONOS Multispectral Sensor. 9 QuickBird Multispectral Sensor. 10 WorldView-4 Multispectral Sensor. 11 Sentinel-2A Multispectral Sensor of Sentinel-2. 12 Sentinel-2A have 3 red-edge spectral band, with the spatial resolution 20 m/pixel.

Hyper-spectral and multi-spectral cameras are both imaging devices that can capture data across multiple wavelengths of light. However, there are some key differences between these two types of camera. Multi-spectral cameras typically capture data across a few discrete wavelength bands, while hyper-spectral cameras capture data across many more (often hundreds) of narrow and contiguous wavelength bands. Moreover, multi-spectral cameras generally have a higher spatial resolution than hyper-spectral cameras. Additionally, hyper-spectral cameras are typically more expensive than multi-spectral cameras. Table 2 provides a summary of several hyper-spectral cameras and their features and that were utilized in the papers herein reviewed.

Table 2.

Parameters of Hyper-spectral Cameras.

| Camera Name | Spectral Range | Spectral Bands | Spectral Sampling | FWHM 1 |

|---|---|---|---|---|

| Cubert S185 | 450∼950 nm | 125 bands | 4 nm | 8 nm |

| Headwall Nano-Hyperspec | 400∼1000 nm |

- Optical remote sensing satellites use visible and infrared light reflected by observation targets as a medium, such as panchromatic, colored, multi-spectral, and hyper-spectral remote sensing satellites. For these satellites, the target illumination conditions seriously affect the observation quality. Effective remote sensing imagery data only can be obtained when the satellite is flying over the observation target and when the target has good illumination conditions;

-

For optical remote sensing satellites, meteorological conditions, such as cloud cover, can also affect the observation result, which limits the selection of remote sensing images for research;

-

The resolution of remote sensing imagery data is limited by the distance between the satellite and the target. Since remote sensing satellites are far from ground targets, their image resolution is relatively low.

These constraints not only limit the scope of remote sensing research but also affect research directions. For instance, land cover/land use is a important aspect of remote sensing research. However, the research object of land cover/land use is limited by the spatial resolution of remote sensing image data. The current panchromatic cameras carried by remote sensing satellites have a resolution of 31 cm/pixel, which can only identify the type, location, and outline information of ground targets with a 3 m [28] size or more, such as buildings, roads, trees, ships, cars, etc. Ground objects with smaller sized aerial projections, such as people, animals, bicycles, etc., cannot be distinguished from the images, due to the relatively large pixel size. Similarly, change detection, which compares different information in images taken of the same target in two or more periods, is another example. Since the data used in many research articles are images taken by the same remote sensing satellite at different times along its orbit and at the same spatial location, the observation angles and spatial resolution of these images are similar, making them suitable for pixel-by-pixel information comparison methods. Hence, change detection has become a key direction in remote sensing research since the 1980s.

In the past decade, the emergence of multi-rotor unmanned aerial vehicles (UAV) has gradually changed the above-mentioned limitations in remote sensing research. This type of unmanned aircraft is pilotless, consumes no fuel, and does not require maintenance of turboshaft engines. These multi-copters are equipped with cheap but reliable brushless motors, which only require a small amount of electricity per flight. Users can schedule the entire flight process of a multi-copter, from takeoff to landing, and edit flight parameters such as passing points, flight speed, acceleration, and climbing rate. Compared to human-crewed aircraft such as helicopters and small fixed-wing aircraft, multi-rotor drones are more stable and reliable, and have several advantages for remote sensing applications.

2. UAV Platforms and Sensors

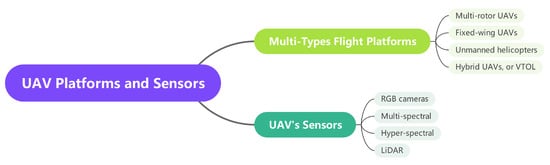

The hardware of a UAV remote sensing platform consists of two parts: the flight platform of the drone, and the sensors they are equipped with. Compared to remote sensing satellites, one of the most significant advantages of UAV remote sensing is the flexible replacement of sensors, which allows researchers to use the same drone to study the properties and characteristics of different objects by using different types of sensors. Figure 1 shows this sections’ structure, including the drone’s flight platform and the different types of sensors carried.

Figure 1.

UAV platforms and sensors.

2.1. UAV Platform

UAVs have been increasingly employed as a remote sensing observation platform for near-ground applications. Multi-rotor, fixed-wing, hybrid UAVs, and unmanned helicopters are the commonly used categories of UAVs. Among these, multi-rotor UAVs have gained the most popularity, owing to their numerous advantages. These UAVs, which come in various configurations, such as four-rotor, six-rotor, and eight-rotor, offer high safety during takeoff and landing and do not require a large airport or runway. They are highly controllable during flight and can easily adjust their flight altitude and speed. Additionally, some multi-rotor UAVs are equipped with obstacle detection abilities, allowing them to stop or bypass obstacles during flight. Figure 2 shows four typical UAV platforms.

Figure 2.

UAV platforms: (

a

) Multi-rotor UAV, (

b

) Fixed-wing UAV, (

c

) Unmanned Helicopter, (

d

) VTOL UAV.

2.2. Sensors Carried by UAVs

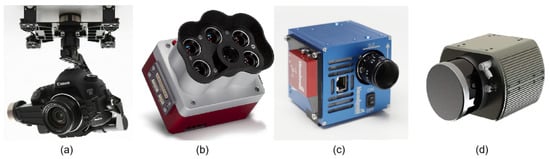

UAVs have been widely utilized as a platform for remote sensing, and the sensors carried by these aircraft play a critical role in data acquisition. Among the sensors commonly used by multi-rotor UAVs, there are two main categories: imagery sensors and three-dimensional information sensors. In addition to the two types of sensor that are commonly used, other types of sensors carried by drones include gas sensors, air particle sensors, small radars, etc. Figure 3 shows four typical UAV-carried sensors.

Figure 3.

Sensors carried by UAVs: (

a

) RGB Camera, (

b

) Multi-spectral Camera, (

c

) Hyper-spectral Camera, (

d

) LIDAR.

2.2.1. RGB Cameras

RGB cameras are a prevalent remote sensing sensor among UAVs, and two types of RGB cameras are commonly used on UAV platforms. The first type is the UAV-integrated camera, which is mounted on the UAV using its gimbal. This camera typically has a resolution of 20 megapixels or higher, such as the 20-megapixel 4/3-inch image sensor integrated into the DJI Mavic 3 aircraft and the 20-megapixel 1-inch image sensor integrated into AUTEL’s EVO II Pro V3 UAV. These cameras can capture high-resolution images at high frame rates, offering the advantages of being lightweight, compact, and having a long endurance. However, they cannot replace the original lens with telephoto and wide-angle lenses, which are required for remote and wide-angle environments. The second type of camera commonly carried by UAVs is a single lens reflex (SLR) camera, which enables the replacement of lenses with different focal lengths. UAVs equipped with SLR cameras offer the advantage of lens flexibility and can be used for remote sensing or wide-angle observation, making them a valuable tool for such applications. Nonetheless, SLR cameras are heavier and require gimbals for installation, necessitating a UAV with sufficient size and load capacity to accommodate them. For example, Liu et al. [29][42] utilized the SONY A7R camera, which provides multiple lens options, including zoom and fixed focus lenses, to produce a high-precision digital elevation model (DEM) in their research.2.2.2. Multi-Spectral and Hyper-Spectral Camera

Multi-spectral and hyper-spectral cameras are remote sensing instruments that collect the spectral radiation intensity of reflected sunlight at specific wavelengths. A multi-spectral camera is designed to provide data similar to that of multi-spectral remote sensing satellites, allowing for quantitative observation of the radiation intensity of reflected light on ground targets in specific sunlight bands. In processing multi-spectral satellite remote sensing image data, the reflected light intensity data of the same ground target in different spectral bands are used as remote sensing indices, such as the widely used normalized difference vegetation index (NDVI) [9] dimensionless index, which is defined as in Equation (1):NDVI=NIR−RedNIR+RedNDVI=NIR−RedNIR+Red

| nm | |||||||||

| 301 bands | 668 | ± | 16668±16 nm | 717±12 | 2 nm717±12 nm | 6 nm842±57842±57 nm | 8 cm/pixel 2 | ||

| Altum PT | 475±32475±32 | ||||||||

| RESONON PIKA L | nm | 560±27560±27 nm | 668±16668±16 nm | 717±12 | 400∼1000 nm717±12 nm | 281 bands842 | 2.1 nm± | 3.3 nm57842±57 nm | 2.5 cm/pixel 2 |

| Sentera 6X | 475±30475±30 nm | 550±20550±20 nm | 670±30670±30 nm | 715±10715±10 nm | 840±20840±20 nm | 5.2 cm/pixel 2 | |||

| RESONON PIKA XC2 | 400∼1000 nm | 447 bands | 1.3 nm | 1.9 nm | DJI P4 Multi 3 | 450±16450±16 nm | 560±16560±16 nm | 650±16650±16 nm | |

| HySpex Mjolnir S-620 | 970∼2500 nm | 730 | 300 bands | ± | 16730±16 nm | 840±26840±26 nm | |||

| 5.1 nm | unspecified | Landsat-5 TM 4 | 485±35485±35 nm | 560±40560±40 nm | 660±30660±30 nm | None |

1 FWHM–Full Width at Half Maximum of Spectral Resolution.
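Equation (1) is applied pixel by pixel to co-registered red and near-infrared bands, such as the Red and NIR bands of the multi-spectral cameras in Table 1. The following is a minimal pure-Python sketch. It assumes that both bands have already been converted to reflectance and stored as equally sized lists of rows. The function names `ndvi` and `ndvi_image` and the sample values are hypothetical, and practical workflows would usually operate on raster arrays instead.

```python
import math
from typing import List

Band = List[List[float]]  # one spectral band stored as rows of pixel values


def ndvi(nir: float, red: float) -> float:
    """Equation (1) for a single pixel: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    if total == 0:
        return math.nan  # undefined when both bands are zero (e.g. no-data pixels)
    return (nir - red) / total


def ndvi_image(nir_band: Band, red_band: Band) -> Band:
    """Apply Equation (1) pixel by pixel to two co-registered bands of equal shape."""
    if len(nir_band) != len(red_band) or any(
        len(n) != len(r) for n, r in zip(nir_band, red_band)
    ):
        raise ValueError("NIR and Red bands must have the same shape")
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical reflectance values for a 2 x 2 patch (illustrative, not measured data).
nir = [[0.50, 0.30], [0.02, 0.45]]
red = [[0.08, 0.25], [0.05, 0.06]]
print([[round(v, 3) for v in row] for row in ndvi_image(nir, red)])
# [[0.724, 0.091], [-0.429, 0.765]]
```

NDVI always lies between −1 and 1. Green vegetation gives high values because leaves reflect strongly in the near-infrared and absorb red light, while water, as in the third pixel, typically gives negative values.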

2.2.3. LIDAR

LIDAR, an acronym for "laser imaging, detection, and ranging", is a remote sensing technology that has become increasingly popular in recent years because it can generate precise, highly accurate 3D representations of the Earth's surface. LIDAR systems mounted on UAVs can collect data for a wide range of applications, including surveying [38,39], environmental monitoring [40], and infrastructure inspection [41,42,43]. A key advantage of LIDAR in UAV remote sensing is that it provides highly accurate and detailed elevation data. LIDAR measures the time laser pulses take to travel to the ground and back to the sensor, and from these measurements it can produce a high-resolution digital elevation model (DEM) of the terrain. These data can be used to create detailed 3D maps of the landscape, which are useful for applications such as flood modeling, land use planning, and urban design. Another benefit is that LIDAR pulses can partially penetrate vegetation cover, so detailed 3D models of forests and other vegetation types can be built. Multiple-return LIDAR records the arrival times of several reflections of a single emitted pulse, for example, from the top of the canopy, from branches, and from the ground. Comparing these return times yields information on the canopy structure of a forest. These data can be used for ecosystem monitoring, wildlife habitat assessment, and other environmental applications. Beyond mapping and environmental monitoring, LIDAR-equipped UAVs are also used for infrastructure inspection and construction site monitoring. High-resolution 3D point clouds of bridges, buildings, and other structures help engineers and construction professionals identify potential problems. Figure 4 shows mechanical scanning and solid-state LIDAR.

Figure 4.

LIDAR: (a) Mechanical Scanning LIDAR, (b) Solid-state LIDAR.
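LIDAR ranging and multiple returns can be sketched with the time-of-flight relation range = c·t/2, where t is a pulse's round-trip time and c is the speed of light. This minimal Python sketch rests on several assumptions: a vertical (nadir-pointing) pulse, a known sensor elevation, no atmospheric correction, and a first return from the canopy top with a last return from the ground. Real point-cloud processing classifies ground points and corrects for scan angle. The function names and sample values are hypothetical.

```python
from typing import List

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; atmospheric effects are ignored here


def tof_range(round_trip_s: float) -> float:
    """Distance to the reflecting surface; the pulse travels there and back, hence / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def return_elevations(sensor_alt_m: float, return_times_s: List[float]) -> List[float]:
    """Elevation of each return, assuming a vertical (nadir-pointing) pulse."""
    return [sensor_alt_m - tof_range(t) for t in return_times_s]


def canopy_height(sensor_alt_m: float, return_times_s: List[float]) -> float:
    """Gap between the first return (assumed canopy top) and last return (assumed ground)."""
    if not return_times_s:
        raise ValueError("the pulse produced no returns")
    elevations = return_elevations(sensor_alt_m, sorted(return_times_s))
    return elevations[0] - elevations[-1]  # earliest return is the highest surface


# A round-trip time of 800 ns corresponds to a range of about 119.917 m.
print(round(tof_range(800e-9), 3))  # 119.917

# Hypothetical pulse from a sensor at 500 m: canopy top 100 m below, ground 120 m below.
first = 2 * 100.0 / SPEED_OF_LIGHT
last = 2 * 120.0 / SPEED_OF_LIGHT
print(round(canopy_height(500.0, [last, first]), 3))  # 20.0
```

Repeating the ground (last-return) calculation over many pulses gives the points from which a DEM is interpolated. The first-return minus last-return difference gives a simple per-pulse estimate of canopy height.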