| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Miguel Mascarenhas Saraiva | -- | 2993 | 2023-05-08 04:16:05 |
| 2 | Peter Tang | Meta information modification | 2993 | 2023-05-08 07:11:38 |

Mascarenhas, M.; Afonso, J.; Ribeiro, T.; Andrade, P.; Cardoso, H.; Macedo, G. Artificial Intelligence in Digestive Healthcare. Encyclopedia. Available online: https://encyclopedia.pub/entry/43951 (accessed on 13 January 2026).


With modern society well entrenched in the digital era, the use of Artificial Intelligence (AI) to extract useful information from big data has become more commonplace in our daily lives than we perhaps realize. A number of medical specialties, such as Gastroenterology, rely heavily on medical images to establish disease diagnosis and patient prognosis, as well as to monitor disease progression. Moreover, some of these imaging techniques have been adapted so that they can potentially deliver therapeutic interventions. The digitalization of medical imaging has paved the way for important advances in this field, including the design of AI solutions to aid image acquisition and analysis.

Keywords: artificial intelligence; bioethics; medical imaging; big data; gastroenterology; capsule endoscopy; convolutional neural networks; privacy; data protection; bias; responsibility

1. Introduction

Medicine is advancing swiftly into the era of Big Data, particularly through the more widespread use of Electronic Health Records (EHRs) and the digitalization of clinical data, intensifying the demands on informatics solutions in healthcare settings. Like all major advances throughout history, the benefits on offer are associated with new rules of engagement. More than 60 years have passed since what is considered to have been the birth of Artificial Intelligence (AI) at the Dartmouth Summer Research Project [1]. This was an intensive 2-month project that set out to solve the problems faced when attempting to make a machine that can simulate human intelligence. However, it was not until some years later that the first efforts to design biomedical computing solutions based on AI were seen [2][3][4][5]. These efforts are beginning to bear fruit, and since the turn of the century, we have witnessed truly significant advances in this field, particularly in terms of medical image analysis [6][7][8][9][10][11][12][13].

2. The Emergence of AI Tools and the Questions They Raise

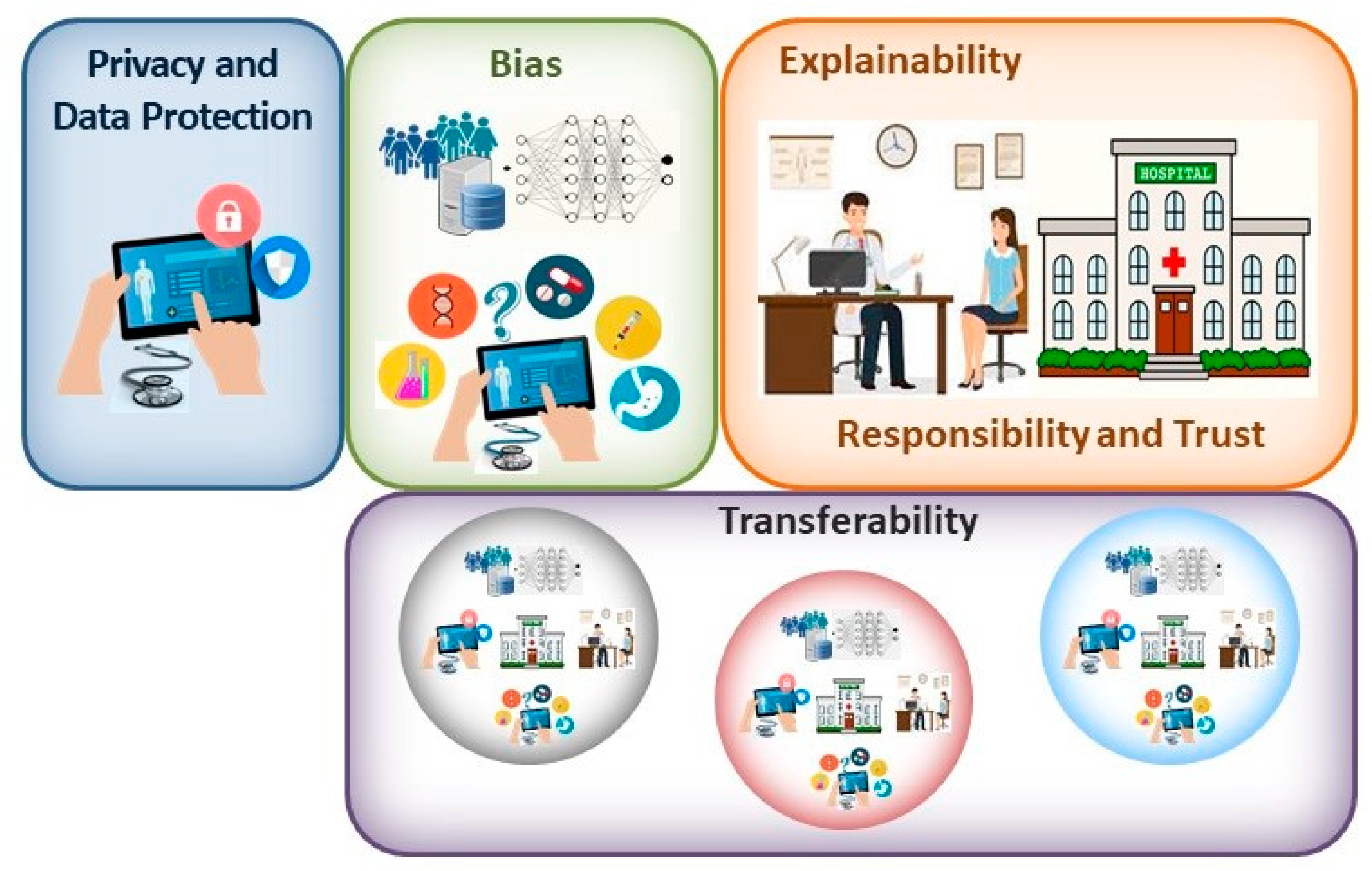

The potential benefits offered by any new technology must be weighed against the risks associated with its introduction. Accordingly, if the AI tools developed for use with capsule endoscopy (CE) are to fulfil their potential, they must offer guarantees against significant risks, perhaps the most important of which relate to privacy and data protection, unintentional bias in the data and design of the tools, transferability, explainability and responsibility (Figure 1). In addition, this is a disruptive technology that will require regulatory guidelines governing the appropriate use of these tools, guidelines that are on the whole yet to be established. However, the need for such regulation has not escaped healthcare regulators and, as in other fields, initiatives have been launched to explore the legal aspects surrounding the use of AI tools in healthcare that will be relevant to digestive medicine as well [14][15].

Figure 1. When contemplating the main bioethical issues associated with the clinical implementation of AI solutions, the principal concerns may be related to the privacy and protection of patient data; bias introduced in the design and utilization of these systems; the explainability of the tools employed; responsibility for the output and patient trust in their clinician; and finally, the transferability of these systems.

2.1. Privacy and Data Management for AI-Based Tools

Ensuring the privacy of medical information is increasingly challenging in the digital age. Not only are electronic data easily reproduced, but they are also vulnerable to remote access and manipulation, with economic incentives intensifying cyberattacks on health-related organisations [16]. Breaches of medical confidentiality can have important consequences for patients. They may not only lead to the shaming or alienation of patients with certain illnesses, but could also limit their employment opportunities or affect their health insurance costs. As medical AI applications become more common, and as more data are collected and shared more widely, the threat to privacy increases. The hope is that measures such as de-identification will help maintain privacy, although this would require the process to be adopted more generally in many areas of life. However, the inconvenience associated with these approaches makes this unlikely to occur. Moreover, re-identification of de-identified data is surprisingly easy [17], and thus, we must perhaps accept that introducing clinical AI applications will compromise our privacy a little. This would be more acceptable if all individuals had the same chance of benefitting from these tools, in the absence of any bias, but at present, this does not appear to be the case (see below). While some progress in personal data protection has been made (e.g., the General Data Protection Regulation 2016/679 in the E.U. or the Health Insurance Portability and Accountability Act in the USA [18][19]), further advances with stakeholders are required to specifically address the data privacy issues associated with the deployment of AI applications [20].
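To illustrate why de-identified records may still be re-identified, the sketch below counts how many records share each combination of quasi-identifiers. The records and the field names (`zip_code`, `birth_year`, `sex`) are invented for illustration and are not taken from any cited study; the check shown is a simple k-anonymity count, offered as one possible way to reason about the risk rather than as the method used in [17].

```python
from collections import Counter

# Hypothetical de-identified records: names removed, quasi-identifiers kept.
records = [
    {"zip_code": "4200", "birth_year": 1961, "sex": "F", "diagnosis": "Crohn's disease"},
    {"zip_code": "4200", "birth_year": 1961, "sex": "F", "diagnosis": "angioectasia"},
    {"zip_code": "4200", "birth_year": 1975, "sex": "M", "diagnosis": "ulcerative colitis"},
    {"zip_code": "4150", "birth_year": 1988, "sex": "F", "diagnosis": "coeliac disease"},
]

# Assumed quasi-identifiers: fields that are harmless alone but identifying together.
QUASI_IDENTIFIERS = ("zip_code", "birth_year", "sex")


def group_sizes(rows, keys=QUASI_IDENTIFIERS):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys=QUASI_IDENTIFIERS):
    """Return the size of the smallest group; k = 1 means someone is unique."""
    return min(group_sizes(rows, keys).values())


def unique_rows(rows, keys=QUASI_IDENTIFIERS):
    """Return the rows whose quasi-identifier combination appears only once."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


print(f"k-anonymity of the dataset: {k_anonymity(records)}")
print(f"records with a unique combination: {len(unique_rows(records))}")
```

A record whose combination is unique could, in principle, be linked to a named individual by anyone holding an external dataset with the same fields, which is why removing names alone offers limited protection.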

The main aim of novel healthcare interventions and technologies is to reduce morbidity and mortality, or to achieve similar health outcomes more efficiently or economically. The evidence favouring the implementation of AI systems in healthcare generally focuses on their relative accuracy compared to gold standards [21], and as such, fewer clinical trials have been carried out that measure their effects on outcomes [22][23]. This emphasis on accuracy may potentially lead to overdiagnosis [24], although this is a phenomenon that may be compensated for by considering other pathological, genomic and clinical data. Hence, it may be necessary to use more extended personal data from EHRs in AI applications to ensure the benefits of the tools are fully reaped and that they do not mislead physicians. One of the advantages of using such algorithms is that they might identify patterns and characteristics that are difficult for the human observer to perceive, and even those that may not currently be included in epidemiological studies, further enhancing diagnostic precision. However, this situation will create important demands on data management, on the safe and secure use of personal information and regarding consent for its use, accentuated by the large amount of quality data required to train and validate deep learning (DL) tools. Traditional opt-in/opt-out models of consent will be difficult to implement on the scale of these data and in such a dynamic environment [25]. Thus, addressing data-related issues will be fundamental to ensure a problem-free incorporation of AI tools into healthcare (Figure 1), perhaps requiring novel approaches to data protection.
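One reason why accuracy measured against a gold standard is an incomplete guide to clinical benefit is that the meaning of a positive result depends on how common the condition is. The worked calculation below applies Bayes' rule to hypothetical values of sensitivity, specificity and prevalence (none of them taken from the cited studies). Strictly, it illustrates false positives rather than overdiagnosis, but it shows how the same tool can behave very differently across settings.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a positive result is a true positive (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical tool with 95% sensitivity and 95% specificity,
# applied where the condition is common (20%) and where it is rare (1%).
for prevalence in (0.20, 0.01):
    ppv = positive_predictive_value(0.95, 0.95, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV = {ppv:.1%}")
```

Under these assumed figures, most positive results in the low-prevalence setting would be false alarms, which is one argument for evaluating such tools on patient outcomes and not on accuracy alone.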

One possible solution to the question of privacy and data management may come through the emergence of blockchain technologies in healthcare environments. Recent initiatives in this area may address some of the problems regarding data handling and management, not least as this technology could facilitate the safer, traceable and efficient handling of an individual’s clinical information [26]. The uniqueness of blockchain technology resides in the fact that it permits a massive, secure and decentralized public store of ordered records or events to be established [27]. By contrast, the local storage of medical information is a barrier to sharing this information and may also compromise its security. Blockchain technology enables data to be carefully protected and safely stored, assuring their immutability [28]. Thus, blockchain technology could help overcome the current fragmentation of a patient’s medical records, potentially benefitting the patient and healthcare professionals alike. It could also promote communication between healthcare professionals, both at the same centre and between different centres, radically reducing the costs associated with sharing medical data [29]. AI applications can benefit from different features of a blockchain, which offers trustworthiness, enhanced privacy and traceability. When the data used in AI applications (both for training and in general) are acquired from a reliable, secure and trusted platform, AI algorithms are likely to perform better.
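The immutability described above rests on chaining records together by cryptographic hashes. The following is a minimal sketch of that one idea, using only Python's standard library; it is a single-node hash chain with hypothetical record contents, and it deliberately omits the consensus and decentralization that a real blockchain platform would provide.

```python
import hashlib
import json


def block_hash(index, payload, previous_hash):
    """Hash the block contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Add a record to the chain, linking it to the block before it."""
    previous_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append(
        {
            "index": index,
            "payload": payload,
            "previous_hash": previous_hash,
            "hash": block_hash(index, payload, previous_hash),
        }
    )


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    for i, block in enumerate(chain):
        expected_previous = chain[i - 1]["hash"] if i > 0 else "0" * 64
        if block["previous_hash"] != expected_previous:
            return False
        recomputed = block_hash(block["index"], block["payload"], block["previous_hash"])
        if block["hash"] != recomputed:
            return False
    return True


# Hypothetical audit trail for one examination.
ledger = []
append_block(ledger, {"event": "capsule endoscopy performed", "record_id": "hypothetical-001"})
append_block(ledger, {"event": "report signed", "record_id": "hypothetical-001"})
print(is_valid(ledger))  # chain as written

ledger[0]["payload"]["event"] = "altered entry"
print(is_valid(ledger))  # chain after an entry has been edited
```

Because each block's hash depends on its contents and on the hash of the block before it, editing an earlier entry invalidates the chain unless every later block is also rewritten, which is what makes tampering detectable.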

2.2. The Issue of Bias in AI Applications

Among the most important issues faced by AI applications are those of bias and transferability [30]. Bias may be introduced through the training data employed or by decisions that are made during the design process [23][31][32][33]. In essence, machine learning (ML) systems are shaped by the data on which they are trained and validated, identifying patterns in large datasets that reproduce desired outcomes. AI systems are tailor-made, and they are only as good as the data with which they are trained. Consequently, when these data are incomplete, unrepresentative or poorly interpreted, the end result can be catastrophic [34][35]. One specific type of bias, spectrum bias, occurs when a diagnostic test is studied in individuals who differ from the population for which the test was intended. Indeed, spectrum bias has been recognized as a potential pitfall for AI applications in capsule endoscopy (CE) [36], as well as in the field of cardiovascular medicine [37]. Hence, AI learning models might not always be fully valid and applicable to new datasets. In this context, the integration of blockchain-enabled data from other healthcare platforms could serve to augment the number of what would otherwise be underrepresented cases in a dataset, thereby improving the training of the AI application and, ultimately, its successful implementation.
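Spectrum bias can be illustrated with a short calculation. In the sketch below, a detector is assumed to have a different sensitivity for subtle, moderate and severe lesions, and its overall sensitivity is computed for two populations with different case mixes. All figures are hypothetical and chosen only to show the effect.

```python
# Hypothetical per-subgroup sensitivity of a lesion detector.
SENSITIVITY_BY_SEVERITY = {"subtle": 0.70, "moderate": 0.90, "severe": 0.99}


def overall_sensitivity(case_mix, sensitivity=SENSITIVITY_BY_SEVERITY):
    """Weight subgroup sensitivity by the share of each subgroup in a population."""
    total = sum(case_mix.values())
    return sum(sensitivity[group] * share / total for group, share in case_mix.items())


# Proportion of diseased patients in each severity group (assumed values).
study_population = {"subtle": 0.10, "moderate": 0.30, "severe": 0.60}
intended_population = {"subtle": 0.60, "moderate": 0.30, "severe": 0.10}

print(f"study population:    {overall_sensitivity(study_population):.3f}")
print(f"intended population: {overall_sensitivity(intended_population):.3f}")
```

The detector itself is unchanged between the two runs; only the mix of cases differs, yet the headline sensitivity reported from the study population would overstate what could be expected in the population for which the test is intended.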

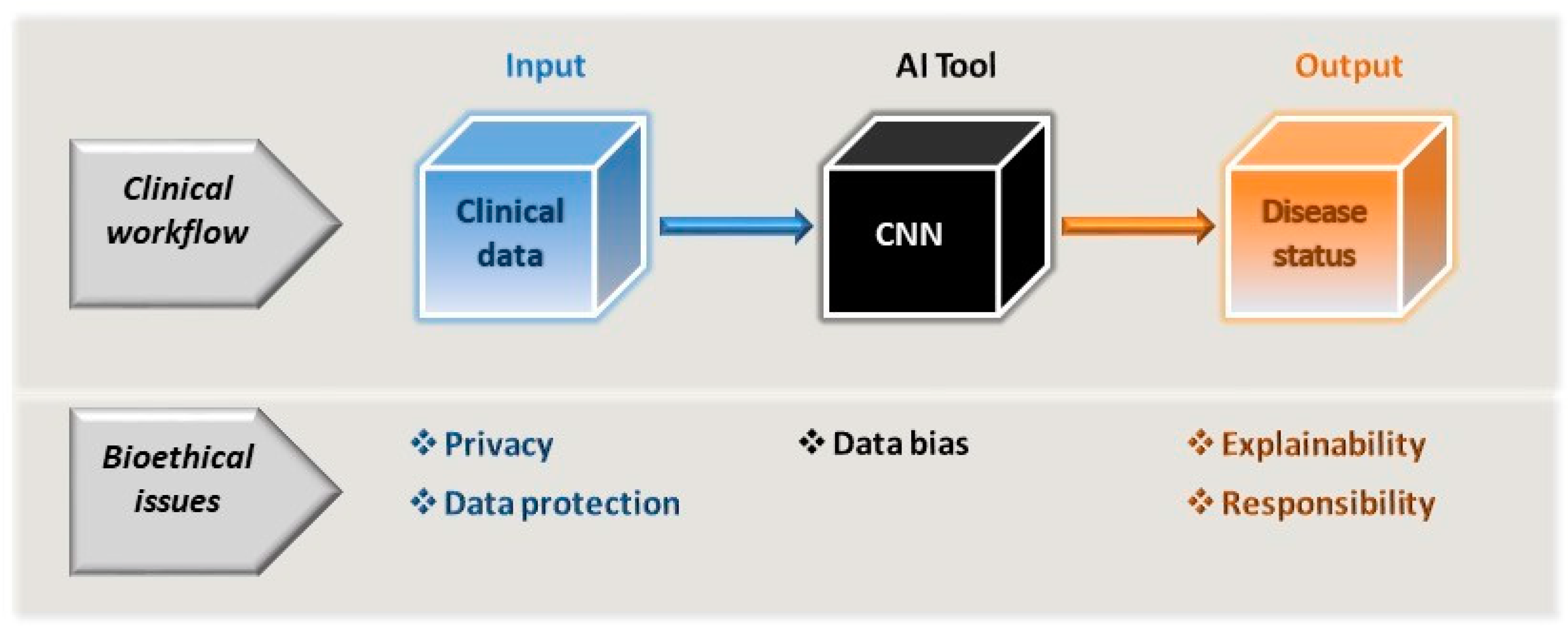

In real life, any inherent bias in clinical tools cannot be ignored and must be considered before validating AI applications. Likewise, overfitting of these models should not be ignored, a phenomenon that occurs when the model is too tightly tuned to the training data and, as a result, does not function correctly when fed with other data [38]. This can be avoided by using larger datasets for training, by not training the applications excessively, and possibly also by simplifying the models themselves. The way outcomes are identified is also entirely dependent on the data the models are fed. Indeed, there are examples of pathologies for which diagnostic performance is better in individuals with certain physical characteristics, such as lighter rather than darker skin, perhaps because this population is overrepresented in the training data. Consequently, it is possible that only those with fair skin will fully benefit from such tools [39][40]. Human decisions may also skew AI tools, such that they may act in discriminatory ways [35]. Disadvantaged groups may not be well-represented in the formative stages of evidence-based medicine [41], and unless this is rectified (and human interventions can combat this bias), it will almost certainly be carried over into AI tools. Hence, programmes will need to be established to ensure ethical AI development, such as those contemplated to detect and eliminate bias in data and algorithms [42][43]. While bias may emerge from poor data collection and evaluation, it can also emerge in systems trained on high-quality datasets. Aggregation bias can emerge from using a single population to design a model that is not optimal for another group [30][34]. Thus, the potential for bias must be faced and not ignored, searching for solutions to overcome this problem rather than rejecting the implementation of AI tools on this basis (Figure 1 and Figure 2).
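The advice not to train applications excessively is commonly put into practice through early stopping: training is halted once performance on held-out validation data stops improving. The sketch below shows the idea on hypothetical loss curves; the function name, the `patience` parameter and the numbers are illustrative assumptions, not part of any cited system.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return the index of the best epoch, stopping once the validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop: validation loss is no longer improving
    return best_epoch


# Hypothetical curves: training loss keeps falling while validation loss turns upward.
training_losses = [0.90, 0.60, 0.40, 0.28, 0.20, 0.14, 0.10]
validation_losses = [0.92, 0.66, 0.50, 0.47, 0.49, 0.55, 0.63]

print(early_stopping_epoch(validation_losses))
```

The widening gap between the two curves after the selected epoch is the signature of overfitting: the model continues to fit the training data more closely while its performance on unseen data deteriorates.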

Figure 2. As part of the clinician’s workflow and decision-making process, AI tools driven by convolutional neural networks (CNNs) can be considered a black box subject to data bias. As such, the AI tool itself cannot be allowed to introduce bias through its design or to exacerbate any bias inherent to the input data. The model input is essentially the patient’s clinical (or clinically related) data, which are subject to the constraints of privacy and data protection. Having used the tool, the clinician will extract information regarding the patient’s disease status; they must be able to accept and explain the output of the model and, along with the healthcare providers, accept the same level of responsibility for it as would be expected in any clinical workflow.

2.3. Explainability, Responsibility and the Role of the Clinician in the Era of AI-Based Medicine

Another critical issue with regard to the application of DL algorithms is that of explainability (Figure 2; [44][45]) and interpretability [22][23][31][46]. When an algorithm is explainable, what it does and the value it encodes can be readily understood [47]. However, it appears that less explainable algorithms may be more accurate [34][48], and thus, it remains unclear whether both features can be achieved at the same time. How algorithms arrive at a particular classification or recommendation may even be unclear to some extent to designers and users alike, not least due to the influence of training and of user interactions on the output of the algorithms. Indeed, in situations where algorithms are being used to address relatively complex medical situations and relationships, this can lead to what is referred to as “black-box medicine”: circumstances in which the basis for clinical decision making becomes less clear [49]. While the explanations a clinician may give for their decisions may not be perfect, they are responsible for these decisions and can usually offer a coherent explanation if necessary. Thus, should AI tools be allowed to make diagnostic, prognostic and management decisions that cannot be explained by a physician [44][45]? Some lack of explainability has been widely accepted in modern medicine, with clinicians prescribing aspirin as an analgesic without understanding its mechanism of action for nearly a century [50].
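One family of post hoc techniques that attempts to open the black box works by perturbing the input and observing how the output changes. The sketch below shows an occlusion map on a toy 4x4 image; `toy_model` is a hypothetical stand-in for a trained classifier, and the example is meant only to convey the principle, not to represent the methods reviewed in the cited literature.

```python
def toy_model(image):
    """Hypothetical stand-in for a trained classifier: the score is the mean
    intensity of the central 2x2 region of a 4x4 image."""
    centre = [image[r][c] for r in (1, 2) for c in (1, 2)]
    return sum(centre) / len(centre)


def occlusion_map(model, image, fill=0.0):
    """Score drop when each pixel is masked in turn; larger means more influential."""
    baseline = model(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy so the input is untouched
            occluded[r][c] = fill
            heat_row.append(baseline - model(occluded))
        heatmap.append(heat_row)
    return heatmap


image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.8, 0.8, 0.1],
    [0.1, 0.8, 0.8, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]

for row in occlusion_map(toy_model, image):
    print(["{:.2f}".format(value) for value in row])
```

Only the central pixels produce a non-zero score drop here, which matches how the toy model was defined. With a real network, such a map can indicate which image regions drove a prediction, although it does not by itself explain why those regions matter.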

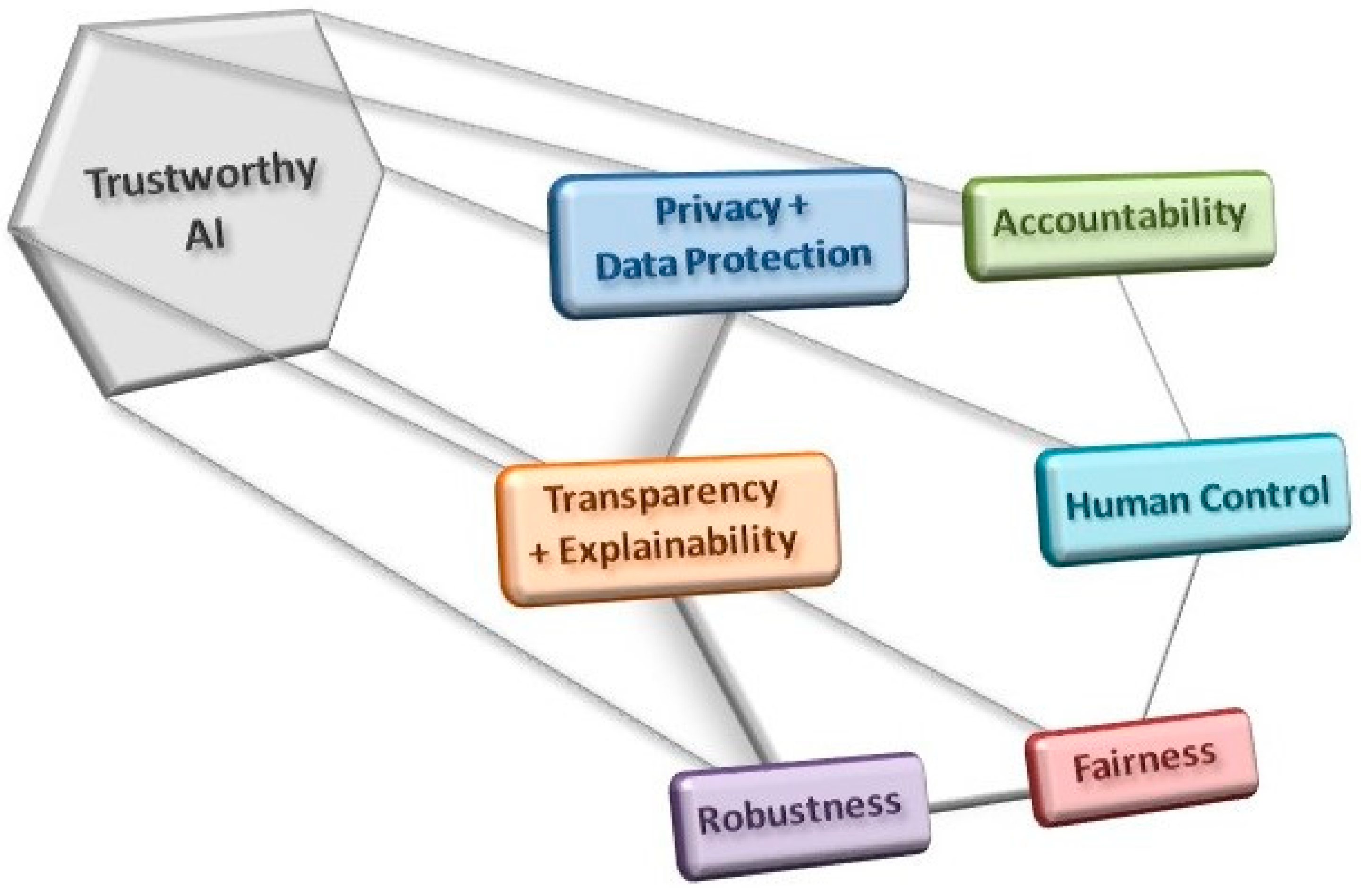

Other issues have been raised in association with the clinical introduction of AI applications, such as whether they will lead to greater surveillance of populations and how this should be controlled. Surveillance might compromise privacy, but it could also be beneficial, enhancing the data with which DL applications are trained, so this issue may need to be addressed in regulatory guidelines. Another issue that needs to be addressed is the extent to which non-medical specialists, such as computer scientists and IT specialists, will gain power in clinical settings. Finally, the fragility associated with reliance on AI systems and the potential for monopolies to be established in specific areas of healthcare will also have to be considered [34]. In summary, it will be important to respect a series of criteria when designing and implementing AI-based clinical solutions to ensure that they are trustworthy (Figure 3; [51]).

Figure 3. The use and development of AI tools must comply with a series of criteria in order to obey ethical guidelines and good practices in their implementation, all with a view to establishing trustworthy AI applications.

3. The Bright Side and Benefits of AI in the Clinic

We are clearly at an interesting moment in the history of medicine as we embrace the use of AI and big data as a further step in the era of medical digitalisation. Despite the many challenges that must be faced, this is clearly going to be a disruptive technology in many medical fields, affecting clinical decision making and the doctor–patient dynamic in what will almost certainly be a tremendously positive way. Different levels of automation can be achieved by introducing AI tools into clinical decision-making routines, selecting between fully automated procedures and aids to conventional protocols as specific situations demand. Some issues that must be addressed prior to the clinical implementation of AI tools have already been recognised in healthcare scenarios. For example, bias is an existing problem evident through inequalities in the care received by some populations. AI applications can be used to incorporate and examine large amounts of data, allowing inequalities to be identified and leveraging this technology to address these problems. Through training on different populations, it may be possible to identify specific features of these populations that have an influence on disease prevalence, and/or on its progression and prognosis. Indeed, the identification of population-specific features that are associated with disease will undoubtedly have an important impact on medical research. However, these systems pose other challenges that have not been faced previously and that will have to be resolved prior to their widespread incorporation into clinical decision-making procedures [52].

Automating procedures is commonly associated with greater efficiency, reduced costs and savings in time. The growing use of CE in digestive healthcare, and the adaptation of these systems to an increasing number of circumstances, generates a large amount of information, and each examination may require over an hour to analyse. This not only requires the dedication of a trained clinician or specialist, but it may also increase the chance of errors due to tiredness or monotony [53], not least as lesions may only be present in a small number of the tens of thousands of images obtained [54]. DL tools based on convolutional neural networks (CNNs) have been developed for use in conjunction with different CE techniques, aiming to detect lesions or abnormalities in the intestinal mucosa [55][56][57][58][59]. These algorithms are capable of reducing the time required to read these examinations to a matter of minutes (depending on the computational infrastructure available). Moreover, they have been shown to achieve accuracies not dissimilar to the current gold standard (expert clinician visual analysis), a performance that will most likely improve with time and use. In addition, some of these tools may be used in real time, with the advantages that this will offer to clinicians and patients alike [60]. As well as the savings in time and effort that can be achieved by implementing AI tools, these advances may to some extent also drive the democratization of medicine and help in the application of specialist tools in less well-developed areas. Consequently, the use of AI solutions might reduce the need for specialist training to be able to offer healthcare services in environments that may be more poorly equipped. This may represent an important complement to systems such as CE that involve the use of more portable apparatus capable of being used in areas with more limited access and where patients may not necessarily have access to major medical facilities. Indeed, it may even be possible to use CE in the patient’s home environment.

It should also be noted that enhancing the capacity to review and evaluate large numbers of images in a significantly shorter period of time may also offer important benefits in the field of clinical research. Drug discovery programmes and research into other clinical applications are notoriously slow and laborious. Thus, any tools that can help speed up the testing and screening capacities in research pipelines may have important consequences in the development of novel treatments.

References

- McCarthy, J.; Minsky, M.L.; Rochester, N.; Shannon, C.E. “A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence”. Dartmouth Conference Papers. 1955. Available online: http://jmc.stanford.edu/articles/dartmouth.html (accessed on 10 August 2022).

- Gupta, R.; Srivastava, D.; Sahu, M.; Tiwari, S.; Ambasta, R.K.; Kumar, P. Artificial Intelligence to Deep Learning: Machine Intelligence Approach for Drug Discovery. Mol. Divers 2021, 25, 1315–1360.

- Buchanan, B.G.; Shortliffe, E.H. Rule-Based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project; Addison-Wesley Series in Artificial Intelligence; Addison-Wesley: Boston, MA, USA, 1984.

- Clancey, W.J.; Shortliffe, E.H. Readings in Medical Artificial Intelligence: The First Decade; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1984.

- Kulikowski, C.A. Beginnings of Artificial Intelligence in Medicine (AIM): Computational Artifice Assisting Scientific Inquiry and Clinical Art—With Reflections on Present AIM Challenges. Yearb. Med. Inform. 2019, 28, 249–256.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88.

- Forslid, G.; Wieslander, H.; Bengtsson, E.; Wählby, C.; Hirsch, J.-M.; Stark, C.R.; Sadanandan, S.K. Deep Convolutional Neural Networks for Detecting Cellular Changes Due to Malignancy. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 82–89.

- Dong, X.; Zhou, Y.; Wang, L.; Peng, J.; Lou, Y.; Fan, Y. Liver Cancer Detection Using Hybridized Fully Convolutional Neural Network Based on Deep Learning Framework. IEEE Access 2020, 8, 129889–129898.

- Lyakhov, P.A.; Lyakhova, U.A.; Nagornov, N.N. System for the Recognizing of Pigmented Skin Lesions with Fusion and Analysis of Heterogeneous Data Based on a Multimodal Neural Network. Cancers 2022, 14, 1819.

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial Intelligence in Radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Oren, O.; Gersh, B.J.; Bhatt, D.L. Artificial Intelligence in Medical Imaging: Switching from Radiographic Pathological Data to Clinically Meaningful Endpoints. Lancet Digit. Health 2020, 2, e486–e488.

- Yoon, H.J.; Jeong, Y.J.; Kang, H.; Jeong, J.E.; Kang, D.-Y. Medical Image Analysis Using Artificial Intelligence. Korean Soc. Med. Phys. 2019, 30, 49–58.

- Schmidt-Erfurth, U.; Bogunovic, H.; Sadeghipour, A.; Schlegl, T.; Langs, G.; Gerendas, B.S.; Osborne, A.; Waldstein, S.M. Machine Learning to Analyze the Prognostic Value of Current Imaging Biomarkers in Neovascular Age-Related Macular Degeneration. Ophthalmol Retin. 2018, 2, 24–30.

- Ad Hoc Committee on Artificial Intelligence (CAHAI). Possible Elements of a Legal Framework on Artificial Intelligence, Based on the Council of Europe’s Standards on Human Rights, Democracy and the Rule of Law; 2021. Available online: https://www.coe.int/en/web/artificial-intelligence/cahai# (accessed on 10 July 2022).

- European Commission. Proposal For a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts; COM/2021/206 Final; European Commission: Brussels, Belgium, 2021.

- Kruse, C.S.; Frederick, B.; Jacobson, T.; Monticone, D.K. Cybersecurity in Healthcare: A Systematic Review of Modern Threats and Trends. Technol. Health Care 2017, 25, 1–10.

- Gymrek, M.; McGuire, A.L.; Golan, D.; Halperin, E.; Erlich, Y. Identifying Personal Genomes by Surname Inference. Science 2013, 339, 321–324.

- Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation). OJ L 119, 4.5.2016, p. 1–88. Available online: http://data.europa.eu/eli/reg/2016/679/oj (accessed on 10 June 2021).

- United States Health Insurance Portability and Accountability Act of 1996; Public Law 104-191; US Statut Large, United States Government Printing Office: Washington, DC, USA, 1996; Volume 110, pp. 1936–2103.

- Geis, J.R.; Brady, A.; Wu, C.C.; Spencer, J.; Ranschaert, E.; Jaremko, J.L.; Langer, S.G.; Kitts, A.B.; Birch, J.; Shields, W.F.; et al. Ethics of Artificial Intelligence in Radiology: Summary of the Joint European and North American Multisociety Statement. Insights Imaging 2019, 10, 101.

- Houssami, N.; Lee, C.I.; Buist, D.S.M.; Tao, D. Artificial Intelligence for Breast Cancer Screening: Opportunity or Hype? Breast 2017, 36, 31–33.

- Cabitza, F.; Rasoini, R.; Gensini, G.F. Unintended Consequences of Machine Learning in Medicine. JAMA 2017, 318, 517.

- Fenech, M.; Strukelj, N.; Buston, O. Ethical, Social, and Political Challenges of Artificial Intelligence in Health; Future Advocacy. Wellcome Trust: London, UK, 2018.

- Houssami, N. Overdiagnosis of Breast Cancer in Population Screening: Does It Make Breast Screening Worthless? Cancer Biol. Med. 2017, 14, 1–8.

- Racine, E.; Boehlen, W.; Sample, M. Healthcare Uses of Artificial Intelligence: Challenges and Opportunities for Growth. Healthc Manag. Forum 2019, 32, 272–275.

- Mascarenhas, M.; Santos, A.; Macedo, G. Fostering the Incorporation of Big Data and Artificial Intelligence Applications into Healthcare Systems by Introducing Blockchain Technology into Data Storage Systems. In Artificial Intelligence in Capsule Endoscopy, A Gamechanger for a Groundbreaking Technique; Mascarenhas, M., Cardoso, H., Macedo, G., Eds.; Elsevier: Amsterdam, The Netherlands.

- Gammon, K. Experimenting with Blockchain: Can One Technology Boost Both Data Integrity and Patients’ Pocketbooks? Nat. Med. 2018, 24, 378–381.

- Kuo, T.-T.; Kim, H.-E.; Ohno-Machado, L. Blockchain Distributed Ledger Technologies for Biomedical and Health Care Applications. J. Am. Med. Inform. Assoc. 2017, 24, 1211–1220.

- European Society of Radiology (ESR); Kotter, E.; Marti-Bonmati, L.; Brady, A.P.; Desouza, N.M. ESR White Paper: Blockchain and Medical Imaging. Insights Imaging 2021, 12, 82.

- Suresh, H.; Guttag, J.V. A Framework for Understanding Sources of Harm throughout the Machine Learning Life Cycle. arXiv 2019, arXiv:1901.10002.

- Loder, J.; Nicholas, L. Confronting Dr. Robot: Creating a People-Powered Future for AI in Health; NESTA Health Lab: London, UK, 2018.

- Coiera, E. On Algorithms, Machines, and Medicine. Lancet Oncol. 2019, 20, 166–167.

- Taddeo, M.; Floridi, L. How AI Can Be a Force for Good. Science 2018, 361, 751–752.

- Sparrow, R.; Hatherley, J. The Promise and Perils of AI in Medicine. Ijccpm 2019, 17, 79–109.

- O’Neil, C. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy; Penguin Random House: London, UK, 2016.

- Mascarenhas, M. Artificial Intelligence and Capsule Endoscopy: Unravelling the Future. Ann. Gastroenterol. 2021, 34, 300–309.

- Mincholé, A.; Rodriguez, B. Artificial Intelligence for the Electrocardiogram. Nat. Med. 2019, 25, 22–23.

- Ying, X. An Overview of Overfitting and Its Solutions. J. Phys. Conf. Ser. 2019, 1168, 022022.

- Adamson, A.S.; Smith, A. Machine Learning and Health Care Disparities in Dermatology. JAMA Dermatol. 2018, 154, 1247.

- Burlina, P.; Joshi, N.; Paul, W.; Pacheco, K.D.; Bressler, N.M. Addressing Artificial Intelligence Bias in Retinal Diagnostics. Trans. Vis. Sci. Technol. 2021, 10, 13.

- Rogers, W.A. Evidence Based Medicine and Justice: A Framework for Looking at the Impact of EBM upon Vulnerable or Disadvantaged Groups. J. Med. Ethics 2004, 30, 141–145.

- Yala, A.; Lehman, C.; Schuster, T.; Portnoi, T.; Barzilay, R. A Deep Learning Mammography-Based Model for Improved Breast Cancer Risk Prediction. Radiology 2019, 292, 60–66.

- IBM Policy Lab. Bias in AI: How We Build Fair AI Systems and Less-Biased Humans. Available online: https://www.ibm.com/policy/bias-in-ai/feb2018 (accessed on 3 June 2021).

- Holzinger, A.; Biemann, C.; Pattichis, C.S.; Kell, D.B. What Do We Need to Build Explainable AI Systems for the Medical Domain? arXiv preprint 2017, arXiv:1712.09923.

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I. Explainability for Artificial Intelligence in Healthcare: A Multidisciplinary Perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 310.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2021, 23, 18.

- Cowls, J.; Floridi, L. Prolegomena to a White Paper on an Ethical Framework for a Good AI Society. SSRN J. 2018.

- Burrell, J. How the Machine ‘Thinks’: Understanding Opacity in Machine Learning Algorithms. Big Data Soc. 2016, 3, 205395171562251.

- Price, W.N., II. Black-Box Medicine. Harv. J. Law Technol. 2014, 28, 419.

- London, A.J. Artificial Intelligence and Black-Box Medical Decisions: Accuracy versus Explainability. Hastings Cent. Rep. 2019, 49, 15–21.

- High-Level Expert Group on AI (Set Up by the European Commission). Ethics Guidelines for Trustworthy AI. 2019. Available online: https://ec.europa.eu/futurium/en/ai-alliance-consultation.1.html (accessed on 5 August 2021).

- European Parliament, Directorate-General for Parliamentary Research Services; Fox-Skelly, J.; Bird, E.; Jenner, N.; Winfield, A.; Weitkamp, E.; Larbey, R. The Ethics of Artificial Intelligence: Issues and Initiatives; Scientific Foresight Unit (STOA), European Parliamentary Research Service, European Parliament: Brussels, Belgium, 2020.

- Beg, S.; Card, T.; Sidhu, R.; Wronska, E.; Ragunath, K.; Ching, H.-L.; Koulaouzidis, A.; Yung, D.; Panter, S.; Mcalindon, M.; et al. The Impact of Reader Fatigue on the Accuracy of Capsule Endoscopy Interpretation. Dig. Liver Dis. 2021, 53, 1028–1033.

- Eliakim, R.; Yassin, K.; Niv, Y.; Metzger, Y.; Lachter, J.; Gal, E.; Sapoznikov, B.; Konikoff, F.; Leichtmann, G.; Fireman, Z.; et al. Prospective Multicenter Performance Evaluation of the Second-Generation Colon Capsule Compared with Colonoscopy. Endoscopy 2009, 41, 1026–1031.

- Mascarenhas Saraiva, M.; Ribeiro, T.; Afonso, J.; Ferreira, J.P.S.; Cardoso, H.; Andrade, P.; Parente, M.P.L.; Jorge, R.N.; Macedo, G. Artificial Intelligence and Capsule Endoscopy: Automatic Detection of Small Bowel Blood Content Using a Convolutional Neural Network. GE Port. J. Gastroenterol. 2022, 29, 331–338.

- Mascarenhas, M.; Afonso, J.; Ribeiro, T.; Cardoso, H.; Andrade, P.; Ferreira, J.P.S.; Saraiva, M.M.; Macedo, G. Performance of a Deep Learning System for Automatic Diagnosis of Protruding Lesions in Colon Capsule Endoscopy. Diagnostics 2022, 12, 1445.

- Mascarenhas, M.; Ribeiro, T.; Afonso, J.; Ferreira, J.P.S.; Cardoso, H.; Andrade, P.; Parente, M.P.L.; Jorge, R.N.; Mascarenhas Saraiva, M.; Macedo, G. Deep Learning and Colon Capsule Endoscopy: Automatic Detection of Blood and Colonic Mucosal Lesions Using a Convolutional Neural Network. Endosc. Int. Open 2022, 10, E171–E177.

- Afonso, J.; Saraiva, M.M.; Ferreira, J.P.S.; Cardoso, H.; Ribeiro, T.; Andrade, P.; Parente, M.; Jorge, R.N.; Macedo, G. Automated Detection of Ulcers and Erosions in Capsule Endoscopy Images Using a Convolutional Neural Network. Med. Biol. Eng. Comput. 2022, 60, 719–725.

- Ribeiro, T.; Mascarenhas, M.; Afonso, J.; Cardoso, H.; Andrade, P.; Lopes, S.; Ferreira, J.; Mascarenhas Saraiva, M.; Macedo, G. Artificial Intelligence and Colon Capsule Endoscopy: Automatic Detection of Ulcers and Erosions Using a Convolutional Neural Network. J. Gastroenterol. Hepatol. 2022, 37, 2282–2288.

- Rondonotti, E.; Spada, C.; Adler, S.; May, A.; Despott, E.J.; Koulaouzidis, A.; Panter, S.; Domagk, D.; Fernandez-Urien, I.; Rahmi, G.; et al. Small-Bowel Capsule Endoscopy and Device-Assisted Enteroscopy for Diagnosis and Treatment of Small-Bowel Disorders: European Society of Gastrointestinal Endoscopy (ESGE) Technical Review. Endoscopy 2018, 50, 423–446.

Subjects: Gastroenterology & Hepatology

Entry Collection: Gastrointestinal Disease

Revisions: 2 times

Update Date: 08 May 2023
