Kang, J.; Kang, J.; Kim, J.; Jeon, K.; Chung, H.; Park, B. Neural Architecture Search: A Computer Vision Perspective. Encyclopedia. Available online: https://encyclopedia.pub/entry/43757 (accessed on 08 February 2026).

Kang, J., Kang, J., Kim, J., Jeon, K., Chung, H., & Park, B. (2023, May 04). Neural Architecture Search: A Computer Vision Perspective. In Encyclopedia. https://encyclopedia.pub/entry/43757

Deep learning (DL) has been widely studied across the globe, especially with respect to training methods and network structures, and has proven highly effective in a wide range of tasks and applications, including image, speech, and text recognition. One important driver of this advancement is the sustained effort to design and improve neural architectures. However, designing such architectures requires the combined knowledge and know-how of experts from each relevant discipline and a series of trial-and-error steps. In this light, automated neural architecture search (NAS) methods are attracting increasing attention.

artificial intelligence (AI)

deep learning (DL)

convolutional neural network (CNN)

automated machine learning (Auto-ML)

neural architecture search (NAS)

1. Introduction

The artificial neural network (ANN) used today is the product of the combined efforts of many researchers, beginning with McCulloch and Pitts [1], who first developed the concept in 1943. The convolutional neural network (CNN), among the most widely used neural network structures in computer vision (CV) applications, was first introduced in a 1989 study by LeCun et al. [2], the line of work that later led to LeNet-5. At the time, however, limited hardware computing power made CNNs ineffective for complex and sophisticated tasks such as object recognition and detection. Much later, AlexNet [3] was introduced at the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012; it made an impressive debut because it overcame the earlier limitations of deep learning (DL) by making the most of GPU computing. With its unparalleled performance, AlexNet ushered in a new era of DL in earnest [4]. DL has attracted significant attention from researchers thanks to its ability to automatically extract valid feature vectors from image data. This automatic extraction requires tuning the hyperparameters of DL. Thus far, extensive research aimed at improving performance has covered a wide range of hyperparameters, such as network structures, weight initialization, activation functions, operators, and loss functions [5][6][7][8][9]. However, applying DL requires technical expertise and understanding, and it incurs a significant amount of engineering time. To address these issues, automated machine learning (AutoML) has recently garnered significant attention.

The AutoML process is divided into multiple steps: data preparation, feature engineering, model generation, and model estimation [10]. The first step, data preparation, is the process in which data are collected and factors that may negatively affect learning, e.g., noise, are removed so that a given model can be trained to perform the target tasks. The second step, feature engineering, extracts features from the given data for use in the model. Feature construction techniques, which improve the representational ability of the model, and feature selection techniques, which help avoid overfitting, are applied in parallel. Conventional feature engineering methods include SURF [11], SIFT [12], HOG [13], the Kalman filter [14], and the Gabor filter. Recently, CNNs and recurrent neural networks (RNNs) have been used to extract high-level features. The third step, model generation, is performed to obtain the optimum output based on the extracted features. Model generation can be further subdivided into search space, hyperparameter optimization, and architecture optimization. During this process, model optimization is performed in combination with the model estimation step.

2. Neural Architecture Search

2.1. Search Space

Viewed simply, a neural network is a graph composed of many operations that together transform input data into the expected output data. Such a network can be represented by a directed acyclic graph (DAG), as in Equation (1) [15]:

$$s^{(t)} = o^{(t)}\left(s^{(t-1)}\right) \tag{1}$$

where $s^{(t)} \in S$ is the state at step $t$ (corresponding to the $k$th node of the neural network), $s^{(t-1)}$ is the previous state, and $o^{(t)} \in O$ is the operation associated with it. $O$ includes various operators such as convolution, deconvolution, skip-connection, pooling, and activation functions.
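The chained view of states and operations can be illustrated with a minimal sketch. The operator names in `OPS` and the helper `forward` are hypothetical toy stand-ins acting on a list of floats, not operators or code from the cited works; the sketch only shows how each state is produced by applying one operation to the previous state.

```python
from typing import Callable, Dict, List

State = List[float]

# Hypothetical candidate operator set O (toy stand-ins, assumed for illustration).
OPS: Dict[str, Callable[[State], State]] = {
    "identity": lambda s: list(s),                   # skip-connection
    "relu": lambda s: [max(0.0, x) for x in s],      # activation function
    "scale2": lambda s: [2.0 * x for x in s],        # stand-in for a learned layer
    "avg_pool2": lambda s: [(s[i] + s[i + 1]) / 2.0  # non-overlapping average pooling
                            for i in range(0, len(s) - 1, 2)],
}


def forward(architecture: List[str], s0: State) -> List[State]:
    """Apply one operation per step to the previous state and keep every state."""
    states = [s0]
    for name in architecture:
        states.append(OPS[name](states[-1]))
    return states


states = forward(["scale2", "relu", "avg_pool2"], [1.0, -2.0, 3.0, 4.0])
print(states[-1])  # [1.0, 7.0]
```

In this toy setting an architecture is just the ordered list of operator names, which is the object a search strategy would vary.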

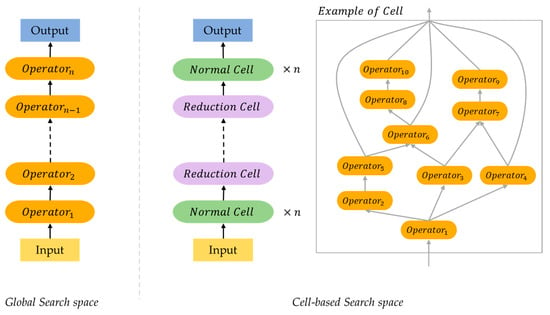

A neural architecture search space consists of a set of candidate operators and a restricted space in which the operators are placed. The candidate operators depend on the task of the neural network. Most NAS research competes on performance through classification challenges in the CV field; in this case, convolution, dilated convolution [16], depth-wise convolution [17], etc., are widely used in the candidate set. Search spaces come in several forms, such as sequential layer-wise operations [18][19], hierarchical branch connection operations [20], global search space, cell-based search space [21], and memory-bank representation [22]. This text discusses two of them: the global search space and the cell-based search space. Figure 1 shows a simple example structure of both.

Figure 1. Simplified example of global structured neural architecture and cell structured neural architecture.

2.1.1. Global Search Space

Searching the global search space is equivalent to finding the series of operators O of the DAG. In other words, the task is to determine the operator set with the best performance for a predefined number of nodes between the initial input and the final output. Searching the entire structure has unavoidable disadvantages: most deep neural networks used in recent research consist of tens to hundreds of operators, so applying the global search method to such networks requires considerable GPU time.
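To give a rough sense of why global search is costly, the following sketch counts candidates under a simplifying assumption: a purely sequential chain in which each node independently picks one operator and no skip connections are searched. The figure of five candidate operators is illustrative only and is not taken from the source.

```python
def chain_search_space_size(num_ops: int, num_nodes: int) -> int:
    """Number of distinct operator sequences for a sequential chain (assumed model)."""
    return num_ops ** num_nodes


# Illustrative numbers: 5 candidate operators, networks of 10, 20, and 50 nodes.
for num_nodes in (10, 20, 50):
    size = chain_search_space_size(5, num_nodes)
    print(f"{num_nodes} nodes -> {size} candidates ({len(str(size))} digits)")
```

Even under this restricted model the count grows exponentially with depth, and each candidate would in principle need training before it can be scored.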

2.1.2. Cell-Based Search Space

The cell-based search space is based on the fact that recent successful artificial neural network structures [23][24][25] consist of repeated cells (also known as modules, blocks, or units). Typical examples of such cell structures are the residual cell of ResNet [23] and the dense cell of DenseNet [24]. Since these structures are flexible in the number of cells they stack, the size of the network can be chosen to suit a given scenario.

NAS research using a cell-based search space was introduced with NASNet [21]. In NASNet, a reduction cell and a normal cell are found using an RNN and reinforcement learning, and the final architecture is determined by stacking these cells several times. In [21], both computational cost and performance were improved compared to the state-of-the-art alternatives (e.g., Inception v3 [26], ResNet [23], DenseNet [24], ShuffleNet [27], etc.).
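The stacking step can be sketched as follows. The layout rule used here (a fixed number of normal cells per stage, with one reduction cell between consecutive stages) is an assumption chosen for illustration, and `stack_cells` is a hypothetical helper rather than code from [21].

```python
from typing import List


def stack_cells(num_stages: int, normal_per_stage: int) -> List[str]:
    """Build a cell layout: normal cells in each stage, a reduction cell between stages."""
    layout: List[str] = []
    for stage in range(num_stages):
        layout.extend(["normal"] * normal_per_stage)
        if stage < num_stages - 1:  # no reduction cell after the last stage
            layout.append("reduction")
    return layout


small = stack_cells(num_stages=3, normal_per_stage=2)
large = stack_cells(num_stages=3, normal_per_stage=6)
print(len(small), len(large))  # 8 20
```

Only the two cell designs need to be searched; the depth of the final network is then set by the stacking parameters, which is what makes the size adjustable per scenario.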

2.2. Search Strategy

Once the search space is determined, a strategy is needed to search it for the optimal neural architecture. Techniques ranging from traditional to recent ones are being studied to automate architecture optimization. After the architecture search, most NAS methods use grid search, random search, Bayesian optimization, or gradient-based optimization for hyperparameter optimization [10]. Table 1 compares the performance and efficiency of NAS algorithms on the CIFAR-10 and ImageNet datasets.

2.2.1. Evolutionary Algorithm

An evolutionary algorithm (EA) is a metaheuristic optimization algorithm inspired by evolutionary mechanisms in biology such as reproduction, mutation, and recombination. Specifically, an EA searches for the optimal solution by repeating three steps: evaluating the fitness of individuals, selecting individuals for reproduction, and applying crossover and mutation operations. Because this algorithm makes no assumption about the underlying fitness landscape, it can approximate solutions well for many types of problems.

One of the most popular types of EA is the genetic algorithm (GA). The GA aims to transmit superior genetic information to the next generation by selecting competitive individuals from the current generation. In NAS, the genetic information describes the neural network architecture, and each generation involves a training process to evaluate the performance of the candidate architectures. This per-generation training is the main limitation of EA-based NAS methods, which require significant GPU time. The schematic process of GA-based NAS can be summarized as Algorithm 1.

Algorithm 1. GA-based NAS algorithm.

In GA-based NAS, it is important to optimize the structure of the neural network through its genetic representation. Rikhtegar et al. [28] used a genetic representation that encodes the number of filters, the size of filters, the interconnection between feature maps, and the activation functions as integers. With this encoding it is possible to find optimal parameters of a neural network architecture, but the number of layers is fixed and only a pre-defined connection structure can be searched. Xie et al. [29] designed a genetic representation focusing on the connection structure of convolutional layers. Their encoding expresses the validity of the connection between each pair of convolutional layers as a fixed-length binary string. However, unlike [28], multiscale information cannot be utilized because the number and size of filters are fixed.
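The evaluate, select, crossover, and mutate loop described in this section can be sketched on a fixed-length binary genome. This is a generic illustration, not a reproduction of Algorithm 1 or of the encodings in [28][29]: the `fitness` function is a toy stand-in (an assumption) for training the decoded network and measuring its validation accuracy, and all function names and default parameters are hypothetical.

```python
import random
from typing import List, Tuple

Genome = List[int]  # fixed-length binary string, one bit per candidate connection


def fitness(genome: Genome) -> float:
    """Toy proxy (assumption): a real NAS run would train and validate a network here."""
    return sum(genome) / len(genome)


def tournament(population: List[Genome], rng: random.Random, k: int = 3) -> Genome:
    """Select the fittest of k randomly drawn individuals."""
    return max(rng.sample(population, k), key=fitness)


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """One-point crossover between two parents."""
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:]


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def evolve(pop_size: int = 20, genome_len: int = 12, generations: int = 30,
           mutation_rate: float = 0.05, seed: int = 0) -> Tuple[Genome, float]:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)
    for _ in range(generations):
        population = [
            mutate(crossover(tournament(population, rng),
                             tournament(population, rng), rng),
                   mutation_rate, rng)
            for _ in range(pop_size)
        ]
        generation_best = max(population, key=fitness)
        if fitness(generation_best) > fitness(best):
            best = generation_best
    return best, fitness(best)


best_genome, best_score = evolve()
print(best_genome, best_score)
```

In an actual NAS setting, every call to `fitness` hides a full training run, which is where the GPU cost noted above comes from.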

2.2.2. Reinforcement Learning

Reinforcement learning (RL), inspired by behavioral psychology, is a machine learning method in which agents learn in a predefined environment to select actions that maximize the reward obtainable from the current state. The work of Zoph et al. [18] is one of the earliest applications of reinforcement learning to NAS. They argued that a recurrent neural network (RNN) can be used as a NAS controller, based on the fact that the structure and connectivity of a neural network can be expressed as a variable-length string. The RNN-based controller has the strength of adapting flexibly to the architecture structure. In addition, the overall process was accelerated by using asynchronous parameter updates for the controller and distributed training for the child networks [30]. In the same year, Baker et al. [19] proposed MetaQNN, which combines Q-learning with two additional techniques (an ε-greedy strategy and experience replay).

Table 1. Performance and efficiency of NAS algorithms on CIFAR-10 and ImageNet. The ‘S.S.’ column indicates the search strategy. A dash (-) indicates that the corresponding information is not provided in the original paper. A blank cell is merged with the cell above it and shares its value. Performance is reported as accuracy (ACC, %).

| S.S. | Application | CIFAR-10 Top-1 | CIFAR-10 GPU Days | CIFAR-10 #GPUs | ImageNet Top-1/5 | ImageNet GPU Days | ImageNet #GPUs | Open Source |
|---|---|---|---|---|---|---|---|---|
| M | ResNet [23] | 93.57 | - | - | 80.62/95.51 | - | - | https://github.com/KaimingHe/deep-residual-networks, accessed on 30 July 2016 |
| M | DenseNet [24] | 96.54 | - | - | 78.54/94.46 | - | - | https://github.com/liuzhuang13/DenseNet, accessed on 11 September 2022 |
| EA | GeNet#2 [29] | 92.9 | 17 | - | 72.13/90.26 | 17 | - | - |
| RL | MetaQNN [19] | 93.08 | 100 | 10 | - | - | - | https://github.com/bowenbaker/metaqnn, accessed on 19 July 2017 |
| RL | BlockQNN-Connection more filter [31] | 97.65 | 96 | 32 (1080Ti) | 81.0/95.42 | 96 | 32 (1080Ti) | - |
| RL | BlockQNN-Depthwise, N = 3 [31] | 97.35 | | | | | | |
| RL | ENAS + macro [32] | 96.13 | 0.32 | 1 | - | - | - | https://github.com/melodyguan/enas, accessed on 2 May 2019 |
| RL | ENAS + micro + c/o [32] | 97.11 | 0.45 | 1 | | | | |
| GD | DARTS (1st order) + c/o [33] | 97.00 | 1.5 | 4 (1080Ti) | 73.3/81.3 | 4 | - | https://github.com/quark0/darts, accessed on 12 October 2018 |
| GD | DARTS (2nd order) + c/o [33] | 97.23 | 4 | | | | | |
| GD | DARTS+ [34] | 97.80 | 0.4 | 1 (V100) | 76.10/92.80 | 6.8 | 1 (P100) | - |
| GD | DARTS+ (Large) [34] | 98.32 | - | | | | | |
| GD | Fair-DARTS [35] | 97.46 | - | - | 75.6/92.60 | 3 | - | https://github.com/xiaomi-automl/FairDARTS, accessed on 10 August 2020 |
| GD | P-DARTS + c/o [36] | 97.50 | 0.3 | - | 75.6/92.60 | 0.3 | - | https://github.com/chenxin061/pdarts, accessed on 18 February 2020 |
| GD | P-DARTS (large) + c/o [36] | 97.75 | 0.3 | - | | | | |
| GD | Robust DARTS [37] | 97.05 | - | - | - | - | - | https://github.com/automl/RobustDARTS, accessed on 21 July 2020 |
| GD | Sharp DARTS [38] | 98.07 | 0.8 | 1 (2080Ti) | 74.9/92.20 | 0.8 | - | - |
| GD | PC-DARTS + c/o [39] | 97.43 | 0.1 | 1 (1080Ti) | 75.8/92.70 | 3.8 | 8 (V100) | https://github.com/yuhuixu1993/PC-DARTS, accessed on 3 July 2020 |

In traditional reinforcement learning, convergence is slow when the agent over-explores, and the agent can converge to a local minimum when it over-exploits [40]. Baker et al. addressed these two problems with an ε-greedy strategy [41] and experience replay [42]. Zhong et al. [31] also introduced BlockQNN, which uses the Q-learning paradigm with an ε-greedy exploration strategy. However, unlike [19], they adopted a cell-based search space, noting that superior hand-crafted networks such as GoogLeNet [43] and ResNet [23] share the same stacked-block structure. In [31], BlockQNN demonstrated advantages in computational cost and strong generalizability through experiments. Inspired by the concept of ‘weight inheritance’ in [44], Pham et al. introduced ENAS [32], which applies a parameter-sharing strategy to child architectures. According to the experimental results of [32], the search took less than 16 h on a single GPU (GTX 1080Ti) on CIFAR-10. This corresponds to a reduction in GPU time of more than 1000× compared to [18], a considerable gain in computational efficiency.
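The combination of Q-learning, ε-greedy exploration, and experience replay can be sketched on a toy layer-selection problem. This is a simplified illustration under stated assumptions, not the MetaQNN or BlockQNN implementation: the action set, the depth limit, the decay schedule, and `toy_reward` (a stand-in for the validation accuracy of a trained child network) are all hypothetical.

```python
import random
from collections import deque
from typing import Deque, Dict, List, Tuple

ACTIONS = ["conv3x3", "conv5x5", "pool", "terminate"]  # hypothetical layer choices
MAX_DEPTH = 4
QTable = Dict[Tuple[int, str], float]
Path = List[Tuple[int, str]]


def toy_reward(arch: List[str]) -> float:
    """Stand-in (assumption) for the validation accuracy of the trained child network."""
    return arch.count("conv3x3") / MAX_DEPTH


def choose(q: QTable, depth: int, epsilon: float, rng: random.Random) -> str:
    """Epsilon-greedy: explore with probability epsilon, otherwise exploit the Q-table."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q.get((depth, a), 0.0))


def sample_architecture(q: QTable, epsilon: float, rng: random.Random) -> Path:
    path: Path = []
    for depth in range(MAX_DEPTH):
        action = choose(q, depth, epsilon, rng)
        path.append((depth, action))
        if action == "terminate":
            break
    return path


def q_update(q: QTable, path: Path, reward: float, lr: float = 0.1) -> None:
    """Q-learning backup along one trajectory; only the last step receives the reward."""
    for i in reversed(range(len(path))):
        depth, action = path[i]
        if i == len(path) - 1:
            target = reward
        else:
            target = max(q.get((path[i + 1][0], a), 0.0) for a in ACTIONS)
        old = q.get((depth, action), 0.0)
        q[(depth, action)] = old + lr * (target - old)


def search(episodes: int = 300, seed: int = 0) -> List[str]:
    rng = random.Random(seed)
    q: QTable = {}
    replay: Deque[Tuple[Path, float]] = deque(maxlen=50)
    for episode in range(episodes):
        epsilon = max(0.1, 1.0 - episode / episodes)  # shift from exploring to exploiting
        path = sample_architecture(q, epsilon, rng)
        replay.append((path, toy_reward([a for _, a in path])))
        # Experience replay: re-use stored trajectories for additional updates.
        for old_path, old_reward in rng.sample(list(replay), min(8, len(replay))):
            q_update(q, old_path, old_reward)
    return [a for _, a in sample_architecture(q, 0.0, rng)]


found = search()
print(found)
```

The decaying `epsilon` addresses slow convergence from over-exploration, while replaying past trajectories extracts more updates from each expensive architecture evaluation.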

2.2.3. Gradient Descent (GD)

Since the EA and RL search strategies operate on a discrete neural network space, they require a lot of search time. To solve this problem, studies have been published on applying gradient-descent optimization by transforming the discrete neural network space into a continuous and differentiable space. In 2018, Shin et al. [45] published DAS, which formulated NAS as a fully differentiable problem, building on studies [46][47][48] that had partially utilized the continuous-domain concept. However, DAS is limited to optimizing only specific parameters of an architecture. DARTS [33], published by Liu et al. in 2019, focused on learning the complex operation graph topologies inside the cells that constitute the neural network architecture. DARTS is not limited to a specific neural network architecture and, because it can search both CNNs and RNNs, has been the basis for many studies [34][35][36][37][38].

Liang et al. [34] observed performance collapse as the number of epochs of DARTS increased, and found that this was a result of overfitting due to the number of skip-connections, which increased with the epochs. They solved the performance collapse problem with DARTS+ [34], which applies the ‘early stopping’ technique, based on research results showing that important connections and significant changes are determined in the early phase of training [49][50][51]. Chu et al. proposed Fair-DARTS [35], which makes the architectural weight of each operation independent of the others in order to solve the performance collapse caused by skip-connections and to eliminate their unfair advantage. Chen et al. [36] proposed progressive DARTS (P-DARTS) to solve the depth gap of DARTS. The depth gap is a problem of cell-based search methods: performance collapses because networks of different depths are used in the search phase and the evaluation phase. In DARTS, the numbers of cells in the search phase and the evaluation phase are 8 and 20, respectively; P-DARTS reduces this difference by gradually increasing the number of cells during the search phase. PC-DARTS [39], proposed by Xu et al., improved memory efficiency through random sampling in the operation search and used a larger batch size for higher stability.
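The idea of turning a discrete operator choice into a continuous one can be sketched with a softmax-weighted mixture of candidate operations on one edge. This is a minimal illustration in the spirit of DARTS [33], not its implementation: the scalar toy operators in `OPS` and the parameter values are assumptions, and the gradient-based update of the architecture parameters is omitted.

```python
import math
from typing import Callable, Dict, List

# Hypothetical scalar stand-ins for candidate operations on one edge of a cell.
OPS: Dict[str, Callable[[float], float]] = {
    "skip": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x: float, alphas: Dict[str, float]) -> float:
    """Continuous relaxation: output is the softmax-weighted sum of all candidates."""
    names = list(alphas)
    weights = softmax([alphas[name] for name in names])
    return sum(w * OPS[name](x) for name, w in zip(names, weights))


def discretize(alphas: Dict[str, float]) -> str:
    """After the search, keep only the operation with the largest architecture parameter."""
    return max(alphas, key=lambda name: alphas[name])


uniform = {"skip": 0.0, "double": 0.0, "zero": 0.0}
print(mixed_op(3.0, uniform))  # equal weights: (3 + 6 + 0) / 3

learned = {"skip": 0.1, "double": 1.2, "zero": -0.3}  # illustrative values only
print(discretize(learned))  # double
```

Because `mixed_op` is differentiable in the `alphas`, they can be optimized by gradient descent alongside the network weights; the same mechanism also shows how a growing weight on `skip` could come to dominate an edge.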

2.3. Evaluation Strategies

In addition to the search space and the search strategy, evaluation strategies are very important for improving NAS performance. An efficient performance evaluation strategy can speed up the entire neural architecture search process while reducing the cost of computing resources. Early stopping [34][52][53][54], the simplest and most popular method, has been widely used, but the architectures it finds tend to be simple and shallow. Such networks are generally fast but often perform poorly. Parameter sharing is another technique used to accelerate NAS. For example, ENAS [32] constructs a large computational graph in which each subgraph represents a neural architecture, so that all architectures share parameters. This improves the efficiency of NAS by avoiding the training of sub-models from scratch to convergence. Network-morphism-based algorithms [55][56] can also inherit the parameters of previous architectures, and single-path NAS [57] uses a single-path over-parameterized ConvNet to encode all architectural decisions with a shared convolutional kernel. Another strategy is to use a proxy dataset. This method [58] is used by most existing NAS algorithms and saves computational cost by training and evaluating intermediate neural architectures on a small proxy dataset with limited training epochs. However, such a coarse evaluation makes it difficult to find an architecture with good performance.

Recently, various evaluation strategies have been studied to overcome these disadvantages. ReNAS [59] accelerates the search process by predicting the performance ranking of the searched architectures instead of calculating their accuracy. CTNAS [60], proposed in 2021, recasts the evaluation task as a contrastive learning problem: it estimates the probability that a candidate architecture performs better than a baseline. Most recently, in 2022, ERNAS [61] proposed an end-to-end neural architecture search approach together with a gradient-descent search strategy that looks for better-performing candidates in a latent space in order to find better neural structures efficiently.
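The trade-off of early stopping as an evaluation shortcut can be sketched with a patience rule applied to a validation-accuracy curve. The curve values and the patience setting are made-up illustrative numbers, and `evaluate_with_early_stopping` is a hypothetical helper, not a routine from the cited works.

```python
from typing import List, Tuple


def evaluate_with_early_stopping(curve: List[float], patience: int) -> Tuple[float, int]:
    """Return (best accuracy seen, epochs actually run) under a patience rule."""
    best = float("-inf")
    stale = 0
    epochs_run = 0
    for accuracy in curve:
        epochs_run += 1
        if accuracy > best:
            best = accuracy
            stale = 0
        else:
            stale += 1
            if stale >= patience:  # no improvement for `patience` epochs in a row
                break
    return best, epochs_run


# Illustrative validation accuracies per epoch for one candidate architecture.
curve = [0.50, 0.60, 0.65, 0.64, 0.65, 0.63, 0.70]
print(evaluate_with_early_stopping(curve, patience=3))  # (0.65, 6)
```

Training stops after six of the seven epochs and the candidate is scored at 0.65, although it would have reached 0.70; this is the kind of coarse estimate that can penalize architectures that learn slowly.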

References

- McCulloch, W.S.; Pitts, W. A Logical Calculus of the Ideas Immanent in Nervous Activity. Bull. Math. Biophys. 1943, 5, 115–133.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- ImageNet Large Scale Visual Recognition Competition 2012 (ILSVRC2012). Available online: https://image-net.org/challenges/LSVRC/2012/results.html#t1 (accessed on 21 December 2022).

- Sondhi, P. Feature Construction Methods: A Survey. Sifaka Cs Uiuc Edu. 2009, 69, 70–71.

- Hsu, C.-W.; Chang, C.-C.; Lin, C.-J. A Practical Guide to Support Vector Classification; National Taiwan University: Taipei, China, 2003.

- Hesterman, J.Y.; Caucci, L.; Kupinski, M.A.; Barrett, H.H.; Furenlid, L.R. Maximum-Likelihood Estimation with a Contracting-Grid Search Algorithm. IEEE Trans. Nucl. Sci. 2010, 57, 1077–1084.

- Feurer, M.; Hutter, F. Hyperparameter Optimization. In Automated Machine Learning; Springer: Cham, Switzerland, 2019; pp. 3–33.

- Yu, T.; Zhu, H. Hyper-Parameter Optimization: A Review of Algorithms and Applications. arXiv 2020, arXiv:2003.05689.

- He, X.; Zhao, K.; Chu, X. AutoML: A Survey of the State-of-the-Art. Knowl.-Based Syst. 2021, 212, 106622.

- Bay, H.; Tuytelaars, T.; Gool, L.V. Surf: Speeded up Robust Features. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2006; pp. 404–417.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Bishop, G.; Welch, G. An Introduction to the Kalman Filter. Proc SIGGRAPH Course 2001, 8, 41.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. arXiv 2016, arXiv:1611.01578.

- Baker, B.; Gupta, O.; Naik, N.; Raskar, R. Designing Neural Network Architectures Using Reinforcement Learning. arXiv 2016, arXiv:1611.02167.

- Liu, H.; Simonyan, K.; Vinyals, O.; Fernando, C.; Kavukcuoglu, K. Hierarchical Representations for Efficient Architecture Search. arXiv 2017, arXiv:1711.00436.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

- Brock, A.; Lim, T.; Ritchie, J.M.; Weston, N. Smash: One-Shot Model Architecture Search through Hypernetworks. arXiv 2017, arXiv:1708.05344.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Rikhtegar, A.; Pooyan, M.; Manzuri-Shalmani, M.T. Genetic Algorithm-Optimised Structure of Convolutional Neural Network for Face Recognition Applications. IET Comput. Vis. 2016, 10, 559–566.

- Xie, L.; Yuille, A. Genetic Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1379–1388.

- Dean, J.; Corrado, G.; Monga, R.; Chen, K.; Devin, M.; Mao, M.; Ranzato, M.; Senior, A.; Tucker, P.; Yang, K. Large Scale Distributed Deep Networks. In Proceedings of the NIPS’12: Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012.

- Zhong, Z.; Yan, J.; Wu, W.; Shao, J.; Liu, C.-L. Practical Block-Wise Neural Network Architecture Generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2423–2432.

- Pham, H.; Guan, M.; Zoph, B.; Le, Q.; Dean, J. Efficient Neural Architecture Search via Parameters Sharing. In Proceedings of the International conference on machine learning, Stockholm, Sweden, 10–15 July 2018; pp. 4095–4104.

- Liu, H.; Simonyan, K.; Yang, Y. Darts: Differentiable Architecture Search. arXiv 2018, arXiv:1806.09055.

- Liang, H.; Zhang, S.; Sun, J.; He, X.; Huang, W.; Zhuang, K.; Li, Z. Darts+: Improved Differentiable Architecture Search with Early Stopping. arXiv 2019, arXiv:1909.06035.

- Chu, X.; Zhou, T.; Zhang, B.; Li, J. Fair Darts: Eliminating Unfair Advantages in Differentiable Architecture Search. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 465–480.

- Chen, X.; Xie, L.; Wu, J.; Tian, Q. Progressive Differentiable Architecture Search: Bridging the Depth Gap between Search and Evaluation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1294–1303.

- Zela, A.; Elsken, T.; Saikia, T.; Marrakchi, Y.; Brox, T.; Hutter, F. Understanding and Robustifying Differentiable Architecture Search. arXiv 2019, arXiv:1909.09656.

- Hundt, A.; Jain, V.; Hager, G.D. Sharpdarts: Faster and More Accurate Differentiable Architecture Search. arXiv 2019, arXiv:1903.09900.

- Xu, Y.; Xie, L.; Zhang, X.; Chen, X.; Qi, G.-J.; Tian, Q.; Xiong, H. Pc-Darts: Partial Channel Connections for Memory-Efficient Architecture Search. arXiv 2019, arXiv:1907.05737.

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement Learning: A Survey. J. Artif. Intell. Res. 1996, 4, 237–285.

- Vermorel, J.; Mohri, M. Multi-Armed Bandit Algorithms and Empirical Evaluation. In European Conference on Machine Learning; Springer: Cham, Switzerland, 2005; pp. 437–448.

- Lin, L.-J. Reinforcement Learning for Robots Using Neural Networks; Carnegie Mellon University: Pittsburgh, PA, USA, 1992.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-Scale Evolution of Image Classifiers. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2902–2911.

- Shin, R.; Packer, C.; Song, D. Differentiable Neural Network Architecture Search. 2018. Available online: https://openreview.net/forum?id=BJ-MRKkwG (accessed on 21 December 2022).

- Saxena, S.; Verbeek, J. Convolutional Neural Fabrics. arXiv 2016, arXiv:1606.02492.

- Ahmed, K.; Torresani, L. Connectivity Learning in Multi-Branch Networks. arXiv 2017, arXiv:1709.09582.

- Veniat, T.; Denoyer, L. Learning Time/Memory-Efficient Deep Architectures with Budgeted Super Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3492–3500.

- Achille, A.; Rovere, M.; Soatto, S. Critical Learning Periods in Deep Networks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Frankle, J.; Schwab, D.J.; Morcos, A.S. The Early Phase of Neural Network Training. arXiv 2020, arXiv:2002.10365.

- Santra, S.; Hsieh, J.-W.; Lin, C.-F. Gradient Descent Effects on Differential Neural Architecture Search: A Survey. IEEE Access 2021, 9, 89602–89618.

- Klein, A.; Falkner, S.; Springenberg, J.T.; Hutter, F. Learning Curve Prediction with Bayesian Neural Networks. In Proceedings of the ICLP 2017, Melbourne, Australia, 28 August–3 September 2017.

- Deng, B.; Yan, J.; Lin, D. Peephole: Predicting Network Performance before Training. arXiv 2017, arXiv:1712.03351.

- Domhan, T.; Springenberg, J.T.; Hutter, F. Speeding up Automatic Hyperparameter Optimization of Deep Neural Networks by Extrapolation of Learning Curves. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

- Chen, T.; Goodfellow, I.; Shlens, J. Net2net: Accelerating Learning via Knowledge Transfer. arXiv 2015, arXiv:1511.05641.

- Wei, T.; Wang, C.; Rui, Y.; Chen, C.W. Network Morphism. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 564–572.

- Stamoulis, D.; Ding, R.; Wang, D.; Lymberopoulos, D.; Priyantha, B.; Liu, J.; Marculescu, D. Single-Path Nas: Designing Hardware-Efficient Convnets in Less than 4 Hours. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer: Cham, Switzerland, 2020; pp. 481–497.

- Moser, B.; Raue, F.; Hees, J.; Dengel, A. Less Is More: Proxy Datasets in NAS Approaches. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1953–1961.

- Xu, Y.; Wang, Y.; Han, K.; Tang, Y.; Jui, S.; Xu, C.; Xu, C. Renas: Relativistic Evaluation of Neural Architecture Search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 4411–4420.

- Chen, Y.; Guo, Y.; Chen, Q.; Li, M.; Zeng, W.; Wang, Y.; Tan, M. Contrastive Neural Architecture Search with Neural Architecture Comparators. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9502–9511.

- Zhang, Y.; Li, B.; Tan, Y.; Jian, S. Evaluation Ranking Is More Important for NAS. In Proceedings of the 2022 IEEE International Joint Conference on Neural Networks (IJCNN), Padova, Italy, 18–23 July 2022; pp. 1–8.
