Pratap, A., Sardana, N., Utomo, S., Ayeelyan, J., Karthikeyan, P., & Hsiung, P. (2022, December 19). Deep Learning towards Digital Additive Manufacturing. In Encyclopedia. https://encyclopedia.pub/entry/38938


Deep learning is a type of machine learning. In the conventional machine learning (ML) process, the first step is the manual extraction of relevant image characteristics (features). These features are then used to classify the image. Researchers focused primarily on digital additive manufacturing, one of the most significant emerging topics in Industry 4.0.

Keywords: deep learning; additive manufacturing; image segmentation

1. Introduction

Rapid prototyping (RP) is a collection of manufacturing techniques that can produce a finished product directly from a 3D model in a layer-by-layer fashion. Because of its numerous advantages, this technology has become a crucial component of the fourth industrial revolution, and it is transforming the manufacturing industry globally. Despite this, the industry’s adoption of the technology is hampered by layer-related flaws and poor process reproducibility. Flaws such as lack of fusion, porosity, and undesirable dimensional deviation occur frequently and can significantly affect the function and mechanical properties of printed objects [1][2]. The resulting variability in product quality is one of the process’s key drawbacks and a significant obstacle to its adoption on the production line. To overcome this hurdle, inspecting and monitoring the additive manufacturing (AM) process are essential. The importance of in-depth material and component analysis is growing, which is leading this technology toward the integration of data science and deep learning. The data obtained in this way are invaluable for gaining a fresh understanding of AM processes and for decision-making [3]. Unlike traditional manufacturing procedures, AM creates goods from digital 3D models layer-by-layer, line-by-line, or piece-by-piece [4][5]. AM fabrication methods, including fused filament fabrication (FFF), stereolithography (SLA), selective laser sintering (SLS), selective laser melting (SLM), and laser-engineered net shaping (LENS), have been developed to print functional objects from diverse types and forms of materials. These techniques are discussed in a later section. The anisotropic character of the materials, porosity caused by inadequate material fusion, and warping due to residual stress brought on by the rapid cooling inherent in additive manufacturing are only a few of the particular difficulties that must be solved.

Deep learning (DL) has recently gained popularity in pattern recognition and computer vision because of its strength in feature extraction and image interpretation. Convolutional neural networks (CNNs) are among the most widely employed techniques in deep learning, and they have been used extensively for object detection, action recognition, and image classification [6]. CNNs are widely used for computer vision tasks [7]. DL-integrated design has also been used as a framework for AM; in other words, a deep learning model simulates the relationship between the input and output data for a given part [8].

2. Deep Learning Models in Additive Manufacturing

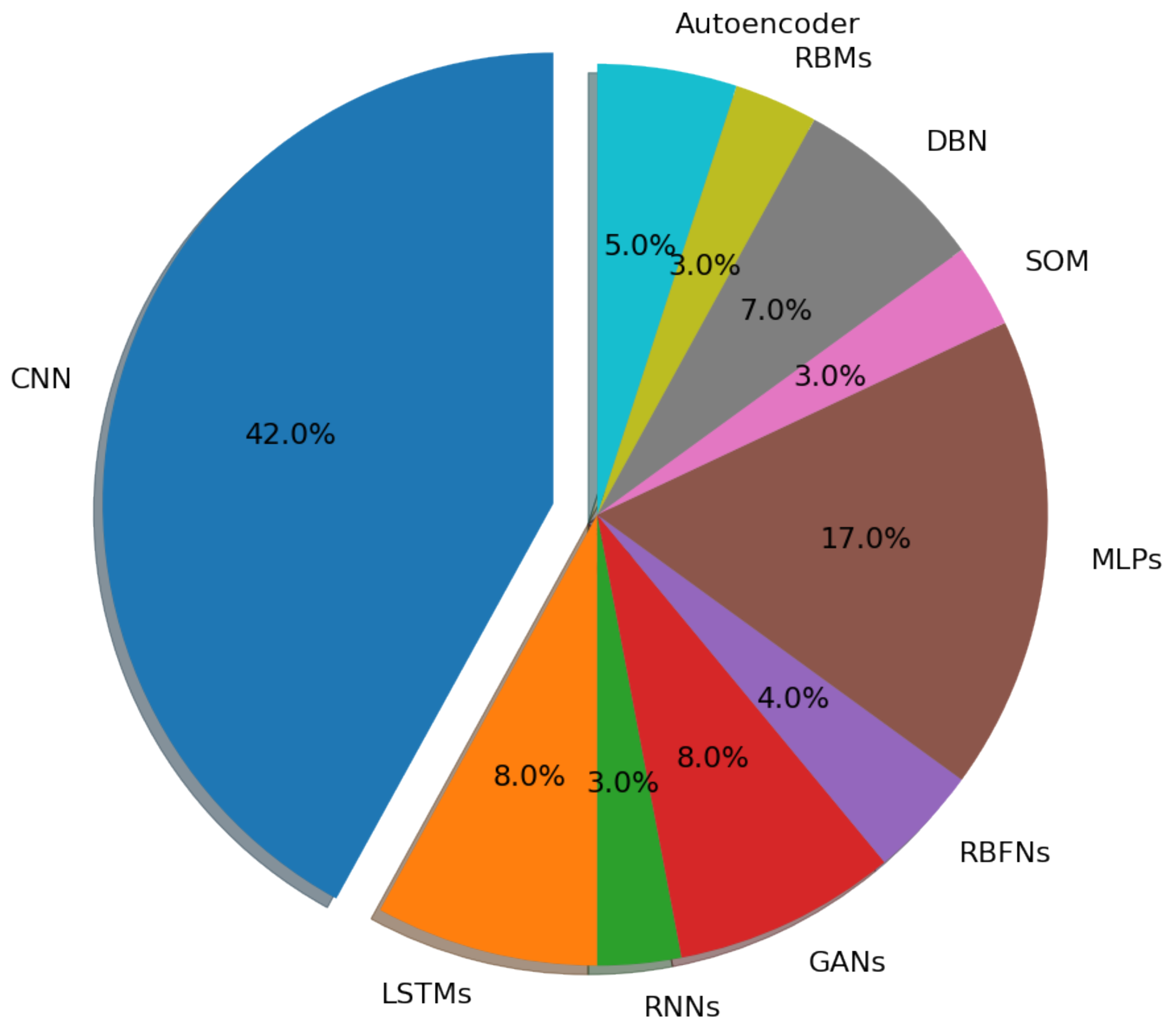

In the additive manufacturing sector, part quality inspection is essential and can be used to improve products. However, the manual recognition used in conventional inspection procedures may be biased and inefficient. As a result, deep learning has emerged as a reliable technique for quality inspection of AM-built parts. The sector-wise representation of the deep learning models applied to AM to date is presented in Figure 1. The DL models associated with AM are discussed in detail in this section.

Figure 1. Sector-wise representation of various deep learning models associated with AM.

2.1. Convolutional Neural Networks (CNNs)

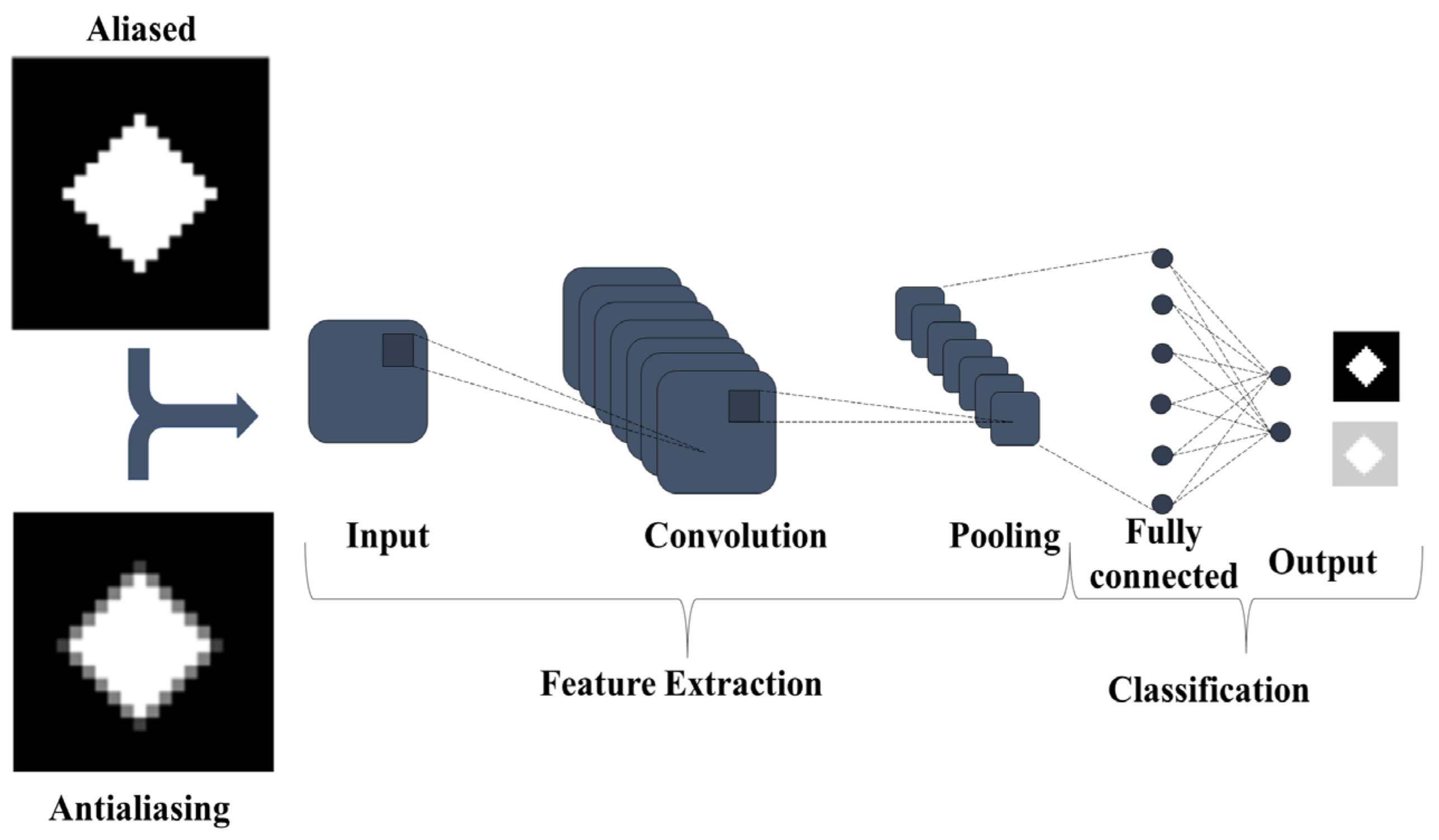

Convolutional neural networks are among the deep neural network types that have received the most attention. Because of the rapid growth in the amount of annotated data and considerable advances in the capacity of graphics processing units, convolutional neural network research has developed quickly and achieved state-of-the-art results in several applications [9]. CNNs are made up of neurons that optimize themselves through learning, similar to traditional ANNs [10]. CNNs are frequently used in academic and commercial projects because of benefits such as downsampling, weight sharing, and local connections. A CNN model typically requires four components to be built. Convolution is the essential step in feature extraction, and feature maps are the results of convolution. Boundary information is lost when a convolution kernel of a given size is applied directly to the input [11]. Padding is therefore used to enlarge the input with zero values, which indirectly adjusts the output size. Furthermore, the stride controls the density of the convolution: the density diminishes as the stride increases. Feature maps generated by convolution contain many features, which might cause overfitting. Pooling (also known as aggregation) reduces this redundancy [12]. The basic architecture of a CNN is presented in Figure 2. Table 1 provides a summary of the literature on CNNs. The deep CNN developed by Krizhevsky, Sutskever, and Hinton was the winning entry in the ImageNet Challenge 2012 (ILSVRC-2012). Since then, DL has been used successfully for several use cases, including text processing, computer vision, sentiment analysis, and recommendation systems. In addition, big businesses such as Google, Facebook, Amazon, and IBM have established their own DL research facilities [13]. AM has also adopted DL extensively, as shown in Figure 1.

Figure 2. Basic architecture of CNN.
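The convolution, padding, stride, and pooling steps described above can be illustrated with a minimal pure-Python sketch. It is not taken from any of the cited works: the function names, the square kernel, and the non-overlapping max pooling are assumptions chosen for illustration. As in most deep learning libraries, the "convolution" here is computed without flipping the kernel.

```python
def conv_output_size(n, kernel, padding=0, stride=1):
    """Size of the feature map along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def conv2d(image, kernel, padding=0, stride=1):
    """Slide a square `kernel` over a zero-padded `image` (lists of lists)."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    # Zero padding keeps border pixels inside at least one receptive field.
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for r in range(h):
        for c in range(w):
            padded[r + padding][c + padding] = image[r][c]
    out_h = conv_output_size(h, k, padding, stride)
    out_w = conv_output_size(w, k, padding, stride)
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride  # a larger stride samples less densely
            row.append(sum(padded[top + a][left + b] * kernel[a][b]
                           for a in range(k) for b in range(k)))
        out.append(row)
    return out


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; incomplete windows at the edges are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


image = [[1] * 4 for _ in range(4)]
kernel = [[1] * 3 for _ in range(3)]
no_padding = conv2d(image, kernel)               # 2 x 2 map: the border is lost
same_size = conv2d(image, kernel, padding=1)     # 4 x 4 map: size preserved
strided = conv2d(image, kernel, padding=1, stride=2)
pooled = max_pool2d(same_size)
```

Without padding the 4 x 4 input shrinks to 2 x 2, with one pixel of zero padding the size is preserved, and a stride of 2 or a 2 x 2 pooling window halves the resolution again.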

Table 1. Summary of various literature on the related CNN.

| Type of CNN | AM Process | Activation | Loss | Optimizer | Accuracy (%) | References |
|---|---|---|---|---|---|---|
| CNN | | Leaky ReLU and SoftMax | Cross entropy | Adam | 99.3 | [14] |
| AlexNet | Powder bed fusion | SoftMax and ReLU | - | Momentum-based stochastic gradient descent | 97 | [15] |
| CNN | Directed energy deposition | SoftMax and ReLU | Cross entropy | Adam | 80 | [16] |
| CNN | Selective laser melting | SoftMax and ReLU | Cross entropy | Gradient descent | 99.4 | [17] |
| CNN | Metal AM | SoftMax and ReLU | Cross entropy | Adam | 92.1 | [18] |
| ResNet 50 | FDM | | | | 98 | [19] |
| CNN | PBF | SoftMax and ReLU | | | | [20] |
| CNN | Laser PBF | ReLU and sigmoid | Standard mean squared error and cross-entropy | Adam | 93.1 | [21] |
| CNN | PBF (melt pool classification) | Reply | | | 9.84 | [22] |
| CNN | Fused filament fabrication | SoftMax and ReLU | | | 99.5 | [23] |
| CNN | PBF (melt pool, plume and spatter) | SoftMax and ReLU | | Mini-batch gradient descent | 92.7 | [24] |

2.2. Recurrent Neural Networks (RNNs), GRU and LSTM

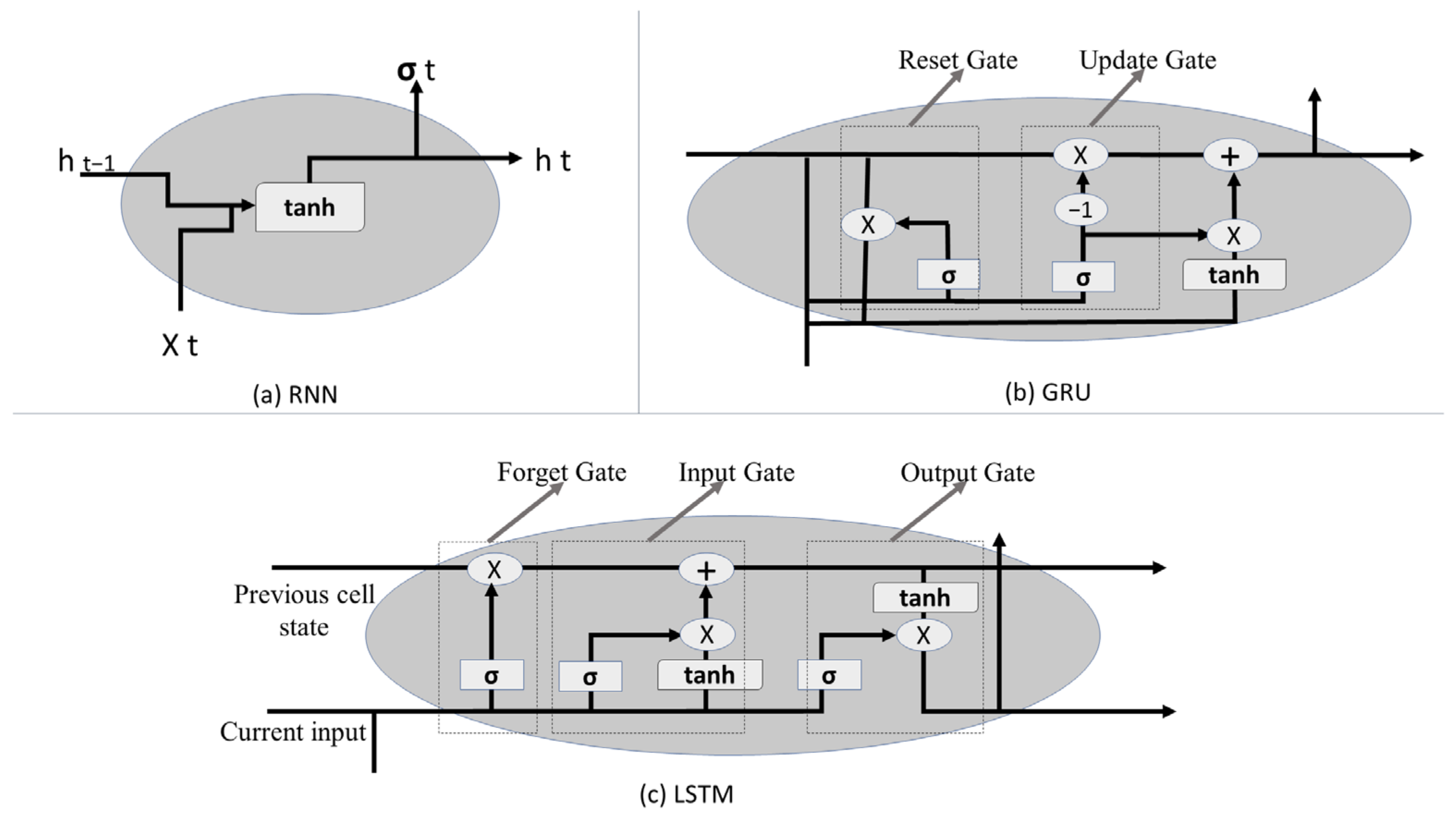

Recurrent neural networks (RNNs) are built to handle sequential or time-series data, which can take the form of text, audio, video, and so on. The architecture of the RNN unit reflects this: at each step it combines the hidden state carried over from the previous step with the current input. Tanh is the activation function here, although alternative activation functions can be used in its place. RNNs have short-term memory issues caused by the vanishing gradient problem, so an RNN does not remember long input sequences [25]. To address this issue, two customized variants of the RNN were developed: (1) the gated recurrent unit (GRU) and (2) long short-term memory (LSTM). All three networks are shown in Figure 3.

Figure 3. (a) Recurrent neural networks (RNNs),(b) GRU and (c) LSTM.
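The recurrence and the vanishing gradient issue described above can be made concrete with a small sketch. It assumes a scalar RNN with hypothetical weights `w_x`, `w_h`, and `b`, which is far simpler than the networks in the cited works, and it only illustrates why the influence of early inputs fades.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    states, h = [], h0
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


def gradient_wrt_initial_state(states, w_h):
    """d h_T / d h_0 as the product of the per-step derivatives w_h * (1 - h_t**2)."""
    grad = 1.0
    for h in states:
        grad *= w_h * (1.0 - h * h)
    return grad


inputs = [0.1] * 20
states = rnn_forward(inputs, w_x=1.0, w_h=0.5, b=0.0)
grad = gradient_wrt_initial_state(states, w_h=0.5)
```

Because every factor in the product has magnitude at most `|w_h|`, the gradient after 20 steps with `w_h = 0.5` cannot exceed `0.5 ** 20`, which is below one millionth. This is the short-term memory effect that gated units are designed to reduce.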

LSTMs and GRUs use memory cells to store the activation values of preceding elements (for example, words) in long sequences [26]. This is where the concept of gates comes in. Gates control the flow of information through the network: they learn which inputs in a sequence are essential and retain that information in the memory unit, so that it can be carried across long sequences and used to generate predictions. The workflow of a GRU is similar to that of an RNN; the distinction lies in the operations performed within the GRU unit. Table 2 summarizes the literature on sequence models.
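As a rough illustration of how gates control what is kept in memory, the following sketch implements one step of a scalar GRU. The parameter names in the dictionary `p` are hypothetical, and the update convention (an update gate near 0 keeps the old state) is one of two equivalent conventions found in the literature.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h_prev, p):
    """One scalar GRU step; `p` maps hypothetical parameter names to values."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate decides how much of the past to use.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # Convention assumed here: z near 0 keeps the old state, z near 1 overwrites it.
    return (1.0 - z) * h_prev + z * h_cand


base = {"w_z": 0.0, "u_z": 0.0, "w_r": 0.0, "u_r": 0.0, "b_r": 0.0,
        "w_h": 1.0, "u_h": 0.0, "b_h": 0.0}
keep = dict(base, b_z=-20.0)       # update gate almost closed
overwrite = dict(base, b_z=20.0)   # update gate almost fully open

kept_state = gru_step(1.0, 0.8, keep)
new_state = gru_step(1.0, 0.8, overwrite)
```

With the update gate almost closed the previous state 0.8 is carried forward nearly unchanged, and with it open the state is replaced by the candidate `tanh(1.0)`. In a trained network the gate values are learned from data rather than fixed by a bias as they are here.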

Table 2. Summary of various literature on sequence models.

| Model | AM Procedure | Problem | Outcome | References |
|---|---|---|---|---|
| RNN + DNN | Laser-based | Laser scanning patterns and thermal history distributions are correlated, and finding the relationship between them is complex. | The RNN-DNN model can forecast thermal fields for any geometry using various scanning strategies. The agreement between the numerical simulation results and the RNN-DNN forecasts was greater than 95%. | [27] |
| RGNN, GNN | DED | Model generalizability across a wide range of geometries has remained a barrier. | The deep learning architecture provides a feasible substitute for costly computational mechanics or experimental techniques by successfully forecasting long thermal histories for geometries unseen during the training phase. | [28] |
| Conv-RNN | Inkjet AM | Height data from the input–output relationship. | The model was empirically validated and shown to outperform a trained MLP with significantly less data. | [29] |
| RNN, GRU | DED | The high-dimensional thermal history in DED processes is forecast under changes in geometry, build dimensions, toolpath strategy, laser power, and scan speed. | The model can predict the temperature history of any given point of the DED build based on a test-set database and with minimal training. | [30] |
| LSTM | DED | Analytical and numerical methods have been developed to determine the temperature of the molten pool; however, because the real-time melt pool temperature distribution is not taken into account, the accuracy of these methods is rather low. | A machine learning-based, data-driven predictive algorithm was developed to accurately estimate the melt pool temperature during DED. | [31] |
| CNN, LSTM | DED | Layer-by-layer forecasting of melt pool temperature. | By combining CNN and LSTM networks, spatial and temporal information can be extracted from melt pool temperature data. | [32] |
| CNN, LSTM | SLS | Several factors determine the energy consumption of AM systems. These factors include features with multiple dimensions and structures, making them difficult to analyze. | A data fusion strategy is offered for estimating energy consumption. | [33] |
| PyroNet, IRNet, LSTM | Laser-based additive manufacturing (LBAM) | Aims to advance understanding of the fundamental connection between the LBAM process and porosity. | A DL-based data fusion method uses the measured thermal history of the melt pool together with two newly built deep neural networks to estimate porosity in LBAM parts. | [34] |
| LSTM | FDM | Investigates how equipment operating conditions affect the quality of the manufactured products, using standard data features from the printer’s sensor signals (vibration, current, etc.). | An intelligent monitoring system was designed that covers working conditions and product quality. | [35] |
| LSTM | PBF | Avoiding an uneven and harsh temperature distribution across the printing plate during the printing process. | Anticipates temperature gradient distributions during the printing process. | [36] |

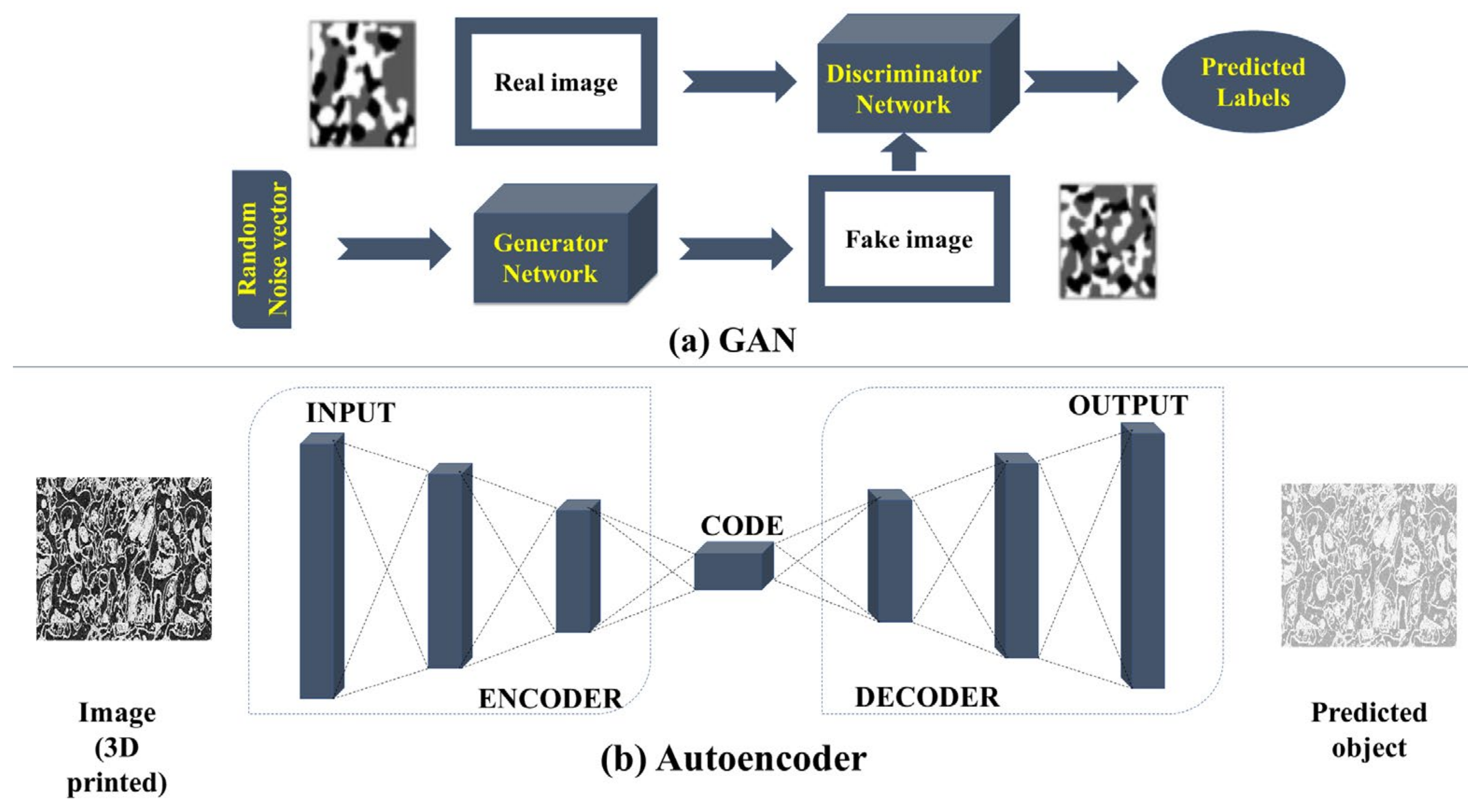

2.3. Generative Adversarial Networks (GANs) and Autoencoder

The GAN has recently gained popularity in the fields of computer science and manufacturing and ranks among the most widely used deep learning approaches. GANs are used in computer vision applications in many areas, such as medicine and industrial automation. A GAN is made up of two networks: the generative network and the discriminative network [37]. The generator produces fake images, and the discriminator distinguishes the fake images from the original images. First, the generator creates fake images from a batch of random vectors drawn from a Gaussian distribution. Because the generator has not yet been trained, the generated images do not mirror the real input distribution. The discriminator is then fed batches of real images from the input distribution together with generated fake images so that it can learn to distinguish between the two types of images. The generator is in turn updated so that its images become harder for the discriminator to reject. In one AM application, an image-enhancement generative adversarial network (IEGAN) was created and trained with a new objective function. Thermal images obtained from an AM process were used for image segmentation to confirm the superiority and viability of the proposed IEGAN. Experimental results show that the IEGAN works better than the original GAN in raising the contrast ratio of thermal images [38]. Figure 4 gives an overview of the GAN and the autoencoder.

Figure 4. (a) Generative adversarial networks (GANs) and (b) Autoencoder.
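The generator and discriminator roles described for the GAN can be sketched with scalar "images". This is a minimal illustration of the two loss terms under stated assumptions, not the IEGAN objective: the affine generator, the logistic discriminator, and all parameter values are hypothetical, and the generator loss uses the common non-saturating form.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def generator(z, scale, shift):
    """Map a Gaussian noise value z to a fake sample (a 1-D stand-in for an image)."""
    return scale * z + shift


def discriminator(x, weight, bias):
    """Estimated probability that x is a real sample."""
    return sigmoid(weight * x + bias)


def gan_losses(real_batch, noise_batch, g_params, d_params):
    fake_batch = [generator(z, *g_params) for z in noise_batch]
    d_real = [discriminator(x, *d_params) for x in real_batch]
    d_fake = [discriminator(x, *d_params) for x in fake_batch]
    # Discriminator loss: binary cross-entropy with real = 1 and fake = 0.
    d_loss = -(sum(math.log(p) for p in d_real) / len(d_real)
               + sum(math.log(1.0 - p) for p in d_fake) / len(d_fake))
    # Generator loss: low when the discriminator accepts the fakes as real.
    g_loss = -sum(math.log(p) for p in d_fake) / len(d_fake)
    return d_loss, g_loss


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(8)]
real = [rng.gauss(4.0, 0.5) for _ in range(8)]

# An untrained discriminator (all-zero parameters) outputs 0.5 for every sample.
d_loss, g_loss = gan_losses(real, noise, g_params=(1.0, 0.0), d_params=(0.0, 0.0))
```

With the all-zero discriminator the losses are `2 * ln 2` and `ln 2`. Training would alternate gradient steps that lower `d_loss` with respect to the discriminator parameters and `g_loss` with respect to the generator parameters.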

An autoencoder is used to learn data encodings in an unsupervised manner. The network is trained to identify the key elements of the input image so that it learns a lower-dimensional representation (encoding) of higher-dimensional data, generally for dimensionality reduction. Although it is the narrowest layer, the bottleneck is the most crucial component of the network, because it forces the input to be compressed. Autoencoders are widely applied to noise reduction in images. The autoencoder takes X as input and tries to generate X as output [39]. Table 3 summarizes the various literature on GAN and autoencoders.
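The encode, bottleneck, and decode structure can be shown with a deliberately tiny linear example. The weights below are set by hand purely for illustration; a real autoencoder learns them by minimizing the reconstruction error, and the names `w_enc` and `w_dec` are hypothetical.

```python
def encode(x, w_enc):
    """Compress an input vector to a single bottleneck value."""
    return sum(w * xi for w, xi in zip(w_enc, x))


def decode(code, w_dec):
    """Expand the bottleneck value back to the input dimension."""
    return [w * code for w in w_dec]


def reconstruction_error(x, x_hat):
    """Mean squared error between input and reconstruction (the usual training loss)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


# Hand-set weights for 2-D data that lies along the line x1 = x2 (an assumption).
w_enc = [0.5, 0.5]
w_dec = [1.0, 1.0]

clean = [2.0, 2.0]
noisy = [2.25, 1.75]          # the clean point plus a perturbation off the line

clean_hat = decode(encode(clean, w_enc), w_dec)
noisy_hat = decode(encode(noisy, w_enc), w_dec)
```

Because the single bottleneck value can only represent positions along the line, the perturbation in `noisy` cannot pass through it and the reconstruction equals the clean point. This is the intuition behind using autoencoders for noise reduction.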

Table 3. Summary of various literature on GAN and autoencoders.


2.4. Restricted Boltzmann Machines (RBMs) and Deep Belief Networks (DBNs)

Restricted Boltzmann machines (RBMs), popularized by Geoffrey Hinton and collaborators, have a wide range of applications, including feature engineering, collaborative filtering, computer vision, and topic modeling [40]. As their name suggests, RBMs are a restricted variant of Boltzmann machines. They are simpler to design and more efficient to train than general Boltzmann machines because their neurons must form a bipartite graph, which means there are no connections between nodes within the same group (visible or hidden). In particular, this connection constraint allows RBMs to use training methods that are more efficient than those available to general Boltzmann machines, such as the gradient-based contrastive divergence algorithm.
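A minimal sketch of an RBM trained with one step of contrastive divergence (CD-1) follows. It assumes binary units and uses probabilities rather than samples for the reconstruction, which is one common simplification; the class and method names are hypothetical and not drawn from any cited work.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    def __init__(self, n_visible, n_hidden):
        # Only visible-to-hidden weights exist: the graph is bipartite by construction.
        self.w = [[0.0] * n_hidden for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible
        self.b_h = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_h[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.b_h))]

    def visible_probs(self, h):
        return [sigmoid(self.b_v[i] + sum(h[j] * self.w[i][j] for j in range(len(h))))
                for i in range(len(self.b_v))]

    def cd1_update(self, v0, lr=0.1, rng=random):
        """One CD-1 step: data statistics minus one-step reconstruction statistics."""
        h0 = self.hidden_probs(v0)
        h0_sample = [1.0 if rng.random() < p else 0.0 for p in h0]
        v1 = self.visible_probs(h0_sample)   # reconstruction (probabilities)
        h1 = self.hidden_probs(v1)
        for i in range(len(v0)):
            for j in range(len(h0)):
                self.w[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
        for i in range(len(v0)):
            self.b_v[i] += lr * (v0[i] - v1[i])
        for j in range(len(h0)):
            self.b_h[j] += lr * (h0[j] - h1[j])


rbm = RBM(n_visible=3, n_hidden=2)
initial_hidden = rbm.hidden_probs([1.0, 0.0, 1.0])
rbm.cd1_update([1.0, 0.0, 1.0], lr=0.1, rng=random.Random(0))
```

Because there are no hidden-to-hidden or visible-to-visible weights, all units in one layer can be updated at once given the other layer, which is what makes this kind of update cheap compared with training a general Boltzmann machine.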

A deep belief network (DBN) is a powerful generative model built as a deep architecture of stacked restricted Boltzmann machines (RBMs). Each RBM transforms its input vectors nonlinearly (much as a layer of a standard neural network does) and generates output vectors that serve as inputs to the next RBM in the sequence. This stacking gives DBNs considerable flexibility and makes them easy to extend. As generative models, DBNs can be employed in supervised or unsupervised settings. In numerous applications, DBNs perform feature learning, feature extraction, and classification [41]. Table 4 summarizes various literature on DBNs.
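The way each RBM feeds the next can be sketched as a simple upward pass through a stack of layers. This shows only the forward data flow; the greedy layer-by-layer training that DBNs normally use is omitted, and the weights and function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rbm_layer(v, weights, hidden_bias):
    """Hidden-unit activation probabilities of one RBM given the visible vector v."""
    return [sigmoid(hidden_bias[j] + sum(v[i] * weights[i][j] for i in range(len(v))))
            for j in range(len(hidden_bias))]


def dbn_forward(v, layers):
    """Pass v upward through a stack of (weights, hidden_bias) pairs."""
    activations = [v]
    for weights, hidden_bias in layers:
        v = rbm_layer(v, weights, hidden_bias)  # output of one RBM is input to the next
        activations.append(v)
    return activations


layers = [
    ([[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]),  # 3 visible -> 2 hidden
    ([[1.0], [1.0]], [-1.0]),                            # 2 visible -> 1 hidden
]
activations = dbn_forward([1.0, 0.0, 1.0], layers)
```

The top-level activations can then be handed to a classifier for supervised use, or kept as learned features in an unsupervised setting.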

Table 4. Summary of various literature on DBN.

| Model | AM Procedure | Problem | Solution | References |
|---|---|---|---|---|
| DBN | SLM | Due to the addition of several phases during defect identification using conventional classification algorithms, the system becomes fairly complex. | The DBN technique might achieve a high defect identification rate among five melted states without signal preprocessing. It is implemented without feature extraction and signal preprocessing using a streamlined classification structure. | [42] |
| DBN | SLM | Melted state recognition during the SLM process. | | [43] |

2.5. Other Deep-Learning Networks

In addition to the deep learning models described above, a few more DL algorithms have been used, such as radial basis function networks (RBFNs), self-organizing maps (SOMs), and multilayer perceptrons (MLPs). However, the literature survey turned up far fewer prominent use cases for these models. Much work has been done using MLPs, but they have some limitations compared with CNNs. Object detection and segmentation are also used for defect detection in AM.

Li et al. used the YOLO object detection model for defect detection in additive manufacturing. It enables rapid and precise flaw identification for wire and arc additive manufacturing (WAAM). The performance of the YOLO algorithm was compared with that of traditional object detection algorithms. The results show that it can be used in real-world industrial applications and has potential as a vision-based approach in defect identification systems [44].

Chen et al. discuss how the YOLO algorithm improves the classification of product quality in AM. The outcome shows that 70% of product quality is classified in real-time video. The performance of the YOLO algorithm was compared across versions 2 to 5, and the use of YOLO reduces labor cost [45].

Wang et al. developed CenterNet-based defect detection for AM. CenterNet uses object size, a heatmap, and a density map for defect detection. The proposed model, CenterNet-CL, outperforms traditional object detection models, such as one-stage, two-stage, and anchor-free models, in terms of detection performance. Although this strategy worked effectively, it is only applicable in certain sectors [46].

A semantic segmentation framework for additive manufacturing can improve the visual analysis of production processes and allow the detection of specific manufacturing problems. Semantic segmentation enables the localization of 3D printed components in captured image frames and the application of image processing techniques to their structural elements for further tracking of manufacturing errors. Image style transfer is highly valuable for future study in the area of converting synthetic renderings into realistic photographs of 3D printed objects [47].

Wong et al. reported the challenges of segmentation in AM. The image size is very small and the variation in the appearance of defects is also very small, so defects in AM are very difficult to detect. Three-dimensional CNNs have achieved good performance on volumetric images. A 3D U-Net model was used to detect defects automatically in X-ray computed tomography (XCT) images of AM specimens [48].

Wang et al. presented an unsupervised deep learning algorithm for defect segmentation in AM. The unsupervised model extracts both local and global features of the image to improve defect segmentation. A model with self-attention performs better than one without self-attention for defect detection in AM [49].

Job scheduling is a major problem in AM: the order in which jobs are scheduled must be decided to achieve good AM performance. Traditional approaches need a lot of time because they can only find the best answer for a particular moment and must start again if the state changes. Deep reinforcement learning (DRL) is therefore employed to handle the AM job scheduling problem. The DRL approach uses proximal policy optimization (PPO) to identify the best scheduling strategy and to cope with the curse of dimensionality in the state space [50].
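For readers unfamiliar with PPO, the sketch below shows the clipped surrogate objective used by the widely known PPO-Clip variant. Whether the cited scheduling work uses exactly this variant is an assumption, and the function names and the default `epsilon=0.2` are illustrative choices.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Clipped surrogate for one sample.

    ratio: new-policy probability of the chosen action divided by the old one.
    advantage: how much better the action was than expected.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to move the policy far from the old one.
    return min(ratio * advantage, clipped_ratio * advantage)


def mean_objective(ratios, advantages, epsilon=0.2):
    """Average clipped objective over a batch (to be maximized)."""
    terms = [ppo_clipped_objective(r, a, epsilon) for r, a in zip(ratios, advantages)]
    return sum(terms) / len(terms)


good_action_overshoot = ppo_clipped_objective(1.5, 1.0)    # gain capped at 1 + epsilon
bad_action_undershoot = ppo_clipped_objective(0.5, -1.0)   # penalty kept at 1 - epsilon
unchanged_policy = ppo_clipped_objective(1.0, 2.0)         # ratio 1 leaves the advantage as is
```

In a scheduling setting, the action would be the choice of the next job and the advantage would reflect the resulting change in a scheduling metric, both of which depend on how the problem is modeled.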

Abualkishik et al. discussed how natural language processing can be applied to customer satisfaction and improve the process of the AM. Graph pooling and the learning parameter can be applied as proof of customer satisfaction [51].

References

- Shamsaei, N.; Yadollahi, A.; Bian, L.; Thompson, S.M. An overview of Direct Laser Deposition for additive manufacturing; Part II: Mechanical behavior, process parameter optimization and control. Addit. Manuf. 2015, 8, 12–35.

- Grasso, M.; Colosimo, B.M. Process defects and in situ monitoring methods in metal powder bed fusion: A review. Meas. Sci. Technol. 2017, 28, 044005.

- Razvi, S.S.; Feng, S.; Narayanan, A.; Lee, Y.-T.T.; Witherell, P. A Review of Machine Learning Applications in Additive Manufacturing. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Anaheim, CA, USA, 18–21 August 2019; American Society of Mechanical Engineers: New York, NY, USA, 2019.

- Jiang, J.; Weng, F.; Gao, S.; Stringer, J.; Xu, X.; Guo, P. A support interface method for easy part removal in directed energy deposition. Manuf. Lett. 2019, 20, 30–33.

- Xiong, J.; Zhang, Y.; Pi, Y. Control of deposition height in WAAM using visual inspection of previous and current layers. J. Intell. Manuf. 2021, 32, 2209–2217.

- Schmidhuber, J. Deep Learning in Neural Networks: An Overview. Neural Netw. 2015, 61, 85–117.

- Li, Y.; Yan, H.; Zhang, Y. A Deep Learning Method for Material Performance Recognition in Laser Additive Manufacturing. In Proceedings of the 2019 IEEE 17th International Conference on Industrial Informatics (INDIN), Helsinki, Finland, 22–25 July 2019; pp. 1735–1740.

- Jiang, J.; Xiong, Y.; Zhang, Z.; Rosen, D.W. Machine learning integrated design for additive manufacturing. J. Intell. Manuf. 2022, 33, 1073–1086.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458.

- Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Networks Learn. Syst. 2021, 33, 6999–7019.

- Karoly, A.I.; Galambos, P.; Kuti, J.; Rudas, I.J. Deep Learning in Robotics: Survey on Model Structures and Training Strategies. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 266–279.

- Saluja, A.; Xie, J.; Fayazbakhsh, K. A closed-loop in-process warping detection system for fused filament fabrication using convolutional neural networks. J. Manuf. Process. 2020, 58, 407–415.

- Scime, L.; Beuth, J. A multi-scale convolutional neural network for autonomous anomaly detection and classification in a laser powder bed fusion additive manufacturing process. Addit. Manuf. 2018, 24, 273–286.

- Li, X.; Siahpour, S.; Lee, J.; Wang, Y.; Shi, J. Deep Learning-Based Intelligent Process Monitoring of Directed Energy Deposition in Additive Manufacturing with Thermal Images. Procedia Manuf. 2020, 48, 643–649.

- Caggiano, A.; Zhang, J.; Alfieri, V.; Caiazzo, F.; Gao, R.; Teti, R. Machine learning-based image processing for on-line defect recognition in additive manufacturing. CIRP Ann. 2019, 68, 451–454.

- Cui, W.; Zhang, Y.; Zhang, X.; Li, L.; Liou, F. Metal Additive Manufacturing Parts Inspection Using Convolutional Neural Network. Appl. Sci. 2020, 10, 545.

- Jin, Z.; Zhang, Z.; Gu, G.X. Autonomous in-situ correction of fused deposition modeling printers using computer vision and deep learning. Manuf. Lett. 2019, 22, 11–15.

- Snow, Z.; Diehl, B.; Reutzel, E.W.; Nassar, A. Toward in-situ flaw detection in laser powder bed fusion additive manufacturing through layerwise imagery and machine learning. J. Manuf. Syst. 2021, 59, 12–26.

- Yuan, B.; Guss, G.M.; Wilson, A.C.; Hau-Riege, S.P.; DePond, P.J.; McMains, S.; Matthews, M.J.; Giera, B. Machine-Learning-Based Monitoring of Laser Powder Bed Fusion. Adv. Mater. Technol. 2018, 3, 1800136.

- Yang, Z.; Lu, Y.; Yeung, H.; Krishnamurty, S. Investigation of Deep Learning for Real-Time Melt Pool Classification in Additive Manufacturing. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22–26 August 2019; pp. 640–647.

- Narayanan, B.N.; Beigh, K.; Loughnane, G.; Powar, N.U. Support vector machine and convolutional neural network based approaches for defect detection in fused filament fabrication. In Applications of Machine Learning; SPIE: Bellingham, WA, USA, 2019; p. 36.

- Zhang, Y.; Hong, G.S.; Ye, D.; Zhu, K.; Fuh, J.Y. Extraction and evaluation of melt pool, plume and spatter information for powder-bed fusion AM process monitoring. Mater. Des. 2018, 156, 458–469.

- Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) Network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Fu, R.; Zhang, Z.; Li, L. Using LSTM and GRU neural network methods for traffic flow prediction. In Proceedings of the 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC 2016), Wuhan, China, 11–13 November 2016; pp. 324–328.

- Ren, K.; Chew, Y.; Zhang, Y.; Fuh, J.; Bi, G. Thermal field prediction for laser scanning paths in laser aided additive manufacturing by physics-based machine learning. Comput. Methods Appl. Mech. Eng. 2020, 362, 112734.

- Mozaffar, M.; Liao, S.; Lin, H.; Ehmann, K.; Cao, J. Geometry-agnostic data-driven thermal modeling of additive manufacturing processes using graph neural networks. Addit. Manuf. 2021, 48, 102449.

- Inyang-Udoh, U.; Mishra, S. A Physics-Guided Neural Network Dynamical Model for Droplet-Based Additive Manufacturing. IEEE Trans. Control Syst. Technol. 2022, 30, 1863–1875.

- Mozaffar, M.; Paul, A.; Al-Bahrani, R.; Wolff, S.; Choudhary, A.; Agrawal, A.; Ehmann, K.; Cao, J. Data-driven prediction of the high-dimensional thermal history in directed energy deposition processes via recurrent neural networks. Manuf. Lett. 2018, 18, 35–39.

- Zhang, Z.; Liu, Z.; Wu, D. Prediction of melt pool temperature in directed energy deposition using machine learning. Addit. Manuf. 2021, 37, 101692.

- Nalajam, P.K.; Varadarajan, R. A Hybrid Deep Learning Model for Layer-Wise Melt Pool Temperature Forecasting in Wire-Arc Additive Manufacturing Process. IEEE Access 2021, 9, 100652–100664.

- Hu, F.; Qin, J.; Li, Y.; Liu, Y.; Sun, X. Deep Fusion for Energy Consumption Prediction in Additive Manufacturing. Procedia CIRP 2021, 104, 1878–1883.

- Tian, Q.; Guo, S.; Melder, E.; Bian, L.; Guo, W. Deep Learning-Based Data Fusion Method for In Situ Porosity Detection in Laser-Based Additive Manufacturing. J. Manuf. Sci. Eng. 2021, 143, 041011.

- Zhao, Y.; Zhang, Y.; Wang, W. Research on condition monitoring of FDM equipment based on LSTM. In Proceedings of the 2021 IEEE International Conference on Advances in Electrical Engineering and Computer Applications (AEECA), Dalian, China, 27–28 August 2021; pp. 612–615.

- Yarahmadi, A.M.; Breuß, M.; Hartmann, C. Long Short-Term Memory Neural Network for Temperature Prediction in Laser Powder Bed Additive Manufacturing. In Proceedings of SAI Intelligent Systems Conference; Springer: Cham, Switzerland, 2023; pp. 119–132.

- Chinchanikar, S.; Shaikh, A.A. A Review on Machine Learning, Big Data Analytics, and Design for Additive Manufacturing for Aerospace Applications. J. Mater. Eng. Perform. 2022, 31, 6112–6130.

- Ford, S.; Despeisse, M. Additive manufacturing and sustainability: An exploratory study of the advantages and challenges. J. Clean. Prod. 2016, 137, 1573–1587.

- Tan, Y.; Jin, B.; Nettekoven, A.; Chen, Y.; Yue, Y.; Topcu, U.; Sangiovanni-Vincentelli, A. An Encoder-Decoder Based Approach for Anomaly Detection with Application in Additive Manufacturing. In Proceedings of the 2019 18th IEEE International Conference On Machine Learning And Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 1008–1015.

- Salakhutdinov, R.; Mnih, A.; Hinton, G. Restricted Boltzmann machines for collaborative filtering. In Proceedings of the 24th International Conference on Machine Learning (ICML ’07), Corvallis, OR, USA, 20–24 June 2007; IEEE: New York, NY, USA, 2007; pp. 791–798.

- Hua, Y.; Guo, J.; Zhao, H. Deep Belief Networks and deep learning. In Proceedings of the 2015 International Conference on Intelligent Computing and Internet of Things, Harbin, China, 17–18 January 2015; pp. 1–4.

- Ye, D.; Hong, G.S.; Zhang, Y.; Zhu, K.; Fuh, J.Y.H. Defect detection in selective laser melting technology by acoustic signals with deep belief networks. Int. J. Adv. Manuf. Technol. 2018, 96, 2791–2801.

- Ye, D.; Fuh, J.Y.H.; Zhang, Y.; Hong, G.S.; Zhu, K. In situ monitoring of selective laser melting using plume and spatter signatures by deep belief networks. ISA Trans. 2018, 81, 96–104.

- Li, W.; Zhang, H.; Wang, G.; Xiong, G.; Zhao, M.; Li, G.; Li, R. Deep learning based online metallic surface defect detection method for wire and arc additive manufacturing. Robot. Comput. Manuf. 2023, 80, 102470.

- Chen, Y.W.; Shiu, J.M. An implementation of YOLO-family algorithms in classifying the product quality for the acrylonitrile butadiene styrene metallization. Int. J. Adv. Manuf. Technol. 2022, 119, 8257–8269.

- Wang, R.; Cheung, C.F. CenterNet-based defect detection for additive manufacturing. Expert Syst. Appl. 2022, 188, 116000.

- Petsiuk, A.; Singh, H.; Dadhwal, H.; Pearce, J.M. Synthetic-to-real Composite Semantic Segmentation in Additive Manufacturing. arXiv 2022, arXiv:2210.07466.

- Wong, V.W.H.; Ferguson, M.; Law, K.H.; Lee, Y.-T.T.; Witherell, P. Automatic Volumetric Segmentation of Additive Manufacturing Defects with 3D U-Net. January 2021. Available online: http://arxiv.org/abs/2101.08993 (accessed on 30 October 2022).

- Wang, R.; Cheung, C.; Wang, C. Unsupervised Defect Segmentation with Self-Attention in Additive Manufacturing. SSRN Electron. J. 2022.

- Zhu, H.; Zhang, Y.; Liu, C.; Shi, W. An Adaptive Reinforcement Learning-Based Scheduling Approach with Combination Rules for Mixed-Line Job Shop Production. Math. Probl. Eng. 2022, 2022, 1672166.

- Abualkishik, A.Z.; Almajed, R. Deep Neural Network-based Fusion and Natural Language Processing in Additive Manufacturing for Customer Satisfaction. Fusion Pract. Appl. 2021, 3, 70–90.
