| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Fikret Necati Catbas | -- | 1559 | 2022-12-16 17:36:27 |
| 2 | Camila Xu | -26 word(s) | 1533 | 2022-12-19 03:23:11 |

Cite

Catbas, F.N.; Luleci, F.; Zakaria, M.; Bagci, U.; Laviola, J.J.; Cruz-Neira, C.; Reiners, D. Extended Reality for Civil Engineering Structures. Encyclopedia. Available online: https://encyclopedia.pub/entry/38905 (accessed on 08 February 2026).

Utilization of emerging immersive visualization technologies such as Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) in the architectural, engineering, and construction (AEC) industry has demonstrated that these visualization tools can be paradigm-shifting. Extended Reality (XR), an umbrella term for VR, AR, and MR technologies, has found many diverse use cases in the AEC industry.

Keywords: augmented reality; virtual reality; mixed reality; extended reality; civil; infrastructure; monitoring; SHM

1. Introduction

The interdisciplinary authors of this entry present recent advances in structural health monitoring (SHM), mainly those related to Virtual Reality, Augmented Reality, and Mixed Reality for the condition assessment of civil engineering structures, as these technologies have developed considerably over the last few years. The Civil Infrastructure Technologies for Resilience and Safety (CITRS) laboratory at the University of Central Florida has explored novel technologies for civil engineering applications since the late 1990s [1]. Multiple studies have been carried out since then, including some of the earliest investigations of computer vision [2][3][4][5] in SHM applications. Prior research by CITRS members has also demonstrated the integration of digital documentation of design, inspection, and monitoring data (collected from a civil structure) with calibrated numerical models (“Model Updating”) [6][7]. Briefly, model updating is a methodology for updating a numerical model in real or near-real time based on information obtained from monitoring and inspection data (field data). This technique is essentially what is now known as a digital twin. Over the last decade, CITRS has continued to investigate novel methods for condition assessment and SHM extensively. One of the earliest machine-learning-based studies for SHM applications was also conducted [8][9]. Other new implementations followed, such as using Mixed Reality (MR) to assist inspectors in the field [10][11] and using Virtual Reality (VR) to bring field data to the office, where parties can collaborate in a single VR environment [12]. Another recent collaborative work discusses the latest trends in bridge health monitoring [13]. Generative adversarial networks (GANs) have recently been explored in the civil SHM domain [14]. They were investigated to address the data scarcity problem [15][16][17] and were used for the first time in undamaged-to-damaged domain translation, where the aim is to obtain the damaged response while the civil structure is intact, or vice versa [18][19]. These developments motivated members of the CITRS group to present some of the recent advances in SHM along with other notable studies from the literature.
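As a rough illustration of the model updating idea described above, the following minimal sketch calibrates one stiffness parameter of a single-degree-of-freedom model so that its natural frequency matches a frequency identified from field data. The single-degree-of-freedom idealization, the function names, and all numerical values are assumptions made for illustration only; they are not taken from the cited studies, which update far more detailed numerical models.

```python
import math


def natural_frequency_hz(stiffness: float, mass: float) -> float:
    """Natural frequency f = sqrt(k / m) / (2 * pi) of an undamped 1-DOF model."""
    return math.sqrt(stiffness / mass) / (2.0 * math.pi)


def update_stiffness(mass: float, measured_frequency_hz: float) -> float:
    """Invert the frequency formula: k = m * (2 * pi * f)^2.

    The mass is assumed to be known; only the stiffness is updated.
    """
    return mass * (2.0 * math.pi * measured_frequency_hz) ** 2


# Hypothetical values, chosen only to make the example concrete.
mass = 1000.0                 # kg
design_stiffness = 4.0e6      # N/m, stiffness assumed in the initial model
measured_frequency_hz = 9.5   # Hz, frequency identified from monitoring data

updated_stiffness = update_stiffness(mass, measured_frequency_hz)

print(f"Initial model frequency: {natural_frequency_hz(design_stiffness, mass):.2f} Hz")
print(f"Updated stiffness:       {updated_stiffness:.3e} N/m")
print(f"Updated model frequency: {natural_frequency_hz(updated_stiffness, mass):.2f} Hz")
```

With these assumed numbers, the measured frequency is lower than the initial model predicts, so the updated stiffness comes out below the design value. In a monitoring workflow, the same calibration step would be repeated whenever new field data arrive.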

Civil engineering is arguably one of the oldest and broadest disciplines. It employs various theories, methodologies, tools, and technologies to solve different problems in the field. With advances in computer graphics and hardware, a combination of artificial intelligence (AI), Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) has been used increasingly over the last decade; Extended Reality (XR) is an umbrella term for VR, AR, and MR technologies. The first VR prototype, named “Sensorama”, was introduced in 1956 by cinematographer Morton Heilig, and the first AR headset, named “The Sword of Damocles”, was created by Ivan Sutherland in 1968. These two efforts marked the beginning of the new field of XR. In the 1990s and early 2000s, many research and commercial efforts advanced the technology, and the architecture–engineering–construction (AEC) industry became one of the disciplines that could benefit most from it. However, it was not until about ten years ago that, thanks to advances in real-time graphics hardware and increased computational power in mobile and personal computers, XR became more widespread and more feasible as a tool for different workflows. As of 2022, the use of XR has extended to various applications in industries such as education, military, healthcare, and AEC. This recent progress was made possible by advances in, and miniaturization of, graphics and computational systems and by a significant reduction in the cost and complexity of the technology. In addition, the introduction of the “metaverse” concept, in which users experience virtual collaborative environments online, has accelerated research and development in the XR industry in recent years. XR technologies have various advantageous uses in the AEC industry, such as training and education, structural design, heritage preservation, construction activities, and structural condition assessment.

2. Virtual Reality, Augmented Reality, and Mixed Reality

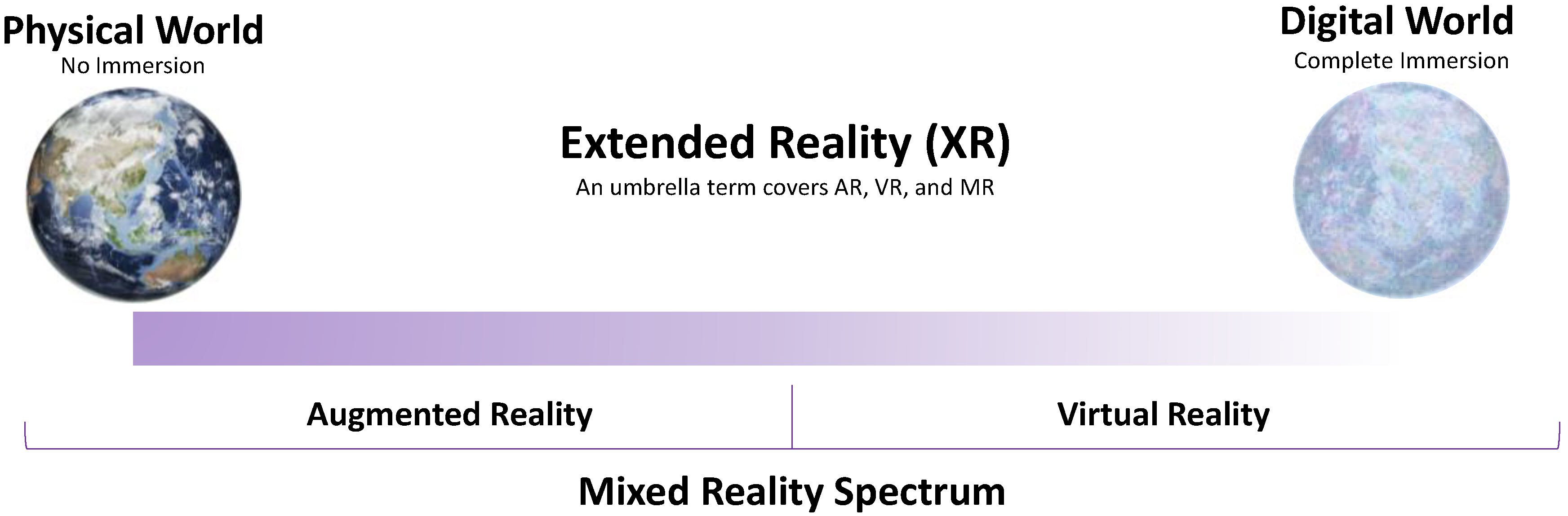

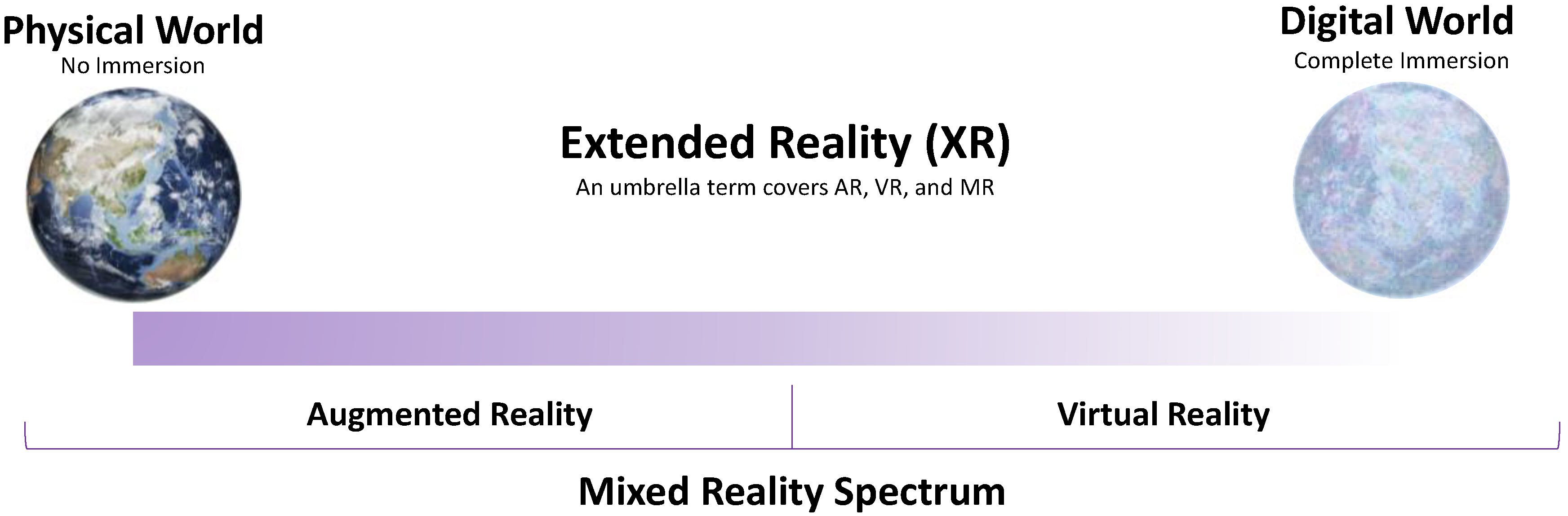

The term Extended Reality (XR) covers VR, AR, and MR and the areas interpolated among them, that is, the set of combinations of the virtual and real worlds along the spectrum introduced by Paul Milgram [20]. Figure 1 shows the reality–virtuality spectrum of XR. As the figure describes, VR is an entirely immersive experience in a digital environment that isolates the user from the real world, whereas AR enhances the real world with digital augmentation overlaid on it. MR is a blend of the real and virtual worlds that provides interaction between real and digital elements. In essence, MR involves the merging of real and virtual environments somewhere along the mixed reality spectrum (or virtuality–reality spectrum) (Figure 1), which connects completely real environments to completely virtual ones [21]. VR is quite different: it isolates the user from the real world, and the user interacts with the digital elements in the virtual world.

Figure 1. The mixed reality spectrum.

Virtual Reality (VR): As described previously, VR provides a completely digital environment in which users experience full immersion. Applications that block the user’s view of the real world to provide a fully immersive digital experience are called Virtual Reality. At present, head-mounted displays (HMDs) and multi-projected environments (e.g., specially designed rooms with multiple large screens, such as the CAVE [22]) are the most common tools for immersing users in virtual spaces. A VR system allows the user to interact with virtual features or items in the virtual world. The defining feature of VR is the interactive, real-time nature of the medium. VR systems generally incorporate auditory and visual feedback, and some also enable other kinds of sensory and force feedback via haptic technology. Widely used HMDs include the Meta Oculus series, HTC Vive, Samsung Gear VR, and Google Cardboard. VR applications are primarily used in education and training, gaming, marketing, travel, and the AEC industry.

Augmented Reality (AR): AR is a view of the real world enhanced with an overlay of digital elements. Applications that overlay holograms or graphics on the real (physical) world are called AR. Although interaction with the superimposed virtual content is possible in an AR setting, AR applications generally aim to enhance the real-world environment with digital objects and usually give the user little to no interaction with that content. Currently, AR systems use devices such as HoloLens, Magic Leap, Google Glass, Vuzix smart glasses, smartphones, tablets, and cameras. Some of these HMDs, e.g., HoloLens and Magic Leap, are used for both AR and MR applications. Like VR, AR has a wide range of uses, such as gaming, manufacturing, maintenance and repair, education and training, and the AEC industry.

Mixed Reality (MR): Experiences that transition along the virtuality–reality spectrum (mixed reality spectrum) are called Mixed Reality. MR is also called Hybrid Reality, as it merges the real and virtual worlds [23]. Presently, MR systems rely on HMDs, which can be classified into two types: see-through HMD glasses (e.g., HoloLens, Magic Leap, Samsung Odyssey+, and Vuzix smart glasses), which let the user see the surroundings clearly while digital holograms displayed in the glass allow interaction with the real surroundings, and immersive HMDs (e.g., Oculus Quest 2 and HTC Vive), which have non-translucent displays that completely block out the real world but use cameras to track the outside world. Like VR and AR, MR has use cases in gaming, education and training, manufacturing, maintenance and repair, and the AEC industry.

Side note: Distinguishing AR applications from MR applications can be challenging because the fundamentals of the two are commonly confused, as is also observed in the literature. Therefore, the studies in the literature that use MR are presented together with AR (AR for Structural Condition Assessment) in this research. The reviewed studies generally use XR to conduct field assessments remotely while simultaneously providing a collaborative work environment for engineers, inspectors, and other third parties. Other studies use XR to reduce human labor in the field and to support inspection by providing inspectors with digital visual aids that interact with the data observed in the real world.

Lastly, game engines and other programs are commonly used to develop VR, AR, and MR applications. Unity and Unreal Engine are the most commonly used platforms; Vuforia, Amazon Sumerian, and CRYENGINE are also used. The developed applications are then deployed to standalone or PC-connected HMDs or other types of devices, mostly running Android, iOS, or Microsoft Windows, for use by the end user.

References

1. Catbas, F.N. Investigation of Global Condition Assessment and Structural Damage Identification of Bridges with Dynamic Testing and Modal Analysis. Ph.D. Dissertation, University of Cincinnati, Cincinnati, OH, USA, 1997.

2. Zaurin, R.; Catbas, F.N. Computer Vision Oriented Framework for Structural Health Monitoring of Bridges. Conference Proceedings of the Society for Experimental Mechanics Series; Scopus Export 2000s. 5959. 2007. Available online: https://stars.library.ucf.edu/scopus2000/5959 (accessed on 2 November 2022).

3. Basharat, A.; Catbas, N.; Shah, M. A Framework for Intelligent Sensor Network with Video Camera for Structural Health Monitoring of Bridges. In Proceedings of the Third IEEE International Conference on Pervasive Computing and Communications Workshops, Kauai, HI, USA, 8–12 March 2005; IEEE: New York, NY, USA, 2005; pp. 385–389.

4. Catbas, F.N.; Khuc, T. Computer Vision-Based Displacement and Vibration Monitoring without Using Physical Target on Structures. In Bridge Design, Assessment and Monitoring; Taylor & Francis: Abingdon, UK, 2018.

5. Dong, C.-Z.; Catbas, F.N. A Review of Computer Vision–Based Structural Health Monitoring at Local and Global Levels. Struct. Health Monit. 2021, 20, 692–743.

6. Catbas, F.N.; Grimmelsman, K.A.; Aktan, A.E. Structural Identification of Commodore Barry Bridge. In Nondestructive Evaluation of Highways, Utilities, and Pipelines IV; Aktan, A.E., Gosselin, S.R., Eds.; SPIE: Bellingham, WA, USA, 2000; pp. 84–97.

7. Aktan, A.E.; Catbas, F.N.; Grimmelsman, K.A.; Pervizpour, M. Development of a Model Health Monitoring Guide for Major Bridges; Report for Federal Highway Administration: Washington, DC, USA, 2002.

8. Gul, M.; Catbas, F.N. Damage Assessment with Ambient Vibration Data Using a Novel Time Series Analysis Methodology. J. Struct. Eng. 2011, 137, 1518–1526.

9. Gul, M.; Catbas, F.N. Statistical Pattern Recognition for Structural Health Monitoring Using Time Series Modeling: Theory and Experimental Verifications. Mech. Syst. Signal Process. 2009, 23, 2192–2204.

10. Karaaslan, E.; Zakaria, M.; Catbas, F.N. Mixed Reality-Assisted Smart Bridge Inspection for Future Smart Cities. In The Rise of Smart Cities; Elsevier: Amsterdam, The Netherlands, 2022; pp. 261–280.

11. Karaaslan, E.; Bagci, U.; Catbas, F.N. Artificial Intelligence Assisted Infrastructure Assessment Using Mixed Reality Systems. Transp. Res. Rec. J. Transp. Res. Board 2019, 2673, 413–424.

12. Luleci, F.; Li, L.; Chi, J.; Reiners, D.; Cruz-Neira, C.; Catbas, F.N. Structural Health Monitoring of a Foot Bridge in Virtual Reality Environment. Procedia Struct. Integr. 2022, 37, 65–72.

13. Catbas, N.; Avci, O. A Review of Latest Trends in Bridge Health Monitoring. In Proceedings of the Institution of Civil Engineers-Bridge Engineering; Thomas Telford Ltd.: London, UK, 2022; pp. 1–16.

14. Luleci, F.; Catbas, F.N.; Avci, O. A Literature Review: Generative Adversarial Networks for Civil Structural Health Monitoring. Front. Built Environ. Struct. Sens. Control Asset Manag. 2022, 8, 1027379.

15. Luleci, F.; Catbas, F.N.; Avci, O. Generative Adversarial Networks for Labeled Acceleration Data Augmentation for Structural Damage Detection. J. Civ. Struct. Health Monit. 2022.

16. Luleci, F.; Catbas, F.N.; Avci, O. Generative Adversarial Networks for Data Generation in Structural Health Monitoring. Front. Built Environ. 2022, 8, 6644.

17. Luleci, F.; Catbas, F.N.; Avci, O. Generative Adversarial Networks for Labelled Vibration Data Generation. In Special Topics in Structural Dynamics & Experimental Techniques; Conference Proceedings of the Society for Experimental Mechanics Series; Springer: Berlin/Heidelberg, Germany, 2023; Volume 5, pp. 41–50.

18. Luleci, F.; Catbas, N.; Avci, O. Improved Undamaged-to-Damaged Acceleration Response Translation for Structural Health Monitoring. Eng. Appl. Artif. Intell. 2022.

19. Luleci, F.; Catbas, F.N.; Avci, O. CycleGAN for Undamaged-to-Damaged Domain Translation for Structural Health Monitoring and Damage Detection. arXiv 2022, arXiv:2202.07831.

20. Milgram, P.; Takemura, H.; Utsumi, A.; Kishino, F. Augmented Reality: A Class of Displays on the Reality-Virtuality Continuum. In Telemanipulator and Telepresence Technologies; Das, H., Ed.; SPIE: Bellingham, WA, USA, 1995; pp. 282–292.

21. Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

22. Cruz-Neira, C.; Sandin, D.J.; DeFanti, T.A.; Kenyon, R.V.; Hart, J.C. The CAVE: Audio Visual Experience Automatic Virtual Environment. Commun. ACM 1992, 35, 64–72.

23. Brigham, T.J. Reality Check: Basics of Augmented, Virtual, and Mixed Reality. Med. Ref. Serv. Q. 2017, 36, 171–178.
