Tourani, A.; Bavle, H.; Sanchez-Lopez, J.L.; Voos, H. Visual Simultaneous Localization and Mapping. Encyclopedia. Available online: https://encyclopedia.pub/entry/38588 (accessed on 28 February 2026).

Tourani, A., Bavle, H., Sanchez-Lopez, J.L., & Voos, H. (2022, December 12). Visual Simultaneous Localization and Mapping. In Encyclopedia. https://encyclopedia.pub/entry/38588

Visual Simultaneous Localization and Mapping (VSLAM) methods refer to the SLAM approaches that employ cameras for pose estimation and map reconstruction and are preferred over Light Detection And Ranging (LiDAR)-based methods due to their lighter weight, lower acquisition costs, and richer environment representation.

visual SLAM

Computer Vision

robotics

1. Introduction

Simultaneous Localization and Mapping (SLAM) refers to the process of estimating a map of an unknown environment while simultaneously tracking the location of an agent within it [1]. Here, the agent can be a domestic robot [2], an autonomous vehicle [3], a planetary rover [4], or even an Unmanned Aerial Vehicle (UAV) [5][6] or an Unmanned Ground Vehicle (UGV) [7]. SLAM serves a wide range of applications in which a prior map of the environment is unavailable or the robot's location is unknown. In this regard, and considering the ever-growing applications of robotics, SLAM has gained considerable attention from industry and the research community in recent years [8][9].

SLAM systems may use various sensors to collect data from the environment, including Light Detection And Ranging (LiDAR), acoustic, and vision sensors [10]. The vision sensor category covers a variety of visual data detectors, including monocular, stereo, event-based, omnidirectional, and Red Green Blue-Depth (RGB-D) cameras. A robot equipped with a vision sensor uses the visual data provided by its cameras to estimate its position and orientation with respect to its surroundings [11]. The process of using vision sensors to perform SLAM is called Visual Simultaneous Localization and Mapping (VSLAM). Utilizing visual data in SLAM applications has the advantages of cheaper hardware requirements, more straightforward object detection and tracking, and the ability to provide rich visual and semantic information [12]. The captured images (or video frames) can also be used for vision-based applications, including semantic segmentation and object detection, as they store a wealth of data for processing. These characteristics have made VSLAM a trending topic in robotics and have prompted robotics and Computer Vision (CV) experts to conduct considerable studies and investigations over the last decades. Consequently, VSLAM can be found in various types of applications where it is essential to reconstruct a 3D model of the environment, such as autonomous robots, Augmented Reality (AR), and service robots [13].

Following the design introduced by [14] to tackle high computational cost, SLAM approaches mainly contain two threads that are executed in parallel, known as tracking and mapping. A fundamental classification of VSLAM algorithms is therefore based on the methods and strategies employed in each thread. These solutions also differ in the type of data they use, which divides them into two categories: direct and indirect (feature-based) methods [15]. Indirect methods extract feature points (i.e., keypoints) from textured regions of the scene and track them by matching their descriptors across sequential frames. Although the feature extraction and matching stages are computationally expensive, these methods are precise and robust against photometric changes in frame intensities. Direct algorithms, on the other hand, estimate camera motion directly from pixel-level data by formulating an optimization problem that minimizes the photometric error. By relying on photometric information, these methods use all camera output pixels and track their displacement across sequential frames under constraints on properties such as brightness and color. These characteristics enable direct approaches to model more information from images than indirect techniques and to achieve a higher-accuracy 3D reconstruction. However, while direct methods work better in texture-less environments and require no additional computation for feature extraction, they often face large-scale optimization problems [16], and varying lighting conditions negatively impact their accuracy. The pros and cons of each approach have encouraged researchers to develop hybrid solutions that combine both. Hybrid methods commonly integrate the indirect and direct stages so that one initializes and corrects the other.
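To make the notion of photometric error concrete, the following is a minimal sketch rather than any published method. It assumes the camera motion can be reduced to an integer 2D pixel shift between two small grayscale images, and it replaces the iterative optimization used by real direct methods with a brute-force search. The function names `photometric_error` and `estimate_shift` are hypothetical.

```python
def photometric_error(ref, cur, dx, dy):
    """Mean squared intensity difference between ref(x, y) and cur(x + dx, y + dy)."""
    height, width = len(ref), len(ref[0])
    total, count = 0.0, 0
    for y in range(height):
        for x in range(width):
            u, v = x + dx, y + dy
            if 0 <= u < width and 0 <= v < height:  # ignore pixels that leave the image
                diff = ref[y][x] - cur[v][u]
                total += diff * diff
                count += 1
    return total / count if count else float("inf")


def estimate_shift(ref, cur, max_shift=2):
    """Brute-force search for the integer shift with the lowest photometric error.

    Assumption for illustration only: real direct methods optimize a full
    camera pose with gradient-based solvers instead of enumerating shifts.
    """
    candidates = [(dx, dy)
                  for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda shift: photometric_error(ref, cur, *shift))
```

Because every pixel contributes to the error, no keypoints or descriptors are needed, but any global brightness change between the two images would also raise the error, which mirrors the sensitivity to lighting described above.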

Additionally, as VSLAM systems mainly include a Visual Odometry (VO) front-end to locally estimate the path of the camera and a SLAM back-end to optimize the created map, the variety of modules used in each part results in implementation variations. VO provides a preliminary estimate of the robot's pose based on local consistency, which is sent to the back-end for optimization. Thus, the primary distinction between VSLAM and VO is whether the global consistency of the map and the predicted trajectory is taken into account. Several state-of-the-art VSLAM applications also include two additional modules: loop closure detection and mapping [15]. They are responsible for recognizing previously visited locations for more precise tracking and for reconstructing the map based on the camera pose.
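The difference between local and global consistency can be illustrated with a small sketch. It assumes planar motion, so each VO estimate is a relative step `(dx, dy, dtheta)` in the robot frame, and it chains these steps into a trajectory. The helper names `compose`, `integrate`, and `loop_gap` are hypothetical and not taken from any VSLAM library.

```python
import math


def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), given in the robot frame, to a global pose."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (x + dx * math.cos(theta) - dy * math.sin(theta),
            y + dx * math.sin(theta) + dy * math.cos(theta),
            theta + dtheta)


def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain relative motions into a trajectory, as a VO front-end would."""
    trajectory = [start]
    for delta in deltas:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory


def loop_gap(trajectory):
    """Distance between the first and last position of a trajectory."""
    (x0, y0, _), (x1, y1, _) = trajectory[0], trajectory[-1]
    return math.hypot(x1 - x0, y1 - y0)


# A square loop: four 1 m steps, each followed by a 90 degree turn.
ideal = integrate([(1.0, 0.0, math.pi / 2)] * 4)
# The same loop with a small, constant heading bias in every step.
drifting = integrate([(1.0, 0.0, math.pi / 2 + 0.02)] * 4)
```

With exact steps the loop closes, whereas a small per-step bias leaves a gap between start and end. Each step is locally plausible, so only a back-end that enforces global consistency (for example after a loop closure is detected) can remove this accumulated drift.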

2. VSLAM Setup

As discussed in the previous section on the evolution of VSLAM algorithms, adding and refining various modules has improved the architecture of existing techniques and enriched the approaches introduced afterward. This section therefore investigates the configurations found in current VSLAM methodologies. Considering different setups, pipelines, and available configurations, current approaches can be classified into the categories below:

2.1. Sensors and Data Acquisition

The early-stage implementation of a VSLAM algorithm introduced by Davison et al. [17] was equipped with a monocular camera for trajectory recovery. Monocular cameras are the most common vision sensors for a wide range of tasks, such as object detection and tracking [18]. Stereo cameras, on the other hand, contain two or more image sensors, enabling them to perceive depth in the captured images, which leads to more accurate performance in VSLAM applications. These camera setups are cost-efficient and provide informative perception for higher accuracy demands. RGB-D cameras are another type of visual sensor used in VSLAM and supply both the depth and the colors of the scene. These vision sensors can provide rich information about the environment in straightforward circumstances (e.g., proper lighting and motion speed), but they often struggle to cope with conditions where the illumination is low or the dynamic range in the scene is high.
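As a brief illustration of how a stereo pair yields depth, the sketch below applies the standard relation for a rectified pinhole stereo rig, depth = focal length × baseline / disparity. The function name and the numeric values are illustrative assumptions, not parameters of any particular camera.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters of a point seen by a rectified stereo pair.

    disparity_px: horizontal pixel offset of the point between left and right image
    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centers in meters
    """
    if disparity_px <= 0:
        return float("inf")  # zero disparity corresponds to a point at infinity
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 0.5 m baseline.
near = depth_from_disparity(35.0, 700.0, 0.5)
far = depth_from_disparity(3.5, 700.0, 0.5)
```

Since depth is inversely proportional to disparity, a fixed matching error of a fraction of a pixel translates into a much larger depth error for distant points than for nearby ones.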

In recent years, event cameras have also been used in various VSLAM applications. These low-latency, bio-inspired vision sensors report pixel-level brightness changes when motion is detected instead of producing standard intensity frames, leading to a high-dynamic-range output that is not affected by motion blur [19]. In contrast with standard cameras, event-based sensors supply trustworthy visual information during high-speed motion and in scenes with a wide dynamic range, but fail to provide sufficient information when the motion rate is low. Although event cameras can outperform standard visual sensors in severe illumination and dynamic range conditions, they mainly generate unsynchronized information about the environment, which makes traditional vision algorithms unable to process their outputs [20]. Additionally, using spatio-temporal windows of events along with data obtained from other sensors can provide rich pose estimation and tracking information.
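The behavior described above can be sketched with a commonly used idealized event model, in which a pixel fires an event whenever its log intensity has changed by more than a contrast threshold since its last event. The code below simulates this from ordinary frames, which is an assumption made for illustration (real sensors fire asynchronously per pixel). The name `generate_events` and the threshold value are hypothetical.

```python
import math


def generate_events(frames, timestamps, contrast_threshold=0.2):
    """Return (t, x, y, polarity) events from a sequence of intensity frames.

    Idealized model: an event fires when the log intensity at a pixel differs
    from its value at the pixel's previous event by at least the threshold.
    """
    eps = 1e-3  # avoids log(0) for black pixels
    reference = [[math.log(value + eps) for value in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                log_value = math.log(value + eps)
                delta = log_value - reference[y][x]
                if abs(delta) >= contrast_threshold:
                    events.append((t, x, y, 1 if delta > 0 else -1))
                    reference[y][x] = log_value  # reset the pixel's reference level
    return events
```

A static scene produces no events at all under this model, which is why such sensors provide little information at low motion rates, while a moving edge produces a sparse stream of positive and negative events rather than full frames.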

Moreover, some approaches use a multi-camera setup to counter the common issues of working in a real-world environment and to improve localization precision. By providing cameras with overlapping fields of view, multiple visual sensors help in situations where complicated problems such as occlusion, camouflage, sensor failure, or sparsity of trackable texture occur. Although multi-camera setups can resolve some data acquisition issues, camera-only VSLAM systems may still face various challenges, such as motion blur when encountering fast-moving objects, feature mismatching in low or severe illumination, and failure to account for dynamic objects in rapidly changing scenes. Hence, some VSLAM applications are equipped with multiple sensors alongside cameras. Fusing events with standard frames [21] or integrating other sensors, such as LiDARs [22] and IMUs, into VSLAM are some of the existing solutions.

2.2. Visual Features

Detecting visual features and utilizing feature descriptor information for pose estimation is an essential stage of indirect VSLAM methodologies. These approaches employ various feature extraction algorithms to understand the environment better and to track the feature points in consecutive frames. The feature extraction stage draws on a wide range of algorithms, including SIFT [23], SURF [24], FAST [25], Binary Robust Independent Elementary Features (BRIEF) [26], ORB [27], etc. Among them, ORB features have the advantage of fast extraction and matching without a large loss of accuracy compared to SIFT and SURF [28].
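One reason binary descriptors such as BRIEF and ORB match quickly is that they can be compared with the Hamming distance, i.e., the number of differing bits. The sketch below shows brute-force matching with a mutual nearest-neighbor check. It uses 8-bit integers as stand-ins for real descriptors (which are much longer), and the function names and the distance threshold are illustrative assumptions.

```python
def hamming(a, b):
    """Number of bits in which two binary descriptors (stored as ints) differ."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, max_distance=2):
    """Brute-force matching with a mutual nearest-neighbor (cross-check) test.

    Returns (query_index, train_index, distance) tuples.
    """
    def nearest(descriptor, pool):
        return min(range(len(pool)), key=lambda j: hamming(descriptor, pool[j]))

    matches = []
    for i, descriptor in enumerate(query):
        j = nearest(descriptor, train)
        distance = hamming(descriptor, train[j])
        # keep the pair only if each descriptor is the other's best match
        if nearest(train[j], query) == i and distance <= max_distance:
            matches.append((i, j, distance))
    return matches


# Toy 8-bit descriptors from two consecutive frames.
frame_a = [0b11110000, 0b00001111, 0b10101010]
frame_b = [0b00001110, 0b11110001, 0b01010101]
matches = match_descriptors(frame_a, frame_b)
```

The third descriptor in `frame_a` has no close counterpart in `frame_b`, so the cross-check rejects it instead of forcing a wrong correspondence.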

The problem with some of these methods is that they cannot effectively adapt to complex and unforeseen situations. Thus, many researchers have employed Convolutional Neural Networks (CNNs) to extract deep features from images for various stages, including VO, pose estimation, and loop closure detection. These techniques may be supervised or unsupervised frameworks, depending on how the methods operate.

2.3. Target Environments

A strong presumption in many traditional VSLAM practices is that the robot works in a static world with no sudden or unanticipated changes. Consequently, although many systems demonstrate a successful application in specific settings, unexpected changes in the environment (e.g., the existence of moving objects) are likely to cause complications for the system and degrade the state estimation quality to a large extent. Systems that work in dynamic environments usually employ algorithms such as Optical Flow or Random Sample Consensus (RANSAC) [29] to detect movements in the scene, classify the moving objects as outliers, and skip them while reconstructing the map. Such systems use either geometric or semantic information, or try to improve the localization scheme by combining the two [30].
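The outlier-rejection idea behind RANSAC can be sketched as follows. For brevity, the example assumes that the static background moves between two frames by a pure 2D translation, so a single correspondence suffices to form a hypothesis. Real systems fit richer geometric models, and the function name, threshold, and point coordinates here are all illustrative.

```python
import random


def ransac_translation(src, dst, threshold=0.5, iterations=50, seed=0):
    """Estimate a 2D translation from point correspondences, ignoring outliers.

    src and dst must be non-empty lists of (x, y) points of equal length.
    Returns the refined translation and the indices of the inlier correspondences.
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        k = rng.randrange(len(src))  # minimal sample: one correspondence
        tx, ty = dst[k][0] - src[k][0], dst[k][1] - src[k][1]
        inliers = [i for i, (p, q) in enumerate(zip(src, dst))
                   if abs(q[0] - p[0] - tx) <= threshold
                   and abs(q[1] - p[1] - ty) <= threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # refit the model on the largest consensus set
    n = len(best_inliers)
    tx = sum(dst[i][0] - src[i][0] for i in best_inliers) / n
    ty = sum(dst[i][1] - src[i][1] for i in best_inliers) / n
    return (tx, ty), best_inliers


# Six background points shift by (2, -1); two points on moving objects do not.
src = [(0, 0), (1, 0), (0, 1), (3, 2), (4, 4), (2, 5), (6, 1), (5, 5)]
shifts = [(2, -1)] * 6 + [(5, 4), (-3, 2)]
dst = [(p[0] + s[0], p[1] + s[1]) for p, s in zip(src, shifts)]
model, inliers = ransac_translation(src, dst)
```

Correspondences outside the consensus set (indices 6 and 7 in this toy data) are the ones a dynamic-scene pipeline would treat as moving objects and exclude from map reconstruction.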

Additionally, as a general taxonomy, environments are classified into indoor and outdoor categories. An outdoor environment can be an urban area with structural landmarks, such as buildings and road textures, and massive motion changes, or an off-road zone with a weak motion state, such as moving clouds and vegetation, the texture of the sand, etc. As a result, the number of trackable points in off-road environments is lower than in urban areas, which increases the risk of localization and loop closure detection failure. Indoor environments, on the other hand, contain scenes with entirely different global spatial properties, such as corridors, walls, and rooms. It should be anticipated that a VSLAM system that works well in one of these zones might not show the same performance in other environments.

2.4. System Evaluation

While some VSLAM approaches, especially those capable of working in dynamic and challenging environments, are tested on robots in real-world conditions, many research works have used publicly available datasets to demonstrate their applicability. In this regard, the RAWSEEDS Dataset by Bonarini et al. [31] is a well-known multi-sensor benchmarking tool containing indoor, outdoor, and mixed robot trajectories with ground-truth data. It is one of the oldest publicly available benchmarking tools for robotic and SLAM purposes. SceneNet RGB-D by McCormac et al. [32] is another favored dataset for scene understanding problems, such as semantic segmentation and object detection, containing five million large-scale rendered RGB-D images. The dataset also contains pixel-perfect ground-truth labels, exact camera poses, and depth data, making it a potent tool for VSLAM applications. Many recent works in the domain of VSLAM and VO have tested their approaches on the Technische Universität München (TUM) RGB-D dataset [33]. This dataset and benchmarking tool contains color and depth images captured by a Microsoft Kinect sensor and their corresponding ground-truth sensor trajectories. Further, NTU VIRAL by Nguyen et al. [34] is a dataset collected by a UAV equipped with a 3D LiDAR, cameras, IMUs, and multiple Ultra-Wideband (UWB) sensors. The dataset contains indoor and outdoor instances and is targeted at evaluating autonomous driving and aerial operation performance.

Moreover, the European Robotics Challenge (EuRoC) Micro Aerial Vehicle (MAV) dataset by Burri et al. [35] is another popular dataset containing images captured by a stereo camera, along with synchronized IMU measurements and motion ground truth. The collected data in EuRoC MAV are classified into easy, medium, and difficult categories according to the surrounding conditions. OpenLORIS-Scene by Shi et al. [36] is another publicly available dataset for VSLAM works, containing a wide range of data collected by a wheeled robot equipped with various sensors. It provides proper data for monocular and RGB-D algorithms, along with odometry data from wheel encoders. As a more general-purpose dataset used in VSLAM applications, KITTI [37] is a popular collection of data captured by two high-resolution RGB and grayscale video cameras on a moving vehicle. KITTI provides accurate ground truth using GPS and laser sensors, making it a highly popular dataset for evaluation in mobile robotics and autonomous driving domains. TartanAir [38] is another benchmarking dataset for the evaluation of SLAM algorithms under challenging scenarios. Additionally, the Imperial College London and National University of Ireland Maynooth (ICL-NUIM) [39] dataset is another VO dataset containing handheld RGB-D camera sequences, considered a benchmark for many SLAM works.

In contrast with the previous datasets, some other datasets contain data acquired using particular cameras instead of regular ones. For instance, the Event Camera Dataset introduced by Mueggler et al. [40] is a dataset with samples collected using an event-based camera for high-speed robotic evaluations. Dataset instances contain inertial measurements and intensity images, together with ground truth captured by a motion-capture system, making it a suitable benchmark for VSLAM systems equipped with event cameras.

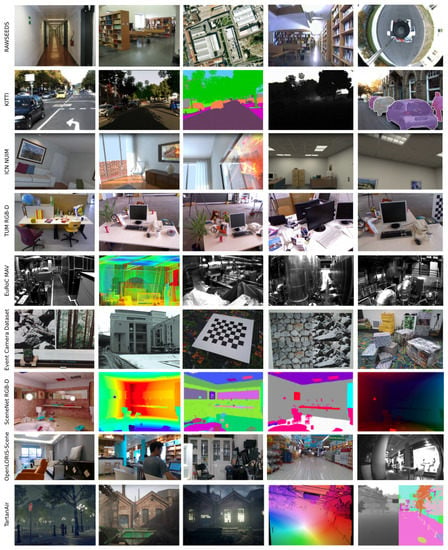

The mentioned datasets are used in multiple VSLAM methodologies according to their sensor setups, applications, and target environments. These datasets mainly contain cameras’ extrinsic and intrinsic calibration parameters and ground-truth data. The summarized characteristics of the datasets and some instances of each are shown in Table 1 and Figure 1, respectively.

Figure 1. Instances of some of the most popular visual SLAM datasets used for evaluation in various papers.
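Ground-truth trajectories such as those shipped with the datasets above are typically used to score an estimated trajectory. One widely used measure is the root-mean-square absolute trajectory error. The sketch below is a simplified version that assumes the two trajectories are already time-associated and expressed in the same coordinate frame, whereas benchmark tools usually perform an alignment step first. The function name `ate_rmse` is hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Root-mean-square translational error between two aligned trajectories.

    Both arguments are equally long lists of (x, y, z) positions.
    Assumption: no timestamp association or rigid alignment is needed.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [sum((e - g) ** 2 for e, g in zip(p, q))
                      for p, q in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Toy example: the estimate drifts sideways from a straight ground-truth path.
truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
estimate = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.4, 0.0)]
error = ate_rmse(estimate, truth)
```

A single scalar like this makes it easy to compare methods on the same sequence, although it hides where along the trajectory the error accumulates.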

References

- Khairuddin, A.R.; Talib, M.S.; Haron, H. Review on simultaneous localization and mapping (SLAM). In Proceedings of the 2015 IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 27–29 November 2015; pp. 85–90.

- Vallivaara, I.; Haverinen, J.; Kemppainen, A.; Röning, J. Magnetic field-based SLAM method for solving the localization problem in mobile robot floor-cleaning task. In Proceedings of the 2011 15th International Conference on Advanced Robotics (ICAR), Tallinn, Estonia, 20–23 June 2011; pp. 198–203.

- Zou, Q.; Sun, Q.; Chen, L.; Nie, B.; Li, Q. A comparative analysis of LiDAR SLAM-based indoor navigation for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6907–6921.

- Geromichalos, D.; Azkarate, M.; Tsardoulias, E.; Gerdes, L.; Petrou, L.; Perez Del Pulgar, C. SLAM for autonomous planetary rovers with global localization. J. Field Robot. 2020, 37, 830–847.

- Yang, T.; Li, P.; Zhang, H.; Li, J.; Li, Z. Monocular vision SLAM-based UAV autonomous landing in emergencies and unknown environments. Electronics 2018, 7, 73.

- Li, J.; Bi, Y.; Lan, M.; Qin, H.; Shan, M.; Lin, F.; Chen, B.M. Real-time simultaneous localization and mapping for uav: A survey. In Proceedings of the International Micro Air Vehicle Competition and Conference (IMAV), Beijing, China, 17–21 October 2016; Volume 2016, p. 237.

- Liu, Z.; Chen, H.; Di, H.; Tao, Y.; Gong, J.; Xiong, G.; Qi, J. Real-time 6d lidar slam in large scale natural terrains for ugv. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 662–667.

- Gupta, A.; Fernando, X. Simultaneous Localization and Mapping (SLAM) and Data Fusion in Unmanned Aerial Vehicles: Recent Advances and Challenges. Drones 2022, 6, 85.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Zaffar, M.; Ehsan, S.; Stolkin, R.; Maier, K.M. Sensors, slam and long-term autonomy: A review. In Proceedings of the 2018 NASA/ESA Conference on Adaptive Hardware and Systems (AHS), Edinburgh, UK, 6–9 August 2018; pp. 285–290.

- Gao, X.; Zhang, T. Introduction to Visual SLAM: From Theory to Practice; Springer Nature: Berlin, Germany, 2021.

- Filipenko, M.; Afanasyev, I. Comparison of various slam systems for mobile robot in an indoor environment. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Madeira Island, Portugal, 25–27 September 2018; pp. 400–407.

- Yeh, Y.J.; Lin, H.Y. 3D reconstruction and visual SLAM of indoor scenes for augmented reality application. In Proceedings of the 2018 IEEE 14th International Conference on Control and Automation (ICCA), Anchorage, AK, USA, 12–15 June 2018; pp. 94–99.

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Duan, C.; Junginger, S.; Huang, J.; Jin, K.; Thurow, K. Deep Learning for Visual SLAM in Transportation Robotics: A Review. Transp. Saf. Environ. 2020, 1, 177–184.

- Outahar, M.; Moreau, G.; Normand, J.M. Direct and Indirect vSLAM Fusion for Augmented Reality. J. Imaging 2021, 7, 141.

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- He, M.; Zhu, C.; Huang, Q.; Ren, B.; Liu, J. A review of monocular visual odometry. Vis. Comput. 2020, 36, 1053–1065.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

- Jiao, J.; Huang, H.; Li, L.; He, Z.; Zhu, Y.; Liu, M. Comparing representations in tracking for event camera-based slam. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1369–1376.

- Vidal, A.R.; Rebecq, H.; Horstschaefer, T.; Scaramuzza, D. Ultimate SLAM? Combining events, images, and IMU for robust visual SLAM in HDR and high-speed scenarios. IEEE Robot. Autom. Lett. 2018, 3, 994–1001.

- Xu, L.; Feng, C.; Kamat, V.R.; Menassa, C.C. An occupancy grid mapping enhanced visual SLAM for real-time locating applications in indoor GPS-denied environments. Autom. Constr. 2019, 104, 230–245.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Gool, L.V. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Viswanathan, D.G. Features from accelerated segment test (fast). In Proceedings of the 10th Workshop on Image Analysis for Multimedia Interactive Services, London, UK, 6–8 May 2009; pp. 6–8.

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. Brief: Binary robust independent elementary features. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 778–792.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Karami, E.; Prasad, S.; Shehata, M. Image matching using SIFT, SURF, BRIEF and ORB: Performance comparison for distorted images. arXiv 2017, arXiv:1710.02726.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Cui, L.; Ma, C. SOF-SLAM: A semantic visual SLAM for dynamic environments. IEEE Access 2019, 7, 166528–166539.

- Bonarini, A.; Burgard, W.; Fontana, G.; Matteucci, M.; Sorrenti, D.G.; Tardos, J.D. Rawseeds: Robotics advancement through web-publishing of sensorial and elaborated extensive data sets. In Proceedings of the 2006 International Conference on Intelligent Robots and Systems (IROS), Beijing, China, 9–15 October 2006; Volume 6, p. 93.

- McCormac, J.; Handa, A.; Leutenegger, S.; Davison, A.J. Scenenet rgb-d: Can 5m synthetic images beat generic imagenet pre-training on indoor segmentation? In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2678–2687.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580.

- Nguyen, T.M.; Yuan, S.; Cao, M.; Lyu, Y.; Nguyen, T.H.; Xie, L. NTU VIRAL: A Visual-Inertial-Ranging-Lidar dataset, from an aerial vehicle viewpoint. Int. J. Robot. Res. 2021, 41, 270–280.

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

- Shi, X.; Li, D.; Zhao, P.; Tian, Q.; Tian, Y.; Long, Q.; Zhu, C.; Song, J.; Qiao, F.; Song, L.; et al. Are we ready for service robots? the openloris-scene datasets for lifelong slam. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3139–3145.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Wang, W.; Zhu, D.; Wang, X.; Hu, Y.; Qiu, Y.; Wang, C.; Hu, Y.; Kapoor, A.; Scherer, S. Tartanair: A dataset to push the limits of visual slam. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4909–4916.

- Handa, A.; Whelan, T.; McDonald, J.; Davison, A.J. A benchmark for RGB-D visual odometry, 3D reconstruction and SLAM. In Proceedings of the 2014 IEEE International Conference on ROBOTICS and Automation (ICRA), Hong Kong, China, 31 May–5 June 2014; pp. 1524–1531.

- Mueggler, E.; Rebecq, H.; Gallego, G.; Delbruck, T.; Scaramuzza, D. The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM. Int. J. Robot. Res. 2017, 36, 142–149.
