| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | Truong X. Nguyen | -- | 2917 | 2022-12-05 19:05:15 |
| 2 | | Jessie Wu | + 5 word(s) | 2922 | 2022-12-06 04:56:34 |
| 3 | | Jessie Wu | Meta information modification | 2922 | 2022-12-06 05:02:02 |
| 4 | | Jessie Wu | -5 word(s) | 2917 | 2022-12-06 05:03:47 |

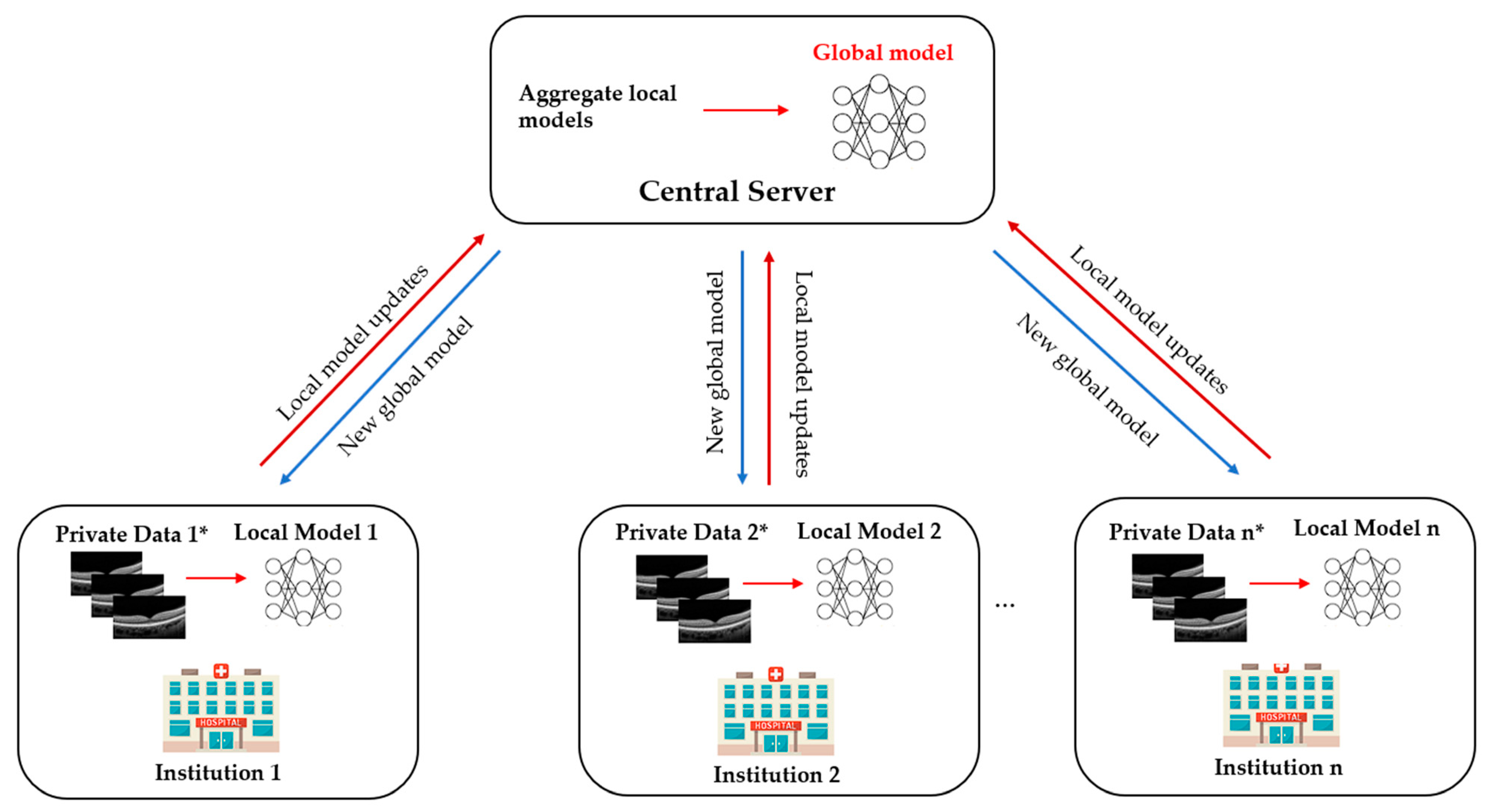

Advances in deep learning (DL), a branch of artificial intelligence, have had a tremendous impact on the field of ocular imaging. Specifically, DL has been utilised to detect and classify various ocular diseases on retinal photographs, optical coherence tomography (OCT) images, and OCT-angiography images. To achieve robust and generalisable model performance, DL training strategies have traditionally required extensive and diverse training datasets from various sites to be transferred and pooled in a “centralised location”. However, transferring data in this way raises practical concerns about data security and patient privacy. Federated learning (FL) is a distributed collaborative learning paradigm that enables multiple collaborators to coordinate model training without sharing confidential data. This distributed training approach has great potential to preserve data privacy across institutions and to reduce the risk of data leakage from data pooling or centralisation.
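The distributed training idea can be illustrated with a minimal sketch of weighted model averaging, a scheme commonly known as federated averaging. This is an illustrative toy under stated assumptions, not the method of any study discussed in this entry: the two-parameter linear model, the three synthetic "sites", and the names `local_update`, `federated_average`, and `train` are all hypothetical. In each round, every site refines the shared model on its own data, and only the resulting weights are sent back to be averaged.

```python
from typing import List, Tuple

Weights = List[float]                 # [slope, intercept] of a toy linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs held privately by one site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few epochs of full-batch gradient descent on one site's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error for y = w * x + b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Average the site models, weighting each by its local sample count."""
    total = sum(sizes)
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


def train(sites: List[Dataset], rounds: int = 200) -> Weights:
    """Coordinate training; only model weights travel, never the raw data."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Three hypothetical sites, each holding noise-free samples of y = 2x + 1
sites = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    [(-1.0, -1.0), (0.5, 2.0)],
]

if __name__ == "__main__":
    print(train(sites))
```

Weighting by sample count lets larger sites contribute proportionally more to the shared model. A real deployment would also need secure communication and handling of differences in data distribution between sites, which this sketch omits.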

1. What Is Federated Learning?

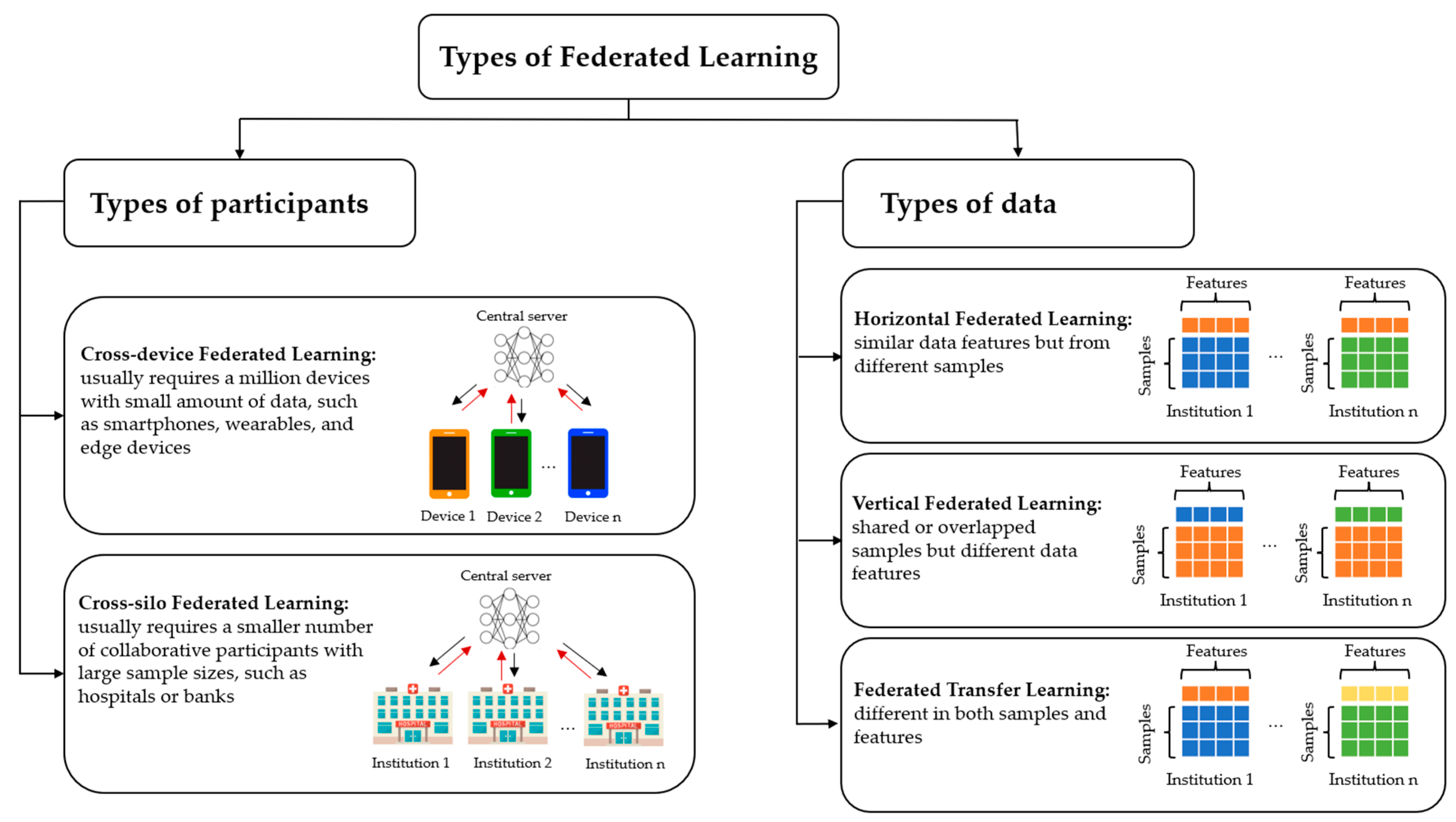

2. Types of Federated Learning

3. Current Federated Learning Applications in Ophthalmology

3.1. Diabetic Retinopathy

3.2. Retinopathy of Prematurity

4. Future Direction

Ophthalmology is an imaging-driven medical speciality with unique opportunities for implementing DL systems. Ocular imaging is not only fast and cheap compared with other imaging modalities such as CT or MRI scans, but also contains essential information on ocular and systemic diseases. The use of FL in prior diabetic retinopathy (DR) and retinopathy of prematurity (ROP) studies illustrates its potential to overcome privacy challenges and inspires further deployment of FL in other ophthalmic diseases. Further development and application of FL in real-world clinics is warranted.

4.1. Multi-Modal Federated Learning

4.2. Federated Learning and Rare Ocular Diseases

4.3. Blockchain-Based Federated Learning

4.4. Decentralised Federated Learning

4.5. Federated Learning and Fifth Generation (5G) and Beyond Technology

5. Conclusions
