
Le, T.; Elelu, K.; Le, C. Audio-Based Hazard Detection for Construction Safety. Encyclopedia. Available online: https://encyclopedia.pub/entry/37514 (accessed on 13 January 2026).


Le, T., Elelu, K., & Le, C. (2022, December 01). Audio-Based Hazard Detection for Construction Safety. In Encyclopedia. https://encyclopedia.pub/entry/37514


Safety-critical sounds at job sites play an essential role in construction safety, but workers’ ability to hear them is often diminished by the use of hearing protection and the complex nature of construction noise. Thus, preserving or augmenting the auditory situational awareness of construction workers has become a critical need.

auditory signal processing

hazard detection

auditory situational awareness

construction safety

AI

1. Introduction

The recognition of auditory safety cues at job sites plays a vital role in preventing injuries and deaths for construction workers [1]. Research has found a significant correlation between a lack of auditory signal awareness and an increase in unsafe actions leading to fatalities due to the failure to stay vigilant in hazardous situations [2][3]. Inadequate auditory situation awareness is often caused by declining hearing capability due to the use of hearing protection and the complex nature of construction noise [3]. Therefore, the development of wearable devices capable of automated detection of acoustic safety signals has received increasing attention from the research community.

Despite the critical need for augmenting the hearing of safety cues for construction workers, research in this area is still lagging. Previous studies on wearable safety devices have focused on employing real-time data analytics to continuously measure a wide variety of safety performance metrics [4] other than auditory signals. Typical functions of existing wearable safety devices include physiological monitoring, environmental sensing, proximity detection, and location tracking of construction hazards [5]. Adopting computer vision algorithms to extract information from images or videos is among the most popular approaches to hazard detection [6][7][8]. This approach still faces various challenges, such as the limited field of view of digital cameras, varying illumination, and occlusions, which could hinder its widespread use at complicated construction sites. Others employed kinematic sensors, such as gyroscopes and accelerometers, to record the kinematic signals of the equipment and to detect its activities [9][10]. However, kinematic sensors fail to detect hazards when they cannot be directly attached to the body of a machine that vibrates during operation, such as jackhammers, concrete pumps, and concrete truck mixers. It is also expensive and complex to deploy sensing devices on every piece of construction equipment.

Audio-based hazard detection has emerged as a supplementary technique that can help workers monitor the work environment effectively. Processing audio signals for automated detection has meaningful implications because hazardous situations usually cause strong acoustic emissions. A variety of hazard detection models employing advanced machine learning techniques for signal processing have been developed for workplace environments other than construction sites [11][12][13][14][15]. They possess great potential to enable workers to quickly detect auditory safety cues that are important for their safety. These computing advances have been implemented in many different environments, including indoor and public environments [16][17][18][19][20][21][22][23][24][25][26], medical and health care systems [27][28], and working environments [29][30][31][32][33]. However, current research does not sufficiently address automated auditory surveillance for construction safety.

2. Audio Signal Processing Applications and Methods

2.1. Application Contexts of Auditory Surveillance

Auditory surveillance has been applied to detect abnormal events in various contexts. As shown in Table 1, sound-based technology has been implemented in the following areas: home security [23], public environments [16][17][18][19][20][21][22][24][25][26], offices [29], medical and health care facilities [27][28], and industrial plants [31][32].

Table 1. Applications of sound surveillance.

| Application | References |
|---|---|
| Home security | [23] |
| Detection of critical situations in a railway system | [24] |
| General surveillance in public areas | [16][17][19][20][21][22] |
| Detection of crimes in elevators | [25] |
| Detection of human emotions in public spaces | [18][26] |
| Office surveillance | [29] |
| Medical and health care facilities | |
| Surveillance of the elderly, the convalescent, or pregnant women | [27] |
| Automatic fall detection to improve the quality of life for independent older adults | [28] |
| Industrial plants | |
| Fault diagnosis of an induction motor | [31] |
| Detection of abnormality or failure of equipment | [32] |

Public environments have received the most attention from the research community. Despite complicated noises in public, many studies achieved promising results for the surveillance of abnormal sounds such as glass breaking, screams, gunshots, and explosions. For example, Ref. [24] developed a technique for detecting shouting events in a real-life railway environment. Not only are audio events automatically detected, but the positions of the acoustic sources are also localized [17]. Audio events of gunshots could be detected based on a novelty detection approach, which offers a solution to detect abnormality in continuous audio recordings in public places [16]. Another similar architecture for acoustic surveillance of abnormal situations under different acoustic backgrounds was built to detect vocal reactions (screams, expressions of pain) and non-vocal atypical events associated with hazardous situations (gunshots and explosions) [19]. Models for the detection of abnormal acoustic events from normal background sound were also developed by several authors [20]. A few efforts have been made focusing on the detection of crimes in elevators [25] and the detection of human emotions based on verbal sounds in hazardous situations [18][26]. Ref. [29] built a system for acoustic surveillance to detect abnormal sounds from people talking, opening and closing doors, and using computers and other devices in an office.

In addition, many studies have implemented signal processing for the surveillance of healthcare facilities. For example, a system to extract sound features for medical telesurvey was developed by [27]. It can classify detected sounds into normal and abnormal types. The system’s purpose is to detect severe accidents such as falls or fainting anywhere in the living area, which is useful for the surveillance of the elderly, the convalescent, or pregnant women. Furthermore, since older adults living alone can be in serious trouble when they fall and are unable to call for assistance, a framework that detects falls by analyzing environmental sounds was proposed [28].

In industrial sectors, current research on applications of sound-based detection has focused on detecting abnormal behaviors of machines or equipment. An acoustic-based fault diagnosis technique for a three-phase induction motor was presented to determine whether the motor is in good or bad condition [31]. Many rotating electric motors can be diagnosed using acoustic signals; this can prevent unexpected failure and can improve the maintenance of electric motors. The advantages of the proposed acoustic-based fault diagnosis technique are that it is non-invasive, low in cost, and able to measure acoustic signals instantly. A novel optimization technique for the unsupervised anomaly detection of sound signals using an autoencoder (AE) was also proposed [32]. The goal is to detect unknown sounds, for which no training data are available, in order to identify abnormality or failure in the operation of a stereolithography 3D printer, an air blower pump, and a water pump.

2.2. Principles of Audio-Based Hazard Detection

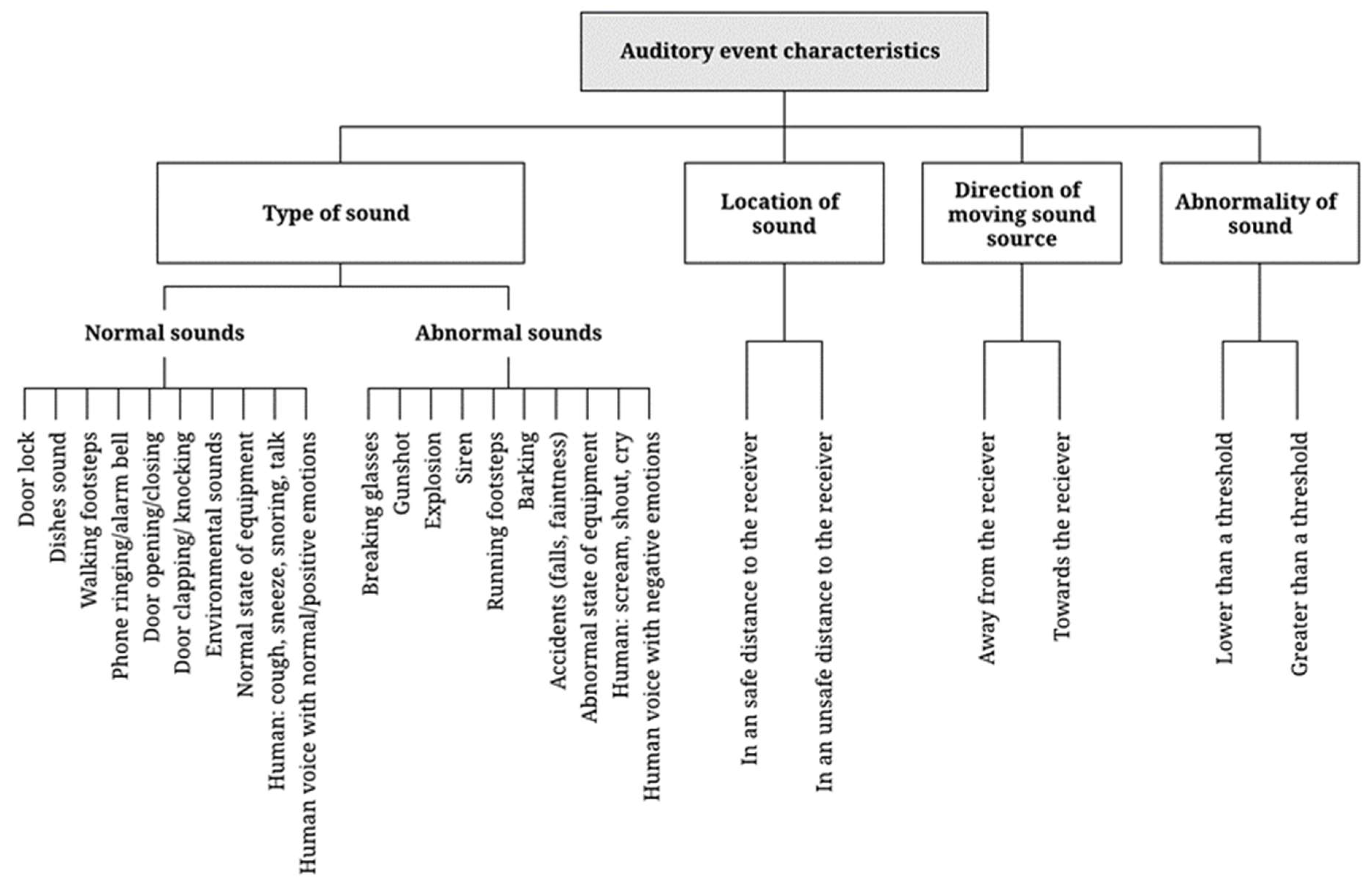

The detection of hazards using acoustic data is typically based on the extraction of the following four types of information: the type of sound, the location of the sound, the direction of moving sound sources, and the abnormality of sound (see Figure 1). These auditory event characteristics are used as input for detecting hazardous situations. The type of sound is the most common indicator used for detecting hazardous events and differentiating abnormal sounds from normal sound events. The occurrence of abnormal sounds, such as a gunshot or an explosion, is an important indicator of a dangerous situation requiring a quick safety response. Additionally, sound localization cues, such as the location and the direction of a moving sound source, are also essential for detecting potential abnormal events. They can inform receivers whether or not they are at an unsafe distance from the hazard. Moreover, measuring the abnormality of ambient sound has been used for evaluating hazardous situations. For example, when a machine is operating, any unusual sound from its parts or from the assembly process is regarded as abnormal and requires the attention of maintenance officers. Because not all unknown abnormal sound events that occur during the inspection of equipment operating noise can be classified in advance, an abnormality measure is useful for automating this kind of abnormal noise inspection.

Figure 1. Indicators of hazardous events.

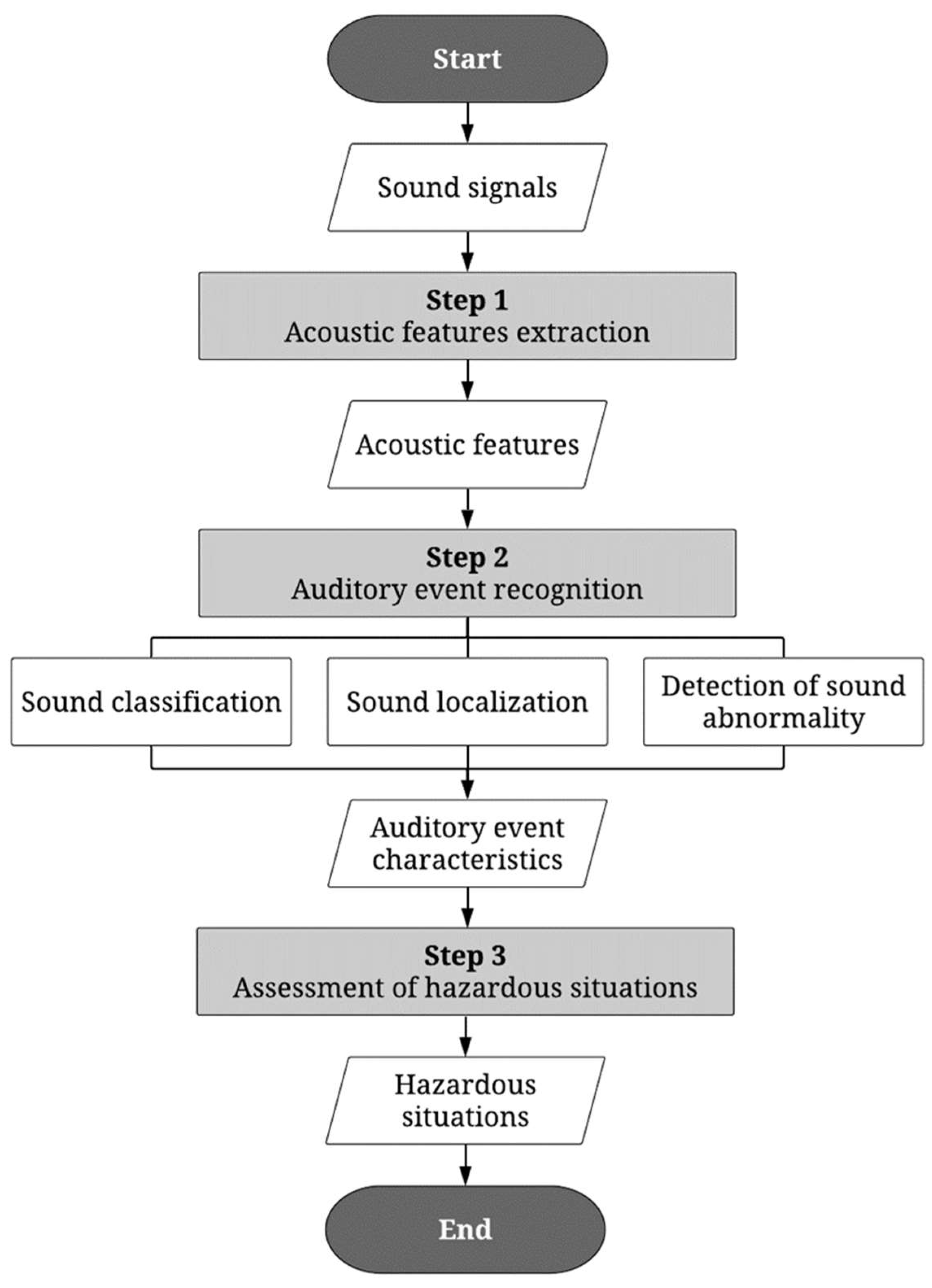

Automatic detection of auditory cues requires computational advances in processing the auditory signals. As discussed earlier, hazardous situations are detected based on auditory features, including the type of sound, sound localization, and sound abnormality. Figure 2 shows a typical procedure for sound-based hazard detection found in the literature. The process entails three steps: (1) acoustic feature extraction, (2) auditory event recognition, and (3) assessment of hazardous situations. Of those steps, recognizing auditory events in Step 2 is the most critical in the signal-processing procedure.

Figure 2. Overall architecture of auditory signal processing to detect hazardous situations.
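The three-step flow in Figure 2 can be sketched in a few lines of Python. This is only an illustrative toy, not the architecture of any cited study: the features (RMS loudness and dominant frequency), the 1 kHz rule, the silence floor, and the watch list are all hypothetical stand-ins for a trained feature extractor, classifier, and risk model.

```python
import numpy as np

def extract_features(frame: np.ndarray, sample_rate: int) -> dict:
    """Step 1: summarize a mono frame by loudness (RMS) and dominant frequency (Hz)."""
    spectrum = np.abs(np.fft.rfft(frame))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    return {
        "rms": float(np.sqrt(np.mean(frame ** 2))),
        "dominant_hz": float(freqs[np.argmax(spectrum)]),
    }

def recognize_event(features: dict) -> str:
    """Step 2: toy recognizer; a trained classifier would normally go here."""
    if features["rms"] < 0.01:  # hypothetical silence floor
        return "background"
    # Hypothetical rule: strong tonal energy at or above 1 kHz is treated as an alarm.
    return "alarm" if features["dominant_hz"] >= 1000.0 else "engine"

def assess_hazard(event: str) -> bool:
    """Step 3: toy assessment; flag events on a hypothetical watch list."""
    return event in {"alarm"}

def process_frame(frame: np.ndarray, sample_rate: int):
    """Run the three steps in order and return (event label, hazard flag)."""
    event = recognize_event(extract_features(frame, sample_rate))
    return event, assess_hazard(event)
```

In practice each step would be replaced by a learned component, but the data flow (frame, features, event label, hazard decision) stays the same.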

2.3. Sound Localization

Sound localization refers to the ability to identify acoustic sources in terms of direction and distance. It is one of the essential acoustic parameters for recognizing and locating hazardous events. When dangerous objects or equipment are within an unsafe distance of a construction worker, detecting the location of acoustic sources can enable them to make appropriate preventive responses. Methods to localize sound events are mainly based on calculating the difference in the arrival times of the signal at spatially separated microphones. The similarity of the signals received at different times is then examined in either the time or the frequency domain. In the time domain, acoustic impulses reach microphones that are spatially distant from one another at different Times of Arrival (TOA). Each pair of microphones in the array therefore has an associated time delay, and the best estimate of the signal’s Direction of Arrival (DOA) is determined from the recorded time delays and the known array geometry. Frequency-domain methods instead compare the sound pressure signals reaching the array after transforming them into the frequency domain and are mostly used to localize higher-frequency sounds. Table 2 lists the methods for sound localization, of which the details are provided below.

Table 2. Summary of methods for sound localization.

| Methods | References |
|---|---|
| 1. Maximum-Likelihood Generalized Cross Correlation (GCC) | [17] |
| 2. Linear-correction least-square localization algorithm | [17] |
| 3. Similarity measure based on the Euclidean Distance (EUD) in the time domain | [34] |
| 4. Similarity measure based on Normalized Cross Correlation (NCC) in the frequency domain | [35] |
| 5. Similarity measure based on the Euclidean Distance (EUD) in the frequency domain | [36] |
| 6. Fast Fourier Transform (FFT) | [36] |
| 7. Fingerprinting algorithm | [37] |

Calculating the Time Difference of Arrival (TDOA) of the signal is one approach to the detection of sound location. Valenzise et al. [17] adopted the Maximum-Likelihood Generalized Cross Correlation (GCC) method and a linear-correction least-square localization algorithm for estimating the TDOA of the signal. Ref. [34] utilized the Euclidean Distance (EUD) to measure the similarity in the time domain. Other alternatives measure the similarity between signals at different arrivals in the frequency domain. Compared with the time domain, the frequency domain showed significant improvements in sound localization precision, as demonstrated by Satoh et al.’s [35] study, where they computed the cross-correlation in the frequency domain based on the Normalized Cross Correlation (NCC) method. Tarzia et al. [36] used the Fast Fourier Transform (FFT) to measure the frequency domain similarity. Lastly, Wirz, Roggen, and Tröster [37] proposed an innovative method called the fingerprinting algorithm to measure the similarity based on the naive Bayes algorithm. Their results showed that the estimation of the quantitative distance in meters between two devices reaches an accuracy of approximately 80% when using ambient sound as a source of proximity information.
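As a rough illustration of TDOA-based localization, the sketch below estimates the delay between two microphone signals with generalized cross-correlation and converts it to a direction of arrival. It makes several assumptions that are not taken from the cited studies: it uses the common PHAT (phase transform) weighting rather than the maximum-likelihood weighting of [17], it considers a single pair of microphones, and it assumes a far-field source and a speed of sound of 343 m/s. The function names are hypothetical.

```python
import numpy as np

def gcc_phat_delay(sig: np.ndarray, ref: np.ndarray, sample_rate: float) -> float:
    """Estimate the delay (seconds) of `sig` relative to `ref` using GCC-PHAT."""
    n = len(sig) + len(ref)                  # zero-pad to avoid circular wrap-around
    sig_f = np.fft.rfft(sig, n=n)
    ref_f = np.fft.rfft(ref, n=n)
    cross = sig_f * np.conj(ref_f)           # cross-power spectrum
    cross /= np.abs(cross) + 1e-12           # PHAT weighting: keep phase, drop magnitude
    cc = np.fft.irfft(cross, n=n)            # generalized cross-correlation
    max_shift = n // 2
    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[: max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / sample_rate

def doa_from_delay(delay_s: float, mic_spacing_m: float,
                   speed_of_sound: float = 343.0) -> float:
    """Far-field direction of arrival in degrees, measured from broadside."""
    ratio = np.clip(speed_of_sound * delay_s / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))
```

A positive delay means the sound reached the reference microphone first. With more than two microphones, the pairwise delays would be combined, for example by a least-squares fit, to obtain a position rather than a single angle.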

Recently, Deep Neural Network (DNN)-based approaches have been proposed as an alternative to parametric approaches (such as TDOA and IID) for sound source localization. Adavanne et al. [38] employed a convolutional recurrent neural network (RNN) for the localization of multiple overlapping sound events in three-dimensional (3D) space. The network takes a sequence of consecutive spectrogram time frames as a multichannel input and maps it to a multi-output regression. The study shows that the proposed method is generic and applicable to any array structure and is robust to unseen DOA values, reverberation, and low signal-to-noise ratio (SNR) scenarios.

2.4. Sound Abnormality Detection

Measuring sound abnormality is another challenging issue, given that preparing labeled sound data for every hazardous situation is unrealistic. If a surveillance system is trained using the data of specific sounds, such as explosions or gunshots, it cannot be applied to detect other auditory events. A method for detecting unknown abnormal sound events is required to develop an automated auditory surveillance system that is useful in more general cases. To address this issue, Ref. [39] developed a method that models the abnormality of sounds without using any samples of labeled sounds. The technique can detect sounds that rarely occur in a normal situation. It first processes sound recorded in normal situations and trains a statistical model of the normal sounds. After training the model, the system continues to process incoming sounds and calculates the likelihood of each sound under the model. If the resulting abnormality score (for example, the negative log-likelihood) exceeds a predefined threshold, that is, if the sound is sufficiently unlikely under the normal model, the sound is considered abnormal. Lu et al. [40] used another approach to detect abnormal sounds using a case-based identification algorithm. In this method, the sound data are first converted into feature representation vectors, and a supervised learning model is then fitted to them. This supervised learning requires a small training dataset of abnormal samples.
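The likelihood-threshold idea can be illustrated with a minimal sketch. The statistical model used in [39] is not specified here, so the code assumes the simplest possible choice, a diagonal Gaussian fitted to feature vectors of normal sound, and scores new sounds by their negative log-likelihood. The function names and the percentile-based threshold are hypothetical.

```python
import numpy as np

def fit_normal_model(features: np.ndarray):
    """Fit a diagonal Gaussian to feature vectors (rows) recorded in normal conditions."""
    mean = features.mean(axis=0)
    var = features.var(axis=0) + 1e-9        # small floor avoids division by zero
    return mean, var

def abnormality_score(x: np.ndarray, mean: np.ndarray, var: np.ndarray) -> float:
    """Negative log-likelihood of x under the normal-sound model (higher = more unusual)."""
    return float(0.5 * np.sum(np.log(2 * np.pi * var) + (x - mean) ** 2 / var))

def is_abnormal(x: np.ndarray, mean: np.ndarray, var: np.ndarray, threshold: float) -> bool:
    """Flag a sound whose abnormality score exceeds the predefined threshold."""
    return abnormality_score(x, mean, var) > threshold

def threshold_from_training(features: np.ndarray, mean, var, percentile: float = 99.0) -> float:
    """Hypothetical rule: allow roughly 1% of normal training sounds to be flagged."""
    scores = [abnormality_score(row, mean, var) for row in features]
    return float(np.percentile(scores, percentile))
```

No abnormal samples are needed at training time, which is the point of the approach: anything that the normal-sound model considers very unlikely is reported, whatever its source.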

In the construction industry, measuring equipment sound abnormality is complicated by the fact that the sound of mobile equipment varies between different types of equipment, which affects model performance. This could be because the acoustic characteristics of one sound are more difficult to learn than those of another. Other equipment characteristics that may affect sound abnormality measurement are the model, brand, age, and maintenance program. Including such metadata for each piece of equipment in the audio dataset would help clarify how these characteristics affect the detection capability. However, collecting such data requires a significant effort in terms of time, cost, and human resources.

3. Audio-Based Surveillance in Construction

3.1. Feasibility of Implementing Audio-Based Hazard Detection in Construction

The potential auditory indicators of hazards in construction are provided in Table 3. As shown, one of the most important types of sound is that of moving heavy equipment or machinery, which can cause collision hazards [30][33][41]. Furthermore, the detection of screams, shouts, or cries also supports acoustic surveillance and monitoring of adverse situations, since human emotions are conveyed in such sound events [17][18][19][20][23][24][25][26][27][28][42][43][44][45][46]. Other sounds produced by people can also help detect abnormal situations. Detecting crowd ambient sound (i.e., the sound of a group of people) allows workers to be aware of violent events, natural disasters, riots, or chaotic acts in crowds [26]. Detecting safety-critical sounds that signal dangerous situations, such as fire and earthquake alarms, explosions, and gunshots, could improve the situational awareness of hazards [23][25][45][46]. For example, the detection of an explosion allows workers to stay away from the hazardous source [16], and gunshot detection allows workers to stay away from a gun attack [16][17][19][20][25][42][43].

Table 3. Summary of auditory event characteristics in the construction field.

| Code | Auditory Event Characteristics | References |
|---|---|---|
| A | Abnormality of sound (greater than the threshold?) | [39] |
| B | Direction of moving sound source (towards the worker?) | [34][35][36][37] |
| C | Location of sound (less than a safe distance to the worker?) | [34][35][36][37] |
| D | Type of sound | |
| D.1 | Alert alarm (fire/earthquake) | [23][25][45][46] |
| D.2 | Explosion | [19] |
| D.3 | Gunshot | [16][17][19][20][25][42][43] |
| D.4 | Human | |
| D.4a | Announcement | [23] |
| D.4b | Crowd ambient sound | [26] |
| D.4c | Scream/shout/cry | [17][18][19][20][23][24][25][26][27][28][42][43][44][45][46] |
| D.5 | Moving heavy equipment/machine | [30][33][41] |

In addition to the type of sound, the sound source location [34][35][36][37], the direction of a moving sound source [34][35][36][37], and sound abnormality [39] are useful for detecting hazardous situations in the construction field. For example, information on the location and the direction of moving construction equipment, derived from the engine sound, could help raise an alert if a heavy construction vehicle is in proximity to a worker. Additionally, if construction equipment breaks down by falling, collapsing, colliding, or suffering an engine failure, information on the abnormality of its sound could give a cue to provide proper assistance. An example is the noise produced by excessive vibration of equipment that is not normally expected to vibrate.

Existing frameworks for detecting safety cues rely solely upon a single type of information, for example, high-frequency filtering [47], sound identity classification [48][49], or direction of arrival of sound [50]. Using each type of information individually is insufficient for evaluating hazardousness in construction, which requires a simultaneous consideration of many factors, including the size of the equipment involved, the braking speed of a machine, the average reaction time of a worker, and the speed of the worker. Table 4 presents a list of hazardous situations in construction sites along with the required auditory indicators. Specifically, heavy equipment or machines being at an unsafe distance from workers is detected using the sound of moving equipment together with its location. An alert for equipment approaching or operating in an abnormal condition is raised if the direction of the moving sound source is toward the worker or if the sound is abnormal. Other situations, such as someone crying/shouting, a crowd approaching, alert alarms, an explosion, or a gunshot, can also indicate a hazardous situation. Defining hazardous situations that require quick and effective responses from construction workers is the priority of automated auditory surveillance in the construction field and could contribute to construction workers’ safety.

Table 4. List of hazardous situations and required auditory event characteristics (see Table 3 for the auditory event notations).

| No. | Hazardous Situations | Combination of Auditory Event Characteristics |
|---|---|---|
| 1 | Heavy equipment/machine is at an unsafe distance | D.5 + C |
| 2 | Heavy equipment/machine is approaching | D.5 + C + B |
| 3 | Heavy equipment/machine is operating in an abnormal condition | D.5 + A |
| 4 | There is someone screaming/shouting/crying | D.4c |
| 5 | There is a crowd approaching | D.4b + B |
| 6 | There is an abnormal crowd approaching | D.4b + B + A |
| 7 | There is an alert alarm | D.1 |
| 8 | There is an explosion | D.2 |
| 9 | There is a gunshot | D.3 |
| 10 | There is an abnormal sound | A |
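The combinations in Table 4 lend themselves to a simple rule table. The sketch below is one hypothetical encoding, not an implementation from the literature: it names the four characteristics descriptively (type of sound, location, direction, abnormality), treats each as an already-computed label or yes/no answer, and returns every hazardous situation whose required characteristics are all present.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class AuditoryEvent:
    """One recognized sound event; all field names are illustrative assumptions."""
    sound_type: str                       # "heavy_equipment", "scream", "crowd", "alarm",
                                          # "explosion", "gunshot", or "unknown"
    within_unsafe_distance: bool = False  # location: closer than a safe distance?
    approaching: bool = False             # direction: moving towards the worker?
    abnormal: bool = False                # abnormality score above the threshold?

def hazardous_situations(e: AuditoryEvent) -> List[str]:
    """Return the Table 4 situations whose required characteristics are all met."""
    found = []
    if e.sound_type == "heavy_equipment":
        if e.within_unsafe_distance:
            found.append("Heavy equipment/machine is at an unsafe distance")
        if e.within_unsafe_distance and e.approaching:
            found.append("Heavy equipment/machine is approaching")
        if e.abnormal:
            found.append("Heavy equipment/machine is operating in an abnormal condition")
    if e.sound_type == "scream":
        found.append("There is someone screaming/shouting/crying")
    if e.sound_type == "crowd" and e.approaching:
        found.append("There is a crowd approaching")
        if e.abnormal:
            found.append("There is an abnormal crowd approaching")
    if e.sound_type in ("alarm", "explosion", "gunshot"):
        article = "an" if e.sound_type[0] in "ae" else "a"
        label = "alert alarm" if e.sound_type == "alarm" else e.sound_type
        found.append(f"There is {article} {label}")
    if e.abnormal:
        found.append("There is an abnormal sound")
    return found
```

For example, `hazardous_situations(AuditoryEvent("heavy_equipment", within_unsafe_distance=True, approaching=True))` reports both the unsafe-distance and the approaching situations, while the same equipment at a safe distance and moving away reports nothing.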

3.2. State-of-the-Art Research in Auditory Signal Processing for Construction

There have been emerging studies on audio-based activity detection for improving construction management and productivity due to its advantages in terms of cost and applicability [51]. Other efforts have aimed to develop new methods for assisting workers in hearing critical sounds, which is a crucial need given the typical heterogeneity of sounds generated from diverse construction work activities, including static equipment and hand tools [52][53]. The examination of various OSHA accident reports by Hinze et al. [41] revealed that the heterogeneous nature of concurrent construction sounds (e.g., equipment sounds and alarms) indeed decreased workers’ safety awareness, since alarm signals may be drowned out or not audible enough for workers. They also reported cases where multiple alarm signals issued warnings at the same time, influencing workers’ judgment and making the alert signals less effective or ineffective. However, due to the technical challenges of processing complex soundscapes, this problem has gained little attention from the academic community in the past decade.

Only a few papers were found related to this technology in construction management. In general, previous studies focused on tracking the activities of construction equipment, identifying working and operation activities, proximity detection and alert systems, and embedded sensory systems. Of those areas, the majority of studies aimed at monitoring the activities of heavy construction equipment to reduce operating costs [30][54][55][56]. This is probably because a large portion of the expenses in a construction project is allocated to the operating costs of heavy equipment. For example, Ref. [57] applied the Hidden Markov Model (HMM) and a frequency-domain technique on spectrogram data to accurately classify types of construction sounds and to identify patterns from each type of construction task. The strength and location of the classified sound signals are visualized with a Building Information Modeling (BIM) platform. The acoustic signals from construction activities were used to calculate working periods, allowing field managers to track work progress and productivity and providing a means to efficiently enhance project schedule management. Ref. [58] worked on a sound monitoring system for the prevention of underground pipeline damage. To develop a dataset similar to what is found on the construction site, they collected sound data from working equipment that typically threatens pipelines, including excavators, hammers, road cutters, and electric hammers. They also collected the background noise of a typical construction environment, such as pedestrians, traffic, and wind sound. Two random forest-based classifiers were developed to detect suspicious sounds and to help prevent pipeline damage caused by construction activities. The end goal was to create an alarm system that uploads a report if the duration of a construction threat sound exceeds a specified threshold value.
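The duration-based alarm rule described for [58] can be illustrated as follows. This is a minimal sketch under stated assumptions: the classifier output is taken to be one yes/no flag per fixed-length audio frame, and "duration" is interpreted as the longest uninterrupted run of threat frames, which the source does not specify. Function and parameter names are hypothetical.

```python
from typing import Sequence

def longest_threat_duration(frame_is_threat: Sequence[bool], frame_seconds: float) -> float:
    """Length in seconds of the longest uninterrupted run of threat frames."""
    longest = current = 0
    for flag in frame_is_threat:
        current = current + 1 if flag else 0   # reset the run on a non-threat frame
        longest = max(longest, current)
    return longest * frame_seconds

def should_report(frame_is_threat: Sequence[bool], frame_seconds: float,
                  min_duration_seconds: float) -> bool:
    """Report only when a threat sound persists longer than the specified threshold."""
    return longest_threat_duration(frame_is_threat, frame_seconds) > min_duration_seconds
```

Requiring a minimum duration filters out isolated misclassified frames, at the cost of a short delay before a genuine threat is reported.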

Another study proposed a hybrid system for recognizing multiple construction equipment activities [30]. The study trained a machinery task classifier on integrated audio and kinematic data using Support Vector Machines (SVM). The results indicate that the hybrid system can provide up to 20% more accurate results than systems using individual sources of data such as images [6][7][8], sensors [9][10], and audio [56][59][60]. The system allows construction managers to monitor and track productivity, detect equipment downtime and idle time, estimate equipment cycle times, and control equipment fuel use. Wei et al. [61] also developed a noise hazard prediction method that combines a wearable audio sensor with Building Information Modeling (BIM) data to predict and visualize spatial noise distribution on BIM models.

Machine learning algorithms trained on sound data have also been widely used for construction activity monitoring. Ref. [59] implemented a supervised machine learning-based sound identification approach to enhance the monitoring of construction site activities. Ref. [52] also developed a risk assessment framework using a machine learning algorithm. This method used the activity classification information from auditory signals to estimate safety risks based on the occupational injury and illness information contained in historical construction accident data. These audio-based frameworks for detecting construction activities are especially effective for night-time tasks, since activities on construction sites can be detected even at visibility levels that are unsuitable for image-based approaches.

Another line of effort on auditory surveillance in construction focuses on the identification of construction collision hazards. Advanced computational techniques in auditory signal processing for collision hazard detection are motivated by the strong acoustic emissions from equipment operation: construction equipment often produces unique sound patterns while performing certain activities (e.g., moving vs. idling) [55], which can be used as indicators of safety-critical cues or warning signals. For example, Lee and Yang [47] used a high-frequency sound (18 kHz, inaudible to construction workers) to analyze the change in the Doppler effect caused by a single subject’s movements in order to prevent struck-by hazards. They installed a speaker on the equipment that plays a predefined high-frequency sound. A smartphone carried by an on-foot worker was used to capture the sound produced by the speaker. The input signal was processed to extract the position of the equipment relative to the on-foot worker. The proposed technology was able to classify the movement direction and speed with 97.4% accuracy. Although the study demonstrates the potential for detecting collision threats from equipment, it still has some limitations. First, only a struck-by hazard situation involving a single type of moving construction equipment was tested. Since the construction site is a complex environment in which multiple pieces of equipment work simultaneously, leading to signal overlap, the mixture of similar sounds would prevent the recognition of the movement of individual pieces of equipment. Second, the sound source was a speaker attached to the equipment, not the sound produced by the mobile equipment itself. This means that deployment would require the expensive installation of speakers on every piece of equipment present on the job site.
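The physical principle behind the Doppler-based approach can be shown with a short worked example. The sketch uses the textbook Doppler relation for a moving source and a stationary listener; it is not the signal-processing method of [47], and the stationary-listener assumption and the speed of sound (343 m/s) are simplifications introduced here.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees Celsius (assumed)

def observed_frequency(source_hz: float, radial_speed_mps: float,
                       c: float = SPEED_OF_SOUND) -> float:
    """Frequency heard by a stationary listener; positive speed means the source approaches."""
    return source_hz * c / (c - radial_speed_mps)

def radial_speed(source_hz: float, observed_hz: float,
                 c: float = SPEED_OF_SOUND) -> float:
    """Invert the relation: source speed towards the listener from the measured frequency."""
    return c * (1.0 - source_hz / observed_hz)
```

For an 18 kHz tone emitted by equipment approaching at 5 m/s, `observed_frequency(18000.0, 5.0)` is about 18,266 Hz, an upward shift of roughly 266 Hz; a downward shift indicates that the equipment is moving away. The sign of the shift gives the movement direction and its size gives the speed.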

Recent studies [48][49] developed sound classification models that can distinguish between mobile equipment and stationary equipment to support collision hazard detection. These studies collected and synthesized the sound of construction equipment at different signal-to-noise ratios and used the dataset to develop a machine learning model using a convolutional neural network (CNN) for the automated detection of mobile equipment. The efficacy of this model was tested on a real construction site, where it achieved an accuracy of 99% in detecting sounds related to collision hazards when the signals were not buried in background noise. Compared with earlier efforts, Refs. [48][49] offer a clear advance, as their models are able to deal with complex soundscapes with overlapping sound sources, including mobile and stationary equipment and background noise (e.g., workers communicating, materials’ movement, and street noises). Another study that utilized equipment sounds for collision hazard assessment was performed by Ref. [50], which aimed at localizing the sound sources using Direction of Arrival (DOA) signal processing techniques. The determination of sound location can supplement the sound classifiers developed by Refs. [48][49] to enable a more comprehensive assessment of hazardous situations. This information is vital for construction workers and safety engineers because it reduces false alarms: workers are notified only if they are in a danger zone, based on the distance and direction of the hazard. For example, a mobile piece of equipment moving further away from an on-foot worker does not pose a hazard.
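Synthesizing training audio at different signal-to-noise ratios, as described above, usually amounts to scaling a background-noise recording before adding it to the equipment sound. The sketch below shows one common way to do this; it is an assumption about the general technique, not the exact procedure of [48][49], and the function name is hypothetical.

```python
import numpy as np

def mix_at_snr(signal: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add `noise` to `signal`, scaled so that the mixture has the requested SNR in dB."""
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as signal")
    noise = noise[: len(signal)]
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    # SNR(dB) = 10 * log10(signal_power / noise_power), solved for the noise power needed.
    target_noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    scaled_noise = noise * np.sqrt(target_noise_power / noise_power)
    return signal + scaled_noise
```

Calling the function with several values of `snr_db` (for example 20, 10, 0, and -10) produces progressively harder versions of the same equipment sound, which lets a classifier be trained and evaluated on signals that are increasingly buried in background noise.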

Quantitative benchmarking of existing frameworks for preventing struck-by hazards is very difficult, as they were all tested under different conditions (e.g., software, hardware, job-site characteristics, and assumptions). Nevertheless, some performance metrics, such as recall, cost, computational power, and data usage, can still be used for a fair comparison. In terms of recall, the frameworks were all competitive, achieving 99% [48], 98% [62], 99% [48][49], and 100% [63]. Cost is another metric for comparing past approaches to preventing struck-by hazards involving mobile equipment. Audio-based collision hazard detection by Ref. [48] requires little financial investment, as the model can be quickly deployed on workers’ smartphones, while a relatively high deployment cost is needed for approaches that rely on many sensor devices [63] or high-quality cameras [62][64].

References

- Deshaies, P.; Martin, R.; Belzile, D.; Fortier, P.; Laroche, C.; Leroux, T.; Nélisse, H.; Girard, S.-A.; Arcand, R.; Poulin, M.; et al. Noise as an explanatory factor in work-related fatality reports. Noise Health 2015, 17, 294–299.

- Fang, D.; Zhao, C.; Zhang, M. A Cognitive Model of Construction Workers’ Unsafe Behaviors. J. Constr. Eng. Manag. 2016, 142, 4016039.

- Morata, T.C.; Themann, C.L.; Randolph, R.; Verbsky, B.L.; Byrne, D.C.; Reeves, E.R. Working in Noise with a Hearing Loss: Perceptions from Workers, Supervisors, and Hearing Conservation Program Managers. Ear Hear. 2005, 26, 529–545.

- Awolusi, I.; Marks, E.; Hallowell, M. Wearable technology for personalized construction safety monitoring and trending: Review of applicable devices. Autom. Constr. 2018, 85, 96–106.

- Awolusi, I.; Nnaji, C.; Marks, E.; Hallowell, M. Enhancing Construction Safety Monitoring through the Application of Internet of Things and Wearable Sensing Devices: A Review. In Proceedings of the Computing in Civil Engineering, Atlanta, GA, USA, 17–19 June 2019; American Society of Civil Engineers: Reston, VA, USA; pp. 530–538.

- Golparvar-Fard, M.; Heydarian, A.; Niebles, J.C. Vision-based action recognition of earthmoving equipment using spatio-temporal features and support vector machine classifiers. Adv. Eng. Inform. 2013, 27, 652–663.

- Gong, J.; Caldas, C.H. Computer Vision-Based Video Interpretation Model for Automated Productivity Analysis of Construction Operations. J. Comput. Civ. Eng. 2010, 24, 252–263.

- Gong, J.; Caldas, C.H.; Gordon, C. Learning and classifying actions of construction workers and equipment using Bag-of-Video-Feature-Words and Bayesian network models. Adv. Eng. Inform. 2011, 25, 771–782.

- Akhavian, R.; Behzadan, A.H. Construction equipment activity recognition for simulation input modeling using mobile sensors and machine learning classifiers. Adv. Eng. Inform. 2015, 29, 867–877.

- Ahn, C.R.; Lee, S.; Peña-Mora, F. Application of Low-Cost Accelerometers for Measuring the Operational Efficiency of a Construction Equipment Fleet. J. Comput. Civ. Eng. 2015, 29, 4014042.

- Salamon, J.; Jacoby, C.; Bello, J.P. A Dataset and Taxonomy for Urban Sound Research. In Proceedings of the 2014 ACM Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 1041–1044.

- Salamon, J.; Bello, J.P. Deep Convolutional Neural Networks and Data Augmentation for Environmental Sound Classification. IEEE Signal Process. Lett. 2017, 24, 279–283.

- Cramer, J.; Wu, H.-H.; Salamon, J.; Bello, J.P. Look, Listen, and Learn More: Design Choices for Deep Audio Embeddings. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3852–3856.

- Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio Set: An Ontology and Human-Labeled Dataset for Audio Events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.

- Piczak, K.J. Environmental Sound Classification with Convolutional Neural Networks. In Proceedings of the 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6.

- Clavel, C.; Ehrette, T.; Richard, G. Events Detection for an Audio-Based Surveillance System. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 5–6 July 2005; pp. 1306–1309.

- Valenzise, G.; Gerosa, L.; Tagliasacchi, M.; Antonacci, F.; Sarti, A. Scream and Gunshot Detection and Localization for Audio-Surveillance Systems. In Proceedings of the 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007; pp. 21–26.

- Vasilescu, I.; Devillers, L.; Clavel, C.; Ehrette, T. Fiction Database for Emotion Detection in Abnormal Situations. In Proceedings of the 31st International Symposium on Computer Architecture (ISCA 2004), Munich, Germany, 19–23 June 2004; pp. 2277–2280.

- Ntalampiras, S.; Potamitis, I.; Fakotakis, N. An Adaptive Framework for Acoustic Monitoring of Potential Hazards. EURASIP J. Audio Speech Music Process. 2009, 2009, 594103.

- Choi, W.; Rho, J.; Han, D.K.; Ko, H. Selective Background Adaptation Based Abnormal Acoustic Event Recognition for Audio Surveillance. In Proceedings of the 2012 IEEE Ninth International Conference on Advanced Video and Signal-Based Surveillance, Beijing, China, 18–21 September 2012; pp. 118–123.

- Carletti, V.; Foggia, P.; Percannella, G.; Saggese, A.; Strisciuglio, N.; Vento, M. Audio Surveillance Using a Bag of Aural Words Classifier. In Proceedings of the 2013 10th IEEE International Conference on Advanced Video and Signal Based Surveillance, Krakow, Poland, 27–30 August 2013; pp. 81–86.

- Chan, C.-F.; Yu, E.W.M. An Abnormal Sound Detection and Classification System for Surveillance Applications. In Proceedings of the 2010 18th European Signal Processing Conference, Aalborg, Denmark, 23–27 August 2010; pp. 1851–1855.

- Lee, Y.; Han, D.; Ko, H. Acoustic Signal Based Abnormal Event Detection in Indoor Environment Using Multiclass Adaboost. IEEE Trans. Consum. Electron. 2013, 59, 615–622.

- Rouas, J.-L.; Louradour, J.; Ambellouis, S. Audio Events Detection in Public Transport Vehicle. In Proceedings of the 2006 IEEE Intelligent Transportation Systems Conference, Toronto, ON, Canada, 17–20 September 2006; pp. 733–738.

- Radhakrishnan, R.; Divakaran, A. Systematic Acquisition of Audio Classes for Elevator Surveillance. In Proceedings of the Image and Video Communications and Processing 2005, San Jose, CA, USA, 12–20 January 2005; Said, A., Apostolopoulos, J.G., Eds.; SPIE: Bellingham, WA, USA, 2005; Volume 5685, pp. 64–71.

- Clavel, C.; Vasilescu, I.; Devillers, L.; Richard, G.; Ehrette, T. Fear-Type Emotion Recognition for Future Audio-Based Surveillance Systems. Speech Commun. 2008, 50, 487–503.

- Vacher, M.; Istrate, D.; Besacier, L.; Serignat, J.-F.; Castelli, E. Sound Detection and Classification for Medical Telesurvey. In Proceedings of the 2nd Conference on Biomedical Engineering, Innsbruck, Austria, 16–18 February 2004.

- Adnan, S.M.; Irtaza, A.; Aziz, S.; Ullah, M.O.; Javed, A.; Mahmood, M.T. Fall Detection through Acoustic Local Ternary Patterns. Appl. Acoust. 2018, 140, 296–300.

- Härmä, A.; Mckinney, M.F.; Skowronek, J. Automatic Surveillance of the Acoustic Activity in Our Living Environment. In Proceedings of the IEEE International Conference on Multimedia and Expo, ICME 2005, Amsterdam, The Netherlands, 6–8 July 2005; Volume 2005, pp. 634–637.

- Sherafat, B.; Rashidi, A.; Lee, Y.; Ahn, C. A Hybrid Kinematic-Acoustic System for Automated Activity Detection of Construction Equipment. Sensors 2019, 19, 4286.

- Glowacz, A. Acoustic Based Fault Diagnosis of Three-Phase Induction Motor. Appl. Acoust. 2018, 137, 82–89.

- Koizumi, Y.; Saito, S.; Uematsu, H.; Kawachi, Y.; Harada, N. Unsupervised Detection of Anomalous Sound Based on Deep Learning and the Neyman–Pearson Lemma. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 212–224.

- Marks, E.D.; Teizer, J. Method for Testing Proximity Detection and Alert Technology for Safe Construction Equipment Operation. Constr. Manag. Econ. 2013, 31, 636–646.

- Azizyan, M.; Choudhury, R.R. SurroundSense: Mobile Phone Localization Using Ambient Sound and Light. SIGMOBILE Mob. Comput. Commun. Rev. 2009, 13, 69–72.

- Satoh, H.; Suzuki, M.; Tahiro, Y.; Morikawa, H. Ambient Sound-Based Proximity Detection with Smartphones. In Proceedings of the 11th ACM Conference on Embedded Networked Sensor Systems, Rome, Italy, 11–15 November 2013; Association for Computing Machinery: New York, NY, USA, 2013.

- Tarzia, S.P.; Dinda, P.A.; Dick, R.P.; Memik, G. Indoor Localization without Infrastructure Using the Acoustic Background Spectrum. In Proceedings of the MobiSys’11—Compilation Proceedings of the 9th International Conference on Mobile Systems, Applications and Services and Co-located Workshops, Washington, DC, USA, 28 June–1 July 2011.

- Wirz, M.; Roggen, D.; Tröster, G. A Wearable, Ambient Sound-Based Approach for Infrastructureless Fuzzy Proximity Estimation. In Proceedings of the International Symposium on Wearable Computers (ISWC) 2010, Seoul, Republic of Korea, 10–13 October 2010; pp. 1–4.

- Adavanne, S.; Politis, A.; Nikunen, J.; Virtanen, T. Sound Event Localization and Detection of Overlapping Sources Using Convolutional Recurrent Neural Networks. IEEE J. Sel. Top. Signal Process. 2018, 13, 34–48.

- Ito, A.; Aiba, A.; Ito, M.; Makino, S. Detection of Abnormal Sound Using Multi-Stage GMM for Surveillance Microphone. In Proceedings of the 2009 Fifth International Conference on Information Assurance and Security, Xi’an, China, 18–20 August 2009; Volume 1, pp. 733–736.

- Lu, H.; Li, Y.; Mu, S.; Wang, D.; Kim, H.; Serikawa, S. Motor Anomaly Detection for Unmanned Aerial Vehicles Using Reinforcement Learning. IEEE Internet Things J. 2018, 5, 2315–2322.

- Hinze, J.W.; Teizer, J. Visibility-related fatalities related to construction equipment. Saf. Sci. 2011, 49, 709–718.

- Janjua, Z.H.; Vecchio, M.; Antonini, M.; Antonelli, F. IRESE: An Intelligent Rare-Event Detection System Using Unsupervised Learning on the IoT Edge. Eng. Appl. Artif. Intell. 2019, 84, 41–50.

- Salekin, A.; Ghaffarzadegan, S.; Feng, Z.; Stankovic, J. A Real-Time Audio Monitoring Framework with Limited Data for Constrained Devices. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 98–105.

- Atrey, P.K.; Maddage, N.C.; Kankanhalli, M.S. Audio Based Event Detection for Multimedia Surveillance. In Proceedings of the 2006 IEEE International Conference on Acoustics Speech and Signal Processing, Toulouse, France, 14–19 May 2006.

- Marchi, E.; Vesperini, F.; Eyben, F.; Squartini, S.; Schuller, B. A Novel Approach for Automatic Acoustic Novelty Detection Using a Denoising Autoencoder with Bidirectional LSTM Neural Networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, QLD, Australia, 19–24 April 2015; pp. 1996–2000.

- Principi, E.; Vesperini, F.; Squartini, S.; Piazza, F. Acoustic Novelty Detection with Adversarial Autoencoders. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 3324–3330.

- Lee, J.; Yang, K. Mobile Device-Based Struck-By Hazard Recognition in Construction Using a High-Frequency Sound. Sensors 2022, 22, 3482.

- Elelu, K.; Le, T.; Le, C. Collision Hazard Detection for Construction Worker Safety Using Audio Surveillance. J. Constr. Eng. Manag. 2023, 149, 4022159.

- Elelu, K.; Le, T.; Le, C. Collision Hazards Detection for Construction Workers Safety Using Equipment Sound Data. In Proceedings of the 9th International Conference on Construction Engineering and Project Management, Las Vegas, NV, USA, 20–24 June 2022; pp. 736–743.

- Elelu, K.; Le, T.; Le, C. Direction of Arrival of Equipment Sound in the Construction Industry. In Proceedings of the 39th International Symposium on Automation and Robotics in Construction, Bogota, Colombia, 13–15 July 2022.

- Lee, Y.-C.; Shariatfar, M.; Rashidi, A.; Lee, H.W. Evidence-driven sound detection for prenotification and identification of construction safety hazards and accidents. Autom. Constr. 2020, 113, 103127.

- Lee, Y.-C.; Scarpiniti, M.; Uncini, A. Advanced Sound Classifiers and Performance Analyses for Accurate Audio-Based Construction Project Monitoring. J. Comput. Civ. Eng. 2020, 34, 4020030.

- Xie, Y.; Lee, Y.C.; Shariatfar, M.; Zhang, Z.D.; Rashidi, A.; Lee, H.W. Historical accident and injury database-driven audio-based autonomous construction safety surveillance. In Proceedings of the Computing in Civil Engineering 2019: Data, Sensing, and Analytics, Atlanta, GA, USA, 17–19 June 2019; pp. 105–113.

- Cheng, C.F.; Rashidi, A.; Davenport, M.A.; Anderson, D. Audio Signal Processing for Activity Recognition of Construction Heavy Equipment. In Proceedings of the ISARC 2016—33rd International Symposium on Automation and Robotics in Construction, Auburn, AL, USA, 18–21 July 2016; pp. 642–650.

- Cheng, C.-F.; Rashidi, A.; Davenport, M.A.; Anderson, D.V. Activity analysis of construction equipment using audio signals and support vector machines. Autom. Constr. 2017, 81, 240–253.

- Sabillon, C.A.; Rashidi, A.; Samanta, B.; Cheng, C.-F.; Davenport, M.A.; Anderson, D.V. A Productivity Forecasting System for Construction Cyclic Operations Using Audio Signals and a Bayesian Approach. In Proceedings of the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 295–304.

- Cho, C.; Lee, Y.-C.; Zhang, T. Sound Recognition Techniques for Multi-Layered Construction Activities and Events. In Proceedings of the Computing in Civil Engineering 2017, Seattle, WA, USA, 25–27 June 2017; American Society of Civil Engineers: Reston, VA, USA; pp. 326–334.

- Liu, Z.; Li, S. A Sound Monitoring System for Prevention of Underground Pipeline Damage Caused by Construction. Autom. Constr. 2020, 113, 103125.

- Zhang, T.; Lee, Y.-C.; Scarpiniti, M.; Uncini, A. A supervised machine learning-based sound identification for construction activity monitoring and performance evaluation. In Proceedings of the Construction Research Congress, New Orleans, LA, USA, 2–4 April 2018; pp. 358–366.

- Sherafat, B.; Rashidi, A.; Lee, Y.; Ahn, C. Automated Activity Recognition of Construction Equipment Using a Data Fusion Approach. In Proceedings of the 2019 ASCE International Conference on Computing in Civil Engineering, Georgia Institute of Technology, Atlanta, GA, USA, 17–19 June 2019.

- Wei, W.; Wang, C.; Lee, Y. BIM-Based Construction Noise Hazard Prediction and Visualization for Occupational Safety and Health Awareness Improvement. In Proceedings of the ASCE International Workshop on Computing in Civil Engineering 2017, Seattle, WA, USA, 25–27 June 2017.

- Kim, D.; Liu, M.; Lee, S.H.; Kamat, V.R. Remote Proximity Monitoring between Mobile Construction Resources Using Camera-Mounted UAVs. Autom. Constr. 2019, 99, 168–182.

- Son, H.; Seong, H.; Choi, H.; Kim, C. Real-Time Vision-Based Warning System for Prevention of Collisions between Workers and Heavy Equipment. J. Comput. Civ. Eng. 2019, 33, 4019029.

- Huang, Y.; Hammad, A.; Zhu, Z. Providing Proximity Alerts to Workers on Construction Sites Using Bluetooth Low Energy RTLS. Autom. Constr. 2021, 132, 103928.
