Underwater operations such as surveying an area or inspecting and intervening on industrial infrastructures, for example offshore oil and gas rigs or pipeline networks, are increasingly necessary. The use of Autonomous Underwater Vehicles (AUVs) has grown as a way to automate these tasks, reducing risks and execution time. One of the sensing modalities used is vision, which provides high-quality RGB information at short to medium range, making it appropriate for manipulation or detailed inspection tasks. This research presents the use of a deep neural network to perform pixel-wise 3D segmentation of pipes and valves on underwater point clouds generated using a stereo pair of cameras. In addition, two novel algorithms are built to extract information from the detected instances, providing pipe vectors, gripping points, the position of structural elements such as elbows or connections, and valve type and orientation. The neural network and information algorithms are implemented on an AUV and executed in real time, validating that the output information stream frame rate of 0.72 fps is high enough to perform manipulation tasks and to ensure full seabed coverage during inspection tasks. The dataset used, along with a trained model and the information algorithms, is provided to the scientific community.

1. Introduction

The need for conducting underwater intervention tasks has grown significantly in recent decades. Underwater operations are often required in fields such as archaeology, biology, rescue and recovery, or industry, and they include not only inspection but also interaction with the environment. One of the most relevant cases is the manipulation tasks performed on offshore oil and gas rigs or pipeline networks [1][2][3][4][5].

In the past, these tasks were mostly carried out manually by scuba divers. Nonetheless, conducting such missions in a hard-to-reach scenario such as open waters tends to be slow, dangerous and resource-consuming. More recently, Remotely Operated Vehicles (ROVs) equipped with diverse sensing systems and manipulators have been used to access deeper and more complex underwater scenarios, eliminating some of the drawbacks of human intervention.

However, ROVs still present downsides, such as difficult and error-prone piloting due to complex water dynamics, which requires trained operators, or the need for a support vessel, which leads to high operational costs. To ease these drawbacks, there has been increasing research towards intervention Autonomous Underwater Vehicles (AUVs) [6][7][8] and Underwater Vehicle Manipulator Systems (UVMS) [9][10].

Underwater environments also pose challenges for sensing in general and object perception in particular, such as signal distortion, light absorption and scattering, changes in water turbidity, and depth-dependent colour distortion.

Intervention ROVs and AUVs are often equipped with a variety of sensing systems. When operating in unknown underwater environments, sonar systems are usually preferred as they are able to obtain bathymetric maps of large areas in a short time. Even though sonar is mostly used to provide general information about the environment or used in a first-stage approach to the area of interest, it has also been used to perform object detection by itself. Nonetheless, the preferred sensing modalities to obtain detailed, short-distance information with higher resolution are laser and video. These modalities are often used during the approach, object recognition and intervention phases.

2. AUV Description

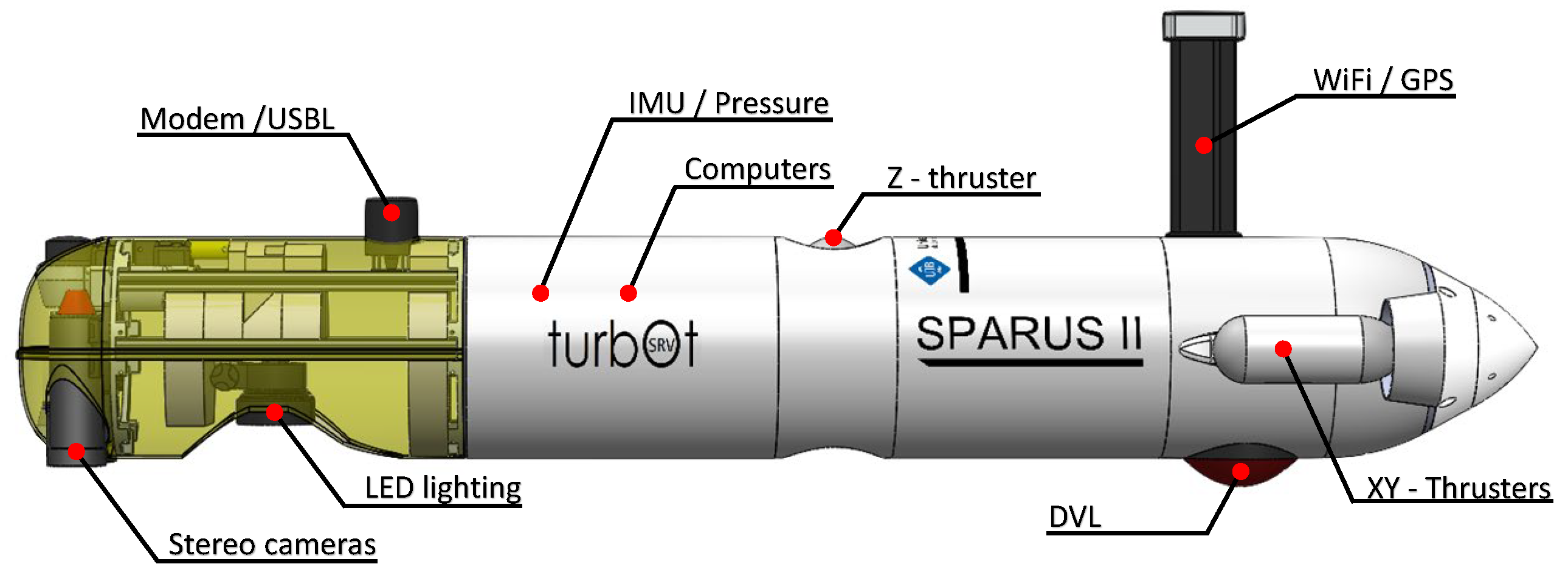

The AUV used is a SPARUS II model unit [11] (Figure 1) equipped with three motors, granting it three degrees of freedom (surge, heave and yaw). Its navigation payload is composed of: (1) a Doppler Velocity Logger (DVL) to obtain linear and angular speeds and altitude; (2) a pressure sensor which provides depth measurements; (3) an Inertial Measurement Unit (IMU) to measure accelerations and angular speeds; (4) a compass for heading; (5) a GPS for georeferencing during surface navigation; and (6) an Ultra-Short Baseline (USBL) acoustic link used for localisation and data exchange between the robot and a remote station. Additionally, it is equipped with a stereo pair of Manta G283 cameras facing downwards.

The robot has two computers. One is dedicated to receiving and managing the navigation sensor data and running the main robot architecture developed under ROS (Intel i7 processor at 2.2 GHz, Intel HD Graphics 3000 engine and 4 GB of RAM). The second computer is used to capture the images from the stereo cameras and execute the online semantic segmentation and information algorithms (Intel i7 processor at 2.5 GHz, Intel Iris Graphics 6100 and 16 GB of RAM).

The localisation of the vehicle is obtained through the fusion of multiple state estimations produced by the DVL, IMU, compass, GPS, USBL, visual odometry and a navigation filter [12]. This localisation can be integrated into the point clouds generated from the images captured by the stereo pair of cameras to spatially reference them, which is a requirement to execute the information unification algorithm (IUA).
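As a rough illustration of what spatially referencing a point cloud involves, the sketch below applies a rigid-body transform to camera-frame points using a vehicle pose. It assumes a simplified pose (position plus yaw only) and ignores roll, pitch and the camera-to-vehicle extrinsics. The function name `georeference`, the frame conventions and the numeric values are illustrative and are not taken from the vehicle's software.

```python
import math


def georeference(points, position, yaw):
    """Rotate points about the z axis by yaw (rad), then translate by position.

    Simplifying assumption: the pose is (x, y, z, yaw) only.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    px, py, pz = position
    referenced = []
    for x, y, z in points:
        referenced.append((c * x - s * y + px, s * x + c * y + py, z + pz))
    return referenced


# Hypothetical camera-frame points and vehicle pose, for illustration only.
cloud_local = [(1.0, 0.0, 1.5), (0.0, 2.0, 1.5)]
cloud_world = georeference(cloud_local, position=(10.0, 5.0, 3.0), yaw=math.pi / 2)
```

A full implementation would use the complete 6-DoF pose from the navigation filter together with the calibrated camera extrinsics.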

3. Implementation

To perform the online implementation, a pipeline based on ROS is designed.

First, the images published by the stereo pair are transformed into point clouds to be processed by the neural network. To do so, several C++ ROS nodes are set up to: (1) rectify the raw images using the camera calibration parameters; (2) decimate the rectified images from their original size (1920×1440 pixels) to 960×720 pixels; (3) calculate the disparity map and generate the point clouds; and (4) downsample the point clouds using a voxel grid. Additionally, a Python ROS node is set up to subscribe to the downsampled point clouds.
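The voxel grid downsampling in step (4) can be sketched as follows. This is a minimal pure-Python illustration, assuming each occupied voxel is replaced by the centroid of its points. The original nodes are written in C++, and neither the voxel size nor the function name `voxel_grid_downsample` comes from the source.

```python
import math
from collections import defaultdict


def voxel_grid_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(int(math.floor(c / voxel_size)) for c in p)
        buckets[key].append(p)
    centroids = []
    for pts in buckets.values():
        n = len(pts)
        centroids.append(tuple(sum(axis) / n for axis in zip(*pts)))
    return centroids


# Hypothetical points (metres) and voxel size, for illustration only.
sample = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.11, 0.0, 0.0)]
downsampled = voxel_grid_downsample(sample, voxel_size=0.05)
```

Here the first two points share a voxel and collapse into one centroid, while the third point stays alone, so three input points become two.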

Following this, the point cloud is fed into a previously loaded inference graph of a trained DGCNN model, which performs the semantic segmentation. From there, the information extraction algorithm (IEA) and the IUA are executed. Finally, a Python ROS node publishes the extracted information back into ROS so that it can be accessed by other robots, sensors or actuators.

This pipeline implements the semantic segmentation network and information algorithms on an AUV and allows their online execution during manipulation and inspection tasks.

4. Validation

To validate the online execution, the frame rate of the output information stream is evaluated. An online execution was performed during the immersions that make up the SPOOL-2 and SSEA-3 sets. In total, the online workflow was tested for 15 min 23 s.

The inspected pipe and valve configuration is different in each immersion, so the number and shape of pipes and valves change and the execution times of the IEA and IUA vary accordingly. This makes the time analysis more robust, as it covers a wider variety of scenarios.

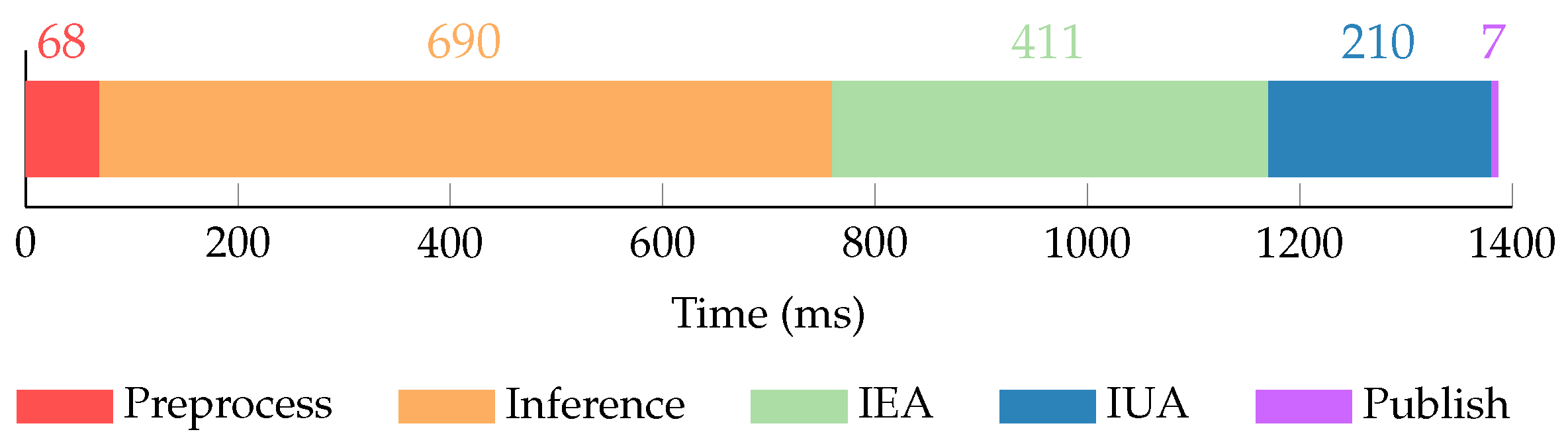

The average output information stream frame rate and times for each online execution step are calculated as the mean value from both executions. Figure 2 presents a breakdown of the total average online execution time into its different steps.

Figure 2. Online execution time breakdown.

The total average online execution time is 1.39 s, which results in an output information stream frame rate of 0.72 fps. The preprocessing step takes a mean of 68 ms (4.9% of the total time) and includes all operations to transform the images published from the stereo pair into point clouds to be processed by the neural network. The network inference takes the largest share of the time, with a mean of 690 ms (49.8% of the total time). Next, the information extraction and unification algorithms take a mean of 411 ms and 210 ms, accounting for 29.7% and 15.1% of the total time, respectively. Finally, the information publication takes a mean of 7 ms (0.5% of the total time).

The achieved output information stream frame rate is more than enough to perform manipulation tasks, as these kinds of operations in underwater scenarios tend to have slow and controlled dynamics. Additionally, for most manipulation tasks the IUA may not be executed, lowering the overall online execution time to 1.18 s, and thus increasing the achieved frame rate to 0.85 fps.
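The frame rates quoted above follow from the reported step times. The short check below sums the rounded per-step means (1386 ms, consistent with the reported 1.39 s) and derives the frame rate with and without the IUA. The dictionary keys and variable names are illustrative. Because the inputs are rounded, individual percentage shares may differ from the reported ones in the last digit.

```python
# Mean step times in milliseconds, as reported in the text.
step_ms = {
    "preprocessing": 68,
    "network inference": 690,
    "information extraction (IEA)": 411,
    "information unification (IUA)": 210,
    "publication": 7,
}

total_ms = sum(step_ms.values())

# Share of the total time taken by each step, in percent.
shares = {name: 100.0 * ms / total_ms for name, ms in step_ms.items()}

# Frame rate of the full pipeline and of the pipeline without the IUA.
fps_full = 1000.0 / total_ms
fps_without_iua = 1000.0 / (total_ms - step_ms["information unification (IUA)"])

print(f"total: {total_ms} ms, full: {fps_full:.2f} fps, "
      f"without IUA: {fps_without_iua:.2f} fps")
```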

Regarding inspection tasks, one way to validate the achieved output information stream frame rate is to check whether consecutive analysed point clouds overlap, which ensures full coverage of the inspected area. To do so, the overlap between the original images of consecutive analysed point clouds is checked. This overlap depends on the camera displacement between the images of two consecutive analysed point clouds ($d_{KF}$) and on the height of the image footprint ($h_{FP}$). The overlap can then be expressed as:

$$
\mathrm{overlap} = \frac{h_{FP} - d_{KF}}{h_{FP}}, \qquad d_{KF} = \frac{v}{\mathit{fps}}, \qquad h_{FP} = \frac{a \, h_{image}}{f},
$$

where $v$ denotes the AUV velocity, $\mathit{fps}$ the output information stream frame rate, $a$ the navigation altitude, $h_{image}$ the image height in pixels and $f$ the camera focal length in pixels.

During inspection tasks, an AUV such as the SPARUS II can reach velocities of up to v = 0.4 m/s and navigate at a minimum altitude of a = 1.5 m. Using these parameters along with the Manta G283 camera focal length of f = 1505.5 pixels and image height of himage = 1440 pixels, the obtained overlap is 61.4%. Thus, the output information stream frame rate is high enough for consecutive point clouds to overlap even when the AUV navigates at its maximum speed and minimum altitude.
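The overlap figure can be checked numerically. The sketch below assumes that the overlap is the fraction of the image footprint height not consumed by the camera displacement between two consecutive analysed point clouds, with the footprint obtained from a pinhole camera model. The period of 1.386 s is the sum of the reported mean step times (the unrounded counterpart of 1.39 s). The function and argument names are illustrative.

```python
def footprint_overlap(v, a, h_image, f, period):
    """Fractional overlap between the footprints of consecutive analysed images.

    v: vehicle speed (m/s), a: altitude (m), h_image: image height (pixels),
    f: focal length (pixels), period: time between analysed point clouds (s).
    """
    h_fp = a * h_image / f   # footprint height on the seabed (m)
    d_kf = v * period        # camera displacement between analyses (m)
    return (h_fp - d_kf) / h_fp


overlap = footprint_overlap(v=0.4, a=1.5, h_image=1440, f=1505.5, period=1.386)
print(f"overlap: {100 * overlap:.1f}%")
```

A positive result means consecutive footprints overlap. A value of zero or below would indicate gaps in seabed coverage at that speed and altitude.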