Quantum computers have been claimed to offer a quantum advantage over classical computers in solving certain specific problems. Many companies and research institutes are developing quantum computers based on different physical implementations. Currently, most attention goes to the number of qubits, which is often taken intuitively as the standard measure of a quantum computer's performance. However, this is misleading in most cases, especially for investors and governments, because quantum computers work very differently from classical computers. Quantum benchmarking is therefore of great importance, and many quantum benchmarks have been proposed that address different aspects of performance.

1. Overview of Quantum Benchmarks

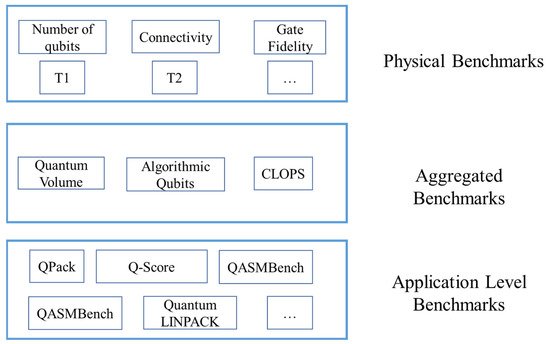

In this research, the benchmarks are classified into three categories: physical benchmarks, aggregated benchmarks, and application-level benchmarks. Most news and reports emphasize the number of qubits in a quantum processor, which is often misleading for readers unfamiliar with quantum computing. The number of qubits does directly affect the computing power of a quantum computer, and some people intuitively assume that this power grows exponentially with the number of qubits. For instance, in 2019, Google first demonstrated “quantum supremacy” with its 53-qubit Sycamore processor. However, beyond the qubit count, noise and the quality of the qubits strongly affect the correctness of the results. Physical properties other than the number of qubits therefore matter as well.
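The intuition that computing power grows exponentially with the number of qubits comes from the size of the state space: a pure n-qubit state is described by 2^n complex amplitudes. The minimal sketch below, which assumes a dense statevector stored in double precision (16 bytes per amplitude), shows how quickly the cost of representing such a state classically grows. It deliberately ignores noise, which is exactly the factor a raw qubit count hides.

```python
# Assumption: one complex128 amplitude = two 64-bit floats = 16 bytes.
BYTES_PER_AMPLITUDE = 16


def statevector_amplitudes(num_qubits: int) -> int:
    """A pure n-qubit state is described by 2**n complex amplitudes."""
    return 2 ** num_qubits


def statevector_memory_bytes(num_qubits: int) -> int:
    """Memory for a dense, uncompressed double-precision statevector."""
    return statevector_amplitudes(num_qubits) * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (5, 27, 53, 65):
        pib = statevector_memory_bytes(n) / 2**50  # pebibytes
        print(f"{n:>2} qubits: {statevector_amplitudes(n):.3e} amplitudes, {pib:.3g} PiB")
```

For the 53 qubits of Sycamore this gives 2^57 bytes, or 128 PiB. The figure describes the classical cost of storing the state; it does not imply that a noisy 53-qubit device delivers correspondingly reliable results.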

Physical benchmarks include the tools, models, and algorithms used to characterize the physical properties of a quantum processor. Typical physical indicators of quantum computers include T1, T2, single-qubit gate fidelity, two-qubit gate fidelity, and readout fidelity. Aggregated benchmarks help users assess the performance of a quantum processor with only one or a few parameters; these metrics can be measured with randomly generated quantum circuits or estimated from the basic physical properties of the processor. Typical aggregated benchmarks include quantum volume (QV) and algorithmic qubits (AQ). Application-level benchmarks are metrics obtained by running real-world applications on a quantum computer. Many existing works propose this approach because they assume that random circuits cannot accurately reflect a quantum computer's performance. An overview of the existing quantum benchmarks is shown in Figure 1.

Figure 1. Overview of the quantum benchmarks.
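Quantum volume, one of the aggregated benchmarks mentioned above, is measured with random square circuits (width equal to depth). In the commonly used formulation, the heavy outputs of a circuit are the bitstrings whose ideal probability exceeds the median ideal probability, and a width passes if the mean heavy-output probability over many circuits exceeds 2/3 with two-sigma confidence; QV is then 2 raised to the passing width. The sketch below covers only this scoring step. It assumes the ideal probabilities have already been obtained by classical simulation, and it simply takes the largest passing width, which simplifies the full protocol.

```python
import math
from statistics import median


def heavy_outputs(ideal_probs: dict[str, float]) -> set[str]:
    """Bitstrings whose ideal probability is above the median ideal probability."""
    p_med = median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > p_med}


def heavy_output_probability(ideal_probs: dict[str, float], counts: dict[str, int]) -> float:
    """Fraction of measured shots that landed on a heavy output."""
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots


def width_passes(hops: list[float]) -> bool:
    """Mean heavy-output probability must beat 2/3 with two-sigma confidence."""
    n = len(hops)
    h = sum(hops) / n
    sigma = math.sqrt(h * (1 - h) / n)
    return h - 2 * sigma > 2 / 3


def quantum_volume(hops_by_width: dict[int, list[float]]) -> int:
    """QV = 2**m for the largest passing width m (1 if no width passes)."""
    passing = [m for m, hops in hops_by_width.items() if width_passes(hops)]
    return 2 ** max(passing) if passing else 1


if __name__ == "__main__":
    # Hypothetical 2-qubit example: the median ideal probability is 0.25,
    # so "00" and "01" are the heavy outputs.
    ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
    counts = {"00": 450, "01": 250, "10": 200, "11": 100}
    print(heavy_output_probability(ideal, counts))
```

Here 700 of the 1000 hypothetical shots land on heavy outputs, giving a heavy-output probability of 0.7 for that circuit.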

2. Physical Benchmarks

Different physical implementations are concerned with different aspects of a quantum computing system. For instance, trapped-ion quantum computers focus more on the stability of the trap frequency, the duration of gate operations, and the stability of the control lasers. The performance of superconducting quantum computers depends on the controllability and scalability of the system, mostly through the precision of the Josephson junctions, the anharmonicity, and the gate duration [1].

In general, quantum computing systems are concerned with quantum correlations and the precision of control operations. For superconducting quantum computers, researchers with a quantum information background generally focus on physical properties such as T1, T2, the number of qubits, connectivity, single-qubit gate fidelity, two-qubit gate fidelity, and readout fidelity.
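T1 (energy relaxation time) and T2 (dephasing time) bound how long a computation can run before the qubits lose their quantum information. The sketch below uses the simplest exponential-decay model, exp(-t/T1) and exp(-t/T2), to estimate how much excited-state population and phase coherence remain after a circuit of duration t, and how many sequential gates fit within a coherence time. The pure-exponential model, the 300 ns gate duration, and the 10 μs circuit duration are illustrative assumptions, not values from the source; real decay curves and gate times vary by device.

```python
import math


def excited_population_left(t_us: float, t1_us: float) -> float:
    """Fraction of excited-state population left after t, assuming decay exp(-t/T1)."""
    return math.exp(-t_us / t1_us)


def coherence_left(t_us: float, t2_us: float) -> float:
    """Fraction of phase coherence left after t, assuming decay exp(-t/T2)."""
    return math.exp(-t_us / t2_us)


def gates_within(window_us: float, gate_ns: float) -> int:
    """How many sequential gates of duration gate_ns fit in a time window."""
    return int(window_us * 1000 // gate_ns)


if __name__ == "__main__":
    # Average T1/T2 of "toronto" from Table 1; the 10 us circuit and the
    # 300 ns gate duration are hypothetical values chosen for illustration.
    t1, t2 = 180.3614, 155.1329
    print(f"population left after 10 us: {excited_population_left(10, t1):.3f}")
    print(f"coherence left after 10 us:  {coherence_left(10, t2):.3f}")
    print(f"300 ns gates within T2:      {gates_within(t2, 300)}")
```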

Table 1 lists these indicators for the quantum computers on IBM's online quantum cloud (data from [2]).

Table 1. IBM quantum cloud’s performance metrics. Avg stands for average; N/A means not applicable.

| Name | Number of Qubits | QV | Avg. T1 (μs) | Avg. T2 (μs) | Avg. Readout Fidelity | Avg. CNOT Fidelity |
|---|---|---|---|---|---|---|
| brooklyn | 65 | 32 | 77.1686 | 74.6345 | 0.9682 | 0.9746 |
| manhattan | 65 | 32 | 110.1959 | 101.6078 | 0.9761 | 0.9543 |
| hanoi | 27 | 64 | 123.3959 | 93.4341 | 0.9837 | 0.991 |
| sydney | 27 | 32 | 266.1433 | 256.6081 | 0.9833 | 0.9898 |
| peekskill | 27 | N/A | 97.4474 | 107.0911 | 0.9821 | 0.9896 |
| cairo | 27 | 64 | 76.01 | 97.6543 | 0.9796 | 0.989 |
| toronto | 27 | 32 | 180.3614 | 155.1329 | 0.9869 | 0.9814 |
| kolkata | 27 | 128 | 70.3363 | 75.2432 | 0.9698 | 0.9536 |
| mumbai | 27 | 128 | 117.2574 | 92.1067 | 0.9484 | 0.9526 |
| montreal | 27 | 128 | 81.004 | 104.678 | 0.938 | 0.4972 |
| guadalupe | 16 | 32 | 132.6257 | 40.5357 | 0.977 | 0.9896 |
| lagos | 7 | 32 | 158.6 | 57.702 | 0.9697 | 0.9912 |
| jakarta | 7 | 16 | 74.214 | 104.008 | 0.9728 | 0.9895 |
| perth | 7 | 32 | 155.0078 | 92.217 | 0.9118 | 0.9894 |
| casablanca | 7 | 32 | 82.2681 | 96.0744 | 0.9696 | 0.9883 |
| nairobi | 7 | 32 | 86.5337 | 107.1733 | 0.9428 | 0.9878 |
| quito | 5 | 16 | 130.2629 | 100.9629 | 0.9859 | 0.9932 |
| santiago | 5 | 32 | 105.2286 | 98.9143 | 0.9633 | 0.9909 |
| manila | 5 | 32 | 100.56 | 101.29 | 0.9739 | 0.99 |
| lima | 5 | 8 | 84.0278 | 84.4122 | 0.9829 | 0.9891 |
| belem | 5 | 16 | 75.936 | 94.722 | 0.9676 | 0.9828 |
| bogota | 5 | 32 | 92.454 | 124.096 | 0.959 | 0.9794 |
| armonk | 1 | 1 | 118.1 | 149.22 | 0.967 | N/A |
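Table 1 illustrates why the qubit count alone is a poor benchmark. The sketch below transcribes the qubit and QV columns (peekskill is omitted because its QV is N/A) and lists every pair of backends in which the one with more qubits has a strictly lower QV.

```python
# (name, number of qubits, QV), transcribed from Table 1; peekskill omitted (QV N/A).
BACKENDS = [
    ("brooklyn", 65, 32), ("manhattan", 65, 32), ("hanoi", 27, 64),
    ("sydney", 27, 32), ("cairo", 27, 64), ("toronto", 27, 32),
    ("kolkata", 27, 128), ("mumbai", 27, 128), ("montreal", 27, 128),
    ("guadalupe", 16, 32), ("lagos", 7, 32), ("jakarta", 7, 16),
    ("perth", 7, 32), ("casablanca", 7, 32), ("nairobi", 7, 32),
    ("quito", 5, 16), ("santiago", 5, 32), ("manila", 5, 32),
    ("lima", 5, 8), ("belem", 5, 16), ("bogota", 5, 32), ("armonk", 1, 1),
]


def qubit_qv_inversions(rows):
    """Pairs (a, b) where a has more qubits than b but a strictly lower QV."""
    return [
        (a_name, b_name)
        for a_name, a_qubits, a_qv in rows
        for b_name, b_qubits, b_qv in rows
        if a_qubits > b_qubits and a_qv < b_qv
    ]


def highest_qv(rows):
    """All backends that share the highest QV in the table."""
    best = max(qv for _, _, qv in rows)
    return [(name, qubits) for name, qubits, qv in rows if qv == best]


if __name__ == "__main__":
    print("highest QV:", highest_qv(BACKENDS))
    inversions = qubit_qv_inversions(BACKENDS)
    print(f"{len(inversions)} pairs where more qubits means lower QV, e.g. {inversions[:3]}")
```

The three QV 128 backends all have 27 qubits, while both 65-qubit backends have QV 32.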

4. Application-Based Benchmarks

The physical properties of a quantum computer affect its performance, but it is difficult to determine from these properties alone whether one quantum computer outperforms another. For instance, suppose quantum computer “A” has more qubits, while the qubits of another quantum computer “B” are of higher quality. If an application needs more qubits, “A” is preferred; if it requires higher-quality qubits, “B” is preferred. Therefore, some researchers propose evaluating the performance of a quantum computer with real-world quantum applications.
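The trade-off between “A” and “B” can be made concrete with a deliberately crude model that is not part of the source: assume errors are independent, so a circuit's success probability is roughly the product of the fidelities of its CNOT gates and readouts (single-qubit gate errors and connectivity are ignored). The device specifications and workloads below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Device:
    name: str
    num_qubits: int
    cnot_fidelity: float
    readout_fidelity: float


@dataclass
class Workload:
    num_qubits: int
    num_cnots: int


def estimated_success(dev: Device, job: Workload) -> float:
    """Crude estimate: product of CNOT and readout fidelities (independent errors)."""
    if job.num_qubits > dev.num_qubits:
        return 0.0  # the circuit does not fit on this device
    return dev.cnot_fidelity ** job.num_cnots * dev.readout_fidelity ** job.num_qubits


def pick_device(devices: List[Device], job: Workload) -> Optional[str]:
    """Name of the device with the best estimate, or None if nothing fits."""
    best = max(devices, key=lambda d: estimated_success(d, job))
    return best.name if estimated_success(best, job) > 0 else None


if __name__ == "__main__":
    # Hypothetical devices: A has more qubits, B has higher-quality qubits.
    a = Device("A", num_qubits=65, cnot_fidelity=0.975, readout_fidelity=0.968)
    b = Device("B", num_qubits=27, cnot_fidelity=0.990, readout_fidelity=0.984)
    print(pick_device([a, b], Workload(num_qubits=40, num_cnots=100)))  # A (only A fits)
    print(pick_device([a, b], Workload(num_qubits=10, num_cnots=200)))  # B (higher fidelity)
```

The specific numbers matter less than the fact that the ranking flips with the workload, which is the motivation for application-level benchmarks.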

A summary of the application-based quantum benchmarks is shown in Table 2. As Table 2 shows, most of these benchmarks consider typical combinatorial optimization problems and solve them with variational quantum circuits (VQC). This is mainly because combinatorial optimization problems arise in many real-world scenarios, such as traffic engineering and flight scheduling. Moreover, variational quantum algorithms, such as the quantum approximate optimization algorithm (QAOA) and the variational quantum eigensolver (VQE), are popular because they may produce useful results on noisy intermediate-scale quantum (NISQ) devices. Thus, many researchers believe that variational quantum algorithms will remain the most effective approach in the NISQ era.
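For QAOA-based benchmarks on combinatorial problems such as MaxCut, a common figure of merit is the approximation ratio: the average cut value of the bitstrings sampled from the device divided by the optimal cut value. The sketch below computes it for a small graph, finding the optimum by brute force (feasible only for a few nodes). The measurement counts are hypothetical, and it assumes character i of each bitstring gives the partition of node i; some SDKs print bitstrings in reverse qubit order.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Edge = Tuple[int, int]


def cut_value(bits: Sequence[str], edges: List[Edge]) -> int:
    """Number of edges whose endpoints lie in different partitions."""
    return sum(1 for i, j in edges if bits[i] != bits[j])


def max_cut(num_nodes: int, edges: List[Edge]) -> int:
    """Optimal cut by brute force over all 2**num_nodes assignments."""
    return max(cut_value(bits, edges) for bits in product("01", repeat=num_nodes))


def approximation_ratio(counts: Dict[str, int], edges: List[Edge], num_nodes: int) -> float:
    """Shot-weighted mean cut value divided by the optimal cut value."""
    shots = sum(counts.values())
    mean_cut = sum(cut_value(bits, edges) * c for bits, c in counts.items()) / shots
    return mean_cut / max_cut(num_nodes, edges)


if __name__ == "__main__":
    ring = [(0, 1), (1, 2), (2, 3), (3, 0)]  # 4-node ring; optimal cut is 4
    counts = {"0101": 40, "1010": 40, "0011": 20}  # hypothetical device samples
    print(approximation_ratio(counts, ring, 4))
```

With these samples the mean cut is 3.6 and the optimum is 4, so the approximation ratio is 0.9; a device closer to 1.0 solves this instance better.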

Table 2. Summary of the application-based quantum benchmarks.