| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Héctor Felipe Mateo Romero | -- | 4855 | 2022-10-20 11:55:21 |
| 2 | Peter Tang | + 5 word(s) | 4860 | 2022-10-20 13:34:34 |
| 3 | Peter Tang | + 4 word(s) | 4864 | 2022-10-24 08:55:46 |


Solar energy is one of the most important renewable energy sources, and investment in it by businesses and governments increases every year. Artificial intelligence (AI) is used to solve the most important problems found in photovoltaic (PV) systems, such as tracking the maximum power point (MPP) of PV modules, forecasting the energy produced by a PV system, estimating the parameters of the equivalent model of PV modules, and detecting faults in PV modules or cells. AI techniques perform better than classical approaches, although they have limitations such as the large amount of data and the long computation times needed for training.

1. Introduction

2. Artificial Intelligence Applied to PV Systems

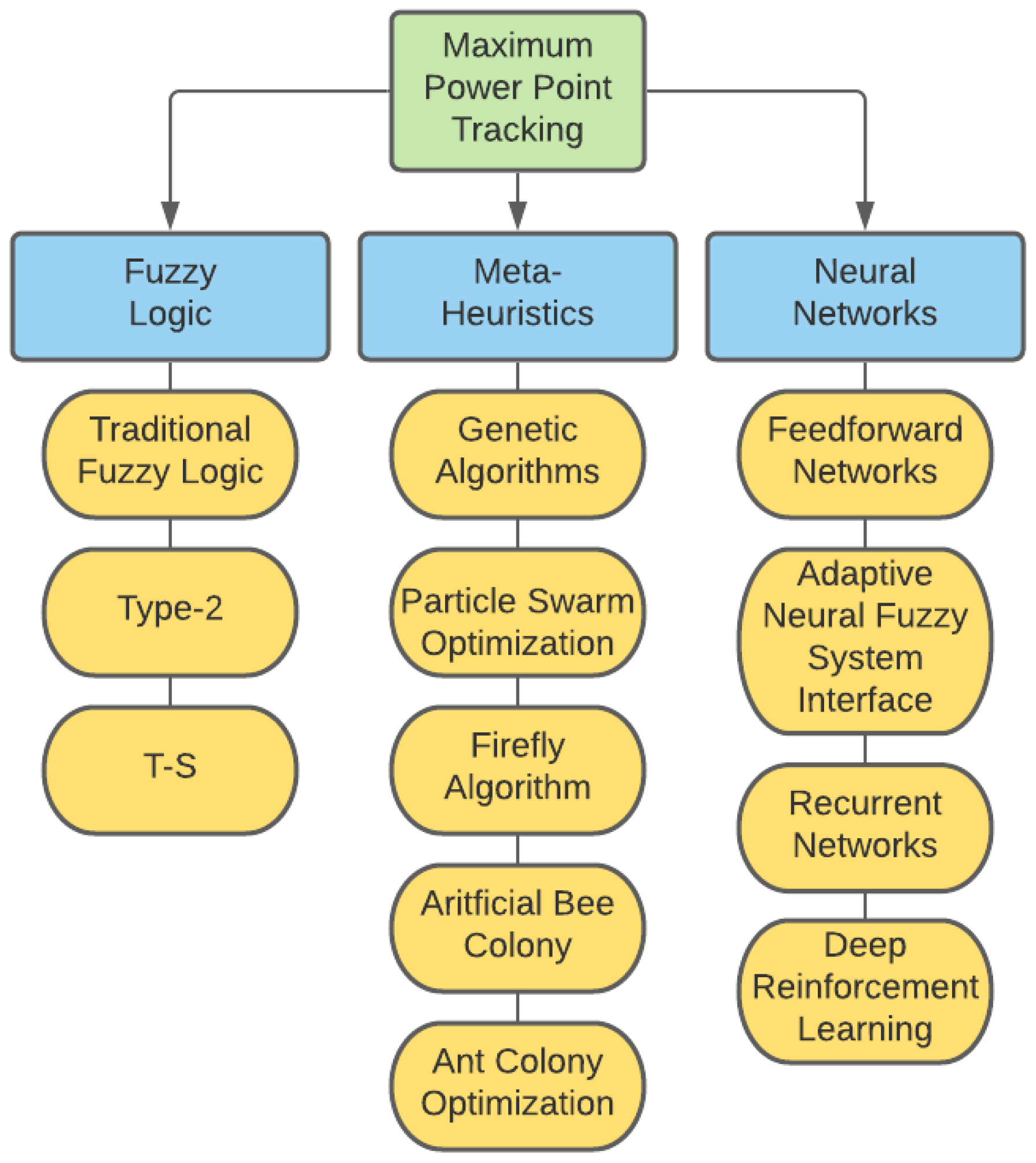

2.1. Maximum Power Point Tracking (MPPT)

| Method | Features |
|---|---|
| | FLC systems respond quickly to changes and show low oscillations near the MPP, which reduces power loss compared with traditional systems. Combining them with FCN or with an initial estimate of the MPP voltage further improves the results. |
| | Type-2 FL provides methods to model and handle uncertainties, which boosts the robustness of the system and hence its results. |
| | The parallel distributed control provided by T-S FL further improves the results of FL systems, giving an acceptable settling time, fewer oscillations and an accurate output. |
| | Other methods can take advantage of the benefits of FLC systems to improve their results in MPPT. |
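
The behaviour attributed to FLC systems above (large corrections far from the MPP, almost none close to it) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy PV curve and its parameters, the single input (the slope dP/dV), the membership centers and the output steps. It is a zero-order Sugeno-style controller, not the rule base of any cited work, which often use two inputs such as error and change of error.

```python
import math

def pv_power(v, i_sc=5.0, v_oc=40.0, v_t=3.0):
    """Toy single-exponential PV curve (hypothetical parameters)."""
    if v <= 0.0 or v >= v_oc:
        return 0.0
    return v * i_sc * (1.0 - math.exp((v - v_oc) / v_t))

# Fuzzy sets on the slope dP/dV: NB, NS, ZE, PS, PB (triangular partition).
CENTERS = [-10.0, -3.0, 0.0, 3.0, 10.0]
# Singleton outputs: voltage step (in volts) recommended by each rule.
STEPS = [-2.0, -0.5, 0.0, 0.5, 2.0]

def fuzzy_step(slope):
    """Weighted average of the rule outputs (zero-order Sugeno inference)."""
    x = max(CENTERS[0], min(CENTERS[-1], slope))  # saturate the input
    for k in range(len(CENTERS) - 1):
        lo, hi = CENTERS[k], CENTERS[k + 1]
        if lo <= x <= hi:
            mu_hi = (x - lo) / (hi - lo)  # membership in the upper set
            mu_lo = 1.0 - mu_hi           # membership in the lower set
            return mu_lo * STEPS[k] + mu_hi * STEPS[k + 1]
    return 0.0

def track_mpp(v_start=10.0, n_steps=80, dv=0.05):
    """Move the operating voltage using the fuzzy step at each iteration."""
    v = v_start
    for _ in range(n_steps):
        slope = (pv_power(v + dv) - pv_power(v - dv)) / (2.0 * dv)
        v += fuzzy_step(slope)
    return v, pv_power(v)

v_final, p_final = track_mpp()
```

Because the step shrinks smoothly as the slope approaches zero, the operating point settles near the peak instead of oscillating around it with a fixed step.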

| Algorithm | Features |
|---|---|
| | Genetic algorithms improve the results of other methods such as ANN or FPSO. |
| | PSO is used to optimize neural network learning. |
| FA [34] | This algorithm is used directly to solve MPPT. It ensures fast convergence with almost zero oscillations. |
| | In MPPT, this algorithm provides quick convergence and accurate tracking. |
| ACO [38] | ACO is used in the learning process to adjust the weights and biases of the neural networks in order to improve their results. |
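
As a rough illustration of how a swarm method can search for the MPP directly, the sketch below runs a basic particle swarm over the operating voltage. The two-peak power curve is a hypothetical stand-in for a partially shaded array, and the swarm size, inertia and acceleration coefficients are assumed values rather than settings from the cited works.

```python
import math
import random

def shaded_power(v):
    """Hypothetical two-peak P-V curve: local peak near 12 V, global near 29 V."""
    return (60.0 * math.exp(-((v - 12.0) / 4.0) ** 2)
            + 100.0 * math.exp(-((v - 29.0) / 5.0) ** 2))

def pso_mppt(power, v_min=0.0, v_max=40.0, n_particles=5, n_iter=30,
             w=0.5, c1=1.5, c2=1.5, seed=0):
    """One-dimensional PSO; returns the best voltage found and its power."""
    rng = random.Random(seed)
    span = v_max - v_min
    # Spread the particles evenly so that every region of the curve is sampled.
    pos = [v_min + span * k / (n_particles - 1) for k in range(n_particles)]
    vel = [0.0] * n_particles
    best_pos = pos[:]                       # personal bests
    best_val = [power(p) for p in pos]
    g = max(range(n_particles), key=lambda k: best_val[k])
    g_pos, g_val = best_pos[g], best_val[g]  # global best
    for _ in range(n_iter):
        for k in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            vel[k] = (w * vel[k]
                      + c1 * r1 * (best_pos[k] - pos[k])
                      + c2 * r2 * (g_pos - pos[k]))
            pos[k] = min(v_max, max(v_min, pos[k] + vel[k]))
            val = power(pos[k])
            if val > best_val[k]:
                best_pos[k], best_val[k] = pos[k], val
            if val > g_val:
                g_pos, g_val = pos[k], val
    return g_pos, g_val

v_best, p_best = pso_mppt(shaded_power)
```

The global best can only improve from iteration to iteration, so the swarm is not pulled back toward the lower local peak once one particle has sampled the higher one.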

| Type | Reference | Features |
|---|---|---|
| Feed-Forward Neural Network | [39] | Two networks, each with a single hidden layer of 20 nodes. |
| Feed-Forward Neural Network | [29] | Five nodes on a single layer. Data preprocessed by a genetic algorithm. |
| Feed-Forward Neural Network | [40] | Three hidden layers with 8, 7 and 7 nodes, respectively. Bayesian-regularized back-propagation for training. |
| Feed-Forward Neural Network | [41] | A single hidden layer with 13 neurons. Data created by a Coarse Gaussian Support Vector Machine. |
| Feed-Forward Neural Network | [24] | 2-3-3-1 structure. The NN is optimized by FPSOGSA. |
| Feed-Forward Neural Network | [42] | The topology and best weights are optimized by a PSO algorithm. |
| Feed-Forward Neural Network | [43] | ACO is used to optimize the neural network. |
| Adaptive Neuro-Fuzzy Inference System | [25] | The Bat Algorithm is used to train the network. |
| Adaptive Neuro-Fuzzy Inference System | [44] | Crowded Plant Height Optimization performs the learning of the network. |
| Adaptive Neuro-Fuzzy Inference System | [45] | Combines fuzzy logic and neural networks. Three intermediate layers in which the output is based on fuzzy rules. |
| Recurrent Neural Network | [23] | A hidden layer and a context layer that stores the previous outputs of the hidden layer. A metaheuristic is used to optimize the structure and weights. |
| Deep Reinforcement Learning | [46] | Four networks: one computes the policy, one is the critic, and two target networks stabilize the learning procedure. |
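
The recurrent structure described for [23], a hidden layer plus a context layer holding the previous hidden outputs, corresponds to an Elman-style cell. The sketch below shows one such cell under assumed sizes and hand-picked weights; the class name, the weights and the input values are hypothetical, and no training or metaheuristic optimization is included.

```python
import math

class ElmanCell:
    """Hidden layer with a context layer that stores the previous hidden outputs."""

    def __init__(self, w_in, w_ctx, w_out):
        self.w_in = w_in      # hidden x input weights
        self.w_ctx = w_ctx    # hidden x hidden (context) weights
        self.w_out = w_out    # output weights, one per hidden unit
        self.context = [0.0] * len(w_in)

    def step(self, x):
        hidden = []
        for row_in, row_ctx in zip(self.w_in, self.w_ctx):
            pre = sum(w * xi for w, xi in zip(row_in, x))
            pre += sum(w * c for w, c in zip(row_ctx, self.context))
            hidden.append(math.tanh(pre))
        self.context = hidden  # copy the hidden state for the next time step
        return sum(w * h for w, h in zip(self.w_out, hidden))

# Hypothetical 2-input, 3-hidden-unit cell with fixed illustrative weights.
cell = ElmanCell(
    w_in=[[0.1, 0.2], [0.2, 0.4], [0.3, 0.6]],
    w_ctx=[[0.1, 0.1, 0.1] for _ in range(3)],
    w_out=[1.0, 1.0, 1.0],
)
y_first = cell.step([0.5, 1.0])
y_second = cell.step([0.5, 1.0])  # same input, different output due to context
```

Feeding the same input twice gives two different outputs, which is the memory effect the context layer is meant to provide.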

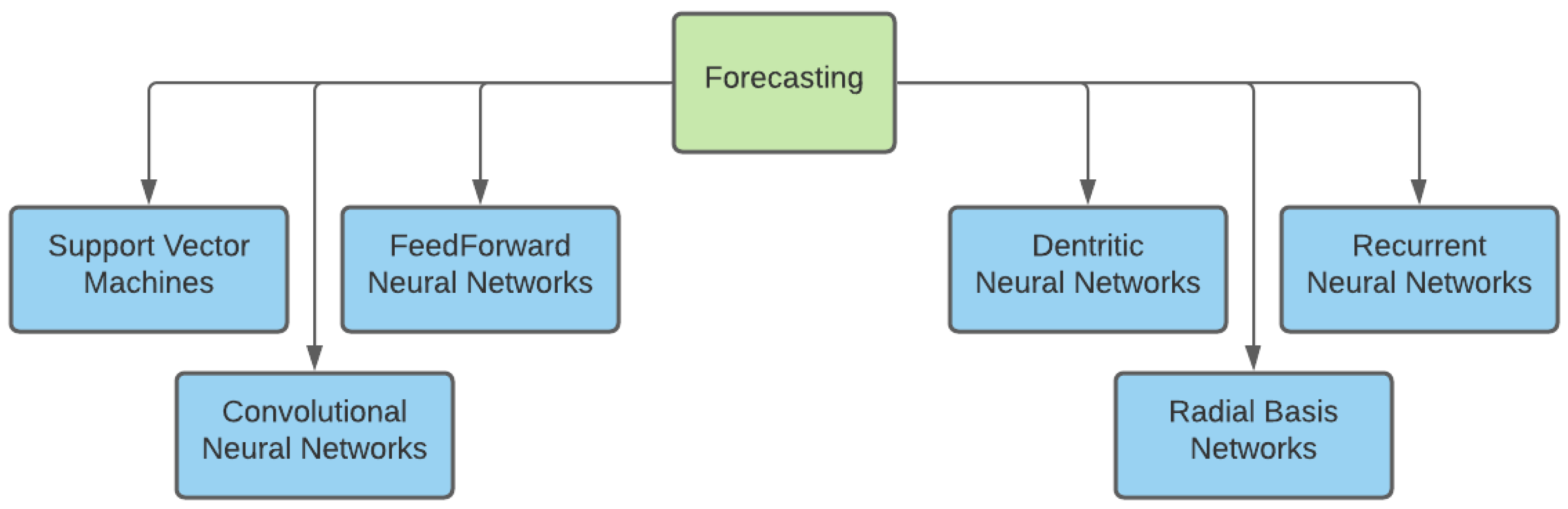

2.2. Forecasting

| Type | Features |
|---|---|
| Feed-Forward Neural Network | Nine inputs, 20 hidden nodes on a single layer. [50] |
| Feed-Forward Neural Network | Nine inputs, 2 hidden layers with 6 and 4 nodes, respectively. Trained by a hybrid PSO-GA algorithm. [51] |
| Feed-Forward Neural Network | Two inputs, creates ensembles of neural networks. [53] |
| Feed-Forward Neural Network | Two inputs, 1 hidden layer, conjugate gradient as the learning rule. [54] |
| Feed-Forward Neural Network | Three neural networks, one for each kind of weather. Uses Extreme Learning to optimize the parameters and architecture. [57] |
| Feed-Forward Neural Network | Fuzzy logic is applied as a filter to the input data. Seven inputs, 2 hidden layers of 9 and 5 nodes, respectively. Trained by a hybrid of PSO and GA. [78] |
| Feed-Forward Neural Network | Uses Big Data. A multistep methodology decomposes the problem into subproblems. [81] |
| Convolutional Neural Networks | Two inputs. Parameters are selected by testing different combinations. [80] |
| Dendritic Neural Networks | Aided by WT. Provides better convergence speed and better fitting ability. [82] |
| Radial Basis Network | Two inputs, aided by Wavelet Transform to preprocess the input data. [59] |
| Radial Basis Network | High-resolution time series as input. Aided by Wavelet Transform to preprocess the input data and PSO to optimize the neural network. [63] |
| Recurrent Neural Network | Aided by Wavelet Transform to deal with fluctuations in the time series input data. [62] |
| Recurrent Neural Network | Preprocessed and normalized high-resolution time series as input. Two hidden layers of 35 neurons. [64] |
| Recurrent Neural Network | Tested different RNN architectures. LSTM, which uses previous time steps, was found to be the best one. [66] |
| Recurrent Neural Network | Uses Echo State Networks aided by a Restricted Boltzmann Machine, Principal Component Analysis and the DFP Quasi-Newton Algorithm to optimize the network. [67] |
| Support Vector Machines | Two inputs. A parameter to tune the number of SVM during training. [47] |
| Support Vector Machines | SVM compared with KNN. SVM was found to be better. [84] |
| Support Vector Machines | Multi-input support vector regression. Different combinations of inputs were tested. Three inputs was the best one found. [49] |
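
Several of the forecasting models above rely on a wavelet transform to preprocess the input series. As a minimal sketch of that step, the code below applies one level of the Haar discrete wavelet transform, which splits a series into a smooth approximation and a detail part carrying the fast fluctuations. The choice of the Haar wavelet and the sample values are assumptions; the table does not state which wavelet family each work uses.

```python
import math

def haar_dwt(signal):
    """One-level Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    pairs = list(zip(signal[0::2], signal[1::2]))
    approx = [(a + b) / s for a, b in pairs]  # low-pass: local trend
    detail = [(a - b) / s for a, b in pairs]  # high-pass: fluctuations
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt: rebuilds the original series."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out

# Hypothetical normalized PV power samples over part of a day.
power = [0.0, 0.2, 0.9, 1.1, 1.0, 0.4, 0.1, 0.0]
approx, detail = haar_dwt(power)
rebuilt = haar_idwt(approx, detail)
```

A forecaster can then be fed the approximation and detail series separately, so that the smooth trend and the rapid fluctuations are modelled independently.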

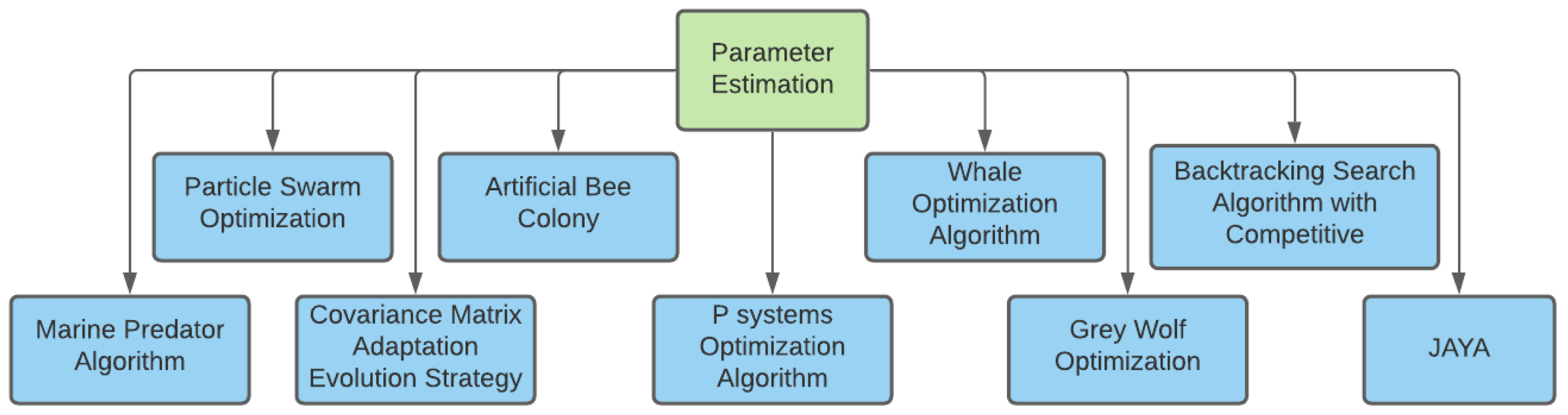

2.3. Parameter Estimation

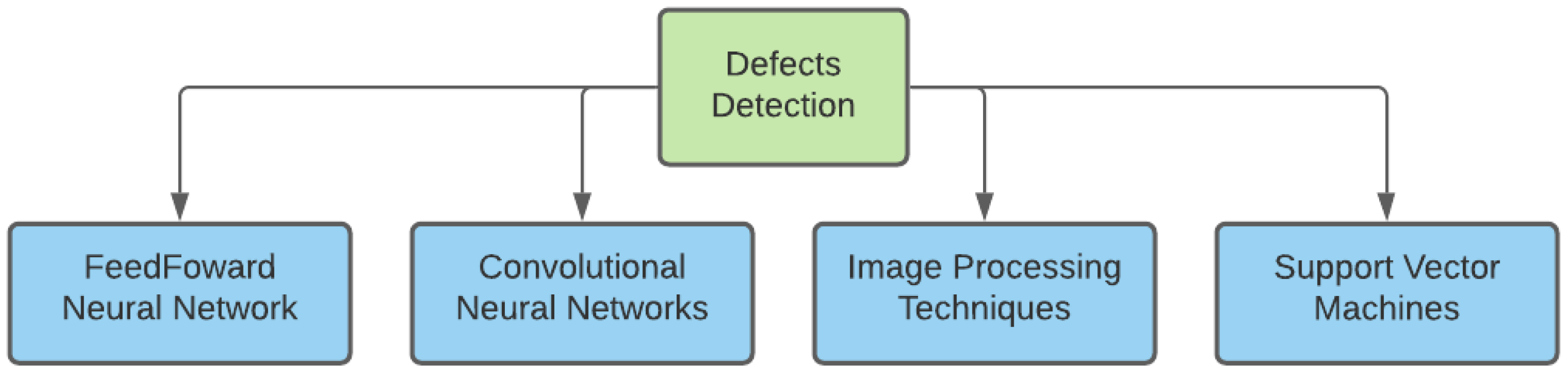

2.4. Defects Detection

| Type | Features | Accuracy | Dataset Size |
|---|---|---|---|
| Image Processing Techniques | Segmentation + binarization of the image + classification. [90] | From 80% to 99% | - |
| SVM + Image Processing Techniques | Images are preprocessed and features are extracted from them. These features are used in an SVM with penalty parameter weighting. [91] | 97% | 13,392 |
| SVM and CNN | Pretrained VGG19 using different feature descriptors. Similar results for both methods. [92] | 82.4% | 2624 |
| SVM and CNN | The CNN is composed of 2 layers using leaky ReLU. The SVM is trained with different features extracted from the images. Similar behavior in both models. [93] | 98% | 540 |
| SVM and CNN | The CNN is composed of 2 convolutional layers. SVM parameters are optimized by grid search. [95] | 96% | 2840 |
| CNN | Thirteen convolutional layers, an adaptation of VGG16. Uses oversampling and data augmentation. [94] | Uses a different measurement | 5400 |
| CNN | Multichannel CNN. Accepts inputs of different sizes. Improves the feature extraction of single-channel CNN. [96] | 96.76% | 8301 |
| CNN | Six convolutional layers. Regularization techniques such as batch optimization. [97] | 93% | 2624 |
| CNN | Fully Convolutional Neural Network. Pretrained U-Net, composed of 21 convolutional layers. [98] | Uses a different measurement | 542 |
| CNN | CNN aided by a Complementary Attention Network, composed of a channel-wise attention subnetwork connected with a spatial attention subnetwork. Usable with different CNNs. [100] | 99.17% | 2300 |
| WT + SVM and FFNN | Combines discrete WT and stationary WT to extract features, and SVM and FFNN to classify them. [99] | 93.6% | 2029 |
| CNN + SVM, KNN, etc. | Extracts features from different networks and combines them, using minimum redundancy and maximum relevance for feature selection. Uses ResNet-50, VGG-16, VGG-19 and DarkNet-19. [102] | 94.52% | 2624 |
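
The CNN-based detectors in the table are built from convolutional layers followed by an activation such as leaky ReLU. The sketch below shows that single building block on a tiny synthetic patch. The patch, the hand-written kernel and the slope `alpha` are hypothetical, whereas real networks learn their kernels from electroluminescence images. The operation is the cross-correlation that deep learning frameworks usually call convolution.

```python
def leaky_relu(x, alpha=0.1):
    """Pass positive values through, scale negative values by alpha."""
    return x if x > 0 else alpha * x

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation followed by leaky ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(leaky_relu(acc))
        out.append(row)
    return out

# Synthetic 4x5 patch: bright cell area (1.0) with a dark vertical line (0.0).
patch = [[1.0, 1.0, 0.0, 1.0, 1.0] for _ in range(4)]
# Hand-written kernel that responds strongly to a dark vertical line.
dark_line_kernel = [[1.0, -2.0, 1.0] for _ in range(3)]
feature_map = conv2d_valid(patch, dark_line_kernel)
```

The feature map peaks in the column aligned with the dark line and stays slightly negative elsewhere, which is the kind of localized response later layers combine into a defect decision.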

References

- Adib, R.; Zervos, A.; Eckhart, M.; David, M.E.A.; Kirsty, H.; Peter, H.; Governments, R.; Bariloche, F. Renewables 2021 Global Status Report. In REN21 Renewables Now; 2021; Available online: https://www.iea.org/reports/renewables-2021 (accessed on 10 September 2022).

- Kampa, M.; Castanas, E. Human health effects of air pollution. Environ. Pollut. 2008, 151, 362–367.

- Hersbach, H.; Bell, B.; Berrisford, P.; Hirahara, S.; Horányi, A.; Muñoz-Sabater, J.; Nicolas, J.; Peubey, C.; Radu, R.; Schepers, D.; et al. The ERA5 global reanalysis. Q. J. R. Meteorol. Soc. 2020, 146, 1999–2049.

- Eroğlu, H. Effects of Covid-19 outbreak on environment and renewable energy sector. Environ. Dev. Sustain. 2021, 23, 4782–4790.

- Danowitz, A. Solar Thermal vs. Photovoltaic. 2010. Available online: http://large.stanford.edu/courses/2010/ph240/danowitz2/ (accessed on 10 September 2022).

- GreenMatch. Differences Between Solar PV and Solar Thermal; GreenMatch: Copenhagen, Denmark, 2016; Available online: https://www.greenmatch.co.uk/blog/2015/04/solar-panels-vs-solar-thermal (accessed on 10 September 2022).

- Markvart, T.; Castañer, L. Principles of Solar Cell Operation. In Practical Handbook of Photovoltaics: Fundamentals and Applications; Elsevier: Amsterdam, The Netherlands, 2003; pp. 71–93.

- Satpathy, R.; Pamuru, V. Solar PV Power: Design, Manufacturing and Applications from Sand to Systems; Elsevier: Amsterdam, The Netherlands, 2020; pp. 1–493.

- Yetayew, T.T.; Workineh, T.G. A Comprehensive Review and Evaluation of Classical MPPT Techniques for a Photovoltaic System. In Advances of Science and Technology; Springer: Berlin/Heidelberg, Germany, 2021; Volume 384, pp. 259–272.

- Pallathadka, H.; Ramirez-Asis, E.H.; Loli-Poma, T.P.; Kaliyaperumal, K.; Ventayen, R.J.M.; Naved, M. Applications of artificial intelligence in business management, e-commerce and finance. Mater. Today Proc. 2021, in press.

- Mahmoud, A.M.A.; Mashaly, H.M.; Kandil, S.A.; Khashab, H.E.; Nashed, M.N.F. Fuzzy logic implementation for photovoltaic maximum power tracking. In Proceedings of the 26th Annual Conference of the IEEE-Industrial-Electronics-Society, Nagoya, Japan, 22–28 October 2000; pp. 735–740.

- Hui, J.; Sun, X. MPPT Strategy of PV System Based on Adaptive Fuzzy PID Algorithm. In Life System Modeling and Intelligent Computing Pt I, Proceedings of the International Conference on Life System Modeling and Simulation/International Conference on Intelligent Computing for Sustainable Energy and Environment, Wuxi, China, 17–20 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; Volume 97, pp. 220–228.

- Kottas, T.L.; Karlis, A.D.; Boutalis, Y.S. Fuzzy Cognitive Networks for Maximum Power Point Tracking in Photovoltaic Arrays. Fuzzy Cogn. Maps Adv. Theory Methodol. Tools Appl. 2010, 247, 231–257.

- Adly, M.; El-Sherif, H.; Ibrahim, M. Maximum Power Point Tracker for a PV Cell using a Fuzzy Agent adapted by the Fractional Open Circuit Voltage Technique. In Proceedings of the IEEE International Conference on Fuzzy Systems (FUZZ 2011), Taipei, Taiwan, 27–30 June 2011; pp. 1918–1922.

- Qiao, X.; Wu, B.; Deng, Z.; You, Y. MPPT of photovoltaic generation system using fuzzy/PID control. Electr. Power Autom. Equip. 2008, 28, 92–95.

- Altin, N. Interval type-2 fuzzy logic controller based Maximum Power Point tracking in photovoltaic systems. Adv. Electr. Comput. Eng. 2013, 13, 65–70.

- Kececioglu, O.F.; Gani, A.; Sekkeli, M. Design and Hardware Implementation Based on Hybrid Structure for MPPT of PV System Using an Interval Type-2 TSK Fuzzy Logic Controller. Energies 2020, 13, 1842.

- Altin, N. Single phase grid interactive PV system with MPPT capability based on type-2 fuzzy logic systems. In Proceedings of the International Conference on Renewable Energy Research and Applications, ICRERA 2012, Istanbul, Turkey, 18–21 September 2012.

- Verma, P.; Garg, R.; Mahajan, P. Asymmetrical interval type-2 fuzzy logic control based MPPT tuning for PV system under partial shading condition. ISA Trans. 2020, 100, 251–263.

- Allouche, M.; Dahech, K.; Chaabane, M. Multiobjective maximum power tracking control of photovoltaic systems: T-S fuzzy model-based approach. Soft Comput. 2018, 22, 2121–2132.

- Zayani, H.; Allouche, M.; Kharrat, M.; Chaabane, M. T-S fuzzy Maximum Power Point tracking control of photovoltaic conversion system. In Proceedings of the 16th International Conference on Sciences and Techniques of Automatic Control and Computer Engineering, STA 2015, Monastir, Tunisia, 21–23 December 2016; pp. 534–539.

- Dahmane, M.; Bosche, J.; El-Hajjaji, A.; Davarifar, M. T-S implementation of an MPPT algorithm for photovoltaic conversion system using poles placement and H performances. In Proceedings of the 3rd International Conference on Systems and Control, ICSC 2013, Algiers, Algeria, 29–31 October 2013; pp. 1116–1121.

- Azali, S.; Sheikhan, M. Intelligent control of photovoltaic system using BPSO-GSA-optimized neural network and fuzzy-based PID for Maximum Power Point tracking. Appl. Intell. 2016, 44, 88–110.

- Duman, S.; Yorukeren, N.; Altas, I.H. A novel MPPT algorithm based on optimized artificial neural network by using FPSOGSA for standalone photovoltaic energy systems. Neural Comput. Appl. 2018, 29, 257–278.

- Sarkar, R.; Kumar, J.R.; Sridhar, R.; Vidyasagar, S. A New Hybrid BAT-ANFIS-Based Power Tracking Technique for Partial Shaded Photovoltaic Systems. Int. J. Fuzzy Syst. 2021, 23, 1313–1325.

- Naveen; Dahiya, A.K. Implementation and Comparison of Perturb Observe, ANN and ANFIS Based MPPT Techniques. In Proceedings of the International Conference on Inventive Research in Computing Applications, ICIRCA 2018, Coimbatore, India, 11–12 July 2018; pp. 1–5.

- Arora, A.; Gaur, P. Comparison of ANN and ANFIS based MPPT Controller for grid connected PV systems. In Proceedings of the 12th IEEE International Conference Electronics, Energy, Environment, Communication, Computer, Control: (E3-C3), INDICON 2015, New Delhi, India, 17–20 December 2016.

- Padmanaban, S.; Priyadarshi, N.; Bhaskar, M.S.; Holm-Nielsen, J.B.; Ramachandaramurthy, V.K.; Hossain, E. A Hybrid ANFIS-ABC Based MPPT Controller for PV System with Anti-Islanding Grid Protection: Experimental Realization. IEEE Access 2019, 7, 103377–103389.

- Kulaksiz, A.A.; Akkaya, R. Training data optimization for ANNs using genetic algorithms to enhance MPPT efficiency of a stand-alone PV system. Turk. J. Electr. Eng. Comput. Sci. 2012, 20, 241–254.

- Kulaksiz, A.A.; Akkaya, R. A genetic algorithm optimized ANN-based MPPT algorithm for a stand-alone PV system with induction motor drive. Sol. Energy 2012, 86, 2366–2375.

- Larbes, C.; Cheikh, S.M.A.; Obeidi, T.; Zerguerras, A. Genetic algorithms optimized fuzzy logic control for the maximum power point tracking in photovoltaic system. Renew. Energy 2009, 34, 2093–2100.

- Azab, M. Optimal power point tracking for stand-alone PV system using particle swarm optimization. In Proceedings of the IEEE International Symposium on Industrial Electronics, Bari, Italy, 4–7 July 2010; pp. 969–973.

- Alshareef, M.; Lin, Z.; Ma, M.; Cao, W. Accelerated Particle Swarm Optimization for Photovoltaic Maximum Power Point Tracking under Partial Shading Conditions. Energies 2019, 12, 623.

- Sundareswaran, K.; Peddapati, S.; Palani, S. MPPT of PV systems under partial shaded conditions through a colony of flashing fireflies. IEEE Trans. Energy Convers. 2014, 29, 463–472.

- Benyoucef, A.S.; Chouder, A.; Kara, K.; Silvestre, S.; Sahed, O.A. Artificial bee colony based algorithm for Maximum Power Point tracking (MPPT) for PV systems operating under partial shaded conditions. Appl. Soft Comput. J. 2015, 32, 38–48.

- Bilal, B. Implementation of Artificial Bee Colony algorithm on Maximum Power Point Tracking for PV modules. In Proceedings of the 8th International Symposium on Advanced Topics in Electrical Engineering, ATEE 2013, Bucharest, Romania, 23–25 May 2013.

- Oshaba, A.S.; Ali, E.S.; Elazim, S.M.A. PI controller design using ABC algorithm for MPPT of PV system supplying DC motor pump load. Neural Comput. Appl. 2017, 28, 353–364.

- Moreira, H.S.; Silva, J.L.D.S.; Prym, G.C.; Sakô, E.Y.; dos Reis, M.V.G.; Villalva, M.G. Comparison of Swarm Optimization Methods for MPPT in Partially Shaded Photovoltaic Systems. In Proceedings of the 2nd International Conference on Smart Energy Systems and Technologies (SEST), Porto, Portugal, 9–11 September 2019.

- Habibi, M.; Yazdizadeh, A. New MPPT Controller Design for PV Arrays Using Neural Networks (Zanjan City Case Study). In Proceedings of the 6th International Symposium on Neural Networks, Wuhan, China, 26–29 May 2009; Volume 5552, pp. 1050–1058.

- Veligorskyi, O.; Chakirov, R.; Vagapov, Y. Artificial neural network-based Maximum Power Point tracker for the photovoltaic application. In Proceedings of the 2015 1st International Conference on Industrial Networks and Intelligent Systems, INISCom 2015, Tokyo, Japan, 2–4 March 2015; pp. 133–138.

- Farayola, A.M.; Hasan, A.N.; Ali, A. Efficient photovoltaic mppt system using coarse gaussian support vector machine and artificial neural network techniques. Int. J. Innov. Comput. Inf. Control. 2018, 14, 323–339.

- Al-Majidi, S.D.; Abbod, M.F.; Al-Raweshidy, H.S. A particle swarm optimisation-trained feedforward neural network for predicting the Maximum Power Point of a photovoltaic array. Eng. Appl. Artif. Intell. 2020, 92, 103688.

- Babes, B.; Boutaghane, A.; Hamouda, N. A novel nature-inspired Maximum Power Point tracking (MPPT) controller based on ACO-ANN algorithm for photovoltaic (PV) system fed arc welding machines. Neural Comput. Appl. 2021, 34, 299–317.

- Pachaivannan, N.; Subburam, R.; Padmanaban, M.; Subramanian, A. Certain investigations of ANFIS assisted CPHO algorithm tuned MPPT controller for PV arrays under partial shading conditions. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 9923–9938.

- Mlakic, D.; Nikolovski, S. ANFIS as a method for determinating MPPT in the photovoltaic system simulated in MATLAB/Simulink. In Proceedings of the 39th International Convention on Information and Communication Technology, Electronics and Microelectronics, MIPRO 2016, Opatija, Croatia, 30 May–3 June 2016; pp. 1082–1086.

- Avila, L.; Paula, M.D.; Trimboli, M.; Carlucho, I. Deep reinforcement learning approach for MPPT control of partially shaded PV systems in Smart Grids. Appl. Soft Comput. 2020, 97, 106711.

- Leone, R.D.; Pietrini, M.; Giovannelli, A. Photovoltaic energy production forecast using support vector regression. Neural Comput. Appl. 2015, 26, 1955–1962.

- Kecman, V. Support Vector Machines—An Introduction; Springer: Berlin/Heidelberg, Germany, 2005; Volume 177, p. 605.

- Nageem, R.; Jayabarathi, R. Predicting the Power Output of a Grid-Connected Solar Panel Using Multi-Input Support Vector Regression. Procedia Comput. Sci. 2017, 115, 723–730.

- Senjyu, T.; Takara, H.; Uezato, K.; Funabashi, T. One-hour-ahead load forecasting using neural network. IEEE Trans. Power Syst. 2002, 17, 113–118.

- Caputo, D.; Grimaccia, F.; Mussetta, M.; Zich, R.E. Photovoltaic Plants Predictive Model by means of ANN trained by a Hybrid Evolutionary Algorithm. In Proceedings of the World Congress on Computational Intelligence (WCCI 2010), Barcelona, Spain, 18–23 July 2010.

- Gandelli, A.; Grimaccia, F.; Mussetta, M.; Pirinoli, P.; Zich, R.E. Development and validation of different hybridization strategies between GA and PSO. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, CEC 2007, Singapore, 25–28 September 2007; pp. 2782–2787.

- Rana, M.; Koprinska, I.; Agelidis, V.G. Forecasting solar power generated by grid connected PV systems using ensembles of neural networks. In Proceedings of the International Joint Conference on Neural Networks, Killarney, Ireland, 12–17 July 2015.

- Ehsan, R.M.; Simon, S.P.; Venkateswaran, P.R. Day-ahead forecasting of solar photovoltaic output power using multilayer perceptron. Neural Comput. Appl. 2016, 28, 3981–3992.

- Johansson, E.; Dowla, F.; Goodman, D. Backpropagation Learning for Multilayer Feed-Forward Neural Networks Using the Conjugate Gradient Method. Int. J. Neural Syst. 2011, 2, 291–301.

- NeuroDimension, Inc. Neurosolutions. Available online: http://www.neurosolutions.com/neurosolutions/ (accessed on 10 September 2022).

- Li, Z.; Zang, C.; Zeng, P.; Yu, H.; Li, H. Day-ahead Hourly Photovoltaic Generation Forecasting using Extreme Learning Machine. In Proceedings of the IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Shenyang, China, 8–12 June 2015; pp. 779–783.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: A new learning scheme of feedforward neural networks. In Proceedings of the IEEE International Conference on Neural Networks—Conference Proceedings, Budapest, Hungary, 25–29 July 2004; Volume 2, pp. 985–990.

- Mandal, P.; Madhira, S.T.S.; Haque, A.U.; Meng, J.; Pineda, R.L. Forecasting power output of solar photovoltaic system using wavelet transform and artificial intelligence techniques. Procedia Comput. Sci. 2012, 12, 332–337.

- Mallat, S.G. A Theory for Multiresolution Signal Decomposition: The Wavelet Representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

- Park, J.; Sandberg, I.W. Approximation and Radial-Basis-Function Networks. Neural Comput. 1993, 5, 305–316.

- Capizzi, G.; Napoli, C.; Bonanno, F. Innovative second-generation wavelets construction with recurrent neural networks for solar radiation forecasting. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1805–1815.

- Wen, Y.; AlHakeem, D.; Mandal, P.; Chakraborty, S.; Wu, Y.K.; Senjyu, T.; Paudyal, S.; Tseng, T.L. Performance Evaluation of Probabilistic Methods Based on Bootstrap and Quantile Regression to Quantify PV Power Point Forecast Uncertainty. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 1134–1144.

- Alzahrani, A.; Shamsi, P.; Dagli, C.; Ferdowsi, M. Solar Irradiance Forecasting Using Deep Neural Networks. Procedia Comput. Sci. 2017, 114, 304–313.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Abdel-Nasser, M.; Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput. Appl. 2019, 31, 2727–2740.

- Yao, X.; Wang, Z.; Zhang, H. A novel photovoltaic power forecasting model based on echo state network. Neurocomputing 2019, 325, 182–189.

- Gallicchio, C.; Micheli, A. Deep Echo State Network (DeepESN): A Brief Survey. arXiv 2017, arXiv:1712.04323.

- Hinton, G.E. A Practical Guide to Training Restricted Boltzmann Machines. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2012; Volume 7700, pp. 599–619.

- Jolliffe, I.T. Principal Component Analysis; Springer: Berlin/Heidelberg, Germany, 2002.

- Davidon, W. Variable Metric Method for Minimization; Technical Report; Argonne National Laboratory (ANL): Lemont, IL, USA, 1959.

- Niu, D.; Wang, K.; Sun, L.; Wu, J.; Xu, X. Short-term photovoltaic power generation forecasting based on random forest feature selection and CEEMD: A case study. Appl. Soft Comput. J. 2020, 93, 106389.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Behzadian, M.; Otaghsara, S.K.; Yazdani, M.; Ignatius, J. A state-of the-art survey of TOPSIS applications. Expert Syst. Appl. 2012, 39, 13051–13069.

- Yeh, J.R.; Shieh, J.S.; Huang, N.E. Complementary ensemble empirical mode decomposition: A novel noise enhanced data analysis method. Adv. Adapt. Data Anal. 2011, 2, 135–156.

- Li, H.; Tan, Q. A BP neural network based on improved particle swarm optimization and its application in reliability forecasting. Res. J. Appl. Sci. Eng. Technol. 2013, 6, 1246–1251.

- Jiao, B.; Lian, Z.; Gu, X. A dynamic inertia weight particle swarm optimization algorithm. Chaos Solitons Fractals 2008, 37, 698–705.

- Quan, D.M.; Ogliari, E.; Grimaccia, F.; Leva, S.; Mussetta, M. Hybrid model for hourly forecast of photovoltaic and wind power. IEEE Int. Conf. Fuzzy Syst. 2013.

- Grimaccia, F.; Mussetta, M.; Zich, R.E. Genetical swarm optimization: Self-adaptive hybrid evolutionary algorithm for electromagnetics. IEEE Trans. Antennas Propag. 2007, 55, 781–785.

- Koprinska, I.; Wu, D.; Wang, Z. Convolutional Neural Networks for Energy Time Series Forecasting. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018.

- Torres, J.F.; Troncoso, A.; Koprinska, I.; Wang, Z.; Martínez-Álvarez, F. Big data solar power forecasting based on deep learning and multiple data sources. Expert Syst. 2019, 36, e12394.

- Zhang, T.; Lv, C.; Ma, F.; Zhao, K.; Wang, H.; O’Hare, G.M. A photovoltaic power forecasting model based on dendritic neuron networks with the aid of wavelet transform. Neurocomputing 2020, 397, 438–446.

- Jiang, T.; Wang, D.; Ji, J.; Todo, Y.; Gao, S. Single dendritic neuron with nonlinear computation capacity: A case study on XOR problem. In Proceedings of the 2015 IEEE International Conference on Progress in Informatics and Computing, PIC 2015, Nanjing, China, 18–20 December 2016; pp. 20–24.

- Wolff, B.; Lorenz, E.; Kramer, O. Statistical learning for short-term photovoltaic power predictions. Stud. Comput. Intell. 2016, 645, 31–45.

- Siddiqui, M.U.; Abido, M. Parameter estimation for five- and seven-parameter photovoltaic electrical models using evolutionary algorithms. Appl. Soft Comput. J. 2013, 13, 4608–4621.

- Goldberg, D.E. Genetic Algorithms in Search, Optimization and Machine Learning, 1st ed.; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1989.

- Clerc, M. Particle Swarm Optimization; Wiley: Hoboken, NJ, USA, 2010.

- Opara, K.R.; Arabas, J. Differential Evolution: A survey of theoretical analyses. Swarm Evol. Comput. 2019, 44, 546–558.

- Glover, F.; Laguna, M. Tabu Search; Springer: New York, NY, USA, 1997.

- Nian, B.; Fu, Z.; Wang, L.; Cao, X. Automatic detection of defects in solar modules: Image processing in detecting. In Proceedings of the 6th International Conference on Wireless Communications, Networking and Mobile Computing, WiCOM 2010, Chengdu, China, 23–25 September 2010.

- Anwar, S.A.; Abdullah, M.Z. Micro-crack detection of multicrystalline solar cells featuring shape analysis and support vector machines. In Proceedings of the 2012 IEEE International Conference on Control System, Computing and Engineering, ICCSCE 2012, Penang, Malaysia, 23–25 November 2012; pp. 143–148.

- Deitsch, S.; Buerhop-Lutz, C.; Sovetkin, E.; Steland, A.; Maier, A.; Gallwitz, F.; Riess, C. Segmentation of Photovoltaic Module Cells in Uncalibrated Electroluminescence Images. Mach. Vis. Appl. 2018, 32, 84.

- Karimi, A.M.; Fada, J.S.; Liu, J.; Braid, J.L.; Koyuturk, M.; French, R.H. Feature Extraction, Supervised and Unsupervised Machine Learning Classification of PV Cell Electroluminescence Images. In Proceedings of the IEEE 7th World Conference on Photovoltaic Energy Conversion, WCPEC 2018—A Joint Conference of 45th IEEE PVSC, 28th PVSEC and 34th EU PVSEC, 2018, Waikoloa, HI, USA, 10–15 June 2018; pp. 418–424.

- Bartler, A.; Mauch, L.; Yang, B.; Reuter, M.; Stoicescu, L. Automated detection of solar cell defects with deep learning. In Proceedings of the 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 2035–2039.

- Karimi, A.M.; Fada, J.S.; Hossain, M.A.; Yang, S.; Peshek, T.J.; Braid, J.L.; French, R.H. Automated Pipeline for Photovoltaic Module Electroluminescence Image Processing and Degradation Feature Classification. IEEE J. Photovoltaics 2019, 9, 1324–1335.

- Ying, Z.; Li, M.; Tong, W.; Haiyong, C. Automatic Detection of Photovoltaic Module Cells using Multi-Channel Convolutional Neural Network. In Proceedings of the 2018 Chinese Automation Congress, CAC 2018, Xi’an, China, 30 November–2 December 2018; pp. 3571–3576.

- Akram, M.W.; Li, G.; Jin, Y.; Chen, X.; Zhu, C.; Zhao, X.; Khaliq, A.; Faheem, M.; Ahmad, A. CNN based automatic detection of photovoltaic cell defects in electroluminescence images. Energy 2019, 189, 116319.

- Balzategui, J.; Eciolaza, L.; Arana-Arexolaleiba, N. Defect detection on Polycrystalline solar cells using Electroluminescence and Fully Convolutional Neural Networks. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration, SII 2020, Honolulu, HI, USA, 12–15 January 2020; pp. 949–953.

- Mathias, N.; Shaikh, F.; Thakur, C.; Shetty, S.; Dumane, P.; Chavan, D.S. Detection of Micro-Cracks in Electroluminescence Images of Photovoltaic Modules. SSRN Electron. J. 2020.

- Su, B.; Chen, H.; Chen, P.; Bian, G.; Liu, K.; Liu, W. Deep Learning-Based Solar-Cell Manufacturing Defect Detection with Complementary Attention Network. IEEE Trans. Ind. Inform. 2021, 17, 4084–4095.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- Demirci, M.Y.; Beşli, N.; Gümüşçü, A. Efficient deep feature extraction and classification for identifying defective photovoltaic module cells in Electroluminescence images. Expert Syst. Appl. 2021, 175, 114810.