


Justaniah, E.; Aldabbagh, G.; Alhothali, A. Breast Density and Pre-Trained Convolutional Neural Network. Encyclopedia, 19 June 2022. Available online: https://encyclopedia.pub/entry/24186 (accessed on 15 January 2026).


Breast density describes the amount of fibrous and glandular tissue in a breast compared with the amount of fatty tissue. In the mammogram report, breast density is assigned to one of four classes based on the American College of Radiology (ACR) Breast Imaging Reporting and Data System (BI-RADS) standard. Convolutional neural networks (CNNs) are a type of artificial neural network commonly used for classification and computer vision tasks, which makes them effective tools for medical image classification.

breast density

breast cancer

Convolutional Neural Network (CNN)

1. Breast Density

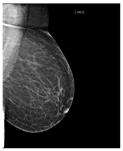

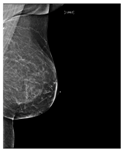

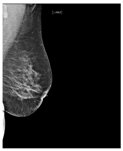

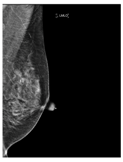

Breast density describes the amount of fibrous and glandular tissue in a breast compared with the amount of fatty tissue. In the mammogram report, breast density is assigned to one of four classes based on the ACR BI-RADS standard. In class A, the breasts are almost entirely fatty. In class B, there are scattered areas of dense tissue within the breasts. In class C, the breasts are heterogeneously dense. Finally, in class D, the breasts are extremely dense [1][2]. Breast density plays a significant role both in detecting breast cancer and in the risk of developing it. Clinicians must assess breast density on each patient's mammogram and record it in their reports. Dense breasts (i.e., those in class C or D) are usually more likely to be affected by breast cancer [3]. In [4], the researchers studied the relationship between mammographic density and breast cancer risk. The proportion of cancer-positive cases in each ACR class was as follows: D (13.7%), C (3.3%), B (2.7%), and A (2.2%). Table 1 summarizes the differences between the ACR classes.

Table 1. Breast density classes.

| ACR Class | Feature | Tissue Proportion | Example |
|---|---|---|---|
| A | Fatty | Less than 25% dense tissue |  |
| B | Fibro-glandular | 25–50% dense tissue |  |
| C | Heterogeneously dense | 50–75% dense tissue |  |
| D | Extremely dense | More than 75% dense tissue |  |
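To make the ranges in Table 1 concrete, the following minimal Python sketch maps a dense-tissue percentage to an ACR class and to the dense/non-dense super-class (classes C and D count as dense). The function names `acr_class` and `is_dense` are hypothetical, and treating each range as including its lower bound (so exactly 50% maps to class C) is an assumption, because the table does not say how boundary values are assigned. In clinical practice the class is assigned visually by a radiologist rather than computed from a measured percentage.

```python
def acr_class(dense_percent: float) -> str:
    """Map a dense-tissue percentage (0-100) to an ACR BI-RADS class per Table 1.

    Assumption: each range includes its lower bound, e.g. exactly 50% -> "C".
    """
    if not 0 <= dense_percent <= 100:
        raise ValueError("dense_percent must lie between 0 and 100")
    if dense_percent < 25:
        return "A"  # almost entirely fatty
    if dense_percent < 50:
        return "B"  # scattered areas of dense tissue
    if dense_percent < 75:
        return "C"  # heterogeneously dense
    return "D"      # extremely dense


def is_dense(acr: str) -> bool:
    """Dense/non-dense super-class: classes C and D count as dense."""
    return acr in ("C", "D")


# Usage with illustrative percentages (not patient data).
for pct in (10, 30, 60, 90):
    cls = acr_class(pct)
    print(pct, cls, "dense" if is_dense(cls) else "non-dense")
```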

Breast density is important and must be specified in breast screening reports. However, determining it can be challenging and is largely subjective, especially when the breast tissue has been affected by treatment such as chemotherapy [5].

2. Convolutional Neural Network (CNN)

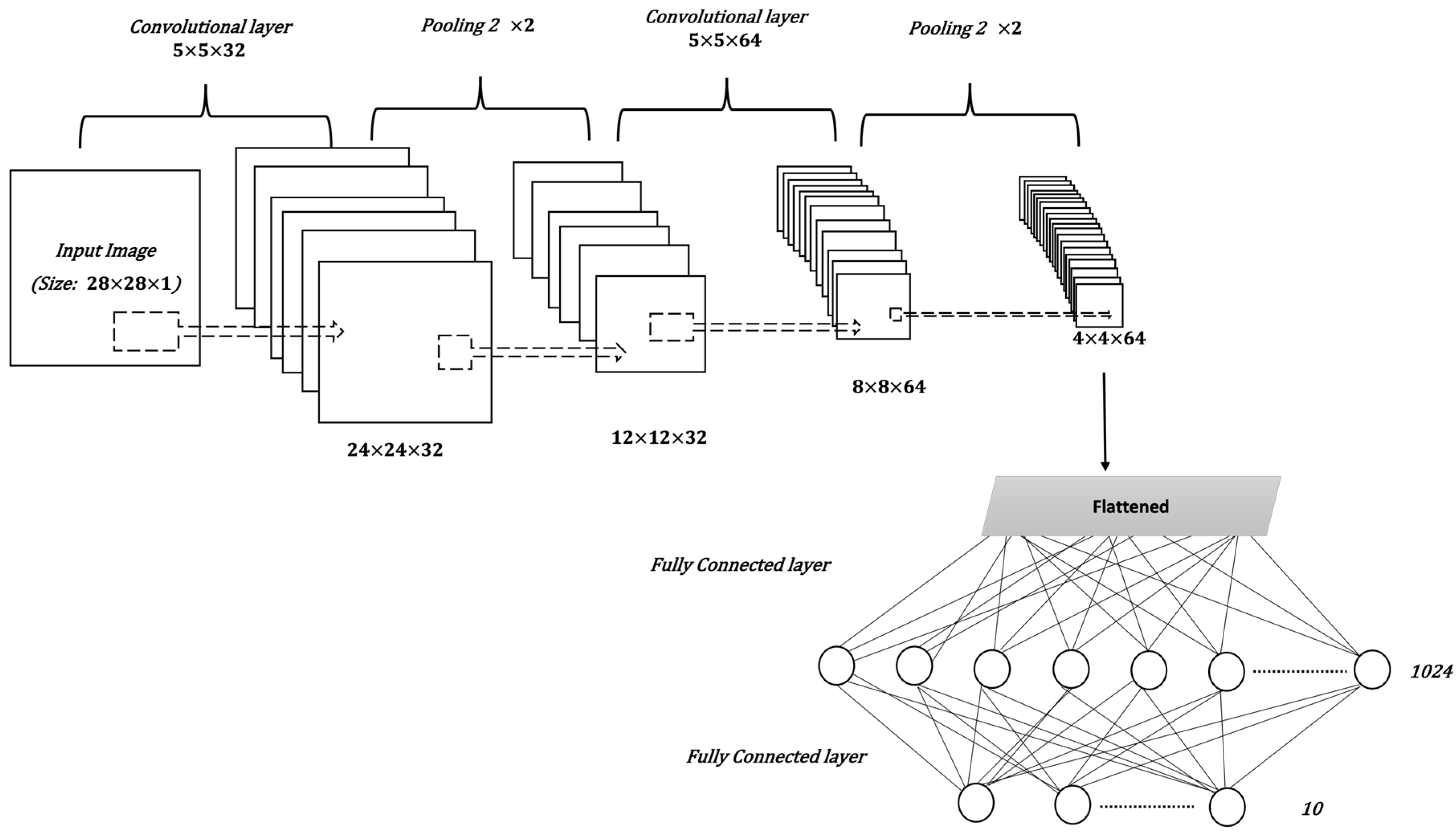

CNNs are a type of artificial neural network commonly used for classification and computer vision tasks, which makes them effective tools for medical image classification. In addition to the input and output layers, CNNs include three main types of layers: convolutional, pooling, and fully connected. The convolutional layer is the main building block of a CNN; it involves the input data, a filter (kernel), and a feature map, which is produced by sliding the filter over the input. The pooling (down-sampling) layer reduces the spatial size of its input and thereby the number of parameters in later layers. In the fully connected layer, the neurons apply a linear transformation to the input vector through a weight matrix [6]. Figure 1 illustrates the general structure of a CNN, assuming a 28 × 28 input image and a target task of classifying images into one of 10 classes [7].
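The three layer types can be illustrated with a dependency-free Python sketch of a single forward pass: one 3 × 3 filter applied to a 28 × 28 input, a ReLU activation, 2 × 2 max pooling, and a fully connected layer producing 10 outputs, matching the input size and class count assumed for Figure 1. The filter values, weights, and synthetic input are hypothetical; a real CNN learns many filters and weights during training. As in most deep learning libraries, the "convolution" here is computed as a cross-correlation (the filter is not flipped).

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding) to build a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """2x2 max pooling with stride 2: keeps the largest value in each window,
    halving both spatial dimensions (an odd last row/column is dropped)."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


def flatten(fmap: Matrix) -> List[float]:
    """Concatenate the rows of a feature map into one vector."""
    return [v for row in fmap for v in row]


def dense(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer: y = W x + b, one weight row per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


# Synthetic 28x28 "image" and a hypothetical vertical-edge filter.
image = [[((i * j) % 7) / 6.0 for j in range(28)] for i in range(28)]
kernel = [[1.0, 0.0, -1.0], [1.0, 0.0, -1.0], [1.0, 0.0, -1.0]]

feature_map = relu(conv2d_valid(image, kernel))        # 26 x 26
pooled = max_pool2x2(feature_map)                      # 13 x 13
features = flatten(pooled)                             # 169 values
weights = [[0.01] * len(features) for _ in range(10)]  # hypothetical, untrained
logits = dense(features, weights, [0.0] * 10)          # one score per class
print(len(feature_map), len(pooled), len(features), len(logits))  # 26 13 169 10
```

Deeper CNNs repeat the convolution and pooling stages several times before the fully connected layers, and usually apply a softmax to the final scores to obtain class probabilities.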

Figure 1. The general structure of a CNN.

The performance of a CNN model depends on many factors, including the number of layers, the layer parameters, and other hyperparameters such as the optimizer and the loss function. The loss function measures the difference between the predicted value and the actual value. An optimizer is an algorithm that updates the weights of the deep learning (DL) model (and, in adaptive methods, the effective per-parameter learning rate) to reduce the loss and thereby increase accuracy. For classification problems with more than two classes, categorical cross-entropy (categorical_crossentropy in Keras) is the usual choice of loss function [8]. Root mean square propagation (RMSProp) and adaptive moment estimation (Adam) are among the most commonly used optimizers [9][10].
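The loss and the two optimizers named above can be sketched in plain Python for a single scalar weight. This is an illustrative sketch, not the Keras implementation: the function names are hypothetical, the default hyperparameters are chosen to match the Keras defaults, and library implementations may differ in details such as where the small constant `eps` enters the update. The four-class example treats the density classes A to D as indices 0 to 3.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot target and predicted class probabilities."""
    # Clip probabilities away from 0 and 1 so that log() stays finite.
    return -sum(t * math.log(min(max(p, eps), 1.0 - eps))
                for t, p in zip(y_true, y_pred))


def rmsprop_step(w, grad, state, lr=0.001, rho=0.9, eps=1e-7):
    """One RMSProp update: divide the step by a running RMS of past gradients."""
    state["v"] = rho * state.get("v", 0.0) + (1.0 - rho) * grad ** 2
    return w - lr * grad / (math.sqrt(state["v"]) + eps)


def adam_step(w, grad, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update: momentum (first moment) plus RMSProp-style scaling
    (second moment), both bias-corrected for the early steps."""
    state["t"] = state.get("t", 0) + 1
    state["m"] = beta1 * state.get("m", 0.0) + (1.0 - beta1) * grad
    state["v"] = beta2 * state.get("v", 0.0) + (1.0 - beta2) * grad ** 2
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)


# Loss for a class-C mammogram (one-hot [0, 0, 1, 0]) and illustrative predictions.
loss = categorical_crossentropy([0, 0, 1, 0], [0.1, 0.2, 0.6, 0.1])
print(round(loss, 4))  # 0.5108, i.e. -ln(0.6)

# Minimize the toy loss f(w) = (w - 3)^2, whose gradient is 2 * (w - 3), with Adam.
w, state = 0.0, {}
for _ in range(100):
    w = adam_step(w, 2.0 * (w - 3.0), state, lr=0.1)
print(round(w, 2))  # moves toward the minimum at w = 3
```

Only the predicted probability of the true class contributes to the loss, so a confident correct prediction drives it toward zero. Each optimizer keeps its running averages in `state`, which is why one state object is needed per trainable weight.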

3. Transfer Learning and Pre-Trained CNNs

Transfer learning reuses the knowledge gained from a previous task in a new deep learning model. Deep learning usually requires a large amount of data to achieve good results, yet it is often difficult to gather enough data, especially in the medical field. Transfer learning therefore improves the learning process when the dataset has limited samples [11]. A pre-trained model is a model that was created and trained to solve a problem related to the target task. For example, for flower image classification, researchers can use VGG19, a CNN pre-trained on the huge ImageNet image dataset [12]. Table 2 presents the main information about the pre-trained CNNs used in this research [13][14]: the model name, the number of layers, the top-1 accuracy of the model in classifying ImageNet data, and the year the model was established. Top-1 accuracy checks whether the class with the highest predicted probability matches the target label [15]. All of the models were trained on ImageNet, a large dataset of 14,197,122 annotated images belonging to more than 1000 categories [16].
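Top-1 accuracy as defined above can be computed with a few lines of Python. This sketch assumes each prediction is a list of class probabilities and each label is a class index (here 0 to 3 for the density classes A to D); the function name and the example numbers are illustrative only. Ties are resolved in favour of the lowest class index, which is one possible convention.

```python
def top1_accuracy(probabilities, labels):
    """Fraction of samples whose highest-probability class equals the target label."""
    if not labels or len(probabilities) != len(labels):
        raise ValueError("need one prediction per label and at least one label")
    correct = 0
    for probs, label in zip(probabilities, labels):
        # argmax: index of the largest probability (the first one wins on ties)
        predicted = max(range(len(probs)), key=probs.__getitem__)
        correct += predicted == label
    return correct / len(labels)


# Illustrative predictions for four mammograms over the classes A-D (indices 0-3).
probs = [
    [0.70, 0.10, 0.10, 0.10],  # predicted A
    [0.20, 0.50, 0.20, 0.10],  # predicted B
    [0.10, 0.30, 0.40, 0.20],  # predicted C
    [0.30, 0.30, 0.20, 0.20],  # tie between A and B -> A
]
labels = [0, 1, 3, 0]  # true classes: A, B, D, A
print(top1_accuracy(probs, labels))  # 0.75
```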

Table 2. Pre-trained CNNs.

| CNN Model | Model Name Origin | Number of Layers | Top-1 Accuracy | Year Established/Updated |
|---|---|---|---|---|
| VGG16 | Visual Geometry Group | 16 | 71.3% | 2015 |
| VGG19 | Visual Geometry Group | 19 | 71.3% | 2015 |
| ResNet50V2 | Residual Neural Network | 103 | 76.0% | 2016 |
| InceptionV3 | Inception | 189 | 77.9% | 2016 |
| Xception | Extreme Inception | 81 | 79.0% | 2017 |
| InceptionResNetV2 | Inception-Residual Neural Network | 449 | 80.3% | 2017 |
| DenseNet121 | Densely Connected Convolutional Networks | 242 | 75.0% | 2017 |
| MobileNetV2 | MobileNet | 105 | 71.3% | 2018 |
| EfficientNetB0 | EfficientNet | 132 | 77.1% | 2019 |

4. Related Works

Recently, many studies have sought to develop deep learning models to assess breast density. Some of these studies target two classes of density (fatty or dense), while others classify the breast as fatty, glandular, or dense. However, most studies classify breast density into the four classes of the BI-RADS system. Only recent works on ACR classification are discussed here, as it is the standard in medical reporting.

In [17], the researchers proposed a breast density classification model based on convolutional neural networks (CNNs). They applied two techniques to 200,000 breast screenings: a baseline and a deep convolutional neural network (deep CNN). The baseline used pixel intensity histograms of each screening as input features and softmax regression as the classifier. In the deep CNN, the inputs were the four screening views, the fully connected layer consisted of 1024 hidden units, and the output layer used the softmax activation function. Additionally, the network parameters were initialized with the weights of a model previously trained for breast cancer detection. Both techniques were evaluated by the area under the ROC curve (AUC), the accuracy over the super-classes (dense or non-dense), and the ACR accuracy. For the baseline with 20 histogram bins, AUC = 0.832, ACR accuracy = 67.9%, and super-class accuracy = 81.1%. For the deep CNN, AUC = 0.916, ACR accuracy = 76.7%, and super-class accuracy = 86.5%.

In [18], another CNN was applied to the MIAS dataset to classify breast density. Several preprocessing techniques were used, including pectoral muscle segmentation, image augmentation, and image resizing. The CNN consists of three convolutional layers, followed by two fully connected layers and, finally, the output layer. The dataset was split into 80% for training and 20% for testing before five-fold cross-validation was applied.
The overall accuracy of ACR classification was 83.6%.

In [19], CNNs were combined with a squeeze-and-excitation network (SE-Net) mechanism to classify breast density from mammograms. The three CNN models used with SE-Net were Inception-V4, ResNeXt, and DenseNet, and 10-fold cross-validation was used to obtain more reliable results. The dataset consisted of 18,157 images. Preprocessing entailed removing the background, grayscale transformation, augmentation by cropping and rotating images, and normalizing the images to a normal distribution. The classification accuracy was measured for each model with and without SE-Attention: 89.97% and 92.17% for Inception-V4 and Inception-V4-SE-Attention, 89.64% and 91.57% for ResNeXt50 and ResNeXt50-SE-Attention, and 89.20% and 91.79% for DenseNet121 and DenseNet121-SE-Attention, respectively.

In [20], a fine-tuned model based on InceptionV3 was used to classify breast density. The dataset consists of 3813 mammogram screenings, and the model achieved a BI-RADS classification accuracy of 83.33% on 150 screenings.

In [21], a deep learning model based on VGG16 was proposed to predict the breast density class. The central idea of this work is to compute the amount of fibroglandular tissue in each image. The dataset consists of 1602 images, 70% of which were used for training and 30% for testing. The accuracy of the model was 79.6%.

In [22], a deep CNN based on ResNet-18 was trained on 41,479 digital screening mammograms and tested on 8677 mammograms. The accuracy was 86.88% for dense versus non-dense classification and 76.78% for classification into the four BI-RADS categories.

The researchers in [23] used another deep learning-based approach for fully automated breast density classification.
This approach comprised three main stages. In the first stage, the breast area is isolated from the mammogram by removing the background and pectoral muscles. In the second stage, a binary mask of the dense tissue is created by a conditional generative adversarial network (cGAN). In the third stage, breast density is classified by feeding the binary mask into a multi-class CNN. The INbreast dataset was used for training and testing, and the overall density classification accuracy was 98.75%.

In [24], a range of deep CNN architectures with different numbers of filters, layers, dropout rates, and epochs were evaluated. The database included 20,578 images, which were reduced to 12,932 images to avoid over-representing ACR densities B and C and then divided into 70% for training and 30% for testing. The chosen CNN architecture consists of 13 convolutional layers followed by max-pooling and dropout at a rate of 50%. The number of epochs was 120 and the patch size was 40. Performance was measured separately for the mediolateral oblique (MLO) and craniocaudal (CC) views, with ACR classification accuracies of 90.9% and 90.1%, respectively.

Researchers in [25] proposed a multi-path deep convolutional neural network (multi-path DCNN) to classify breast density. The proposed DCNN takes four inputs: subsamples of digital mammograms, the largest square region of interest, a mask of the dense area, and the percentage of breast density. Ten-fold cross-validation resulted in an overall accuracy of 80.7%.

In [26][27], a residual neural network was used to classify breast density into two classes (fatty and dense) and into the BI-RADS categories. The dataset collected in this work consists of 7848 images. After excluding badly exposed images and cases involving only one breast, the number of images was reduced to 1962. The proposed model consists of 41 convolutional layers.
The model was tested with different image sizes, and 250 × 250 gave the highest accuracy for BI-RADS classification. The accuracy obtained for two-class and BI-RADS classification was 86.3% and 76.0%, respectively.

In [28], the researchers proposed an artificial neural network called DualViewNet to classify breast density. The model is based on MobileNetV2 and performs a joint classification of the MLO and CC mammograms of the same breast. The authors used CBIS-DDSM, applied image enhancement and image augmentation during preprocessing, and excluded images with suspect labels. Performance was measured by the AUC, which reached 0.9882.

In [29], the researchers collected a dataset of 108,230 images from 33 different clinics to test deep learning for breast density classification on a large, multi-institutional mammogram dataset. They used VGG16, ResNet, InceptionV3, and DenseNet121. The overall accuracy of the model was 66.7%.

Researchers in [30] employed federated learning (FL) to classify breast density across seven clinical institutions. They applied a pre-trained model based on DenseNet121, achieving an overall accuracy of 77%.

In [31], several residual networks, including ResNet43, ResNet50, and ResNet101, were used to classify breast density. The models were applied to a clinical dataset of 1985 mammograms, in addition to the INbreast dataset.
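Several of the studies above report results under a train/test split (e.g., 80/20 or 70/30) or k-fold cross-validation (five-fold in [18], ten-fold in [19] and [25]). The following Python sketch shows one generic way to produce such splits over sample indices; it is not the exact protocol of any cited study. The function names are hypothetical, and the sketch neither stratifies by density class nor groups images by patient, both of which a real mammography experiment may need in order to avoid biased or leaked evaluation.

```python
import random


def holdout_split(indices, test_fraction, seed=0):
    """Shuffle sample indices reproducibly and split them into (train, test)."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def k_fold(indices, k):
    """Yield (train, validation) pairs; every sample is validated exactly once."""
    indices = list(indices)
    base, extra = divmod(len(indices), k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder over early folds
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


# Usage: 100 hypothetical images, 20% held out for testing, five-fold CV on the rest.
train, test = holdout_split(range(100), test_fraction=0.2)
folds = list(k_fold(train, k=5))
print(len(train), len(test), [len(val) for _, val in folds])  # 80 20 [16, 16, 16, 16, 16]
```

The model is trained k times, once per fold, and the k validation scores are averaged; the held-out test set is used only once, for the final evaluation.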

References

- Kerlikowske, K.; Miglioretti, D.L.; Vachon, C.M. Discussions of Dense Breasts, Breast Cancer Risk, and Screening Choices in 2019. JAMA 2019, 322, 69–70.

- Centers for Disease Control and Prevention. What Does It Mean to Have Dense Breasts? 2020. Available online: https://www.cdc.gov/cancer/breast/basic_info/dense-breasts.htm (accessed on 16 March 2021).

- Daniaux, M.; Gruber, L.; Santner, W.; Czech, T.; Knapp, R. Interval breast cancer: Analysis of occurrence, subtypes and implications for breast cancer screening in a model region. Eur. J. Radiol. 2021, 143, 109905.

- Ali, E.A.; Raafat, M. Relationship of mammographic densities to breast cancer risk. Egypt. J. Radiol. Nucl. Med. 2021, 52, 1–5.

- Mistry, K.A.; Thakur, M.H.; Kembhavi, S.A. The effect of chemotherapy on the mammographic appearance of breast cancer and correlation with histopathology. Br. J. Radiol. 2016, 89, 20150479.

- IBM Cloud Education. Convolutional Neural Networks. 2020. Available online: https://www.ibm.com/cloud/learn/convolutional-neural-networks (accessed on 22 April 2022).

- Raschka, S.; Mirjalili, V. Python Machine Learning; Packt: Birmingham, UK, 2017.

- Brownlee, J. How to Choose Loss Functions When Training Deep Learning Neural Networks. 2020. Available online: https://machinelearningmastery.com/how-to-choose-loss-functions-when-training-deep-learning-neural-networks/ (accessed on 12 January 2022).

- Gupta, A. A Comprehensive Guide on Deep Learning Optimizers. 2021. Available online: https://www.analyticsvidhya.com/blog/2021/10/a-comprehensive-guide-on-deep-learning-optimizers/ (accessed on 16 December 2021).

- Hamdia, K.M.; Ghasemi, H.; Bazi, Y.; AlHichri, H.; Alajlan, N.; Rabczuk, T. A novel deep learning based method for the computational material design of flexoelectric nanostructures with topology optimization. Finite Elem. Anal. Des. 2019, 165, 21–30.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Wu, Y.; Qin, X.; Pan, Y.; Yuan, C. Convolution neural network based transfer learning for classification of flowers. In Proceedings of the 2018 IEEE 3rd international conference on signal and image processing (ICSIP), Shenzhen, China, 13–15 July 2018; pp. 562–566.

- Tsochatzidis, L.; Costaridou, L.; Pratikakis, I. Deep learning for breast cancer diagnosis from mammograms—A comparative study. J. Imaging 2019, 5, 37.

- Keras Applications. 2022. Available online: https://keras.io/api/applications/ (accessed on 16 November 2021).

- Dang, A.T. Accuracy and Loss: Things to Know about The Top 1 and Top 5 Accuracy. 2021. Available online: https://towardsdatascience.com/accuracy-and-loss-things-to-know-about-the-top-1-and-top-5-accuracy-1d6beb8f6df3 (accessed on 20 May 2021).

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Wu, N.; Geras, K.J.; Shen, Y.; Su, J.; Kim, S.G.; Kim, E.; Wolfson, S.; Moy, L.; Cho, K. Breast density classification with deep convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 6682–6686.

- Shi, P.; Wu, C.; Zhong, J.; Wang, H. Deep Learning from Small Dataset for BI-RADS Density Classification of Mammography Images. In Proceedings of the 2019 10th International Conference on Information Technology in Medicine and Education (ITME), Qingdao, China, 23–25 August 2019; pp. 102–109.

- Deng, J.; Ma, Y.; Li, D.a.; Zhao, J.; Liu, Y.; Zhang, H. Classification of breast density categories based on SE-Attention neural networks. Comput. Methods Programs Biomed. 2020, 193, 105489.

- Gandomkar, Z.; Suleiman, M.E.; Demchig, D.; Brennan, P.C.; McEntee, M.F. BI-RADS density categorization using deep neural networks. In Medical Imaging 2019: Image Perception, Observer Performance, and Technology Assessment; SPIE: San Diego, CA, USA, 2019; Volume 10952, pp. 149–155.

- Tardy, M.; Scheffer, B.; Mateus, D. Breast Density Quantification Using Weakly Annotated Dataset. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 1087–1091.

- Lehman, C.D.; Yala, A.; Schuster, T.; Dontchos, B.; Bahl, M.; Swanson, K.; Barzilay, R. Mammographic breast density assessment using deep learning: Clinical implementation. Radiology 2019, 290, 52–58.

- Saffari, N.; Rashwan, H.A.; Abdel-Nasser, M.; Kumar Singh, V.; Arenas, M.; Mangina, E.; Herrera, B.; Puig, D. Fully Automated Breast Density Segmentation and Classification Using Deep Learning. Diagnostics 2020, 10, 988.

- Ciritsis, A.; Rossi, C.; Vittoria De Martini, I.; Eberhard, M.; Marcon, M.; Becker, A.S.; Berger, N.; Boss, A. Determination of mammographic breast density using a deep convolutional neural network. Br. J. Radiol. 2019, 92, 20180691.

- Ma, X.; Fisher, C.; Wei, J.; Helvie, M.A.; Chan, H.P.; Zhou, C.; Hadjiiski, L.; Lu, Y. Multi-path deep learning model for automated mammographic density categorization. In Medical Imaging 2019: Computer-Aided Diagnosis; SPIE: San Diego, CA, USA, 2019; Volume 10950, pp. 621–626.

- Lizzi, F.; Laruina, F.; Oliva, P.; Retico, A.; Fantacci, M.E. Residual convolutional neural networks to automatically extract significant breast density features. In International Conference on Computer Analysis of Images and Patterns; Springer: Berlin/Heidelberg, Germany, 2019; pp. 28–35.

- Lizzi, F.; Atzori, S.; Aringhieri, G.; Bosco, P.; Marini, C.; Retico, A.; Traino, A.C.; Caramella, D.; Fantacci, M.E. Residual Convolutional Neural Networks for Breast Density Classification. In BIOINFORMATICS; SciTePress: Setúbal, Portugal, 2019; pp. 258–263.

- Cogan, T.; Tamil, L. Deep Understanding of Breast Density Classification. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 1140–1143.

- Chang, K.; Beers, A.L.; Brink, L.; Patel, J.B.; Singh, P.; Arun, N.T.; Hoebel, K.V.; Gaw, N.; Shah, M.; Pisano, E.D.; et al. Multi-institutional assessment and crowdsourcing evaluation of deep learning for automated classification of breast density. J. Am. Coll. Radiol. 2020, 17, 1653–1662.

- Roth, H.R.; Chang, K.; Singh, P.; Neumark, N.; Li, W.; Gupta, V.; Gupta, S.; Qu, L.; Ihsani, A.; Bizzo, B.C.; et al. Federated learning for breast density classification: A real-world implementation. In Domain Adaptation and Representation Transfer, and Distributed and Collaborative Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 181–191.

- Li, C.; Xu, J.; Liu, Q.; Zhou, Y.; Mou, L.; Pu, Z.; Xia, Y.; Zheng, H.; Wang, S. Multi-view mammographic density classification by dilated and attention-guided residual learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 18, 1003–1013.


Information

Subjects: Computer Science, Artificial Intelligence; Others


Update Date: 21 Jun 2022
