Recently, many studies have sought to develop deep learning models to assess breast density. Some of these studies target two classes of density (fatty or dense), while others classify the breast as fatty, glandular, or dense. However, most studies classify breast density into four classes according to the BI-RADS system. Here, we mention only recent works related to ACR classification, as it is a standard in medical reporting. In

[32][17], the

resea

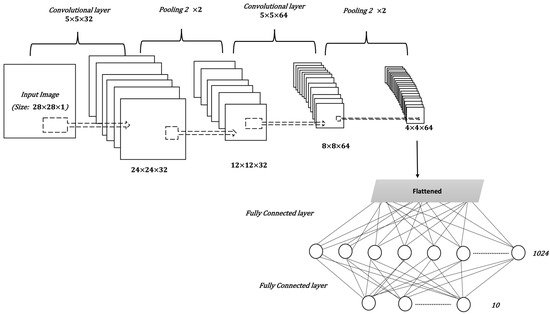

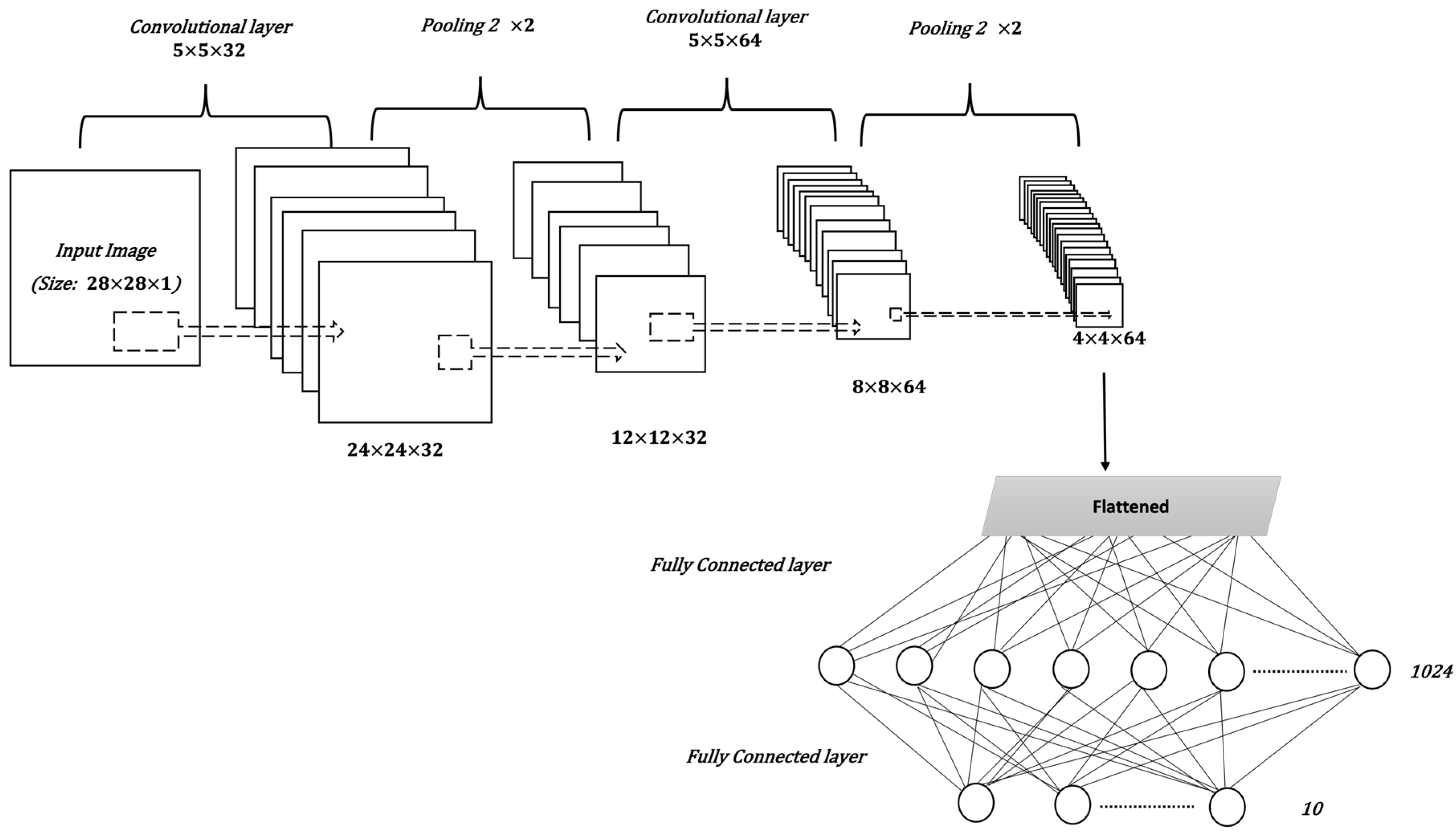

uthorchers proposed a breast density classification model based on convolutional neural networks (CNNs). They applied two techniques to 200,000 breast screenings. They called the first technique a baseline and the second a deep convolutional neural network (deep CNN). In the baseline, they used pixel intensity histograms of screening as input features. Then, softmax regression was used as a classifier. In deep CNN, the inputs were the four screening views, while the fully connected layer consisted of 1024 hidden units and the output layer used the softmax activation function. Additionally, they used the weights of the previously trained model of breast cancer detection to initialize the parameters of their network. Both techniques were measured by computing the area under the ROC curve (AUC), the accuracy of super-classes (dense or non-dense), and the ACR accuracy. For the baseline with 20 bins, AUC = 0.832, ACR accuracy = 67.9%, and super-classes accuracy = 81.1%. For deep CNN, AUC = 0.916, ACR accuracy = 76.7%, and super-classes accuracy = 86.5%. Another CNN was then applied to the MAIS dataset to classify breast density in

[33][18]. Different preprocessing techniques were used, including pectoral muscle segmentation, image augmentation, and image resizing. The CNN consists of three convolutional layers, followed by two fully connected layers, and, finally, the output layer. The dataset was divided, with 20% used for testing and 80% for training, before five-fold cross-validation was applied. The overall accuracy of ACR classification was 83.6%. Moreover, in

[34][19], CNNs were combined with a squeeze-and-excitation network (SE-Net) attention mechanism to classify breast density from mammograms. The three CNN models used with SE-Net were Inception-V4, ResNeXt, and DenseNet, and 10-fold cross-validation was used. The dataset consisted of 18,157 images. The preprocessing entailed removing the background, grayscale transformation, augmentation by cropping and rotating images, and normalizing the images to a normal distribution. The classification accuracy was measured for each model with and without SE-Attention. The accuracy of Inception-V4 and Inception-V4-SE-Attention was 89.97% and 92.17%, respectively, while for ResNeXt50 and ResNeXt50-SE-Attention, the accuracy was 89.64% and 91.57%, respectively. Finally, the accuracy of DenseNet121 was 89.20%, and for DenseNet121-SE-Attention it was 91.79%. Furthermore, in

[35][20], a fine-tuned model based on InceptionV3 was used to classify breast density. The dataset consists of 3813 mammogram screenings. The accuracy obtained by the model for the BI-RADS classification based on 150 screenings was 83.33%. Meanwhile, in

[36][21], a deep learning model based on VGG16 was proposed to predict the breast density class. The central idea of this work is to compute the amount of fibroglandular tissue in each image. The dataset consists of 1602 images, 70% of which were used for training and 30% for testing. The accuracy of the model was 79.6%. In

[37][22], a deep CNN based on ResNet-18 was applied. The experiment was performed on a dataset with 41,479 digital screening mammograms for training and 8677 mammograms for testing. The accuracy of dense or non-dense classification was 86.88%. On the other hand, the accuracy of classification into the four BI-RADS categories was 76.78%. The

authors in

[38][23] used another deep learning-based approach for fully automated breast density classification. This approach comprised three main stages. In the first stage, the breast area is isolated from the mammogram by removing the background and pectoral muscles. In the second stage, a binary mask containing the dense tissue is created by a conditional generative adversarial network (cGAN). Then, in the third stage, breast density is classified by feeding the binary mask into a multi-class CNN. The INbreast dataset was used for training and testing. The overall accuracy of density classification was 98.75%. In

[39][24], a range of deep CNN architectures with different numbers of filters, layers, dropout rates, and epochs were evaluated. The database used included 20,578 images, which were then reduced to 12,932 images to avoid over-representing ACR densities B and C, before being divided into 70% for training and 30% for testing. The chosen CNN architecture consists of 13 convolutional layers followed by max-pooling and dropout at a rate of 50%. The number of epochs was 120 and the patch size was 40. The performance was measured for MLO and CC views separately and the ACR classification accuracy was 90.9% and 90.1%, respectively.
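
Several of the studies above report two figures side by side: a four-class ACR accuracy and a dense/non-dense (super-class) accuracy. The relationship between the two can be illustrated with a minimal sketch. The mapping of categories A and B to non-dense and C and D to dense is an assumption made here for illustration, the function names are hypothetical, and the labels are toy values rather than data from any cited study.

```python
from typing import Sequence

# Assumed mapping from the four ACR/BI-RADS density categories to the two
# super-classes: A and B count as non-dense, C and D as dense.
SUPER_CLASS = {"A": "non-dense", "B": "non-dense", "C": "dense", "D": "dense"}


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of positions where the predicted label equals the true label."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def super_class_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Accuracy after collapsing each ACR label to dense or non-dense."""
    return accuracy(
        [SUPER_CLASS[t] for t in y_true],
        [SUPER_CLASS[p] for p in y_pred],
    )


# Toy labels, invented purely for illustration (not from any cited study).
y_true = ["A", "B", "B", "C", "C", "D", "A", "C"]
y_pred = ["B", "B", "C", "C", "D", "D", "A", "B"]

acr_acc = accuracy(y_true, y_pred)
binary_acc = super_class_accuracy(y_true, y_pred)
print(f"ACR accuracy: {acr_acc:.3f}")
print(f"dense/non-dense accuracy: {binary_acc:.3f}")
```

Because every correct four-class prediction stays correct after the labels are collapsed, the super-class accuracy can never be lower than the ACR accuracy on the same predictions, which is consistent with the pairs of figures reported above.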

The authors in

[40][25], meanwhile, proposed a multi-path deep convolutional neural network (multi-path DCNN) to classify breast density. The proposed DCNN takes four inputs: subsamples of digital mammograms, the largest square region of interest, a mask of the dense area, and the percentage of breast density. They used ten-fold cross-validation, resulting in an overall accuracy of 80.7%. In

[41,42][26][27], a residual neural network was used to classify breast density both into two classes (fatty and dense) and according to the BI-RADS classification. The dataset collected in this work consists of 7848 images. After excluding badly exposed images and cases involving one breast, the total number of images was reduced to 1962. The proposed model consists of 41 convolutional layers. The model was tested with different image sizes and the size

250×250