
| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Filippo Sanfilippo | -- | 3362 | 2022-04-23 00:04:22 |
| 2 | Amina Yu | -33 word(s) | 3329 | 2022-04-24 04:40:33 |


Cite


Sanfilippo, F.; Blažauskas, T.; Gionata, S.; Ramos, I.; Vert, S.; Radianti, J.; Majchrzak, T.A.; Oliveira, D. Integrating VR/AR with Haptics into STEM Education. Encyclopedia. Available online: https://encyclopedia.pub/entry/22189 (accessed on 03 January 2026).

Sanfilippo F, Blažauskas T, Gionata S, Ramos I, Vert S, Radianti J, et al. Integrating VR/AR with Haptics into STEM Education. Encyclopedia. Available at: https://encyclopedia.pub/entry/22189. Accessed January 03, 2026.

Sanfilippo, Filippo, Tomas Blažauskas, Salvietti Gionata, Isabel Ramos, Silviu Vert, Jaziar Radianti, Tim A. Majchrzak, Daniel Oliveira. "Integrating VR/AR with Haptics into STEM Education" Encyclopedia, https://encyclopedia.pub/entry/22189 (accessed January 03, 2026).

Sanfilippo, F., Blažauskas, T., Gionata, S., Ramos, I., Vert, S., Radianti, J., Majchrzak, T.A., & Oliveira, D. (2022, April 22). Integrating VR/AR with Haptics into STEM Education. In Encyclopedia. https://encyclopedia.pub/entry/22189

Sanfilippo, Filippo, et al. "Integrating VR/AR with Haptics into STEM Education." Encyclopedia. Web. 22 April, 2022.


Some concepts in science, technology, engineering, and mathematics (STEM) fields may be too difficult to understand using traditional pedagogies, namely addressing the relevant topics in lectures and tutorials. Haptic feedback, also known as haptics, is the use of the sense of touch in a human–computer interface. The use of haptics makes a variety of applications possible, including expanding human abilities: increasing physical strength, improving manual dexterity, augmenting the senses and, most fascinating, projecting human users into remote or virtual environments.

Keywords: VR; AR; haptics; STEM; education

1. VR Technology

There are many definitions of virtual reality (VR). VR is investigated from different perspectives, such as technology, interaction, immersion, semantics and philosophy [1]. All of these perspectives are important when discussing applications of VR in learning. For example, Mütterlein [2] discusses the three pillars of VR, namely immersion, presence and interactivity, and investigates how they are interrelated. When VR solutions are applied in learning, the technology should support these three pillars as well as address multiple sensory perception channels to enable multi-sensory learning [3].

Immersive VR devices seek to place the user in a virtual environment. The best-studied solutions are Cave Automatic Virtual Environment (CAVE) systems and head-mounted displays (HMDs). Early HMD systems aimed at accurate and fast tracking of head rotation. Solving the motion-to-photon (end-to-end) latency problem [4] was necessary because high latency prevents full immersion: people can sense the delay and, with it, the artificial nature of the environment. Nowadays this problem is largely solved, but some low-cost solutions, such as Google Cardboard, still cannot provide full immersion; even worse, response lags and jittery movements may lead to motion sickness [5]. Motion sickness can also affect users of high-end HMDs if these do not cater for individual needs [6].
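To make the latency discussion concrete, the following minimal sketch treats motion-to-photon latency as the sum of the delays of successive pipeline stages and compares the total with a budget. The stage names, the example values and the 20 ms default budget are assumptions for illustration (20 ms is a commonly quoted comfort target, not a figure from this entry); actual latency has to be measured on the device, as done in [4].

```python
"""Sketch: a motion-to-photon latency budget for a hypothetical HMD pipeline."""


def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one display refresh in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def motion_to_photon_ms(stages_ms: dict[str, float]) -> float:
    """End-to-end latency, modelled as the sum of all stage delays (ms)."""
    if any(delay < 0 for delay in stages_ms.values()):
        raise ValueError("stage delays must be non-negative")
    return sum(stages_ms.values())


def within_budget(stages_ms: dict[str, float], budget_ms: float = 20.0) -> bool:
    """True if the summed latency does not exceed the (assumed) budget."""
    return motion_to_photon_ms(stages_ms) <= budget_ms


if __name__ == "__main__":
    refresh = 90.0  # Hz, illustrative
    pipeline = {
        "tracking_sample": 2.0,                  # assumed sensor sampling + transfer
        "simulation": 3.0,                       # assumed application update
        "render": frame_time_ms(refresh),        # one frame of rendering (~11.1 ms)
        "scan_out": frame_time_ms(refresh) / 2,  # assumed average display scan-out
    }
    total = motion_to_photon_ms(pipeline)
    print(f"{total:.1f} ms, within budget: {within_budget(pipeline)}")
```

With these illustrative 90 Hz values, the total (about 21.7 ms) slightly exceeds the assumed 20 ms budget, showing how quickly individual stage delays add up.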

A further step towards greater immersiveness is allowing six degrees of freedom (6DoF) of movement, which requires tracking not only rotation but also translation. Two approaches are in use: outside-in and inside-out tracking. Outside-in tracking uses external devices (for example, the external cameras of the Oculus Rift [6]) to track head motion within a tracked area. Inside-out tracking uses cameras mounted on the HMD itself to track movements.
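The difference between rotation-only (3DoF) and 6DoF tracking can be illustrated by how a tracked pose is applied. In the minimal sketch below, a pose is a unit quaternion (rotation) plus a translation, and it maps a point from the headset's local frame into world coordinates; a 3DoF tracker would update only the quaternion. The class and function names, the y-up axis convention and the choice of -z as "forward" are assumptions.

```python
"""Sketch: applying a 6DoF head pose (rotation + translation) to a point."""
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Vector cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


@dataclass(frozen=True)
class Pose6DoF:
    position: Vec3                                   # translation (m), world frame
    orientation: tuple[float, float, float, float]  # unit quaternion (w, x, y, z)

    def rotate(self, v: Vec3) -> Vec3:
        """Rotate v by the quaternion: v' = v + 2w(q x v) + 2 q x (q x v)."""
        w, x, y, z = self.orientation
        q = (x, y, z)
        t = cross(q, v)
        u = cross(q, t)
        return tuple(v[i] + 2 * w * t[i] + 2 * u[i] for i in range(3))

    def to_world(self, p_local: Vec3) -> Vec3:
        """Map a point from the headset frame to the world frame."""
        r = self.rotate(p_local)
        return tuple(r[i] + self.position[i] for i in range(3))


def yaw_quaternion(angle_rad: float) -> tuple[float, float, float, float]:
    """Unit quaternion for a rotation about the vertical (y) axis."""
    half = angle_rad / 2
    return (math.cos(half), 0.0, math.sin(half), 0.0)


if __name__ == "__main__":
    # Head 1.7 m above the floor, turned 90 degrees to the left (about +y).
    head = Pose6DoF(position=(0.0, 1.7, 0.0), orientation=yaw_quaternion(math.pi / 2))
    # A point 1 m in front of the user ends up 1 m to the world's -x side.
    print(head.to_world((0.0, 0.0, -1.0)))
```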

Immersiveness is further enhanced by tracking body parts and even external devices (for example, pens, guns or sports equipment). This is relevant for learning applications that follow a learning-by-doing approach. Such tracking relies on additional devices and is mainly supported by outside-in systems. Inside-out systems can track palms and fingers using infrared cameras, but their use cases are limited in comparison with outside-in tracking systems. The additional trackers used in outside-in systems come in various sizes and forms. For example, Logitech's VR Ink stylus [7] has the form of an ordinary pen. This device, or similar ones, could be used for activities that require precision. Usage may span a wide range; a pen-like instrument could be used for virtual activities such as cutting tissue in a surgical procedure or learning to solder.

VR technology enables educators to provide comprehensive assistance, because tracked student activities can be used to give feedback in real time or for briefing and debriefing purposes. Some recently introduced VR systems (for example, HTC VIVE Pro Eye [8], HoloLens [9], …) also include eye-tracking capabilities. Eye tracking makes it possible to automatically adjust the interpupillary distance (IPD) and to track the user's gaze [10]. Gaze tracking is used in many areas and may be important for learning applications as well [11].
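As a simple illustration of how eye-tracking data can inform IPD adjustment, the sketch below estimates the IPD as the Euclidean distance between the tracked left and right pupil centres, taking the median over several samples and skipping invalid ones. The sample structure, the validity flag and the millimetre units are hypothetical; commercial eye-tracking SDKs expose their own data formats.

```python
"""Sketch: estimating interpupillary distance (IPD) from eye-tracker samples."""
import math
from dataclasses import dataclass
from statistics import median


@dataclass
class EyeSample:
    left_pupil_mm: tuple[float, float, float]   # pupil centre in headset frame (mm)
    right_pupil_mm: tuple[float, float, float]
    valid: bool = True                           # hypothetical tracker validity flag


def ipd_mm(samples: list[EyeSample]) -> float:
    """Median distance between pupil centres over all valid samples.

    The median limits the influence of occasional glitches (e.g. during blinks).
    """
    distances = [math.dist(s.left_pupil_mm, s.right_pupil_mm)
                 for s in samples if s.valid]
    if not distances:
        raise ValueError("no valid samples")
    return median(distances)
```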

Providing haptic feedback in VR to make the experience more realistic has become a strong focus of research in recent years [12]. Haptic feedback has been shown to add value by extending immersiveness and adding a further sensory dimension [13]. Haptic experiences in VR, however, remain a challenge [14]. Currently available VR systems are mostly commercial applications and games that rely mainly on hardware input. For example, the HaptX Gloves provide true-contact haptics [15], with 133 points of tactile feedback per hand. Dexmo, a hand haptic device for VR medical education by Dexta Robotics [16], is one of the few existing haptic VR learning systems. It uses force feedback to let users feel the size and shape of virtual objects, and it captures 11 degrees of freedom (DoF) of the user's hand motion. However, most existing high-fidelity haptic rendering devices are still relatively costly. For this reason, a few frameworks exist to facilitate the integration of haptics into different applications. For instance, Interhaptics provides hand interactions and haptic feedback integration for VR, mobile and console applications [17].

2. AR Technology

AR superimposes virtual information on the user's view of the surrounding environment in such a way that this information seems naturally part of the real environment [18]. The main advantage over VR is that "AR connects users to the people, locations and objects around them, rather than cutting them off from the surrounding environment" [19]. This property has great potential in education, as demonstrated by an increasing number of studies [20][21][22].

While the performance of AR technology has increased steadily over time, the main hardware components have stayed the same: sensors, processors and displays. The role of the sensors is to provide information for tracking and registration. This is mainly achieved through an optical camera, with or without the help of sensors such as the Global Positioning System (GPS), accelerometers and gyroscopes. Optical tracking is categorised as marker-based, in which a static image (such as a quick response (QR) code) is recognised, or marker-less, in which natural features of the surrounding environment are recognised. Other forms of tracking exist but are much less common, such as acoustic, electromagnetic or mechanical tracking [23].
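Tracking often combines the camera with inertial sensors. One standard way of fusing a gyroscope and an accelerometer is a complementary filter, sketched below for a single tilt (pitch) angle: the integrated gyroscope rate is smooth but drifts, while the accelerometer's gravity reading is noisy but drift-free. This is a generic illustration under an assumed axis convention (gravity along +z when the device lies flat), not the algorithm used by any particular AR SDK; the blending factor `alpha` is an illustrative parameter.

```python
"""Sketch: complementary filter fusing gyroscope and accelerometer for tilt."""
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (rad) implied by the gravity vector measured by the accelerometer."""
    return math.atan2(-ax, math.hypot(ay, az))


class ComplementaryFilter:
    """Blend integrated gyro rate with accelerometer tilt; alpha near 1 trusts the gyro."""

    def __init__(self, alpha: float = 0.98, initial_pitch: float = 0.0):
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha must be in [0, 1]")
        self.alpha = alpha
        self.pitch = initial_pitch

    def update(self, gyro_rate: float, accel: tuple[float, float, float],
               dt: float) -> float:
        """gyro_rate: angular velocity about the pitch axis (rad/s); dt in seconds."""
        gyro_estimate = self.pitch + gyro_rate * dt           # short-term: integrate
        accel_estimate = accel_pitch(*accel)                   # long-term: gravity
        self.pitch = self.alpha * gyro_estimate + (1 - self.alpha) * accel_estimate
        return self.pitch
```

With a stationary device and a constant gyroscope bias, pure integration would drift without bound, whereas the filtered estimate settles at a small constant offset of alpha·bias·dt/(1 − alpha).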

Displays are the most prominent part of the hardware and the most impactful for the end user. They are usually visual, in the form of head-mounted displays (HMDs), handheld displays (HHDs) or spatial AR (SAR) [24]. Less common forms of display are tactile, audio and olfactory. Notably, audio displays are still much more common than tactile and olfactory displays, as there are already consumer devices specifically aimed at audio AR [25]. Input devices, ranging from keyboards to voice input, are often considered a separate category.

Nowadays, due to their ubiquity and versatility, handheld devices in the form of smartphones have become the main vehicle for AR experiences in many fields, including education [26]. While smartphone hardware has not changed fundamentally in recent years (although it has continued to advance gradually), software evolution has been much more prominent (see, for example, the work presented in [27]).

Regarding AR software, besides the low-level software that drives sensors, processors and displays, higher-level software enables creators to design different AR experiences. Depending on the creator's technical skills and on business or educational needs, one can use AR software development kits (SDKs) such as Vuforia, Wikitude, ARKit or ARCore, or all-in-one platforms such as CoSpaces Edu or EON Reality, which do not require programming skills.

A search of the scientific literature revealed no consistent body of existing research on exploiting AR in STEM education for fully immersive remote laboratory learning. Most surveys cover the whole spectrum of target groups, from early childhood education to doctoral education. In general, AR is known to increase understanding of the learning content, especially of spatial structure and function, compared with other forms of media such as books or video; to aid long-term memory retention, compared with non-AR experiences; to improve physical task performance as well as collaboration; and to increase student motivation by making activities satisfying and fun [28]. Use cases for education might be transferable from non-educational, professional AR usage, such as applications that counter information overload [29].

Regarding how AR supports STEM education, Ibáñez and Delgado-Kloos [26] showed that the majority of the developed applications were exploration apps and simulation tools. Most were self-developed native applications, while the others were built with AR development tools. Furthermore, the vast majority were marker-based and only a few were location-based. These applications almost exclusively stimulate sight, leaving other senses unexplored. The review also examined which learning outcomes were measured and how, concluding that a deeper understanding of how AR learning experiences take place in STEM environments is still missing [26].

A systematic review of AR in STEM education [30] recognises intensive attention to this area in recent years, although research still mainly addresses early childhood education. The authors categorised the advantages of applying AR in STEM as contributions to the learner, educational outcomes, student interaction and others. However, they also identified challenges. Most of these are due to technical problems (for example, weak detection of markers or of the GPS position). Other challenges include teachers' resistance to adopting AR technology, in which the long time required to develop high-quality content plays an important role [30].

In contrast to VR, which has fairly established devices both in the low-cost, low-performance segment (Google Cardboard and similar) and in the high-cost, high-performance segment (for example, Oculus Quest 2 or HTC Vive Pro 2), AR can rely only on the first type. These are smartphones, which can be considered zero-cost, since the vast majority of users already own one, and medium-performance, since big players (Google, Apple) have put a lot of effort into developing high-performing AR on these devices. Their disadvantage, however, for example in virtual labs in STEM education, is that at least one of the student's hands is busy holding the smartphone, so haptic interaction is limited.

More appropriate and powerful AR devices, namely AR glasses, have yet to become mainstream. Google's AR glasses, launched for the general public in 2013, were retired quickly, in 2015, and are now produced only for the enterprise domain. Apple's AR glasses have been rumoured for several years but have not yet been released. Other brands of glasses, such as Moverio or Magic Leap One, have failed to become mainstream (at least in the sense that Oculus or HTC Vive are in the VR world).

In the area of mixed reality (MR), a form of AR in which the user's interaction with virtual objects is more profound [31], by far the best-known devices are Microsoft's HoloLens headsets. These high-cost, high-performance devices have so far set the standard for mixed reality technology.

3. Haptic Technology

Touch is one of the most reliable and robust senses, and it is fundamental to human memory and to discerning the surrounding environment. In fact, touch provides more certainty than other senses, especially vision. Haptic technology can be employed to provide the user with tactile information. Haptic feedback, also known as haptics, is the use of the sense of touch in a human–computer interface. The use of haptics makes a variety of applications possible, including expanding human abilities [32]: increasing physical strength, improving manual dexterity, augmenting the senses and, most fascinating, projecting human users into remote or virtual environments. Haptic technology is the key to achieving the tactile feedback experience of the VAKT (visual, auditory, kinaesthetic and tactile) model.

Early examples of haptic technology used to give users a "touch" experience through the sensation of forces, vibration or motion can be found in [33][34].

Most of the haptic devices available on the market, such as the sigma.x, omega.x and delta.x series (Force Dimension, Switzerland) or the Phantom Premium (3D Systems Inc., USA) [35], are usually very accurate from a rendering perspective and able to provide a wide range of forces. However, such devices have a limited workspace and a high production cost. The pursuit of larger workspaces and of multi-contact interaction [36] led researchers to design and develop exoskeletons, a type of haptic interface grounded to the body [37]. Exoskeletons can be seen as wearable haptic systems; however, they are rather cumbersome and usually heavy to carry, which reduces their applicability and effectiveness.
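Grounded force-feedback devices such as those listed above typically render contact with a virtual object by computing a force from how far the end-effector has penetrated the object. The classic textbook example is a virtual wall modelled as a spring-damper, sketched below along one axis. The stiffness, damping and force-limit values are illustrative assumptions, not specifications of any device mentioned here; real haptic loops typically run at rates on the order of 1 kHz and need careful tuning to remain stable.

```python
"""Sketch: one-dimensional virtual wall rendered as a spring-damper."""


def wall_force(x: float, v: float, wall_x: float = 0.0,
               stiffness: float = 1000.0, damping: float = 5.0,
               max_force: float = 8.0) -> float:
    """Force (N) pushing the end-effector out of a wall occupying x < wall_x.

    x: end-effector position (m); v: its velocity (m/s).
    stiffness (N/m), damping (N*s/m) and max_force (N) are illustrative values.
    """
    penetration = wall_x - x
    if penetration <= 0.0:
        return 0.0                                  # free space: no force
    force = stiffness * penetration - damping * v   # spring pushes out, damper resists motion
    force = max(force, 0.0)                         # a wall can push but never pull
    return min(force, max_force)                    # respect the device's force limit
```

In a device loop, this function would be called once per servo cycle with the latest measured position and velocity, and its output sent to the actuators.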

To deal with these limitations, a new generation of wearable haptic interfaces has been investigated [38]. Haptic thimbles [39][40][41], haptic rings [42][43] and haptic armbands [44] have been designed for several applications, ranging from teleoperation to VR or AR interaction. Most of the available wearable haptic interfaces can only provide cutaneous cues that indent and stretch the skin [45], not kinaesthetic cues, that is, stimuli that act on the skeleton, muscles and joints [46]. Because they provide only cutaneous stimuli, wearable haptic interfaces do not exhibit unstable behaviour caused, for instance, by communication delay in the closed haptic loop [47]; as a consequence, the haptic loop with wearable tactile interfaces is intrinsically stable. To control the haptic feedback in closed loop, the platform still requires a cohesively integrated system. Wearable haptic devices are light and portable, and can be combined to achieve multi-contact interaction [36]. Moreover, it was recently demonstrated that wearable haptics can also be used in virtual and mixed reality to alter the perceived physical properties of tangible objects, including stiffness, friction and shape [48].
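Because cutaneous devices only deform the skin of the fingertip, a desired contact force can be rendered by converting it into a displacement of the device's contact platform: the normal component indents the finger pad and the tangential components stretch the skin. The sketch below assumes a simple linear skin model, with hypothetical stiffness values and travel limit chosen only for illustration; real fingertip tissue is nonlinear and the devices cited above use their own kinematic models. The output is a position command for the platform, so no kinaesthetic force is fed back to the hand.

```python
"""Sketch: turning a desired fingertip contact force into a platform displacement."""
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class CutaneousDevice:
    # All parameters are illustrative assumptions, not values of a real device.
    normal_stiffness: float = 0.5      # N/mm, linearised fingertip stiffness (indentation)
    tangential_stiffness: float = 0.8  # N/mm, linearised stiffness for skin stretch
    max_travel_mm: float = 4.0         # actuator travel limit per direction

    def platform_offset(self, force: Vec3) -> Vec3:
        """Map a contact force (fx, fy, fz) in the fingertip frame to an offset (mm).

        z is normal to the finger pad (positive = pressing); x and y are tangential.
        """
        fx, fy, fz = force
        if fz <= 0.0:
            return (0.0, 0.0, 0.0)  # no pressing force: keep the platform retracted
        dz = min(fz / self.normal_stiffness, self.max_travel_mm)  # indentation
        dx = fx / self.tangential_stiffness                       # skin stretch
        dy = fy / self.tangential_stiffness
        lateral = math.hypot(dx, dy)
        if lateral > self.max_travel_mm:
            scale = self.max_travel_mm / lateral  # keep the direction, clip the magnitude
            dx, dy = dx * scale, dy * scale
        return (dx, dy, dz)
```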

Most of the proposed devices are built by combining rapid prototyping techniques with off-the-shelf components such as servomotors, vibration motors and programmable boards. This aspect can dramatically foster the diffusion of these devices in "at home" scenarios. One can imagine a future scenario in which students easily download, print and build their own devices and access haptic content available for the novel VAKT model of e-learning.

4. Evaluation, Assessment and Eye-Tracking Technology

Haptic feedback can be used to increase the degree of presence in a virtual environment by allowing one to touch and feel virtual objects [49], which is very important for learning. Most educational researchers acknowledge that there is a strong connection between assessment and student learning. Traditionally, evaluation and assessment can be done using various methods, such as knowledge tests (for example, written multiple-choice or open-ended questions and oral examinations), practical knowledge evaluations, reports with narrative or peer feedback, and portfolios containing reflections [50]. However, Kreimeier et al. [49] note that how to assess and evaluate the influence of haptic feedback on task-based presence and performance in virtual reality for STEM education is still rarely discussed.

Many evaluation efforts focus on the usability of VR [51]. In particular areas, such as medical education, haptic-based VR for learning has increasingly been adopted, for example, for simulating surgeries. However, such evaluations often emphasise overall impressions of the realism of the VR simulator, the realism of the tactile sensation and other simulator elements (for example, [52]). While usability, acceptability and user experience assessment can be considered very relevant, there is also a need to explore which evaluation and assessment approaches are possible for multi-sensory learning with VR. The need to integrate immersive learning into the learning process has also been argued. From this perspective, a model has been proposed with two evaluation measurements, namely measuring outcome (knowledge and skills, acquisition, and retention) and measuring experience (learner motivation, engagement, and immersion), to support multisensory learning.

Moreover, the shift in educational theory from a behaviourist toward a constructivist perspective [53] assumes that students should be regarded as active builders of their own knowledge, skills and competencies. Suggested assessment variations include social interaction, reflection and feedback involving peers and teachers (both narrative/oral feedback and multi-source feedback), in addition to other assessment methods such as portfolios or collections of student products that reveal achievements and efforts in specific areas. In fact, "simple" pass/fail decisions in learning assessment are gradually changing the assessment environment, encouraging students to take more responsibility for enhancing their own learning [54]. This shift further supports the idea of incorporating immersive learning into the learning process.

Recently, there have been new developments and possibilities in combining eye tracking with VR for usability studies and evaluation. Previously, eye-tracking products appeared as screen-based devices, eye-tracking webcams or wearable glasses. These devices have been applied in research and business settings to understand how humans interact with systems, machines and processes. Eye tracking has also been used to understand media habits, including preferences and visual perception on various digital media devices [55], also in educational settings. By tracking gaze behaviour, researchers can measure visual attention to specific elements [56].
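Measuring visual attention usually starts by grouping raw gaze samples into fixations, periods in which the gaze stays within a small region. A widely used way of doing this is the dispersion-threshold (I-DT) algorithm, sketched below for 2D gaze points: a window spanning a minimum duration is accepted as a fixation if its spread stays under a threshold, and is then grown for as long as the points remain clustered. The thresholds and the (timestamp, x, y) sample format are illustrative assumptions that must be tuned to the tracker's sampling rate and units; in VR, gaze is often expressed as angles rather than screen coordinates.

```python
"""Sketch: dispersion-threshold (I-DT) fixation detection on 2D gaze samples."""
from dataclasses import dataclass

Sample = tuple[float, float, float]  # (timestamp in s, x, y)


@dataclass
class Fixation:
    start: float  # timestamp of the first sample in the fixation (s)
    end: float    # timestamp of the last sample in the fixation (s)
    x: float      # centroid of the fixation
    y: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def dispersion(window: list[Sample]) -> float:
    """I-DT dispersion: (max x - min x) + (max y - min y)."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: list[Sample], max_dispersion: float = 1.0,
                     min_duration: float = 0.1) -> list[Fixation]:
    """Return fixations from time-ordered samples using the I-DT algorithm."""
    fixations: list[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Smallest window starting at i that spans at least min_duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Grow the window while the points stay tightly clustered.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(
                start=window[0][0],
                end=window[-1][0],
                x=sum(s[1] for s in window) / len(window),
                y=sum(s[2] for s in window) / len(window),
            ))
            i = j + 1  # continue after the fixation
        else:
            i += 1     # drop the first point (part of a saccade) and retry
    return fixations
```

Recomputing the dispersion at each step keeps the sketch short; a production implementation would update the minima and maxima incrementally.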

Thus, not only are VR and haptic technologies advancing, but eye-tracking capability has also been combined with VR technology. So far, two variants are on the market: VR devices with built-in eye-tracking capabilities, such as the HTC Vive Pro Eye, and independent eye-tracking devices that can be mounted on existing VR devices. Through such eye-tracking-enhanced VR, it is possible to obtain user tracking data that allow researchers to learn about various behaviours with respect to the distribution of visual attention within the virtual environment.
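In a virtual environment, each gaze sample can be labelled with the scene object (area of interest, AOI) that the gaze ray hits; that labelling step, typically a ray cast in the game engine, is not shown here. Given such labels, the distribution of visual attention reduces to counting samples per object, as in the sketch below. The label format, the object names and the fixed sampling interval are assumptions for illustration.

```python
"""Sketch: dwell time and attention share per area of interest (AOI)."""
from collections import Counter
from typing import Optional


def dwell_times(aoi_labels: list[Optional[str]], sample_interval_s: float) -> dict[str, float]:
    """Total time (s) the gaze rested on each AOI.

    aoi_labels: one entry per gaze sample, the hit object's name or None for a miss.
    """
    counts = Counter(label for label in aoi_labels if label is not None)
    return {aoi: n * sample_interval_s for aoi, n in counts.items()}


def attention_share(aoi_labels: list[Optional[str]]) -> dict[str, float]:
    """Fraction of all samples (misses included) spent on each AOI."""
    if not aoi_labels:
        return {}
    counts = Counter(label for label in aoi_labels if label is not None)
    total = len(aoi_labels)
    return {aoi: n / total for aoi, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical 120 Hz recording in a virtual chemistry lab.
    labels = ["beaker"] * 60 + ["burner"] * 30 + [None] * 30
    print(dwell_times(labels, 1 / 120))
    print(attention_share(labels))
```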

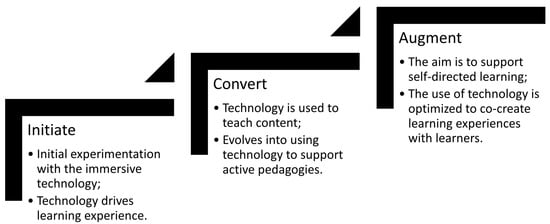

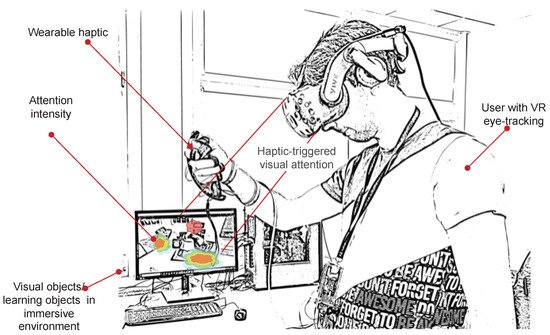

When eye-tracking-enhanced VR is used in a learning context, different evaluation possibilities can be considered. The model in Figure 1 suggests two evaluation measurements, i.e., measuring outcome (knowledge and skills, acquisition, and retention) and measuring experience (learner motivation, engagement, and immersion). Instructors can verify whether the expected behaviours are achieved, whether the right objects are seen, touched or moved, and whether unnecessary distractions occur. Many more criteria can be used to evaluate the success of expected behaviour in the virtual environment. Visual attention data can be presented using advanced visualisation techniques such as heat maps, as shown in Figure 2. Various eye-tracking metrics that can be calculated from the raw data have been proposed, such as pupil diameter (mean of left and right), gaze entropy, fixation duration and percentage of eyelid closure, to determine which objects seen by eye-tracking users are important [57][58]. The power of incorporating eye tracking into multisensory learning lies especially in the capability to link the kinaesthetic/tactile, visual and auditory feedback of the learners with the virtual environment, and to generate various data that can be evaluated after the learning session.

Figure 1. Immersive learning maturity model.

Figure 2. Assessment and evaluation concept using VR with eye-tracking capability.
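Several of the eye-tracking metrics mentioned above (mean pupil diameter, gaze entropy and percentage of eyelid closure) are straightforward to compute once raw samples are available. The sketch below shows one plausible formulation of each, assuming per-sample pupil diameters in millimetres, per-sample AOI labels and an eyelid-closure value between 0 (open) and 1 (closed). Gaze entropy is computed here as the Shannon entropy of the gaze distribution over AOIs, and the 0.8 closure threshold follows the common "P80" convention for PERCLOS; the exact definitions used in [57][58] may differ.

```python
"""Sketch: three eye-tracking metrics computed from raw samples."""
import math
from collections import Counter
from statistics import fmean


def mean_pupil_diameter(left_mm: list[float], right_mm: list[float]) -> float:
    """Average over samples of the mean of left and right pupil diameters."""
    if len(left_mm) != len(right_mm) or not left_mm:
        raise ValueError("need equally long, non-empty series")
    return fmean((left + right) / 2 for left, right in zip(left_mm, right_mm))


def gaze_entropy(aoi_labels: list[str]) -> float:
    """Stationary gaze entropy in bits: Shannon entropy of gaze over AOIs."""
    counts = Counter(aoi_labels)
    total = sum(counts.values())
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def perclos(eyelid_closure: list[float], threshold: float = 0.8) -> float:
    """Fraction of samples with eyelid closure >= threshold (0 = open, 1 = closed)."""
    if not eyelid_closure:
        raise ValueError("no samples")
    closed = sum(1 for c in eyelid_closure if c >= threshold)
    return closed / len(eyelid_closure)
```

Low gaze entropy indicates attention concentrated on few objects, while high entropy indicates gaze spread across many; whether either is desirable depends on the learning task.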

Instructors can predetermine some metrics to show successful sensory-based learning outcomes, for example, by linking haptic feedback to a specific object in the virtual environment, such as objects that relate to eye-hand coordination [59]. Some scholars have used metrics such as task time, economy of motion, drops, instrument collisions, excessive instrument force, instrument out of view and master workspace range to assess perceived workload. Higher intensity on a particular object can be interpreted as higher perceived workload [57]. One can even identify distracting elements that draw the user's attention away from the actual task or learning points. Similar principles can be reused for integrating VR/AR with haptics into STEM education.
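Several of the performance metrics named above can be derived directly from logged simulation data. The sketch below computes task time, economy of motion (approximated here as the total path length travelled by the instrument tip), the number of collision events and the number of samples with excessive force. The log structure, the simulator's collision flag and the 2 N force threshold are hypothetical, and published studies may define these metrics differently.

```python
"""Sketch: task-performance metrics from a logged instrument trajectory."""
import math
from dataclasses import dataclass


@dataclass
class LogSample:
    t: float                         # timestamp (s)
    pos: tuple[float, float, float]  # instrument tip position (m)
    force: float                     # contact force magnitude (N)
    colliding: bool                  # hypothetical collision flag from the simulator


def task_metrics(log: list[LogSample], force_limit: float = 2.0) -> dict[str, float]:
    """Compute simple performance metrics; force_limit (N) is an assumed threshold."""
    if len(log) < 2:
        raise ValueError("need at least two samples")
    pairs = list(zip(log, log[1:]))
    # Economy of motion approximated as the total distance travelled by the tip.
    path_length = sum(math.dist(a.pos, b.pos) for a, b in pairs)
    # Count collision events (transitions into contact), not colliding samples.
    collisions = int(log[0].colliding) + sum(
        1 for a, b in pairs if b.colliding and not a.colliding)
    excessive = sum(1 for s in log if s.force > force_limit)
    return {
        "task_time_s": log[-1].t - log[0].t,
        "path_length_m": path_length,
        "collisions": collisions,
        "excessive_force_samples": excessive,
    }
```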

The use of VR in combination with an eye tracker can enrich the variety of learning assessment methods and engage peers and teachers. For example, the virtual environment can be projected so that peers and teachers can observe together and provide feedback on how to improve learning acquisition, and this feedback can be documented for the student's own learning.

In [60][61], eye tracking was combined with other information, such as audio, video, biometric data and annotations, to improve the planning, execution and assessment of demanding training operations by adopting newly designed risk-evaluation tools. This integration is the basis for research on novel situational awareness (SA) assessment methodologies, which can help industry improve operational effectiveness and safety through the use of simulators. Such capability has the potential to be adopted for evaluating and assessing the multi-sensory learning process using VR and haptic technologies, both for measuring the outcome (knowledge and skills, acquisition, and retention) and the experience (learner motivation, engagement, and immersion).
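Combining eye tracking with audio, video and biometric data, as in [60][61], requires aligning streams recorded at different rates. A minimal way to do this, sketched below under the assumption that all streams share a common clock, is to attach to each gaze sample the most recent value of another stream (an "as-of" join), discarding values older than a tolerance. The function name, the tolerance and the heart-rate example are hypothetical; real set-ups often also need clock-offset correction between devices.

```python
"""Sketch: as-of alignment of a slower stream (e.g. heart rate) to gaze samples."""
from bisect import bisect_right
from typing import Optional


def align_asof(gaze_times: list[float], other_times: list[float],
               other_values: list[float], tolerance_s: float = 1.0) -> list[Optional[float]]:
    """For each gaze timestamp, return the latest other-stream value at or before it.

    Returns None when no value exists yet or the latest one is older than tolerance_s.
    other_times must be sorted in ascending order.
    """
    if len(other_times) != len(other_values):
        raise ValueError("times and values must have equal length")
    aligned: list[Optional[float]] = []
    for t in gaze_times:
        idx = bisect_right(other_times, t) - 1  # last index with other_times[idx] <= t
        if idx < 0 or t - other_times[idx] > tolerance_s:
            aligned.append(None)
        else:
            aligned.append(other_values[idx])
    return aligned
```

For tabular data, `pandas.merge_asof` performs the same kind of backward-looking join on sorted data frames.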

VR eye-tracking assessment can also be employed to gain more understanding of visual attention [62], decision-making and judgement capability [63], and visually driven emotional attention [64]. Students can reflect on their own learning, perspective and other contextual factors that influence the learning stage. However, to be beneficial and to maximise the learning impact, it is the task of the teacher or facilitator to formulate the assignments, the imminence of assessment, the design of the assessment system and the cues. In other words, there is no single answer to what the best assessment system would be, as assessment itself needs innovation and creativity and must be anchored to the overall course goal.

References

- Zhou, N.N.; Deng, Y.L. Virtual reality: A state-of-the-art survey. Int. J. Autom. Comput. 2009, 6, 319–325.

- Mütterlein, J. The three pillars of virtual reality? Investigating the roles of immersion, presence, and interactivity. In Proceedings of the 51st Hawaii International Conference on System Sciences, Waikoloa Village, HI, USA, 2–6 January 2018.

- Freina, L.; Ott, M. A literature review on immersive virtual reality in education: State of the art and perspectives. Int. Sci. Conf. Elearning Softw. Educ. 2015, 1, 10–1007.

- Zhao, J.; Allison, R.S.; Vinnikov, M.; Jennings, S. Estimating the motion-to-photon latency in head mounted displays. In Proceedings of the IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 313–314.

- Clay, V.; König, P.; Koenig, S. Eye tracking in virtual reality. J. Eye Mov. Res. 2019, 12.

- Munafo, J.; Diedrick, M.; Stoffregen, T.A. The virtual reality head-mounted display Oculus Rift induces motion sickness and is sexist in its effects. Exp. Brain Res. 2017, 235, 889–901.

- Logitech. VR Ink Stylus. 2021. Available online: https://www.logitech.com/en-roeu/promo/vr-ink.html (accessed on 6 May 2021).

- Sipatchin, A.; Wahl, S.; Rifai, K. Eye-tracking for low vision with virtual reality (VR): Testing status quo usability of the HTC Vive Pro Eye. bioRxiv 2020.

- Ogdon, D.C. HoloLens and VIVE pro: Virtual reality headsets. J. Med. Libr. Assoc. 2019, 107, 118.

- Stengel, M.; Grogorick, S.; Eisemann, M.; Eisemann, E.; Magnor, M.A. An affordable solution for binocular eye tracking and calibration in head-mounted displays. In Proceedings of the 23rd ACM international conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 15–24.

- Syed, R.; Collins-Thompson, K.; Bennett, P.N.; Teng, M.; Williams, S.; Tay, D.W.W.; Iqbal, S. Improving Learning Outcomes with Gaze Tracking and Automatic Question Generation. In Proceedings of the Web Conference, Taipei, Taiwan, 20–25 April 2020; pp. 1693–1703.

- Muender, T.; Bonfert, M.; Reinschluessel, A.V.; Malaka, R.; Döring, T. Haptic Fidelity Framework: Defining the Factors of Realistic Haptic Feedback for Virtual Reality. 2022; preprint.

- Kang, N.; Lee, S. A meta-analysis of recent studies on haptic feedback enhancement in immersive-augmented reality. In Proceedings of the 4th International Conference on Virtual Reality, Hong Kong, China, 24–26 February 2018; pp. 3–9.

- Edwards, B.I.; Bielawski, K.S.; Prada, R.; Cheok, A.D. Haptic virtual reality and immersive learning for enhanced organic chemistry instruction. Virtual Real. 2019, 23, 363–373.

- HaptX. HaptX Gloves. 2022. Available online: https://haptx.com/ (accessed on 23 February 2021).

- Gu, X.; Zhang, Y.; Sun, W.; Bian, Y.; Zhou, D.; Kristensson, P.O. Dexmo: An inexpensive and lightweight mechanical exoskeleton for motion capture and force feedback in VR. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 1991–1995.

- Interhaptics. Haptics for Virtual Reality (VR) and Mixed Reality (MR). 2022. Available online: https://www.interhaptics.com/ (accessed on 23 February 2021).

- Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

- Azuma, R.T. Making augmented reality a reality. In Applied Industrial Optics: Spectroscopy, Imaging and Metrology; Optical Society of America: Washington, DC, USA, 2017; p. JTu1F-1.

- Bacca Acosta, J.L.; Baldiris Navarro, S.M.; Fabregat Gesa, R.; Graf, S.; Kinshuk, D. Augmented reality trends in education: A systematic review of research and applications. J. Educ. Technol. Soc. 2014, 17, 133–149.

- Chen, P.; Liu, X.; Cheng, W.; Huang, R. A review of using Augmented Reality in Education from 2011 to 2016. In Innovations in Smart Learning; Springer: Berlin/Heidelberg, Germany, 2017; pp. 13–18.

- Garzón, J.; Pavón, J.; Baldiris, S. Systematic review and meta-analysis of augmented reality in educational settings. Virtual Real. 2019, 23, 447–459.

- Craig, A.B. Understanding Augmented Reality: Concepts and Applications Newnes; Morgan Kaufmann: Burlington, MA, USA, 2013.

- Wang, J.; Zhu, M.; Fan, X.; Yin, X.; Zhou, Z. Multi-Channel Augmented Reality Interactive Framework Design for Ship Outfitting Guidance. IFAC Pap. Online 2020, 53, 189–196.

- Ren, G.; Wei, S.; O’Neill, E.; Chen, F. Towards the design of effective haptic and audio displays for augmented reality and mixed reality applications. Adv. Multimed. 2018, 2018, 4517150.

- Ibáñez, M.B.; Delgado-Kloos, C. Augmented reality for STEM learning: A systematic review. Comput. Educ. 2018, 123, 109–123.

- Rieger, C.; Majchrzak, T.A. Towards the Definitive Evaluation Framework for Cross-Platform App Development Approaches. J. Syst. Softw. 2019, 153, 175–199.

- Radu, I. Augmented reality in education: A meta-review and cross-media analysis. Pers. Ubiquitous Comput. 2014, 18, 1533–1543.

- Fromm, J.; Eyilmez, K.; Baßfeld, M.; Majchrzak, T.A.; Stieglitz, S. Social Media Data in an Augmented Reality System for Situation Awareness Support in Emergency Control Rooms. Inf. Syst. Front. 2021, 1–24.

- Sırakaya, M.; Alsancak Sırakaya, D. Augmented reality in STEM education: A systematic review. Interact. Learn. Environ. 2020, 1–14.

- Alizadehsalehi, S.; Hadavi, A.; Huang, J.C. From BIM to extended reality in AEC industry. Autom. Constr. 2020, 116, 103254.

- Sanfilippo, F.; Weustink, P.B.; Pettersen, K.Y. A coupling library for the force dimension haptic devices and the 20-sim modelling and simulation environment. In Proceedings of the 41st Annual Conference (IECON) of the IEEE Industrial Electronics Society, Yokohama, Japan, 9–12 November 2015; pp. 168–173.

- Williams, R.L., II; Chen, M.Y.; Seaton, J.M. Haptics-augmented high school physics tutorials. Int. J. Virtual Real. 2001, 5, 167–184.

- Williams, R.L.; Srivastava, M.; Conatser, R.; Howell, J.N. Implementation and evaluation of a haptic playback system. Haptics-e Electron. J. Haptics Res. 2004. Available online: http://hdl.handle.net/1773/34888 (accessed on 27 March 2022).

- Teklemariam, H.G.; Das, A. A case study of phantom omni force feedback device for virtual product design. Int. J. Interact. Des. Manuf. 2017, 11, 881–892.

- Salvietti, G.; Meli, L.; Gioioso, G.; Malvezzi, M.; Prattichizzo, D. Multicontact Bilateral Telemanipulation with Kinematic Asymmetries. IEEE/ASME Trans. Mechatron. 2017, 22, 445–456.

- Leonardis, D.; Barsotti, M.; Loconsole, C.; Solazzi, M.; Troncossi, M.; Mazzotti, C.; Castelli, V.P.; Procopio, C.; Lamola, G.; Chisari, C.; et al. An EMG-controlled robotic hand exoskeleton for bilateral rehabilitation. IEEE Trans. Haptics 2015, 8, 140–151.

- Pacchierotti, C.; Sinclair, S.; Solazzi, M.; Frisoli, A.; Hayward, V.; Prattichizzo, D. Wearable haptic systems for the fingertip and the hand: Taxonomy, review, and perspectives. IEEE Trans. Haptics 2017, 10, 580–600.

- Leonardis, D.; Solazzi, M.; Bortone, I.; Frisoli, A. A wearable fingertip haptic device with 3 DoF asymmetric 3-RSR kinematics. In Proceedings of the 2015 IEEE World Haptics Conference (WHC), Evanston, IL, USA, 22–26 June 2015; pp. 388–393.

- Minamizawa, K.; Fukamachi, S.; Kajimoto, H.; Kawakami, N.; Tachi, S. Gravity grabber: Wearable haptic display to present virtual mass sensation. In Proceedings of the ACM SIGGRAPH 2007 Emerging Technologies, San Diego, CA, USA, 5–9 August 2007; p. 8.

- Prattichizzo, D.; Chinello, F.; Pacchierotti, C.; Malvezzi, M. Towards wearability in fingertip haptics: A 3-dof wearable device for cutaneous force feedback. IEEE Trans. Haptics 2013, 6, 506–516.

- Maisto, M.; Pacchierotti, C.; Chinello, F.; Salvietti, G.; De Luca, A.; Prattichizzo, D. Evaluation of wearable haptic systems for the fingers in augmented reality applications. IEEE Trans. Haptics 2017, 10, 511–522.

- Pacchierotti, C.; Salvietti, G.; Hussain, I.; Meli, L.; Prattichizzo, D. The hRing: A wearable haptic device to avoid occlusions in hand tracking. In Proceedings of the 2016 IEEE Haptics Symposium (HAPTICS), Philadelphia, PA, USA, 8–11 April 2016; pp. 134–139.

- Baldi, T.L.; Scheggi, S.; Aggravi, M.; Prattichizzo, D. Haptic guidance in dynamic environments using optimal reciprocal collision avoidance. IEEE Robot. Autom. Lett. 2017, 3, 265–272.

- Chinello, F.; Malvezzi, M.; Pacchierotti, C.; Prattichizzo, D. Design and development of a 3RRS wearable fingertip cutaneous device. In Proceedings of the IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Busan, Korea, 7–11 July 2015; pp. 293–298.

- Hayward, V.; Astley, O.R.; Cruz-Hernandez, M.; Grant, D.; Robles-De-La-Torre, G. Haptic interfaces and devices. Sens. Rev. 2004, 24, 16–29.

- Pacchierotti, C.; Meli, L.; Chinello, F.; Malvezzi, M.; Prattichizzo, D. Cutaneous haptic feedback to ensure the stability of robotic teleoperation systems. Int. J. Robot. Res. 2015, 34, 1773–1787.

- Salazar, S.V.; Pacchierotti, C.; de Tinguy, X.; Maciel, A.; Marchal, M. Altering the stiffness, friction, and shape perception of tangible objects in virtual reality using wearable haptics. IEEE Trans. Haptics 2020, 13, 167–174.

- Kreimeier, J.; Hammer, S.; Friedmann, D.; Karg, P.; Bühner, C.; Bankel, L.; Götzelmann, T. Evaluation of different types of haptic feedback influencing the task-based presence and performance in virtual reality. In Proceedings of the 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 5–7 June 2019; pp. 289–298.

- Heeneman, S.; Oudkerk Pool, A.; Schuwirth, L.W.; van der Vleuten, C.P.; Driessen, E.W. The impact of programmatic assessment on student learning: Theory versus practice. Med. Educ. 2015, 49, 487–498.

- Kamińska, D.; Zwoliński, G.; Wiak, S.; Petkovska, L.; Cvetkovski, G.; Barba, P.D.; Mognaschi, M.E.; Haamer, R.E.; Anbarjafari, G. Virtual reality-based training: Case study in mechatronics. Technol. Knowl. Learn. 2021, 26, 1043–1059.

- Fucentese, S.F.; Rahm, S.; Wieser, K.; Spillmann, J.; Harders, M.; Koch, P.P. Evaluation of a virtual-reality-based simulator using passive haptic feedback for knee arthroscopy. Knee Surg. Sport. Traumatol. Arthrosc. 2015, 23, 1077–1085.

- Yurdabakan, İ. The view of constructivist theory on assessment: Alternative assessment methods in education. Ank. Univ. J. Fac. Educ. Sci. 2011, 44, 51–78.

- Schuwirth, L.W.; Van der Vleuten, C.P. Programmatic assessment: From assessment of learning to assessment for learning. Med. Teach. 2011, 33, 478–485.

- Vraga, E.; Bode, L.; Troller-Renfree, S. Beyond self-reports: Using eye tracking to measure topic and style differences in attention to social media content. Commun. Methods Meas. 2016, 10, 149–164.

- Alemdag, E.; Cagiltay, K. A systematic review of eye tracking research on multimedia learning. Comput. Educ. 2018, 125, 413–428.

- Wu, C.; Cha, J.; Sulek, J.; Zhou, T.; Sundaram, C.P.; Wachs, J.; Yu, D. Eye-tracking metrics predict perceived workload in robotic surgical skills training. Hum. Factors 2020, 62, 1365–1386.

- Da Silva, A.C.; Sierra-Franco, C.A.; Silva-Calpa, G.F.M.; Carvalho, F.; Raposo, A.B. Eye-tracking data analysis for visual exploration assessment and decision making interpretation in virtual reality environments. In Proceedings of the 2020 22nd Symposium on Virtual and Augmented Reality (SVR), Porto de Galinhas, Brazil, 7–10 November 2020; pp. 39–46.

- Pernalete, N.; Raheja, A.; Segura, M.; Menychtas, D.; Wieczorek, T.; Carey, S. Eye-hand coordination assessment metrics using a multi-platform haptic system with eye-tracking and motion capture feedback. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 17–21 July 2018; pp. 2150–2153.

- Sanfilippo, F. A multi-sensor system for enhancing situational awareness in offshore training. In Proceedings of the IEEE International Conference on Cyber Situational Awareness, Data Analytics and Assessment (CyberSA), London, UK, 13–16 June 2016; pp. 1–6.

- Sanfilippo, F. A multi-sensor fusion framework for improving situational awareness in demanding maritime training. Reliab. Eng. Syst. Saf. 2017, 161, 12–24.

- Ziv, G. Gaze behavior and visual attention: A review of eye tracking studies in aviation. Int. J. Aviat. Psychol. 2016, 26, 75–104.

- Chen, Y.; Jermias, J.; Panggabean, T. The role of visual attention in the managerial judgment of balanced-scorecard performance evaluation: Insights from using an eye-tracking device. J. Account. Res. 2016, 54, 113–146.

- Fan, S.; Shen, Z.; Jiang, M.; Koenig, B.L.; Xu, J.; Kankanhalli, M.S.; Zhao, Q. Emotional attention: A study of image sentiment and visual attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7521–7531.


Information

Subjects: Engineering, Electrical & Electronic


Revisions: 2 times

Update Date: 24 Apr 2022
