| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Birgitta Dresp | + 1942 word(s) | 1942 | 2022-03-01 05:01:54 | |
| 2 | | Catherine Yang | + 1 word(s) | 1943 | 2022-03-02 02:41:48 | |


Multifunctional control in real time is a critical target in intelligent robotics. Combined with behavioral flexibility, such control lets a robot navigate and adapt in real time to complex, often changing environments. Multifunctionality is observed across a wide range of living species and behaviors: even seemingly simple organisms, such as invertebrates, display multifunctional control. Living systems rely on the ability to switch from one behavior to another, and to vary a given behavior, so that they can act successfully under changing environmental conditions. Truly multifunctional control remains a major challenge in robotics. One plausible approach is to develop a methodology that maps the multifunctional properties of biological systems onto simulations, enabling rapid prototyping and real-time simulation of candidate solutions (control architectures).
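The idea of one control system that can both switch between behaviors and vary a single behavior can be illustrated with a toy simulation. The sketch below is loosely inspired by motor program switching in the feeding system of the sea slug Aplysia californica. There, ingestive and egestive programs are produced by largely shared circuitry and differ mainly in how grasper closure is timed relative to the protraction/retraction rhythm. Everything in the code is a hypothetical illustration, not a model from the literature: the class `SharedRhythmController`, the mode names `"ingest"` and `"egest"`, and the phase offsets are all assumptions. A single phase oscillator drives a "move" channel and a binary "grip" channel. Switching behavior changes only the phase offset between the two channels, and varying `freq_hz` changes the tempo of one behavior.

```python
import math

# Phase offset of the "grip" channel relative to the shared "move" rhythm.
# Mode names and offsets are illustrative assumptions, not measured values.
MODES = {
    "ingest": math.pi,  # grip active during the return stroke (retraction-like)
    "egest": 0.0,       # grip active during the outward stroke (protraction-like)
}


class SharedRhythmController:
    """Hypothetical controller: one phase oscillator drives two output
    channels; switching the behavioral mode changes only their phase relation."""

    def __init__(self, freq_hz: float = 1.0, dt: float = 0.01) -> None:
        self.freq_hz = freq_hz  # rhythm frequency; changing it varies a behavior
        self.dt = dt            # integration step in seconds
        self.phase = 0.0        # shared oscillator phase in radians
        self.mode = "ingest"

    def set_mode(self, mode: str) -> None:
        """Switch motor program while keeping the same rhythm generator."""
        if mode not in MODES:
            raise ValueError(f"unknown mode: {mode!r}")
        self.mode = mode

    def step(self) -> tuple[float, int]:
        """Advance the shared phase by one step and return (move, grip)."""
        self.phase = (self.phase + 2.0 * math.pi * self.freq_hz * self.dt) % (2.0 * math.pi)
        move = math.sin(self.phase)  # > 0: outward stroke, < 0: return stroke
        grip_drive = math.sin(self.phase + MODES[self.mode])
        grip = 1 if grip_drive > 0.0 else 0  # binary closed (1) / open (0) command
        return move, grip


if __name__ == "__main__":
    ctrl = SharedRhythmController(freq_hz=1.0, dt=0.1)
    for mode in ("ingest", "egest"):
        ctrl.set_mode(mode)
        trace = [ctrl.step() for _ in range(10)]
        print(mode, [grip for _, grip in trace])
```

Reducing behavior switching to one phase parameter is a deliberate simplification. In the biological system, switching involves the recruitment of specific interneurons and graded changes in many motor neurons. The sketch only shows how a shared rhythm generator can be reused by more than one motor program.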

1. Repetitive or Rhythmic Behavior

2. Sensorimotor Integration

3. Movement Planning

References

- Ren, L.; Li, B.; Wei, G.; Wang, K.; Song, Z.; Wei, Y.; Ren, L.; Liu, Q. Biology and bioinspiration of soft robotics: Actuation, sensing, and system integration. iScience 2021, 24, 103075.

- Ni, J.; Wu, L.; Fan, X.; Yang, S.X. Bioinspired Intelligent Algorithm and Its Applications for Mobile Robot Control: A Survey. Comput. Intell. Neurosci. 2016, 2016, 3810903.

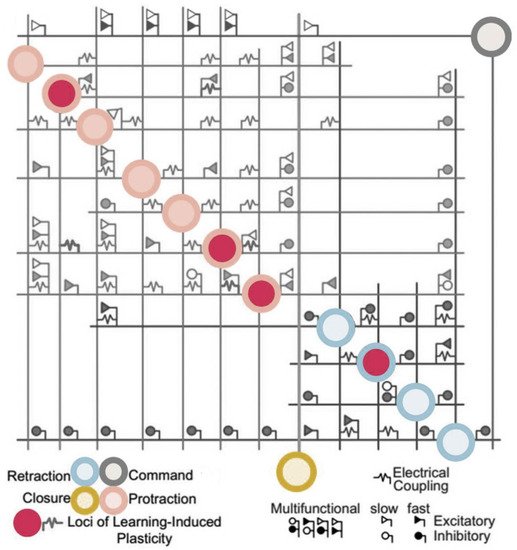

- Webster-Wood, V.A.; Gill, J.P.; Thomas, P.J.; Chiel, H.J. Control for multifunctionality: Bioinspired control based on feeding in Aplysia californica. Biol. Cybern. 2020, 114, 557–588.

- Costa, R.M.; Baxter, D.A.; Byrne, J.H. Computational model of the distributed representation of operant reward memory: Combinatoric engagement of intrinsic and synaptic plasticity mechanisms. Learn. Mem. 2020, 27, 236–249.

- Jing, J.; Cropper, E.C.; Hurwitz, I.; Weiss, K.R. The Construction of Movement with Behavior-Specific and Behavior-Independent Modules. J. Neurosci. 2004, 24, 6315–6325.

- Jing, J.; Weiss, K.R. Neural Mechanisms of Motor Program Switching inAplysia. J. Neurosci. 2001, 21, 7349–7362.

- Jing, J.; Weiss, K.R. Interneuronal Basis of the Generation of Related but Distinct Motor Programs in Aplysia: Implications for Current Neuronal Models of Vertebrate Intralimb Coordination. J. Neurosci. 2002, 22, 6228–6238.

- Hunt, A.; Schmidt, M.; Fischer, M.; Quinn, R. A biologically based neural system coordinates the joints and legs of a tetrapod. Bioinspiration Biomim. 2015, 10, 55004.

- Hunt, A.J.; Szczecinski, N.S.; Quinn, R.D. Development and Training of a Neural Controller for Hind Leg Walking in a Dog Robot. Front. Neurorobotics 2017, 11, 18.

- Szczecinski, N.S.; Chrzanowski, D.M.; Cofer, D.W.; Terrasi, A.S.; Moore, D.R.; Martin, J.P.; Ritzmann, R.E.; Quinn, R.D. Introducing MantisBot: Hexapod robot controlled by a high-fidelity, real-time neural simulation. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 3875–3881.

- Szczecinski, N.S.; Hunt, A.J.; Quinn, R.D. A Functional Subnetwork Approach to Designing Synthetic Nervous Systems That Control Legged Robot Locomotion. Front. Neurorobotics 2017, 11, 37.

- Szczecinski, N.S.; Quinn, R.D. Leg-local neural mechanisms for searching and learning enhance robotic locomotion. Biol. Cybern. 2017, 112, 99–112.

- Capolei, M.C.; Angelidis, E.; Falotico, E.; Lund, H.H.; Tolu, S. A Biomimetic Control Method Increases the Adaptability of a Humanoid Robot Acting in a Dynamic Environment. Front. Neurorobotics 2019, 13, 70.

- Nichols, E.; McDaid, L.J.; Siddique, N. Biologically Inspired SNN for Robot Control. IEEE Trans. Cybern. 2013, 43, 115–128.

- Bing, Z.; Meschede, C.; Röhrbein, F.; Huang, K.; Knoll, A.C. A Survey of Robotics Control Based on Learning-Inspired Spiking Neural Networks. Front. Neurorobotics 2018, 12, 35.

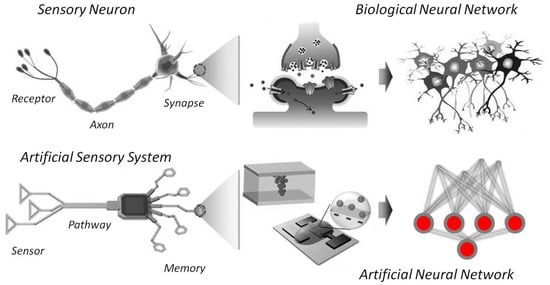

- Wan, C.; Chen, G.; Fu, Y.; Wang, M.; Matsuhisa, N.; Pan, S.; Pan, L.; Yang, H.; Wan, Q.; Zhu, L.; et al. An Artificial Sensory Neuron with Tactile Perceptual Learning. Adv. Mater. 2018, 30, e1801291.

- Wan, C.; Cai, P.; Guo, X.; Wang, M.; Matsuhisa, N.; Yang, L.; Lv, Z.; Luo, Y.; Loh, X.J.; Chen, X. An artificial sensory neuron with visual-haptic fusion. Nat. Commun. 2020, 11, 4602.

- Liu, S.C.; Delbruck, T. Neuromorphic sensory systems. Curr. Opin. Neurobiol. 2010, 20, 288–295.

- Wan, C.; Cai, P.; Wang, M.; Qian, Y.; Huang, W.; Chen, X. Artificial Sensory Memory. Adv. Mater. 2020, 32, e1902434.

- Wilson, S.; Moore, C. S1 somatotopic brain maps. Scholarpedia 2015, 10, 8574.

- Braun, C.; Heinz, U.; Schweizer, R.; Wiech, K.; Birbaumer, N.; Topka, H. Dynamic organization of the somatosensory cortex induced by motor activity. Brain 2001, 124, 2259–2267.

- Arber, S. Motor Circuits in Action: Specification, Connectivity, and Function. Neuron 2012, 74, 975–989.

- Weiss, T.; Miltner, W.H.; Huonker, R.; Friedel, R.; Schmidt, I.; Taub, E. Rapid functional plasticity of the somatosensory cortex after finger amputation. Exp. Brain Res. 2000, 134, 199–203.

- Tripodi, M.; Arber, S. Regulation of motor circuit assembly by spatial and temporal mechanisms. Curr. Opin. Neurobiol. 2012, 22, 615–623.

- Dresp-Langley, B. Seven Properties of Self-Organization in the Human Brain. Big Data Cogn. Comput. 2020, 4, 10.

- Kohonen, T. Self-Organizing Maps; Springer: New York, NY, USA, 2001.

- Dresp-Langley, B. Towards Expert-Based Speed–Precision Control in Early Simulator Training for Novice Surgeons. Information 2018, 9, 316.

- Batmaz, A.U.; De Mathelin, M.; Dresp-Langley, B. Getting nowhere fast: Trade-off between speed and precision in training to execute image-guided hand-tool movements. BMC Psychol. 2016, 4, 55.

- Batmaz, A.U.; De Mathelin, M.; Dresp-Langley, B. Seeing virtual while acting real: Visual display and strategy effects on the time and precision of eye-hand coordination. PLoS ONE 2017, 12, e0183789.

- Batmaz, A.U.; de Mathelin, M.; Dresp-Langley, B. Effects of 2D and 3D image views on hand movement trajectories in the surgeon’s peri-personal space in a computer controlled simulator environment. Cogent Med. 2018, 5, 1426232.

- Dresp-Langley, B.; Nageotte, F.; Zanne, P.; De Mathelin, M. Correlating Grip Force Signals from Multiple Sensors Highlights Prehensile Control Strategies in a Complex Task-User System. Bioengineering 2020, 7, 143.

- De Mathelin, M.; Nageotte, F.; Zanne, P.; Dresp-Langley, B. Sensors for expert grip force profiling: Towards benchmarking manual control of a robotic device for surgical tool movements. Sensors 2019, 19, 4575.

- Staderini, F.; Foppa, C.; Minuzzo, A.; Badii, B.; Qirici, E.; Trallori, G.; Mallardi, B.; Lami, G.; Macrì, G.; Bonanomi, A.; et al. Robotic rectal surgery: State of the art. World J. Gastrointest. Oncol. 2016, 8, 757–771.

- Diana, M.; Marescaux, J. Robotic surgery. Br. J. Surg. 2015, 102, e15–e28.

- Liu, R.; Nageotte, F.; Zanne, P.; De Mathelin, M.; Dresp-Langley, B. Wearable Wireless Biosensors for Spatiotemporal Grip Force Profiling in Real Time. In Proceedings of the 7th International Electronic Conference on Sensors and Applications, Zürich, Switzerland, 20 November 2020; Volume 2, p. 40.

- Liu, R.; Dresp-Langley, B. Making Sense of Thousands of Sensor Data. Electronics 2021, 10, 1391.

- Dresp-Langley, B.; Liu, R.; Wandeto, J.M. Surgical task expertise detected by a self-organizing neural network map. arXiv 2016, arXiv:2106.08995.

- Liu, R.; Nageotte, F.; Zanne, P.; de Mathelin, M.; Dresp-Langley, B. Deep Reinforcement Learning for the Control of Robotic Manipulation: A Focussed Mini-Review. Robotics 2021, 10, 22.

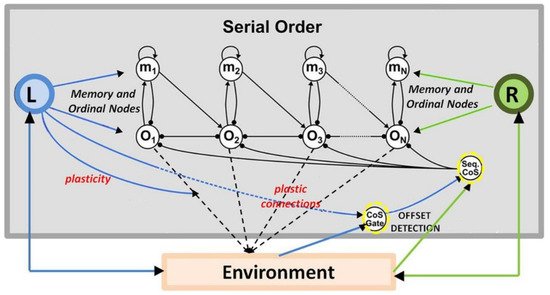

- Tekülve, J.; Fois, A.; Sandamirskaya, Y.; Schöner, G. Autonomous Sequence Generation for a Neural Dynamic Robot: Scene Perception, Serial Order, and Object-Oriented Movement. Front. Neurorobotics 2019, 13.

- Scott, S.H.; Cluff, T.; Lowrey, C.R.; Takei, T. Feedback control during voluntary motor actions. Curr. Opin. Neurobiol. 2015, 33, 85–94.

- Marques, H.G.; Imtiaz, F.; Iida, F.; Pfeifer, R. Self-organization of reflexive behavior from spontaneous motor activity. Biol. Cybern. 2013, 107, 25–37.

- Der, R.; Martius, G. Self-Organized Behavior Generation for Musculoskeletal Robots. Front. Neurorobotics 2017, 11, 8.

- Martius, G.; Der, R.; Ay, N. Information Driven Self-Organization of Complex Robotic Behaviors. PLoS ONE 2013, 8, e63400.

- Alnajjar, F.; Murase, K. Self-organization of spiking neural network that generates autonomous behavior in a real mobile robot. Int. J. Neural. Syst. 2006, 16, 229–239.

- Zhang, Z.; Cheng, Z.; Lin, Z.; Nie, C.; Yang, T. A neural network model for the orbitofrontal cortex and task space acquisition during reinforcement learning. PLoS Comput. Biol. 2018, 14, e1005925.

- Weidel, P.; Duarte, R.; Morrison, A. Unsupervised Learning and Clustered Connectivity Enhance Reinforcement Learning in Spiking Neural Networks. Front. Comput. Neurosci. 2021, 15, 543872.