Recent progress in neuromorphic sensory systems which mimic the biological receptor functions and sensorial processing [

129,

130,

131,

132] trends toward sensors and sensory systems that communicate through asynchronous digital signals analogous to neural spikes [

127], improving the performance of sensors and suggesting novel sensory processing principles that exploit spike timing [

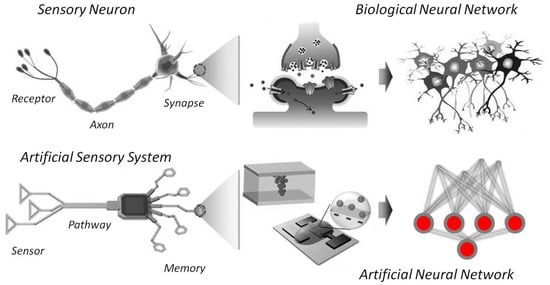

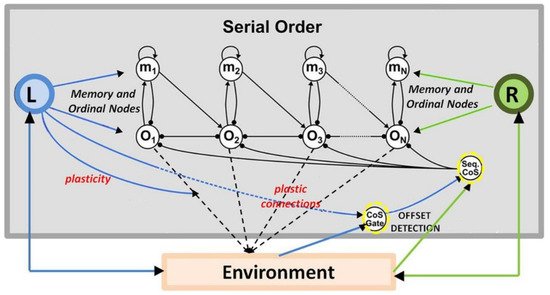

128], leading to experiments in robotics and human–robot interaction that can impact how we think the brain processes sensory information. Sensory memory is formed at the earliest stages of neural processing (

Figure 8), underlying perception and interaction of an agent with the environment. Sensory memory is based on the same plasticity principles as all true learning, and it is, therefore, an important source of intelligence in a more general sense. Sensory memory is consolidated while perceiving and interacting with the environment, and a primary source of intelligence in all living species. Transferring such biological concepts into electronic implementation aims at achieving perceptual intelligence, which would profoundly advance a broad spectrum of applications, such as prosthetics, robotics, and cyborg systems [

129]. Moreover, transferring biologically intelligent sensory processing into electronic implementations [

130,

131,

132] achieves new forms of perceptual intelligence (

Figure 8). These have the potential to profoundly advance a broader spectrum of applications in robotics, artificial intelligence, and control systems.
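To make the idea of sensors that communicate through asynchronous, spike-like digital signals more concrete, the following minimal sketch converts a sampled sensor signal into ON/OFF events using send-on-delta (level-crossing) encoding. This scheme is chosen here only for illustration and is not claimed to be the encoding used in the cited works; the function name `delta_encode`, the threshold value, and the sample data are all assumptions.

```python
from typing import List, Tuple


def delta_encode(
    samples: List[float], times: List[float], threshold: float
) -> List[Tuple[float, int]]:
    """Send-on-delta encoding (illustrative sketch, hypothetical helper).

    Emits a (time, polarity) event each time the signal has moved by
    `threshold` away from the level at which the last event was emitted.
    Polarity is +1 for an increase (ON) and -1 for a decrease (OFF).
    """
    if len(samples) != len(times):
        raise ValueError("samples and times must have the same length")
    if threshold <= 0:
        raise ValueError("threshold must be positive")

    events: List[Tuple[float, int]] = []
    if not samples:
        return events

    reference = samples[0]  # level associated with the most recent event
    for t, x in zip(times[1:], samples[1:]):
        # A large jump between two samples produces several events.
        while x - reference >= threshold:
            reference += threshold
            events.append((t, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((t, -1))
    return events


if __name__ == "__main__":
    # Assumed example data: a signal that rises and then returns to baseline.
    signal = [0.0, 0.25, 1.25, 1.25, 0.0]
    stamps = [0.0, 1.0, 2.0, 3.0, 4.0]
    for time, polarity in delta_encode(signal, stamps, threshold=0.5):
        print(time, "ON" if polarity > 0 else "OFF")
```

In this sketch, a constant signal produces no events at all, so information is carried by when events occur rather than by a fixed-rate sample stream, which is the property that spike-timing-based processing exploits.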

These new, bioinspired systems offer unprecedented opportunities for hardware architecture solutions coupled with artificial intelligence, with the potential of extending the capabilities of current digital systems to psychological attributes such as sensations and emotions. Challenges to be met in this field concern integration levels, energy efficiency, and functionality to shed light on the translational potential of such implementations. Neuronal activity and the development of functionally specific neural networks in the brain are continuously modulated by sensory signals. The somatosensory cortical network [

133] in the primate brain refers to a neocortical area that responds primarily to tactile stimulation of skin or hair. This cortical area is conceptualized in the current state of the art [

133,

134,

135,

136] as containing a single map of the receptor periphery, connected to a cortical neural network with modular functional architecture and connectivity binding functionally distinct neuronal subpopulations from other cortical areas into motor circuit modules at several hierarchical levels [

133,

134,

135,

136]. These functional modules display a hierarchy of interleaved circuits connecting, via interneurons in the spinal cord, to visual and auditory sensory areas, and to the motor cortex, with feedback loops and bilateral communication with the supraspinal centers [

135,

136,

137]. This enables ’from-local-to-global’ functional organization [

134], a ground condition for self-organization [

106,

107], with plastic connectivity patterns that are correlated with specific behavioral variations such as variations in motor output or grip force, which fulfills an important adaptive role and ensures that humans are able to reliably grasp and manipulate objects in the physical world under constantly changing conditions in their immediate sensory environment. Neuroscience-inspired biosensor technology for the development of robot-assisted minimally invasive surgical training [

138,

139,

140,

141,

142,

143] is a currently relevant field of application here as it has direct clinical, ergonomic, and functional implications, with clearly identified advantages over traditional surgical procedures [

144,

145]. Individual grip force profiling using wireless wearable (gloves or glove-like assemblies) sensor systems for the monitoring of task skill parameters [

138,

139,

140,

141] and their evolution in real time on robotic surgery platforms [

141,

142,

143,

146,

147,

148] permits studying the learning curves [

140,

141,

142] of experienced robotic surgeons, surgeons with experience as robotic platform tableside assistants, surgeons with laparoscopic experience, surgeons without laparoscopic experience, and complete novices. Grip force monitoring in robotic surgery [

146,

147,

148] is a highly useful means of tracking the evolution of the surgeon’s individual force profile during task execution. Multimodal feedback systems may represent a slight advantage over the not very effective traditional feedback solutions, and the monitoring of individual grip forces of a surgeon or a trainee in robotic task execution through wearable multisensory systems is by far the superior solution, as real-time grip force profiling by such wearable systems can directly help prevent incidents [

146,

147] because it includes the possibility of sending a signal (sound or light) to the surgeon before their grip force exceeds a critical limit, and damage occurs. Proficiency, or expertise, in the control of a robotic system for minimally invasive surgery is reflected by a lesser grip force during task execution, as well as by a shorter task execution times [

146,

147,

148]. Grip forces self-organize progressively in a way that is similar to the self-organization of neural oscillations during task learning, and, in surgical human–robot interaction, a self-organizing neural network model was found to reliably account for grip force expertise [

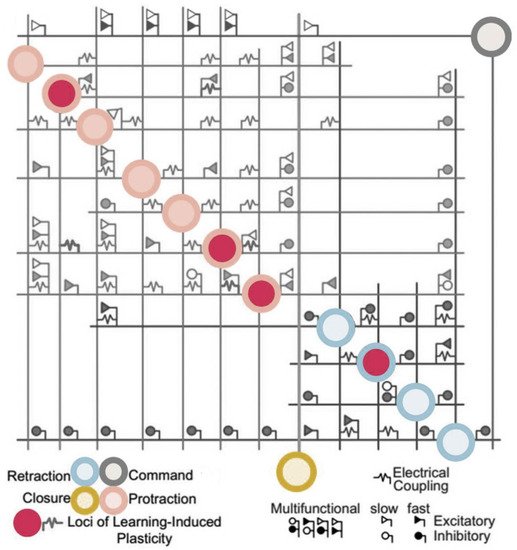

149].
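The early-warning principle described above, signaling the surgeon before the grip force exceeds a critical limit, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation of any cited system: the class name `GripForceMonitor`, the warning fraction, the exponential smoothing, and the force values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GripForceMonitor:
    """Hypothetical early-warning monitor for a stream of grip force samples."""

    critical_limit: float          # force that should not be exceeded (assumed units)
    warning_fraction: float = 0.8  # assumed: warn at 80% of the critical limit
    smoothing: float = 0.5         # assumed weight of the newest sample (0 < w <= 1)
    _smoothed: Optional[float] = field(default=None, init=False, repr=False)

    def __post_init__(self) -> None:
        if self.critical_limit <= 0:
            raise ValueError("critical_limit must be positive")
        if not 0 < self.warning_fraction <= 1:
            raise ValueError("warning_fraction must be in (0, 1]")
        if not 0 < self.smoothing <= 1:
            raise ValueError("smoothing must be in (0, 1]")

    def update(self, force: float) -> bool:
        """Feed one force sample; return True if a warning should be issued."""
        if self._smoothed is None:
            self._smoothed = force
        else:
            # Exponential moving average to damp single-sample sensor noise.
            self._smoothed = (
                self.smoothing * force + (1.0 - self.smoothing) * self._smoothed
            )
        return self._smoothed >= self.warning_fraction * self.critical_limit


if __name__ == "__main__":
    monitor = GripForceMonitor(critical_limit=10.0)
    for sample in [2.0, 4.0, 8.0, 12.0]:  # assumed sensor readings
        if monitor.update(sample):
            print("warning: grip force approaching the critical limit")
```

Triggering on a fraction of the limit rather than on the limit itself is what gives the operator time to react; in a real system, the margin and the smoothing would have to be tuned against the sensor noise and the latency of the sound or light signal.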