| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Aleksandr Ometov | + 2896 word(s) | 2896 | 2022-02-10 09:57:22 |
| 2 | Nora Tang | + 131 word(s) | 3027 | 2022-02-23 04:42:16 |
| 3 | Nora Tang | Meta information modification | 3027 | 2022-02-25 11:02:43 |


Ometov, A. Background on Computing Paradigms. Encyclopedia. Available online: https://encyclopedia.pub/entry/19676 (accessed on 08 February 2026).


Ometov, A. (2022, February 21). Background on Computing Paradigms. In Encyclopedia. https://encyclopedia.pub/entry/19676


A general overview of the Cloud, Edge, and Fog paradigms is needed to navigate the computing field. For clarity and consistency, each paradigm is discussed concisely in the text that follows. This overview frames the main goal of this entry, which is to examine the information security and privacy aspects of each paradigm.

Keywords: computing; survey; security; privacy; distributed systems

1. Cloud-Related Aspects

Historically, the infrastructure of many companies has grown and expanded alongside evolving technologies and innovations. Cloud computing is seen as a distinctive solution for delivering applications to enterprises [1]. It combines hardware and software components to render services, mainly over the Internet, and it was Cloud computing that first made access to remotely provided data and applications straightforward.

Several industrial giants and standardization bodies have attempted to define Cloud computing according to their own understanding. The definition from the National Institute of Standards and Technology (NIST) is widely considered the most reliable and precise: “a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction” [2].

Five essential characteristics define Cloud computing: on-demand self-service, broad network access, multi-tenancy and resource pooling, rapid elasticity and scalability, and measured service. With on-demand self-service, manufacturers and enterprises can provision additional Cloud resources as required (e.g., database instances, storage space, and virtual machines) without any human interaction with the service provider. Access to corporate Cloud accounts is essential because it lets corporations virtualize their services and consume and supply them on demand [3].

Broad network access means that capabilities are available over the network through standard mechanisms that promote the use of heterogeneous thick and thin client devices such as workstations, tablets, laptops, and mobile phones [4]. This access leads to resource pooling: the provider's computing resources are pooled to serve multiple clients under a multi-tenant model, and physical and virtual resources are dynamically assigned and reassigned according to customer demand. Customers usually have no control over or knowledge of the exact location of the provided resources, although they may be able to specify the location at a higher level of abstraction (e.g., country or data center). Examples of pooled resources include network bandwidth, processing, memory, and storage [5].
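To make resource pooling concrete, the following minimal Python sketch models a multi-tenant pool that grants capacity on request and returns released capacity to the pool for reallocation. The `ResourcePool` class, its methods, and the use of a single integer resource unit (e.g., vCPUs) are illustrative assumptions, not any provider's API.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical multi-tenant pool of one resource type (e.g., vCPUs)."""

    capacity: int
    allocations: dict[str, int] = field(default_factory=dict)

    def free(self) -> int:
        # Capacity not currently assigned to any tenant
        return self.capacity - sum(self.allocations.values())

    def allocate(self, tenant: str, amount: int) -> bool:
        # Grant the request only if enough pooled capacity remains
        if amount <= 0 or amount > self.free():
            return False
        self.allocations[tenant] = self.allocations.get(tenant, 0) + amount
        return True

    def release(self, tenant: str, amount: int) -> None:
        # Return capacity to the shared pool so it can be reallocated
        remaining = max(self.allocations.get(tenant, 0) - amount, 0)
        if remaining:
            self.allocations[tenant] = remaining
        else:
            self.allocations.pop(tenant, None)


pool = ResourcePool(capacity=16)
print(pool.allocate("tenant-a", 10))  # True
print(pool.allocate("tenant-b", 8))   # False: only 6 units are free
pool.release("tenant-a", 4)
print(pool.allocate("tenant-b", 8))   # True: released capacity was reallocated
```

The tenants never see which physical units they hold, which mirrors the location-independence point above. A real pool would track several resource types and enforce per-tenant quotas.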

Such a massive heterogeneous environment leads to the rapid elasticity and scalability aspect [6]. Because the required Cloud resources can be created quickly, a client's marketplace or business can grow while performance improves or costs fall. When a user's need for Cloud resources changes, the platform responds immediately by scaling resources up or down.
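One common way to realize rapid elasticity is a target-tracking rule that resizes a fleet so that average utilization returns to a set point. The sketch below assumes a hypothetical `desired_instances` function with a 60% utilization target and bounds of 1 to 20 instances. Real platforms add cooldown periods and metric smoothing, which are omitted here.

```python
def desired_instances(current: int, utilization_pct: int, target_pct: int = 60,
                      min_n: int = 1, max_n: int = 20) -> int:
    """Target-tracking scaling rule (hypothetical defaults).

    Returns the instance count that would bring average utilization back
    to `target_pct`, clamped to the range [min_n, max_n].
    """
    if current <= 0:
        return min_n
    # Integer ceiling division: ceil(current * utilization / target)
    needed = -(-current * utilization_pct // target_pct)
    return max(min_n, min(max_n, needed))


print(desired_instances(4, 90))   # 6: scale out under load
print(desired_instances(10, 10))  # 2: scale in when mostly idle
```

Using integer percentages keeps the ceiling exact at boundary values, where floating-point division could round a result such as 6.0 up to 7.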

Finally, resource use is monitored and controlled, and this information feeds usage-based billing (e.g., for active user accounts, bandwidth, processing, and storage). When resource use is properly monitored, controlled, and reported, the services consumed can be accounted for transparently to both the provider and the customer [2].
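At its core, measured service multiplies metered quantities by unit rates. The following sketch assumes a hypothetical rate card (`RATES`) and hypothetical item names. Actual prices and billing dimensions differ from provider to provider.

```python
from decimal import Decimal

# Hypothetical unit prices; real providers publish their own rate cards.
RATES = {
    "cpu_hours": Decimal("0.04"),
    "storage_gb_month": Decimal("0.02"),
    "egress_gb": Decimal("0.09"),
}
CENT = Decimal("0.01")


def invoice(usage: dict[str, Decimal]) -> dict[str, Decimal]:
    """Convert metered usage into per-item charges plus a total.

    Raises KeyError for an item that has no rate on the card.
    """
    lines = {item: (qty * RATES[item]).quantize(CENT) for item, qty in usage.items()}
    lines["total"] = sum(lines.values(), Decimal("0"))
    return lines


bill = invoice({"cpu_hours": Decimal("100"), "storage_gb_month": Decimal("50"),
                "egress_gb": Decimal("10")})
print(bill["total"])  # 5.90
```

`Decimal` is used instead of `float` so that charges are exact to the cent, which matters when many small metered amounts are summed.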

From the architectural perspective, large, medium, and small enterprises use Cloud computing to store vital data in the Cloud, which lets them access that information from anywhere in the world over the Internet. The Cloud computing architecture combines service-oriented and event-driven architectures and is naturally divided into two parts: the Front End (FE) and the Back End (BE) [7].

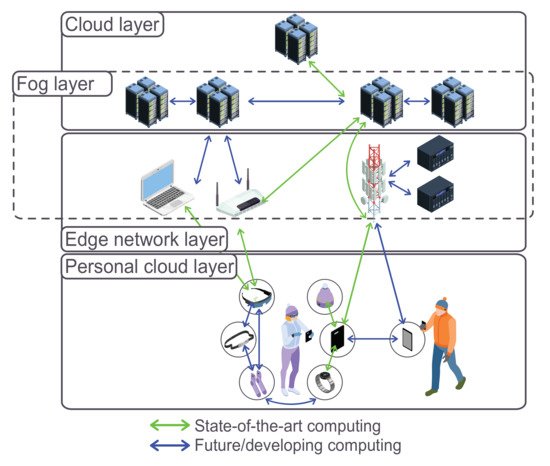

As seen in Figure 1, various components are involved in the computing architecture [8], and a network connects the front and back ends over a wired or wireless medium.

Figure 1. Most common task offloading models.

2. Edge-Related Aspects

As a new generation of computational offloading, Edge computing allocates resources at the network edge, i.e., closer to office and home appliances such as mobile devices, Internet of Things (IoT) devices, clients, and clients' sensors. In recent years, industrial and research investment in Edge computing has grown rapidly. The pivot of Edge computing is physical proximity: the closeness of cloudlets to users affects end-to-end latency, economically achievable bandwidth, trust establishment, and survivability [9].

Edge computing reduces the communication overhead between a client and a server site by shortening the actual transmission distance, both geographically and in number of hops. According to one definition, “Edge computing is a networking philosophy focused on bringing computing as close to the source of data as possible to reduce latency and bandwidth use. In simpler terms, Edge computing means running fewer processes in the Cloud and moving those processes to local places, such as on a user’s computer, an IoT device, or an Edge server” [10]. Another definition describes Edge computing as “a physical compute infrastructure positioned on the spectrum between the device and the hyper-scale Cloud, supporting various applications. Edge computing brings processing capabilities closer to the end-user/device/source of data which eliminates the journey to the Cloud data center and reduces latency” [11]. In several cases, the architecture is designed for a specific purpose, and the infrastructure is set up according to the needs of the intended workload.
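The effect of shorter distances and fewer hops can be estimated with a back-of-the-envelope model. The model adds the propagation delay (distance divided by signal speed, roughly 200 km per millisecond in optical fiber) to a per-hop forwarding cost. The 0.5 ms per-hop figure and the example distances and hop counts below are assumptions for illustration only.

```python
# Signal speed in optical fiber is roughly 2/3 of c, i.e., about 200 km per ms.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float, hops: int, per_hop_ms: float = 0.5) -> float:
    """Rough round-trip time: propagation delay plus a fixed per-hop cost.

    `per_hop_ms` is a hypothetical forwarding/processing cost per router;
    queueing and transmission delays are ignored.
    """
    one_way = distance_km / FIBER_KM_PER_MS + hops * per_hop_ms
    return 2 * one_way


print(round_trip_ms(1500, 12))  # 27.0 (distant Cloud data center)
print(round_trip_ms(10, 2))     # about 2.1 (nearby Edge server)
```

Under these assumptions, the hop term contributes almost as much as the propagation term on the long path. This is why Edge deployments reduce both geographic distance and hop count.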

Considered a state-of-the-art paradigm, Edge computing moves services and applications from the centralized Cloud to the sites nearest the data source and offers computational power to process data there. It also provides additional links between the Cloud and end-user devices. One of the most effective ways to mitigate Cloud computing issues is to increase the number of Edge nodes in a given location, which also reduces the number of devices served by a single Cloud [12].

Overall, the main consumers of Edge services are resource-constrained devices, e.g., wearables, fitness and medical tracker bands, and smartphones [13]. Fog devices, in turn, overcome the shortcomings of the Cloud by moving some of its core functions towards the network Edge while keeping Cloud-like operation possible [14]; e.g., Edge and Fog nodes may act as interfaces attaching these devices to the Cloud [15].

A typical Edge computing architecture comprises three main types of nodes (see Figure 1): the Cloud, the local Edge, and the Edge device. Notably, the local Edge has a well-defined structure with several sublayers of Edge servers, in which computational power increases from the bottom up. Access Points (APs) and Base Stations (BSs) are Edge servers located in the lowest sublayer, together with proximity-based communications [16]. They are installed to collect data from various Edge devices and to return control flows over several wireless interfaces.

After receiving data from Edge devices, cellular BSs forward the data to Edge servers in the upper sublayer, which handle the computation. Basic analysis and computation take place once the data arrive from the BSs. Each Edge server has a computational limit: if a task's complexity exceeds the limit of the current server, the task is offloaded to an upper sublayer with sufficient computing capability. These servers then complete the control flow by passing the results back to the access points, which finally deliver them to the Edge devices [17].
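The bottom-up escalation described above can be sketched as a simple placement loop. A task climbs the sublayers until a server's limit accommodates it, and the result then retraces the chain downwards. The tier names, the complexity limits, and their units in this Python sketch are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    max_complexity: float  # hypothetical computational limit, arbitrary units


def offload_route(complexity: float, tiers: list[Tier]) -> list[str]:
    """Bottom-up offloading: escalate until a tier can handle the task.

    Returns the full route, i.e., the uplink to the executing tier followed by
    the downlink that carries the result back towards the Edge device.
    """
    for i, tier in enumerate(tiers):
        if complexity <= tier.max_complexity:
            uplink = [t.name for t in tiers[: i + 1]]
            return uplink + uplink[-2::-1]  # results retrace the chain downwards
    raise ValueError("no tier can execute this task")


TIERS = [
    Tier("BS-side Edge server", 1.0),
    Tier("upper-sublayer Edge server", 10.0),
    Tier("Cloud", float("inf")),
]
print(offload_route(5.0, TIERS))
# ['BS-side Edge server', 'upper-sublayer Edge server', 'BS-side Edge server']
```

The sketch places each task greedily at the lowest capable tier, which keeps simple tasks closest to the device. It does not model load balancing among servers in the same sublayer.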

The Edge architecture makes it possible to move delay-intolerant applications closer to the computation demanders, e.g., Augmented/Virtual/Mixed Reality (AR/VR/MR) gaming and cellular offloading, in line with the proximity-driven nature of the paradigm [18]. Generally, proximity between the Edge and the user's equipment can be viewed in two ways: physical and logical.

Physical proximity refers to the actual distance between the data-computation segment and the user's equipment. Logical proximity refers to the number of hops between the Edge computing segment and the user's equipment. A long multi-hop route can cause congestion and therefore increased latency. To avoid the resulting queuing delays, logical proximity should limit such events at the backhaul of the computing network.
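Elementary queueing theory offers a rough sketch of why long multi-hop routes inflate latency. If each hop is modeled as an independent M/M/1 queue (a simplifying assumption), the mean time a packet spends at a hop is 1/(μ − λ), where λ is the arrival rate and μ the service rate. This delay grows sharply as λ approaches μ. The per-hop rates and hop counts below are hypothetical.

```python
def mm1_sojourn_ms(arrival_rate: float, service_rate: float) -> float:
    """Mean time (queueing + service) a packet spends at one M/M/1 hop.

    Rates are in packets per millisecond; the queue is stable only when
    arrival_rate < service_rate.
    """
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival rate must stay below service rate")
    return 1.0 / (service_rate - arrival_rate)


def path_delay_ms(hops: list[tuple[float, float]]) -> float:
    """Sum of per-hop delays, assuming independent M/M/1 hops (a simplification)."""
    return sum(mm1_sojourn_ms(lam, mu) for lam, mu in hops)


edge_path = [(0.5, 1.0)] * 2    # 2 lightly loaded hops
cloud_path = [(0.8, 1.0)] * 8   # 8 more congested hops towards a distant site
print(path_delay_ms(edge_path))   # 4.0
print(path_delay_ms(cloud_path))  # roughly 40
```

Both the number of hops and the load on each hop multiply the delay. This is the effect that limiting logical distance at the backhaul is meant to contain.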

Although the conventional Cloud paradigm struggles to meet high demands for low energy consumption, real-time operation, and, in particular, security and privacy, the Edge paradigm is not considered a substitute for it. Rather, the Edge and Cloud paradigms complement each other in many situations, cooperating in areas such as autonomous cars, the industrial Internet, and smart cities, offices, and homes. Importantly, collaboration between the Edge and Cloud paradigms offers many opportunities to reduce latency in applications such as autonomous cars, enterprise network assets, and IoT data analysis [19].

Edge operation relies on capabilities supported by several actors. Technologies such as cellular LTE, short-range Bluetooth Low Energy (BLE), Zigbee, and Wi-Fi provide connectivity between endpoint equipment and the nodes of the Edge computing layer. The access modality matters greatly because it determines the bandwidth available to endpoint equipment, the connection range, and the types of devices supported [20].

3. Fog-Related Aspects

Access gateways and set-top boxes are examples of end devices that can host Fog computing services. The Fog infrastructure allows applications to run close to where activities are observed and to handle the large volumes of data generated by people, processes, and things. Generating automated feedback is a key value of the Fog computing concept [21]. Customers benefit from both Fog and Cloud services, such as storage, computation, application services, and data provision. In general, Fog can be distinguished from the Cloud by its proximity to clients, its support for mobility, and its dense geographical distribution [22], while it keeps Cloud functionality distributed and transparent to the user.

According to NIST, “Fog computing is a layered model for enabling ubiquitous access to a shared continuum of scalable computing resources. The model facilitates the deployment of distributed, latency-aware applications and services, and consists of fog nodes (physical or virtual), residing between smart end-devices and centralized (cloud) services. The fog nodes are context aware and support common data management and communication system. They can be organized in clusters – either vertically (to support isolation), horizontally (to support federation), or relative to fog nodes’ latency-distance to the smart end-devices” [23]. Fog computing is generally considered an extension of Cloud computing. The Cloud concentrates computation in a central system in the upper layers, whereas the Fog reduces the load at the Edge layer, particularly at entry points and on resource-constrained devices [24].

The terms “Fog computing” and “Edge computing” both refer to hosting and performing tasks at the network end by Fog devices instead of on a centralized Cloud platform. This means placing certain processes, intelligence, and resources at the Cloud's Edge rather than using and storing them in the Cloud. Fog computing is expected to become a major player in the Internet of Everything (IoE) [25] and in its subset, the Internet of Wearable Things (IoWT) [26].

Fog architecture deliberately places communication, storage, control, decision-making, and computing close to the Edge of the network. Execution and data storage happen there to overcome the limitations of the current infrastructure for mission-critical use cases, e.g., those with high data density. The OpenFog Consortium defines Fog computing as “a horizontal, system-level architecture that distributes computing, storage, control, and networking functions closer to the users along a Cloud-to-thing continuum” [27]. Another definition describes Fog as “an alternative to Cloud computing that puts a substantial amount of storage, communication, control, configuration, measurement, and management at the Edge of a network, rather than establishing channels for the centralized Cloud storage and use, which extends the traditional Cloud computing paradigm to the network Edge” [28].

The deployment of Fog computing systems resembles that of Edge systems, but it is dedicated to applications that require more processing power while still running close to the user. Consequently, Fog devices are heterogeneous. This raises the question of whether Fog computing can overcome the new challenges of managing resources and solving problems in such a heterogeneous setup. Investigating related areas such as simulation, resource management, deployment issues, services, and fault tolerance is therefore a basic requirement [29].

Fog computing architecture still lacks standardization, and until recently there was no definitive architecture with agreed criteria. Nevertheless, many research articles have proposed their own versions of the Fog computing architecture. This section outlines the components that make up the general architecture [29].

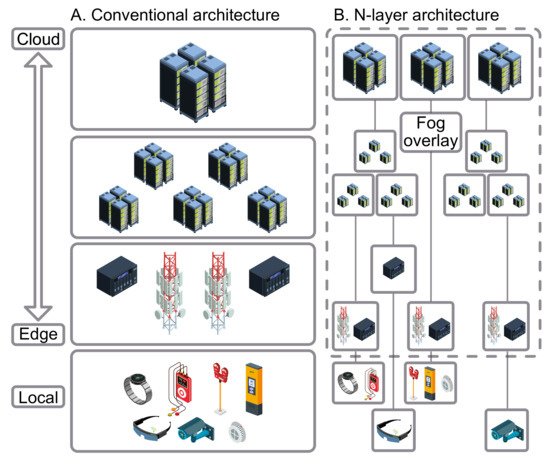

Most research on Fog computing represents its architecture as a three-layer model [30] (see Figure 2). In addition, the OpenFog Consortium established a detailed N-layer reference architecture [31], which is regarded as an improvement on the three-layer model.

Figure 2. Most commonly analyzed computing architectures.

Fog computing is considered a non-trivial extension of Cloud computing in the Cloud-to-Things setup. It introduces a middle layer (the Fog layer) that bridges the gap between local end devices and the Cloud infrastructure [32].

As in the Cloud, the Fog layer uses virtualization technologies locally. However, given the limited resources available, container-based virtualization is more appropriate [29]. The Fog nodes in this layer are also numerous. The OpenFog Consortium defines a Fog node as “the physical and logical network element that implements Fog computing services” [33]. Fog nodes can compute, transmit, and temporarily store data, and they are located between the Cloud and end-user devices [34].
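The three Fog node capabilities named above can be illustrated together: temporary storage, local computation, and transmission. The `FogNode` class below is a hypothetical Python sketch, not part of the OpenFog architecture. It buffers sensor readings, reduces each full batch to a summary, and forwards only that summary; the `uplink` list stands in for messages sent to the Cloud.

```python
from statistics import mean


class FogNode:
    """Hypothetical Fog node: buffers readings, computes locally, forwards summaries."""

    def __init__(self, batch_size: int) -> None:
        self.batch_size = batch_size
        self.buffer: list[float] = []   # temporary storage on the node
        self.uplink: list[dict] = []    # stands in for messages sent to the Cloud

    def ingest(self, reading: float) -> None:
        self.buffer.append(reading)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        batch, self.buffer = self.buffer, []
        # Local computation: reduce the batch to a compact summary
        summary = {"count": len(batch), "mean": mean(batch), "max": max(batch)}
        self.uplink.append(summary)  # transmission towards the Cloud


node = FogNode(batch_size=3)
for value in (21.0, 22.0, 26.0):
    node.ingest(value)
print(node.uplink)  # [{'count': 3, 'mean': 23.0, 'max': 26.0}]
```

Forwarding one summary instead of every raw reading reduces upstream traffic, which is one of the motivations for placing computation in the Fog layer.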

The main drivers of the ongoing migration from Cloud to Fog computing are the computational load and the need to bring Cloud capabilities closer to the Edge. The wide variety of applications and IoT services gives Fog computing several defining characteristics [35]. The foremost is the extreme heterogeneity of the ecosystem, which delivers services via the Fog between the centralized Cloud and the devices at the Edge, such as end-user applications. Fog computing servers are heterogeneous and consist of hierarchically structured building blocks deployed at distributed locations.

At the same time, the entire system is highly geographically distributed. In practice, Fog computing models rely on widely distributed deployments to provide Quality of Service (QoS) to both mobile and stationary user devices [36]. Fog nodes and sensors are geographically distributed across diverse environments, for instance, to monitor chemical vats, healthcare systems, and the climate.

Cognition is the ability to respond effectively to client-centric objectives. Analytics in a Fog-based data gateway make the system more aware of customers' requirements. They also help determine where best to perform transmission, storage, and control functions along the Cloud-to-Things continuum. Because applications run close to user devices, customers get a better experience, with more precise and responsive handling of their needs [37].

4. Differences and Similarities of Paradigms

Fog and Edge paradigms share similar main goals, which set them apart from the Cloud. Both bring Cloud capabilities closer to users and offer lower-latency services. On the one hand, they ensure that highly delay-sensitive applications achieve the required QoS; on the other hand, they lower the overall network load [38]. Differentiating and comparing Cloud, Edge, and Fog computing is not straightforward. This subsection therefore examines the features that the paradigms share and those that distinguish them [39]. The differences and similarities of the paradigms are summarized in Table 1.

Table 1. Comparison of different computing paradigms.

| Attributes | Cloud Computing | Edge Computing | Fog Computing |
|---|---|---|---|
| Architecture | Centralized | Distributed | Distributed |
| Expected Task Execution Time¹ | High | High-Medium | Low |
| Provided Services | Universal services | Often uses mobile networks | Vital for a particular domain and distributed |
| Security | Centralized (guaranteed by the Cloud provider) | Centralized (guaranteed by the cellular operator) | Mixed (depending on the implementation) |
| Energy Consumption | High | Low | Varying but higher than for Edge |
| Location Identification | No | Yes | Yes |
| Main Providers | Amazon and Google | Cellular network providers | Proprietary |
| Mobility | Inadequate | Offered with limited support | Supported |
| Real-Time Interaction | Available | Available | Available |
| Latency | High | Low | Varying but higher than for Edge |
| Bandwidth Cost | High | Low | Low |
| Storage and Computation Capacity | High | Very limited | Varying |
| Scalability | Average | High | High |
| Overall Usage | Computation distribution for huge data (Google MapReduce), app virtualization, scalable data storage | Traffic control, data caching, wearable applications | CCTV surveillance, real-time subsurface imaging, IoT, smart cities, Vehicle-to-Everything (V2X) |

¹ Edge may provide better results, but only for computationally simple tasks, where it benefits from low communication latency. Fog, by contrast, provides higher computational speed while maintaining low latency (e.g., for AR/VR applications). Execution in the Cloud would always provide the worst results, because the computing unit is geographically distant from the user and therefore incurs far greater communication overhead than geographically closer locations.
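The trade-off in the table note can be expressed as a simple cost model: completion time equals the round-trip communication latency plus the workload divided by the site's processing speed. The Python sketch below uses hypothetical latencies and speeds, chosen only to mirror the qualitative ordering in Table 1; they are not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    rtt_ms: float      # hypothetical round-trip network latency to the user
    ops_per_ms: float  # hypothetical processing speed at the site


def completion_ms(site: Site, workload_ops: float) -> float:
    # Communication overhead plus computation time at the chosen site
    return site.rtt_ms + workload_ops / site.ops_per_ms


def best_site(sites: list[Site], workload_ops: float) -> str:
    return min(sites, key=lambda s: completion_ms(s, workload_ops)).name


SITES = [
    Site("Edge", rtt_ms=5.0, ops_per_ms=10.0),
    Site("Fog", rtt_ms=15.0, ops_per_ms=100.0),
    Site("Cloud", rtt_ms=120.0, ops_per_ms=1000.0),
]
print(best_site(SITES, 10))    # Edge: 6.0 ms vs 15.1 ms (Fog) vs 120.01 ms (Cloud)
print(best_site(SITES, 1000))  # Fog: 25.0 ms vs 105.0 ms (Edge) vs 121.0 ms (Cloud)
```

With these parameters, light tasks favor the Edge and heavy tasks favor the Fog, while the Cloud's large communication overhead makes it the slowest option for both workloads. Different parameter choices would shift the crossover point.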

An overview of each paradigm is essential for addressing the security and privacy aspects of Cloud, Edge, and Fog computing. This subsection has described the fundamental features that make each paradigm distinct.

As a centralized architecture and an enabler of the IoT, the Cloud has several shortcomings, such as high latency, lack of location awareness, and long computation time. Researchers therefore proposed the Edge and Fog paradigms to lessen the burden on Cloud systems and resolve these issues.

Finally, the Edge paradigm has advantages over the Cloud paradigm, especially regarding security and privacy. The Fog paradigm, built from Fog nodes, is in turn regarded as an outstanding architecture designed specifically to help IoT appliances deliver improved services and support.

References

1. Nieuwenhuis, L.J.; Ehrenhard, M.L.; Prause, L. The Shift to Cloud Computing: The Impact of Disruptive Technology on the Enterprise Software Business Ecosystem. Technol. Forecast. Soc. Chang. 2018, 129, 308–313.

2. NIST Special Publication 800-145: Definition of Cloud Computing Recommendations of the National Institute of Standards and Technology. Available online: https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nistspecialpublication800-145.pdf (accessed on 21 December 2021).

3. Five Characteristics of Cloud Computing. Available online: https://www.controleng.com/articles/five-characteristics-of-cloud-computing/ (accessed on 21 December 2021).

4. Application Management in the Cloud. Available online: http://www.sciencedirect.com/science/article/pii/B9780128040188000048 (accessed on 21 December 2021).

5. Cloud Computing. Available online: https://masterworkshop.skillport.com/skillportfe/main.action?assetid=47045 (accessed on 21 December 2021).

6. Spatharakis, D.; Dimolitsas, I.; Dechouniotis, D.; Papathanail, G.; Fotoglou, I.; Papadimitriou, P.; Papavassiliou, S. A Scalable Edge Computing Architecture Enabling Smart Offloading for Location Based Services. Pervasive Mob. Comput. 2020, 67, 101217.

7. Jadeja, Y.; Modi, K. Cloud Computing—Concepts, Architecture and Challenges. In Proceedings of the International Conference on Computing, Electronics and Electrical Technologies (ICCEET), Nagercoil, India, 21–22 March 2012; pp. 877–880.

8. Ometov, A.; Chukhno, O.; Chukhno, N.; Nurmi, J.; Lohan, E.S. When Wearable Technology Meets Computing in Future Networks: A Road Ahead. In Proceedings of the 18th ACM International Conference on Computing Frontiers, Virtual Event, Italy, 11–13 May 2021; pp. 185–190.

9. Satyanarayanan, M. Edge Computing. Computer 2017, 50, 36–38.

10. Edge Computing Learning Objectives. Available online: https://www.cloudflare.com/en-gb/learning/serverless/glossary/what-is-edge-computing/ (accessed on 21 December 2021).

11. Edge Computing—What Is Edge Computing? Available online: https://stlpartners.com/edge-computing/what-is-edge-computing/ (accessed on 21 December 2021).

12. Gezer, V.; Um, J.; Ruskowski, M. An Extensible Edge Computing Architecture: Definition, Requirements and Enablers. In Proceedings of the UBICOMM, Barcelona, Spain, 12–16 November 2017.

13. Mäkitalo, N.; Flores-Martin, D.; Berrocal, J.; Garcia-Alonso, J.; Ihantola, P.; Ometov, A.; Murillo, J.M.; Mikkonen, T. The Internet of Bodies Needs a Human Data Model. IEEE Internet Comput. 2020, 24, 28–37.

14. Sarkar, S.; Misra, S. Theoretical Modelling of Fog Computing: A Green Computing Paradigm to Support IoT Applications. IET Netw. 2016, 5, 23–29.

15. Mukherjee, M.; Matam, R.; Shu, L.; Maglaras, L.; Ferrag, M.A.; Choudhury, N.; Kumar, V. Security and Privacy in Fog Computing: Challenges. IEEE Access 2017, 5, 19293–19304.

16. Ometov, A.; Olshannikova, E.; Masek, P.; Olsson, T.; Hosek, J.; Andreev, S.; Koucheryavy, Y. Dynamic Trust Associations over Socially-Aware D2D Technology: A Practical Implementation Perspective. IEEE Access 2016, 4, 7692–7702.

17. Xiao, Y.; Jia, Y.; Liu, C.; Cheng, X.; Yu, J.; Lv, W. Edge Computing Security: State of the Art and Challenges. Proc. IEEE 2019, 107, 1608–1631.

18. Kozyrev, D.; Ometov, A.; Moltchanov, D.; Rykov, V.; Efrosinin, D.; Milovanova, T.; Andreev, S.; Koucheryavy, Y. Mobility-Centric Analysis of Communication Offloading for Heterogeneous Internet of Things Devices. Wirel. Commun. Mob. Comput. 2018, 2018, 3761075.

19. Jiang, C.; Cheng, X.; Gao, H.; Zhou, X.; Wan, J. Toward Computation Offloading in Edge Computing: A Survey. IEEE Access 2019, 7, 131543–131558.

20. Dolui, K.; Datta, S.K. Comparison of Edge Computing Implementations: Fog Computing, Cloudlet and Mobile Edge Computing. In Proceedings of the Global Internet of Things Summit (GIoTS), Geneva, Switzerland, 6–9 June 2017; pp. 1–6.

21. Mäkitalo, N.; Aaltonen, T.; Raatikainen, M.; Ometov, A.; Andreev, S.; Koucheryavy, Y.; Mikkonen, T. Action-Oriented Programming Model: Collective Executions and Interactions in the Fog. J. Syst. Softw. 2019, 157, 110391.

22. Stojmenovic, I.; Wen, S.; Huang, X.; Luan, H. An Overview of Fog Computing and Its Security Issues. Concurr. Comput. Pract. Exp. 2016, 28, 2991–3005.

23. NIST Special Publication 500-325: Fog Computing Conceptual Model Recommendations of the National Institute of Standards and Technology. Available online: https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.500-325.pdf (accessed on 21 December 2021).

24. Ometov, A.; Shubina, V.; Klus, L.; Skibińska, J.; Saafi, S.; Pascacio, P.; Flueratoru, L.; Gaibor, D.Q.; Chukhno, N.; Chukhno, O.; et al. A Survey on Wearable Technology: History, State-of-the-Art and Current Challenges. Comput. Netw. 2021, 193, 108074.

25. Mahmood, Z.; Ramachandran, M. Fog Computing: Concepts, Principles and Related Paradigms. In Fog Computing; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–21.

26. Qaim, W.B.; Ometov, A.; Molinaro, A.; Lener, I.; Campolo, C.; Lohan, E.S.; Nurmi, J. Towards Energy Efficiency in the Internet of Wearable Things: A Systematic Review. IEEE Access 2020, 8, 175412–175435.

27. IEEE Std 1934-2018; IEEE Standard for Adoption of OpenFog Reference Architecture for Fog Computing; IEEE: New York, NY, USA, 2018; pp. 1–176.

28. Peng, M.; Yan, S.; Zhang, K.; Wang, C. Fog-Computing-based Radio Access Networks: Issues and Challenges. IEEE Netw. 2016, 30, 46–53.

29. Naha, R.K.; Garg, S.; Georgakopoulos, D.; Jayaraman, P.P.; Gao, L.; Xiang, Y.; Ranjan, R. Fog Computing: Survey of Trends, Architectures, Requirements, and Research Directions. IEEE Access 2018, 6, 47980–48009.

30. Lin, J.; Yu, W.; Zhang, N.; Yang, X.; Zhang, H.; Zhao, W. A Survey on Internet of Things: Architecture, Enabling Technologies, Security and Privacy, and Applications. IEEE Internet Things J. 2017, 4, 1125–1142.

31. OpenFog Consortium. OpenFog Reference Architecture for Fog Computing; OpenFog Consortium: Fremont, CA, USA, 2017; pp. 1–162.

32. Hu, P.; Dhelim, S.; Ning, H.; Qiu, T. Survey on Fog Computing: Architecture, Key Technologies, Applications and Open Issues. J. Netw. Comput. Appl. 2017, 98, 27–42.

33. OpenFog Consortium Architecture Working Group. OpenFog Architecture Overview. White Pap. OPFWP001 2016, 216, 35.

34. De Donno, M.; Tange, K.; Dragoni, N. Foundations and Evolution of Modern Computing Paradigms: Cloud, IoT, Edge, and Fog. IEEE Access 2019, 7, 150936–150948.

35. Fog Computing: An Overview of Big IoT Data Analytics. Available online: https://www.hindawi.com/journals/wcmc/2018/7157192/#references (accessed on 21 December 2021).

36. Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog Computing and Its Role in the Internet of Things. In Proceedings of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012; pp. 13–16.

37. Chiang, M.; Zhang, T. Fog and IoT: An Overview of Research Opportunities. IEEE Internet Things J. 2016, 3, 854–864.

38. Khan, S.U. The Curious Case of Distributed Systems and Continuous Computing. IT Prof. 2016, 18, 4–7.

39. Anawar, M.R.; Wang, S.; Azam Zia, M.; Jadoon, A.K.; Akram, U.; Raza, S. Fog Computing: An Overview of Big IoT Data Analytics. Wirel. Commun. Mob. Comput. 2018, 2018, 7157192.


Subjects: Computer Science, Information Systems


Update Date: 25 Feb 2022
