| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Agnieszka Kuras | + 2940 word(s) | 2940 | 2021-09-07 05:38:44 |
| 2 | Lindsay Dong | Meta information modification | 2940 | 2021-09-08 03:09:39 |


Urban land cover consists of highly complex physical materials and surfaces that are continually subject to anthropogenic impacts. Urban surfaces form a mosaic of seminatural surfaces, such as grass, trees, bare soil, and water bodies, and human-made materials of diverse age and composition. These include asphalt, concrete, and roof tiles, which are relevant to studies of energy conservation and fire danger, and impervious surfaces in general, which are relevant to studies of urban flooding and pollution. The complexity of urban analysis also depends on the chosen scale and the purpose of the analysis.

1. Introduction

2. Classified Urban Land Cover Classes

2.1. Buildings

2.2. Vegetation

2.3. Roads

2.4. Miscellaneous

3. Key Characteristics of Hyperspectral and Lidar Data

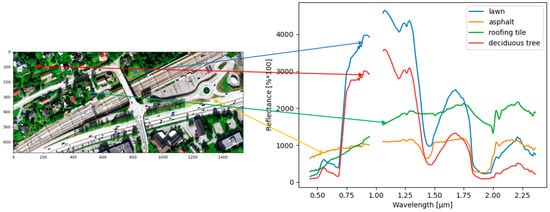

3.1. Hyperspectral (HS) Images

3.1.1. Spectral Features

3.1.2. Spatial Information

3.2. Lidar Data

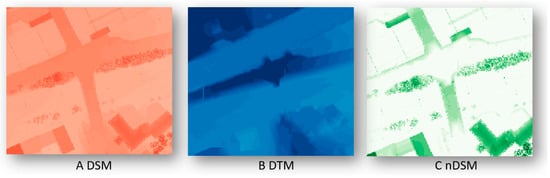

3.2.1. Height Features and Their Derivatives (HD)

3.2.2. Intensity Data

3.2.3. Multiple-Return

3.2.4. Waveform-Derived Features

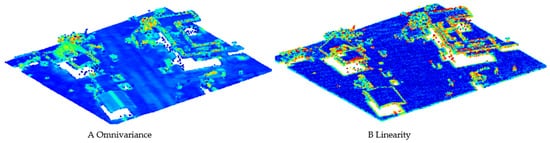

3.2.5. Eigenvalue-Based Features

3.3. Common Features—HS and Lidar

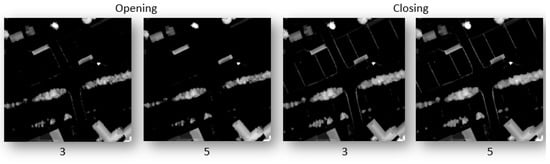

3.3.1. Textural Features

3.3.2. Morphological Features

3.4. Hyperspectral-Lidar Data Fusion

Hyperspectral-lidar fusion (HL-Fusion) combines the spectral-contextual information obtained by an HS sensor with the spectral-spatial-geometrical information obtained by a lidar scanner. Although the active (lidar) and passive (HS) sensors rely on different physical measurement principles, the features derived from both can be combined. The two sensors overlap in the reflective spectral range, intersecting in either the VIS (532 nm) or the SWIR (1064 nm, 1550 nm) wavelength region. Less commonly, multispectral lidar systems are used. These operate at several of the three common wavelengths, which allows materials or objects to be identified by their spectral properties [100]. Prototype hyperspectral lidar systems are also being developed under laboratory conditions [50][101][102].
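As a rough illustration of the two ideas in this paragraph (shared wavelengths and combinable features), the Python sketch below first checks which of the common lidar wavelengths fall within an HS sensor's band set. It then performs a simple feature-level fusion by stacking HS bands with lidar-derived rasters and standardizing each feature. It makes several assumptions. It uses NumPy. It assumes the HS cube and lidar rasters are already co-registered on the same pixel grid. The 10 nm matching tolerance and the function names `nearest_hs_bands` and `stack_features` are hypothetical. Layer stacking is only one of many HL-Fusion strategies and is not presented as the method of the cited works.

```python
import numpy as np

# Common lidar laser wavelengths mentioned in the text (nm).
LIDAR_WAVELENGTHS_NM = (532.0, 1064.0, 1550.0)


def nearest_hs_bands(band_centers_nm, lidar_wavelengths_nm=LIDAR_WAVELENGTHS_NM,
                     max_gap_nm=10.0):
    """Map each lidar wavelength to the index of the closest HS band center.

    Wavelengths with no HS band within max_gap_nm (a hypothetical tolerance)
    are left out, i.e. the HS sensor does not overlap that laser line.
    """
    centers = np.asarray(band_centers_nm, dtype=float)
    matches = {}
    for wl in lidar_wavelengths_nm:
        idx = int(np.argmin(np.abs(centers - wl)))  # closest band center
        if abs(centers[idx] - wl) <= max_gap_nm:
            matches[wl] = idx
    return matches


def stack_features(hs_cube, lidar_features):
    """Feature-level fusion by layer stacking (one simple HL-Fusion strategy).

    hs_cube:        (rows, cols, bands) HS reflectance
    lidar_features: (rows, cols, k) lidar-derived rasters, e.g. height above
                    ground and intensity, assumed co-registered to the HS grid
    Returns a (rows * cols, bands + k) matrix, one fused vector per pixel,
    with every feature z-score standardized.
    """
    if hs_cube.shape[:2] != lidar_features.shape[:2]:
        raise ValueError("HS and lidar rasters must share the same pixel grid")
    fused = np.concatenate([hs_cube, lidar_features], axis=-1).astype(float)
    flat = fused.reshape(-1, fused.shape[-1])
    std = flat.std(axis=0)
    std[std == 0] = 1.0  # constant features: avoid division by zero
    return (flat - flat.mean(axis=0)) / std


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    centers = np.linspace(400, 2500, 211)      # hypothetical 10 nm sampling
    print(nearest_hs_bands(centers))
    hs = rng.random((4, 5, 3))                 # toy 3-band HS cube
    lidar = rng.random((4, 5, 2)) * 30.0       # toy height/intensity rasters
    print(stack_features(hs, lidar).shape)     # (20, 5)
```

Consider a hypothetical sensor sampling 400–2500 nm every 10 nm. For that sensor, all three laser wavelengths find a matching band. A VNIR-only sensor covering 400–1000 nm would match only 532 nm. Standardizing each feature keeps heights in metres from dominating reflectance values in [0, 1] when the fused vectors are passed to a scale-sensitive classifier.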

References

- United Nations. 2018 Year in Review; United Nations: New York, NY, USA, 2018.

- Chen, F.; Kusaka, H.; Bornstein, R.; Ching, J.; Grimmond, C.S.B.; Grossman-Clarke, S.; Loridan, T.; Manning, K.W.; Martilli, A.; Miao, S. The integrated WRF/urban modelling system: Development, evaluation, and applications to urban environmental problems. Int. J. Climatol. 2011, 31, 273–288.

- Lee, J.H.; Bang, K.W. Characterization of urban stormwater runoff. Water Res. 2000, 34, 1773–1780.

- Forster, B.C. Coefficient of variation as a measure of urban spatial attributes, using SPOT HRV and Landsat TM data. Int. J. Remote Sens. 1993, 14, 2403–2409.

- Sadler, G.J.; Barnsley, M.J.; Barr, S.L. Information extraction from remotely-sensed images for urban land analysis. In Proceedings of the 2nd European GIS Conference (EGIS’91), Brussels, Belgium, 2–5 April 1991; pp. 955–964.

- Carlson, T. Applications of remote sensing to urban problems. Remote Sens. Environ. 2003, 86, 273–274.

- Coutts, A.M.; Harris, R.J.; Phan, T.; Livesley, S.J.; Williams, N.S.G.; Tapper, N.J. Thermal infrared remote sensing of urban heat: Hotspots, vegetation, and an assessment of techniques for use in urban planning. Remote Sens. Environ. 2016, 186, 637–651.

- Huo, L.Z.; Silva, C.A.; Klauberg, C.; Mohan, M.; Zhao, L.J.; Tang, P.; Hudak, A.T. Supervised spatial classification of multispectral LiDAR data in urban areas. PLoS ONE 2018, 13.

- Jürgens, C. Urban and suburban growth assessment with remote sensing. In Proceedings of the OICC 7th International Seminar on GIS Applications in Planning and Sustainable Development, Cairo, Egypt, 13–15 February 2001; pp. 13–15.

- Hepinstall, J.A.; Alberti, M.; Marzluff, J.M. Predicting land cover change and avian community responses in rapidly urbanizing environments. Landsc. Ecol. 2008, 23, 1257–1276.

- Batty, M.; Longley, P. Fractal Cities: A Geometry of Form and Function; Academic Press: London, UK; San Diego, CA, USA, 1994.

- Ben-Dor, E.; Levin, N.; Saaroni, H. A spectral based recognition of the urban environment using the visible and near-infrared spectral region (0.4-1.1 µm). A case study over Tel-Aviv, Israel. Int. J. Remote Sens. 2001, 22, 2193–2218.

- Herold, M.; Gardner, M.E.; Roberts, D.A. Spectral resolution requirements for mapping urban areas. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1907–1919.

- Brenner, A.R.; Roessing, L. Radar Imaging of Urban Areas by Means of Very High-Resolution SAR and Interferometric SAR. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2971–2982.

- Soergel, U. Review of Radar Remote Sensing on Urban Areas. In Radar Remote Sensing of Urban Areas; Soergel, U., Ed.; Springer: Berlin, Germany, 2010; pp. 1–47.

- Ghamisi, P.; Höfle, B.; Zhu, X.X. Hyperspectral and LiDAR data fusion using extinction profiles and deep convolutional neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

- Debes, C.; Merentitis, A.; Heremans, R.; Hahn, J.; Frangiadakis, N.; Kasteren, T.v.; Liao, W.; Bellens, R.; Pizurica, A.; Gautama, S.; et al. Hyperspectral and LiDAR data fusion: Outcome of the 2013 GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 550.

- Dalponte, M.; Bruzzone, L.; Gianelle, D. Fusion of hyperspectral and LiDAR remote sensing data for classification of complex forest areas. IEEE Trans. Geosci. Remote Sens. 2008. Available online: https://rslab.disi.unitn.it/papers/R59-TGARS-Dalponte.pdf (accessed on 2 May 2021).

- Sohn, H.-G.; Yun, K.-H.; Kim, G.-H.; Park, H.S. Correction of building height effect using LIDAR and GPS. In Proceedings of the International Conference on High Performance Computing and Communications, Sorrento, Italy, 21–23 September 2005; pp. 1087–1095.

- Guislain, M.; Digne, J.; Chaine, R.; Kudelski, D.; Lefebvre-Albaret, P. Detecting and correcting shadows in urban point clouds and image collections. In Proceedings of the Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 537–545.

- George, G.E. Cloud Shadow Detection and Removal from Aerial Photo Mosaics Using Light Detection and Ranging (LIDAR) Reflectance Images; The University of Southern Mississippi: Hattiesburg, MS, USA, 2011.

- Brell, M.; Segl, K.; Guanter, L.; Bookhagen, B. Hyperspectral and Lidar Intensity Data Fusion: A Framework for the Rigorous Correction of Illumination, Anisotropic Effects, and Cross Calibration. IEEE Trans. Geosci. Remote Sens. 2017. Available online: https://www.researchgate.net/publication/313687025_Hyperspectral_and_Lidar_Intensity_Data_Fusion_A_Framework_for_the_Rigorous_Correction_of_Illumination_Anisotropic_Effects_and_Cross_Calibration (accessed on 2 May 2021).

- Hui, L.; Di, L.; Xianfeng, H.; Deren, L. Laser intensity used in classification of LiDAR point cloud data. In Proceedings of the International Symposium on Geoscience and Remote Sensing, Boston, MA, USA, 8–11 July 2008.

- Liu, W.; Yamazaki, F. Object-based shadow extraction and correction of high-resolution optical satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1296–1302.

- Zhou, W.; Huang, G.; Troy, A.; Cadenasso, M.L. Object-based land cover classification of shaded areas in high spatial resolution imagery of urban areas: A comparison study. Remote Sens. Environ. 2009, 113, 1769–1777.

- Priem, F.; Canters, F. Synergistic use of LiDAR and APEX hyperspectral data for high-resolution urban land cover mapping. Remote Sens. 2016, 8, 787.

- Li, H.; Ghamisi, P.; Soergel, U.; Zhu, X.X. Hyperspectral and LiDAR fusion using deep three-stream convolutional neural networks. Remote Sens. 2018, 10, 1649.

- Alonzo, M.; Bookhagen, B.; Roberts, D.A. Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens. Environ. 2014, 148, 70–83.

- Kokkas, N.; Dowman, I. Fusion of airborne optical and LiDAR data for automated building reconstruction. In Proceedings of the ASPRS Annual Conference, Reno, NV, USA, 1–5 May 2006.

- Torabzadeh, H.; Morsdorf, F.; Schaepman, M.E. Fusion of imaging spectroscopy and airborne laser scanning data for characterization of forest ecosystems. ISPRS J. Photogramm. Remote Sens. 2014, 97, 25–35.

- Medina, M.A. Effects of shingle absorptivity, radiant barrier emissivity, attic ventilation flowrate, and roof slope on the performance of radiant barriers. Int. J. Energy Res. 2000, 24, 665–678.

- Ridd, M.K. Exploring a V-I-S-(vegetation—impervious surface-soil) model for urban ecosystem analysis through remote sensing: Comparative anatomy for cities. Int. J. Remote Sens. 1995, 16, 2165–2185.

- Haala, N.; Brenner, C. Extraction of buildings and trees in urban environments. ISPRS J. Photogramm. Remote Sens. 1999, 54, 130–137.

- Shirowzhan, S.; Trinder, J. Building classification from LiDAR data for spatial-temporal assessment of 3D urban developments. Procedia Eng. 2017, 180, 1453–1461.

- Zhou, Z.; Gong, J. Automated residential building detection from airborne LiDAR data with deep neural networks. Adv. Eng. Inform. 2018, 36, 229–241.

- Shajahan, D.A.; Nayel, V.; Muthuganapathy, R. Roof classification from 3-D LiDAR point clouds using multiview CNN with self-attention. IEEE Geosci. Remote Sens. Lett. 2019, 99, 1–5.

- Matikainen, L.; Hyyppa, J.; Hyyppa, H. Automatic detection of buildings from laser scanner data for map updating. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Dresden, Germany, 8–10 October 2003.

- Hug, C.; Wehr, A. Detecting and identifying topographic objects in imaging laser altimetry data. In Proceedings of the International Archives of the Photogrammetry and Remote Sensing, Stuttgart, Germany, 17–19 September 1997; pp. 16–29.

- Maas, H.G. The potential of height texture measures for the segmentation of airborne laserscanner data. In Proceedings of the 4th International Airborne Remote Sensing Conference and Exhibition and 21st Canadian Symposium on Remote Sensing, Ottawa, ON, Canada, 21–24 June 1999; pp. 154–161.

- Tóvári, D.; Vögtle, T. Object classification in laserscanning data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.—ISPRS Arch. 2012, 36. Available online: https://www.researchgate.net/publication/228962142_Object_Classification_in_LaserScanning_Data (accessed on 8 May 2021).

- Galvanin, E.A.; Poz, A.P.D. Extraction of building roof contours from LiDAR data using a markov-random-field-based approach. IEEE Trans. Geosci. Remote Sens. 2012, 50, 981–987.

- Vosselman, G. Slope based filtering of laser altimetry data. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Amsterdam, The Netherlands, 16–22 July 2000; pp. 935–942.

- Lohmann, P.; Koch, A.; Schaeffer, M. Approaches to the filtering of laser scanner data. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Amsterdam, The Netherlands, 16–22 July 2000.

- Tarsha-Kurdi, F.; Landes, T.; Grussenmeyer, P.; Smigiel, E. New approach for automatic detection of buildings in airborne laser scanner data using first echo only. In Proceedings of the ISPRS Commission III Symposium, Photogrammetric Computer Vision, Bonn, Germany, 20–22 September 2006; pp. 25–30.

- Rutzinger, M.; Höfle, B.; Pfeifer, N. Detection of high urban vegetation with airborne laser scanning data. In Proceedings of the Forestsat, Montpellier, France, 5–7 November 2007; pp. 1–5.

- Morsdorf, F.; Nichol, C.; Malthus, T.; Woodhouse, I.H. Assessing forest structural and physiological information content of multi-spectral LiDAR waveforms by radiative transfer modelling. Remote Sens. Environ. 2009, 113, 2152–2163.

- Wang, C.K.; Tseng, Y.H.; Chu, H.J. Airborne dual-wavelength LiDAR data for classifying land cover. Remote Sens. 2014, 6, 700–715.

- Wichmann, V.; Bremer, M.; Lindenberger, J.; Rutzinger, M.; Georges, C.; Petrini-Monteferri, F. Evaluating the potential of multispectral airborne LiDAR for topographic mapping and land cover classification. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, La Grande Motte, France, 28 September–3 October 2015.

- Puttonen, E.; Hakala, T.; Nevalainen, O.; Kaasalainen, S.; Krooks, A.; Karjalainen, M.; Anttila, K. Artificial target detection with a hyperspectral LiDAR over 26-h measurement. Opt. Eng. 2015. Available online: https://www.spiedigitallibrary.org/journals/optical-engineering/volume-54/issue-01/013105/Artificial-target-detection-with-a-hyperspectral-LiDAR-over-26-h/10.1117/1.OE.54.1.013105.full?SSO=1 (accessed on 8 May 2021).

- Ghaderpour, E.; Abbes, A.B.; Rhif, M.; Pagiatakis, S.D.; Farah, I.R. Non-stationary and unequally spaced NDVI time series analyses by the LSWAVE software. Int. J. Remote Sens. 2020, 41, 2374–2390.

- Martinez, B.; Gilabert, M.A. Vegetation dynamics from NDVI time series analysis using the wavelet transform. Remote Sens. Environ. 2009, 113, 1823–1842.

- Okin, G.S. Relative spectral mixture analysis—A multitemporal index of total vegetation cover. Remote Sens. Environ. 2007, 106, 467–479.

- Yang, H.; Chen, W.; Qian, T.; Shen, D.; Wang, J. The Extraction of Vegetation Points from LiDAR Using 3D Fractal Dimension Analyses. Remote Sens. 2015, 7, 10815–10831.

- Widlowski, J.L.; Pinty, B.; Gobron, N.; Verstraete, M.M. Detection and characterization of boreal coniferous forests from remote sensing data. J. Geophys. Res. 2001, 106, 33405–33419.

- Koetz, B.; Sun, G.; Morsdorf, F.; Ranson, K.J.; Kneubühler, M.; Itten, K.; Allgöwer, B. Fusion of imaging spectrometer and LIDAR data over combined radiative transfer models for forest canopy characterization. Remote Sens. Environ. 2007, 106, 449–459.

- Dian, Y.; Pang, Y.; Dong, Y.; Li, Z. Urban tree species mapping using airborne LiDAR and hyperspectral data. J. Indian Soc. Remote Sens. 2016, 44, 595–603.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote Sens. Lett. 2018. Available online: https://arxiv.org/abs/1711.10684 (accessed on 8 May 2021).

- Yang, X.; Li, X.; Ye, Y.; Zhang, X.; Zhang, H.; Huang, X.; Zhang, B. Road detection via deep residual dense u-net. In Proceedings of the International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019.

- Miliaresis, G.; Kokkas, N. Segmentation and object-based classification for the extraction of the building class from LiDAR DEMs. Comput. Geosci. 2007, 33, 1076–1087.

- Zhao, X.; Tao, R.; Li, W.; Li, H.C.; Du, Q.; Liao, W.; Philips, W. Joint Classification of Hyperspectral and LiDAR Data Using Hierarchical Random Walk and Deep CNN Architecture. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7355–7370.

- Herold, M.; Roberts, D.; Smadi, O.; Noronha, V. Road condition mapping with hyperspectral remote sensing. In Proceedings of the Airborne Earth Science Workshop, Pasadena, CA, USA, 31 March–2 April 2004.

- Kong, H.; Audibert, J.Y.; Ponce, J. General Road Detection from a Single Image. IEEE Trans. Image Process. 2010. Available online: https://www.di.ens.fr/willow/pdfs/tip10b.pdf (accessed on 7 May 2021).

- Wu, P.C.; Chang, C.Y.; Lin, C. Lane-mark extraction for automobiles under complex conditions. Pattern Recognit. 2014, 47, 2756–2767.

- Clark, R.N. Spectroscopy of rocks and minerals, and principles of spectroscopy. In Manual of Remote Sensing, Remote Sensing for the Earth Sciences; Rencz, A.N., Ed.; John Wiley and Sons: New York, NY, USA, 1999; Volume 3.

- Signoroni, A.; Savardi, M.; Baronio, A.; Benini, S. Deep learning meets hyperspectral image analysis: A multidisciplinary review. J. Imaging 2019, 5, 52.

- Ben-Dor, E. Imaging spectrometry for urban applications. In Imaging Spectrometry; van der Meer, F.D., de Jong, S.M., Eds.; Kluwer Academic Publishers: Amsterdam, The Netherlands, 2001; pp. 243–281.

- Ortenberg, F. Hyperspectral Sensor Characteristics. In Fundamentals, Sensor Systems, Spectral Libraries, and Data Mining for Vegetation, 2nd ed.; Huete, A., Lyon, J.G., Thenkabail, P.S., Eds.; Hyperspectral remote sensing of vegetation Volume I; CRC Press: Boca Raton, FL, USA, 2011; p. 449.

- Heiden, U.; Segl, K.; Roessner, S.; Kaufmann, H. Determination of robust spectral features for identification of urban surface materials in hyperspectral remote sensing data. Remote Sens. Environ. 2007, 111, 537–552.

- Heiden, U.; Segl, K.; Roessner, S.; Kaufmann, H. Determination and verification of robust spectral features for an automated classification of sealed urban surfaces. In Proceedings of the EARSeL Workshop on Imaging Spectroscopy, Warsaw, Poland, 27–29 April 2005.

- Lacherade, S.; Miesch, C.; Briottet, X.; Men, H.L. Spectral variability and bidirectional reflectance behavior of urban materials at a 20 cm spatial resolution in the visible and near-infrared wavelength. A case study over Toulouse (France). Int. J. Remote Sens. 2005, 26, 3859–3866.

- Herold, M.; Roberts, D.A.; Gardner, M.E.; Dennison, P.E. Spectrometry for urban area remote sensing—Development and analysis of a spectral library from 350 to 2400 nm. Remote Sens. Environ. 2004, 91, 304–319.

- Ilehag, R.; Schenk, A.; Huang, Y.; Hinz, S. KLUM: An Urban VNIR and SWIR Spectral Library Consisting of Building Materials. Remote Sens. 2019, 11, 2149.

- Xue, J.; Zhao, Y.; Bu, Y.; Liao, W.; Chan, J.C.-W.; Philips, W. Spatial-Spectral Structured Sparse Low-Rank Representation for Hyperspectral Image Super-Resolution. IEEE Trans. Image Process. 2021, 30, 3084–3097.

- Rasti, B.; Scheunders, P.; Ghamisi, P.; Licciardi, G.; Chanussot, J. Noise Reduction in Hyperspectral Imagery: Overview and Application. Remote Sens. 2018, 10, 482.

- Gómez-Chova, L.; Alonso, L.; Guanter, L.; Camps-Valls, G.; Calpe, J.; Moreno, J. Correction of systematic spatial noise in push-broom hyperspectral sensors: Application to CHRIS/PROBA images. Appl. Opt. 2008, 47, 46–60.

- Yan, W.Y.; El-Ashmawy, N.; Shaker, A. Urban land cover classification using airborne LiDAR data: A review. Remote Sens. Environ. 2015.

- Wehr, A.; Lohr, U. Airborne laser scanning—An introduction and overview. ISPRS J. Photogramm. Remote Sens. 1999, 54, 68–82.

- Clode, S.; Rottensteiner, F.; Kootsookos, P.; Zelniker, E. Detection and vectorization of roads from LiDAR data. Photogramm. Eng. Remote Sens. 2007, 73, 517–535.

- Chehata, N.; Guo, L.; Mallet, C. Airborne LiDAR feature selection for urban classification using random forests. Laserscanning 2009, 38.

- Guo, L.; Chehata, N.; Mallet, C.; Boukir, S. Relevance of airborne LiDAR and multispectral image data for urban scene classification using random forests. ISPRS J. Photogramm. Remote Sens. 2011, 66, 56–66.

- Priestnall, G.; Jaafar, J.; Duncan, A. Extracting urban features from LiDAR digital surface models. Comput. Environ. Urban Syst. 2000, 24, 65–78.

- Song, J.H.; Han, S.H.; Yu, K.Y.; Kim, Y.I. Assessing the possibility of land-cover classification using LiDAR intensity data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2002, 34, 259–262.

- Yoon, J.-S.; Lee, J.-I. Land cover characteristics of airborne LiDAR intensity data: A case study. IEEE Geosci. Remote Sens. Lett. 2008, 5, 801–805.

- Bartels, M.; Wei, H. Maximum likelihood classification of LiDAR data incorporating multiple co-registered bands. In Proceedings of the 4th International Workshop on Pattern Recognition in Remote Sensing in conjunction with the 18th International Conference on Pattern Recognition, Hong Kong, 20–24 August 2006.

- Mallet, C.; Bretar, F. Full-waveform topographic LiDAR: State-of-the-art. ISPRS J. Photogramm. Remote Sens. 2009, 64, 1–16.

- Bretar, F.; Chauve, A.; Mallet, C.; Jutzi, B. Managing full waveform LiDAR data: A challenging task for the forthcoming years. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2008, XXXVII, 415–420.

- Kirchhof, M.; Jutzi, B.; Stilla, U. Iterative processing of laser scanning data by full waveform analysis. ISPRS J. Photogramm. Remote Sens. 2008, 63, 99–114.

- Höfle, B.; Pfeifer, N. Correction of laser scanning intensity data: Data and model-driven approaches. ISPRS J. Photogramm. Remote Sens. 2007, 62, 415–433.

- Gross, H.; Thoennessen, U. Extraction of lines from laser point clouds. In Proceedings of the ISPRS Conference Photogrammetric Image Analysis (PIA), Bonn, Germany, 20–22 September 2006; pp. 87–91.

- West, K.F.; Webb, B.N.; Lersch, J.R.; Pothier, S.; Triscari, J.M.; Iverson, A.E. Context-driven automated target detection in 3-D data. In Proceedings of the Automatic Target Recognition XIV, Orlando, FL, USA, 13–15 April 2004; pp. 133–143.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Ge, C.; Du, Q.; Sun, W.; Wang, K.; Li, J.; Li, Y. Deep Residual Network-Based Fusion Framework for Hyperspectral and LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2458–2472.

- Peng, B.; Li, W.; Xie, X.; Du, Q.; Liu, K. Weighted-Fusion-Based Representation Classifiers for Hyperspectral Imagery. Remote Sens. 2015, 7, 14806–14826.

- Manjunath, B.S.; Ma, W.Y. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.

- Rajadell, O.; García-Sevilla, P.; Pla, F. Textural Features for Hyperspectral Pixel Classification. In Proceedings of the Iberian Conference on Pattern Recognition and Image Analysis, Póvoa de Varzim, Portugal, 10–12 June 2009; pp. 208–216.

- Aksoy, S. Spatial techniques for image classification. In Signal and Image Processing for Remote Sensing; CRC Press: Boca Raton, FL, USA, 2006; pp. 491–513.

- Zhang, G.; Jia, X.; Kwok, N.M. Spectral-spatial based super pixel remote sensing image classification. In Proceedings of the 4th International Congress on Image and Signal Processing, Shanghai, China, 15–17 October 2011; pp. 1680–1684.

- Pesaresi, M.; Benediktsson, J.A. A New Approach for the Morphological Segmentation of High-Resolution Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 309–320.

- Morsy, S.S.A.; El-Rabbany, A. Multispectral LiDAR Data for Land Cover Classification of Urban Areas. Sensors 2017, 17, 958.

- Suomalainen, J.; Hakala, T.; Kaartinen, H.; Räikkönen, E.; Kaasalainen, S. Demonstration of a virtual active hyperspectral LiDAR in automated point cloud classification. ISPRS J. Photogramm. Remote Sens. 2011, 66, 637–641.

- Hakala, T.; Suomalainen, J.; Kaasalainen, S.; Chen, Y. Full waveform hyperspectral LiDAR for terrestrial laser scanning. Opt. Express 2012, 20.