| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Marco Martalò | + 1391 word(s) | 4654 | 2020-12-22 08:51:30 | |
| 2 | | Lily Guo | Meta information modification | 1391 | 2020-12-23 08:50:27 | |


Advanced Driver-Assistance Systems (ADASs) are used to increase safety in the automotive domain, yet current ADASs notably operate without taking into account the driver’s state, e.g., whether he/she is emotionally fit to drive.

1. Introduction

In recent years, the automotive field has been pervaded by an increasing level of automation. This automation has introduced new possibilities with respect to manual driving. Among all the technologies for vehicle driving assistance, on-board Advanced Driver-Assistance Systems (ADASs), employed in cars, trucks, etc.[1], bring about remarkable possibilities in improving the quality of driving, safety, and security for both drivers and passengers. Examples of ADAS technologies are Adaptive Cruise Control (ACC)[2], Anti-lock Braking System (ABS)[3], alcohol ignition interlock devices[4], automotive night vision[5], collision avoidance systems[6], driver drowsiness detection[7], Electronic Stability Control (ESC)[8], Forward Collision Warnings (FCW)[9], Lane Departure Warning System (LDWS)[10], and Traffic Sign Recognition (TSR)[11]. Most ADASs consist of electronic systems developed to adapt and enhance vehicle safety and driving quality. They are proven to reduce road fatalities by compensating for human errors. To this end, safety features provided by ADASs target accident and collision avoidance, usually realizing safeguards, alarms, notifications, and blinking lights and, if necessary, taking control of the vehicle itself.

ADASs rely on the following assumptions: (i) the driver is attentive and emotionally ready to perform the right operation at the right time, and (ii) the system is capable of building a proper model of the surrounding world and of making decisions or raising alerts accordingly. Unfortunately, even if modern vehicles are equipped with complex ADASs (such as the aforementioned ones), the number of crashes is only partially reduced by their presence. In fact, the human driver is still the most critical factor in about 94% of crashes[12].

However, most current ADASs implement only simple mechanisms to take the driver’s state into account, or do not take it into account at all. An ADAS informed about the driver’s state could make contextualized decisions compatible with his/her possible reactions. Knowing the driver’s state means continuously recognizing whether the driver is physically, emotionally, and physiologically fit to drive the vehicle, as well as effectively communicating the ADAS decisions to the driver. Such an in-vehicle system for monitoring drivers’ alertness and performance is very challenging to build and comes with several issues:

- The incorrect estimation of a driver’s state, of the status of the ego-vehicle (also called the subject vehicle or Vehicle Under Test (VUT), i.e., the vehicle carrying the sensors that perceive its surroundings)[13][14], or of the external environment may cause the ADAS to activate incorrectly or to make wrong decisions. Besides the immediate danger, wrong decisions reduce drivers’ confidence in the system.
- Many sensors are needed to build such an ADAS. Sensors are prone to errors and require several processing layers to produce usable outputs; each layer introduces delays and may hide or damage data.
- Dependable systems that recognize emotions and human states are still a research challenge. They are usually built around algorithms that take heterogeneous data as input, provided by different sensing technologies, which may introduce unexpected errors into the system.
- Effective communication between the ADAS and the driver is hard to achieve. Indeed, human distraction plays a critical role in car accidents[15] and can have both external and internal causes.

In this paper, we first present a literature review on the application of human state recognition for ADAS, covering psychological models, the sensors employed for capturing physiological signals, algorithms used for human emotion classification, and algorithms for human–car interaction. In particular, some of the aspects that researchers are trying to address can be summarized as follows:

- adoption of tactful monitoring of psychological and physiological parameters (e.g., eye closure), which can significantly improve the detection of dangerous situations (e.g., distraction and drowsiness);
- improvement in detecting dangerous situations (e.g., drowsiness) with reasonable accuracy, based on driving performance measures (e.g., monitoring of “drift-and-jerk” steering and detection of fluctuations of the vehicle in different directions);
- introduction of “secondary” feedback mechanisms, subsidiary to those originally provided in the vehicle, that further enhance detection accuracy. One example is auditory recognition tasks delivered to the driver through a prerecorded human voice, which listeners find more acceptable than a synthetic one.
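To make the first item concrete, the following is a minimal Python sketch of how an eye-closure signal might be turned into a drowsiness indicator. It assumes that some upstream component (not shown, and not specified in the works reviewed here) already reports, frame by frame, whether the driver's eyes are closed. The class name `EyeClosureMonitor`, the window size, and the alert threshold are hypothetical choices for illustration only.

```python
from collections import deque


class EyeClosureMonitor:
    """Track the fraction of recent frames in which the eyes were closed."""

    def __init__(self, window_size: int = 900, alert_fraction: float = 0.3):
        # Both defaults are illustrative assumptions, not values from the literature.
        self.window = deque(maxlen=window_size)
        self.alert_fraction = alert_fraction

    def update(self, eyes_closed: bool) -> float:
        """Add one per-frame observation and return the current closure fraction."""
        self.window.append(1 if eyes_closed else 0)
        return sum(self.window) / len(self.window)

    def is_drowsy(self) -> bool:
        """Flag drowsiness only once the window is full, to limit early false alarms."""
        if len(self.window) < self.window.maxlen:
            return False
        return sum(self.window) / len(self.window) >= self.alert_fraction
```

A sliding window is used here so that a single blink does not trigger an alert; a real system would also have to handle missing frames and sensor errors, which this sketch ignores.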

Moreover, the complex processing tasks of modern ADASs are increasingly tackled by AI-oriented techniques. AI can solve complex classification tasks that were previously thought to be very hard (or even impossible). Human state estimation is a typical task that can be approached with AI classifiers. At the same time, the use of AI classifiers brings new challenges. For example, an ADAS can potentially be improved by a reliable identification of the driver’s emotional state, e.g., in order to activate haptic alarms in case of imminent forward collisions. Even if such an ADAS could tolerate a few misclassifications, the AI component for human state classification needs very high accuracy to reach automotive-grade reliability. Hence, it should be possible to prove that a classifier is sufficiently robust against unexpected data[16].
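One simple way to let an ADAS tolerate occasional misclassifications is to act on the estimated driver state only when the classifier is confident, and to fall back to a state-independent warning otherwise. The sketch below illustrates this idea in Python. The state labels, the alert names, and the `min_confidence` threshold are all hypothetical, and the policy itself is an assumption made for illustration, not a method described in the reviewed works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StateEstimate:
    label: str         # hypothetical labels: "attentive", "distracted", "drowsy"
    confidence: float  # classifier confidence in [0, 1]


def choose_alert(estimate: StateEstimate, collision_imminent: bool,
                 min_confidence: float = 0.9) -> str:
    """Pick an alert type for a forward-collision warning (illustrative policy)."""
    if not collision_imminent:
        return "none"
    # Low confidence: ignore the estimate and use the default warning.
    if estimate.confidence < min_confidence:
        return "standard"
    # Confident that the driver is impaired: escalate to a haptic alarm.
    if estimate.label in {"distracted", "drowsy"}:
        return "haptic"
    return "visual"
```

For instance, `choose_alert(StateEstimate("drowsy", 0.95), collision_imminent=True)` selects the haptic alarm, while the same label with a confidence of 0.5 falls back to the standard warning. Gating on confidence does not by itself prove robustness against unexpected data; it only limits the effect of uncertain predictions.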

We then introduce a novel perception architecture for ADAS based on the idea of Driver Complex State (DCS). The DCS monitors the driver’s behavior via multiple non-obtrusive sensors and AI algorithms, providing emotion cognitive classifiers and emotion state classifiers to the ADAS. We argue that this approach is a smart way to improve safety for all occupants of a vehicle. We believe that, to be successful, the system must adopt unobtrusive sensing technologies for detecting human parameters, safe and transparent AI algorithms that satisfy stringent automotive requirements, and innovative Human–Machine Interface (HMI) functionalities. Our ultimate goal is to provide solutions that improve in-vehicle ADAS, increasing safety, comfort, and performance in driving. The concept will be implemented and validated in the NextPerception project[17], recently funded by the EU, which will be briefly introduced.

2. ADAS Using Driver Emotion Recognition

In the automotive sector, the ADAS industry is a growing segment aiming at increasing the adoption of industry-wide functional safety in accordance with several quality standards, e.g., the automotive-oriented ISO 26262 standard[21]. ADAS increasingly relies on standardized computer systems, such as the Vehicle Information Access API[22], Volkswagen Infotainment Web Interface (VIWI) protocol[23], and On-Board Diagnostics (OBD) codes [24], to name a few.

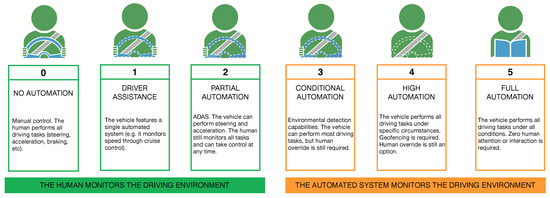

In order to achieve advanced ADAS beyond semiautonomous driving, there is a clear need for appropriate knowledge of the driver’s status. These cooperative systems are captured in the Society of Automotive Engineers (SAE) level hierarchy of driving automation, summarized in Figure 1. These levels range from level 0 (manual driving) to level 5 (fully autonomous vehicle), with intermediate levels representing semiautonomous driving situations, with a mixed driver–vehicle degree of cooperation.

According to SAE levels, in these mixed systems, automation is partial and does not cover every possible anomalous condition that can happen during driving. Therefore, the driver’s active presence and his/her reaction capability remain critical. In addition, the complex data processing needed for higher automation levels will almost inevitably require various forms of AI (e.g., Machine Learning (ML) components), in turn bringing security and reliability issues.
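The level hierarchy can be represented compactly in code. The following Python sketch encodes the six levels, using the level names from SAE J3016, together with a helper that marks the levels at which the driver may still have to drive or take over. The cutoff at level 3 is a simplifying assumption for illustration; the function name `driver_state_is_critical` is hypothetical.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels, named after SAE J3016."""
    NO_AUTOMATION = 0           # manual driving
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5         # fully autonomous vehicle


def driver_state_is_critical(level: SAELevel) -> bool:
    """Return True where the human may still have to drive or take over.

    Assumption: levels 0 to 3 are treated as requiring an available driver.
    """
    return level <= SAELevel.CONDITIONAL_AUTOMATION
```

Under this simplification, driver monitoring matters most at levels 0 to 3, where the driver either performs the driving task or must be ready to resume it.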

Driver Monitoring Systems (DMSs) are a novel type of ADAS that has emerged to help predict driving maneuvers, driver intent, and vehicle and driver states, with the aim of improving transportation safety and driving experience as a whole[25]. For instance, by coupling sensing information with accurate lane changing prediction models, a DMS can prevent accidents by warning the driver ahead of time of potential danger[26]. As a measure of the effectiveness of this approach, progressive advancements of DMSs can be found in a number of review papers. Lane changing models have been reviewed in [27], while in [28][29], developments in driver’s intent prediction with emphasis on real-time vehicle trajectory forecasting are surveyed. The work in [30] reviews driver skills and driving behavior recognition models. A review of the cognitive components of driver behavior can also be found in [7], where situational factors that influence driving are addressed. Finally, a recent survey on human behavior prediction can be found in [31].

References

- Ziebinski, A.; Cupek, R.; Grzechca, D.; Chruszczyk, L. Review of Advanced Driver Assistance Systems (ADAS). AIP Conf. Proc. 2017, 1906, 120002.
- Vollrath, M.; Schleicher, S.; Gelau, C. The Influence of Cruise Control and Adaptive Cruise Control on Driving Behaviour—A driving simulator study. Accid. Anal. Prev. 2011, 43, 1134–1139.
- Satoh, M.; Shiraishi, S. Performance of Antilock Brakes with Simplified Control Technique. In SAE International Congress and Exposition; SAE International: Warrendale, PA, USA, 1983.
- Centers for Disease Control and Prevention (CDC). Increasing Alcohol Ignition Interlock Use. Available online: https://www.cdc.gov/motorvehiclesafety/impaired_driving/ignition_interlock_states.html (accessed on 28 September 2020).
- Martinelli, N.S.; Seoane, R. Automotive Night Vision System. In Thermosense XXI. International Society for Optics and Photonics; SPIE: Bellingham, WA, USA, 1999; Volume 3700, pp. 343–346.
- How Pre-Collision Systems Work. Available online: https://auto.howstuffworks.com/car-driving-safety/safety-regulatory-devices/pre-collision-systems.htm (accessed on 28 September 2020).
- Jacobé de Naurois, C.; Bourdin, C.; Stratulat, A.; Diaz, E.; Vercher, J.L. Detection and Prediction of Driver Drowsiness using Artificial Neural Network Models. Accid. Anal. Prev. 2019, 126, 95–104.
- How Electronic Stability Control Works. Available online: https://auto.howstuffworks.com/car-driving-safety/safety-regulatory-devices/electronic-stability-control.htm (accessed on 28 September 2020).
- Wang, C.; Sun, Q.; Li, Z.; Zhang, H.; Fu, R. A Forward Collision Warning System based on Self-Learning Algorithm of Driver Characteristics. J. Intell. Fuzzy Syst. 2020, 38, 1519–1530.
- Kortli, Y.; Marzougui, M.; Atri, M. Efficient Implementation of a Real-Time Lane Departure Warning System. In Proceedings of the 2016 International Image Processing, Applications and Systems (IPAS), Hammamet, Tunisia, 5–7 November 2016; pp. 1–6.
- Luo, H.; Yang, Y.; Tong, B.; Wu, F.; Fan, B. Traffic Sign Recognition Using a Multi-Task Convolutional Neural Network. IEEE Trans. Intell. Transp. Syst. 2018, 19, 1100–1111.
- Singh, S. Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey. Technical Report. (US Department of Transportation—National Highway Traffic Safety Administration). Report No. DOT HS 812 115. 2015. Available online: https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/812506 (accessed on 11 November 2020).
- Ego Vehicle—Coordinate Systems in Automated Driving Toolbox. Available online: https://www.mathworks.com/help/driving/ug/coordinate-systems.html (accessed on 12 November 2020).
- Ego Vehicle—The British Standards Institution (BSI). Available online: https://www.bsigroup.com/en-GB/CAV/cav-vocabulary/ego-vehicle/ (accessed on 12 November 2020).
- Regan, M.A.; Lee, J.D.; Young, K. Driver Distraction: Theory, Effects, and Mitigation; CRC Press: Boca Raton, FL, USA, 2008.
- Tomkins, S. Affect Imagery Consciousness: The Complete Edition: Two Volumes; Springer Publishing Company: Berlin/Heidelberg, Germany, 2008.
- Russell, J.A. A Circumplex Model of Affect. J. Personal. Soc. Psychol. 1980, 39.
- Ekman, P. Basic Emotions. In Handbook of Cognition and Emotion; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2005; Chapter 3; pp. 45–60.
- International Organization for Standardization (ISO). ISO 26262-1:2018—Road Vehicles—Functional Safety. Available online: https://www.iso.org/standard/68383.html (accessed on 24 September 2020).
- Salman, H.; Li, J.; Razenshteyn, I.; Zhang, P.; Zhang, H.; Bubeck, S.; Yang, G. Provably Robust Deep Learning via Adversarially Trained Smoothed Classifiers. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 11292–11303. Available online: http://papers.nips.cc/paper/9307-provably-robust-deep-learning-via-adversarially-trained-smoothed-classifiers.pdf (accessed on 12 November 2020).
- NextPerception—Next Generation Smart Perception Sensors and Distributed Intelligence for Proactive Human Monitoring in Health, Wellbeing, and Automotive Systems—Grant Agreement 876487. Available online: https://cordis.europa.eu/project/id/876487 (accessed on 1 October 2020).
- World Wide Web Consortium (W3C). Vehicle Information Access API. Available online: https://www.w3.org/2014/automotive/vehicle_spec.html (accessed on 24 September 2020).
- World Wide Web Consortium (W3C). Volkswagen Infotainment Web Interface (VIWI) Protocol. Available online: https://www.w3.org/Submission/2016/SUBM-viwi-protocol-20161213/ (accessed on 24 September 2020).
- Society of Automotive Engineers (SAE). SAE E/E Diagnostic Test Modes J1979_201702. Available online: https://www.sae.org/standards/content/j1979_201702/ (accessed on 24 September 2020).
- AbuAli, N.; Abou-zeid, H. Driver Behavior Modeling: Developments and Future Directions. Int. J. Veh. Technol. 2016, 2016.
- Amparore, E.; Beccuti, M.; Botta, M.; Donatelli, S.; Tango, F. Adaptive Artificial Co-pilot as Enabler for Autonomous Vehicles and Intelligent Transportation Systems. In Proceedings of the 10th International Workshop on Agents in Traffic and Transportation (ATT 2018), Stockholm, Sweden, 13–19 July 2018.
- Rahman, M.; Chowdhury, M.; Xie, Y.; He, Y. Review of Microscopic Lane-Changing Models and Future Research Opportunities. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1942–1956.
- Doshi, A.; Trivedi, M.M. Tactical Driver Behavior Prediction and Intent Inference: A Review. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1892–1897.
- Moridpour, S.; Sarvi, M.; Rose, G. Lane Changing Models: A Critical Review. Transp. Lett. 2010, 2, 157–173.
- Wang, W.; Xi, J.; Chen, H. Modeling and Recognizing Driver Behavior Based on Driving Data: A Survey. Math. Probl. Eng. 2014, 2014.
- Brown, K.; Driggs-Campbell, K.; Kochenderfer, M.J. Modeling and Prediction of Human Driver Behavior: A Survey. arXiv 2020, arXiv:2006.08832.