| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Zhipeng He | +2025 word(s) | 2025 | 2020-10-13 08:04:30 | |
| 2 | | Karina Chen | -391 word(s) | 1634 | 2020-10-14 03:39:39 | |
| 3 | | Karina Chen | -391 word(s) | 1634 | 2020-10-14 03:47:22 | |


Multimodal affective brain-computer interfaces (aBCI) use techniques from psychological theories and methods (concepts and protocols), neuroscience (brain function and signal processing) and computer science (machine learning and human-computer interaction) to induce, measure and detect emotional states and apply the resulting information to improve interaction with machines (Mühl, C.; et al.). We assume that multimodal emotion recognition based on EEG should integrate not only EEG signals, an objective measure of emotion, but also a variety of peripheral physiological signals or behaviors. Compared to single-modality approaches, multimodal emotion processing can achieve more reliable results by extracting additional information; consequently, it has attracted increasing attention. Li et al. (Li, Y.; et al.) proposed that, in addition to combining different input signals, emotion recognition should include a variety of heterogeneous sensory stimuli (such as audio-visual stimulation) to induce emotions. Many studies (Huang, H.; et al.; Wang, F.; et al.) have shown that integrating heterogeneous sensory stimuli can enhance brain patterns and further improve brain–computer interface performance.

1. Multimodal Emotion Recognition based on BCI

Single-modality information is easily affected by various types of noise, which makes it difficult to capture emotional states reliably. D'Mello and Kory [1] used statistical methods to compare the accuracy of single-modality and multimodal emotion recognition across a variety of algorithms and datasets. The best multimodal emotion-recognition system reached an accuracy of 85% and was considerably more accurate than the best corresponding single-modality system, with an average improvement of 9.83% (median 6.60%). A comprehensive analysis of multiple signals and their interdependence can be used to construct a model that more accurately reflects the underlying nature of human emotional expression.

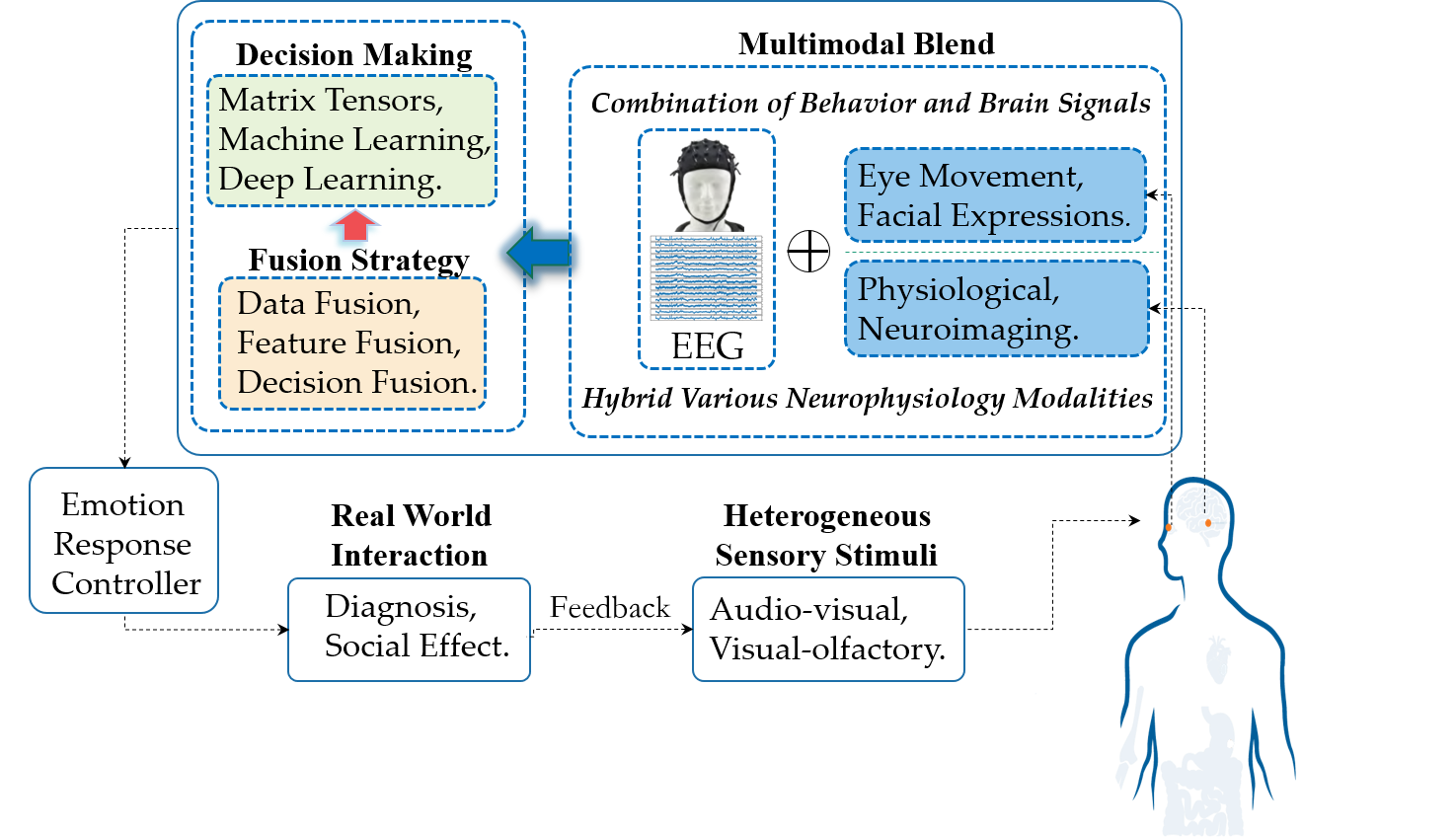

The signal flow in a multimodal aBCI system is depicted in Figure 1. The workflow generally includes three stages: multimodal signal acquisition, signal processing (including basic modality data processing, signal fusion and decision-making) and emotion reflection control. These stages are described in detail below:

Figure 1. Flowchart of multimodal emotional involvement for electroencephalogram (EEG)-based affective brain–computer interfaces (BCI).
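The three stages in Figure 1 can be sketched in a few lines of Python. This is only an illustrative outline under stated assumptions, not the pipeline of any cited study: the signals are random numbers standing in for recordings, the per-modality classifier is a toy linear model with random weights, fusion is a weighted average of class probabilities (one common form of decision-level fusion), and every function name, the label set and the 0.6/0.4 modality weights are hypothetical.

```python
import math
import random

EMOTIONS = ["negative", "neutral", "positive"]  # hypothetical label set


def acquire_signals(rng, n_channels=4, n_samples=64):
    """Stage 1: stand-in for multimodal acquisition (random numbers, not real recordings)."""
    eeg = [[rng.gauss(0.0, 1.0) for _ in range(n_samples)] for _ in range(n_channels)]
    eye = [rng.gauss(0.0, 1.0) for _ in range(3)]  # e.g. three eye-movement features
    return {"eeg": eeg, "eye": eye}


def variance(samples):
    """Population variance of one channel, used here as a very simple EEG feature."""
    mean = sum(samples) / len(samples)
    return sum((x - mean) ** 2 for x in samples) / len(samples)


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_modality(features, weights):
    """Stage 2a: toy linear classifier with one weight row per emotion class."""
    scores = [sum(w * f for w, f in zip(row, features)) for row in weights]
    return softmax(scores)


def fuse_decisions(probabilities, modality_weights):
    """Stage 2b: decision-level fusion as a weighted average of class probabilities."""
    total = sum(modality_weights)
    n_classes = len(probabilities[0])
    return [
        sum(w * p[k] for w, p in zip(modality_weights, probabilities)) / total
        for k in range(n_classes)
    ]


def feedback_control(label):
    """Stage 3: map the detected emotion to a (hypothetical) system response."""
    responses = {
        "negative": "soften stimulus",
        "neutral": "no change",
        "positive": "keep stimulus",
    }
    return responses[label]


def run_pipeline(seed=0):
    """Run acquisition, per-modality classification, fusion and feedback once."""
    rng = random.Random(seed)
    signals = acquire_signals(rng)
    eeg_features = [variance(channel) for channel in signals["eeg"]]
    # Random weights stand in for trained models (assumption for illustration).
    eeg_weights = [[rng.gauss(0.0, 1.0) for _ in eeg_features] for _ in EMOTIONS]
    eye_weights = [[rng.gauss(0.0, 1.0) for _ in signals["eye"]] for _ in EMOTIONS]
    p_eeg = classify_modality(eeg_features, eeg_weights)
    p_eye = classify_modality(signals["eye"], eye_weights)
    fused = fuse_decisions([p_eeg, p_eye], [0.6, 0.4])
    label = EMOTIONS[max(range(len(fused)), key=fused.__getitem__)]
    return fused, label, feedback_control(label)
```

Calling `run_pipeline()` returns the fused class probabilities, the winning label and the chosen response. In a real system each stand-in would be replaced by recorded signals and trained models, and fusion could equally be performed at the feature level.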

2. Combination of Behavior and Brain Signals

The core challenge of multimodal emotion recognition is to model the internal working of modalities and their interactions. Emotion is usually expressed through the interaction between neurophysiology and behavior; thus, it is crucial to capture the relationship between them accurately and make full use of the related information.

Basic emotion theory holds that when emotions are aroused, a variety of human neurophysiological and external behavioral response systems are activated [2]. In theory, therefore, combining a subject's internal cognitive state with external subconscious behavior should greatly improve recognition performance. At the same time, the development and spread of equipment for acquiring neurophysiological and behavioral signals has made emotion recognition from mixed neurophysiological and behavioral modalities a research hotspot in affective computing internationally.

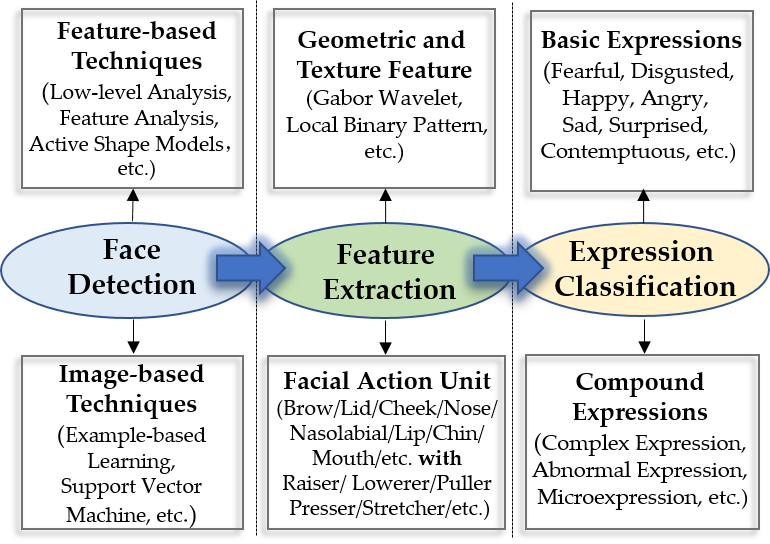

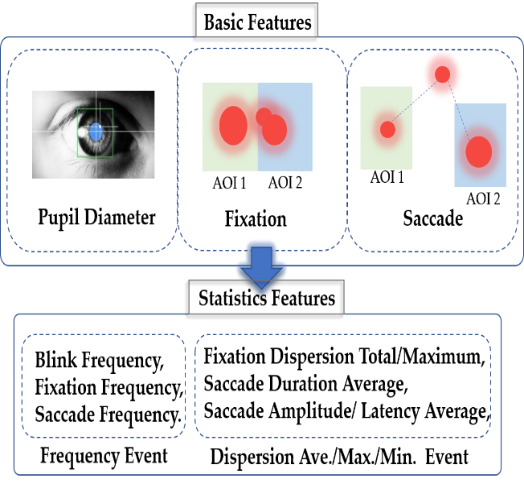

Among the major behavioral modalities, eye movement tracking signals and facial expressions are two that carry content-aware information. Facial expression is the most direct form of emotional representation. By analyzing information from facial expressions and combining the results with a priori knowledge of emotional information (Figure 2a), we can infer a person's emotional state. Eye movement tracking signals provide a variety of eye movement indicators (Figure 2b), reflect the user's subconscious behaviors and provide important clues to the context of the subject's current activity [3].


Figure 2. (a) Flowchart of emotion recognition based on expression. The facial expression recognition process includes three stages: face location recognition, feature extraction and expression classification. In the face location and recognition stage, two approaches are usually adopted: feature-based and image-based [8]. The most commonly used emotion recognition methods for facial expressions are geometric and texture feature recognition and facial action unit recognition. Expressions are usually classified into seven basic expressions (fear, disgust, joy, anger, sadness, surprise and contempt), but people's emotional states are complex and can be further divided into a series of combined emotions, including complex expressions, abnormal expressions and microexpressions; (b) Overview of emotionally relevant features of eye movement (AOI 1: the first area of interest; AOI 2: the second area of interest). From data on pupil diameter, gaze fixation and saccades, the three basic eye movement characteristics, statistical features can be computed, including event frequencies and summary values of event information (e.g., fixation frequency and the total/maximum fixation dispersion).
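As a rough illustration of the eye-movement statistics named in Figure 2b, the sketch below summarizes one trial into a flat feature dictionary. The input layout (a list of pupil diameters, fixation records with `duration_ms` and `dispersion_deg` fields, and a list of saccade amplitudes) is a hypothetical format chosen for this example, not the output of any particular eye tracker, and the function assumes at least one pupil sample, one fixation and one saccade.

```python
from statistics import mean, pstdev


def eye_movement_features(pupil_mm, fixations, saccade_amplitudes_deg, duration_s):
    """Summarize one trial of eye-tracking data (hypothetical input layout).

    pupil_mm: pupil diameter samples in millimetres
    fixations: list of dicts with "duration_ms" and "dispersion_deg"
    saccade_amplitudes_deg: one amplitude per detected saccade
    duration_s: trial length in seconds, used to turn counts into frequencies
    """
    dispersions = [f["dispersion_deg"] for f in fixations]
    durations = [f["duration_ms"] for f in fixations]
    return {
        # Pupil diameter statistics
        "pupil_mean": mean(pupil_mm),
        "pupil_std": pstdev(pupil_mm),
        # Fixation event frequency and summary values
        "fixation_frequency": len(fixations) / duration_s,
        "fixation_duration_mean": mean(durations),
        "fixation_dispersion_total": sum(dispersions),
        "fixation_dispersion_max": max(dispersions),
        # Saccade event frequency and summary value
        "saccade_frequency": len(saccade_amplitudes_deg) / duration_s,
        "saccade_amplitude_mean": mean(saccade_amplitudes_deg),
    }
```

A feature dictionary of this kind could be turned into a vector and passed to a classifier alongside EEG features; which statistics are informative in practice depends on the task and the recording setup.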

3. Various Hybrid Neurophysiology Modalities

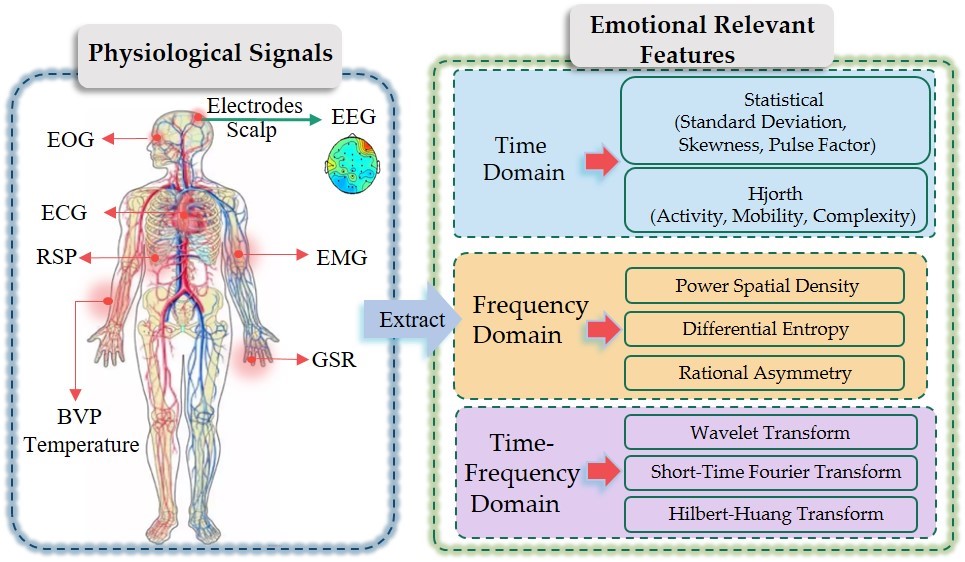

Emotion usually refers to a state of mind that occurs spontaneously rather than consciously and is often accompanied by physiological changes in the central nervous system and the periphery, affecting EEG signals and heart rate. Many efforts have been made to reveal the relationships between explicit neurophysiology modalities and implicit psychological feelings.


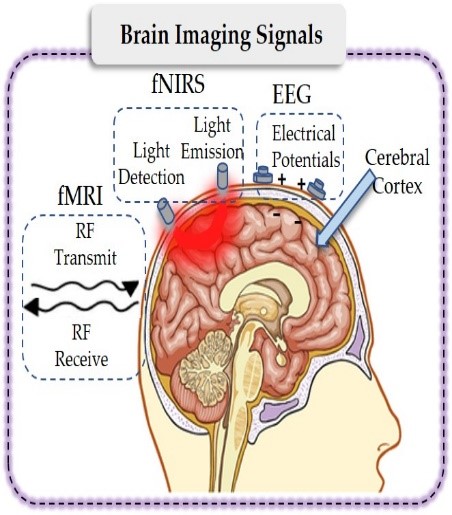

Figure 3. (a) Relevant emotion features extracted from different physiological signals. The physiological signals (such as temperature) are listed together with where they are collected. Emotionally relevant features can be extracted from these time-varying signals in three domains: time, frequency and time-frequency; (b) Measurement of brain activity by EEG, fNIRS and fMRI. The panel briefly shows how these three kinds of brain signals are collected from the cerebral cortex: fMRI is based on radio frequency (RF) transmission and reception, fNIRS is based on light emission and detection, and EEG is based on electrical potentials.
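To make the time-domain and frequency-domain features in Figure 3a more concrete, here is a minimal sketch using only the Python standard library. It assumes a single-channel signal given as a list of samples; the naive discrete Fourier transform is used for clarity rather than speed, and the band edges in `BANDS_HZ` follow one common convention that varies between studies. Time-frequency features would apply the same spectral step to short sliding windows, which is not shown here.

```python
import cmath
import math

# Assumed band edges in Hz; conventions differ across studies.
BANDS_HZ = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}


def time_domain_features(signal):
    """Mean and standard deviation of the raw samples."""
    n = len(signal)
    mean = sum(signal) / n
    variance = sum((x - mean) ** 2 for x in signal) / n
    return {"mean": mean, "std": math.sqrt(variance)}


def power_spectrum(signal, sampling_rate):
    """Naive O(n^2) DFT; returns (frequency_hz, power) pairs up to the Nyquist frequency."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
        )
        spectrum.append((k * sampling_rate / n, abs(coeff) ** 2 / n))
    return spectrum


def band_powers(signal, sampling_rate):
    """Sum spectral power inside each band of BANDS_HZ (lower edge inclusive)."""
    spectrum = power_spectrum(signal, sampling_rate)
    return {
        name: sum(power for freq, power in spectrum if low <= freq < high)
        for name, (low, high) in BANDS_HZ.items()
    }


if __name__ == "__main__":
    fs = 128  # sampling rate in Hz (assumed)
    # One second of a synthetic 10 Hz sine wave, which falls in the alpha band.
    demo = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
    print(time_domain_features(demo))
    print(band_powers(demo, fs))
```

For real recordings, an FFT-based estimator with windowing and averaging would normally replace the naive transform; the structure of the features stays the same.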

4. Heterogeneous Sensory Stimuli

In the study of emotion recognition, to better simulate, detect and study emotions, researchers have used a variety of materials to induce emotions, such as pictures (visual), sounds (audio) and videos (audio-visual). Many studies have shown that video-induced stimulation based on visual and auditory multisensory channels is effective because audio-visual integration enhances brain patterns and further improves the performance of brain–computer interfaces. Vision and audition, as the most commonly used sensory channels, can effectively provide objective stimulation to the brain, while emotion induction through other sensory channels, such as olfaction and touch, has received little study. As the most primitive human sense, olfaction plays an important role in brain development and in human survival over the course of evolution. In theory, therefore, it may provide more effective stimulation than vision and audition.

5. Application

Emotion recognition based on BCI has a wide range of applications that touch many aspects of daily life. This section introduces potential applications in two areas: medical and non-medical.

5.1. Medical Applications

In the medical field, emotion recognition based on BCI can provide a basis for the diagnosis and treatment of mental illnesses. Computer-aided evaluation of the emotions of patients with disorders of consciousness can help doctors better assess the patients' physical condition and level of consciousness. Existing research on emotion recognition mainly involves offline analysis. Huang et al. [4] were the first to apply an online aBCI system to emotion recognition in patients with disorders of consciousness. Using this system, they successfully induced and detected the emotional characteristics of some of these patients in real time. These experimental results showed that aBCI systems hold substantial promise for detecting emotions in patients with disorders of consciousness.

Depression is a serious mental health disorder with high social costs. Current clinical practice depends almost entirely on self-reporting and clinical opinion; consequently, there is a risk of subjective bias. The authors of [5] used emotion-sensing methods to develop diagnostic aids that support clinicians and patients during diagnosis and help monitor treatment progress in a timely and easily accessible manner.

Mood disorders are not the only criteria for diagnosing autism spectrum disorder (ASD). However, clinicians have long relied on patients' emotional behavior as a basis for diagnosing autism. The results of the study in [6] suggested that cognitive reappraisal strategies may be useful for children and adolescents with ASD. Many studies have shown that emotion classification based on EEG signal processing can significantly improve the social integration abilities of patients with neurological diseases such as amyotrophic lateral sclerosis (ALS) or acute Alzheimer's disease [7].

5.2. Non-Medical Applications

In the field of education, students can wear portable EEG devices with an emotion-recognition function, allowing teachers to monitor the students' emotional states during distance instruction. Elatlassi [8] proposed modeling student engagement in online environments using real-time biometric measures, with acuity, performance and motivation as the dimensions of engagement. The real-time biometrics used to model these dimensions include EEG and eye-tracking measures, which were recorded in an experimental setting that simulates an online learning environment.

In the field of driverless vehicles, EEG-based emotion recognition can be added to the autonomous driving system to increase its reliability [9], creating a human-in-the-loop, human-machine hybrid intelligent driving system. At present, passengers do not know whether a driverless vehicle can correctly identify and assess traffic conditions or whether it will make the correct judgments and responses while driving, so they remain very worried about the safety of driverless vehicles. Brain-computer interface technology can detect the passenger's emotions in real time and transmit them to the driverless system, which can then adjust the driving mode according to how the passenger feels. Treating the human as one link in the autonomous driving system in this way supports human-machine cooperation.

In entertainment research and development, a game assistant system for emotional feedback regulation can be built on EEG and physiological signals to provide players with a full sense of immersion and highly interactive experiences. In the study of [10], EEG-based "serious" games for concentration training and emotion-enabled applications, including emotion-based music therapy on the web, were proposed and implemented.

References

- D'Mello, S.K.; Kory, J. A review and meta-analysis of multimodal affect detection systems. ACM Comput. Surv. (CSUR) 2015, 47, 1–36.

- Ekman, P. An argument for basic emotions. Cognition & Emotion 1992, 6, 169–200.

- Bulling, A.; Ward, J.A.; Gellersen, H.; Troster, G. Eye movement analysis for activity recognition using electrooculography. IEEE Transactions on Pattern Analysis and Machine Intelligence 2010, 33, 741–753.

- Huang, H.; Xie, Q.; Pan, J.; He, Y.; Wen, Z.; Yu, R.; Li, Y. An EEG-based brain computer interface for emotion recognition and its application in patients with Disorder of Consciousness. IEEE Trans. Affect. Comput. 2019, doi:10.1109/TAFFC.2019.2901456.

- Joshi, J.; Goecke, R.; Alghowinem, S.; Dhall, A.; Wagner, M.; Epps, J.; Parker, G.; Breakspear, M. Multimodal assistive technologies for depression diagnosis and monitoring. Journal on Multimodal User Interfaces 2013, 7, 217-228.

- Samson, A.C.; Hardan, A.Y.; Podell, R.W.; Phillips, J.M.; Gross, J.J. Emotion regulation in children and adolescents with autism spectrum disorder. Autism Research 2015, 8, 9-18.

- Gonzalez, H.A.; Yoo, J.; Elfadel, I.A.M. EEG-based Emotion Detection Using Unsupervised Transfer Learning. In Proceedings of 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 694-697.

- Elatlassi, R. Modeling Student Engagement in Online Learning Environments Using Real-Time Biometric Measures: Electroencephalography (EEG) and Eye-Tracking. Master’s Thesis, Oregon State University, Corvallis, OR, USA, 2018.

- Park, C.; Shahrdar, S.; Nojoumian, M. EEG-based classification of emotional state using an autonomous vehicle simulator. In Proceedings of 2018 IEEE 10th Sensor Array and Multichannel Signal Processing Workshop (SAM), Sheffield, UK, 8–11 July 2018; pp. 297-300.

- Sourina, O.; Wang, Q.; Liu, Y.; Nguyen, M.K. A Real-time Fractal-based Brain State Recognition from EEG and its Applications. In Proceedings of BIOSIGNALS 2011, the International Conference on Bio-inspired Systems and Signal Processing, Rome, Italy, 26–29 January 2011.