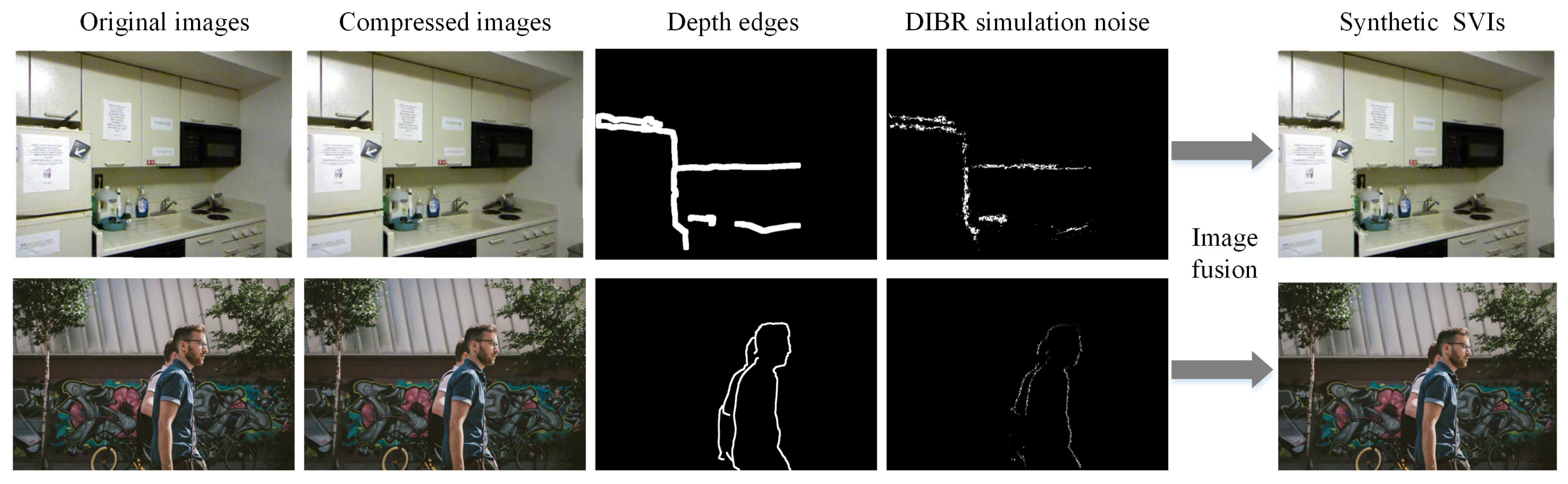

Deep learning-based image quality enhancement models have been proposed to improve the perceptual quality of synthesized views distorted by compression and by the Depth Image-Based Rendering (DIBR) process in multi-view video systems. Because Multi-view Video plus Depth (MVD) data are scarce, a deep learning-based model trained on additional synthetic Synthesized View Images (SVIs) is proposed. A random irregular polygon-based SVI synthesis method simulates DIBR distortion using the large amount of existing RGB/RGBD data. In addition, a DIBR distortion mask prediction network is embedded to further improve performance.

- quality enhancement

- synthetic images

- data augmentation

- 3D video system

- DIBR

1. DIBR Distortion Simulation

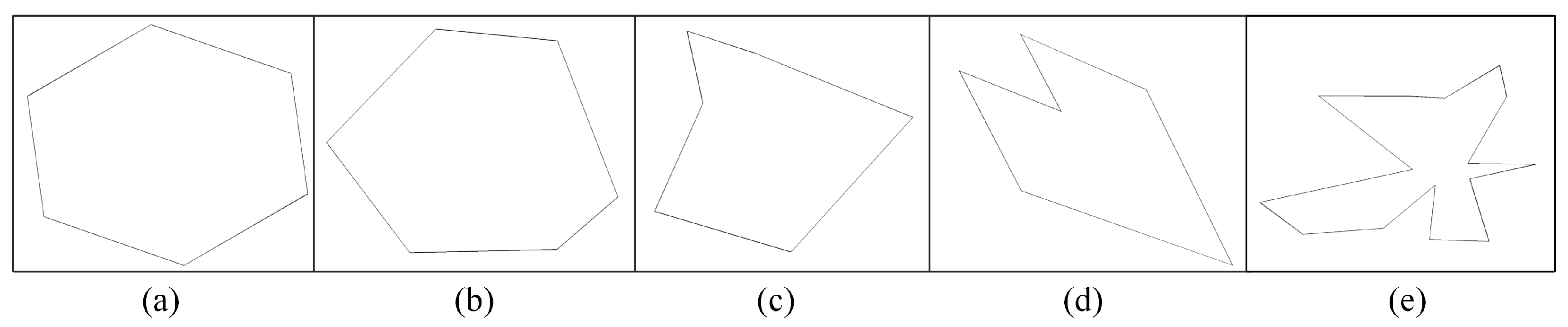

2. Different Local Noise Comparison and Proposed Random Irregular Polygon-Based DIBR Distortion Generation

The synthetic SVI is obtained by replacing the masked area of a compressed captured view image with a noisy version of that image:

$$\hat{I} = I \odot (\mathbf{1} - M) + I_{N} \odot M,$$

where $\hat{I}$ denotes the synthetic SVI, $I$ denotes the compressed captured view image, $\mathbf{1}$ denotes the matrix with all elements equal to 1, $M$ denotes the mask area corresponding to the detected strong depth edges, $\odot$ denotes the element-wise product, and $I_{N}$ denotes the image with random noise added, i.e., Gaussian noise, speckle noise, or the patch-shuffled version of $I$. SVIs synthesized with Gaussian noise or speckle noise do not visually resemble synthesis distortion well. SVIs synthesized with patch-based noise resemble it somewhat, in that the pixels within a local patch appear disordered and irregular.
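The masked blending described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names, the noise strength `sigma`, the patch size, and the rectangular toy mask are all assumptions made for the example, and images are assumed to be floating-point arrays in [0, 1].

```python
import numpy as np

def add_gaussian_noise(img, rng, sigma=0.1):
    """Additive zero-mean Gaussian noise (sigma is an illustrative value)."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def add_speckle_noise(img, rng, sigma=0.1):
    """Multiplicative (speckle) noise: img * (1 + n), with n ~ N(0, sigma^2)."""
    return np.clip(img * (1.0 + rng.normal(0.0, sigma, img.shape)), 0.0, 1.0)

def patch_shuffle(img, rng, patch=4):
    """Randomly permute the pixels inside each non-overlapping patch x patch block.

    Rows and columns that do not fill a whole block are left unchanged.
    """
    out = img.copy()
    h, w = img.shape[:2]
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            block = out[y:y + patch, x:x + patch]
            pixels = block.reshape(patch * patch, -1)       # one row per pixel
            shuffled_pixels = pixels[rng.permutation(patch * patch)]
            out[y:y + patch, x:x + patch] = shuffled_pixels.reshape(block.shape)
    return out

def blend(img, noisy, mask):
    """Keep img where mask == 0 and take noisy where mask == 1."""
    m = mask[..., None] if img.ndim == 3 else mask          # broadcast over channels
    return img * (1.0 - m) + noisy * m

# Toy usage: a random "image" and a rectangular stand-in for the depth-edge mask.
rng = np.random.default_rng(0)
img = rng.random((16, 16, 3))
mask = np.zeros((16, 16))
mask[4:12, 6:10] = 1.0
shuffled = patch_shuffle(img, rng)
synthetic = blend(img, shuffled, mask)
```

Swapping `patch_shuffle` for `add_gaussian_noise` or `add_speckle_noise` gives the other two variants compared in the text. Only the pixels inside the mask change, so the distortion stays local.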

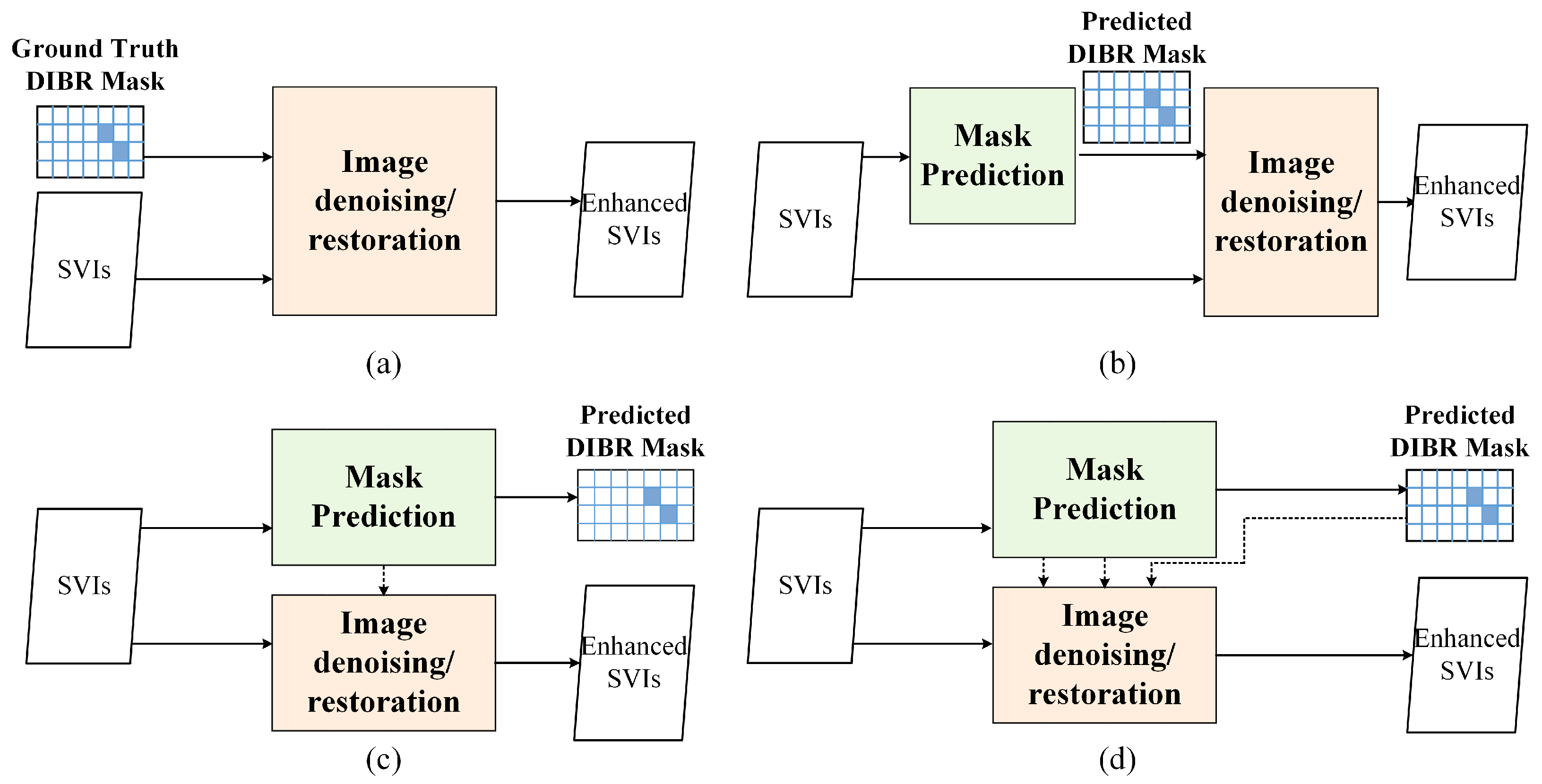
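One way to produce a random irregular polygon mask, as named in this section's heading, is sketched below. The entry does not describe the generation procedure, so this sketch assumes a common approach: vertices are placed around a center at random angular steps and random radii, and the polygon is then rasterized with the even-odd rule. The function names and the `irregularity` and `spikiness` parameters are hypothetical, and how polygons are positioned relative to the detected depth edges is not covered here.

```python
import math
import random

def random_irregular_polygon(cx, cy, avg_radius, n_vertices,
                             irregularity=0.5, spikiness=0.4, rng=None):
    """Return vertices of a random polygon around (cx, cy).

    irregularity in [0, 1) varies the angular spacing between vertices;
    spikiness in [0, 1) varies each vertex's distance from the center.
    """
    rng = rng or random.Random()
    # Random angular steps, rescaled so that they sum to one full turn.
    steps = [rng.uniform(1.0 - irregularity, 1.0 + irregularity)
             for _ in range(n_vertices)]
    scale = 2.0 * math.pi / sum(steps)
    angle = rng.uniform(0.0, 2.0 * math.pi)
    vertices = []
    for step in steps:
        radius = avg_radius * rng.uniform(1.0 - spikiness, 1.0 + spikiness)
        vertices.append((cx + radius * math.cos(angle),
                         cy + radius * math.sin(angle)))
        angle += step * scale
    return vertices

def polygon_mask(height, width, vertices):
    """Rasterize a polygon into a 0/1 mask by testing pixel centers (even-odd rule)."""
    n = len(vertices)
    mask = [[0] * width for _ in range(height)]
    for y in range(height):
        py = y + 0.5
        for x in range(width):
            px = x + 0.5
            inside = False
            for i in range(n):
                x0, y0 = vertices[i]
                x1, y1 = vertices[(i + 1) % n]
                # Does this edge cross the horizontal line through the pixel center?
                if (y0 > py) != (y1 > py):
                    x_cross = x0 + (py - y0) * (x1 - x0) / (y1 - y0)
                    if px < x_cross:
                        inside = not inside
            mask[y][x] = 1 if inside else 0
    return mask

# Toy usage: one polygon centered in a 32 x 32 mask.
vertices = random_irregular_polygon(16, 16, avg_radius=8, n_vertices=8,
                                    rng=random.Random(0))
mask = polygon_mask(32, 32, vertices)
```

A mask built this way could serve as the local region that is filled with noisy pixels. Raising `irregularity` or `spikiness` makes the shape less regular.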

3. DIBR Distortion Mask Prediction Network Embedding

This entry is adapted from the peer-reviewed paper 10.3390/s22218127
