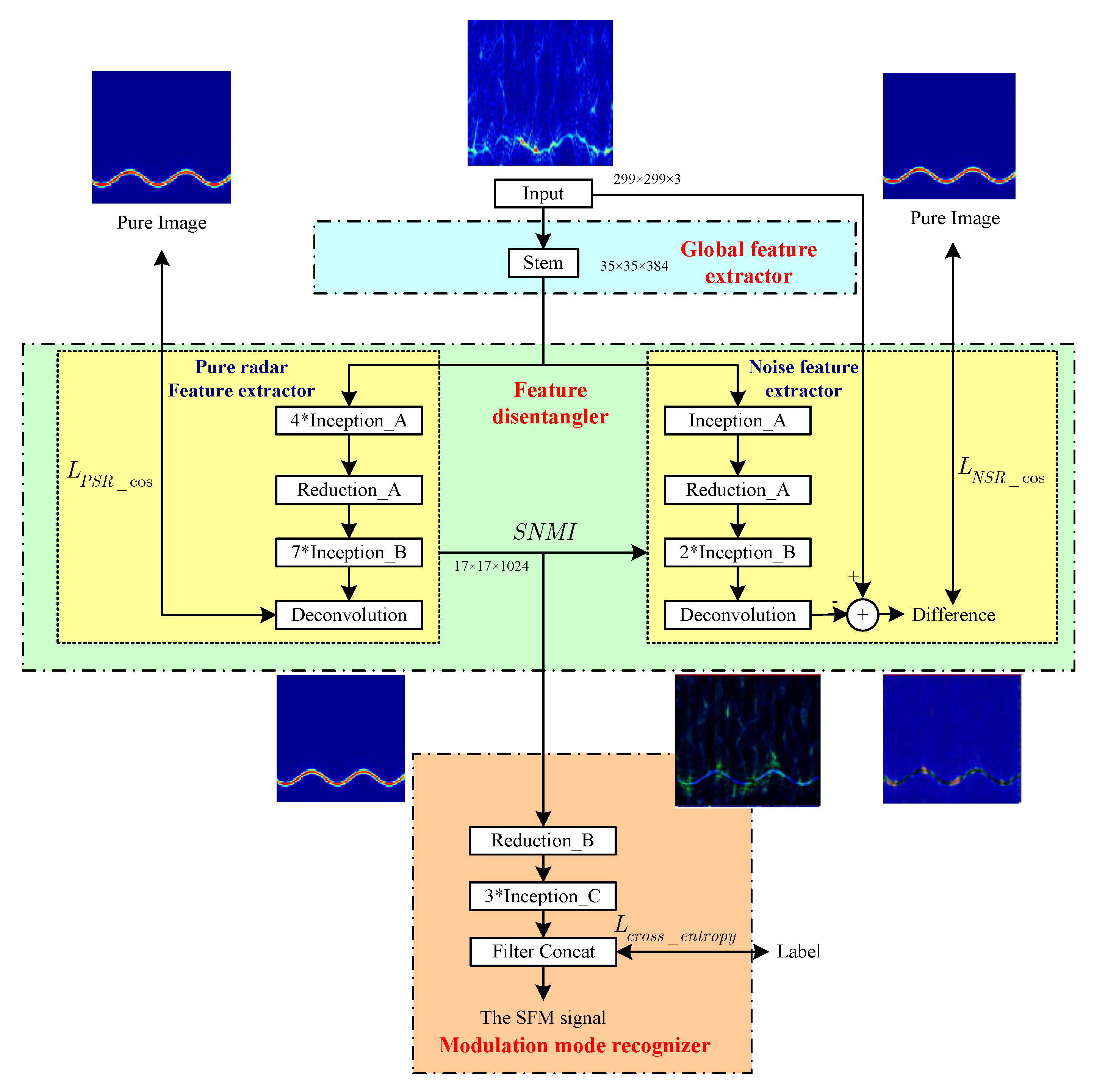

Accurate recognition of the radar modulation mode supports better estimation of radar echo parameters and therefore gives an advantage in radar electronic warfare (EW). In DGDNet, a feature disentangler separates the pure radar signal representation (PSR) from the noise signal representation (NSR), and the PSR is used to learn a radar signal modulation recognizer under low signal-to-noise ratio (SNR) conditions. A signal-noise mutual information loss is proposed to enlarge the gap between the PSR and the NSR, that is, to reduce the information the two representations share.

- radar signal

- modulation recognition

- denoising

- signal-to-noise ratio
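The signal-noise mutual information loss mentioned in the summary above can be illustrated with a small estimator. The sketch below is not the DGDNet loss, whose exact form is not given in this entry; it swaps in a Gaussian approximation, I(PSR; NSR) = 0.5 (log det S_P + log det S_N - log det S_PN), computed from the sample covariances of a batch of PSR vectors, a batch of NSR vectors, and their concatenation. The function names (`covariance`, `logdet_spd`, `gaussian_mi`), the ridge `eps`, and the toy 3-D features are hypothetical. Minimizing such an estimate pushes the two representations toward statistical independence.

```python
import math
import random


def covariance(rows):
    """Sample covariance matrix of a list of equal-length feature vectors."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for r in rows:
        c = [r[j] - mean[j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / (n - 1)
    return cov


def logdet_spd(m, eps=1e-6):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky.

    A small ridge `eps` (hypothetical choice) is added to the diagonal
    for numerical stability.
    """
    d = len(m)
    a = [[m[i][j] + (eps if i == j else 0.0) for j in range(d)] for i in range(d)]
    chol = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = a[i][j] - sum(chol[i][k] * chol[j][k] for k in range(j))
            if i == j:
                chol[i][i] = math.sqrt(s)
            else:
                chol[i][j] = s / chol[j][j]
    # det(A) = prod(diag(L))**2, so log det(A) = 2 * sum(log diag(L))
    return 2.0 * sum(math.log(chol[i][i]) for i in range(d))


def gaussian_mi(psr, nsr):
    """Mutual information I(PSR; NSR) under a joint-Gaussian assumption.

    psr, nsr: lists of feature vectors, one row per sample in the batch.
    This is a swapped-in proxy, not the loss defined in the paper.
    """
    joint = [p + q for p, q in zip(psr, nsr)]  # concatenate each PSR/NSR pair
    return 0.5 * (logdet_spd(covariance(psr))
                  + logdet_spd(covariance(nsr))
                  - logdet_spd(covariance(joint)))


# Toy check with hypothetical 3-D features.
rng = random.Random(0)
psr = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]
independent_nsr = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]
leaky_nsr = [[x + 0.1 * rng.gauss(0, 1) for x in row] for row in psr]

mi_independent = gaussian_mi(psr, independent_nsr)  # near 0: nothing shared
mi_leaky = gaussian_mi(psr, leaky_nsr)  # much larger: NSR still carries PSR content
print(mi_independent, mi_leaky)
```

In a trained network the estimate would have to be computed inside an autodiff framework so that gradients reach both feature extractors; this pure-Python version only shows the arithmetic. The Gaussian proxy captures linear dependence only, which is one reason a learned estimator might be preferred in practice.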

1. Introduction

2. Conventional Intrapulse Modulation Recognition (IPMR) under Low SNR

3. Deep-Learning-Based IPMR in Low-SNR Conditions

4. Disentangled Learning

5. DGDNet

5.1. Structure of the Network

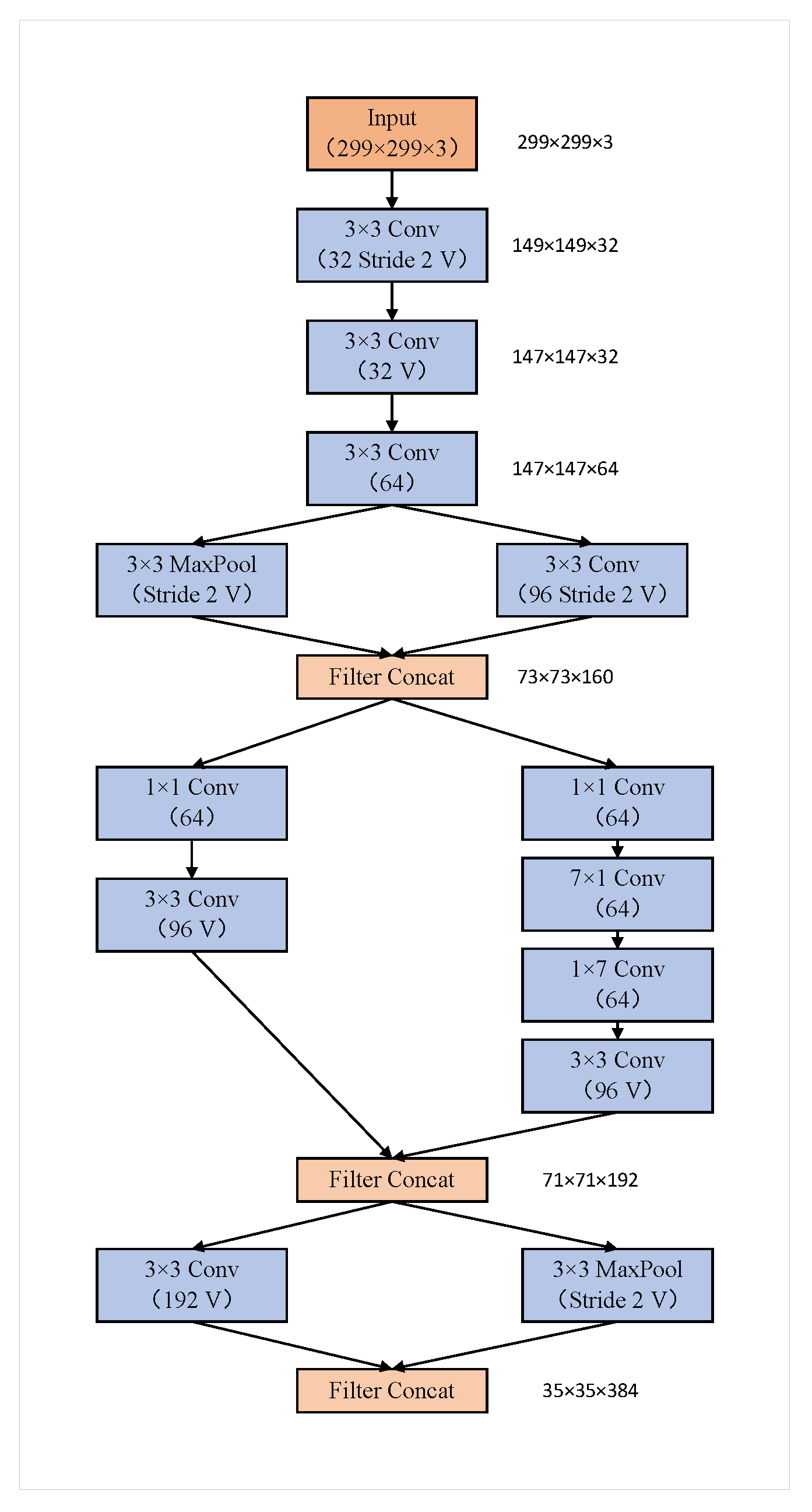

5.2. Global Feature Extractor

5.3. Feature Disentangler

- Pure Radar Feature Extractor

- Noise Feature Extractor

Like the pure radar feature extractor, the noise feature extractor is based on the Inception structure. It contains one Inception_A module, one Reduction_A module, two Inception_B modules, and one deconvolution module. The output of the noise feature extractor is the NSR, which is passed through the deconvolution module to reconstruct the noise images. As in the pure radar feature extraction process, the time-frequency images (TFIs) of the radar signal at an SNR of 16 dB serve as the ideal denoised images. The ideal noise images are therefore calculated as the difference between the input noisy TFIs and the ideal denoised images.
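The training target for the noise branch described above can be sketched as follows, assuming each TFI is a 2-D grid of equal shape and that every noisy input is paired with the 16 dB TFI of the same simulated pulse. The helper names (`ideal_noise_image`, `mse`), the toy values, and the use of mean squared error as the reconstruction loss are assumptions for illustration, not the paper's stated implementation.

```python
def ideal_noise_image(noisy_tfi, clean_tfi):
    """Target for the noise branch: noisy TFI minus the ideal denoised TFI.

    clean_tfi is assumed to be the TFI of the same pulse at 16 dB SNR.
    """
    if len(noisy_tfi) != len(clean_tfi) or any(
        len(a) != len(b) for a, b in zip(noisy_tfi, clean_tfi)
    ):
        raise ValueError("TFIs must have the same shape")
    return [[n - c for n, c in zip(nr, cr)] for nr, cr in zip(noisy_tfi, clean_tfi)]


def mse(a, b):
    """Mean squared error between two equally sized 2-D images (assumed loss)."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# Hypothetical 2x2 TFIs; values chosen to be exact in binary floating point.
noisy = [[0.75, 0.5], [0.25, 1.0]]
clean = [[0.5, 0.5], [0.5, 0.75]]
target = ideal_noise_image(noisy, clean)  # [[0.25, 0.0], [-0.25, 0.25]]

# Stand-in for the deconvolution module's output (hypothetical).
recon = [[0.25, 0.0], [-0.25, 0.0]]
print(target, mse(recon, target))
```

Defining the noise target as a difference means that the clean TFI and the noise target add back up to the noisy input, so the pure radar branch and the noise branch together account for the whole input image.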

6. Conclusions

This entry is adapted from the peer-reviewed paper 10.3390/rs14051252
